Compiler Chapter 1

- 1. 1.1 Compilers: A compiler is a program that reads a program written in one language (the source language) and translates it into an equivalent program in another language (the target language).

- 2. 1.1 Compilers: As an important part of this translation process, the compiler reports to its user the presence of errors in the source program.

- 4. 1.1 Compilers: At first glance, the variety of compilers may appear overwhelming. There are thousands of source languages, ranging from traditional programming languages such as FORTRAN and Pascal to specialized languages.

- 5. 1.1 Compilers: Target languages are equally varied; a target language may be another programming language or the machine language of any computer.

- 6. 1.1 Compilers: Compilers are sometimes classified as single-pass, multi-pass, load-and-go, debugging, or optimizing.

- 7. 1.1 Compilers: The basic tasks that any compiler must perform are essentially the same. By understanding these tasks, we can construct compilers for a wide variety of source languages and target machines using the same basic techniques.

- 8. 1.1 Compilers: Throughout the 1950s, compilers were considered notoriously difficult programs to write. The first FORTRAN compiler, for example, took 18 staff-years to implement.

- 10. The Analysis-Synthesis Model of Compilation: Compilation has two parts: analysis and synthesis.

- 11. The Analysis-Synthesis Model of Compilation: The analysis part breaks up the source program into constituent pieces and creates an intermediate representation of the source program.

- 12. The Analysis-Synthesis Model of Compilation: The synthesis part constructs the desired target program from the intermediate representation.

- 13. The Analysis-Synthesis Model of Compilation: (Figure labels: source code, front end, IR, back end, machine code, errors.)

- 14. The Analysis-Synthesis Model of Compilation: During analysis, the operations implied by the source program are determined and recorded in a hierarchical structure called a tree. Often, a special kind of tree called a syntax tree is used.

- 15. The Analysis-Synthesis Model of Compilation: In a syntax tree, each node represents an operation and the children of the node represent the arguments of the operation. For example, a syntax tree of an assignment statement is shown below.

- 16. The Analysis-Synthesis Model of Compilation: (Figure: syntax tree of an assignment statement.)

- 18. Analysis of the Source Program: In compiling, analysis consists of three phases: linear analysis, hierarchical analysis, and semantic analysis.

- 19. Analysis of the Source Program: Linear analysis: the stream of characters making up the source program is read from left to right and grouped into tokens, which are sequences of characters having a collective meaning.

- 20. Scanning or Lexical Analysis (Linear Analysis): In a compiler, linear analysis is called lexical analysis or scanning. For example, in lexical analysis the characters in the assignment statement position := initial + rate * 60 would be grouped into the following tokens:

- 21. Scanning or Lexical Analysis (Linear Analysis):
  - The identifier position.
  - The assignment symbol :=.
  - The identifier initial.
  - The plus sign.
  - The identifier rate.
  - The multiplication sign.
  - The number 60.

- 22. Scanning or Lexical Analysis (Linear Analysis): The blanks separating the characters of these tokens would normally be eliminated during lexical analysis.
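
The grouping of characters into tokens described above can be sketched in Python. This is a minimal sketch that assumes a regular-expression scanner; the token class names (ID, ASSIGN, and so on) and the function name `tokenize` are illustrative choices, not something the slides define.

```python
import re

# Token classes and their patterns (hypothetical names chosen for this sketch).
TOKEN_SPEC = [
    ("NUMBER", r"\d+"),
    ("ID", r"[A-Za-z_]\w*"),
    ("ASSIGN", r":="),
    ("PLUS", r"\+"),
    ("TIMES", r"\*"),
    ("SKIP", r"\s+"),  # blanks are recognized, then discarded
]
MASTER = re.compile("|".join(f"(?P<{name}>{pattern})" for name, pattern in TOKEN_SPEC))


def tokenize(text):
    """Read text left to right and return a list of (token_class, lexeme) pairs."""
    tokens = []
    pos = 0
    while pos < len(text):
        match = MASTER.match(text, pos)
        if match is None:
            # The remaining characters do not form any token: a lexical error.
            raise SyntaxError(f"unexpected character {text[pos]!r} at offset {pos}")
        if match.lastgroup != "SKIP":
            tokens.append((match.lastgroup, match.group()))
        pos = match.end()
    return tokens


if __name__ == "__main__":
    for token in tokenize("position := initial + rate * 60"):
        print(token)
```

Running the script prints one pair per token, in the same order as the seven tokens listed above; the blanks never reach the output.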

- 24. Analysis of the Source Program: Hierarchical analysis: characters or tokens are grouped hierarchically into nested collections with collective meaning.

- 25. Syntax Analysis or Hierarchical Analysis (Parsing): Hierarchical analysis is called parsing or syntax analysis. It involves grouping the tokens of the source program into grammatical phrases that are used by the compiler to synthesize output.

- 26. Syntax Analysis or Hierarchical Analysis (Parsing): The grammatical phrases of the source program are represented by a parse tree.

- 27. Syntax Analysis or Hierarchical Analysis (Parsing): (Figure: parse tree.)

- 28. Syntax Analysis or Hierarchical Analysis (Parsing): In the expression initial + rate * 60, the phrase rate * 60 is a logical unit because the usual conventions of arithmetic expressions tell us that multiplication is performed before addition. Because the expression initial + rate is followed by a *, it is not grouped into a single phrase by itself.

- 29. Syntax Analysis or Hierarchical Analysis (Parsing): The hierarchical structure of a program is usually expressed by recursive rules. For example, we might have the following rules as part of the definition of expression:

- 30. Syntax Analysis or Hierarchical Analysis (Parsing):
  1. Any identifier is an expression.
  2. Any number is an expression.
  3. If expression1 and expression2 are expressions, then so are expression1 + expression2, expression1 * expression2, and ( expression1 ).
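
The recursive rules above can be sketched as a small recursive-descent parser in Python. The rules as stated do not say which operator binds tighter, so this sketch assumes the usual layering (expression, term, factor) to make * group before +. The function names and the tuple-based tree shape are hypothetical.

```python
import re


def parse_expression(text):
    """Parse text into a nested tuple tree of the form (operator, left, right)."""
    # Characters outside this pattern are silently skipped in this sketch.
    tokens = re.findall(r"[A-Za-z_]\w*|\d+|[+*()]", text)
    pos = 0

    def peek():
        return tokens[pos] if pos < len(tokens) else None

    def advance():
        nonlocal pos
        token = tokens[pos]
        pos += 1
        return token

    def factor():
        # factor: identifier | number | ( expression )
        token = peek()
        if token is None or token in ("+", "*", ")"):
            raise SyntaxError(f"expected identifier, number, or '(' but found {token!r}")
        if token == "(":
            advance()
            node = expr()
            if peek() != ")":
                raise SyntaxError("expected ')'")
            advance()
            return node
        return advance()

    def term():
        # term: factor { * factor }
        node = factor()
        while peek() == "*":
            advance()
            node = ("*", node, factor())
        return node

    def expr():
        # expression: term { + term }
        node = term()
        while peek() == "+":
            advance()
            node = ("+", node, term())
        return node

    tree = expr()
    if peek() is not None:
        raise SyntaxError(f"unexpected token {peek()!r}")
    return tree


if __name__ == "__main__":
    print(parse_expression("initial + rate * 60"))
```

For initial + rate * 60 the multiplication ends up as the right child of the addition, which is the grouping the slides describe: rate * 60 is a phrase, initial + rate is not.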

- 32. Analysis of the Source Program: Semantic analysis: certain checks are performed to ensure that the components of a program fit together meaningfully.

- 33. Semantic Analysis: The semantic analysis phase checks the source program for semantic errors and gathers type information for the subsequent code-generation phase.

- 34. Semantic Analysis: It uses the hierarchical structure determined by the syntax-analysis phase to identify the operators and operands of expressions and statements.

- 35. Semantic Analysis: An important component of semantic analysis is type checking. Here the compiler checks that each operator has operands that are permitted by the source language specification.

- 36. Semantic Analysis: For example, when a binary arithmetic operator is applied to an integer and a real, the compiler may need to convert the integer to a real, as shown in the figure below.
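
The integer-to-real conversion described above can be sketched as a pass over an expression tree. This is a sketch under stated assumptions: only two types exist (integer and real), trees are nested tuples of the form (operator, left, right), and the name `coerce` and the `inttoreal` node label are illustrative.

```python
def coerce(node, types):
    """Return (tree, type), wrapping integer operands in inttoreal where needed.

    types maps each declared identifier to "integer" or "real" (assumed input).
    """
    if isinstance(node, str):
        if node.isdigit():
            return node, "integer"      # integer literal
        return node, types[node]        # KeyError signals an undeclared identifier
    op, left, right = node
    left, left_type = coerce(left, types)
    right, right_type = coerce(right, types)
    if left_type == right_type:
        return (op, left, right), left_type
    # Mixed operands: convert the integer side so the operator sees two reals.
    if left_type == "integer":
        left = ("inttoreal", left)
    else:
        right = ("inttoreal", right)
    return (op, left, right), "real"


if __name__ == "__main__":
    declared = {"initial": "real", "rate": "real"}
    tree = ("+", "initial", ("*", "rate", "60"))
    print(coerce(tree, declared))
```

With initial and rate declared real, the literal 60 is the only integer operand, so it is the only node that gets wrapped.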

- 39. 1.3 The Phases of a Compiler: A compiler operates in phases, each of which transforms the source program from one representation to another. A typical decomposition of a compiler is shown in the figure below.

- 40. 1.3 The Phases of a Compiler: (Figure: phases of a compiler.)

- 58. 1.3 The Phases of a Compiler: Symbol-table management: An essential function of a compiler is to record the identifiers used in the source program and collect information about various attributes of each identifier. These attributes may provide information about the storage allocated for an identifier, its type, and its scope.

- 59. 1.3 The Phases of a Compiler: The symbol table is a data structure containing a record for each identifier, with fields for the attributes of the identifier. When an identifier in the source program is detected by the lexical analyzer, the identifier is entered into the symbol table.

- 60. 1.3 The Phases of a Compiler: However, the attributes of an identifier cannot normally be determined during lexical analysis. For example, in a Pascal declaration like var position, initial, rate : real; the type real is not known when position, initial, and rate are seen by the lexical analyzer.

- 61. 1.3 The Phases of a Compiler: The remaining phases enter information about identifiers into the symbol table and then use this information in various ways. For example, when doing semantic analysis and intermediate code generation, we need to know what the types of identifiers are, so that we can check that the source program uses them in valid ways and generate the proper operations on them.

- 62. 1.3 The Phases of a Compiler: The code generator typically enters and uses detailed information about the storage assigned to identifiers.
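
A minimal Python sketch of the life cycle described above: the lexical analyzer enters names, a later phase fills in types, and the code generator assigns storage. The class and method names are hypothetical, and the 8-byte size for real is an assumption made only for the example.

```python
class SymbolTable:
    """One record per identifier, with fields for its attributes."""

    def __init__(self):
        self._records = {}

    def enter(self, name):
        # Called when the lexical analyzer first sees the identifier;
        # its attributes are not yet known at that point.
        return self._records.setdefault(name, {"type": None, "offset": None})

    def set_type(self, name, type_name):
        # Called by a later phase once the declaration has been analyzed.
        self._records[name]["type"] = type_name

    def assign_storage(self, sizes):
        # Called by the code generator: lay identifiers out in entry order.
        offset = 0
        for record in self._records.values():
            record["offset"] = offset
            offset += sizes[record["type"]]

    def lookup(self, name):
        return self._records[name]


if __name__ == "__main__":
    table = SymbolTable()
    for name in ("position", "initial", "rate"):
        table.enter(name)                  # lexical analysis: names only
    for name in ("position", "initial", "rate"):
        table.set_type(name, "real")       # after seeing ": real"
    table.assign_storage({"real": 8})      # assumed size of a real
    print(table.lookup("rate"))
```

The point of the sketch is the ordering: the type field stays empty until the declaration has been fully read, and the offset stays empty until code generation.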

- 64. Error Detection and Reporting: Each phase can encounter errors. However, after detecting an error, a phase must somehow deal with that error, so that compilation can proceed, allowing further errors in the source program to be detected.

- 65. Error Detection and Reporting: A compiler that stops when it finds the first error is not as helpful as it could be. The syntax and semantic analysis phases usually handle a large fraction of the errors detectable by the compiler.

- 66. Error Detection and Reporting: Errors where the token stream violates the structure rules (syntax) of the language are detected by the syntax analysis phase. The lexical phase can detect errors where the characters remaining in the input do not form any token of the language.

- 68. Intermediate Code Generation: After syntax and semantic analysis, some compilers generate an explicit intermediate representation of the source program. We can think of this intermediate representation as a program for an abstract machine.

- 69. Intermediate Code Generation: This intermediate representation should have two important properties: it should be easy to produce and easy to translate into the target program.

- 70. Intermediate Code Generation: We consider an intermediate form called “three-address code,” which is like the assembly language for a machine in which every memory location can act like a register.

- 71. Intermediate Code Generation: Three-address code consists of a sequence of instructions, each of which has at most three operands. The source program in (1.1) might appear in three-address code as:

- 72. Intermediate Code Generation: (1.3)
  - temp1 := inttoreal(60)
  - temp2 := id3 * temp1
  - temp3 := id2 + temp2
  - id1 := temp3
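
One way to arrive at code like (1.3) is a post-order walk of the expression tree that emits one instruction per operator. The sketch below assumes a nested-tuple tree with an `inttoreal` node already inserted by semantic analysis; the tree shape and the function name `three_address` are illustrative.

```python
def three_address(target, tree):
    """Emit one instruction per operator, visiting operands before operators."""
    code = []
    counter = 0

    def emit(node):
        nonlocal counter
        if isinstance(node, str):
            return node                          # identifier or constant
        if node[0] == "inttoreal":
            rhs = f"inttoreal({emit(node[1])})"
        else:
            op, left, right = node
            left_name = emit(left)
            right_name = emit(right)
            rhs = f"{left_name} {op} {right_name}"
        counter += 1
        temp = f"temp{counter}"                  # fresh temporary for this result
        code.append(f"{temp} := {rhs}")
        return temp

    result = emit(tree)
    code.append(f"{target} := {result}")
    return code


if __name__ == "__main__":
    tree = ("+", "id2", ("*", "id3", ("inttoreal", "60")))
    for line in three_address("id1", tree):
        print(line)
```

Each emitted instruction has at most three operands (one destination and up to two sources), which is the property the slides attribute to three-address code.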

- 74. Code Optimization: The code optimization phase attempts to improve the intermediate code, so that faster-running machine code will result.

- 75. Code Optimization: Some optimizations are trivial. For example, a natural algorithm generates the intermediate code (1.3), using an instruction for each operator in the tree representation after semantic analysis, even though there is a better way to perform the same calculation, using the two instructions:

- 76. Code Optimization: (1.4)
  - temp1 := id3 * 60.0
  - id1 := id2 + temp1

  There is nothing wrong with this simple algorithm, since the problem can be fixed during the code-optimization phase.

- 77. Code Optimization: That is, the compiler can deduce that the conversion of 60 from integer to real representation can be done once and for all at compile time, so the inttoreal operation can be eliminated.

- 78. Code Optimization: Besides, temp3 is used only once, to transmit its value to id1. It then becomes safe to substitute id1 for temp3, whereupon the last statement of (1.3) is not needed and the code of (1.4) results.
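
The two improvements described above can be sketched as passes over a list of instructions. This is a sketch only: the (dest, op, args) tuple encoding, the `copy` operator name, and the function name `optimize` are assumptions, and the second pass is safe only for straight-line code where the temporary is not used afterwards.

```python
def optimize(code):
    """code is a list of (dest, op, args) tuples; returns an improved list."""
    # Pass 1: perform inttoreal on integer constants at compile time and
    # substitute the resulting real constant wherever the temporary was used.
    constants = {}
    folded = []
    for dest, op, args in code:
        args = tuple(constants.get(arg, arg) for arg in args)
        if op == "inttoreal" and args[0].isdigit():
            constants[dest] = str(float(args[0]))
            continue                              # the instruction disappears
        folded.append((dest, op, args))

    # Pass 2: if the last instruction only copies the temporary computed by
    # the instruction just before it, compute into the final target directly.
    if len(folded) >= 2:
        dest, op, args = folded[-1]
        prev_dest, prev_op, prev_args = folded[-2]
        if op == "copy" and args == (prev_dest,):
            folded[-2:] = [(dest, prev_op, prev_args)]
    return folded


if __name__ == "__main__":
    code_1_3 = [
        ("temp1", "inttoreal", ("60",)),
        ("temp2", "*", ("id3", "temp1")),
        ("temp3", "+", ("id2", "temp2")),
        ("id1", "copy", ("temp3",)),
    ]
    for instruction in optimize(code_1_3):
        print(instruction)
```

The result has the same two-instruction shape as (1.4). The sketch does not renumber temporaries, so the surviving temporary keeps the name temp2 rather than temp1.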

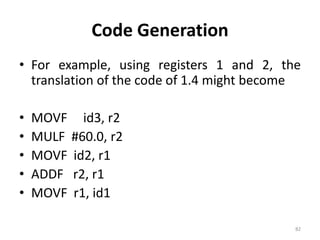

- 80. Code Generation: The final phase of the compiler is the generation of target code, consisting normally of relocatable machine code or assembly code.

- 81. Code Generation: Memory locations are selected for each of the variables used by the program. Then, intermediate instructions are each translated into a sequence of machine instructions that perform the same task. A crucial aspect is the assignment of variables to registers.

- 82. Code Generation: For example, using registers 1 and 2, the translation of the code of (1.4) might become:
  - MOVF id3, r2
  - MULF #60.0, r2
  - MOVF id2, r1
  - ADDF r2, r1
  - MOVF r1, id1

- 83. Code Generation: The first and second operands of each instruction specify a source and destination, respectively. The F in each instruction tells us that the instructions deal with floating-point numbers.

- 84. Code Generation: This code moves the contents of the address id3 into register 2 and then multiplies it by the real constant 60.0. The # signifies that 60.0 is to be treated as a constant.

- 85. Code Generation: The third instruction moves id2 into register 1, and the fourth adds to it the value previously computed in register 2. Finally, the value in register 1 is moved into the address of id1.
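
The translation walked through above can be sketched as a very naive code generator: one fresh register per intermediate instruction, with no reuse or spilling. The (dest, op, left, right) encoding, the function name `generate`, and the choice to hand out r2 before r1 (made only to mirror the listing above) are assumptions of this sketch.

```python
def generate(code):
    """Translate (dest, op, left, right) instructions into assembly text."""
    opcodes = {"+": "ADDF", "*": "MULF"}
    free_registers = ["r2", "r1"]     # only two registers; no spilling here
    location = {}                     # temporary name -> register holding it
    asm = []

    def operand(name):
        if name in location:
            return location[name]     # value already sits in a register
        if name[0].isdigit():
            return "#" + name         # constants are marked with #
        return name                   # memory address of a variable

    for dest, op, left, right in code:
        register = free_registers.pop(0)
        asm.append(f"MOVF {operand(left)}, {register}")
        asm.append(f"{opcodes[op]} {operand(right)}, {register}")
        if dest.startswith("temp"):
            location[dest] = register             # keep temporaries in registers
        else:
            asm.append(f"MOVF {register}, {dest}")  # store to the variable
    return asm


if __name__ == "__main__":
    code_1_4 = [("temp1", "*", "id3", "60.0"), ("id1", "+", "id2", "temp1")]
    print("\n".join(generate(code_1_4)))
```

Because each instruction takes a new register, the sketch fails on inputs longer than two instructions; a real code generator has to decide which values stay in registers, which is the crucial aspect the slides mention.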

- 87. 1.4 Cousins of the Compiler: As shown in the figure, the input to a compiler may be produced by one or more preprocessors, and further processing of the compiler’s output may be needed before running machine code is obtained.

- 88. Figure 1.3. A language-processing system. (Surviving figure labels: library, relocatable object files.)

- 89. 1.4 Cousins of the Compiler: Preprocessors: Preprocessors produce input to compilers. They may perform the following functions: macro processing, file inclusion, “rational” preprocessing, and language extensions.

- 90. Preprocessors: Macro processing: A preprocessor may allow a user to define macros that are shorthands for longer constructs.

- 91. Preprocessors: File inclusion: A preprocessor may include header files into the program text. For example, the C preprocessor causes the contents of the file <global.h> to replace the statement #include <global.h> when it processes a file containing this statement.

- 93. Preprocessors: “Rational” preprocessors: These processors augment older languages with more modern flow-of-control and data-structuring facilities.

- 94. Preprocessors: Language extensions: These processors attempt to add capabilities to the language by what amounts to built-in macros. For example, the language Equel is a database query language embedded in C. Statements beginning with ## are taken by the preprocessor to be database-access statements, unrelated to C, and are translated into procedure calls on routines that perform the database access.

- 95. Assemblers: Some compilers produce assembly code that is passed to an assembler for further processing. Other compilers perform the job of the assembler, producing relocatable machine code that can be passed directly to the loader/link-editor.

- 96. Assemblers: Here we shall review the relationship between assembly and machine code.

- 97. Assemblers: Assembly code is a mnemonic version of machine code, in which names are used instead of binary codes for operations, and names are also given to memory addresses.

- 98. Assemblers: A typical sequence of assembly instructions might be:
  - MOV a, R1
  - ADD #2, R1
  - MOV R1, b

- 99. Assemblers: This code moves the contents of the address a into register 1, then adds the constant 2 to it, treating the contents of register 1 as a fixed-point number, and finally stores the result in the location named by b. Thus, it computes b := a + 2.

- 101. Two-Pass Assembly: The simplest form of assembler makes two passes over the input.

- 102. Two-Pass Assembly: In the first pass, all the identifiers that denote storage locations are found and stored in a symbol table. Identifiers are assigned storage locations as they are encountered for the first time, so after reading (1.6), for example, the symbol table might contain the entries shown below.

- 104. Two-Pass Assembly: In the second pass, the assembler scans the input again. This time, it translates each operation code into the sequence of bits representing that operation in machine language. The output of the second pass is usually relocatable machine code.
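
The two passes described above can be sketched in Python. The bit patterns in OPCODES, the 4-byte word size, the register syntax R followed by digits, and the tagged-tuple output format are all assumptions made for illustration; the slides do not specify an encoding.

```python
import re

# Hypothetical bit patterns; a real machine defines its own.
OPCODES = {"MOV": "0001", "ADD": "0011"}


def assemble(lines, word_size=4):
    """Return (symbol_table, instructions) for a list of assembly lines."""
    # Pass 1: find identifiers that denote storage locations and assign each
    # an address the first time it is encountered.
    symbols = {}
    parsed = []
    for line in lines:
        mnemonic, rest = line.split(None, 1)
        operands = [part.strip() for part in rest.split(",")]
        parsed.append((mnemonic, operands))
        for operand in operands:
            is_register = re.fullmatch(r"R\d+", operand) is not None
            if operand.startswith("#") or is_register:
                continue
            if operand not in symbols:
                symbols[operand] = len(symbols) * word_size

    # Pass 2: translate operation codes to bits and identifiers to addresses.
    # Addresses are tagged "reloc" because a loader may still shift them.
    instructions = []
    for mnemonic, operands in parsed:
        encoded = []
        for operand in operands:
            if operand in symbols:
                encoded.append(("reloc", symbols[operand]))
            elif operand.startswith("#"):
                encoded.append(("const", int(operand[1:])))
            else:
                encoded.append(("reg", int(operand[1:])))
        instructions.append((OPCODES[mnemonic], tuple(encoded)))
    return symbols, instructions


if __name__ == "__main__":
    table, program = assemble(["MOV a, R1", "ADD #2, R1", "MOV R1, b"])
    print(table)
    for instruction in program:
        print(instruction)
```

Two passes are needed in general because an instruction may mention an identifier before the assembler has assigned it an address; collecting the symbol table first avoids that problem.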

- 105. Loaders and Link-Editors: Usually, a program called a loader performs the two functions of loading and link-editing.

- 106. Loaders and Link-Editors: The process of loading consists of taking relocatable machine code, altering the relocatable addresses, and placing the altered instructions and data in memory at the proper location.

- 107. Loaders and Link-Editors: The link-editor allows us to make a single program from several files of relocatable machine code.
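
The address alteration performed during loading can be sketched in a few lines. The representation of relocatable code as (word, is_relocatable) pairs and the function name `load` are assumptions of this sketch, not a real object-file format.

```python
def load(relocatable_code, load_address):
    """Add the load address to every word flagged as a relocatable address."""
    memory = []
    for word, is_relocatable in relocatable_code:
        memory.append(word + load_address if is_relocatable else word)
    return memory


if __name__ == "__main__":
    # Addresses 0 and 4 are relative to the start of the module;
    # the constant 2 must not be altered.
    module = [(0, True), (2, False), (4, True)]
    print(load(module, 15))
```

Only flagged words change, which is why the assembler's output has to record which words are addresses and which are plain data.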

- 109. 1.5 The Grouping of Phases:

- 110. Front and Back Ends: The phases are collected into a front end and a back end. The front end consists of those phases that depend primarily on the source language and are largely independent of the target machine.

- 111. Front and Back Ends: These normally include lexical and syntactic analysis, the creation of the symbol table, semantic analysis, and the generation of intermediate code. A certain amount of code optimization can be done by the front end as well.

- 112. Front and Back Ends: The front end also includes the error handling that goes along with each of these phases.

- 113. Front and Back Ends: The back end includes those portions of the compiler that depend on the target machine. Generally, these portions do not depend on the source language, just on the intermediate language.

- 114. Front and Back Ends: In the back end, we find aspects of the code optimization phase, and we find code generation, along with the necessary error handling and symbol table operations.

- 116. Passes:

- 119. Compiler-Construction Tools: The compiler writer, like any programmer, can profitably use tools such as debuggers, version managers, profilers, and so on.

- 120. Compiler-Construction Tools: In addition to these software-development tools, other more specialized tools have been developed for helping implement various phases of a compiler.

- 121. Compiler-Construction Tools: Shortly after the first compilers were written, systems to help with the compiler-writing process appeared. These systems have often been referred to as compiler-compilers, compiler-generators, or translator-writing systems.

- 122. Compiler-Construction Tools: Some general tools have been created for the automatic design of specific compiler components. These tools use specialized languages for specifying and implementing the component, and many use algorithms that are quite sophisticated.

- 123. Compiler-Construction Tools: The most successful tools are those that hide the details of the generation algorithm and produce components that can be easily integrated into the remainder of a compiler.

- 124. Compiler-Construction Tools: The following is a list of some useful compiler-construction tools:
  - Parser generators
  - Scanner generators
  - Syntax-directed translation engines
  - Automatic code generators
  - Data-flow engines

- 125. Compiler-Construction Tools: Parser generators: These produce syntax analyzers, normally from input that is based on a context-free grammar. In early compilers, syntax analysis consumed not only a large fraction of the running time of a compiler, but also a large fraction of the intellectual effort of writing a compiler. This phase is now considered one of the easiest to implement.

- 126. Compiler-Construction Tools: Scanner generators: These tools automatically generate lexical analyzers, normally from a specification based on regular expressions. The basic organization of the resulting lexical analyzer is in effect a finite automaton.

- 127. Compiler-Construction Tools: Syntax-directed translation engines: These produce collections of routines that walk the parse tree, generating intermediate code. The basic idea is that one or more “translations” are associated with each node of the parse tree, and each translation is defined in terms of translations at its neighbor nodes in the tree.

- 128. Compiler-Construction Tools: Automatic code generators: Such a tool takes a collection of rules that define the translation of each operation of the intermediate language into the machine language for the target machine.

- 129. Data-flow engines: Much of the information needed to perform good code optimization involves “data-flow analysis,” the gathering of information about how values are transmitted from one part of a program to each other part.