concurrency_c#_public

- 2. Profile: Paul Churchward - Senior software consultant at Infusion - Financial Risk Manager (FRM) charter holder - Completed the Canadian Securities Course - Infusion clients: TD Bank, Credit Suisse, one of the largest US hedge funds - Prior to Infusion: RBC Capital Markets & RBC Royal Bank - Specializing in Java, J2EE, unit testing and refactoring - Very interested in R, Spark - Building a credit-risk calculation engine using SparkR

- 3. You have a problem and you say “I’ll solve it with concurrency” … Now you have 10 problems.

- 4. Thread Types. Foreground: a foreground thread will prevent the managed execution environment from shutting down, e.g. shutting down the web server while foreground threads are running will block until those threads have stopped. Background: a background thread will NOT prevent the managed execution environment from shutting down. Once all foreground threads have completed, the runtime shuts down regardless of any background threads still running. Use case: heartbeat service.
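As a minimal sketch of the heartbeat use case: the `ThreadTypesSketch` class, the `CreateHeartbeat` method and its `beat` and `interval` parameters are hypothetical names invented for this illustration; only `Thread` and its `IsBackground` property come from .NET.

```csharp
using System;
using System.Threading;

public static class ThreadTypesSketch
{
    // Creates (but does not start) a heartbeat-style worker marked as a background thread.
    // "beat" and "interval" are illustrative parameters, not part of any .NET API.
    public static Thread CreateHeartbeat(Action beat, TimeSpan interval)
    {
        var thread = new Thread(() =>
        {
            while (true)
            {
                beat();
                Thread.Sleep(interval);
            }
        });

        // Threads created with "new Thread" are foreground threads by default.
        // Marking this one as background lets the process exit without waiting for the loop.
        thread.IsBackground = true;
        return thread;
    }
}
```

Calling `Start()` on the returned thread begins the heartbeat; because it is a background thread, it should not keep the process alive once all foreground threads have finished.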

- 5. Running Code Asynchronously. Thread: very crude, usually a bad idea. Thread Pool: much safer than Thread, but limited options. Task: best of both worlds.

- 6. Synchronous Execution Running code synchronously Nothing special here. This code runs in the caller’s thread. public class PiWorker { long _pi; public long pi { get { return _pi; } } public void CalculatePi() { /* do stuff */ } } PiWorker piWorker = new PiWorker(); piWorker.CalculatePi();

- 7. Thread Running code asynchronously This code runs in a separate thread. The caller’s thread fires it off and moves on to the next piece of work. public class PiWorker { long _pi; public long pi { get { return _pi; } } public void CalculatePi() { /* do stuff */ } } PiWorker piWorker = new PiWorker(); Thread piThread = new Thread(piWorker.CalculatePi); piThread.Start(); /* fire off CalculatePi() in the background */ DoSomeOtherWork(); /* run DoSomeOtherWork() without waiting for CalculatePi() to finish */

- 8. Thread Pools A thread pool is a collection of idle background threads, managed by the runtime, that stand ready to receive work to execute asynchronously. When a piece of work is submitted to a pool with available threads, a thread is removed from the pool to run the work and put back when the work is complete. If you submit an item of work and there are no available threads (all are running), it sits in a queue and is picked up when a thread becomes available. The program will NOT block at this step. The runtime decides how many idle threads to keep in the pool and what the maximum number of threads should be at a given time. You can override these values. Uncaught exceptions in thread pool threads will kill the application. Why use thread pools? - Limit the number of threads that are active at any given time - Reduce the overhead of creating a new thread when a completed one can be reused - Let the runtime manage creation of new threads; it knows better than you

- 9. Thread Pools public class Foo { public void Bar() { PiWorker piWorker = new PiWorker(); ThreadPool.QueueUserWorkItem(new WaitCallback(state => piWorker.CalculatePi())); } } Alternate syntax: public class Foo { public void Bar() { PiWorker piWorker = new PiWorker(); ThreadPool.QueueUserWorkItem(state => piWorker.CalculatePi()); } } The callback receives an Object state argument, so the parameterless CalculatePi() is wrapped in a lambda. You can force a maximum and minimum number of threads in the pool (worker threads first, then I/O completion port threads): ThreadPool.SetMaxThreads(100, 100); ThreadPool.SetMinThreads(30, 30); WARNING: ThreadPool is a static class. These settings affect every place in the application that uses the thread pool.
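A small sketch of passing data to a pool work item and waiting for completion, since `QueueUserWorkItem` returns no handle to wait on. The `ThreadPoolSketch` class and `SumOnPool` method are hypothetical names for this illustration; the `ThreadPool`, `CountdownEvent` and `Interlocked` calls are standard .NET.

```csharp
using System;
using System.Threading;

public static class ThreadPoolSketch
{
    // Queues "count" work items (1..count) and returns the sum of the values they were handed.
    public static int SumOnPool(int count)
    {
        int sum = 0;
        using (var done = new CountdownEvent(count))
        {
            for (int i = 1; i <= count; i++)
            {
                // The second argument arrives as the callback's "state" parameter.
                ThreadPool.QueueUserWorkItem(state =>
                {
                    Interlocked.Add(ref sum, (int)state); // atomic add, safe across pool threads
                    done.Signal();                        // report this item as finished
                }, i);
            }

            // Block the caller until every queued item has signalled.
            done.Wait();
        }
        return sum;
    }
}
```

The manual signalling is exactly the bookkeeping that Task takes over, which is one reason the deck prefers Task to using the pool directly.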

- 10. Tasks - When a Task is started with Task.Run, it is submitted to the ThreadPool automatically - Tasks provide more options than using ThreadPool directly - Since Task uses ThreadPool, it runs on background threads var piCalculatorTask = Task.Run( () => { PiWorker piWorker = new PiWorker(); piWorker.CalculatePi(); Console.WriteLine(piWorker.pi); } ); Task<TResult> can be used to return a value to the calling thread var piCalculatorTask = Task.Run( () => { PiWorker piWorker = new PiWorker(); piWorker.CalculatePi(); return piWorker.pi; } ); /* Accessing the Result property will block until the asynchronous operation is complete */ Console.WriteLine(piCalculatorTask.Result);

- 11. Task Continuation - Tasks allow you to create a chain of tasks, running each task in the chain after its parent completes; these are called continuations. - By default, a continuation will run no matter how its parent comes to completion: normally or by faulting public void Foo() { Task<String> firstTask = Task.Run(() => Bar()); Task<String> secondTask = firstTask.ContinueWith(previousTask => Baz(previousTask)); Console.WriteLine(secondTask.Result); /* prints Bar-Baz */ } private String Bar() { return "Bar"; } private String Baz(Task<String> previousTask) { return previousTask.Result + "-Baz"; }
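Because a continuation runs even when its parent faults, reading `Result` blindly would rethrow the parent's exception. The following is a hedged sketch of checking `IsFaulted` first; the `ContinuationSketch` class, the `Describe` method and the "failed: " message format are made up for this example.

```csharp
using System;
using System.Threading.Tasks;

public static class ContinuationSketch
{
    // Runs "work" on the pool, then a continuation that copes with success and failure alike.
    public static string Describe(Func<string> work)
    {
        Task<string> first = Task.Run(() => work());

        Task<string> second = first.ContinueWith(previous =>
            previous.IsFaulted
                // A faulted task wraps the thrown exception in an AggregateException.
                ? "failed: " + previous.Exception.InnerException.Message
                : previous.Result + "-Baz");

        // Blocks until the continuation has produced its value.
        return second.Result;
    }
}
```

For example, `ContinuationSketch.Describe(() => "Bar")` follows the normal path, while a delegate that throws takes the faulted branch instead of crashing the caller.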

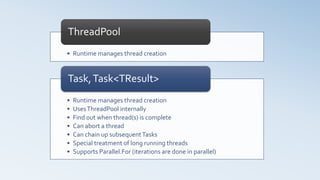

- 12. ThreadPool: • Runtime manages thread creation. Task, Task<TResult>: • Runtime manages thread creation • Uses ThreadPool internally • Find out when the work is complete • Can cancel work (cooperatively, through a CancellationToken, rather than by aborting the thread) • Can chain up subsequent Tasks • Special treatment of long-running work • Supports Parallel.For (iterations are done in parallel)

- 13. Race Conditions A race condition is logic that allows two or more threads to read and change shared state at the same time, resulting in an undesirable state. e.g. public class Foo { private static bool flag=true; private static int foo = 10; public static void DoStuff() { if (flag==true) { flag = false; /* ++ is not atomic: it reads, adds, then writes */ foo++; } } } [Thread A] check flag [Thread A] flag == true [Thread B] check the flag [Thread B] flag == true [Thread A] set flag = false [Thread B] set flag = false [Thread B] increments foo, foo == 11 [Thread A] increments foo, foo == 12 Result: foo was incremented twice even though the flag was meant to allow it only once. Because ++ is not atomic, the two increments can also overlap: if both threads read foo == 10 before either writes, one update is lost and foo ends at 11.
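One possible way to close this particular race without a lock is to make the check-and-clear of the flag a single atomic step. This is a sketch under assumptions: the `OneShotCounter` class and `RunConcurrently` helper are hypothetical, and the bool flag is swapped for an int because `Interlocked.Exchange` has no bool overload.

```csharp
using System.Threading;
using System.Threading.Tasks;

public sealed class OneShotCounter
{
    private int _flag = 1;   // 1 stands in for true, 0 for false
    private int _foo = 10;

    public int Foo
    {
        get { return Volatile.Read(ref _foo); }
    }

    public void DoStuff()
    {
        // Exchange writes 0 and returns the previous value in one atomic step,
        // so exactly one caller ever observes 1 and enters the branch.
        if (Interlocked.Exchange(ref _flag, 0) == 1)
        {
            Interlocked.Increment(ref _foo);
        }
    }

    // Calls DoStuff from many pool threads at once and reports the final value.
    public static int RunConcurrently(int calls)
    {
        var counter = new OneShotCounter();
        Parallel.For(0, calls, _ => counter.DoStuff());
        return counter.Foo;
    }
}
```

However many threads call `DoStuff`, only the first one through the exchange increments, so the counter moves from 10 to 11 exactly once.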

- 14. Safe vs Unsafe Code

- 15. Safe Code Two threads are operating on two separate instances of a class public class Foo { private int value = 13; public void DoStuff() { value=12; } } Foo foo = new Foo(); Thread aThread = new Thread(foo.DoStuff); Foo foo2 = new Foo(); Thread bThread = new Thread(foo2.DoStuff); aThread.Start(); bThread.Start();

- 16. Safe Code Two threads are operating on two separate instances of a class (using a Task) public class Foo { private int value = 13; public void DoStuff() { value=12; } } Foo foo = new Foo(); Task task1 = Task.Run(() => foo.DoStuff()); Foo foo2 = new Foo(); Task task2 = Task.Run(() => foo2.DoStuff());

- 17. Safe Code Two threads operating on the same instance without mutating anything. public class Foo { private int value = 13; public void DoStuff() { CallSomeService(value); } } Foo foo = new Foo(); Task task1 = Task.Run(() => foo.DoStuff()); Task task2 = Task.Run(() => foo.DoStuff());

- 18. Unsafe Code Two threads are operating on the same instance of a class public class Foo { private int value = 13; public void DoStuff() { if(value == 13) { value++; } } } Foo foo = new Foo(); Task task1 = Task.Run(() => foo.DoStuff()); Task task2 = Task.Run(() => foo.DoStuff()); Very similar to the race condition we saw in an earlier slide

- 19. Unsafe Code Two threads are calling a static method that mutates a static field. Static state is shared by every thread, so creating separate instances does not help public class Foo { private static int value = 13; public static void DoStuff() { if(value == 13) { value++; } } } Foo foo = new Foo(); Foo foo2 = new Foo(); /* C# only allows static members to be called through the type name */ Task task1 = Task.Run(() => Foo.DoStuff()); Task task2 = Task.Run(() => Foo.DoStuff());

- 20. Locks - Race conditions are prevented with synchronization: allowing only one thread at a time into shared code - The earlier examples of unsafe code were all examples of shared code without synchronization. Synchronization would make them safe. - Synchronization is achieved by a lock mechanism provided by C# - When a thread fails to obtain the lock because another thread has it, it blocks and waits. (Some locks can give up right away or after a period of time) - A thread waiting for a lock will have a state of WaitSleepJoin - The runtime decides which of the waiting threads gets the lock when it becomes available. - Multiple types of locks exist in C# to serve different use cases

- 21. Monitor - A Monitor is the simplest lock. It allows only one thread at a time access to shared code. - If an exception occurs inside a lock block, the lock is released automatically (the lock statement compiles to a try/finally) - A held lock never times out - A thread will wait indefinitely for a lock to be freed e.g. public class Foo { private bool flag=true; private int foo = 10; private readonly Object LOCK = new Object(); public void DoStuff() { lock(LOCK) { if (flag==true) { flag = false; foo++; } } } }

- 22. Lock - Monitor is the long form of lock() { } and exposes more features - Monitor.Enter locks the lock and Monitor.Exit unlocks it - Call Monitor.Exit from a finally block to make sure the lock is released no matter what; with explicit Monitor calls, an exception does not release the lock automatically public class Foo { private bool flag=true; private int foo = 10; private readonly Object LOCK = new Object(); public void DoStuff() { Monitor.Enter(LOCK); try { if (flag==true) { flag = false; foo++; } } finally { Monitor.Exit(LOCK); } } }

- 23. Monitor – Key methods. Enter(Object): • Locks the specified object. Exit(Object): • Releases the lock on the specified object. TryEnter(Object): • Attempts to lock the specified object • If successful, it grabs the lock • If not successful, it returns false and does not block. TryEnter(Object, Int32): • Like TryEnter(Object), but will wait up to the specified number of milliseconds to lock the object. Wait(Object): • Releases the lock on the object • Blocks the current thread until it reacquires the lock
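The timed `TryEnter` overload is the piece that lets a thread give up instead of waiting forever. A minimal sketch follows; the `GuardedCounter` class, its `TryIncrement` method and the `Demo` helper are hypothetical names, and the 50 ms timeout is an arbitrary choice for illustration.

```csharp
using System.Threading;

public sealed class GuardedCounter
{
    private readonly object _gate = new object();
    private int _value;

    // Returns false instead of blocking forever when the lock stays busy.
    public bool TryIncrement(int timeoutMs)
    {
        if (!Monitor.TryEnter(_gate, timeoutMs))
        {
            return false;
        }
        try
        {
            _value++;
            return true;
        }
        finally
        {
            Monitor.Exit(_gate); // always release a lock we managed to take
        }
    }

    // Holds the lock on a second thread while the calling thread tries to take it.
    public static bool[] Demo()
    {
        var counter = new GuardedCounter();
        bool whileHeld;
        using (var held = new ManualResetEventSlim())
        using (var release = new ManualResetEventSlim())
        {
            var holder = new Thread(() =>
            {
                lock (counter._gate)
                {
                    held.Set();     // tell the caller the lock is taken
                    release.Wait(); // keep holding it until told to let go
                }
            });
            holder.Start();
            held.Wait();
            whileHeld = counter.TryIncrement(50); // lock is busy, so this times out
            release.Set();
            holder.Join();
        }
        bool afterRelease = counter.TryIncrement(50); // lock is free again
        return new[] { whileHeld, afterRelease };
    }
}
```

The demo uses a second thread on purpose: Monitor is re-entrant, so a thread that already owns the lock would always succeed.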

- 24. ReaderWriterLockSlim - A lock that supports multiple concurrent threads reading data and one thread writing data - Ideal for infrequent writes. Can cause starvation of reader threads if there are too many threads trying to write - A lock can be held indefinitely - A thread can wait indefinitely for a lock - Used in caching: multiple threads reading the cache; one thread infrequently invalidating and updating - Supports timing out when attempting to obtain a lock. Who blocks, by use case: • Reader threads are executing & a new thread attempts to enter writer mode: the writer thread blocks • Writer thread is executing & new threads attempt to enter reader mode: the reader threads block • Writer thread is executing & new threads attempt to enter reader mode while there are queued threads waiting to enter writer mode: the reader threads block

- 25. ReaderWriterLockSlim private readonly ReaderWriterLockSlim rwLock = new ReaderWriterLockSlim(); public void ReadMyData() { rwLock.EnterReadLock(); try { /* do stuff */ } finally { rwLock.ExitReadLock(); } } public void WriteMyData() { rwLock.EnterWriteLock(); try { /* do stuff */ } finally { rwLock.ExitWriteLock(); } }
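To tie the pattern to the caching use case mentioned earlier, here is a sketch of a small read-mostly cache. The `PriceCache` class, its method names and the string/decimal types are assumptions chosen for illustration; the lock calls are the standard `ReaderWriterLockSlim` members.

```csharp
using System.Collections.Generic;
using System.Threading;

public sealed class PriceCache
{
    private readonly ReaderWriterLockSlim _rwLock = new ReaderWriterLockSlim();
    private readonly Dictionary<string, decimal> _prices = new Dictionary<string, decimal>();

    // Many threads may hold the read lock at the same time.
    public bool TryGet(string symbol, out decimal price)
    {
        _rwLock.EnterReadLock();
        try
        {
            return _prices.TryGetValue(symbol, out price);
        }
        finally
        {
            _rwLock.ExitReadLock();
        }
    }

    // Only one writer at a time; gives up after timeoutMs rather than waiting indefinitely.
    public bool TryUpdate(string symbol, decimal price, int timeoutMs)
    {
        if (!_rwLock.TryEnterWriteLock(timeoutMs))
        {
            return false;
        }
        try
        {
            _prices[symbol] = price;
            return true;
        }
        finally
        {
            _rwLock.ExitWriteLock();
        }
    }
}
```

The plain `Dictionary` is not thread safe on its own; here the lock is what makes concurrent reads alongside an occasional write safe.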

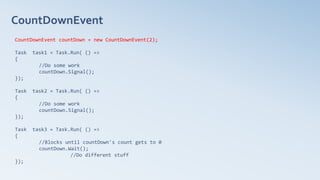

- 26. CountdownEvent - CountdownEvent is a synchronization tool used to signal a thread when it is safe to stop blocking and continue executing - Used to prevent a thread from executing a piece of logic until other threads have completed their work. - A CountdownEvent instance is created and seeded with an initial count; the thread that needs to wait for the other threads blocks by invoking Wait() on the CountdownEvent instance - As other threads complete their work, they invoke Signal() on the CountdownEvent instance to decrement its count - When the count reaches zero, the blocked thread is moved into a running state - Making a thread wait for others to complete can usually be achieved more simply by calling Wait() on all the Tasks that need to complete beforehand. - A better use is when a thread doesn't have to wait for other threads to complete, only for them to pass some point in their execution, i.e. after each thread invokes Foo.Bar(), it signals. When the count runs out, the blocked thread wakes up and continues its work.

- 27. CountdownEvent CountdownEvent countDown = new CountdownEvent(2); Task task1 = Task.Run( () => { /* Do some work */ countDown.Signal(); }); Task task2 = Task.Run( () => { /* Do some work */ countDown.Signal(); }); Task task3 = Task.Run( () => { /* Blocks until countDown's count gets to 0 */ countDown.Wait(); /* Do different stuff */ });
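The "pass some point in their execution" use can be sketched as follows: workers signal at a milestone and then carry on, while the waiting thread resumes as soon as all have signalled. `CountdownSketch`, `Run` and the log strings are hypothetical; `Thread.Sleep(20)` merely stands in for the remaining work.

```csharp
using System.Collections.Concurrent;
using System.Threading;
using System.Threading.Tasks;

public static class CountdownSketch
{
    // Returns the order in which milestones were logged.
    public static string[] Run(int workers)
    {
        var log = new ConcurrentQueue<string>();
        using (var ready = new CountdownEvent(workers))
        {
            var tasks = new Task[workers];
            for (int i = 0; i < workers; i++)
            {
                tasks[i] = Task.Run(() =>
                {
                    log.Enqueue("initialised");
                    ready.Signal();     // milestone reached, but the task is not finished yet
                    Thread.Sleep(20);   // stand-in for the rest of the work
                });
            }

            ready.Wait();               // released once every worker has signalled
            log.Enqueue("all workers initialised");

            Task.WaitAll(tasks);        // only now wait for the workers to finish completely
        }
        return log.ToArray();
    }
}
```

Waiting on the tasks themselves would only release the caller after the workers had finished entirely, which is the distinction the slide draws.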

- 28. Re-entrant Locks Monitor (and therefore the lock statement) is re-entrant; some other locks, such as ReaderWriterLockSlim with its default settings, are not. A thread is allowed into multiple blocks protected by a lock it already owns. Same lock, no need to wait for aLock a second time: private readonly Object aLock = new Object(); public void Foo() { lock(aLock) { Bar(); } } public void Bar() { lock(aLock) { /* do thread safe stuff */ } } Different lock, the thread MUST still acquire bLock: private readonly Object aLock = new Object(); private readonly Object bLock = new Object(); public void Foo() { lock(aLock) { Bar(); } } public void Bar() { lock(bLock) { /* do thread safe stuff */ } }

- 29. Deadlocks A deadlock occurs when threads acquire the same locks in different orders. ThreadA holds a lock ThreadB is waiting for, and ThreadB holds a lock ThreadA is waiting for. This will hang the application private readonly Object aLock = new Object(); private readonly Object bLock = new Object(); public void Foo() { lock(aLock) { Bar(); } } public void Bar() { lock(bLock) { Baz(); } } public void Baz() { lock(aLock) { } } [ThreadA] call Foo() and obtain aLock [ThreadB] call Bar() and obtain bLock [ThreadA] call Bar() and wait for ThreadB to release bLock [ThreadB] call Baz() and wait for ThreadA to release aLock ThreadA and ThreadB are DEADLOCKED
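A common remedy, not covered in the deck itself, is to make every thread take the locks in the same order. The sketch below assumes a hypothetical `Account` class in which each distinct account has a unique `Id`; the lower `Id` is always locked first, whichever direction the transfer goes.

```csharp
using System.Threading.Tasks;

public sealed class Account
{
    private readonly object _gate = new object();

    public Account(int id, decimal balance)
    {
        Id = id;
        Balance = balance;
    }

    public int Id { get; }
    public decimal Balance { get; private set; }

    public static void Transfer(Account from, Account to, decimal amount)
    {
        // Pick a global order (lower Id first) so two opposing transfers
        // can never each hold the lock the other one needs.
        Account first = from.Id <= to.Id ? from : to;
        Account second = from.Id <= to.Id ? to : from;

        lock (first._gate)
        {
            lock (second._gate)
            {
                from.Balance -= amount;
                to.Balance += amount;
            }
        }
    }

    // Runs transfers in both directions at once and returns the final balances.
    public static decimal[] Demo()
    {
        var a = new Account(1, 100m);
        var b = new Account(2, 100m);
        Parallel.For(0, 1000, i =>
        {
            if (i % 2 == 0) Transfer(a, b, 1m);
            else Transfer(b, a, 1m);
        });
        return new[] { a.Balance, b.Balance };
    }
}
```

Had `Transfer` locked `from` and then `to`, the opposing calls in `Demo` could reproduce the ThreadA/ThreadB standoff traced above.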

- 30. Volatile State changes to a field in one thread are not guaranteed to be visible to other threads working on the same instance because, in simplified terms, each thread may work from a cached copy of a field and may not see the update. The volatile keyword is a simple fix for this. [MAIN THREAD] Set valueA="FOO" valueB="BAR" [MAIN THREAD] Start [NEW THREAD] [NEW THREAD] Sleep for 6 seconds [MAIN THREAD] Set valueA="BAR" [NEW THREAD] Wake up [NEW THREAD] If valueA="BAR" set valueB="BAZ" [MAIN THREAD] valueA = "BAR" valueB="BAR" By declaring valueA and valueB with the volatile keyword, every time a thread reads one of them, it reads it from main memory and not its cache, ensuring it gets the most up-to-date value. The act of forcing the thread to read the value from main memory and not its cache is called a memory barrier (aka memory fence). Using a lock has the same effect, but is more costly performance-wise. Volatile is not a replacement for a lock: it affects visibility only and does not make compound operations such as foo++ atomic.

- 31. Output without memory barrier (volatile/lock): [Main Thread] _valueA = BAR [Main Thread] _valueB = BAR Output with memory barrier (volatile/lock): [Main Thread] _valueA = BAR [Main Thread] _valueB = BAZ

- 32. Volatile vs Lock. Memory barrier with volatile: private volatile String _valueA = "FOO"; private volatile String _valueB = "BAR"; public void Foo() { Thread myThread = new Thread(Bar); myThread.Start(); _valueA="BAR"; myThread.Join(); /* wait for Bar() to finish before printing */ Console.WriteLine("[Main Thread] _valueA = "+ _valueA); Console.WriteLine("[Main Thread] _valueB = "+ _valueB); } public void Bar() { Thread.Sleep(6000); if(_valueA.Equals("BAR")) { _valueB="BAZ"; } } Memory barrier with lock: private String _valueA = "FOO"; private String _valueB = "BAR"; private readonly Object LOCK = new Object(); public void Foo() { Thread myThread = new Thread(Bar); myThread.Start(); lock (LOCK) { _valueA="BAR"; } myThread.Join(); /* wait for Bar() to finish before printing */ Console.WriteLine("[Main Thread] _valueA = "+ _valueA); Console.WriteLine("[Main Thread] _valueB = "+ _valueB); } public void Bar() { Thread.Sleep(6000); lock(LOCK) { if(_valueA.Equals("BAR")) { _valueB="BAZ"; } } } A lock is not necessary in this case. Volatile will work well, but a lock will produce the same result
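A frequently cited place where volatile matters is a stop flag polled in a tight loop by one thread and set by another. This is a sketch with made-up names (`SpinningWorker`, `RequestStop`, `Demo`); the 50 ms sleep and 5 second join timeout are arbitrary illustration values.

```csharp
using System.Threading;

public sealed class SpinningWorker
{
    // Without volatile, the JIT is allowed to read this field once and keep
    // reusing that value inside the loop, so the loop might never notice the change.
    private volatile bool _stopRequested;

    public long Iterations { get; private set; }

    public void RequestStop()
    {
        _stopRequested = true;
    }

    public void Run()
    {
        while (!_stopRequested)
        {
            Iterations++;
        }
    }

    // Starts the loop on its own thread, asks it to stop, and reports whether it did.
    public static bool Demo()
    {
        var worker = new SpinningWorker();
        var thread = new Thread(worker.Run);
        thread.Start();
        Thread.Sleep(50);
        worker.RequestStop();
        return thread.Join(5000); // true if the loop observed the flag and exited in time
    }
}
```

A single flag written by one thread and read by another fits volatile well; anything that must read, modify and write as one unit still needs a lock or an Interlocked operation.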

- 33. Thread-Safe Data Structures - Thread safe means race conditions are guarded against when accessed by multiple threads - Everyday data structures are not thread safe - Instead of making your code synchronize access to a data structure, use a thread-safe one - Thread-safe data structures we will look at: ConcurrentDictionary, ConcurrentBag, BlockingCollection

- 34. ConcurrentDictionary - Thread-safe variant of a regular dictionary - Implements the IDictionary<TKey, TValue> interface, like System.Collections.Generic.Dictionary<TKey, TValue> - Read operations are performed lock-free as they are safe; a memory barrier is used to ensure up-to-date reads - Fine-grained locking for better performance: a single bucket is locked, not all of them
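As an illustration of letting the collection do the synchronizing, the sketch below counts words from several threads with `AddOrUpdate`. The `WordCountSketch` class and `Count` method are hypothetical names; `AddOrUpdate` and `Parallel.ForEach` are standard .NET.

```csharp
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Threading.Tasks;

public static class WordCountSketch
{
    public static ConcurrentDictionary<string, int> Count(IEnumerable<string> words)
    {
        var counts = new ConcurrentDictionary<string, int>();

        Parallel.ForEach(words, word =>
        {
            // Inserts 1 for a new key, otherwise stores current + 1.
            // The dictionary retries the update delegate if another thread got in first,
            // so the delegate should stay free of side effects.
            counts.AddOrUpdate(word, 1, (key, current) => current + 1);
        });

        return counts;
    }
}
```

The same loop over a plain `Dictionary<string, int>` would need an explicit lock around every read-modify-write.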

- 35. ConcurrentBag - Thread-safe, unordered collection of objects; no guarantee in what order items come out. - Does not provide index access to elements; it decides which element is returned to you - Useful when you want to obtain the next item to process and don’t care how long it has been waiting. TryPeek(out T result): • Attempts to obtain an item from the bag without removing it • Returns true if an item is found, false if the bag is empty • The item is stored in the out parameter. TryTake(out T result): • Attempts to obtain an item from the bag and removes it • Returns true if an item is found, false if the bag is empty • The item is stored in the out parameter. Add(T): • Adds an item to the bag • Allows duplicates and null

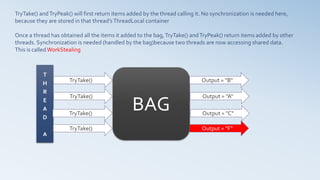

- 37. BAG Thread A calls Add("A"), Add("B"), Add("C"); Thread B calls Add("D"), Add("E"), Add("F"). Thread A's container then holds A | B | C and Thread B's container holds D | E | F. Items added to the bag are actually stored in a special container (ThreadLocal) inside the thread that added them.

- 38. BAG Thread A calls TryTake() four times: Output = "B", Output = "A", Output = "C", Output = "F". TryTake() and TryPeek() will first return items added by the thread calling them. No synchronization is needed here, because those items are stored in that thread’s ThreadLocal container. Once a thread has obtained all the items it added to the bag, TryTake() and TryPeek() return items added by other threads. Synchronization is needed (handled by the bag) because two threads are now accessing shared data. This is called work stealing.
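A small sketch of the Add and TryTake calls described above: two threads fill the bag and the caller then drains it, which means it ends up taking items that other threads added. The `BagSketch` class and `FillAndDrain` method are hypothetical names, and the order in which items come out is deliberately not relied on.

```csharp
using System.Collections.Concurrent;
using System.Threading.Tasks;

public static class BagSketch
{
    // Fills a bag from two concurrent actions, then drains it and counts the items.
    public static int FillAndDrain()
    {
        var bag = new ConcurrentBag<string>();

        Parallel.Invoke(
            () => { bag.Add("A"); bag.Add("B"); bag.Add("C"); },
            () => { bag.Add("D"); bag.Add("E"); bag.Add("F"); });

        int taken = 0;
        string item;
        // TryTake returns false once the bag is empty, which ends the loop.
        while (bag.TryTake(out item))
        {
            taken++;
        }
        return taken;
    }
}
```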

- 39. BlockingCollection - Supports adding and removing items in a thread-safe manner - The order in which items are retrieved depends on the type of collection used when you create a BlockingCollection - Use the constructor BlockingCollection<T>(IProducerConsumerCollection<T>) to set the backing collection type. Backing collections are: ConcurrentQueue<T> (FIFO, the default), ConcurrentStack<T> (LIFO), or any collection that implements the IProducerConsumerCollection<T> interface - Very useful in implementing the producer-consumer pattern

- 40. BlockingCollection. Take(): • Removes an item from the collection • Blocks until an item exists if the collection is empty. TryTake(out T): • Tries to remove an item from the collection and returns it through the out parameter • Doesn’t block if the collection is empty; returns false. TryTake(out T, Int32): • Removes an item from the collection • If the collection is empty, blocks until an item exists or the specified time (in milliseconds) has elapsed • Returns false if time runs out. Add(T): • Adds an item to the collection • If the collection was created with an upper bound and there is no space left, it blocks until there is space to add the item. TryAdd(T): • Tries to add an item to the collection • Returns false if it cannot and does not block. TryAdd(T, Int32): • Tries to add an item to the collection • Blocks for the specified amount of time if it cannot • Returns false if time runs out

- 41. Producer-Consumer BlockingCollection Messaging is a typical implementation of the producer-consumer pattern. BlockingCollection is perfect for this pattern. [ThreadA] 12:00 AM Listening for messages: blockingCollection.Take() [ThreadA] 12:00 AM No message to consume. Keep listening [blocking] [ThreadB] 12:01 AM Publishes message: blockingCollection.Add() [ThreadA] 12:01 AM New message to consume. Unblock & consume, start listening: blockingCollection.Take() [blocking] (No more messages) [ThreadA] 12:02 AM No message to consume. Keep listening [blocking] [ThreadA] 12:03 AM No message to consume. Keep listening [blocking] [ThreadA] 12:04 AM No message to consume. Keep listening [blocking] [ThreadB] 12:04 AM Publishes message: blockingCollection.Add() [ThreadC] 12:04 AM Publishes message: blockingCollection.Add() (Collection full. [ThreadC] BLOCKS. Message not added.) [ThreadA] 12:04 AM New message to consume. Unblock & consume, start listening: blockingCollection.Take() [blocking] (Collection empty. [ThreadC] RESUMES. Message added.) [ThreadA] 12:04 AM New message to consume. Unblock & consume, start listening: blockingCollection.Take() [blocking]
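The timeline above can be sketched with one producer and one consumer over a bounded collection. The `ProducerConsumerSketch` class, the `Run` method and the capacity of 2 are assumptions for illustration; `CompleteAdding` and `GetConsumingEnumerable` are real `BlockingCollection<T>` members that the slides do not mention, used here so the consumer knows when to stop.

```csharp
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Threading.Tasks;

public static class ProducerConsumerSketch
{
    // Sends the numbers 0..messages-1 through a bounded queue and returns what the consumer saw.
    public static List<int> Run(int messages)
    {
        var received = new List<int>(); // touched only by the consumer until both tasks finish

        using (var queue = new BlockingCollection<int>(boundedCapacity: 2))
        {
            Task consumer = Task.Run(() =>
            {
                // Blocks while the queue is empty; ends once adding is complete and the queue is drained.
                foreach (int message in queue.GetConsumingEnumerable())
                {
                    received.Add(message);
                }
            });

            Task producer = Task.Run(() =>
            {
                for (int i = 0; i < messages; i++)
                {
                    queue.Add(i); // blocks while the queue already holds 2 items
                }
                queue.CompleteAdding(); // tell the consumer no more messages are coming
            });

            Task.WaitAll(producer, consumer);
        }
        return received;
    }
}
```

With the default FIFO backing queue and a single consumer, messages arrive in the order they were published.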

- 42. Thread Local Storage - Storage container in each thread, accessible only to that thread* (there is one exception we see later) - In most cases, one thread cannot access another thread's local storage - Comes in 3 flavours: ThreadStatic variables, ThreadLocal<T> and Data Slots (not covered in this presentation). Use case: you have an object that is not thread safe, but want to avoid adding synchronization logic to protect it from concurrent access. Solution: store the object in thread local storage. When the same instance is used by multiple threads, each thread will have its own copy of what is in thread local storage.

- 43. ThreadStatic - Most basic form of thread local storage - A static variable that has a separate value in each thread that uses it - Must know what type you want to put in thread local storage at compile time public class Baz { [ThreadStatic] private static SomeObject someObject; public void Foo() { someObject = new SomeObject(); someObject.DoStuff(); } } Baz baz1 = new Baz(); Each thread that operates on baz1 will have its own copy of someObject, making it thread safe. WARNING: Never initialize the variable where you declare it. The initializer runs only once, on the first thread to use the class; that thread will work, but in every other thread the field is still null and using it will throw a NullReferenceException.
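One way to respect the warning above is to leave the field uninitialized and create the value on first use in each thread. A sketch, with `ThreadStaticSketch`, `Buffer` and `Demo` as hypothetical names and `StringBuilder` standing in for any object that is not thread safe:

```csharp
using System;
using System.Text;
using System.Threading;

public static class ThreadStaticSketch
{
    [ThreadStatic]
    private static StringBuilder _buffer; // deliberately no initializer

    // Each thread sees null the first time and creates its own instance.
    private static StringBuilder Buffer
    {
        get { return _buffer ?? (_buffer = new StringBuilder()); }
    }

    // Two threads append to "the same" static field and report what each one sees.
    public static string[] Demo()
    {
        var results = new string[2];

        var first = new Thread(() =>
        {
            Buffer.Append("A");
            Buffer.Append("A");
            results[0] = Buffer.ToString();
        });
        var second = new Thread(() =>
        {
            Buffer.Append("B");
            results[1] = Buffer.ToString();
        });

        first.Start();
        second.Start();
        first.Join();
        second.Join();
        return results;
    }
}
```

Neither thread ever sees the other's text, because each one is working on its own `StringBuilder`.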

- 44. ThreadLocal<T> - A more versatile form of thread local storage - Supports generics - Lazily initialized: Bar won't be instantiated for a thread until that thread first reads threadLocal.Value - Each thread will initialize Bar just fine, unlike ThreadStatic where you need to check if it was initialized public class Baz<T> { private ThreadLocal<Bar<T>> threadLocal = new ThreadLocal<Bar<T>>(() => new Bar<T>()); public void Foo() { Bar<T> myBar = threadLocal.Value; myBar.DoStuff(); } } Baz<Foo> baz1 = new Baz<Foo>(); Each thread that operates on the baz1 instance will get its own copy of Bar, even though they all use the same instance of Baz.
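The per-thread copies can be observed directly. In this sketch the `ThreadLocalSketch` class and `Demo` method are hypothetical, and a `List<int>` plays the role of Bar:

```csharp
using System.Collections.Generic;
using System.Threading;

public static class ThreadLocalSketch
{
    // The factory runs once per thread, the first time that thread reads Value.
    private static readonly ThreadLocal<List<int>> Items =
        new ThreadLocal<List<int>>(() => new List<int>());

    // Returns how many items each of two threads sees in "its" list.
    public static int[] Demo()
    {
        var sizes = new int[2];

        var first = new Thread(() =>
        {
            Items.Value.Add(1);
            Items.Value.Add(2);
            sizes[0] = Items.Value.Count;
        });
        var second = new Thread(() =>
        {
            Items.Value.Add(3);
            sizes[1] = Items.Value.Count;
        });

        first.Start();
        second.Start();
        first.Join();
        second.Join();
        return sizes;
    }
}
```

Unlike the ThreadStatic version, no null check is needed: the factory delegate passed to the constructor covers initialization for every thread.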

- 45. END
