Continuous Delivery: automated testing, continuous integration and continuous deployment.

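- 1. Continuous Delivery: automated testing, continuous integration and continuous deployment.
  - Continuous delivery: releasing high-quality software continuously through automated testing and continuous integration
  - Jimmy Lai, 2014.10.15
  - Jimmy Lai is a Python enthusiast working in natural language processing and machine learning. More of his talks: http://www.slideshare.net/jimmy_lai/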

- 2. Outline
  1. Concepts of continuous delivery
  2. Tools for continuous delivery
  3. Building a deployment pipeline, using a Python project as the example

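- 3. Continuous Delivery
  - Software is done only when it has been released
  - Committing the code alone does not make it done
  - The whole process from committing code to releasing software must be fully automated
  - The release and deployment process must be repeatable, to ensure software quality
  - Artifacts such as source code and configuration files must be under version control so that changes can be traced and rolled back quickly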

- 4. Version Control
  - What needs to be under version control:
    - Source code
    - Package dependency management
    - System configuration
  - Mainstream tool: Git

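- 5. Continuous Integration
  - In a team developing together, continuously integrate everyone's changes; the more often you integrate, the fewer serious conflicts arise
  - Requirements:
    - Commit source code frequently
    - Automated tests
    - Short build-and-test cycles, so that feedback arrives early
  - Mainstream tool: Jenkins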

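- 6. Testing
  - Automated testing
    - Unit tests: target functions/classes
    - Functional tests: target customer requirements
    - System integration tests: target the integration of subsystems
    - Performance and load tests
    - Security tests
  - Manual testing
    - Exploratory testing, user acceptance testing
  - Python testing tool: nosetests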

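- 7. Deployment Pipeline
  1. Build and unit tests (when code is committed)
  2. Automated functional tests
  3. User acceptance tests (by test engineers)
  4. Release

  If any stage fails, the developers are notified automatically; they should fix the deployment pipeline before continuing to develop new features.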
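The four-stage pipeline above, where any failure stops the line and notifies developers, can be sketched in Python as a loop over stages. This is a minimal illustration, not how Jenkins implements it: the stage names, the lambdas standing in for real build steps, and the print-based notify_developers are all hypothetical.

```python
"""Minimal deployment-pipeline runner (illustrative sketch, not Jenkins).

Each stage is a (name, callable) pair whose callable returns True on success.
Stages run in order; the first failure stops the pipeline and notifies the
developers, mirroring the rule that the pipeline is fixed before new features.
"""
from typing import Callable, List, Tuple

Stage = Tuple[str, Callable[[], bool]]


def notify_developers(stage_name: str) -> None:
    # Hypothetical notifier; a real setup might send an email or chat message.
    print(f"[pipeline] stage '{stage_name}' failed: fix the pipeline first")


def run_pipeline(stages: List[Stage],
                 notify: Callable[[str], None] = notify_developers) -> List[str]:
    """Run stages in order and return the names of the stages that passed."""
    passed: List[str] = []
    for name, stage in stages:
        try:
            ok = stage()
        except Exception:
            ok = False  # a crashing stage counts as a failed stage
        if not ok:
            notify(name)
            break  # later stages never run on a broken build
        passed.append(name)
    return passed


if __name__ == "__main__":
    # Hypothetical stand-ins for the four stages on the slide.
    stages = [
        ("build_and_unit_tests", lambda: True),
        ("functional_tests", lambda: True),
        ("user_acceptance_tests", lambda: False),  # simulated failure
        ("release", lambda: True),
    ]
    print("passed:", run_pipeline(stages))
```

Passing notify as a parameter keeps the runner testable: a test can hand in a list's append method and inspect which stage failed.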

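- 8. Git Version Control
  - Distributed version control
  - Every machine has its own working directory and repository
  - Any machine's repository can be synchronized with any other machine's
  - During development you commit to the local repository first, with no network connection required
  - http://git-scm.com/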

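- 9. Git in Practice
  - Working directory <-> staging area <-> repository
  - The working directory is the state of a branch plus any new modifications
  - git add: add the current state of files to the staging area
  - git commit -m "commit message": save all changes in the staging area to the repository
  - git push origin master: push the local master branch to the master branch of the remote (origin) repository
  - git pull origin master: fetch the updates on the remote (origin) master branch and merge them into the current branch
  - http://git-scm.com/docs/gittutorial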

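- 10. Git Code Review
  - Use GitHub code review to ensure software quality:
    1. git checkout -b branch_a: create a local branch and switch to it
    2. git commit: commit changes on the local branch
    3. git push origin branch_a: push the changes to GitHub
    4. Open a pull request on GitHub
    5. The reviewer reviews the changes
    6. Once the review comments are addressed, merge the branch back into master
  - The master branch is used for releasing software; new changes are made on other branches first and merged only after review
  - Code review helps team members learn from each other and raises code quality
  - http://nvie.com/posts/a-successful-git-branching-model/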

- 11. Git Code Review Demo https://github.com/jimmylai/continuous_delivery_demo

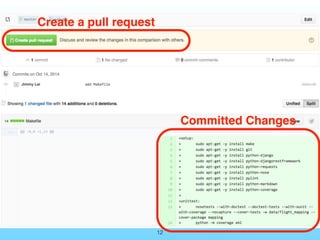

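- 12. Git Code Review Example
  - Task: write a Makefile that sets up the system packages, and put it through code review
  - Steps:
    1. Create a new branch in git
    2. Commit the changes on the new branch
    3. Push to GitHub and open a pull request
    4. Merge once the review approves it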

- 13. Committed changes; create a pull request

- 15. Post a review message

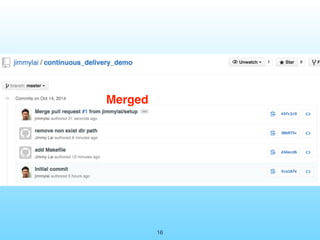

- 16. Update according to the comments; merge

- 17. Merged

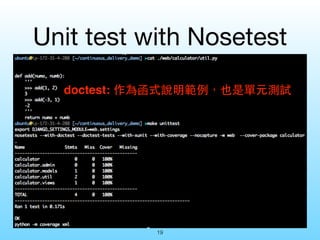

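- 18. Unit Testing
  - Unit tests: write test cases for units of code (functions, classes)
  - Code under test must have few dependencies on other code to be easy to test; this is usually achieved by wrapping logic in suitable functions. When necessary, use mocks to isolate dependencies such as database connections or external I/O.
  - Python testing tool: nosetests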
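The mocking advice above can be illustrated with Python's standard unittest.mock module. The functions report() and read_setting() are hypothetical examples: the first receives its database dependency as a parameter so a test can pass a Mock, and the second is tested by patching the built-in open() so no real file is read.

```python
"""Isolating dependencies with unittest.mock (illustrative sketch).

report() and read_setting() are hypothetical functions used only to show two
common patterns: passing a dependency in so a test can hand over a Mock, and
patching a built-in such as open() to avoid real file I/O.
"""
import unittest
from unittest import mock


def report(db) -> str:
    # db is any object with a count_users() method, e.g. a database wrapper.
    return f"{db.count_users()} users"


def read_setting(path: str):
    # Reads the first "key=value" line of a config file.
    with open(path) as fh:
        key, value = fh.readline().strip().split("=", 1)
    return key, value


class IsolationTest(unittest.TestCase):
    def test_report_with_mock_db(self):
        fake_db = mock.Mock()
        fake_db.count_users.return_value = 42
        self.assertEqual(report(fake_db), "42 users")
        fake_db.count_users.assert_called_once_with()

    def test_read_setting_without_a_real_file(self):
        fake_open = mock.mock_open(read_data="timeout=30\n")
        with mock.patch("builtins.open", fake_open):
            self.assertEqual(read_setting("app.cfg"), ("timeout", "30"))
        fake_open.assert_called_once_with("app.cfg")


if __name__ == "__main__":
    unittest.main()
```

Injecting the dependency (first test) is usually simpler than patching; patching (second test) is the fallback when the code under test reaches for a global such as open().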

- 19. Unit Test with nosetests https://github.com/jimmylai/continuous_delivery_demo/tree/master/web/calculator

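- 20. Unit Test with nosetests Example
  - Task: write a function that takes two integers as parameters and returns their sum
  - Steps:
    1. Write the function
    2. Use doctest as both documentation and test
    3. Write test functions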

- 21. Unit test with nosetests: a doctest serves as a usage example in the function's documentation and is also a unit test

- 22. Name test files xxx_test.py and test functions test_xxx(), and nosetests will find and run them

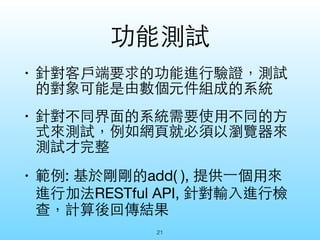

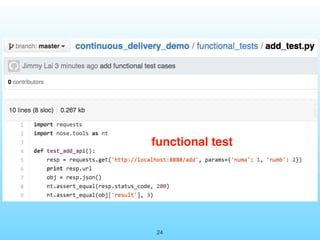
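A sketch of the add() example from slides 20 to 22 follows. The module name is taken from the demo repository path (web/calculator); the specific doctest cases and test function body are assumptions. Note that nosetests runs the embedded doctests only when invoked with --with-doctest (pytest needs --doctest-modules), while test_add() is discovered by its name.

```python
"""calculator.py: add() documented and tested with doctest (illustrative sketch).

The module name follows the demo repository path (web/calculator); the test
function below would normally live in a separate file such as
calculator_test.py so that nosetests discovers it by name.
"""
import doctest


def add(a: int, b: int) -> int:
    """Return the sum of two integers.

    The examples below are documentation and doctests at the same time:

    >>> add(1, 2)
    3
    >>> add(-4, 4)
    0
    """
    return a + b


# calculator_test.py (shown inline here): the test_ prefix lets the runner find it.
def test_add():
    assert add(2, 3) == 5
    assert add(0, 0) == 0
    assert add(-1, -1) == -2


if __name__ == "__main__":
    doctest.testmod()  # silent when every docstring example passes
    test_add()
    print("all tests passed")
```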

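- 23. Functional Testing
  - Verifies the features the customer requires; the object under test may be a system made up of several components
  - Systems with different interfaces need different testing approaches; for example, a web page is only fully tested through a browser
  - Example: building on the earlier add(), provide a RESTful API for addition that validates its input, computes the sum, and returns the result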

- 24. Functional Test of RESTful API https://github.com/jimmylai/continuous_delivery_demo/tree/master/functional_tests

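- 25. Functional Test of RESTful API Example
  - Task: implement a RESTful API that takes two parameters via HTTP GET, sums them with add() from the previous example, and returns the result as JSON
  - Steps:
    1. Write the API with Django REST framework
    2. Validate that both parameters are present and are integers
    3. Return the computed result
    4. Test it by having a client send an HTTP GET request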

- 26. Django RESTful API - View

- 28. Start the web server and run the functional test; the test succeeds
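The same four steps (serve the API, validate both parameters, return JSON, test with a real HTTP GET) can be sketched without Django. This version swaps in Python's standard http.server in place of Django REST framework so it is self-contained; the /add path, the a and b parameter names, and the 400/404 error bodies are assumptions, not the demo repository's actual API.

```python
"""Functional test for a tiny addition API (stdlib sketch, not Django REST framework).

The slides build the API with Django REST framework; to stay self-contained,
this sketch swaps in http.server. The /add path and the a and b parameter
names are assumptions.
"""
import json
import threading
import urllib.error
import urllib.parse
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


def add(a: int, b: int) -> int:
    return a + b


def parse_int_params(query: str):
    """Return (a, b) as ints, or None if either is missing or not an integer."""
    params = urllib.parse.parse_qs(query)
    try:
        return int(params["a"][0]), int(params["b"][0])
    except (KeyError, ValueError):
        return None


class AddHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        url = urllib.parse.urlparse(self.path)
        if url.path != "/add":
            status, body = 404, {"error": "not found"}
        else:
            parsed = parse_int_params(url.query)
            if parsed is None:
                status, body = 400, {"error": "a and b must be integers"}
            else:
                status, body = 200, {"result": add(*parsed)}
        payload = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)

    def log_message(self, format, *args):
        pass  # keep test output quiet


# Ignore any proxy settings so requests go straight to the local test server.
_OPENER = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def get_json(base_url: str, path: str):
    """Client side of the functional test: send HTTP GET, return (status, JSON body)."""
    try:
        with _OPENER.open(base_url + path) as resp:
            return resp.status, json.loads(resp.read())
    except urllib.error.HTTPError as err:  # raised for 4xx/5xx responses
        return err.code, json.loads(err.read())


def run_functional_test() -> None:
    server = HTTPServer(("127.0.0.1", 0), AddHandler)  # port 0: OS picks a free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    base_url = f"http://127.0.0.1:{server.server_address[1]}"
    try:
        assert get_json(base_url, "/add?a=2&b=3") == (200, {"result": 5})
        assert get_json(base_url, "/add?a=2&b=x")[0] == 400
        assert get_json(base_url, "/add?a=2")[0] == 400
        assert get_json(base_url, "/sum?a=1&b=1")[0] == 404
    finally:
        server.shutdown()
        server.server_close()


if __name__ == "__main__":
    run_functional_test()
    print("functional test passed")
```

The test talks to the server only over HTTP, which is what distinguishes it from the unit test of add(): it exercises routing, validation, and JSON encoding together.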

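- 29. Jenkins
  - Popular continuous integration software
  - Triggers builds on configured conditions (e.g., when code is committed, or on a schedule); if a build fails, it notifies the developers immediately, and if it succeeds, it can move on to the next build stage or release automatically
  - Build results are presented as web-page reports
  - Build logs and artifacts are archived, which makes it easy to trace back
  - http://jenkins-ci.org/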

- 30. Unit Tests on Commit with Jenkins

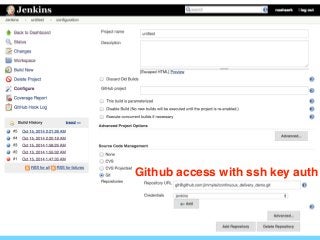

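- 31. Unit Tests on Commit with Jenkins Example
  - Task: create a Jenkins job that automatically runs the unit tests whenever new code is committed and produces a test result report
  - Steps:
    1. Set up the Jenkins job and its GitHub authorization
    2. Configure GitHub to trigger Jenkins when there are new changes
    3. Configure the test result report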

- 32. GitHub access with SSH key authentication

- 33. Jenkins settings: build when new changes come in; GitHub settings

- 34. Unit test coverage; unit test report

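- 36. Automated Deployment
  - Automatically install and configure the built software in different environments, for further testing and for delivering the product
  - Different environments may have different system dependencies and different configurations
  - Configuration files and deployment scripts should both be under version control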

- 37. Deploy after Unit Tests Succeed

- 38. Deploy after Unit Tests Succeed Example
  - Task: use Apache as the web server, and configure a Jenkins job that deploys to the Apache server once the unit tests pass
  - Steps:
    1. Prepare the Apache config file
    2. Prepare the deploy scripts
    3. Configure the Jenkins job

- 39. Apache Config

- 40. Jenkins setting; deploy script
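A deploy script in the spirit of the automated-deployment slide might copy the same build into a per-environment location and write that environment's settings alongside it. The Python sketch below assumes everything specific: the environment names, the settings keys and host names, and the target directory layout are hypothetical, and the deck's actual script (which targets Apache) is not reproduced here.

```python
"""Deploy a build into per-environment directories (illustrative sketch).

Environment names, settings, and the directory layout are hypothetical; a real
setup would, for example, copy into the directory Apache serves from and then
reload the server. Both this script and ENVIRONMENTS belong under version control.
"""
import json
import shutil
import tempfile
from pathlib import Path

# Hypothetical per-environment settings: same build, different configuration.
ENVIRONMENTS = {
    "testing": {"DEBUG": True, "DATABASE_HOST": "db.test.internal"},
    "production": {"DEBUG": False, "DATABASE_HOST": "db.prod.internal"},
}


def deploy(build_dir: Path, env: str, target_root: Path) -> Path:
    """Copy build_dir into target_root/env and write that environment's settings."""
    if env not in ENVIRONMENTS:
        raise ValueError(f"unknown environment: {env}")
    target = target_root / env
    if target.exists():
        shutil.rmtree(target)  # replace the previous release wholesale
    shutil.copytree(build_dir, target)  # also creates missing parent directories
    (target / "settings.json").write_text(json.dumps(ENVIRONMENTS[env], indent=2))
    return target


if __name__ == "__main__":
    # Demo in a throwaway directory: one fake build deployed to every environment.
    with tempfile.TemporaryDirectory() as tmp:
        build = Path(tmp, "build")
        build.mkdir()
        (build / "app.py").write_text("print('hello')\n")
        for name in ENVIRONMENTS:
            deployed = deploy(build, name, Path(tmp, "srv"))
            print(name, sorted(p.name for p in deployed.iterdir()))
```

Keeping one build and varying only the settings per environment is what lets the same tested artifact move from testing to production unchanged.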

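- 41. Deployment Pipeline
  1. unittest: triggered when an engineer commits code
  2. deploy
  3. functional test
  4. release, …

  If any stage fails, the developers are notified automatically; they should fix the deployment pipeline before continuing to develop new features.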
