Contrastive Learning with Adversarial Perturbations for Conditional Text Generation

0 likes277 views

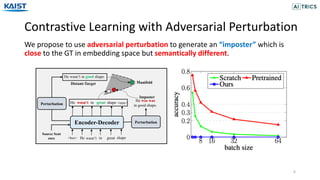

The document presents a novel contrastive learning framework for conditional text generation that addresses the exposure bias problem in sequence-to-sequence models. By leveraging adversarial perturbations to create challenging negative and positive examples, the method demonstrates improved performance on various tasks including machine translation, question generation, and summarization compared to existing baselines. Experimental results indicate the proposed approach outperforms traditional T5 models across key metrics, although future work will focus on enhancing sample efficiency and the quality of generated examples.


Experimental Setup (2)

3) Baselines

• T5-MLE: The T5 model trained with maximum likelihood estimation (MLE).

• T5-𝛼-MLE: The T5 model trained with MLE, but decoding the target sequence with temperature scaling 𝛼 in the softmax.

• T5-MLE-contrastive: Naïve contrastive learning combined with MLE.

[Caccia 2020] Caccia et al., Language GANs Falling Short, ICLR 2020
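The temperature scaling used by the T5-𝛼-MLE baseline can be illustrated with a small sketch. It assumes the common convention of dividing the logits by 𝛼 before the softmax; the slides do not spell out the exact form, and the function name `softmax_with_temperature` is hypothetical rather than taken from the authors' code.

```python
import math


def softmax_with_temperature(logits, alpha=1.0):
    """Softmax over logits divided by a temperature alpha.

    Assumption: alpha divides the logits, so alpha < 1 sharpens the
    distribution and alpha > 1 flattens it.
    """
    if alpha <= 0:
        raise ValueError("alpha must be positive")
    scaled = [z / alpha for z in logits]
    # Subtract the maximum before exponentiating for numerical stability.
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Toy next-token logits, made up for illustration.
logits = [2.0, 1.0, 0.0]
base = softmax_with_temperature(logits, alpha=1.0)
sharp = softmax_with_temperature(logits, alpha=0.5)
flat = softmax_with_temperature(logits, alpha=2.0)
```

Under this convention, a smaller 𝛼 concentrates probability on the highest-scoring token, while a larger 𝛼 spreads it more evenly, which trades output quality against diversity at decoding time.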

Experimental Setup (2)

3) Baselines (continued)

• T5-SSMBA [Ng 2020]: Generates additional examples by denoising and reconstructing target sentences with a masked language model.

• T5-WordDropout Contrastive [Yang 2019]: Generates negative examples by removing the most frequent word from the target sentence.

• T5-R3F [Aghajanyan 2021]: Adds Gaussian noise and enforces a consistency loss.

[Ng 2020] Ng et al., SSMBA: Self-Supervised Manifold Based Data Augmentation for Improving Out-of-Domain Robustness, EMNLP 2020

[Yang 2019] Yang et al., Reducing Word Omission Errors in Neural Machine Translation: A Contrastive Learning Approach, ACL 2019

[Aghajanyan 2021] Aghajanyan et al., Better Fine-Tuning by Reducing Representational Collapse, ICLR 2021
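As a rough illustration of the word-dropout negatives described for the T5-WordDropout Contrastive baseline, the sketch below removes one word from a tokenized target sentence. Several details are assumptions, not taken from the slides: word frequency is counted over a toy corpus, only one occurrence is removed, ties go to the earliest position, and the helper name `drop_most_frequent_word` is hypothetical.

```python
from collections import Counter


def drop_most_frequent_word(target_tokens, corpus_counts):
    """Return a negative example with one high-frequency word removed.

    Assumption: "most frequent" refers to corpus-level counts, and only
    the first position holding the most frequent word is dropped.
    """
    if not target_tokens:
        return []
    # max() returns the first index among ties; a Counter yields 0 for
    # words it has never seen.
    drop_at = max(
        range(len(target_tokens)),
        key=lambda i: corpus_counts[target_tokens[i]],
    )
    return target_tokens[:drop_at] + target_tokens[drop_at + 1:]


# Toy corpus, made up for illustration.
corpus = ["the cat sat on the mat", "a dog sat"]
counts = Counter(word for sentence in corpus for word in sentence.split())

target = "the cat sat on the mat".split()
negative = drop_most_frequent_word(target, counts)
```

Such a negative differs from the target by a single omitted word, so it stays close to the reference in surface form; the contrastive objective is then meant to score the original target above this corrupted version.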


Maximum likelihood-set - introduction

Maximum likelihood-set - introductionYusuke Matsubara The document discusses the Maximum Likelihood Set (MLS) approach for language modeling. MLS finds all possible probability mass functions (pmfs) that make the observed data most probable compared to any other dataset of the same size. This addresses issues with maximum likelihood estimation, which can underestimate probabilities of unseen words. MLS incorporates prior knowledge through a reference pmf and has competitive performance on standard benchmarks compared to techniques like Witten-Bell and Kneser-Ney smoothing.

Analyse de sentiment et classification par approche neuronale en Python et Weka

Analyse de sentiment et classification par approche neuronale en Python et WekaPatrice Bellot - Aix-Marseille Université / CNRS (LIS, INS2I)

Contrastive Learning with Adversarial Perturbations for Conditional Text Generation

- 1. Contrastive Learning with Adversarial Perturbations for Conditional Text Generation. Seanie Lee¹*, Dong Bok Lee¹*, Sung Ju Hwang¹,² (¹KAIST, Daejeon, South Korea; ²AITRICS, Seoul, South Korea)

- 2. Pretrained Language Model: Pretraining a language model on a large corpus and fine-tuning it for a target task requires a large amount of labeled data.

- 3. Conditional Text Generation: Conditional text generation is the task of generating another sequence from a given sequence. Generally, an encoder-decoder architecture is used. Figure: an encoder-decoder that embeds and encodes “the blue house” and decodes “la maison bleu <eos>”.

- 4. Exposure Bias: Seq2seq models trained with teacher forcing often suffer from exposure bias, which hurts generalization to unseen inputs. Figure: an encoder-decoder translating “the blue house”; the ground truth is “la maison bleu <eos>”, while the prediction is “le maison bleu <eos>”.
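The gap between teacher forcing and free-running decoding can be illustrated with a toy sketch. Everything here is hypothetical: the "decoder" is just a lookup table from the previous token to the next one, and it contains a single deliberate mistake. Under teacher forcing that mistake stays isolated, because the next step is fed the ground-truth token; when the model consumes its own predictions, the mistake derails the rest of the sequence.

```python
# Toy illustration of exposure bias. The lookup-table "decoder" and all names
# are hypothetical; a real decoder would be a neural network.
toy_decoder = {
    "<bos>": "le",      # the single mistake: the ground truth starts with "la"
    "la": "maison",
    "le": "chien",      # continuation never exercised under teacher forcing
    "maison": "bleu",
    "chien": "<eos>",
    "bleu": "<eos>",
}

ground_truth = ["la", "maison", "bleu", "<eos>"]


def teacher_forced(decoder, target):
    """Predict each token from the ground-truth previous token (training regime)."""
    previous = ["<bos>"] + target[:-1]
    return [decoder[token] for token in previous]


def free_running(decoder, max_len=10):
    """Predict each token from the model's own previous prediction (inference regime)."""
    output, token = [], "<bos>"
    for _ in range(max_len):
        token = decoder[token]
        output.append(token)
        if token == "<eos>":
            break
    return output


tf_out = teacher_forced(toy_decoder, ground_truth)
fr_out = free_running(toy_decoder)
print(tf_out)  # ['le', 'maison', 'bleu', '<eos>']  (one isolated error)
print(fr_out)  # ['le', 'chien', '<eos>']           (the error compounds)
```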

- 5. Contrastive Learning Framework: We propose to contrast a ground-truth (GT) pair with negative pairs to learn a better representation of the target sentence. Randomly sampled negative examples are easily discriminated by the pretrained language model, so a large batch size is required to mine meaningful negative examples. Figure: an encoder-decoder takes the source sentence and the GT target sentence “He wasn’t in great shape”; the figure also shows the sentence “I cannot do that.”

- 6. Contrastive Learning with Adversarial Perturbation: We propose to use adversarial perturbation to generate an “imposter”, which is close to the GT in embedding space but semantically different. Figure: the encoder-decoder representation of the GT target “He wasn’t in great shape” on a manifold, with perturbations producing an imposter and a distant target; the example sentences shown are “He wasn’t in good shape.” and “He was was in good shape.”

- 7. Contrastive Learning with Adversarial Perturbation: Conversely, we generate a “distant target”, which is far away from the source sentence in embedding space but semantically similar. Figure: same diagram as on slide 6.

- 8. Contrastive Learning with Adversarial Perturbation: We push the imposter and the other negative examples away from the source, and pull the distant target and the GT target toward the source. Figure: source, target, distant target, and imposter in embedding space, with push and pull arrows labeled Max and Min.

- 9. Contrastive Learning Objective: Given a pair of source and target sentences $(x^{(i)}, y^{(i)})$, we randomly sample $y^{(j)}$ with $i \neq j$ and use them as a set of negative examples $S$. As in SimCLR, we maximize the cosine similarity between the source and the target and minimize it between the source and the negative examples. Example: $x^{(i)}$ = “Nu era într-o formă prea bună.”, $y^{(i)}$ = “He wasn’t in great shape.”, $y^{(j)}$ = “But I cannot do it anymore.”, $y^{(k)}$ = “By mid-July, it was 40 percent.” Reference: Chen et al. "A simple framework for contrastive learning of visual representations." ICML 2020.
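A minimal sketch of such a contrastive objective, written as a loss to minimize, might look as follows. The slide's formula is not reproduced in the transcript, so the exact form is an assumption: this version uses the common InfoNCE / SimCLR-style formulation with the paired target included in the denominator, and a temperature `tau` that the slide does not mention. The vectors are made-up stand-ins for pooled sentence representations.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def contrastive_loss(source, target, negatives, tau=0.1):
    """Negative log softmax probability of the paired target.

    Assumption: the paired target appears in the denominator together with
    the negatives (InfoNCE style); tau is a hypothetical temperature.
    """
    pos = math.exp(cosine_similarity(source, target) / tau)
    neg = sum(math.exp(cosine_similarity(source, n) / tau) for n in negatives)
    return -math.log(pos / (pos + neg))


z_x = [1.0, 0.0]                 # source representation (made up)
z_y = [0.9, 0.1]                 # paired target, close to the source
S = [[0.0, 1.0], [-1.0, 0.2]]    # randomly sampled negatives

loss_aligned = contrastive_loss(z_x, z_y, S)
loss_misaligned = contrastive_loss(z_x, [0.0, 1.0], S)
print(loss_aligned < loss_misaligned)  # the aligned pair gets the lower loss
```

Minimizing this loss raises the similarity of the paired target relative to the negatives, which matches the maximize/minimize description on the slide.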

- 10. Generation of Imposter: We add a small perturbation to the hidden representation of the target sentence to generate an imposter, using a linear approximation as in Goodfellow et al. (2015). Figure: the encoder-decoder pools the representations of the source sentence “Nu era într-o formă prea bună.” and the target sentence “He wasn’t in great shape.”; the perturbed target decodes to “He was was in great shape.”; the panels are labeled Objective (Min) and Linear Approximation. Reference: Goodfellow et al. "Explaining and harnessing adversarial examples." ICLR 2015.
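The linear approximation can be sketched as a single normalized gradient step of size `epsilon` that lowers an objective. The slide's formula is not in the transcript, so this is only an illustration under assumptions: the objective is taken to be a log-likelihood of the target given its hidden representation, and a toy quadratic function (with a hypothetical center `c`) stands in for the real model.

```python
import math


def toy_log_likelihood(h, c):
    """Stand-in for log p(y | x; h): highest when h equals c (hypothetical)."""
    return -sum((hi - ci) ** 2 for hi, ci in zip(h, c))


def toy_gradient(h, c):
    """Analytic gradient of toy_log_likelihood with respect to h."""
    return [-2.0 * (hi - ci) for hi, ci in zip(h, c)]


def make_imposter(h, grad, epsilon):
    """One-step perturbation of L2 norm epsilon that decreases the objective.

    Linearizing the objective around h, the minimizer inside the epsilon-ball
    is the step along the negative normalized gradient.
    """
    norm = math.sqrt(sum(g * g for g in grad))
    return [hi - epsilon * g / norm for hi, g in zip(h, grad)]


h = [0.5, -0.2, 0.1]   # hidden representation of the target (made up)
c = [0.6, 0.0, 0.0]    # hypothetical optimum of the toy objective
epsilon = 0.1

imposter = make_imposter(h, toy_gradient(h, c), epsilon)
shift = math.sqrt(sum((a - b) ** 2 for a, b in zip(imposter, h)))
print(round(shift, 6))                                          # 0.1
print(toy_log_likelihood(imposter, c) < toy_log_likelihood(h, c))  # True
```

The perturbed vector stays within distance `epsilon` of the original, which is what keeps the imposter close to the GT in embedding space.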

- 11. Generation of Distant Target: We add a large perturbation to the target embedding so that it moves far away from the source sentence while preserving the semantics of the target sentence. Figure: two steps, Maximize Distance (Max) and Semantic Preservation; the encoder and decoder pool the representations of the source sentence “Nu era într-o formă prea bună.” and the target sentence “He wasn’t in great shape.”; the perturbed target decodes to “He wasn’t in good shape.”
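The first step, moving the target representation away from the source, can be sketched as a large normalized gradient step that lowers the cosine similarity to the source. This is an assumption-laden illustration: the step size `eta` and the vectors are made up, and the second step (semantic preservation) is deliberately not implemented here because it requires the model's output distribution.

```python
import math


def cosine_similarity(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def cosine_gradient(source, h):
    """Gradient of cos(source, h) with respect to h."""
    norm_s = math.sqrt(sum(a * a for a in source))
    norm_h = math.sqrt(sum(b * b for b in h))
    cos = cosine_similarity(source, h)
    return [s / (norm_s * norm_h) - cos * hi / (norm_h ** 2)
            for s, hi in zip(source, h)]


def push_away(source, h, eta):
    """Move h by a step of L2 norm eta in the direction that lowers cos(source, h).

    Note: the gradient vanishes when h is exactly parallel to the source,
    so this sketch assumes that is not the case.
    """
    grad = cosine_gradient(source, h)
    norm = math.sqrt(sum(g * g for g in grad))
    return [hi - eta * g / norm for hi, g in zip(h, grad)]


z_x = [1.0, 0.0]   # source representation (made up)
h_y = [0.9, 0.1]   # target representation (made up)

distant = push_away(z_x, h_y, eta=1.0)
before = cosine_similarity(z_x, h_y)
after = cosine_similarity(z_x, distant)
print(after < before)  # True: the perturbed target is less similar to the source
```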

- 12. Learning Objective – (1): We add the imposter to the set of negative examples $S$ and use the distant target as another positive example for the source sentence in contrastive learning.

- 13. Learning Objective: We jointly maximize the following objectives with stochastic gradient ascent.

- 14. Experimental Setup – (1)
  - 1) Tasks and evaluation metrics: neural machine translation (BLEU score); question generation (BLEU score, F1/EM); text summarization (ROUGE score).
  - 2) Data: WMT’16 RO-EN, SQuAD, XSum.

- 15. Experimental Setup – (2)
  - 3) Baselines
    - T5-MLE: the T5 model trained with maximum likelihood estimation (MLE).
    - T5-𝛼-MLE: the T5 model trained with MLE, but decoding the target sequence with temperature scaling 𝛼 in the softmax.
    - T5-MLE-contrastive: naïve contrastive learning with MLE.
  - [Caccia 2020] Caccia et al., Language GANs falling short, ICLR 2020
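Temperature scaling in the softmax, as used by the T5-𝛼-MLE baseline, can be sketched as follows. The slide does not say whether 𝛼 divides or multiplies the logits; this sketch assumes the common convention of dividing, so that values below 1 sharpen the distribution and values above 1 flatten it. The logits are made up.

```python
import math


def softmax_with_temperature(logits, alpha=1.0):
    """Softmax over logits / alpha (assumed convention: alpha divides the logits)."""
    scaled = [x / alpha for x in logits]
    largest = max(scaled)                      # subtract the max for numerical stability
    exps = [math.exp(x - largest) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.1]                       # hypothetical next-token scores
plain = softmax_with_temperature(logits, alpha=1.0)
sharp = softmax_with_temperature(logits, alpha=0.5)
flat = softmax_with_temperature(logits, alpha=2.0)
print(max(sharp) > max(plain) > max(flat))     # True
```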

- 16. Experimental Setup – (2)
  - 3) Baselines
    - T5-SSMBA [Ng 2020]: generates additional examples by denoising and reconstructing target sentences with a masked language model.
    - T5-WordDropout Contrastive [Yang 2019]: generates negative examples by removing the most frequent word from the target sentence.
    - T5-R3f [Aghajanyan 2021]: adds Gaussian noise and enforces a consistency loss.
  - [Ng 2020] Ng et al., SSMBA: Self-supervised manifold based data augmentation for improving out-of-domain robustness, EMNLP 2020
  - [Yang 2019] Yang et al., Reducing word omission errors in neural machine translation: A contrastive learning approach, ACL 2019
  - [Aghajanyan 2021] Aghajanyan et al., Better fine-tuning by reducing representational collapse, ICLR 2021
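The word-dropout style of negative example can be sketched in a few lines. This is only an illustration under assumptions that the slide does not spell out: word frequency is measured over a small corpus supplied by the caller, every occurrence of the chosen word is removed, and tokenization is a plain whitespace split. The function name and the corpus are hypothetical.

```python
from collections import Counter


def word_dropout_negative(target_tokens, corpus_counts):
    """Drop the token of the target that is most frequent in the corpus.

    Ties are resolved in favor of the token that appears first in the target.
    """
    most_frequent = max(target_tokens, key=lambda token: corpus_counts[token])
    return [token for token in target_tokens if token != most_frequent]


corpus = [
    "he was not in great shape",
    "he was tired",
    "the shape was odd",
]
corpus_counts = Counter(word for sentence in corpus for word in sentence.split())

target = "he was not in great shape".split()
negative = word_dropout_negative(target, corpus_counts)
print(" ".join(negative))  # he not in great shape
```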

- 17. Experimental Result – (1): Machine Translation – WMT’16 RO-EN

  | Method | BLEU |
  | --- | --- |
  | T5-MLE | 32.43 |
  | T5-𝛼-MLE | 32.14 |
  | T5-MLE-contrastive | 32.03 |
  | T5-SSMBA | 32.81 |
  | T5-WordDropout Contrastive | 32.44 |
  | T5-CLAPS (Ours) | 33.96 |

- 18. Experimental Result – (2): Question Generation – SQuAD

  | Method | BLEU | F1 | EM |
  | --- | --- | --- | --- |
  | T5-MLE | 21.00 | 67.64 | 55.91 |
  | T5-𝛼-MLE | 20.50 | 68.04 | 56.30 |
  | T5-MLE-contrastive | 20.91 | 67.32 | 55.25 |
  | T5-SSMBA | 21.07 | 68.47 | 56.37 |
  | T5-WordDropout Contrastive | 21.19 | 68.16 | 56.41 |
  | T5-CLAPS (Ours) | 21.55 | 69.01 | 57.06 |

- 19. Experimental Result – (3): Text Summarization – XSum

  | Method | ROUGE-1 | ROUGE-2 | ROUGE-L |
  | --- | --- | --- | --- |
  | T5-MLE | 36.10 | 14.72 | 29.16 |
  | T5-𝛼-MLE | 36.68 | 15.10 | 29.72 |
  | T5-MLE-contrastive | 36.34 | 14.81 | 29.41 |
  | T5-SSMBA | 36.58 | 14.81 | 29.79 |
  | T5-WordDropout Contrastive | 36.88 | 15.11 | 29.68 |
  | T5-CLAPS (Ours) | 37.89 | 15.78 | 30.59 |

- 20. Visualization of Sentence Embedding: The model learns to push the imposter away from the target sentence and to pull the distant target toward the source sentence.

- 21. Conclusion
  - We propose a contrastive learning framework for conditional sequence generation to mitigate the exposure bias problem.
  - With adversarial perturbation, we generate negative and positive pairs that are more difficult for the model to distinguish from the GT pair.
  - Results show that our method outperforms the T5 baselines across machine translation, question generation, and summarization tasks.

- 22. Future Work
  - We will improve the quality of the imposters, which contain many grammatical errors.
  - Generating the imposter and the distant target still requires a large amount of labeled data, so we need to improve sample efficiency.

- 23. Thank you