Control dataset partitioning and cache to optimize performances in Spark

Christophe Préaud and Florian Fauvarque present techniques for optimizing Spark performance through dataset partitioning and caching. They discuss how to tune the number of partitions when reading data, when writing it, and in transformations such as joins. They explain the storage levels available for caching datasets, such as MEMORY_ONLY and MEMORY_AND_DISK, and mention profiling tools such as Babar for analyzing Spark applications. The presentation aims to improve slot usage and reduce job runtimes.
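To make the idea of tuning the partition count concrete, here is a minimal sketch of one common rule of thumb. It is not taken from the presentation. The function name `suggest_partition_count`, its parameters, and the 128 MiB default target size are all assumptions chosen for illustration. The heuristic sizes partitions to a target number of bytes, then rounds the count up to a multiple of the available task slots (executors times cores per executor) so that the last wave of tasks does not leave slots idle.

```python
def suggest_partition_count(data_size_bytes, executors, cores_per_executor,
                            target_partition_bytes=128 * 1024 * 1024):
    """Suggest a partition count (hypothetical helper, rule of thumb only).

    Assumption: partitions of roughly `target_partition_bytes` are a
    reasonable starting point, and a count that is a multiple of the
    number of task slots keeps every slot busy in each wave of tasks.
    """
    if data_size_bytes < 0:
        raise ValueError("data_size_bytes must be non-negative")
    if executors <= 0 or cores_per_executor <= 0 or target_partition_bytes <= 0:
        raise ValueError("executors, cores and target size must be positive")

    # One slot runs one task (one partition) at a time.
    slots = executors * cores_per_executor

    # Integer ceiling division: partitions needed to stay under the target size.
    by_size = max(1, -(-data_size_bytes // target_partition_bytes))

    # Round up to a whole number of waves so no slot sits idle at the end.
    waves = -(-by_size // slots)
    return waves * slots


if __name__ == "__main__":
    ten_gib = 10 * 1024 ** 3
    print(suggest_partition_count(ten_gib, executors=5, cores_per_executor=4))
```

The returned number is only a starting point. In a Spark job it could be passed to `repartition` on a dataset or used as the value of `spark.sql.shuffle.partitions`, and then adjusted after looking at task durations in the Spark UI or in a profiler.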

Control dataset partitioning and cache to optimize performances in Spark

- 1. Control dataset partitioning and cache to optimize performances in Spark Christophe Préaud & Florian Fauvarque

- 2. 2 Who are we? Christophe Préaud: big data and distributed computing enthusiast. Christophe is a data engineer at Kelkoo Group, in charge of the maintenance and evolution of the big data technology stack, the development of Spark applications and Spark support for other teams. Florian Fauvarque: open-source enthusiast who loves neat and clean code and, more generally, good software craftsmanship practices. Florian is a software engineer at Kelkoo Group, in charge of the development of Spark applications that produce analyses and product feeds for affiliate web sites. This presentation is also available at https://aquilae.eu/snowcamp2019-spark

- 3. 3 The global data-driven marketing platform that connects consumers to products • International presence: 22 countries • 20 years of ecommerce experience • 4 price comparison sites

- 4. 7 We are hiring! Over 30 roles in the company Roles in Grenoble: • Java/Scala Developers • Front-End Developers • Data Scientists • Internships

- 5. 8 KelkooGroup – Some numbers • 2 billion logs written per day • 60 TB in HDFS • 15 servers in our prod YARN cluster: 1.73 TB memory, 520 vcores • 3300 jobs executed every day

- 6. 9 Spark is a unified processing engine that can analyze big data using SQL, machine learning, graph processing or real-time stream analysis: http://spark.apache.org What is Apache Spark?

- 7. 11 • Task • Slot • Shuffle Spark glossary

- 8. 12 • Narrow transformation (ex: coalesce, filter, map, …) Spark glossary

- 9. 13 • Wide transformation (ex: repartition, distinct, groupBy, ...) Spark glossary

- 10. 14 1. Partitions 2. Cache 3. Profiling

- 11. 15 What is a partition in Spark? • What does it mean to partition data? • To divide a single dataset into smaller, manageable chunks • → A Partition is a small piece of the total dataset • How do the DataFrameReaders decide how to partition data? • It depends on the reader (CSV, Parquet, ORC, ...) • Task / Partition relationship: • A typical Task processes a single Partition • → The number of Partitions determines the number of Tasks needed to process the dataset

- 12. 16 What is a partition in Spark? During the first part of this presentation, we will focus mainly on: • The number of Partitions my data is divided into • The number of Slots I have for parallel execution. The goal is to maximize Slot usage, i.e. to ensure as much as possible that each Slot is processing a Task

- 13. 17 Configuration for demo • 4 executors • 2 cores / executor → 8 Slots • Dataset: College Scorecards (source: catalog.data.gov), which make it easier for students to search for a college that is a good fit for them. They can use the College Scorecard to find out more about a college's affordability and value so they can make more informed decisions about which college to attend.

- 14. 18 Partition tuning: reading a file 3.3 min numPartitions: 1 3 min 24

- 15. 19 Partition tuning: reading a file 38 s numPartitions: 9 42 s

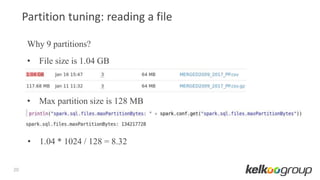

- 16. 20 Partition tuning: reading a file • Why 9 partitions? • File size is 1.04 GB • Max partition size is 128 MB • 1.04 * 1024 / 128 = 8.32, rounded up to 9 partitions
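The arithmetic on this slide can be checked with a short sketch. It assumes the simplified rule that the reader creates one partition per chunk of at most 128 MB and rounds up; Spark's real split computation also depends on other settings, so `estimate_num_partitions` is a hypothetical helper for illustration, not a Spark API.

```python
import math


def estimate_num_partitions(file_size_mb: float, max_partition_mb: float = 128.0) -> int:
    """Approximate the partition count as ceil(file size / max partition size).

    Simplifying assumption: a single splittable file and no other reader settings.
    """
    if file_size_mb <= 0:
        return 0
    return math.ceil(file_size_mb / max_partition_mb)


# 1.04 GB expressed in MB, as on the slide
file_size_mb = 1.04 * 1024
print(estimate_num_partitions(file_size_mb))  # 8.32 rounded up
```

Raising the maximum partition size in this model lowers the estimated partition count, which is the lever the later slides on `spark.sql.files.maxPartitionBytes` rely on.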

- 17. 21 Partition tuning: reading a file • As a rule of thumb, the number of Partitions should be a multiple of the number of Slots, so that every Slot is being used (i.e. assigned a Task) throughout the processing • With 9 Partitions and 8 Slots, we are under-utilizing 7 of the 8 Slots (7 Slots will be assigned 1 Task, 1 Slot will be assigned 2 Tasks): once the first 8 Tasks are done, a single Slot processes the 9th Task while the other 7 sit idle
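A small sketch makes the Slot usage argument concrete. It assumes that all Tasks take the same time and are scheduled in successive "waves" of at most one Task per Slot; `slot_usage` is a hypothetical helper, and real scheduling is less regular than this.

```python
import math


def slot_usage(num_partitions: int, num_slots: int) -> dict:
    """Return the number of task waves and the idle Slots in the last wave.

    Assumes one Task per Partition and equal Task durations.
    """
    if num_partitions < 1 or num_slots < 1:
        raise ValueError("num_partitions and num_slots must be >= 1")
    waves = math.ceil(num_partitions / num_slots)
    tasks_in_last_wave = num_partitions - (waves - 1) * num_slots
    return {
        "waves": waves,
        "idle_slots_in_last_wave": num_slots - tasks_in_last_wave,
    }


print(slot_usage(9, 8))  # 9 Partitions on 8 Slots
print(slot_usage(8, 8))  # 8 Partitions on 8 Slots
```

Under these assumptions, 9 Partitions need two waves with 7 idle Slots in the second one, while 8 Partitions finish in a single full wave.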

- 18. 22 Partition tuning: reading a file 14 s 15 s numPartitions: 8 32 s repartition(8)

- 19. 23 Partition tuning: reading a file spark.sql.files.maxPartitionBytes: The maximum number of bytes to pack into a single partition when reading files. 20 s 320 numPartitions: 8 22 s

- 20. 24 Partition tuning: reading a file 45 s 128 numPartitions: 8 49 s

- 21. 25 Partition tuning: repartition and coalesce repartition(4) coalesce(4)

- 22. 26 Partition tuning: repartition and coalesce

- 23. 27 Partition tuning: repartition and coalesce

- 24. 28 Partition tuning: repartition and coalesce

- 25. 29 Partition tuning: repartition and coalesce • coalesce: performs better (no shuffle), but records are not evenly distributed across all partitions → risk of skewed dataset (i.e. a few partitions containing most of the data) • repartition: extra cost because of the shuffle operation, but ensures uniform distribution of the records across all partitions → Slot usage will be optimal
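The trade-off can be illustrated with a toy model that works on partition sizes only. It assumes that coalesce merges neighbouring partitions without moving records between groups, and that repartition spreads all records evenly; `toy_coalesce` and `toy_repartition` are hypothetical names, and Spark's actual grouping and shuffle logic is more involved.

```python
from typing import List


def toy_coalesce(partition_sizes: List[int], n: int) -> List[int]:
    """Merge neighbouring partitions into n groups; no record leaves its group."""
    k = len(partition_sizes)
    if n >= k:
        return list(partition_sizes)  # this model never increases the partition count
    merged = [0] * n
    for i, size in enumerate(partition_sizes):
        merged[i * n // k] += size
    return merged


def toy_repartition(partition_sizes: List[int], n: int) -> List[int]:
    """Redistribute every record evenly over n partitions (models a full shuffle)."""
    base, extra = divmod(sum(partition_sizes), n)
    return [base + (1 if i < extra else 0) for i in range(n)]


skewed = [100, 1, 1, 1, 1, 1, 1, 1]  # one heavy partition out of eight
print(toy_coalesce(skewed, 4))       # skew is preserved
print(toy_repartition(skewed, 4))    # records are evenly spread
```

In this model the skewed input stays skewed after coalescing, while repartitioning balances it at the price of moving every record.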

- 26. 30 Partition tuning: writing a file numPartitions: 19 39 s

- 27. 31 Partition tuning: writing a file 3.9 min coalesce(1) 3 min 57

- 28. 32 Partition tuning: writing a file 1.8 min 22 s repartition(1) 2 min 18

- 29. 33 Partition tuning: repartition or coalesce? • If your dataset is skewed: use repartition • If you want more partitions: use repartition • If you want to drastically reduce the number of partitions (e.g. numPartitions = 1): use repartition • If your dataset is well balanced (i.e. not skewed) and you want fewer partitions (but not drastically fewer, i.e. not fewer than the number of Slots): use coalesce • If in doubt: use repartition
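The rules on this slide can be written down as a small decision function. This is only a sketch of the slide's guidance: `choose_operation` and its parameters are hypothetical, and passing `skewed=None` stands for the "in doubt" case.

```python
from typing import Optional


def choose_operation(
    current_partitions: int,
    target_partitions: int,
    num_slots: int,
    skewed: Optional[bool] = None,
) -> str:
    """Return "repartition" or "coalesce" following the slide's rules of thumb."""
    if skewed is None or skewed:
        return "repartition"  # skewed dataset, or in doubt
    if target_partitions > current_partitions:
        return "repartition"  # more partitions are wanted
    if target_partitions < num_slots:
        return "repartition"  # drastic reduction, e.g. down to 1 partition
    return "coalesce"         # balanced data, moderate reduction


print(choose_operation(19, 1, num_slots=8, skewed=False))
print(choose_operation(19, 8, num_slots=8, skewed=False))
```

With 8 Slots, going from 19 partitions down to 1 is treated as drastic, whereas going down to 8 on balanced data is the one case where the function suggests coalesce.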

- 30. 34 spark.sql.files.maxRecordsPerFile: Maximum number of records to write out to a single file. If this value is zero or negative, there is no limit. Partition tuning: writing a file

- 31. 35 Partition tuning: writing a file Number of records is checked for each partition (and not for the whole dataset) while the partition is being written – when it is over the threshold, a new file is created. for each partition { for each record { numRecords ++ if (numRecords > 15000) { closeFile() openNewFile() numRecords = 0 } writeRecordInFile() } }
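The per-partition check can be simulated without Spark. The sketch below follows the same idea as the pseudocode on the slide, with one deliberate difference: the threshold is tested before a record is written, so no file holds more than the limit. `files_for_partition` is a hypothetical helper, and the 15000 limit is the value used on the slide.

```python
from typing import List


def files_for_partition(num_records: int, max_records_per_file: int) -> List[int]:
    """Return the record count of each file written for one partition."""
    if max_records_per_file <= 0:
        return [num_records]  # zero or negative means no limit
    files = []
    current = 0
    for _ in range(num_records):
        if current == max_records_per_file:
            files.append(current)  # close the full file, open a new one
            current = 0
        current += 1               # write the record into the open file
    files.append(current)          # close the last file of the partition
    return files


partitions = [20000, 20000]  # records per partition
written = [files_for_partition(n, 15000) for n in partitions]
print(written)
print(sum(len(f) for f in written), "files in total")
```

Two partitions of 20000 records each produce four files here, although 40000 records would fit in three files if the limit were applied to the whole dataset.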

- 32. 36 Partition tuning: writing a file There cannot be fewer than one file per partition.

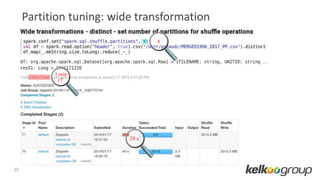

- 33. 37 Wide transformation: The data required to compute the records in a single Partition may reside in many Partitions of the parent Dataset (i.e. it triggers a shuffle operation) Partition tuning: wide transformation 45 s 1 min 32

- 34. 38 spark.sql.shuffle.partitions: The default number of partitions to use when shuffling data for joins or aggregations. Partition tuning: wide transformation

- 35. 39 Partition tuning: wide transformation 28 s 1 min 17 8

- 36. 40 1. Partitions 2. Cache 3. Profiling

- 37. 41 When to use cache • When a Dataset is re-used multiple times • To recover quickly from a node failure • data scientist: training data in an iterative loop 👍 • data analyst: most of the time no, since caching hides the fact that the data are not organized properly 👎 • data engineer: usually no, but it depends on the case. Benchmark before going to prod ❔

- 38. 42 When to use cache 7 sec

- 39. 43 When to use cache 1 min 41 sec

- 40. 44 How to cache a data set in Spark Cache strategy: Storage Level • NONE: no cache • MEMORY_ONLY: • data is cached non-serialized in memory • If there is not enough memory: data is evicted and, when needed, rebuilt from source • DISK_ONLY: data is serialized and stored on disk • MEMORY_AND_DISK: • data is cached non-serialized in memory • If there is not enough memory: data is serialized and stored on disk • OFF_HEAP: data is serialized and stored off heap with Alluxio (formerly Tachyon)

- 41. 45 How to cache a data set in Spark Cache strategy: Storage Level • _SER suffix: • Always serialize the data in memory • Saves space, but with a serialization penalty • _2 suffix: • Replicate each partition on 2 cluster nodes • Improves recovery time after a node failure • Available levels: NONE, DISK_ONLY, DISK_ONLY_2, MEMORY_ONLY, MEMORY_ONLY_2, MEMORY_ONLY_SER, MEMORY_ONLY_SER_2, MEMORY_AND_DISK, MEMORY_AND_DISK_2, MEMORY_AND_DISK_SER, MEMORY_AND_DISK_SER_2, OFF_HEAP
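The naming scheme of the storage levels can be decoded mechanically. The sketch below only models the suffix rules described on the slides; `LevelTraits` and `describe_level` are hypothetical names, and the special levels NONE and OFF_HEAP are intentionally left out of the model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LevelTraits:
    use_memory: bool
    use_disk: bool
    serialized_in_memory: bool
    replication: int


# Base names and whether they use memory and/or disk
_BASES = {
    "MEMORY_ONLY": (True, False),
    "MEMORY_AND_DISK": (True, True),
    "DISK_ONLY": (False, True),
}


def describe_level(name: str) -> LevelTraits:
    """Decode a storage level name using the _SER and _2 suffix rules."""
    base, replication, serialized = name, 1, False
    if base.endswith("_2"):
        replication, base = 2, base[: -len("_2")]
    if base.endswith("_SER"):
        serialized, base = True, base[: -len("_SER")]
    if base not in _BASES:
        raise ValueError(f"level not covered by this sketch: {name}")
    use_memory, use_disk = _BASES[base]
    if serialized and not use_memory:
        raise ValueError(f"_SER only applies to in-memory levels: {name}")
    return LevelTraits(use_memory, use_disk, serialized, replication)


print(describe_level("MEMORY_AND_DISK_SER_2"))
print(describe_level("DISK_ONLY"))
```

Reading a level name right to left (replication suffix, then serialization suffix, then base) is enough to recover all four traits for the levels covered here.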

- 42. 46 How to cache a data set in Spark Cache strategy: Storage Level • .cache() is an alias for .persist(MEMORY_AND_DISK) on a Dataset (for an RDD: MEMORY_ONLY) • Caching is lazy: run an action such as .count() to materialize the cache
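The lazy behaviour can be shown with a toy model in plain Python. `LazyCachedDataset` is a hypothetical class that only mimics the idea (marking a dataset for caching does nothing until an action runs); it does not use or reproduce Spark's API.

```python
from typing import Callable, Iterable, List, Optional


class LazyCachedDataset:
    """Toy dataset: cache() only sets a flag, the first action fills the cache."""

    def __init__(self, compute: Callable[[], Iterable[int]]):
        self._compute = compute
        self._cache_requested = False
        self._cached: Optional[List[int]] = None
        self.compute_calls = 0  # how many times the source was recomputed

    def cache(self) -> "LazyCachedDataset":
        self._cache_requested = True  # nothing is computed here
        return self

    def _materialize(self) -> List[int]:
        if self._cached is not None:
            return self._cached
        self.compute_calls += 1
        rows = list(self._compute())
        if self._cache_requested:
            self._cached = rows
        return rows

    def count(self) -> int:
        """An action: forces computation (and fills the cache if requested)."""
        return len(self._materialize())


ds = LazyCachedDataset(lambda: range(1000)).cache()
print(ds.compute_calls)  # still 0: cache() alone computed nothing
ds.count()
ds.count()
print(ds.compute_calls)  # the source was computed once, then served from cache
```

Without the call to `cache()`, the same two `count()` calls would recompute the source twice in this model.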

- 43. 47 Broadcast variable Useful to share small immutable data

- 44. 48 Broadcast variable • spark.sql.autoBroadcastJoinThreshold: automatically optimizes join queries when the size of one side of the join is below the threshold (default 10 MB)
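The threshold rule can be sketched as a simple size comparison. `would_auto_broadcast` is a hypothetical helper; it assumes the decision depends only on an estimated size in bytes, whereas Spark relies on its own statistics, and it treats a negative threshold as "disabled", following the documented meaning of setting the option to -1.

```python
DEFAULT_THRESHOLD_BYTES = 10 * 1024 * 1024  # 10 MB default


def would_auto_broadcast(
    estimated_size_bytes: int,
    threshold_bytes: int = DEFAULT_THRESHOLD_BYTES,
) -> bool:
    """Return True if one side of a join is small enough to be broadcast."""
    if threshold_bytes < 0:
        return False  # a negative threshold disables automatic broadcast
    return 0 <= estimated_size_bytes <= threshold_bytes


print(would_auto_broadcast(2 * 1024 * 1024))    # 2 MB side
print(would_auto_broadcast(500 * 1024 * 1024))  # 500 MB side
```

A small lookup table of a few MB would qualify under the default, while a 500 MB dataset would not unless the threshold were raised.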

- 45. 1. Partitions 2. Cache 3. Profiling

- 46. 50 How to Profile a Spark App ?

- 47. 51 How to Profile a Spark App ?

- 48. 52 How to Profile a Spark App ?

- 49. 53 How to Profile a Spark App ? https://github.com/criteo/babar

- 50. 54 Questions ?

- 51. 55 Resources • Spark official documentation: https://spark.apache.org/docs/latest/tuning.html • Mastering Apache Spark by Jacek Laskowski: https://jaceklaskowski.gitbooks.io/mastering-apache-spark/ • Apache Spark - Best Practices and Tuning by Umberto Griffo: https://umbertogriffo.gitbooks.io/apache-spark-best-practices-and-tuning/ • High Performance Spark by Rachel Warren and Holden Karau, O'Reilly