Convolutional Neural Network (CNN) presentation from theory to code in Theano

Download as pptx, pdf13 likes4,934 views

I collected and created presentation file about deep learning algorithm of convolutional neural network

1 of 87

Downloaded 339 times

![M&S

CNN Tensor notation in Theano

- Input Images -

4D tensor

1D tensor

[number of feature maps at layer m, number of feature maps at layer m-1,

filter height, filter width]

ij

klx

op

qrW

mb

[ i, j, k, l ] =

[ o, p, q, r ] =

[ m ] =

- Weight -

- Bias -

[n’th feature map number]

[mini-batch size, number of input feature

maps, image height, image width]](https://image.slidesharecdn.com/cnnpresentation-160427115016/85/Convolutional-Neural-Network-CNN-presentation-from-theory-to-code-in-Theano-15-320.jpg)

![M&S

Convolution?

daatwax

twxty

)()(

))(()(

a

anwaxny ][][][

- Continuous Variables -

- Discrete Variables -](https://image.slidesharecdn.com/cnnpresentation-160427115016/85/Convolutional-Neural-Network-CNN-presentation-from-theory-to-code-in-Theano-17-320.jpg)

![M&S

Convolution?

a

anwaxny ][][][

- Discrete Variables -

][][ awax ][][ awax

)]([][ nawax

Y-axis transformation](https://image.slidesharecdn.com/cnnpresentation-160427115016/85/Convolutional-Neural-Network-CNN-presentation-from-theory-to-code-in-Theano-18-320.jpg)

![M&S

Cross-Correlation?

a

nawaxnwxny ][][])[(][

- Discrete Variables (In real number) -

][][ awax ][][ nawax n step move

★](https://image.slidesharecdn.com/cnnpresentation-160427115016/85/Convolutional-Neural-Network-CNN-presentation-from-theory-to-code-in-Theano-20-320.jpg)

![M&S

Cross-Correlation in 2D

Output (y) Kernel (w) Input (x)

n m

nmwjnimx

jiwxjiy

],[],[

],)[(],[](https://image.slidesharecdn.com/cnnpresentation-160427115016/85/Convolutional-Neural-Network-CNN-presentation-from-theory-to-code-in-Theano-22-320.jpg)

![M&S

Input Image

Input Image

- Input Images -

4D tensor

[mini-batch size, number of input feature

maps, image height, image width]ij

klx

5

...

28

28

500

7

[ i, j, k, l ] =

Mini batch 1

5

...

28

28

500

8

Mini batch 100

. . .

50,000 images

in the training data](https://image.slidesharecdn.com/cnnpresentation-160427115016/85/Convolutional-Neural-Network-CNN-presentation-from-theory-to-code-in-Theano-54-320.jpg)

![M&S

Weight tensor

Input Image

Convolutional

Layer

4D tensor

[number of feature maps at layer m, number of feature maps at layer m-1,

filter height, filter width]

op

qrW [ o, p, q, r ] =

- Weight -](https://image.slidesharecdn.com/cnnpresentation-160427115016/85/Convolutional-Neural-Network-CNN-presentation-from-theory-to-code-in-Theano-55-320.jpg)

![M&S

Exercise for Input and Weight tensor

11

11x

11

11W

Input layer

Convolutional layer 1 Convolutional layer 2

[ 1, 1, 1, 1 ]

[ 1, 1, 1, 1 ]](https://image.slidesharecdn.com/cnnpresentation-160427115016/85/Convolutional-Neural-Network-CNN-presentation-from-theory-to-code-in-Theano-56-320.jpg)

![M&S

Code for Convolutional Layer

28

28

8

def evaluate_lenet5(learning_rate=0.1,

n_epochs=2, dataset=‘minist.pkl.gz’,

nkerns=[20, 50], batch_size=500):

LeNetConvPoolLayer

image_shape=(batch_size, 1, 28, 28)

filter_shape=(nkerns[0], 1, 5, 5)

poolsize=(2, 2)

image_shape=(batch_size, nkerns[0], 12, 12)

filter_shape=(nkerns[1], nkerns[0], 5, 5)

poolsize=(2, 2)

Layer0 – Convolutional layer 1

Layer1 – Convolutional layer 2

5

5

20

24

24

20

5

5

12

12

8

8

4

4

20 50 50 50

28 – 5 + 1 = 24

Convolution

24 / 2 = 12

Pooling

12 – 5 + 1 = 8

Convolution

8 / 2 = 4

Pooling

Class](https://image.slidesharecdn.com/cnnpresentation-160427115016/85/Convolutional-Neural-Network-CNN-presentation-from-theory-to-code-in-Theano-57-320.jpg)

![M&S

Code for MLP in Theano

Input Image

Convolutional

Layer

Pooling

MLP layer2 = HiddenLayer( rng,

input=layer2_input,

n_in-nkerns[1] * 4 * 4,

n_out=500,

activation = T.tanh)

HiddenLayer

Class

Last output size for C+P

Number of node at Hidden layer

Activation function at Hidden layer

***In order to extend the number of Hidden Layer in MLP,

We need to make layer3 by copying this code***](https://image.slidesharecdn.com/cnnpresentation-160427115016/85/Convolutional-Neural-Network-CNN-presentation-from-theory-to-code-in-Theano-85-320.jpg)

Ad

Recommended

Machine Learning - Convolutional Neural Network

Machine Learning - Convolutional Neural NetworkRichard Kuo The document provides an overview of convolutional neural networks (CNNs) for visual recognition. It discusses the basic concepts of CNNs such as convolutional layers, activation functions, pooling layers, and network architectures. Examples of classic CNN architectures like LeNet-5 and AlexNet are presented. Modern architectures such as Inception and ResNet are also discussed. Code examples for image classification using TensorFlow, Keras, and Fastai are provided.

Simple Introduction to AutoEncoder

Simple Introduction to AutoEncoderJun Lang The document introduces autoencoders, which are neural networks that compress an input into a lower-dimensional code and then reconstruct the output from that code. It discusses that autoencoders can be trained using an unsupervised pre-training method called restricted Boltzmann machines to minimize the reconstruction error. Autoencoders can be used for dimensionality reduction, document retrieval by compressing documents into codes, and data visualization by compressing high-dimensional data points into 2D for plotting with different categories colored separately.

CNN and its applications by ketaki

CNN and its applications by ketakiKetaki Patwari The document describes a vehicle detection system using a fully convolutional regression network (FCRN). The FCRN is trained on patches from aerial images to predict a density map indicating vehicle locations. The proposed system is evaluated on two public datasets and achieves higher precision and recall than comparative shallow and deep learning methods for vehicle detection in aerial images. The system could help with applications like urban planning and traffic management.

Convolutional Neural Network Models - Deep Learning

Convolutional Neural Network Models - Deep LearningMohamed Loey Convolutional Neural Network Models - Deep Learning

Convolutional Neural Network

ILSVRC

AlexNet (2012)

ZFNet (2013)

VGGNet (2014)

GoogleNet 2014)

ResNet (2015)

Conclusion

Convolutional Neural Network (CNN) is a multi-layer neural network

Convolutional Neural Networks (CNN)

Convolutional Neural Networks (CNN)Gaurav Mittal A comprehensive tutorial on Convolutional Neural Networks (CNN) which talks about the motivation behind CNNs and Deep Learning in general, followed by a description of the various components involved in a typical CNN layer. It explains the theory involved with the different variants used in practice and also, gives a big picture of the whole network by putting everything together.

Next, there's a discussion of the various state-of-the-art frameworks being used to implement CNNs to tackle real-world classification and regression problems.

Finally, the implementation of the CNNs is demonstrated by implementing the paper 'Age ang Gender Classification Using Convolutional Neural Networks' by Hassner (2015).

Time Series Classification with Deep Learning | Marco Del Pra

Time Series Classification with Deep Learning | Marco Del PraData Science Milan Today there are a lot of data that are stored in the form of time series, and with the actual large diffusion of real-time applications many areas are strongly increasing their interest in applications based on this kind of data, like for example finance, advertising, marketing, health care, automated disease detection, biometrics, retail, and identification of anomalies of any kind. It is therefore very interesting to understand the role and potential of machine learning in this sector.

Many methods can be used for the classification of the time series, but all of them, apart from deep learning, require some kind of feature engineering as a separate stage before the classification is performed, and this can imply the loss of some important information and the increase of the development and test time. On the contrary, deep learning models such as recurrent and convolutional neural networks already incorporate this kind of feature engineering internally, optimizing it and eliminating the need to do it manually. Therefore they are able to extract information from the time series in a faster, more direct, and more complete way.

Bio:

Marco Del Pra

I am 41 years old, I was born in Venice, I have 2 master's degrees (Computer Science and Mathematics). I have been working for about 10 years in Artificial Intelligence, first as Data Scientist, then as Team Leader and finally as Head of Data. Among others, I worked for Microsoft, for the European Commission (JRC of Ispra) and for Cuebiq. I am currently working as a freelancer and I am creating with 2 other cofounders an innovative AI startup. I have 2 important publications in applied mathematics.

Topics: recurrent and convolutional neural networks, deep learning, time-series.

Introduction to Recurrent Neural Network

Introduction to Recurrent Neural NetworkYan Xu Basic concepts of RNN and introduction to Long short term memory network; Presented at Houston Machine Learning meetup.

Convolutional Neural Network and Its Applications

Convolutional Neural Network and Its ApplicationsKasun Chinthaka Piyarathna In machine learning, a convolutional neural network is a class of deep, feed-forward artificial neural networks that have successfully been applied fpr analyzing visual imagery.

Autoencoder

AutoencoderHARISH R An Autoencoder is a type of Artificial Neural Network used to learn efficient data codings in an unsupervised manner. The aim of an autoencoder is to learn a representation (encoding) for a set of data, typically for dimensionality reduction, by training the network to ignore signal “noise.”

Introduction to Keras

Introduction to KerasJohn Ramey An introduction to Keras, a high-level neural networks library written in Python. Keras makes deep learning more accessible, is fantastic for rapid protyping, and can run on top of TensorFlow, Theano, or CNTK. These slides focus on examples, starting with logistic regression and building towards a convolutional neural network.

The presentation was given at the Austin Deep Learning meetup: https://www.meetup.com/Austin-Deep-Learning/events/237661902/

AlexNet

AlexNetBertil Hatt AlexNet achieved unprecedented results on the ImageNet dataset by using a deep convolutional neural network with over 60 million parameters. It achieved top-1 and top-5 error rates of 37.5% and 17.0%, significantly outperforming previous methods. The network architecture included 5 convolutional layers, some with max pooling, and 3 fully-connected layers. Key aspects were the use of ReLU activations for faster training, dropout to reduce overfitting, and parallelizing computations across two GPUs. This dramatic improvement demonstrated the potential of deep learning for computer vision tasks.

Deep Learning - Convolutional Neural Networks

Deep Learning - Convolutional Neural NetworksChristian Perone This document provides an agenda for a presentation on deep learning, neural networks, convolutional neural networks, and interesting applications. The presentation will include introductions to deep learning and how it differs from traditional machine learning by learning feature representations from data. It will cover the history of neural networks and breakthroughs that enabled training of deeper models. Convolutional neural network architectures will be overviewed, including convolutional, pooling, and dense layers. Applications like recommendation systems, natural language processing, and computer vision will also be discussed. There will be a question and answer section.

Deep neural networks

Deep neural networksSi Haem Deep learning and neural networks are inspired by biological neurons. Artificial neural networks (ANN) can have multiple layers and learn through backpropagation. Deep neural networks with multiple hidden layers did not work well until recent developments in unsupervised pre-training of layers. Experiments on MNIST digit recognition and NORB object recognition datasets showed deep belief networks and deep Boltzmann machines outperform other models. Deep learning is now widely used for applications like computer vision, natural language processing, and information retrieval.

Feed forward ,back propagation,gradient descent

Feed forward ,back propagation,gradient descentMuhammad Rasel This document discusses gradient descent algorithms, feedforward neural networks, and backpropagation. It defines machine learning, artificial intelligence, and deep learning. It then explains gradient descent as an optimization technique used to minimize cost functions in deep learning models. It describes feedforward neural networks as having connections that move in one direction from input to output nodes. Backpropagation is mentioned as an algorithm for training neural networks.

Video Transformers.pptx

Video Transformers.pptxSangmin Woo The document discusses recent developments in video transformers. It summarizes several recent works that employ spatial backbones like ViT or ResNet combined with temporal transformers for video classification. Examples mentioned include VTN, TimeSformer, STAM, and ViViT. The document also discusses common practices in video transformer inference, like using multiple clips/crops and averaging predictions. Design choices covered include number of frames, spatial dimensions, and multi-view inference techniques.

Autoencoders

AutoencodersCloudxLab An autoencoder is an artificial neural network that is trained to copy its input to its output. It consists of an encoder that compresses the input into a lower-dimensional latent-space encoding, and a decoder that reconstructs the output from this encoding. Autoencoders are useful for dimensionality reduction, feature learning, and generative modeling. When constrained by limiting the latent space or adding noise, autoencoders are forced to learn efficient representations of the input data. For example, a linear autoencoder trained with mean squared error performs principal component analysis.

Image Classification using deep learning

Image Classification using deep learning Asma-AH image classification is a common problem in Artificial Intelligence , we used CIFR10 data set and tried a lot of methods to reach a high test accuracy like neural networks and Transfer learning techniques .

you can view the source code and the papers we read on github : https://github.com/Asma-Hawari/Machine-Learning-Project-

Resnet

Resnetashwinjoseph95 Residual neural networks (ResNets) solve the vanishing gradient problem through shortcut connections that allow gradients to flow directly through the network. The ResNet architecture consists of repeating blocks with convolutional layers and shortcut connections. These connections perform identity mappings and add the outputs of the convolutional layers to the shortcut connection. This helps networks converge earlier and increases accuracy. Variants include basic blocks with two convolutional layers and bottleneck blocks with three layers. Parameters like number of layers affect ResNet performance, with deeper networks showing improved accuracy. YOLO is a variant that replaces the softmax layer with a 1x1 convolutional layer and logistic function for multi-label classification.

Cnn

CnnNirthika Rajendran Convolutional neural networks (CNNs) learn multi-level features and perform classification jointly and better than traditional approaches for image classification and segmentation problems. CNNs have four main components: convolution, nonlinearity, pooling, and fully connected layers. Convolution extracts features from the input image using filters. Nonlinearity introduces nonlinearity. Pooling reduces dimensionality while retaining important information. The fully connected layer uses high-level features for classification. CNNs are trained end-to-end using backpropagation to minimize output errors by updating weights.

Face Recognition: From Scratch To Hatch

Face Recognition: From Scratch To HatchEduard Tyantov face detection & recognition: metric learning (triplet, center, angular softmax losses), TensoRT, tips & tricks.

Semantic Segmentation Methods using Deep Learning

Semantic Segmentation Methods using Deep LearningSungjoon Choi This document discusses semantic segmentation, which is the task of assigning each pixel in an image to a semantic class. It introduces semantic segmentation and provides a leader board of top performing models. It then details the results of various semantic segmentation models on benchmark datasets, including PSPNet, DeepLab v3+, and DeepLab v3. The models are evaluated based on metrics like mean intersection over union.

Convolutional neural network

Convolutional neural networkMojammilHusain Convolutional neural network (CNN / ConvNet's) is a part of Computer Vision. Machine Learning Algorithm. Image Classification, Image Detection, Digit Recognition, and many more. https://technoelearn.com .

Hog

HogAnirudh Kanneganti The document describes the Histogram of Oriented Gradients (HOG) feature descriptor technique. HOG counts occurrences of gradient orientation in localized portions of an image to represent a distribution of intensity fluctuations along different orientations. It works by first calculating gradient images, then calculating histograms of gradients in 8x8 cells, followed by block normalization to account for lighting variations before forming the final HOG feature vector.

Convolutional Neural Network (CNN)

Convolutional Neural Network (CNN)Muhammad Haroon Convolutional Neural Network (CNN)

Steps in CNN

Convolution

Max Pooling

Flattening

Full Connection

Deep Neural Networks (DNN)

Deep Neural Networks (DNN)Sir Syed University of Engineering & Technology The document discusses deep neural networks (DNN) and deep learning. It explains that deep learning uses multiple layers to learn hierarchical representations from raw input data. Lower layers identify lower-level features while higher layers integrate these into more complex patterns. Deep learning models are trained on large datasets by adjusting weights to minimize error. Applications discussed include image recognition, natural language processing, drug discovery, and analyzing satellite imagery. Both advantages like state-of-the-art performance and drawbacks like high computational costs are outlined.

Recurrent Neural Network (RNN) | RNN LSTM Tutorial | Deep Learning Course | S...

Recurrent Neural Network (RNN) | RNN LSTM Tutorial | Deep Learning Course | S...Simplilearn This presentation on Recurrent Neural Network will help you understand what is a neural network, what are the popular neural networks, why we need recurrent neural network, what is a recurrent neural network, how does a RNN work, what is vanishing and exploding gradient problem, what is LSTM and you will also see a use case implementation of LSTM (Long short term memory). Neural networks used in Deep Learning consists of different layers connected to each other and work on the structure and functions of the human brain. It learns from huge volumes of data and used complex algorithms to train a neural net. The recurrent neural network works on the principle of saving the output of a layer and feeding this back to the input in order to predict the output of the layer. Now lets deep dive into this presentation and understand what is RNN and how does it actually work.

Below topics are explained in this recurrent neural networks tutorial:

1. What is a neural network?

2. Popular neural networks?

3. Why recurrent neural network?

4. What is a recurrent neural network?

5. How does an RNN work?

6. Vanishing and exploding gradient problem

7. Long short term memory (LSTM)

8. Use case implementation of LSTM

Simplilearn’s Deep Learning course will transform you into an expert in deep learning techniques using TensorFlow, the open-source software library designed to conduct machine learning & deep neural network research. With our deep learning course, you'll master deep learning and TensorFlow concepts, learn to implement algorithms, build artificial neural networks and traverse layers of data abstraction to understand the power of data and prepare you for your new role as deep learning scientist.

Why Deep Learning?

It is one of the most popular software platforms used for deep learning and contains powerful tools to help you build and implement artificial neural networks.

Advancements in deep learning are being seen in smartphone applications, creating efficiencies in the power grid, driving advancements in healthcare, improving agricultural yields, and helping us find solutions to climate change. With this Tensorflow course, you’ll build expertise in deep learning models, learn to operate TensorFlow to manage neural networks and interpret the results.

And according to payscale.com, the median salary for engineers with deep learning skills tops $120,000 per year.

You can gain in-depth knowledge of Deep Learning by taking our Deep Learning certification training course. With Simplilearn’s Deep Learning course, you will prepare for a career as a Deep Learning engineer as you master concepts and techniques including supervised and unsupervised learning, mathematical and heuristic aspects, and hands-on modeling to develop algorithms. Those who complete the course will be able to:

Learn more at: https://www.simplilearn.com/

Deep Learning in Computer Vision

Deep Learning in Computer VisionSungjoon Choi Deep Learning in Computer Vision Applications

1. Basics on Convolutional Neural Network

2. Otimization Methods (Momentum, AdaGrad, RMSProp, Adam, etc)

3. Semantic Segmentation

4. Class Activation Map

5. Object Detection

6. Recurrent Neural Network

7. Visual Question and Answering

8. Word2Vec (Word embedding)

9. Image Captioning

CNN Tutorial

CNN TutorialSungjoon Choi This document provides an overview of convolutional neural networks and summarizes four popular CNN architectures: AlexNet, VGG, GoogLeNet, and ResNet. It explains that CNNs are made up of convolutional and subsampling layers for feature extraction followed by dense layers for classification. It then briefly describes key aspects of each architecture like ReLU activation, inception modules, residual learning blocks, and their performance on image classification tasks.

Idea for ineractive programming language

Idea for ineractive programming languageLincoln Hannah Notebooks such as Jupyter give programming languages a level of interactivity approaching that of spreadsheets.

I present here an idea for a programming language specifically designed for an interactive environment similar to a notebook.

It aims to combining the power of a programming language with the usability of a spreadsheet.

Instead of free-form code, the user creates fields / columns, but these can be combined into tables and object classes.

By decoratively cycling through field elements, loops and other programming constructs can be created.

I give examples from classical computer science, machine learning and mathematical finance, specifically:

Nth Prime Number, 8 Queens, Poker Hand, Travelling Salesman, Linear Regression, VaR Attribution

8. Vectors data frames

8. Vectors data framesExternalEvents As part of the GSP’s capacity development and improvement programme, FAO/GSP have organised a one week training in Izmir, Turkey. The main goal of the training was to increase the capacity of Turkey on digital soil mapping, new approaches on data collection, data processing and modelling of soil organic carbon. This 5 day training is titled ‘’Training on Digital Soil Organic Carbon Mapping’’ was held in IARTC - International Agricultural Research and Education Center in Menemen, Izmir on 20-25 August, 2017.

Ad

More Related Content

What's hot (20)

Autoencoder

AutoencoderHARISH R An Autoencoder is a type of Artificial Neural Network used to learn efficient data codings in an unsupervised manner. The aim of an autoencoder is to learn a representation (encoding) for a set of data, typically for dimensionality reduction, by training the network to ignore signal “noise.”

Introduction to Keras

Introduction to KerasJohn Ramey An introduction to Keras, a high-level neural networks library written in Python. Keras makes deep learning more accessible, is fantastic for rapid protyping, and can run on top of TensorFlow, Theano, or CNTK. These slides focus on examples, starting with logistic regression and building towards a convolutional neural network.

The presentation was given at the Austin Deep Learning meetup: https://www.meetup.com/Austin-Deep-Learning/events/237661902/

AlexNet

AlexNetBertil Hatt AlexNet achieved unprecedented results on the ImageNet dataset by using a deep convolutional neural network with over 60 million parameters. It achieved top-1 and top-5 error rates of 37.5% and 17.0%, significantly outperforming previous methods. The network architecture included 5 convolutional layers, some with max pooling, and 3 fully-connected layers. Key aspects were the use of ReLU activations for faster training, dropout to reduce overfitting, and parallelizing computations across two GPUs. This dramatic improvement demonstrated the potential of deep learning for computer vision tasks.

Deep Learning - Convolutional Neural Networks

Deep Learning - Convolutional Neural NetworksChristian Perone This document provides an agenda for a presentation on deep learning, neural networks, convolutional neural networks, and interesting applications. The presentation will include introductions to deep learning and how it differs from traditional machine learning by learning feature representations from data. It will cover the history of neural networks and breakthroughs that enabled training of deeper models. Convolutional neural network architectures will be overviewed, including convolutional, pooling, and dense layers. Applications like recommendation systems, natural language processing, and computer vision will also be discussed. There will be a question and answer section.

Deep neural networks

Deep neural networksSi Haem Deep learning and neural networks are inspired by biological neurons. Artificial neural networks (ANN) can have multiple layers and learn through backpropagation. Deep neural networks with multiple hidden layers did not work well until recent developments in unsupervised pre-training of layers. Experiments on MNIST digit recognition and NORB object recognition datasets showed deep belief networks and deep Boltzmann machines outperform other models. Deep learning is now widely used for applications like computer vision, natural language processing, and information retrieval.

Feed forward ,back propagation,gradient descent

Feed forward ,back propagation,gradient descentMuhammad Rasel This document discusses gradient descent algorithms, feedforward neural networks, and backpropagation. It defines machine learning, artificial intelligence, and deep learning. It then explains gradient descent as an optimization technique used to minimize cost functions in deep learning models. It describes feedforward neural networks as having connections that move in one direction from input to output nodes. Backpropagation is mentioned as an algorithm for training neural networks.

Video Transformers.pptx

Video Transformers.pptxSangmin Woo The document discusses recent developments in video transformers. It summarizes several recent works that employ spatial backbones like ViT or ResNet combined with temporal transformers for video classification. Examples mentioned include VTN, TimeSformer, STAM, and ViViT. The document also discusses common practices in video transformer inference, like using multiple clips/crops and averaging predictions. Design choices covered include number of frames, spatial dimensions, and multi-view inference techniques.

Autoencoders

AutoencodersCloudxLab An autoencoder is an artificial neural network that is trained to copy its input to its output. It consists of an encoder that compresses the input into a lower-dimensional latent-space encoding, and a decoder that reconstructs the output from this encoding. Autoencoders are useful for dimensionality reduction, feature learning, and generative modeling. When constrained by limiting the latent space or adding noise, autoencoders are forced to learn efficient representations of the input data. For example, a linear autoencoder trained with mean squared error performs principal component analysis.

Image Classification using deep learning

Image Classification using deep learning Asma-AH image classification is a common problem in Artificial Intelligence , we used CIFR10 data set and tried a lot of methods to reach a high test accuracy like neural networks and Transfer learning techniques .

you can view the source code and the papers we read on github : https://github.com/Asma-Hawari/Machine-Learning-Project-

Resnet

Resnetashwinjoseph95 Residual neural networks (ResNets) solve the vanishing gradient problem through shortcut connections that allow gradients to flow directly through the network. The ResNet architecture consists of repeating blocks with convolutional layers and shortcut connections. These connections perform identity mappings and add the outputs of the convolutional layers to the shortcut connection. This helps networks converge earlier and increases accuracy. Variants include basic blocks with two convolutional layers and bottleneck blocks with three layers. Parameters like number of layers affect ResNet performance, with deeper networks showing improved accuracy. YOLO is a variant that replaces the softmax layer with a 1x1 convolutional layer and logistic function for multi-label classification.

Cnn

CnnNirthika Rajendran Convolutional neural networks (CNNs) learn multi-level features and perform classification jointly and better than traditional approaches for image classification and segmentation problems. CNNs have four main components: convolution, nonlinearity, pooling, and fully connected layers. Convolution extracts features from the input image using filters. Nonlinearity introduces nonlinearity. Pooling reduces dimensionality while retaining important information. The fully connected layer uses high-level features for classification. CNNs are trained end-to-end using backpropagation to minimize output errors by updating weights.

Face Recognition: From Scratch To Hatch

Face Recognition: From Scratch To HatchEduard Tyantov face detection & recognition: metric learning (triplet, center, angular softmax losses), TensoRT, tips & tricks.

Semantic Segmentation Methods using Deep Learning

Semantic Segmentation Methods using Deep LearningSungjoon Choi This document discusses semantic segmentation, which is the task of assigning each pixel in an image to a semantic class. It introduces semantic segmentation and provides a leader board of top performing models. It then details the results of various semantic segmentation models on benchmark datasets, including PSPNet, DeepLab v3+, and DeepLab v3. The models are evaluated based on metrics like mean intersection over union.

Convolutional neural network

Convolutional neural networkMojammilHusain Convolutional neural network (CNN / ConvNet's) is a part of Computer Vision. Machine Learning Algorithm. Image Classification, Image Detection, Digit Recognition, and many more. https://technoelearn.com .

Hog

HogAnirudh Kanneganti The document describes the Histogram of Oriented Gradients (HOG) feature descriptor technique. HOG counts occurrences of gradient orientation in localized portions of an image to represent a distribution of intensity fluctuations along different orientations. It works by first calculating gradient images, then calculating histograms of gradients in 8x8 cells, followed by block normalization to account for lighting variations before forming the final HOG feature vector.

Convolutional Neural Network (CNN)

Convolutional Neural Network (CNN)Muhammad Haroon Convolutional Neural Network (CNN)

Steps in CNN

Convolution

Max Pooling

Flattening

Full Connection

Deep Neural Networks (DNN)

Deep Neural Networks (DNN)Sir Syed University of Engineering & Technology The document discusses deep neural networks (DNN) and deep learning. It explains that deep learning uses multiple layers to learn hierarchical representations from raw input data. Lower layers identify lower-level features while higher layers integrate these into more complex patterns. Deep learning models are trained on large datasets by adjusting weights to minimize error. Applications discussed include image recognition, natural language processing, drug discovery, and analyzing satellite imagery. Both advantages like state-of-the-art performance and drawbacks like high computational costs are outlined.

Recurrent Neural Network (RNN) | RNN LSTM Tutorial | Deep Learning Course | S...

Recurrent Neural Network (RNN) | RNN LSTM Tutorial | Deep Learning Course | S...Simplilearn This presentation on Recurrent Neural Network will help you understand what is a neural network, what are the popular neural networks, why we need recurrent neural network, what is a recurrent neural network, how does a RNN work, what is vanishing and exploding gradient problem, what is LSTM and you will also see a use case implementation of LSTM (Long short term memory). Neural networks used in Deep Learning consists of different layers connected to each other and work on the structure and functions of the human brain. It learns from huge volumes of data and used complex algorithms to train a neural net. The recurrent neural network works on the principle of saving the output of a layer and feeding this back to the input in order to predict the output of the layer. Now lets deep dive into this presentation and understand what is RNN and how does it actually work.

Below topics are explained in this recurrent neural networks tutorial:

1. What is a neural network?

2. Popular neural networks?

3. Why recurrent neural network?

4. What is a recurrent neural network?

5. How does an RNN work?

6. Vanishing and exploding gradient problem

7. Long short term memory (LSTM)

8. Use case implementation of LSTM

Simplilearn’s Deep Learning course will transform you into an expert in deep learning techniques using TensorFlow, the open-source software library designed to conduct machine learning & deep neural network research. With our deep learning course, you'll master deep learning and TensorFlow concepts, learn to implement algorithms, build artificial neural networks and traverse layers of data abstraction to understand the power of data and prepare you for your new role as deep learning scientist.

Why Deep Learning?

It is one of the most popular software platforms used for deep learning and contains powerful tools to help you build and implement artificial neural networks.

Advancements in deep learning are being seen in smartphone applications, creating efficiencies in the power grid, driving advancements in healthcare, improving agricultural yields, and helping us find solutions to climate change. With this Tensorflow course, you’ll build expertise in deep learning models, learn to operate TensorFlow to manage neural networks and interpret the results.

And according to payscale.com, the median salary for engineers with deep learning skills tops $120,000 per year.

You can gain in-depth knowledge of Deep Learning by taking our Deep Learning certification training course. With Simplilearn’s Deep Learning course, you will prepare for a career as a Deep Learning engineer as you master concepts and techniques including supervised and unsupervised learning, mathematical and heuristic aspects, and hands-on modeling to develop algorithms. Those who complete the course will be able to:

Learn more at: https://www.simplilearn.com/

Deep Learning in Computer Vision

Deep Learning in Computer VisionSungjoon Choi Deep Learning in Computer Vision Applications

1. Basics on Convolutional Neural Network

2. Otimization Methods (Momentum, AdaGrad, RMSProp, Adam, etc)

3. Semantic Segmentation

4. Class Activation Map

5. Object Detection

6. Recurrent Neural Network

7. Visual Question and Answering

8. Word2Vec (Word embedding)

9. Image Captioning

CNN Tutorial

CNN TutorialSungjoon Choi This document provides an overview of convolutional neural networks and summarizes four popular CNN architectures: AlexNet, VGG, GoogLeNet, and ResNet. It explains that CNNs are made up of convolutional and subsampling layers for feature extraction followed by dense layers for classification. It then briefly describes key aspects of each architecture like ReLU activation, inception modules, residual learning blocks, and their performance on image classification tasks.

Similar to Convolutional Neural Network (CNN) presentation from theory to code in Theano (20)

Idea for ineractive programming language

Idea for ineractive programming languageLincoln Hannah Notebooks such as Jupyter give programming languages a level of interactivity approaching that of spreadsheets.

I present here an idea for a programming language specifically designed for an interactive environment similar to a notebook.

It aims to combining the power of a programming language with the usability of a spreadsheet.

Instead of free-form code, the user creates fields / columns, but these can be combined into tables and object classes.

By decoratively cycling through field elements, loops and other programming constructs can be created.

I give examples from classical computer science, machine learning and mathematical finance, specifically:

Nth Prime Number, 8 Queens, Poker Hand, Travelling Salesman, Linear Regression, VaR Attribution

8. Vectors data frames

8. Vectors data framesExternalEvents As part of the GSP’s capacity development and improvement programme, FAO/GSP have organised a one week training in Izmir, Turkey. The main goal of the training was to increase the capacity of Turkey on digital soil mapping, new approaches on data collection, data processing and modelling of soil organic carbon. This 5 day training is titled ‘’Training on Digital Soil Organic Carbon Mapping’’ was held in IARTC - International Agricultural Research and Education Center in Menemen, Izmir on 20-25 August, 2017.

Design and Analysis of Algorithms-DP,Backtracking,Graphs,B&B

Design and Analysis of Algorithms-DP,Backtracking,Graphs,B&BSreedhar Chowdam Dynamic Programming

Backtracking

Techniques for Graphs

Branch and Bound

COCOA: Communication-Efficient Coordinate Ascent

COCOA: Communication-Efficient Coordinate Ascentjeykottalam This document summarizes the CoCoA algorithm for distributed optimization. CoCoA uses a primal-dual framework to solve machine learning problems efficiently when data is distributed across multiple machines. It allows local machines to immediately apply updates to their local dual variables, while averaging the local primal updates over a small number of machines. CoCoA guarantees convergence, requires low communication, and can be implemented in just a few lines of code in systems like Spark. It improves upon mini-batch approaches by handling methods beyond stochastic gradient descent and avoiding issues with stale updates.

Introduction to Neural Networks and Deep Learning from Scratch

Introduction to Neural Networks and Deep Learning from ScratchAhmed BESBES If you're willing to understand how neural networks work behind the scene and debug the back-propagation algorithm step by step by yourself, this presentation should be a good starting point.

We'll cover elements on:

- the popularity of neural networks and their applications

- the artificial neuron and the analogy with the biological one

- the perceptron

- the architecture of multi-layer perceptrons

- loss functions

- activation functions

- the gradient descent algorithm

At the end, there will be an implementation FROM SCRATCH of a fully functioning neural net.

code: https://github.com/ahmedbesbes/Neural-Network-from-scratch

Leveraging R in Big Data of Mobile Ads (R在行動廣告大數據的應用)

Leveraging R in Big Data of Mobile Ads (R在行動廣告大數據的應用)Craig Chao 1. Introduction of Mobile Ads

2. Prediction Model in Mobile Ads

3. Logistic Regression & Matrix Factorization

4. Factorization Machine in R

Primitives

PrimitivesNageswara Rao Gottipati This document discusses algorithms for drawing 2D graphics primitives like lines, triangles, and circles in computer graphics. It begins by introducing basic concepts like coordinate systems, pixels, and graphics APIs. It then covers algorithms for drawing lines, including the slope-intercept method, DDA algorithm, and Bresenham's line drawing algorithm, which uses only integer calculations for better performance. Finally, it briefly mentions extending these techniques to draw other shapes like circles and curves, as well as filling shapes.

Tutorial on convolutional neural networks

Tutorial on convolutional neural networksHojin Yang Tutorial on Convolutional Neural Networks. Made for people with some knowledge of basic neural networks.

Count-Distinct Problem

Count-Distinct ProblemKai Zhang The document discusses count-distinct algorithms for estimating the cardinality of large data streams. It provides an overview of the history of count-distinct algorithms, from early linear counting approaches to modern algorithms like LogLog counting and HyperLogLog counting. The document then describes the basic ideas, algorithms, and implementations of LogLog counting and HyperLogLog counting. It analyzes the performance of these algorithms and discusses open issues like how to handle small and large cardinalities more accurately.

Computer graphics

Computer graphicsBala Murali The document discusses 2D and 3D transformations used in computer graphics. It covers topics like scaling, rotation, translation and how they can be represented using matrices. Matrix representations allow multiple transformations to be combined through matrix multiplication. Both linear transformations like scaling and rotation, as well as affine transformations like translation, can be captured with matrices. The order of matrix multiplications is important, as transformations are not commutative. These concepts extend from 2D to 3D graphics using homogeneous coordinates.

ECCV2010: feature learning for image classification, part 2

ECCV2010: feature learning for image classification, part 2zukun The document discusses sparse coding, an unsupervised machine learning technique for image representation. Sparse coding learns a dictionary of basic image features called bases from unlabeled image data. It then represents each image as a sparse linear combination of the bases. This produces a more compact representation than raw pixels and interprets images as combinations of basic visual concepts like edges. The technique was inspired by representations in the visual cortex and can be combined with features like SIFT for improved performance.

Yoyak ScalaDays 2015

Yoyak ScalaDays 2015ihji The document summarizes a presentation titled "Yoyak" given by Heejong Lee at ScalaDays 2015. The presentation introduces Yoyak, a static analysis framework developed by the speaker. It covers the following topics:

- Static analysis and abstract interpretation theory

- Implementation highlights of the Yoyak framework

- Experiences using Scala in developing Yoyak

- The roadmap for future development of Yoyak

Vectors data frames

Vectors data framesFAO FAO - Global Soil Partnership training on Digital Soil Organic Carbon Mapping by Mr. Yusuf Yigini, 20 - 24 January 2018, Tehran, Iran. (Day 2, 2)

Lecture 2: Stochastic Hydrology

Lecture 2: Stochastic Hydrology Amro Elfeki - The document discusses representation of stochastic processes in real and spectral domains and Monte Carlo sampling.

- Stochastic processes can be represented in the real (time or space) domain using autocorrelation and variogram functions, and in the spectral domain using power spectral density functions.

- Monte Carlo sampling uses techniques to generate random numbers from a probability density function for random sampling.

Computer graphics 2

Computer graphics 2Prabin Gautam The document discusses computer graphics concepts like points, pixels, lines, and circles. It begins with definitions of pixels and how they relate to points in geometry. It then covers the basic structure for specifying points in OpenGL and how to draw points, lines, and triangles. Next, it discusses algorithms for drawing lines, including the digital differential analyzer (DDA) method and Bresenham's line algorithm. Finally, it covers circle drawing and introduces the mid-point circle algorithm. In summary:

1) It defines key computer graphics concepts like pixels, points, lines, and circles.

2) It explains the basic OpenGL functions for drawing points and lines and provides examples of drawing simple shapes.

3) It

Seminar PSU 09.04.2013 - 10.04.2013 MiFIT, Arbuzov Vyacheslav

Seminar PSU 09.04.2013 - 10.04.2013 MiFIT, Arbuzov VyacheslavVyacheslav Arbuzov Using R in financial modeling provides an introduction to using R for financial applications. It discusses importing stock price data from various sources and visualizing it using basic graphs and technical indicators. It also covers topics like calculating returns, estimating distributions of returns, correlations, volatility modeling, and value at risk calculations. The document provides examples of commands and functions in R to perform these financial analytics tasks on sample stock price data.

Linear Algebra and Matlab tutorial

Linear Algebra and Matlab tutorialJia-Bin Huang - The document provides an introduction to linear algebra and MATLAB. It discusses various linear algebra concepts like vectors, matrices, tensors, and operations on them.

- It then covers key MATLAB topics - basic data types, vector and matrix operations, control flow, plotting, and writing efficient code.

- The document emphasizes how linear algebra and MATLAB are closely related and commonly used together in applications like image and signal processing.

Lesson_8_DeepLearning.pdf

Lesson_8_DeepLearning.pdfssuser7f0b19 Machine learning allows computers to learn from data without being explicitly programmed. There are two main types of machine learning: supervised learning, where data points have known outcomes used to train a model to predict unknown outcomes, and unsupervised learning, where data points have unknown outcomes and the model finds hidden patterns in the data. Machine learning algorithms build a mathematical model based on sample data, known as "training data", in order to make predictions or decisions without being explicitly programmed to perform the task.

Ad

Recently uploaded (20)

Process Mining and Official Statistics - CBS

Process Mining and Official Statistics - CBSProcess mining Evangelist Johan Lammers from Statistics Netherlands has been a business analyst and statistical researcher for almost 30 years. In their business, processes have two faces: You can produce statistics about processes and processes are needed to produce statistics. As a government-funded office, the efficiency and the effectiveness of their processes is important to spend that public money well.

Johan takes us on a journey of how official statistics are made. One way to study dynamics in statistics is to take snapshots of data over time. A special way is the panel survey, where a group of cases is followed over time. He shows how process mining could test certain hypotheses much faster compared to statistical tools like SPSS.

How to regulate and control your it-outsourcing provider with process mining

How to regulate and control your it-outsourcing provider with process miningProcess mining Evangelist Oliver Wildenstein is an IT process manager at MLP. As in many other IT departments, he works together with external companies who perform supporting IT processes for his organization. With process mining he found a way to monitor these outsourcing providers.

Rather than having to believe the self-reports from the provider, process mining gives him a controlling mechanism for the outsourced process. Because such analyses are usually not foreseen in the initial outsourcing contract, companies often have to pay extra to get access to the data for their own process.

2024-Media-Literacy-Index-Of-Ukrainians-ENG-SHORT.pdf

2024-Media-Literacy-Index-Of-Ukrainians-ENG-SHORT.pdfOlhaTatokhina1 Media Literacy Index of Ukrainians for 2024 and its dynamics compared to previous years.

Adopting Process Mining at the Rabobank - use case

Adopting Process Mining at the Rabobank - use caseProcess mining Evangelist Frank van Geffen is a Process Innovator at the Rabobank. He realized that it took a lot of different disciplines and skills working together to achieve what they have achieved. It's not only about knowing what process mining is and how to operate the process mining tool. Instead, a lot of emphasis needs to be placed on the management of stakeholders and on presenting insights in a meaningful way for them.

The results speak for themselves: In their IT service desk improvement project, they could already save 50,000 steps by reducing rework and preventing incidents from being raised. In another project, business expense claim turnaround time has been reduced from 11 days to 1.2 days. They could also analyze their cross-channel mortgage customer journey process.

定制(意大利Rimini毕业证)布鲁诺马代尔纳嘉雷迪米音乐学院学历认证

定制(意大利Rimini毕业证)布鲁诺马代尔纳嘉雷迪米音乐学院学历认证Taqyea 2025年新版意大利毕业证布鲁诺马代尔纳嘉雷迪米音乐学院文凭【q微1954292140】办理布鲁诺马代尔纳嘉雷迪米音乐学院毕业证(Rimini毕业证书)2025年新版毕业证书【q微1954292140】布鲁诺马代尔纳嘉雷迪米音乐学院offer/学位证、留信官方学历认证(永久存档真实可查)采用学校原版纸张、特殊工艺完全按照原版一比一制作【q微1954292140】Buy Conservatorio di Musica "B.Maderna G.Lettimi" Diploma购买美国毕业证,购买英国毕业证,购买澳洲毕业证,购买加拿大毕业证,以及德国毕业证,购买法国毕业证(q微1954292140)购买荷兰毕业证、购买瑞士毕业证、购买日本毕业证、购买韩国毕业证、购买新西兰毕业证、购买新加坡毕业证、购买西班牙毕业证、购买马来西亚毕业证等。包括了本科毕业证,硕士毕业证。

主营项目:

1、真实教育部国外学历学位认证《意大利毕业文凭证书快速办理布鲁诺马代尔纳嘉雷迪米音乐学院毕业证定购》【q微1954292140】《论文没过布鲁诺马代尔纳嘉雷迪米音乐学院正式成绩单》,教育部存档,教育部留服网站100%可查.

2、办理Rimini毕业证,改成绩单《Rimini毕业证明办理布鲁诺马代尔纳嘉雷迪米音乐学院办理文凭》【Q/WeChat:1954292140】Buy Conservatorio di Musica "B.Maderna G.Lettimi" Certificates《正式成绩单论文没过》,布鲁诺马代尔纳嘉雷迪米音乐学院Offer、在读证明、学生卡、信封、证明信等全套材料,从防伪到印刷,从水印到钢印烫金,高精仿度跟学校原版100%相同.

3、真实使馆认证(即留学人员回国证明),使馆存档可通过大使馆查询确认.

4、留信网认证,国家专业人才认证中心颁发入库证书,留信网存档可查.

《布鲁诺马代尔纳嘉雷迪米音乐学院留服认证意大利毕业证书办理Rimini文凭不见了怎么办》【q微1954292140】学位证1:1完美还原海外各大学毕业材料上的工艺:水印,阴影底纹,钢印LOGO烫金烫银,LOGO烫金烫银复合重叠。文字图案浮雕、激光镭射、紫外荧光、温感、复印防伪等防伪工艺。

高仿真还原意大利文凭证书和外壳,定制意大利布鲁诺马代尔纳嘉雷迪米音乐学院成绩单和信封。毕业证定制Rimini毕业证【q微1954292140】办理意大利布鲁诺马代尔纳嘉雷迪米音乐学院毕业证(Rimini毕业证书)【q微1954292140】学位证书制作代办流程布鲁诺马代尔纳嘉雷迪米音乐学院offer/学位证成绩单激光标、留信官方学历认证(永久存档真实可查)采用学校原版纸张、特殊工艺完全按照原版一比一制作。帮你解决布鲁诺马代尔纳嘉雷迪米音乐学院学历学位认证难题。

意大利文凭布鲁诺马代尔纳嘉雷迪米音乐学院成绩单,Rimini毕业证【q微1954292140】办理意大利布鲁诺马代尔纳嘉雷迪米音乐学院毕业证(Rimini毕业证书)【q微1954292140】安全可靠的布鲁诺马代尔纳嘉雷迪米音乐学院offer/学位证办理原版成绩单、留信官方学历认证(永久存档真实可查)采用学校原版纸张、特殊工艺完全按照原版一比一制作。帮你解决布鲁诺马代尔纳嘉雷迪米音乐学院学历学位认证难题。

意大利文凭购买,意大利文凭定制,意大利文凭补办。专业在线定制意大利大学文凭,定做意大利本科文凭,【q微1954292140】复制意大利Conservatorio di Musica "B.Maderna G.Lettimi" completion letter。在线快速补办意大利本科毕业证、硕士文凭证书,购买意大利学位证、布鲁诺马代尔纳嘉雷迪米音乐学院Offer,意大利大学文凭在线购买。

如果您在英、加、美、澳、欧洲等留学过程中或回国后:

1、在校期间因各种原因未能顺利毕业《Rimini成绩单工艺详解》【Q/WeChat:1954292140】《Buy Conservatorio di Musica "B.Maderna G.Lettimi" Transcript快速办理布鲁诺马代尔纳嘉雷迪米音乐学院教育部学历认证书毕业文凭证书》,拿不到官方毕业证;

2、面对父母的压力,希望尽快拿到;

3、不清楚认证流程以及材料该如何准备;

4、回国时间很长,忘记办理;

5、回国马上就要找工作《正式成绩单布鲁诺马代尔纳嘉雷迪米音乐学院文凭详解细节》【q微1954292140】《研究生文凭Rimini毕业证详解细节》办给用人单位看;

6、企事业单位必须要求办理的;

7、需要报考公务员、购买免税车、落转户口、申请留学生创业基金。

【q微1954292140】帮您解决在意大利布鲁诺马代尔纳嘉雷迪米音乐学院未毕业难题(Conservatorio di Musica "B.Maderna G.Lettimi" )文凭购买、毕业证购买、大学文凭购买、大学毕业证购买、买文凭、日韩文凭、英国大学文凭、美国大学文凭、澳洲大学文凭、加拿大大学文凭(q微1954292140)新加坡大学文凭、新西兰大学文凭、爱尔兰文凭、西班牙文凭、德国文凭、教育部认证,买毕业证,毕业证购买,买大学文凭,购买日韩毕业证、英国大学毕业证、美国大学毕业证、澳洲大学毕业证、加拿大大学毕业证(q微1954292140)新加坡大学毕业证、新西兰大学毕业证、爱尔兰毕业证、西班牙毕业证、德国毕业证,回国证明,留信网认证,留信认证办理,学历认证。从而完成就业。布鲁诺马代尔纳嘉雷迪米音乐学院毕业证办理,布鲁诺马代尔纳嘉雷迪米音乐学院文凭办理,布鲁诺马代尔纳嘉雷迪米音乐学院成绩单办理和真实留信认证、留服认证、布鲁诺马代尔纳嘉雷迪米音乐学院学历认证。学院文凭定制,布鲁诺马代尔纳嘉雷迪米音乐学院原版文凭补办,扫描件文凭定做,100%文凭复刻。

特殊原因导致无法毕业,也可以联系我们帮您办理相关材料:

1:在布鲁诺马代尔纳嘉雷迪米音乐学院挂科了,不想读了,成绩不理想怎么办???

2:打算回国了,找工作的时候,需要提供认证《Rimini成绩单购买办理布鲁诺马代尔纳嘉雷迪米音乐学院毕业证书范本》【Q/WeChat:1954292140】Buy Conservatorio di Musica "B.Maderna G.Lettimi" Diploma《正式成绩单论文没过》有文凭却得不到认证。又该怎么办???意大利毕业证购买,意大利文凭购买,

3:回国了找工作没有布鲁诺马代尔纳嘉雷迪米音乐学院文凭怎么办?有本科却要求硕士又怎么办?

录取通知书加拿大TMU毕业证多伦多都会大学电子版毕业证成绩单

录取通知书加拿大TMU毕业证多伦多都会大学电子版毕业证成绩单Taqyea 保密服务多伦多都会大学英文毕业证书影本加拿大成绩单多伦多都会大学文凭【q微1954292140】办理多伦多都会大学学位证(TMU毕业证书)成绩单VOID底纹防伪【q微1954292140】帮您解决在加拿大多伦多都会大学未毕业难题(Toronto Metropolitan University)文凭购买、毕业证购买、大学文凭购买、大学毕业证购买、买文凭、日韩文凭、英国大学文凭、美国大学文凭、澳洲大学文凭、加拿大大学文凭(q微1954292140)新加坡大学文凭、新西兰大学文凭、爱尔兰文凭、西班牙文凭、德国文凭、教育部认证,买毕业证,毕业证购买,买大学文凭,购买日韩毕业证、英国大学毕业证、美国大学毕业证、澳洲大学毕业证、加拿大大学毕业证(q微1954292140)新加坡大学毕业证、新西兰大学毕业证、爱尔兰毕业证、西班牙毕业证、德国毕业证,回国证明,留信网认证,留信认证办理,学历认证。从而完成就业。多伦多都会大学毕业证办理,多伦多都会大学文凭办理,多伦多都会大学成绩单办理和真实留信认证、留服认证、多伦多都会大学学历认证。学院文凭定制,多伦多都会大学原版文凭补办,扫描件文凭定做,100%文凭复刻。

特殊原因导致无法毕业,也可以联系我们帮您办理相关材料:

1:在多伦多都会大学挂科了,不想读了,成绩不理想怎么办???

2:打算回国了,找工作的时候,需要提供认证《TMU成绩单购买办理多伦多都会大学毕业证书范本》【Q/WeChat:1954292140】Buy Toronto Metropolitan University Diploma《正式成绩单论文没过》有文凭却得不到认证。又该怎么办???加拿大毕业证购买,加拿大文凭购买,【q微1954292140】加拿大文凭购买,加拿大文凭定制,加拿大文凭补办。专业在线定制加拿大大学文凭,定做加拿大本科文凭,【q微1954292140】复制加拿大Toronto Metropolitan University completion letter。在线快速补办加拿大本科毕业证、硕士文凭证书,购买加拿大学位证、多伦多都会大学Offer,加拿大大学文凭在线购买。

加拿大文凭多伦多都会大学成绩单,TMU毕业证【q微1954292140】办理加拿大多伦多都会大学毕业证(TMU毕业证书)【q微1954292140】学位证书电子图在线定制服务多伦多都会大学offer/学位证offer办理、留信官方学历认证(永久存档真实可查)采用学校原版纸张、特殊工艺完全按照原版一比一制作。帮你解决多伦多都会大学学历学位认证难题。

主营项目:

1、真实教育部国外学历学位认证《加拿大毕业文凭证书快速办理多伦多都会大学毕业证书不见了怎么办》【q微1954292140】《论文没过多伦多都会大学正式成绩单》,教育部存档,教育部留服网站100%可查.

2、办理TMU毕业证,改成绩单《TMU毕业证明办理多伦多都会大学学历认证定制》【Q/WeChat:1954292140】Buy Toronto Metropolitan University Certificates《正式成绩单论文没过》,多伦多都会大学Offer、在读证明、学生卡、信封、证明信等全套材料,从防伪到印刷,从水印到钢印烫金,高精仿度跟学校原版100%相同.

3、真实使馆认证(即留学人员回国证明),使馆存档可通过大使馆查询确认.

4、留信网认证,国家专业人才认证中心颁发入库证书,留信网存档可查.

《多伦多都会大学学位证购买加拿大毕业证书办理TMU假学历认证》【q微1954292140】学位证1:1完美还原海外各大学毕业材料上的工艺:水印,阴影底纹,钢印LOGO烫金烫银,LOGO烫金烫银复合重叠。文字图案浮雕、激光镭射、紫外荧光、温感、复印防伪等防伪工艺。

高仿真还原加拿大文凭证书和外壳,定制加拿大多伦多都会大学成绩单和信封。学历认证证书电子版TMU毕业证【q微1954292140】办理加拿大多伦多都会大学毕业证(TMU毕业证书)【q微1954292140】毕业证书样本多伦多都会大学offer/学位证学历本科证书、留信官方学历认证(永久存档真实可查)采用学校原版纸张、特殊工艺完全按照原版一比一制作。帮你解决多伦多都会大学学历学位认证难题。

多伦多都会大学offer/学位证、留信官方学历认证(永久存档真实可查)采用学校原版纸张、特殊工艺完全按照原版一比一制作【q微1954292140】Buy Toronto Metropolitan University Diploma购买美国毕业证,购买英国毕业证,购买澳洲毕业证,购买加拿大毕业证,以及德国毕业证,购买法国毕业证(q微1954292140)购买荷兰毕业证、购买瑞士毕业证、购买日本毕业证、购买韩国毕业证、购买新西兰毕业证、购买新加坡毕业证、购买西班牙毕业证、购买马来西亚毕业证等。包括了本科毕业证,硕士毕业证。

717239550-Hotel-Management-Ppt-Final.pptx

717239550-Hotel-Management-Ppt-Final.pptxdharmendrasingh31102 iydqgdutauybdibafyvseufvsVhsjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjvCSUYEVkSJekiyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyfgyyyyyyyyyyyyyyyyyyyyyyyiydqgdutauybdibafyvseufvsVhsjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjvCSUYEVkSJekiyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyfgyyyyyyyyyyyyyyyyyyyyyyyiydqgdutauybdibafyvseufvsVhsjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjvCSUYEVkSJekiyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyfgyyyyyyyyyyyyyyyyyyyyyyyiydqgdutauybdibafyvseufvsVhsjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjvCSUYEVkSJekiyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyfgyyyyyyyyyyyyyyyyyyyyyyyiydqgdutauybdibafyvseufvsVhsjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjvCSUYEVkSJekiyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyfgyyyyyyyyyyyyyyyyyyyyyyyiydqgdutauybdibafyvseufvsVhsjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjvCSUYEVkSJekiyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyfgyyyyyyyyyyyyyyyyyyyyyyyiydqgdutauybdibafyvseufvsVhsjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjvCSUYEVkSJekiyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyfgyyyyyyyyyyyyyyyyyyyyyyyiydqgdutauybdibafyvseufvsVhsjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjvCSUYEVkSJekiyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyfgyyyyyyyyyyyyyyyyyyyyyyyiydqgdutauybdibafyvseufvsVhsjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjvCSUYEVkSJekiyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyfgyyyyyyyyyyyyyyyyyyyyyyyiydqgdutauybdibafyvseufvsVhsjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjvCSUYEVkSJekiyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyfgyyyyyyyyyyyyyyyyyyyyyyyiydqgdutauybdibafyvseufvsVhsjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjjvCSUYEVkSJekiyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyy

Process Mining at AE - Key success factors

Process Mining at AE - Key success factorsProcess mining Evangelist Bram Vanschoenwinkel is a Business Architect at AE. Bram first heard about process mining in 2008 or 2009, when he was searching for new techniques with a quantitative approach to process analysis. By now he has completed several projects in payroll accounting, public administration, and postal services.

The discovered AS IS process models are based on facts rather than opinions and, therefore, serve as the ideal starting point for change. Bram uses process mining not as a standalone technique but complementary and in combination with other techniques to focus on what is really important: Actually improving the process.

Suncorp - Integrating Process Mining at Australia's Largest Insurer

Suncorp - Integrating Process Mining at Australia's Largest InsurerProcess mining Evangelist Mitchell Cunningham is a process analyst with experience across the business process management lifecycle. He has a particular interest in process performance measurement and the integration of process performance data into existing process management methodologies.

Suncorp has an established BPM team and a single claims-processing IT platform. They have been integrating process mining into their process management methodology at a range of points across the process lifecycle. They have also explored connecting process mining results to service process outcome measures, like customer satisfaction. Mitch gives an overview of the key successes, challenges and lessons learned.

FPET_Implementation_2_MA to 360 Engage Direct.pptx

FPET_Implementation_2_MA to 360 Engage Direct.pptxssuser4ef83d Engage Direct 360 marketing optimization sas entreprise miner

Deloitte Analytics - Applying Process Mining in an audit context

Deloitte Analytics - Applying Process Mining in an audit contextProcess mining Evangelist Mieke Jans is a Manager at Deloitte Analytics Belgium. She learned about process mining from her PhD supervisor while she was collaborating with a large SAP-using company for her dissertation.

Mieke extended her research topic to investigate the data availability of process mining data in SAP and the new analysis possibilities that emerge from it. It took her 8-9 months to find the right data and prepare it for her process mining analysis. She needed insights from both process owners and IT experts. For example, one person knew exactly how the procurement process took place at the front end of SAP, and another person helped her with the structure of the SAP-tables. She then combined the knowledge of these different persons.

Automation Platforms and Process Mining - success story

Automation Platforms and Process Mining - success storyProcess mining Evangelist

Zig Websoftware creates process management software for housing associations. Their workflow solution is used by the housing associations to, for instance, manage the process of finding and on-boarding a new tenant once the old tenant has moved out of an apartment.

Paul Kooij shows how they could help their customer WoonFriesland to improve the housing allocation process by analyzing the data from Zig's platform. Every day that a rental property is vacant costs the housing association money.

But why does it take so long to find new tenants? For WoonFriesland this was a black box. Paul explains how he used process mining to uncover hidden opportunities to reduce the vacancy time by 4,000 days within just the first six months.

新西兰文凭奥克兰理工大学毕业证书AUT成绩单补办

新西兰文凭奥克兰理工大学毕业证书AUT成绩单补办Taqyea 快速办理新西兰成绩单奥克兰理工大学毕业证【q微1954292140】办理奥克兰理工大学毕业证(AUT毕业证书)diploma学位认证【q微1954292140】新西兰文凭购买,新西兰文凭定制,新西兰文凭补办。专业在线定制新西兰大学文凭,定做新西兰本科文凭,【q微1954292140】复制新西兰Auckland University of Technology completion letter。在线快速补办新西兰本科毕业证、硕士文凭证书,购买新西兰学位证、奥克兰理工大学Offer,新西兰大学文凭在线购买。

主营项目:

1、真实教育部国外学历学位认证《新西兰毕业文凭证书快速办理奥克兰理工大学毕业证的方法是什么?》【q微1954292140】《论文没过奥克兰理工大学正式成绩单》,教育部存档,教育部留服网站100%可查.

2、办理AUT毕业证,改成绩单《AUT毕业证明办理奥克兰理工大学展示成绩单模板》【Q/WeChat:1954292140】Buy Auckland University of Technology Certificates《正式成绩单论文没过》,奥克兰理工大学Offer、在读证明、学生卡、信封、证明信等全套材料,从防伪到印刷,从水印到钢印烫金,高精仿度跟学校原版100%相同.

3、真实使馆认证(即留学人员回国证明),使馆存档可通过大使馆查询确认.

4、留信网认证,国家专业人才认证中心颁发入库证书,留信网存档可查.

《奥克兰理工大学毕业证定制新西兰毕业证书办理AUT在线制作本科文凭》【q微1954292140】学位证1:1完美还原海外各大学毕业材料上的工艺:水印,阴影底纹,钢印LOGO烫金烫银,LOGO烫金烫银复合重叠。文字图案浮雕、激光镭射、紫外荧光、温感、复印防伪等防伪工艺。

高仿真还原新西兰文凭证书和外壳,定制新西兰奥克兰理工大学成绩单和信封。专业定制国外毕业证书AUT毕业证【q微1954292140】办理新西兰奥克兰理工大学毕业证(AUT毕业证书)【q微1954292140】学历认证复核奥克兰理工大学offer/学位证成绩单定制、留信官方学历认证(永久存档真实可查)采用学校原版纸张、特殊工艺完全按照原版一比一制作。帮你解决奥克兰理工大学学历学位认证难题。

新西兰文凭奥克兰理工大学成绩单,AUT毕业证【q微1954292140】办理新西兰奥克兰理工大学毕业证(AUT毕业证书)【q微1954292140】学位认证要多久奥克兰理工大学offer/学位证在线制作硕士成绩单、留信官方学历认证(永久存档真实可查)采用学校原版纸张、特殊工艺完全按照原版一比一制作。帮你解决奥克兰理工大学学历学位认证难题。

奥克兰理工大学offer/学位证、留信官方学历认证(永久存档真实可查)采用学校原版纸张、特殊工艺完全按照原版一比一制作【q微1954292140】Buy Auckland University of Technology Diploma购买美国毕业证,购买英国毕业证,购买澳洲毕业证,购买加拿大毕业证,以及德国毕业证,购买法国毕业证(q微1954292140)购买荷兰毕业证、购买瑞士毕业证、购买日本毕业证、购买韩国毕业证、购买新西兰毕业证、购买新加坡毕业证、购买西班牙毕业证、购买马来西亚毕业证等。包括了本科毕业证,硕士毕业证。

特殊原因导致无法毕业,也可以联系我们帮您办理相关材料:

1:在奥克兰理工大学挂科了,不想读了,成绩不理想怎么办???

2:打算回国了,找工作的时候,需要提供认证《AUT成绩单购买办理奥克兰理工大学毕业证书范本》【Q/WeChat:1954292140】Buy Auckland University of Technology Diploma《正式成绩单论文没过》有文凭却得不到认证。又该怎么办???新西兰毕业证购买,新西兰文凭购买,

【q微1954292140】帮您解决在新西兰奥克兰理工大学未毕业难题(Auckland University of Technology)文凭购买、毕业证购买、大学文凭购买、大学毕业证购买、买文凭、日韩文凭、英国大学文凭、美国大学文凭、澳洲大学文凭、加拿大大学文凭(q微1954292140)新加坡大学文凭、新西兰大学文凭、爱尔兰文凭、西班牙文凭、德国文凭、教育部认证,买毕业证,毕业证购买,买大学文凭,购买日韩毕业证、英国大学毕业证、美国大学毕业证、澳洲大学毕业证、加拿大大学毕业证(q微1954292140)新加坡大学毕业证、新西兰大学毕业证、爱尔兰毕业证、西班牙毕业证、德国毕业证,回国证明,留信网认证,留信认证办理,学历认证。从而完成就业。奥克兰理工大学毕业证办理,奥克兰理工大学文凭办理,奥克兰理工大学成绩单办理和真实留信认证、留服认证、奥克兰理工大学学历认证。学院文凭定制,奥克兰理工大学原版文凭补办,扫描件文凭定做,100%文凭复刻。

hersh's midterm project.pdf music retail and distribution

hersh's midterm project.pdf music retail and distributionhershtara1 midterm project for music retail and distribution

Customer Segmentation using K-Means clustering

Customer Segmentation using K-Means clusteringIngrid Nyakerario This project demonstrates the application of machine learning—specifically K-Means Clustering—to segment customers based on behavioral and demographic data. The objective is to identify distinct customer groups to enable targeted marketing strategies and personalized customer engagement.

The presentation walks through:

Data preprocessing and exploratory data analysis (EDA)

Feature scaling and dimensionality reduction

K-Means clustering and silhouette analysis

Insights and business recommendations from each customer segment

This work showcases practical data science skills applied to a real-world business problem, using Python and visualization tools to generate actionable insights for decision-makers.

indonesia-gen-z-report-2024 Gen Z (born between 1997 and 2012) is currently t...

indonesia-gen-z-report-2024 Gen Z (born between 1997 and 2012) is currently t...disnakertransjabarda Gen Z (born between 1997 and 2012) is currently the biggest generation group in Indonesia with 27.94% of the total population or. 74.93 million people.

Analysis of Billboards hot 100 toop five hit makers on the chart.docx

Analysis of Billboards hot 100 toop five hit makers on the chart.docxhershtara1 We had to do an analysis of the top 5 on the billboard 100

Chapter-3-PROBLEM-SOLVING.pdf hhhhhhhhhh

Chapter-3-PROBLEM-SOLVING.pdf hhhhhhhhhhChrisjohnAlfiler math fhxjrjxtckcktcktckttkctkctkvtkvtkvtovyovyovoyvtovvlyyovvoyvyotvlvottovtovvotvtovtotvovotvotvtovtovtovotvtlvltlvtvlt

How to regulate and control your it-outsourcing provider with process mining

How to regulate and control your it-outsourcing provider with process miningProcess mining Evangelist

indonesia-gen-z-report-2024 Gen Z (born between 1997 and 2012) is currently t...

indonesia-gen-z-report-2024 Gen Z (born between 1997 and 2012) is currently t...disnakertransjabarda

Ad

Convolutional Neural Network (CNN) presentation from theory to code in Theano

- 1. M&S Convolutional Neural Network from Theory to Code Seongwon Hwang

- 5. M&S Range 𝑎 𝑏 𝑐 𝑑 𝑎 𝑏 𝑐 𝑑 𝑒 𝑓 𝑔 ℎ 𝑖 𝑎 𝑏 𝑒 𝑓 𝑐 𝑑 𝑔 ℎ 𝑖 𝑗 𝑚 𝑛 𝑘 𝑙 𝑜 𝑝 𝐴𝑖𝑗 i = 2, j = 2 i = 3, j = 3 i = 4, j = 4 𝑎 𝑏 𝑐 𝑑 𝑒 𝑓 i = 3, j = 2

- 6. M&S Free index : unrepeated index 𝑦1 = 𝑎11 𝑥1+𝑎12 𝑥2 𝑦2 = 𝑎21 𝑥1+𝑎22 𝑥2 𝑦 𝑘 = 𝑎 𝑘1 𝑥1+𝑎 𝑘2 𝑥2, k = 1,2 2 1i ikik xay ),( 21 yyy

- 7. M&S Dummy index : repeated index 1,2kixay ikik , Free index Dummy index 2 1i ikik xay 𝑦1 = 𝑎11 𝑥1+𝑎12 𝑥2 𝑦2 = 𝑎21 𝑥1+𝑎22 𝑥2

- 8. M&S Example iji BA jj BABA 2211 = 212111,1 BABAj 222121,2 BABAj ),( 222121212111 BABABABA iji BA = i, j = 1,2

- 9. M&S Example ijjA 332211 iii AAA ),,( 333322311233222211133122111 AAAAAAAAA 133122111,1 AAAi 233222211,2 AAAi 333322311,3 AAAi = i, j = 1,2,3

- 11. M&S Example – Determinant bcad dc ba aa aa A detdetdet 2221 1211 bcad aaaa aaeaaeaaeaae aae jiij 00 21122211 221222211221221112211111 21

- 12. M&S Example – Determinant )(...det 21... matrixNNaaaeA Nkjikij

- 14. M&S Derivatives kjjkjj xx y y x y y 2 ,, , 1,2,3jφ x φ z φ y φ x φ φgrad jj ),,( , Example – Gradient

- 15. M&S CNN Tensor notation in Theano - Input Images - 4D tensor 1D tensor [number of feature maps at layer m, number of feature maps at layer m-1, filter height, filter width] ij klx op qrW mb [ i, j, k, l ] = [ o, p, q, r ] = [ m ] = - Weight - - Bias - [n’th feature map number] [mini-batch size, number of input feature maps, image height, image width]

- 17. M&S Convolution? daatwax twxty )()( ))(()( a anwaxny ][][][ - Continuous Variables - - Discrete Variables -

- 18. M&S Convolution? a anwaxny ][][][ - Discrete Variables - ][][ awax ][][ awax )]([][ nawax Y-axis transformation

- 20. M&S Cross-Correlation? a nawaxnwxny ][][])[(][ - Discrete Variables (In real number) - ][][ awax ][][ nawax n step move ★

- 22. M&S Cross-Correlation in 2D Output (y) Kernel (w) Input (x) n m nmwjnimx jiwxjiy ],[],[ ],)[(],[

- 27. M&S wx ★ Intuition for Cross-Correlation Input )( mapFreature neuronHidden fieldrecptiveLocal filterorkernel

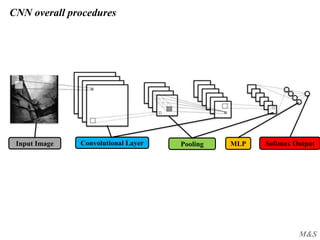

- 29. M&S CNN overall procedures Input Image Convolutional Layer Pooling MLP Softmax Output

- 30. M&S CNN overall procedures Input Image Convolutional Layer Pooling MLP Softmax Output

- 31. M&S Input Image Input Image 1D - Input neurons 2D - Input neurons

- 32. M&S Convolutional Layer Input Image Convolutional Layer Input neurons Hidden neuron

- 33. M&S Traditional Neural Network Input layer Hidden layer Output layer

- 34. M&S CNN - Sparse Connectivity Input layer Hidden layer (Feature map) Output layer

- 35. M&S CNN – Dimension shrinkage ( + Pooling) Input layer Output layerHidden layer (Feature map)

- 36. M&S Cross-Correlation Input (or Hidden) layer with weight Input layer Output layer 1W 2W 3W '3W '2W '1W Hidden layer (Feature map)(Receptive field)

- 37. M&S Shared Weight representation Input layer Output layer 1W 2W 3W '3W '2W '1W Hidden layer (Feature map)

- 38. M&S Shared Weight representation Input layer Output layer 1W 2W 3W '3W '2W '1W Hidden layer (Feature map)

- 39. M&S Shared Weight representation Input layer Output layer 1W 2W 3W '3W '2W '1W Hidden layer (Feature map)

- 40. M&S Shared Weight representation Input layer Output layer 1W 2W 3W '3W '2W '1W Hidden layer (Feature map)

- 41. M&S Shared Weight representation Input layer Output layer 1W 2W 3W '3W '2W '1W Hidden layer (Feature map)

- 42. M&S Shared Weight representation Input layer Output layer 1W 2W 3W '3W '2W '1W Hidden layer (Feature map)

- 43. M&S Multiple Feature maps Input Image Convolutional Layer Input neurons First hidden layer

- 44. M&S Max Pooling Input Image Convolutional Layer Pooling Max pooling with 2x2 filters and stride 2

- 45. M&S Why pooling? Input Image Convolutional Layer Pooling 1. Low computation 2. Translation invariance 3. Transformation invariance 4. Scaling invariance

- 46. M&S Several types of Pooling Input Image Convolutional Layer Pooling

- 47. M&S Transform data dimension before MLP Input Image Convolutional Layer Pooling MLP 1D - Output neurons 2D - Output neurons

- 48. M&S Multilayer Perceptron (MLP) Input Image Convolutional Layer Pooling MLP Input layer Hidden layer 1 Hidden layer 2

- 49. M&S Softmax Output Input Image Convolutional Layer Pooling MLP Softmax Output Hidden layer 2 Output layer

- 50. M&S Several types of CNN

- 51. M&S Intuition for CNN Input Image Convolutional Layer Pooling MLP Softmax Output

- 52. M&S Convolutional Neural Network Code in Theono

- 53. M&S CNN overall procedures Input Image Convolutional Layer Pooling MLP Softmax Output

- 54. M&S Input Image Input Image - Input Images - 4D tensor [mini-batch size, number of input feature maps, image height, image width]ij klx 5 ... 28 28 500 7 [ i, j, k, l ] = Mini batch 1 5 ... 28 28 500 8 Mini batch 100 . . . 50,000 images in the training data

- 55. M&S Weight tensor Input Image Convolutional Layer 4D tensor [number of feature maps at layer m, number of feature maps at layer m-1, filter height, filter width] op qrW [ o, p, q, r ] = - Weight -

- 56. M&S Exercise for Input and Weight tensor 11 11x 11 11W Input layer Convolutional layer 1 Convolutional layer 2 [ 1, 1, 1, 1 ] [ 1, 1, 1, 1 ]

- 57. M&S Code for Convolutional Layer 28 28 8 def evaluate_lenet5(learning_rate=0.1, n_epochs=2, dataset=‘minist.pkl.gz’, nkerns=[20, 50], batch_size=500): LeNetConvPoolLayer image_shape=(batch_size, 1, 28, 28) filter_shape=(nkerns[0], 1, 5, 5) poolsize=(2, 2) image_shape=(batch_size, nkerns[0], 12, 12) filter_shape=(nkerns[1], nkerns[0], 5, 5) poolsize=(2, 2) Layer0 – Convolutional layer 1 Layer1 – Convolutional layer 2 5 5 20 24 24 20 5 5 12 12 8 8 4 4 20 50 50 50 28 – 5 + 1 = 24 Convolution 24 / 2 = 12 Pooling 12 – 5 + 1 = 8 Convolution 8 / 2 = 4 Pooling Class

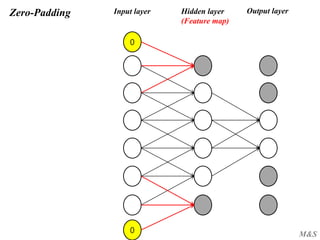

- 58. M&S Zero-Padding Input layer Output layerHidden layer (Feature map) Input Image Convolutional Layer Pooling

- 59. M&S Zero-Padding Input layer Output layerHidden layer (Feature map) 0 0

- 60. M&S Zero-Padding Input layer Output layerHidden layer (Feature map) 0 0

- 61. M&S Zero-Padding Input layer Output layerHidden layer (Feature map) 0 0

- 62. M&S Zero-Padding Input layer Output layerHidden layer (Feature map) 0 0

- 63. M&S Zero-Padding Input layer Output layerHidden layer (Feature map) 0 0 0 0

- 64. M&S Zero-Padding Input layer Output layerHidden layer (Feature map) 0 0 0 0

- 71. M&S Zero-Padding No zero-padding Zero-padding 1 Zero-padding 2 Dimension Reduction Dimension Equality Dimension Increase Zero- padding in Theano Default in Theano

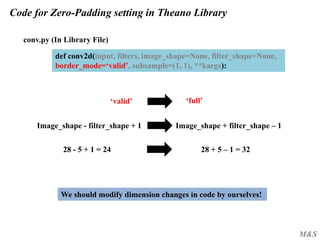

- 72. M&S Code for Zero-Padding setting in Theano Library conv.py (In Library File) def conv2d(input, filters, image_shape=None, filter_shape=None, border_mode=‘valid’, subsample=(1, 1), **kargs): ‘valid’ ‘full’ We should modify dimension changes in code by ourselves! Image_shape + filter_shape – 1Image_shape - filter_shape + 1 28 + 5 – 1 = 3228 - 5 + 1 = 24

- 73. M&S 1. Border in Pooling No problem in Border Ingore_border = False Ingore_border = True

- 74. M&S 1. Border in Pooling (Code) No problem in Border Ingore_border = False Ingore_border = True pooled_out = downsample.max_pool_2d(input=conv_out, ds=poolsize, ignore_border=True) LeNetConvPoolLayer Class Default in Theano library is False!

- 75. M&S 2. Stride in Pooling Default in Theano stride size = poolsize Unduplicated!

- 76. M&S 2. Stride in Pooling 2 Default in Theano stride size = poolsize Unduplicated!

- 77. M&S 2. Stride in Pooling 2 Default in Theano stride size = poolsize = (2,2) Unduplicated!

- 78. M&S 2. Stride in Pooling 2 Default in Theano stride size = poolsize = (2,2) Unduplicated!

- 79. M&S 2. Stride in Pooling 2 Default in Theano stride size = poolsize = (2,2) Unduplicated!

- 80. M&S 2. Stride in Pooling stride size = (1, 1) 6

- 81. M&S 2. Stride in Pooling 1 76

- 82. M&S 2. Stride in Pooling (Code) 76 8 6 7 8 3 3 4 pooled_out = downsample.max_pool_2d(input=conv_out, ds=poolsize, ignore_border=True) pooled_out = downsample.max_pool_2d(input=conv_out, ds=poolsize, ignore_border=True, st = (1,1))

- 83. M&S Activation Function in Convolutional Layer self.output=T.tanh(pooled_out + self.b.dimshuffle(‘x’,0,‘x’,‘x’) Convolution Pooling Activation LeNetConvPoolLayer Class

- 84. M&S Dimension Reduction 2D 1D Input Image Convolutional Layer Pooling MLP layer2_input = layer1_input.output.flatten(2) 1D - Output neurons 2D - Output neurons

- 85. M&S Code for MLP in Theano Input Image Convolutional Layer Pooling MLP layer2 = HiddenLayer( rng, input=layer2_input, n_in-nkerns[1] * 4 * 4, n_out=500, activation = T.tanh) HiddenLayer Class Last output size for C+P Number of node at Hidden layer Activation function at Hidden layer ***In order to extend the number of Hidden Layer in MLP, We need to make layer3 by copying this code***

- 86. M&S Code for Softmax Output in Theano Input Image Convolutional Layer Pooling MLP Softmax Output layer3 = LogisticRegression( input=layer2.output, n_in=500, n_out=10) LogisticRegression Class cost = layer3.negative_log_likelihood(y) Number of node at previous Hidden layer Final Output size Ex) 0, 1, 2, ,,, 9 Softmax activation function

- 87. M&S CNN application in bioinformatics problem