Core Services behind Spark Job Execution

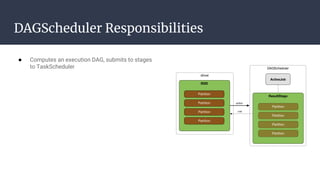

The DAGScheduler computes the DAG of stages for a Spark job and submits each stage, as a set of tasks, to the TaskScheduler. The TaskScheduler launches the individual tasks of each stage on executors and works with the DAGScheduler to handle failures. It retries failed tasks itself, while the DAGScheduler resubmits whole stages when, for example, shuffle output is lost. Together, the DAGScheduler and TaskScheduler coordinate job execution by breaking each job into stages, separated by shuffle boundaries, whose parallel tasks run across executor nodes.
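The following minimal sketch, written in Python, illustrates this division of labor. It is a simplified model built on stated assumptions, not Spark's implementation, and the names `ToyRDD`, `ToyStage`, `build_stages`, `submission_order` and `run_stage` are hypothetical. The model cuts a word-count-like lineage into stages at its shuffle dependency, which is the DAGScheduler's role. It then orders the stages so parents come first and runs one task per partition with retries, which is the TaskScheduler's role. The retry limit of 4 is an assumption chosen to mirror the default of Spark's `spark.task.maxFailures` setting.

```python
# Simplified, hypothetical model of DAGScheduler/TaskScheduler behavior.
# ToyRDD, ToyStage, build_stages, submission_order and run_stage are
# illustrative names only; they are not Spark's internal classes or APIs.
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass(eq=False)
class ToyRDD:
    name: str
    num_partitions: int
    # Each entry is (parent RDD, True if the dependency requires a shuffle).
    deps: List[Tuple["ToyRDD", bool]] = field(default_factory=list)


@dataclass(eq=False)
class ToyStage:
    stage_id: int
    last_rdd: ToyRDD  # the RDD whose partitions this stage computes
    parents: List["ToyStage"]


def build_stages(final_rdd: ToyRDD) -> ToyStage:
    """Cut the lineage at shuffle dependencies; narrow dependencies are
    pipelined into the same stage. Parent stages receive lower ids."""
    stage_of = {}
    next_id = [0]

    def stage_for(rdd: ToyRDD) -> ToyStage:
        if id(rdd) in stage_of:
            return stage_of[id(rdd)]
        parents, stack, seen = [], [rdd], set()
        while stack:  # walk the narrow dependencies inside this stage
            cur = stack.pop()
            if id(cur) in seen:
                continue
            seen.add(id(cur))
            for parent, is_shuffle in cur.deps:
                if is_shuffle:
                    parents.append(stage_for(parent))  # stage boundary
                else:
                    stack.append(parent)
        stage = ToyStage(next_id[0], rdd, parents)
        next_id[0] += 1
        stage_of[id(rdd)] = stage
        return stage

    return stage_for(final_rdd)


def submission_order(final_stage: ToyStage) -> List[ToyStage]:
    """Order stages so that every parent is submitted before its children."""
    order, done = [], set()

    def visit(stage: ToyStage) -> None:
        if stage.stage_id in done:
            return
        for parent in stage.parents:
            visit(parent)
        done.add(stage.stage_id)
        order.append(stage)

    visit(final_stage)
    return order


def run_stage(stage: ToyStage, run_task: Callable[[int, int, int], object],
              max_failures: int = 4) -> list:
    """Run one task per partition, giving each task up to max_failures
    attempts before aborting the stage."""
    results = []
    for partition in range(stage.last_rdd.num_partitions):
        for attempt in range(1, max_failures + 1):
            try:
                results.append(run_task(stage.stage_id, partition, attempt))
                break
            except RuntimeError:
                if attempt == max_failures:
                    raise RuntimeError(
                        f"stage {stage.stage_id} aborted: partition "
                        f"{partition} failed {max_failures} times")
    return results


# Word-count-like lineage: textFile -> flatMap -> map are narrow steps;
# reduceByKey needs a shuffle and therefore starts a second stage.
text = ToyRDD("textFile", 2)
words = ToyRDD("flatMap", 2, [(text, False)])
pairs = ToyRDD("map", 2, [(words, False)])
counts = ToyRDD("reduceByKey", 3, [(pairs, True)])

stages = submission_order(build_stages(counts))
print([(s.stage_id, s.last_rdd.name) for s in stages])
# prints [(0, 'map'), (1, 'reduceByKey')]
```

The three narrow steps collapse into a single stage because no data has to move between partitions until `reduceByKey`. In real Spark, the DAGScheduler (written in Scala) produces `ShuffleMapStage` and `ResultStage` objects and hands each stage's tasks to the TaskScheduler as a `TaskSet`. Calling `toDebugString` on a real RDD shows the same shuffle boundary as a change of indentation in the printed lineage.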

Recommended

Introduction to Structured streaming

By datamantra. This document provides an introduction to Structured Streaming in Apache Spark. It discusses the evolution of stream processing, drawbacks of the DStream API, and advantages of Structured Streaming. Key points include: Structured Streaming models streams as infinite tables/datasets, allowing stream transformations to be expressed using SQL and Dataset APIs; it supports features like event time processing, state management, and checkpointing for fault tolerance; and it allows stream processing to be combined more easily with batch processing using the common Dataset abstraction. The document also provides examples of reading from and writing to different streaming sources and sinks using Structured Streaming.

Migrating to Spark 2.0 - Part 2

By datamantra. This document discusses best practices for migrating Spark applications from version 1.x to 2.0. It covers new features in Spark 2.0 like the Dataset API, catalog API, subqueries and checkpointing for iterative algorithms. The document recommends changes to existing best practices around choice of serializer, cache format, use of broadcast variables and choice of cluster manager. It also discusses how Spark 2.0's improved SQL support impacts use of HiveContext.

Exploratory Data Analysis in Spark

By datamantra. This document discusses exploratory data analysis (EDA) techniques that can be performed on large datasets using Spark and notebooks. It covers generating a five-number summary, detecting outliers, creating histograms, and visualizing EDA results. EDA is an interactive process for understanding data distributions and relationships before modeling. Spark enables interactive EDA on large datasets, using notebooks for visualizations and Pandas for local analysis.

Productionalizing Spark ML

By datamantra. This document discusses best practices for productionalizing machine learning models built with Spark ML. It covers key stages like data preparation, model training, and operationalization. For data preparation, it recommends handling null values, missing data, and data types as custom Spark ML stages within a pipeline. For training, it suggests sampling data for testing and caching only required columns to improve efficiency. For operationalization, it discusses persisting models, validating prediction schemas, and extracting feature names from pipelines. The goal is to build robust, scalable and efficient ML workflows with Spark ML.

Structured Streaming with Kafka

By datamantra. This document provides an overview of structured streaming with Kafka in Spark. It discusses data collection vs. ingestion and why they are key. It also covers Kafka architecture and terminology. It describes how Spark integrates with Kafka for streaming data sources. It explains checkpointing in structured streaming and using Kafka as a sink. The document discusses delivery semantics and how Spark supports exactly-once semantics with certain output stores. Finally, it outlines new features in Kafka for exactly-once guarantees and the future of structured streaming.

Understanding time in structured streaming

By datamantra. This document discusses time abstractions in structured streaming. It introduces process time, event time, and ingestion time. It explains how to use the window API to apply windows over these different time abstractions. It also discusses handling late events using watermarks and implementing non-time-based windows using custom state management and sessionization.

Migrating to spark 2.0

By datamantra. Spark 2.0 introduces several major changes, including using Dataset as the main abstraction in place of RDDs for optimized performance. The migration involves updating to Scala 2.11, replacing contexts with SparkSession, using the built-in CSV connector, updating RDD-based code to use Dataset APIs, adding checks for cross joins, and updating custom ML transformers. Migrating leverages many of the improvements in Spark 2.0 while addressing breaking changes.

Introduction to Structured Streaming

By datamantra. Spark 2.0 introduces Structured Streaming, which addresses some areas for improvement in Spark Streaming 1.x. Structured Streaming builds streaming queries on the Spark SQL engine, providing implicit benefits like extending the primary batch API to streaming and gaining an optimizer. It introduces a more seamless API between batch and stream processing, supports event time semantics, and provides end-to-end fault tolerance guarantees through checkpointing. Structured Streaming also aims to simplify streaming application development by managing streaming queries and allowing continuous queries to be started, stopped, and modified more gracefully.

Evolution of apache spark

By datamantra:

1) Spark 1.0 was released in 2014 as the first production-ready version containing Spark batch, streaming, Shark, and machine learning libraries.

2) By 2014, most big data processing used higher-level tools like Hive and Pig on structured data rather than the original MapReduce assumption of only unstructured data.

3) Spark evolved to support structured data through the DataFrame API in versions 1.2-1.3, providing a unified way to read from structured sources.

Building real time Data Pipeline using Spark Streaming

By datamantra. This document summarizes the key challenges and solutions in building a real-time data pipeline that ingests data from a database, transforms it using Spark Streaming, and publishes the output to Salesforce. The pipeline aims for a latency of 1 minute with zero data loss and ordering guarantees. Challenges discussed include handling out-of-sequence and late-arriving events, schema evolution, bootstrap loading, data loss/corruption, and diagnosing issues. The proposed solutions use Kafka, checkpointing, replay capabilities, and careful broker/connect setups to help meet the reliability requirements for the pipeline.

Introduction to concurrent programming with Akka actors

By Shashank L. This document provides an introduction to concurrent programming with Akka Actors. It discusses concurrency and parallelism, how the end of Moore's Law necessitated a shift to concurrent programming, and introduces key concepts of actors including message-passing concurrency, actor systems, actor operations like create and send, routing, supervision, configuration and testing. Remote actors are also discussed. Examples are provided in Scala.

Introduction to Flink Streaming

By datamantra. This document provides an introduction to Apache Flink and its streaming capabilities. It discusses stream processing as an abstraction and how Flink uses streams as its core abstraction. It compares Flink streaming to Spark streaming and outlines some of Flink's streaming features, like windows, triggers, and how it handles event time.

Introduction to Datasource V2 API

By datamantra. A brief introduction to the Datasource V2 API in Spark 2.3.0, with a comparison to the previous Datasource API.

Productionalizing a spark application

By datamantra:

1. The document discusses the process of productionalizing a financial analytics application built on Spark over multiple iterations. It started with data scientists using Python and data engineers porting code to Scala RDDs. They then moved to using DataFrames and deployed on EMR.

2. Issues with code quality and testing led to adding ScalaTest, PR reviews, and daily Jenkins builds. Architectural challenges were addressed by moving to Databricks Cloud which provided notebooks, jobs, and throwaway clusters.

3. Future work includes using Spark SQL windows and Dataset API for stronger typing and schema support. The iterations improved the code, testing, deployment, and use of latest Spark features.

State management in Structured Streaming

By datamantra. This document discusses state management in Apache Spark Structured Streaming. It begins by introducing Structured Streaming and differentiating between stateless and stateful stream processing. It then explains the need for state stores to manage intermediate data in stateful processing. It describes how state was managed inefficiently in the old Spark Streaming using RDDs and snapshots, and how Structured Streaming improved on this with its decoupled, asynchronous, and incremental state persistence approach. The document outlines Apache Spark's implementation of storing state to HDFS and the code entities involved. It closes by discussing potential issues with this approach and how embedded stores like RocksDB may help address them in production stream processing systems.

Spark on Kubernetes

By datamantra. Spark can run on Kubernetes containers in two ways: as a static cluster or with native integration. As a static cluster, Spark pods are manually deployed without autoscaling. Native integration treats Kubernetes as a resource manager, allowing Spark to dynamically acquire and release containers as it does on YARN. It uses Kubernetes custom controllers to create driver pods that then launch worker pods. This provides autoscaling of resources based on job demands.

Building distributed processing system from scratch - Part 2

By datamantra. Continuation of http://www.slideshare.net/datamantra/building-distributed-systems-from-scratch-part-1

Building Distributed Systems from Scratch - Part 1

By datamantra. Meetup talk about building distributed systems like Spark from scratch on top of frameworks like Apache Mesos and YARN.

Interactive Data Analysis in Spark Streaming

By datamantra. This document discusses strategies for building interactive streaming applications in Spark Streaming. It describes using Zookeeper as a dynamic configuration source to allow modifying a Spark Streaming application's behavior at runtime. The key points are:

- Zookeeper can be used to track configuration changes and trigger Spark Streaming context restarts through its watch mechanism and Curator library.

- This allows building interactive applications that can adapt to configuration updates without needing to restart the whole streaming job.

- Examples are provided of using Curator caches like node and path caches to monitor Zookeeper for changes and restart Spark Streaming contexts in response.

Introduction to dataset

By datamantra. The document introduces the Dataset API in Spark, which provides type safety and performance benefits over DataFrames. Datasets allow operating on domain objects using compiled functions rather than Rows. Encoders efficiently convert JVM objects to and from Spark's internal binary format. This allows type checking of operations and retaining objects in distributed operations. The document outlines the history of Spark APIs, the limitations of DataFrames, and how Datasets address these through compiled encoding and working with case classes rather than Rows.

Understanding Implicits in Scala

By datamantra. Implicit parameters and implicit conversions are Scala language features that let the compiler supply arguments or apply conversions that are not written out explicitly. Implicits enable concise and elegant code through features like dependency injection, context passing, and ad hoc polymorphism. Implicits are resolved at compile time rather than at runtime. While powerful, implicits can cause conflicts and slow compilation if overused. Libraries such as the Scala collections, Spark, and Spray JSON use implicits extensively to provide type classes and conversions between Scala and Java types.

Interactive workflow management using Azkaban

By datamantra. Managing workflows with the Azkaban scheduler, which can be used for batch as well as interactive workloads.

Optimizing S3 Write-heavy Spark workloads

By datamantra. This document discusses optimizing write-heavy Spark workloads that target S3 object storage. It describes problems with eventual consistency, renames, and failures when writing to S3. It then presents several solutions implemented at Qubole to improve the performance of Spark writes to Hive tables and of writing directly to the Hive warehouse location. These optimizations include parallelizing renames, writing directly to the warehouse, and making recover partitions faster by using more efficient S3 listing. Performance improvements of up to 7x were achieved.

Apache spark - Installation

By Martin Zapletal. This document provides an overview of installing and deploying Apache Spark, including:

1. Spark can be installed via prebuilt packages or by building from source.

2. Spark runs in local, standalone, YARN, or Mesos cluster modes and the SparkContext is used to connect to the cluster.

3. Jobs are deployed to the cluster using the spark-submit script, which takes care of the application jar and its dependencies.

Introduction to spark 2.0

By datamantra. This document introduces Spark 2.0 and its key features, including the Dataset abstraction, the SparkSession API, moving from RDDs to Datasets, the Dataset and DataFrame APIs, handling time windows, and adding custom optimizations. The major focus of Spark 2.0 is standardizing on the Dataset abstraction and improving performance by an order of magnitude. Datasets provide a strongly typed API that combines the best of RDDs and DataFrames.

Testing Spark and Scala

By datamantra. Unit testing in Scala can be done using the ScalaTest framework. ScalaTest provides different styles, such as FunSuite, FlatSpec and FunSpec, for writing unit tests. It allows fixtures to be shared between tests to reduce duplication. Asynchronous testing and mocking frameworks are also supported. When testing Spark applications, the test suite should initialize the Spark context and clean it up afterwards. Spark batch operations can be tested by asserting on DataFrames, and streaming operations by controlling the processing time.

Zoo keeper in the wild

By datamantra. This document discusses ZooKeeper deployment, management, and client-use pitfalls. It provides guidance on ideal ZooKeeper cluster sizing, topology design, and server role selection for deployment. For management, it covers dynamic reconfiguration and high failure expectations. For clients, it discusses herd effects, limiting child nodes, and handling high write loads. The presentation aims to help users avoid common ZooKeeper issues.

Introduction to Spark 2.0 Dataset API

By datamantra. This document provides an introduction to and overview of Spark's Dataset API. It discusses how Dataset combines the best aspects of RDDs and DataFrames into a single API, providing strongly typed transformations on structured data. The document also covers how Dataset moves Spark away from RDDs towards a more SQL-like programming model and optimized data handling. Key topics include the SparkSession entry point, differences between DataFrames and Datasets, and examples of Dataset operations like word count.

How Spark Does It Internally?

By Knoldus Inc. Apache Spark is all the rage these days. For people who work with Big Data, Spark is a household name. We have been using it for quite some time now, so we already know that Spark is a lightning-fast cluster computing technology that is faster than Hadoop MapReduce.

If you ask Spark techies why Spark is fast, they will give you a vague answer: Spark uses a DAG to carry out in-memory computations.

So, how satisfying is this answer?

Well, to a Spark expert, this answer is no better than poison.

Let's try to understand exactly how Spark handles our computations through the DAG.

DAGScheduler - The Internals of Apache Spark.pdf

By JoeKibangu. DAGScheduler is the scheduling layer of Apache Spark that implements stage-oriented scheduling using Jobs and Stages. It transforms a logical execution plan into a physical execution plan using stages. It computes an execution DAG of stages for each job, keeps track of materialized RDDs and stage outputs, and finds a minimal schedule to run jobs. It then submits stages to the TaskScheduler. It also determines preferred task locations and handles failures due to lost shuffle files.
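The "minimal schedule" idea can be illustrated with a small, hypothetical Python sketch; it is not Spark's code, and `ToyStage` and `stages_to_run` are illustrative names only. The sketch assumes a set of stage ids whose shuffle outputs are already materialized. Any parent stage in that set is skipped together with its ancestors, so only the missing stages run, parents first. Removing a stage id from the set models lost shuffle files, which forces that stage to run again. This roughly mirrors what Spark reports as "skipped" stages and how it resubmits a map stage after a fetch failure. Unlike Spark, the sketch returns a sequential list rather than running independent stages concurrently.

```python
# Hypothetical sketch of "skip stages whose outputs are already materialized".
# ToyStage and stages_to_run are illustrative names, not Spark APIs.
from dataclasses import dataclass, field
from typing import List, Set


@dataclass(eq=False)
class ToyStage:
    stage_id: int
    parents: List["ToyStage"] = field(default_factory=list)


def stages_to_run(final_stage: ToyStage, available: Set[int]) -> List[int]:
    """Return ids of the stages that must (re)run, parents before children.
    A parent whose shuffle output is in `available` is skipped, and so are
    its ancestors. The final (result) stage always runs."""
    to_run, visited = [], set()

    def visit(stage: ToyStage) -> None:
        if stage.stage_id in visited:
            return
        visited.add(stage.stage_id)
        for parent in stage.parents:
            if parent.stage_id not in available:
                visit(parent)
        to_run.append(stage.stage_id)

    visit(final_stage)
    return to_run


s0 = ToyStage(0)
s1 = ToyStage(1, [s0])
s2 = ToyStage(2)
s3 = ToyStage(3, [s1, s2])  # result stage reading two shuffle inputs

print(stages_to_run(s3, set()))      # first job: [0, 1, 2, 3]
print(stages_to_run(s3, {0, 1, 2}))  # shuffle outputs reused: [3]
print(stages_to_run(s3, {0, 2}))     # stage 1 output lost: [1, 3]
```

When stage 1's output is lost, stage 0 does not rerun because its own output is still available. Only the missing link and the stages downstream of it are recomputed.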

Similar to Core Services behind Spark Job Execution

Apache Spark in Depth: Core Concepts, Architecture & Internals

By Anton Kirillov. The slides cover core concepts of Apache Spark such as RDDs, the DAG, the execution workflow, how stages of tasks are formed, and the shuffle implementation, and they also describe the architecture and main components of the Spark driver. The workshop part covers Spark execution modes and links to a GitHub repo containing example Spark applications and a dockerized Hadoop environment to experiment with.

Tuning and Debugging in Apache Spark

By Patrick Wendell. Video: https://www.youtube.com/watch?v=kkOG_aJ9KjQ

This talk gives details about Spark internals and an explanation of the runtime behavior of a Spark application. It explains how high level user programs are compiled into physical execution plans in Spark. It then reviews common performance bottlenecks encountered by Spark users, along with tips for diagnosing performance problems in a production application.

Apache Spark - Running on a Cluster | Big Data Hadoop Spark Tutorial | CloudxLab

By CloudxLab (Big Data with Hadoop & Spark Training: http://bit.ly/2IUsWca)

This CloudxLab "Running on a Cluster" tutorial helps you understand in detail how Spark runs on a cluster. The following topics are covered:

1) Spark Runtime Architecture

2) Driver Node

3) Scheduling Tasks on Executors

4) Understanding the Architecture

5) Cluster Managers

6) Executors

7) Launching a Program using spark-submit

8) Local Mode & Cluster-Mode

9) Installing Standalone Cluster

10) Cluster Mode - YARN

11) Launching a Program on YARN

12) Cluster Mode - Mesos and AWS EC2

13) Deployment Modes - Client and Cluster

14) Which Cluster Manager to Use?

15) Common flags for spark-submit

Big Data processing with Apache Spark

By Lucian Neghina. Get started with the Apache Spark parallel computing framework.

This is a keynote from the series "by Developer for Developers".

Apache Spark overview

By DataArt. This document provides an overview of Apache Spark, including how it compares to Hadoop, the Spark ecosystem, Resilient Distributed Datasets (RDDs), transformations and actions on RDDs, the directed acyclic graph (DAG) scheduler, Spark Streaming, and the DataFrames API. Key points covered include Spark's faster performance versus Hadoop through its use of memory instead of disk, the RDD abstraction for distributed collections, common RDD operations, and Spark's capabilities for real-time streaming data processing and SQL queries on structured data.

Tuning and Debugging in Apache Spark

By Databricks. This talk gives details about Spark internals and an explanation of the runtime behavior of a Spark application. It explains how high-level user programs are compiled into physical execution plans in Spark. It then reviews common performance bottlenecks encountered by Spark users, along with tips for diagnosing performance problems in a production application.

Apache Spark Internals

By Knoldus Inc. We will look at the internal architecture of a Spark cluster, i.e., what the driver, workers, executors, and cluster manager are, how a Spark program runs on a cluster, and what jobs, stages, and tasks are.

internals

By Sandeep Purohit. This document discusses the internals of Apache Spark, including its architecture, execution workflow, and key concepts like tasks, stages and jobs. It begins with an overview of the Spark cluster architecture consisting of driver programs, executors, worker nodes and a cluster manager. It then defines tasks as individual units of execution, stages as collections of tasks, and jobs as actions submitted to process RDDs. The document also explains how the DAG scheduler creates a DAG of stages to evaluate the final result and split the graph across workers.

Internals

By Sandeep Purohit. We will look at the internal architecture of a Spark cluster, i.e., what the driver, workers, executors, and cluster manager are, how a Spark program runs on a cluster, and what jobs, stages, and tasks are.

Speed up UDFs with GPUs using the RAPIDS Accelerator

By Databricks. The RAPIDS Accelerator for Apache Spark is a plugin that enables the power of GPUs to be leveraged in Spark DataFrame and SQL queries, improving the performance of ETL pipelines. User-defined functions (UDFs) in the query appear as opaque transforms and can prevent the RAPIDS Accelerator from processing some query operations on the GPU.

This presentation discusses how users can leverage the RAPIDS Accelerator UDF Compiler to automatically translate some simple UDFs to equivalent Catalyst operations that are processed on the GPU. The presentation also covers how users can provide a GPU version of Scala, Java, or Hive UDFs for maximum control and performance. Sample UDFs for each case will be shown along with how the query plans are impacted when the UDFs are processed on the GPU.

Spark Deep Dive

By Corey Nolet. This deep dive attempts to "de-mystify" Spark by touching on some of the main design philosophies and diving into some of the more advanced features that make it such a flexible and powerful cluster computing framework. It will touch on some common pitfalls and attempt to build some best practices for building, configuring, and deploying Spark applications.

Overview of Spark for HPC

By Glenn K. Lockwood. I originally gave this presentation as an internal briefing at SDSC based on my experiences in working with Spark to solve scientific problems.

TriHUG talk on Spark and Shark

By trihug. This document summarizes Lightning-Fast Cluster Computing with Spark and Shark, a presentation about the Spark and Shark frameworks. Spark is an open-source cluster computing system that aims to provide fast, fault-tolerant processing of large datasets. It uses resilient distributed datasets (RDDs) and supports diverse workloads with sub-second latency. Shark is a system built on Spark that exposes the HiveQL query language and compiles queries down to Spark programs for faster, interactive analysis of large datasets.

MapReduce

By ahmedelmorsy89. MapReduce is a programming model for processing large datasets in a distributed environment. It consists of a map function that processes input key-value pairs to generate intermediate key-value pairs, and a reduce function that merges all intermediate values associated with the same key. It allows for parallelization of computations across large clusters. Example applications include word count, sorting, and indexing web links. Hadoop is an open source implementation of MapReduce that runs on commodity hardware.

Spark

By Heena Madan. Spark is an open-source distributed computing framework used for processing large datasets. It allows for in-memory cluster computing, which enhances processing speed. Spark core components include Resilient Distributed Datasets (RDDs) and a directed acyclic graph (DAG) that represents the lineage of transformations and actions on RDDs. Spark Streaming is an extension that allows for processing of live data streams with low latency.

Apache spark - Spark's distributed programming model

By Martin Zapletal. Spark's distributed programming model uses resilient distributed datasets (RDDs) and a directed acyclic graph (DAG) approach. RDDs support transformations like map, filter, and actions like collect. Transformations are lazy and form the DAG, while actions execute the DAG. RDDs support caching, partitioning, and sharing state through broadcasts and accumulators. The programming model aims to optimize the DAG through operations like predicate pushdown and partition coalescing.

Apache Spark II (SparkSQL)

By Datio Big Data. In this second part, we continue the Spark review and introduce Spark SQL, which allows you to use DataFrames in Python, Java, and Scala; read and write data in a variety of structured formats; and query big data with SQL.

Apache Spark™ is a multi-language engine for executing data-S5.ppt

By bhargavi804095. Apache Spark™ is a multi-language engine for executing data engineering, data science, and machine learning on single-node machines or clusters.


More from datamantra (12)

Multi Source Data Analysis using Spark and Tellius

By datamantra. Multi Source Data Analysis Using Apache Spark and Tellius

This document discusses analyzing data from multiple sources using Apache Spark and the Tellius platform. It covers loading data from different sources like databases and files into Spark DataFrames, defining a data model by joining the sources, and performing analysis like calculating revenues by department across sources. It also discusses challenges like double counting values when directly querying the joined data. The Tellius platform addresses this by implementing a custom query layer on top of Spark SQL to enable accurate multi-source analysis.

Understanding transactional writes in datasource v2

By datamantra. This document discusses the new Transactional Writes in Datasource V2 API introduced in Spark 2.3. It outlines the shortcomings of the previous V1 write API, specifically the lack of transaction support. It then describes the anatomy of the new V2 write API, including interfaces like DataSourceWriter, DataWriterFactory, and DataWriter that provide transactional capabilities at the partition and job level. It also covers how the V2 API addresses partition awareness through preferred location hints to improve performance.

Spark stack for Model life-cycle management

By datamantra. This document summarizes a presentation given by Samik Raychaudhuri on [24]7's use of Apache Spark for automating the lifecycle of prediction models. [24]7 builds machine learning models on large customer interaction data to predict customer intent and provide personalized experiences. Previously, models were managed using Vertica, but Spark provides faster, more scalable distributed processing. The new platform uses Spark for regular model building from HDFS data, and the trained models can be deployed on [24]7's production systems. Future work includes using Spark to train more complex models like deep learning for chatbots.

Scalable Spark deployment using Kubernetes

By datamantra. The document discusses deploying Spark clusters on Kubernetes. It introduces Kubernetes as a container orchestration platform for deploying containerized applications at scale across cloud and on-prem environments. It describes building a custom Spark 2.1 Docker image and using it to deploy a Spark cluster on Kubernetes with master and worker pods, exposing the Spark UI through a service.

Introduction to concurrent programming with akka actors

By datamantra. This document provides an introduction to concurrent programming with Akka Actors. It discusses concurrency and parallelism, how the end of Moore's Law necessitated a shift to concurrent programming, and introduces key concepts of actors including message passing concurrency, actor systems, actor operations like sending messages, and more advanced topics like routing, supervision, testing, configuration and remote actors.

Functional programming in Scala

By datamantra. This document provides an overview of functional programming concepts in Scala. It discusses the history and advantages of functional programming. It then covers the basics of Scala including its support for object oriented and functional programming. Key functional programming aspects of Scala like immutable data, higher order functions, and implicit parameters are explained with examples.

Telco analytics at scale

By datamantra. This document discusses Telco analytics at scale using distributed stream processing. It describes using technologies like Apache Spark Streaming, Kafka, and Hadoop (HDFS, Hive, HBase) to ingest and process large volumes of streaming data from various sources in real-time or near real-time. Example use cases discussed include fraud detection, real-time rating, security information and event management. It also covers strategies for distributed in-memory caching and rule processing to enable low latency analytics at high throughput scales needed for telco data and applications.

Platform for Data Scientists

By datamantra. This document discusses a platform for data scientists that aims to automate routine jobs, maximize resource utilization, and allow data scientists to focus more on business solutions. The platform provides capabilities for data capture, analysis, modeling, and output of analytics. It seeks to reduce the time taken to turn data into insights from months to weeks. Key elements of the platform include tools for exploratory data analysis, advanced modeling, distributed architecture, bespoke algorithms, and packaged analytics solutions.

Building scalable rest service using Akka HTTP

By datamantra. Akka HTTP is a toolkit for building scalable REST services in Scala. It provides a high-level API built on top of Akka actors and Akka streams for writing asynchronous, non-blocking and resilient microservices. The document discusses Akka HTTP's architecture, routing DSL, directives, testing, additional features like file uploads and websockets. It also compares Akka HTTP to other Scala frameworks and outlines pros and cons of using Akka HTTP for building REST APIs.

Real time ETL processing using Spark streaming

By datamantra. The document discusses the architecture for real-time ETL processing, including using GoldenGate for change data capture from source databases, Kafka as the messaging system, and Spark jobs for streaming reconciliation and joining of data. It also covers requirements for the reconciler component like supporting idempotency, immutability, and schema evolution. Challenges with handling out-of-order events in Spark streaming and the data model used to address issues like idempotency and schema evolution are also described.

Anatomy of Spark SQL Catalyst - Part 2

By datamantra. Catalyst is a framework that defines relational operators and expressions for Spark SQL. It converts DataFrame queries into logical and physical query plans. The logical plans are optimized through rules before being converted to Spark plans by strategies. These strategies implement the query planner to transform logical plans into physical plans that can be executed on RDDs. Key components include the analyzer, optimizer, query planner and strategies like filtering that generate Spark plans from logical plans.

Anatomy of spark catalyst

By datamantra. This document provides an overview of Spark Catalyst, including:

- Catalyst trees and expressions represent logical and physical query plans

- Expressions have datatypes and operate on Row objects

- Custom expressions can be defined

- Code generation improves expression evaluation performance by generating Java code that is compiled with the Janino compiler

- Key concepts like trees, expressions, datatypes, rows, code generation and Janino compiler are explained through examples

Core Services behind Spark Job Execution

- 1. Core Services behind Spark Job Execution: DAGScheduler and TaskScheduler

- 2. Agenda: ● Spark Architecture ● RDD ● Job-Stage-Task ● Bird's-Eye View ● DAGScheduler ● TaskScheduler

- 4. Spark Architecture. Driver: ● Hosts the SparkContext ● Cockpit of job and task execution ● Schedules tasks to run on executors ● Contains the DAGScheduler and TaskScheduler. Executor: ● Static vs. dynamic allocation ● Sends heartbeats and metrics to the driver ● Provides in-memory storage for RDDs ● Communicates directly with the driver to execute tasks

- 5. RDD: Resilient Distributed Dataset ● The RDD is the primary data abstraction in Spark and the core of Spark ● Motivation for RDDs ● Features of RDDs

- 6. RDD: Resilient Distributed Dataset ● RDD creation ● RDD lineage ● Lazy execution ● Partitions

- 7. RDD operations ● Transformations ● Actions
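
To make lineage, lazy execution and the transformation/action split concrete, here is a minimal single-machine sketch. `ToyRDD`, `parallelize`, `sourceReads` and `lineage` are hypothetical names invented for illustration, not Spark's API: a transformation only records a new node plus a closure, and nothing is read until the action `count()` runs.

```scala
// Hypothetical toy RDD (NOT Spark's API): records lineage, computes only on an action.
final class ToyRDD[T](
    val name: String,
    val parent: Option[ToyRDD[_]],
    computePartitions: () => Seq[Seq[T]] // one inner Seq per partition
) {
  // Transformations: wrap this RDD in a new one; nothing is evaluated yet.
  def filter(p: T => Boolean): ToyRDD[T] =
    new ToyRDD[T](s"filter($name)", Some(this), () => computePartitions().map(_.filter(p)))

  def map[U](f: T => U): ToyRDD[U] =
    new ToyRDD[U](s"map($name)", Some(this), () => computePartitions().map(_.map(f)))

  // Action: forces evaluation of the whole lineage, partition by partition.
  def count(): Long = computePartitions().map(_.size.toLong).sum

  // Lineage from this RDD back to its source.
  def lineage: List[String] = name :: parent.map(_.lineage).getOrElse(Nil)
}

object ToyRDD {
  var sourceReads = 0 // how many times the source partitions were materialised

  def parallelize[T](data: Seq[T], numSlices: Int): ToyRDD[T] = {
    val sliceSize = math.max(1, math.ceil(data.size.toDouble / numSlices).toInt)
    val slices = data.grouped(sliceSize).toSeq
    new ToyRDD[T]("parallelize", None, () => { sourceReads += 1; slices })
  }
}
```

Because the toy has no caching, every action re-reads the source through the full lineage; in Spark, `cache()` and `persist()` exist to avoid recomputing a lineage that several actions share.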

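- 8. A sample Spark program:

```scala
import org.apache.spark.{SparkConf, SparkContext}

// appName and master are defined elsewhere in the application
val conf = new SparkConf().setAppName(appName).setMaster(master)
val sc = new SparkContext(conf)
val file = sc.textFile("hdfs://...")          // This is an RDD
val errors = file.filter(_.contains("ERROR")) // This is an RDD (a lazy transformation)
val errorCount = errors.count()               // This is an "action": it triggers a job
```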

- 9. Job-Stage-Task. What is a job? ● A top-level work item (computation) ● Computing a job == computing the partitions of the target RDD ● Follows the target RDD's lineage

- 10. Job-Stage-Task. What is a stage? ● A job is divided into stages ● Logical plan → physical plan: a stage is a physical unit of execution ● A set of parallel tasks, one per partition ● Stage boundaries fall at shuffles ● Computing a stage first triggers execution of its parent stages

- 11. Types of Stages. ShuffleMapStage: ● Intermediate stage in the execution DAG ● Saves map output, which is fetched later by the next stage ● Pipelines the operations before a shuffle ● Can be shared across jobs. ResultStage: ● Final stage, which executes the action ● Works on one or many partitions
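
The stage-splitting rule above (pipeline narrow dependencies into one stage, cut at each shuffle, make the last stage the ResultStage) can be sketched as follows. This is a simplified model that assumes every RDD has a unique name; `Node`, `Narrow`, `Shuffle`, `Stage` and `StageBuilder` are hypothetical, whereas Spark itself identifies shared map stages by shuffle id.

```scala
import scala.collection.mutable

// Hypothetical toy types (NOT Spark's classes): an RDD node and its dependencies.
sealed trait Dep { def rdd: Node }
final case class Narrow(rdd: Node) extends Dep  // e.g. map, filter: pipelined in one stage
final case class Shuffle(rdd: Node) extends Dep // e.g. reduceByKey: stage boundary
final case class Node(name: String, deps: List[Dep] = Nil)

final case class Stage(id: Int, kind: String, rdds: List[String], parents: List[Int])

object StageBuilder {
  /** Cuts the lineage of `finalRdd` into stages at shuffle dependencies. */
  def build(finalRdd: Node): List[Stage] = {
    var nextId = 0
    val stages = mutable.ListBuffer.empty[Stage]
    val mapStageFor = mutable.Map.empty[String, Int] // RDD before a shuffle -> its map stage (shared)

    def newStage(top: Node, kind: String): Int = {
      val members = mutable.ListBuffer.empty[String]
      val parents = mutable.ListBuffer.empty[Int]
      def visit(n: Node): Unit = {
        members += n.name
        n.deps.foreach {
          case Narrow(p) => visit(p) // same stage: pipelined with its child
          case Shuffle(p) =>         // a parent stage ends at this shuffle
            val parentId = mapStageFor.get(p.name) match {
              case Some(existing) => existing
              case None =>
                val created = newStage(p, "ShuffleMapStage")
                mapStageFor(p.name) = created
                created
            }
            parents += parentId
        }
      }
      visit(top)
      val stageId = nextId // parents were created first, so they get lower ids
      nextId += 1
      stages += Stage(stageId, kind, members.toList.reverse.distinct, parents.toList.distinct)
      stageId
    }

    newStage(finalRdd, "ResultStage")
    stages.toList.sortBy(_.id)
  }
}
```

For a word count (`textFile → flatMap → map → reduceByKey → mapValues`) this yields one ShuffleMapStage holding the first three RDDs and a ResultStage that depends on it. In this toy the `reduceByKey` node stands for the shuffled RDD on the reduce side.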

- 12. Job-Stage-Task. What is a task? ● Smallest unit of execution ● Consists of a function and a placement preference ● Operates on a single partition ● Launched on an executor and runs there

- 13. Types of Tasks. ShuffleMapTask: ● Runs in an intermediate stage ● Returns a MapStatus. ResultTask: ● Runs in the last stage ● Returns its output to the driver
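
The two task types differ mostly in what they send back. In the sketch below (hypothetical classes, not Spark's), a map-side task buckets its records by key hash, leaves the buckets in an executor-local store, and returns only a small status with its location and per-reducer record counts; the counts stand in for the block sizes a real MapStatus reports. A result task, by contrast, returns the computed value itself.

```scala
import scala.collection.mutable

// Hypothetical toy classes (NOT Spark's), showing what each task type returns.
final case class ToyMapStatus(executorId: String, recordsPerReducer: Vector[Long])

// Stand-in for an executor's local shuffle storage: (mapPartition, reducePartition) -> records.
final class ToyShuffleStore {
  val blocks = mutable.Map.empty[(Int, Int), Seq[(String, Int)]]
}

final case class ToyShuffleMapTask(partitionId: Int, records: Seq[(String, Int)], numReducers: Int) {
  // Buckets records by key hash, keeps the data on the "executor", returns only metadata.
  def run(executorId: String, store: ToyShuffleStore): ToyMapStatus = {
    val buckets = records.groupBy { case (k, _) => java.lang.Math.floorMod(k.hashCode, numReducers) }
    val sizes = (0 until numReducers).map { r =>
      val out = buckets.getOrElse(r, Seq.empty)
      store.blocks((partitionId, r)) = out
      out.size.toLong
    }.toVector
    ToyMapStatus(executorId, sizes)
  }
}

final case class ToyResultTask[T, R](partitionId: Int, records: Seq[T], func: Seq[T] => R) {
  // Runs the action's function on one partition; the value itself goes back to the driver.
  def run(): R = func(records)
}
```

The driver only ever sees `ToyMapStatus` from the map side; reducers later use those statuses to find and fetch the buckets, which is why losing an executor can lose shuffle output.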

- 15. DAGScheduler ● Initialization ● Stage-oriented scheduling (logical → physical) ● DAGSchedulerEvent (job and stage events) ● Stage submissions
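
Spark's DAGScheduler handles its events (job submissions, task completions, executor loss and so on) one at a time on a single event-loop thread. The toy below mimics that pattern with a plain queue and shows the submission rule that computing a stage first triggers its parent stages: a stage with missing parents is parked as waiting while the parents are submitted. `ToyDagScheduler`, `ToyJobSubmitted` and `ToyStageFinished` are hypothetical; the stage DAG is passed in as a map from stage id to parent ids.

```scala
import scala.collection.mutable

// Hypothetical toy events, loosely inspired by DAGSchedulerEvent (NOT Spark's classes).
sealed trait ToySchedulerEvent
final case class ToyJobSubmitted(finalStageId: Int) extends ToySchedulerEvent
final case class ToyStageFinished(stageId: Int) extends ToySchedulerEvent

// `parents` is the stage DAG built beforehand: stage id -> ids of its parent stages.
final class ToyDagScheduler(parents: Map[Int, List[Int]]) {
  private val queue = mutable.Queue.empty[ToySchedulerEvent]
  val waiting = mutable.Set.empty[Int]  // stages blocked on unfinished parents
  val running = mutable.Set.empty[Int]  // stages whose tasks were handed to the TaskScheduler
  val finished = mutable.Set.empty[Int]
  val submissionLog = mutable.ListBuffer.empty[Int] // order in which stages were started

  def post(event: ToySchedulerEvent): Unit = { queue.enqueue(event) }

  // Single consumer: events are handled strictly one at a time, in arrival order.
  def runUntilIdle(): Unit = while (queue.nonEmpty) handle(queue.dequeue())

  private def handle(event: ToySchedulerEvent): Unit = event match {
    case ToyJobSubmitted(stageId) => submitStage(stageId)
    case ToyStageFinished(stageId) =>
      running -= stageId
      finished += stageId
      // Waiting stages whose parents have now all finished become runnable.
      val ready = waiting.filter(w => parents.getOrElse(w, Nil).forall(finished.contains)).toList.sorted
      ready.foreach { w => waiting -= w; submitStage(w) }
  }

  // Submit missing parents first; only a stage with no missing parents actually starts.
  private def submitStage(stageId: Int): Unit =
    if (!waiting(stageId) && !running(stageId) && !finished(stageId)) {
      val missing = parents.getOrElse(stageId, Nil).filterNot(finished.contains)
      if (missing.isEmpty) { running += stageId; submissionLog += stageId }
      else { missing.foreach(submitStage); waiting += stageId }
    }
}
```

For a diamond where stage 3 needs stages 1 and 2 and stage 1 needs stage 0, stages 0 and 2 start first, stage 1 starts once stage 0 finishes, and stage 3 starts last.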

- 16. DAGScheduler Responsibilities ● Computes an execution DAG and submits its stages to the TaskScheduler

- 17. DAGScheduler Responsibilities ● Computes an execution DAG ● Determines the preferred locations to run each task on and keeps track of cached RDDs
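
A simplified version of the preferred-location lookup: check for a cached copy of the partition first, then the RDD's own input locality (such as HDFS block hosts), then recurse into a narrow parent. This roughly follows the order the DAGScheduler uses, but `PNode` and `Locality` are hypothetical, and the sketch assumes one-to-one narrow dependencies (partition i depends only on parent partition i).

```scala
// Hypothetical toy RDD node (NOT Spark's API) with an optional one-to-one narrow parent.
final case class PNode(
    name: String,
    inputHosts: Map[Int, List[String]] = Map.empty, // e.g. HDFS block hosts per partition
    narrowParent: Option[PNode] = None
)

object Locality {
  /** Preferred hosts for `partition`: cached copy first, then input locality, then the narrow parent's. */
  def preferredLocs(rdd: PNode, partition: Int, cacheLocs: Map[(String, Int), List[String]]): List[String] =
    cacheLocs.get((rdd.name, partition)).filter(_.nonEmpty)
      .orElse(rdd.inputHosts.get(partition).filter(_.nonEmpty))
      .getOrElse(rdd.narrowParent.map(p => preferredLocs(p, partition, cacheLocs)).getOrElse(Nil))
}
```

An empty result means "no preference", so the task can run anywhere; this is also why caching an RDD changes where later tasks are scheduled.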

- 18. DAGScheduler Responsibilities ● Computes an execution DAG ● Determines the preferred locations to run each task on ● Handles failures due to shuffle output files being lost (FetchFailed, ExecutorLost)

- 19. DAGScheduler Responsibilities ● Computes an execution DAG ● Determines the preferred locations to run each task on ● Handles failures due to shuffle output files being lost (FetchFailed, ExecutorLost) ● Stage retry
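
When a reduce task reports FetchFailed, the map output it needed is gone, so the map stage has to recompute the missing partitions before the failed stage is retried. A toy model of that bookkeeping follows; `ToyMapOutputTracker` and `ToyStageRetry` are hypothetical. The abort threshold mirrors Spark's `spark.stage.maxConsecutiveAttempts` setting (4 by default in recent releases), but here it is just a constructor parameter.

```scala
import scala.collection.mutable

// Hypothetical toy (NOT Spark's MapOutputTracker): which executor holds each map output.
final class ToyMapOutputTracker(numMapPartitions: Int) {
  private val locs = mutable.Map.empty[Int, String]

  def register(mapPartition: Int, executorId: String): Unit = { locs(mapPartition) = executorId }

  // Losing an executor loses every map output it stored.
  def removeOutputsOn(executorId: String): Unit =
    locs --= locs.collect { case (p, e) if e == executorId => p }.toList

  def missingPartitions: List[Int] = (0 until numMapPartitions).filterNot(locs.contains).toList
}

// Hypothetical: reacts to a FetchFailed reported by a reduce-side task.
final class ToyStageRetry(tracker: ToyMapOutputTracker, maxConsecutiveAttempts: Int = 4) {
  private var failedAttempts = 0

  /** Right(map partitions to recompute before retrying) or Left(abort reason). */
  def onFetchFailed(lostExecutor: String): Either[String, List[Int]] = {
    failedAttempts += 1
    tracker.removeOutputsOn(lostExecutor)
    if (failedAttempts >= maxConsecutiveAttempts) Left(s"Aborting stage after $failedAttempts failed attempts")
    else Right(tracker.missingPartitions)
  }
}
```

Only the partitions whose output lived on the lost executor are recomputed; outputs on healthy executors are reused.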

- 21. TaskSet and TaskSetManager ● What is a TaskSet? ○ A fully independent sequence of tasks ● Why a TaskSetManager? ● Responsibilities of the TaskSetManager: ○ Scheduling tasks in a TaskSet ○ Completion notification ○ Retry and abort ○ Locality preference
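
The TaskSetManager duties listed above (locality-aware scheduling, retry, abort) can be sketched as follows. The method names echo Spark's `resourceOffer`, `handleSuccessfulTask` and `handleFailedTask`, but the class and its simplified signatures are hypothetical. Each task may fail `maxTaskFailures` times before the whole set is aborted, like Spark's `spark.task.maxFailures` (default 4).

```scala
import scala.collection.mutable

// Hypothetical toy (NOT Spark's TaskSetManager): the tasks of one stage attempt.
final case class ToyTaskSpec(index: Int, preferredHosts: Set[String])

final class ToyTaskSetManager(tasks: Seq[ToyTaskSpec], maxTaskFailures: Int = 4) {
  private val byIndex = tasks.map(t => t.index -> t).toMap
  private val pending = mutable.LinkedHashSet.empty[Int] ++= tasks.map(_.index)
  private val failures = mutable.Map.empty[Int, Int].withDefaultValue(0)
  val completed = mutable.Set.empty[Int]
  var aborted: Option[String] = None

  /** Offer one free slot on `host`; returns (task index, locality level) if a task is launched. */
  def resourceOffer(host: String): Option[(Int, String)] =
    if (aborted.isDefined) None
    else {
      val local = pending.find(i => byIndex(i).preferredHosts.contains(host)).map(i => (i, "NODE_LOCAL"))
      val chosen = local.orElse(pending.headOption.map(i => (i, "ANY")))
      chosen.foreach { case (i, _) => pending -= i }
      chosen
    }

  def handleSuccessfulTask(index: Int): Unit = { completed += index }

  def handleFailedTask(index: Int): Unit = {
    failures(index) += 1
    if (failures(index) >= maxTaskFailures)
      aborted = Some(s"Task $index failed ${failures(index)} times; aborting the task set")
    else pending += index // re-queue the task for another attempt
  }

  def isFinished: Boolean = aborted.isDefined || completed.size == tasks.size
}
```

Real Spark does not fall back to ANY immediately: with delay scheduling it waits up to `spark.locality.wait` (3s by default) for a slot at a better locality level (PROCESS_LOCAL, NODE_LOCAL, RACK_LOCAL) before accepting a worse one.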

- 22. TaskScheduler’s Responsibilities ● Submits the tasks of every stage for execution ● Works closely with the DAGScheduler to resubmit stages ● Tracks the executors in a Spark application (executorHeartbeatReceived and executorLost)
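
Executor tracking works through heartbeats: executors report to the driver periodically (every `spark.executor.heartbeatInterval`, 10s by default), and an executor that stays silent longer than a timeout is treated as lost, at which point the TaskScheduler's `executorLost` path takes over. The tracker below is a hypothetical toy (in Spark this job belongs to the driver's HeartbeatReceiver); timestamps are passed in explicitly so the logic is deterministic.

```scala
import scala.collection.mutable

// Hypothetical toy (NOT Spark's HeartbeatReceiver): driver-side executor liveness tracking.
final class ToyExecutorTracker(timeoutMs: Long) {
  private val lastSeen = mutable.Map.empty[String, Long]

  def executorAdded(executorId: String, nowMs: Long): Unit = { lastSeen(executorId) = nowMs }

  /** Records a heartbeat; returns false if the executor is unknown (e.g. already expired). */
  def heartbeat(executorId: String, nowMs: Long): Boolean =
    if (lastSeen.contains(executorId)) { lastSeen(executorId) = nowMs; true } else false

  /** Removes and returns executors whose last heartbeat is older than the timeout. */
  def expireDeadExecutors(nowMs: Long): List[String] = {
    val dead = lastSeen.collect { case (id, seen) if nowMs - seen > timeoutMs => id }.toList.sorted
    lastSeen --= dead // a real scheduler would now report executorLost for each of these
    dead
  }

  def alive: Set[String] = lastSeen.keySet.toSet
}
```

Each expired executor is exactly the case the DAGScheduler slides describe: its running tasks must be rescheduled, and any shuffle output it held may have to be recomputed.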

- 23. Thank You