Crunch Your Data in the Cloud with Elastic Map Reduce - Amazon EMR Hadoop

- 1. What is Cloud Computing?

- 2. Using the Cloud to Crunch Your Data
  - Adrian Cockcroft – [email protected]

- 3. What is Capacity Planning
  - We care about CPU, Memory, Network and Disk resources, and Application response times
  - We need to know how much of each resource we are using now, and will use in the future
  - We need to know how much headroom we have to handle higher loads
  - We want to understand how headroom varies, and how it relates to application response times and throughput

- 4. Capacity Planning Norms
  - Capacity is expensive
  - Capacity takes time to buy and provision
  - Capacity only increases, can’t be shrunk easily
  - Capacity comes in big chunks, paid up front
  - Planning errors can cause big problems
  - Systems are clearly defined assets
  - Systems can be instrumented in detail

- 5. Capacity Planning in Clouds
  - Capacity is expensive
  - Capacity takes time to buy and provision
  - Capacity only increases, can’t be shrunk easily
  - Capacity comes in big chunks, paid up front
  - Planning errors can cause big problems
  - Systems are clearly defined assets
  - Systems can be instrumented in detail

- 6. Capacity is expensive
  - http://aws.amazon.com/s3/ & http://aws.amazon.com/ec2/
  - Storage (Amazon S3)
    - $0.150 per GB – first 50 TB / month of storage used
    - $0.120 per GB – storage used / month over 500 TB
  - Data Transfer (Amazon S3)
    - $0.100 per GB – all data transfer in
    - $0.170 per GB – first 10 TB / month data transfer out
    - $0.100 per GB – data transfer out / month over 150 TB
  - Requests (Amazon S3 Storage access is via http)
    - $0.01 per 1,000 PUT, COPY, POST, or LIST requests
    - $0.01 per 10,000 GET and all other requests
    - $0 per DELETE
  - CPU (Amazon EC2)
    - Small (Default) $0.085 per hour, Extra Large $0.68 per hour
  - Network (Amazon EC2)
    - Inbound/Outbound around $0.10 per GB

- 7. Capacity comes in big chunks, paid up front
  - Capacity takes time to buy and provision
    - No minimum price, monthly billing
    - “Amazon EC2 enables you to increase or decrease capacity within minutes, not hours or days. You can commission one, hundreds or even thousands of server instances simultaneously”
  - Capacity only increases, can’t be shrunk easily
    - Pay for what is actually used
  - Planning errors can cause big problems
    - Size only for what you need now

- 8. Systems are clearly defined assets
  - You are running in a “stateless” multi-tenanted virtual image that can die or be taken away and replaced at any time
  - You don’t know exactly where it is
  - You can choose to locate “USA” or “Europe”
  - You can specify zones that will not share components to avoid common mode failures

- 9. Systems can be instrumented in detail
  - Need to use stateless monitoring tools
  - Monitored nodes come and go by the hour
  - Need to write role-name to hostname
    - e.g. wwwprod002, not the EC2 default
    - all monitoring by role-name
  - Ganglia – automatic configuration
    - Multicast replicated monitoring state
    - No need to pre-define metrics and nodes

- 10. Acquisition requires management buy-in
  - Anyone with a credit card and $10 is in business
  - Data governance issues…
  - Remember 1980’s when PC’s first turned up?
    - Departmental budgets funded PC’s
    - No standards, management or backups
    - Central IT departments could lose control
  - Decentralized use of clouds will be driven by teams seeking competitive advantage and business agility – ultimately unstoppable…

- 11. December 9, 2009
  - OK, so what should we do?

- 12. The Umbrella Strategy
  - Monitor network traffic to cloud vendor API’s
    - Catch unauthorized clouds as they form
    - Measure, trend and predict cloud activity
  - Pick two cloud standards, setup corporate accounts
    - Better sharing of lessons as they are learned
    - Create path of least resistance for users
    - Avoid vendor lock-in
    - Aggregate traffic to get bulk discounts
    - Pressure the vendors to develop common standards, but don’t wait for them to arrive. The best APIs will be cloned eventually
  - Sponsor a pathfinder project for each vendor
    - Navigate a route through the clouds
    - Don’t get caught unawares in the rain

- 13. Predicting the Weather
  - Billing is variable, monthly in arrears
    - Try to predict how much you will be billed…
    - Not an issue to start with, but can’t be ignored
    - Amazon has a cost estimator tool that may help
  - Central analysis and collection of cloud metrics
    - Cloud vendor usage metrics via API
    - Your system and application metrics via Ganglia
    - Intra-cloud bandwidth is free, analyze in the cloud!
    - Based on standard analysis framework “Hadoop”
    - Validation of vendor metrics and billing
    - Charge-back for individual user applications

- 14. Use it to learn it…
  - Focus on how you can use the cloud yourself to do large scale log processing
  - You can upload huge datasets to a cloud and crunch them with a large cluster of computers using Amazon Elastic Map Reduce (EMR)
  - Do it all from your web browser for a handful of dollars charged to a credit card.
  - Here’s how

- 15. Cloud Crunch
  - The Recipe
  - You will need:
    - A computer connected to the Internet
    - The Firefox browser
    - A Firefox specific browser extension
    - A credit card and less than $1 to spend
    - Big log files as ingredients
    - Some very processor intensive queries

- 16. Recipe
  - First we will warm up our computer by setting up Firefox and connecting to the cloud.
  - Then we will upload our ingredients to be crunched, at about 20 minutes per gigabyte
  - You should pick between one and twenty processors to crunch with, they are charged by the hour and the cloud takes about 10 minutes to warm up.
  - The query itself starts by mapping the ingredients so that the mixture separates, then the excess is boiled off to make a nice informative reduction.

- 17. Costs
  - Firefox and Extension – free
  - Upload ingredients – 10 cents/Gigabyte
  - Small Processors – 11.5 cents/hour each
  - Download results – 17 cents/Gigabyte
  - Storage – 15 cents/Gigabyte/month
  - Service updates – 1 cent/1000 calls
  - Service requests – 1 cent/10,000 calls
  - Actual cost to run two example programs as described in this presentation was 26 cents
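
The rate card on the Costs slide can be turned into a rough bill estimate. The sketch below is only an illustration: the rates are the ones listed on the slide, while the class name `EmrCostSketch`, the method `estimateDollars`, and the sample workload in `main` are made up for this example. It assumes partial instance hours are billed as full hours, in line with the "charged by the hour" note in the recipe.

```java
import java.util.Locale;

// Rates come from the "Costs" slide; the workload in main() is a made-up example.
class EmrCostSketch {
    static final double UPLOAD_PER_GB = 0.10;
    static final double SMALL_INSTANCE_PER_HOUR = 0.115;
    static final double DOWNLOAD_PER_GB = 0.17;
    static final double STORAGE_PER_GB_MONTH = 0.15;
    static final double PER_UPDATE_CALL = 0.01 / 1000;
    static final double PER_REQUEST_CALL = 0.01 / 10000;

    static double estimateDollars(double uploadGb, int instances, double hoursPerInstance,
                                  double downloadGb, double storedGbMonths,
                                  long updateCalls, long requestCalls) {
        // Assumption: a partial hour on an instance is billed as a full hour.
        long billedHours = (long) Math.ceil(hoursPerInstance);
        return uploadGb * UPLOAD_PER_GB
                + instances * billedHours * SMALL_INSTANCE_PER_HOUR
                + downloadGb * DOWNLOAD_PER_GB
                + storedGbMonths * STORAGE_PER_GB_MONTH
                + updateCalls * PER_UPDATE_CALL
                + requestCalls * PER_REQUEST_CALL;
    }

    public static void main(String[] args) {
        // Hypothetical job: 1 GB uploaded, one small instance for 15 minutes,
        // 0.1 GB downloaded, 1 GB stored for a month, 1,000 updates, 10,000 requests.
        double cost = estimateDollars(1.0, 1, 0.25, 0.1, 1.0, 1000, 10000);
        System.out.printf(Locale.US, "Estimated cost: $%.3f%n", cost);
    }
}
```

With these inputs the instance hour and the month of storage dominate the total, and the request charges are close to noise.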

- 18. Faster Results at Same Cost!
  - You may have trouble finding enough data and a complex enough query to keep the processors busy for an hour. In that case you can use fewer processors
  - Conversely if you want quicker results you can use more and/or larger processors.
  - Up to 20 systems with 8 CPU cores = 160 cores is immediately available. Oversize on request.
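
The "same cost" claim follows from per-hour billing. A minimal sketch of the arithmetic, assuming the work divides evenly across instances and each instance is billed in whole hours with a one-hour minimum. The class `ScalingCostSketch`, its method names, and the 20 instance-hour job are hypothetical. The 11.5 cents/hour rate is the small-instance rate quoted in the slides.

```java
import java.util.Locale;

class ScalingCostSketch {
    static final double DOLLARS_PER_INSTANCE_HOUR = 0.115; // small instance rate from the slides

    // Elapsed hours for the job, assuming the work divides evenly across instances.
    static double wallClockHours(double workInstanceHours, int instances) {
        return workInstanceHours / instances;
    }

    // Each instance is billed for whole hours, with a one-hour minimum (assumption).
    static long billedInstanceHours(double workInstanceHours, int instances) {
        long hoursEach = Math.max(1L, (long) Math.ceil(wallClockHours(workInstanceHours, instances)));
        return instances * hoursEach;
    }

    public static void main(String[] args) {
        double work = 20.0; // hypothetical job needing 20 instance-hours of processing
        for (int n : new int[] {1, 5, 8, 20}) {
            long billed = billedInstanceHours(work, n);
            System.out.printf(Locale.US, "%2d instances: %5.2f h elapsed, %d instance-hours, $%.2f%n",
                    n, wallClockHours(work, n), billed, billed * DOLLARS_PER_INSTANCE_HOUR);
        }
    }
}
```

One instance for 20 hours and 20 instances for one hour are both billed as 20 instance-hours. Eight instances finish in 2.5 hours but are billed for 3 hours each, so the bill rises to 24 instance-hours. The same rounding is why a job that cannot fill an hour is cheaper on fewer processors.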

- 19. Step by Step
  - Walkthrough to get you started
  - Run Amazon Elastic MapReduce examples
  - Get up and running before this presentation is over….

- 20. Step 1 – Get Firefox
  - http://www.mozilla.com/en-US/firefox/firefox.html

- 21. Step 2 – Get S3Fox Extension
  - Next select the Add-ons option from the Tools menu, select “Get Add-ons” and search for S3Fox.

- 22. Step 3 – Learn About AWS
  - Bring up http://aws.amazon.com/ to read about the services.
  - Amazon S3 is short for Amazon Simple Storage Service, which is part of the Amazon Web Services product.
  - We will be using Amazon S3 to store data, and Amazon Elastic Map Reduce (EMR) to process it.
  - Underneath EMR there is an Amazon Elastic Compute Cloud (EC2), which is created automatically for you each time you use EMR.

- 23. What is S3, EC2, EMR?
  - Amazon Simple Storage Service lets you put data into the cloud that is addressed using a URL. Access to it can be private or public.
  - Amazon Elastic Compute Cloud lets you pick the size and number of computers in your cloud.
  - Amazon Elastic Map Reduce automatically builds a Hadoop cluster on top of EC2, feeds it data from S3, saves the results in S3, then removes the cluster and frees up the EC2 systems.

- 24. Step 4 – Sign Up For AWS
  - Go to the top-right of the page and sign up at http://aws.amazon.com/
  - You can login using the same account you use to buy books!

- 25. Step 5 – Sign Up For Amazon S3
  - Follow the link to http://aws.amazon.com/s3/

- 26. Check The S3 Rate Card

- 27. Step 6 – Sign Up For Amazon EMR
  - Go to http://aws.amazon.com/elasticmapreduce/

- 28. EC2 Signup
  - The EMR signup combines the other needed services such as EC2 in the sign up process and the rates for all are displayed.
  - The EMR costs are in addition to the EC2 costs, so 1.5 cents/hour for EMR is added to the 10 cents/hour for EC2 making 11.5 cents/hour for each small instance running Linux with Hadoop.

- 29. EMR And EC2 Rates
  - (old data – it is cheaper now with even bigger nodes)

- 30. Step 7 - How Big are EC2 Instances?
  - See http://aws.amazon.com/ec2/instance-types/
  - The compute power is specified in a standard unit called an EC2 Compute Unit (ECU).
  - Amazon states “One EC2 Compute Unit (ECU) provides the equivalent CPU capacity of a 1.0-1.2 GHz 2007 Opteron or 2007 Xeon processor.”

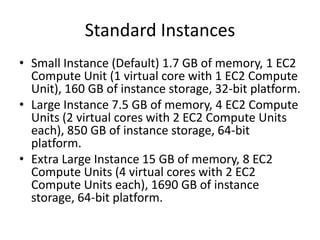

- 31. Standard Instances
  - Small Instance (Default): 1.7 GB of memory, 1 EC2 Compute Unit (1 virtual core with 1 EC2 Compute Unit), 160 GB of instance storage, 32-bit platform.
  - Large Instance: 7.5 GB of memory, 4 EC2 Compute Units (2 virtual cores with 2 EC2 Compute Units each), 850 GB of instance storage, 64-bit platform.
  - Extra Large Instance: 15 GB of memory, 8 EC2 Compute Units (4 virtual cores with 2 EC2 Compute Units each), 1690 GB of instance storage, 64-bit platform.

- 32. Compute Intensive Instances
  - High-CPU Medium Instance: 1.7 GB of memory, 5 EC2 Compute Units (2 virtual cores with 2.5 EC2 Compute Units each), 350 GB of instance storage, 32-bit platform.
  - High-CPU Extra Large Instance: 7 GB of memory, 20 EC2 Compute Units (8 virtual cores with 2.5 EC2 Compute Units each), 1690 GB of instance storage, 64-bit platform.

- 33. Step 8 – Getting Started
  - http://docs.amazonwebservices.com/ElasticMapReduce/2009-03-31/GettingStartedGuide/
  - There is a Getting Started guide and Developer Documentation including sample applications
  - We will be working through two of those applications.
  - There is also a very helpful FAQ page that is worth reading through.

- 34. Step 9 – What is EMR?

- 35. Step 10 – How To MapReduce?
  - Code directly in Java
  - Submit “streaming” command line scripts
    - EMR bundles Perl, Python, Ruby and R
  - Code MR sequences in Java using Cascading
  - Process log files using Cascading Multitool
  - Write dataflow scripts with Pig
  - Write SQL queries using Hive

- 36. Step 11 – Setup Access Keys
  - Getting Started Guide
    - http://docs.amazonwebservices.com/ElasticMapReduce/2009-03-31/GettingStartedGuide/gsFirstSteps.html
  - The URL to visit to get your key is:
    - http://aws-portal.amazon.com/gp/aws/developer/account/index.html?action=access-key

- 37. Enter Access Keys in S3Fox
  - Open S3 Firefox Organizer and select the Manage Accounts button at the top left
  - Enter your access keys into the popup.

- 38. Step 12 – Create An Output Folder
  - Click Here

- 40. Step 13 – Run Your First Job
  - See the Getting Started Guide at: http://docs.amazonwebservices.com/ElasticMapReduce/2009-03-31/GettingStartedGuide/gsConsoleRunJob.html
  - login to the EMR console at https://console.aws.amazon.com/elasticmapreduce/home
  - create a new job flow called “Word crunch”, and select the sample application word count.

- 41. Set the Output S3 Bucket

- 42. Pick the Instance Count
  - One small instance (i.e. computer) will do…
  - Cost will be 11.5 cents for 1 hour (minimum)

- 43. Start the Job Flow
  - The Job Flow takes a few minutes to get started, then completes in about 5 minutes run time

- 44. Step 14 – View The Results
  - In S3Fox click on the refresh icon, then double-click on the crunchie folder
  - Keep clicking until the output file is visible

- 45. Save To Your PC
  - S3Fox – click on left arrow
  - Save to PC
  - Open in TextEdit
  - See that the word “a” occurred 14716 times
  - boring…. so try a more interesting demo!
  - Click Here

- 46. Step 15 – Crunch Some Log Files
  - Create a new output bucket with a new name
  - Start a new job flow using the CloudFront Demo
  - This uses the Cascading Multitool

- 47. Step 16 – How Much Did That Cost?

- 48. Wrap Up
  - Easy, cheap, anyone can use it
  - Now let’s look at how to write code…

- 49. Log & Data Analysis using Hadoop
  - Based on slides [email protected]

- 50. Agenda
  1. Hadoop
     - Background
     - Ecosystem
     - HDFS & Map/Reduce
     - Example
  2. Log & Data Analysis @ Netflix
     - Problem / Data Volume
     - Current & Future Projects
  3. Hadoop on Amazon EC2
     - Storage options
     - Deployment options

- 51. Hadoop
  - Apache open-source software for reliable, scalable, distributed computing.
  - Originally a sub-project of the Lucene search engine.
  - Used to analyze and transform large data sets.
  - Supports partial failure, Recoverability, Consistency & Scalability

- 52. Hadoop Sub-Projects
  - Core
  - HDFS: A distributed file system that provides high throughput access to data.
  - Map/Reduce: A framework for processing large data sets.
  - HBase: A distributed database that supports structured data storage for large tables
  - Hive: An infrastructure for ad hoc querying (SQL-like)
  - Pig: A data-flow language and execution framework
  - Avro: A data serialization system that provides dynamic integration with scripting languages. Similar to Thrift & Protocol Buffers
  - Cascading: Executing data processing workflow
  - Chukwa, Mahout, ZooKeeper, and many more

- 53. Hadoop Distributed File System (HDFS)
  - Features
    - Cannot be mounted as a “file system”
    - Access via command line or Java API
    - Prefers large files (multiple terabytes) to many small files
    - Files are write once, read many (append coming soon)
    - Users, Groups and Permissions
    - Name and Space Quotas
    - Blocksize and Replication factor are configurable per file
  - Commands: hadoop dfs -ls, -du, -cp, -mv, -rm, -rmr
  - Uploading files
    - hadoop dfs -put foo mydata/foo

- 54. cat ReallyBigFile | hadoop dfs -put - mydata/ReallyBigFile
  - Downloading files
    - hadoop dfs -get mydata/foo foo

- 55. hadoop dfs -tail [-f] mydata/foo
  - Map/Reduce
    - Map/Reduce is a programming model for efficient distributed computing
    - Data processing of large dataset
    - Massively parallel (hundreds or thousands of CPUs)
    - Easy to use
      - Programmers don’t worry about socket(), etc.
    - It works like a Unix pipeline:
      - cat * | grep | sort | uniq -c | cat > output
      - Input | Map | Shuffle & Sort | Reduce | Output
    - Efficiency from streaming through data, reducing seeks
    - A good fit for a lot of applications
      - Log processing
      - Index building
      - Data mining and machine learning
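
The Unix pipeline analogy can be made concrete with a small in-memory sketch. It is plain Java with no Hadoop dependency and is only meant to show how the three stages line up. The class `PipelineSketch`, the method `grepSortUniqCount`, and the sample lines are invented for illustration.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

class PipelineSketch {
    // Mimics: cat lines | grep needle | sort | uniq -c
    static Map<String, Integer> grepSortUniqCount(List<String> lines, String needle) {
        // Map: keep each matching line as a key (the "grep" step); its value is 1.
        List<String> mappedKeys = new ArrayList<>();
        for (String line : lines) {
            if (line.contains(needle)) {
                mappedKeys.add(line);
            }
        }
        // Shuffle & Sort: group the 1s by key, with keys in sorted order.
        TreeMap<String, List<Integer>> shuffled = new TreeMap<>();
        for (String key : mappedKeys) {
            shuffled.computeIfAbsent(key, k -> new ArrayList<>()).add(1);
        }
        // Reduce: sum the values for each key (the "uniq -c" step).
        Map<String, Integer> output = new LinkedHashMap<>();
        for (Map.Entry<String, List<Integer>> group : shuffled.entrySet()) {
            int sum = 0;
            for (int one : group.getValue()) {
                sum += one;
            }
            output.put(group.getKey(), sum);
        }
        return output;
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList("disk ERROR", "cpu ok", "cpu ERROR", "disk ERROR");
        System.out.println(grepSortUniqCount(lines, "ERROR")); // {cpu ERROR=1, disk ERROR=2}
    }
}
```

In a real cluster the map and reduce stages run on many nodes at once, and the shuffle moves data between them. Here everything happens in one process, so only the data flow is comparable.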

- 58. Input & Output Formats
  - The application also chooses input and output formats, which define how the persistent data is read and written. These are interfaces and can be defined by the application.
  - InputFormat
    - Splits the input to determine the input to each map task.
    - Defines a RecordReader that reads key, value pairs that are passed to the map task
  - OutputFormat
    - Given the key, value pairs and a filename, writes the reduce task output to persistent store.

- 59. Map/Reduce Processes
  - Launching Application
    - User application code
    - Submits a specific kind of Map/Reduce job
  - JobTracker
    - Handles all jobs
    - Makes all scheduling decisions
  - TaskTracker
    - Manager for all tasks on a given node
  - Task
    - Runs an individual map or reduce fragment for a given job
    - Forks from the TaskTracker

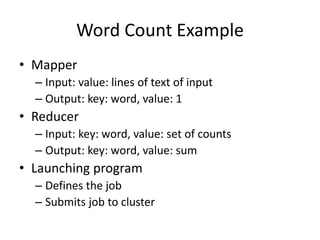

- 60. Word Count Example
  - Mapper
    - Input: value: lines of text of input
    - Output: key: word, value: 1
  - Reducer
    - Input: key: word, value: set of counts
    - Output: key: word, value: sum
  - Launching program
    - Defines the job
    - Submits job to cluster

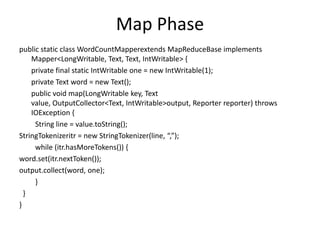

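- 61. Map Phase

      // Nested inside the WordCount class (slide 63). Needs java.io.IOException,
      // java.util.StringTokenizer, org.apache.hadoop.io.* and org.apache.hadoop.mapred.*.
      public static class WordCountMapper extends MapReduceBase
          implements Mapper<LongWritable, Text, Text, IntWritable> {
        private final static IntWritable one = new IntWritable(1);
        private Text word = new Text();

        public void map(LongWritable key, Text value,
            OutputCollector<Text, IntWritable> output, Reporter reporter)
            throws IOException {
          String line = value.toString();
          // Split on whitespace so the counts match the example on slide 64
          // (the slide passed "," as the delimiter, which would not split words).
          StringTokenizer itr = new StringTokenizer(line);
          while (itr.hasMoreTokens()) {
            word.set(itr.nextToken());
            output.collect(word, one); // emit (word, 1)
          }
        }
      }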

- 62. Reduce Phase

      // Nested inside the WordCount class (slide 63). Also needs java.util.Iterator.
      public static class WordCountReducer extends MapReduceBase
          implements Reducer<Text, IntWritable, Text, IntWritable> {
        public void reduce(Text key, Iterator<IntWritable> values,
            OutputCollector<Text, IntWritable> output, Reporter reporter)
            throws IOException {
          // Add up the 1s emitted by the mappers for this word.
          int sum = 0;
          while (values.hasNext()) {
            sum += values.next().get();
          }
          output.collect(key, new IntWritable(sum));
        }
      }

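- 63. Running the Job

      import java.io.IOException;
      import org.apache.hadoop.fs.Path;
      import org.apache.hadoop.io.*;
      import org.apache.hadoop.mapred.*;

      public class WordCount {
        // WordCountMapper (slide 61) and WordCountReducer (slide 62) go here as nested classes.

        public static void main(String[] args) throws IOException {
          JobConf conf = new JobConf(WordCount.class);
          // the keys are words (strings)
          conf.setOutputKeyClass(Text.class);
          // the values are counts (ints)
          conf.setOutputValueClass(IntWritable.class);
          conf.setMapperClass(WordCountMapper.class);
          conf.setReducerClass(WordCountReducer.class);
          // The slide called conf.setInputPath/setOutputPath, which only early Hadoop
          // releases provide; these helpers are the later equivalents in the same API.
          FileInputFormat.setInputPaths(conf, new Path(args[0]));
          FileOutputFormat.setOutputPath(conf, new Path(args[1]));
          JobClient.runJob(conf);
        }
      }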

- 64. Running the Example
  - Input
    - Welcome to Netflix
    - This is a great place to work
  - Output
    - Netflix 1
    - This 1
    - Welcome 1
    - a 1
    - great 1
    - is 1
    - place 1
    - to 2
    - work 1
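
The example output can be checked without a cluster. The sketch below is not the Hadoop job itself. It is a single-process stand-in, with a hypothetical class name `LocalWordCount`, that applies the same whitespace tokenizing and summing to the two input lines. A `TreeMap` stands in for the sorted key order that the shuffle produces.

```java
import java.util.Arrays;
import java.util.List;
import java.util.SortedMap;
import java.util.StringTokenizer;
import java.util.TreeMap;

class LocalWordCount {
    // Counts whitespace-separated words; keys come back in sorted order.
    static SortedMap<String, Integer> count(List<String> lines) {
        SortedMap<String, Integer> counts = new TreeMap<>();
        for (String line : lines) {
            StringTokenizer itr = new StringTokenizer(line);
            while (itr.hasMoreTokens()) {
                // "Map" emits (word, 1); merging with sum plays the role of "reduce".
                counts.merge(itr.nextToken(), 1, Integer::sum);
            }
        }
        return counts;
    }

    public static void main(String[] args) {
        List<String> input = Arrays.asList("Welcome to Netflix", "This is a great place to work");
        for (java.util.Map.Entry<String, Integer> e : count(input).entrySet()) {
            System.out.println(e.getKey() + " " + e.getValue());
        }
    }
}
```

Capitalized words sort ahead of lowercase ones because the keys are compared by character code, which is why Netflix, This and Welcome lead the output.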

- 65. WWW Access Log Structure

- 66. Analysis using access log
  - Top
    - URLs
    - Users
    - IP
  - Size
  - Time based analysis
  - Session
    - Duration
    - Visits per session
  - Identify attacks (DOS, Invalid Plug-in, etc)
  - Study Visit patterns for
    - Resource Planning
    - Preload relevant data
  - Impact of WWW call on middle tier services
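
As an illustration of the "top URLs" analysis, here is a small single-process sketch. The slides do not show the log layout, so this assumes the widely used Common Log Format, with the request line in double quotes. The class `TopUrlsSketch`, the method `topUrls`, and the sample log lines are all hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class TopUrlsSketch {
    // Assumed layout: host ident user [time] "METHOD url PROTOCOL" status bytes
    private static final Pattern REQUEST = Pattern.compile("\"[A-Z]+ (\\S+)");

    // Returns up to n (url, hits) pairs, most requested first; ties are ordered by URL.
    static List<Map.Entry<String, Integer>> topUrls(List<String> logLines, int n) {
        Map<String, Integer> hits = new HashMap<>();
        for (String line : logLines) {
            Matcher m = REQUEST.matcher(line);
            if (m.find()) {
                hits.merge(m.group(1), 1, Integer::sum);
            }
        }
        List<Map.Entry<String, Integer>> sorted = new ArrayList<>(hits.entrySet());
        sorted.sort((a, b) -> {
            int byCount = Integer.compare(b.getValue(), a.getValue());
            return byCount != 0 ? byCount : a.getKey().compareTo(b.getKey());
        });
        return sorted.subList(0, Math.min(n, sorted.size()));
    }

    public static void main(String[] args) {
        List<String> log = Arrays.asList(
                "10.0.0.1 - - [09/Dec/2009:10:00:00 -0800] \"GET /index.html HTTP/1.1\" 200 512",
                "10.0.0.2 - - [09/Dec/2009:10:00:01 -0800] \"GET /about.html HTTP/1.1\" 200 128",
                "10.0.0.1 - - [09/Dec/2009:10:00:02 -0800] \"GET /index.html HTTP/1.1\" 200 512");
        for (Map.Entry<String, Integer> e : topUrls(log, 2)) {
            System.out.println(e.getValue() + " " + e.getKey());
        }
    }
}
```

On a cluster the counting step would be the word count job with the URL as the key, and only the final sort and cut-off would run over the reduced output.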

- 67. Why run Hadoop in the cloud?
  - “Infinite” resources
    - Hadoop scales linearly
  - Elasticity
    - Run a large cluster for a short time
    - Grow or shrink a cluster on demand

- 68. Common sense and safety
  - Amazon security is good
    - But you are a few clicks away from making a data set public by mistake
  - Common sense precautions
    - Before you try it yourself…
    - Get permission to move data to the cloud
    - Scrub data to remove sensitive information
    - System performance monitoring logs are a good choice for analysis
