CS-321 Compiler Design computer engineering PPT.pdf

- 2. Motivation • Language processing is an important component of programming • A large number of systems software and application programs require structured input – Operating systems (command line processing) – Databases (query language processing) – Typesetting systems like LaTeX • Software quality assurance and software testing

- 4. Motivation • Wherever input has a structure, one can think of language processing • Why study compilers? – Compilers use the whole spectrum of language processing technology

- 5. 5 Expectations? • What will we learn in the course?

- 7. 6 What do we expect to achieve by the end of the course? • Knowledge to design, develop, understand, modify/enhance, and maintain compilers for (even complex!) programming languages • Confidence to use language processing technology for software development

- 8. 7 Organization of the course • Assignments 10% • Mid semester exam 20% • End semester exam 35% • Course Project 35% – Group of 2/3/4 (to be decided) • Tentative

- 11. 8 Bit of History • How are programming languages implemented? Two major strategies: – Interpreters (old and much less studied) – Compilers (very well understood with mathematical foundations) • Some environments provide both interpreter and compiler. Lisp, scheme etc. provide – Interpreter for development – Compiler for deployment • Java – Java compiler: Java to interpretable bytecode – Java JIT: bytecode to executable image

- 15. Some early machines and implementations • IBM developed the 704 in 1954. All programming was done in assembly language. Cost of software development far exceeded cost of hardware. Low productivity. • Speedcoding interpreter: programs ran about 10 times slower than hand written assembly code • John Backus (in 1954): Proposed a program that translated high level expressions into native machine code. Skepticism all around. Most people thought it was impossible • Fortran I project (1954-1957): The first compiler was released

- 20. 10 Fortran I • The first compiler had a huge impact on the programming languages and computer science. The whole new field of compiler design was started • More than half the programmers were using Fortran by 1958 • The development time was cut down to half • Led to enormous amount of theoretical work (lexical analysis, parsing, optimization, structured programming, code generation, error recovery etc.) • Modern compilers preserve the basic structure of the Fortran I compiler !!!

- 21. 11 The big picture • Compiler is part of program development environment • The other typical components of this environment are editor, assembler, linker, loader, debugger, profiler etc. • The compiler (and all other tools) must support each other for easy program development

- 29. Programmer → Editor → source program → Compiler → assembly code → Assembler → machine code → Linker → resolved machine code → Loader → executable image → execution on the target machine • Execution normally ends up with an error • The program is then executed under control of the Debugger • Using the debugging results, the programmer manually corrects the code in the editor

- 30. What are Compilers? • Translates from one representation of the program to another • Typically from high level source code to low level machine code or object code • Source code is normally optimized for human readability – Expressive: matches our notion of languages (and application?!) – Redundant to help avoid programming errors • Machine code is optimized for hardware – Redundancy is reduced – Information about the intent is lost 1

- 31. 2 Compiler as a Translator Compiler High level program Low level code

- 32. Goals of translation • Good compile time performance • Good performance for the generated code • Correctness – A very important issue. –Can compilers be proven to be correct? • Tedious even for toy compilers! Undecidable in general. –However, the correctness has an implication on the development cost 3

- 33. How to translate? • Direct translation is difficult. Why? • Source code and machine code mismatch in level of abstraction – Variables vs Memory locations/registers – Functions vs jump/return – Parameter passing – structs • Some languages are farther from machine code than others – For example, languages supporting Object Oriented Paradigm 4

- 34. How to translate easily? • Translate in steps. Each step handles a reasonably simple, logical, and well defined task • Design a series of program representations • Intermediate representations should be amenable to program manipulation of various kinds (type checking, optimization, code generation etc.) • Representations become more machine specific and less language specific as the translation proceeds 5

- 35. The first few steps • The first few steps can be understood by analogies to how humans comprehend a natural language • The first step is recognizing/knowing alphabets of a language. For example –English text consists of lower and upper case alphabets, digits, punctuations and white spaces –Written programs consist of characters from the ASCII characters set (normally 9-13, 32-126) 6

- 36. The first few steps • The next step to understand the sentence is recognizing words –How to recognize English words? –Words found in standard dictionaries –Dictionaries are updated regularly 7

- 37. The first few steps • How to recognize words in a programming language? –a dictionary (of keywords etc.) –rules for constructing words (identifiers, numbers etc.) • This is called lexical analysis • Recognizing words is not completely trivial. For example: w hat ist his se nte nce? 8

- 38. Lexical Analysis: Challenges • We must know what the word separators are • The language must define rules for breaking a sentence into a sequence of words. • Normally white spaces and punctuations are word separators in languages. 9

- 39. Lexical Analysis: Challenges • In programming languages a character from a different class may also be treated as word separator. • The lexical analyzer breaks a sentence into a sequence of words or tokens: –If a == b then a = 1 ; else a = 2 ; –Sequence of words (total 14 words) if a == b then a = 1 ; else a = 2 ; 10
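
The splitting described above can be sketched in C. This is only an illustration under simplifying assumptions: the function name `tokenize`, the `MAX_LEXEME` limit and the three character classes (letters/digits, the operator `==`, any other single character) are hypothetical choices, not part of the slides. It shows that a change of character class ends a word even when no blank is present.

```c
#include <assert.h>
#include <ctype.h>
#include <string.h>

#define MAX_LEXEME 16   /* hypothetical limit on stored lexeme length */

/* Splits src into lexemes; returns how many were stored in out. */
int tokenize(const char *src, char out[][MAX_LEXEME], int max_tokens)
{
    int count = 0;
    const char *p = src;

    assert(src != NULL && out != NULL);
    while (*p != '\0' && count < max_tokens) {
        const char *start;
        size_t len;

        if (isspace((unsigned char)*p)) {    /* white space only separates */
            p++;
            continue;
        }
        start = p;
        if (isalnum((unsigned char)*p)) {
            /* a run of letters and digits forms one word */
            while (isalnum((unsigned char)*p))
                p++;
        } else if (*p == '=' && p[1] == '=') {
            p += 2;                          /* two-character operator == */
        } else {
            p++;                             /* any other single character */
        }
        len = (size_t)(p - start);
        if (len >= MAX_LEXEME)
            len = MAX_LEXEME - 1;            /* truncate overlong lexemes */
        memcpy(out[count], start, len);
        out[count][len] = '\0';
        count++;
    }
    return count;
}
```

Calling `tokenize` on the sentence from the slide, with or without blanks around `==`, `=` and `;`, yields the same 14 words.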

- 40. The next step • Once the words are understood, the next step is to understand the structure of the sentence • The process is known as syntax checking or parsing I am going to play pronoun aux verb adverb subject verb adverb-phrase Sentence 11

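- 41. Parsing • Parsing a program is exactly the same process as shown in the previous slide. • Consider the statement if x == y then z = 1 else z = 2 • Its parse tree has an if-stmt root with three children: the predicate (== with operands x and y), the then-stmt (= with operands z and 1) and the else-stmt (= with operands z and 2)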

- 42. Understanding the meaning • Once the sentence structure is understood we try to understand the meaning of the sentence (semantic analysis) • A challenging task • Example: Prateek said Nitin left his assignment at home • What does his refer to? Prateek or Nitin? 13

- 43. Understanding the meaning • Worse case Amit said Amit left his assignment at home • Even worse Amit said Amit left Amit’s assignment at home • How many Amits are there? Which one left the assignment? Whose assignment got left? 14

- 44. Semantic Analysis • Too hard for compilers. They do not have capabilities similar to human understanding • However, compilers do perform analysis to understand the meaning and catch inconsistencies • Programming languages define strict rules to avoid such ambiguities { int Amit = 3; { int Amit = 4; cout << Amit; } } 15

- 45. More on Semantic Analysis • Compilers perform many other checks besides variable bindings • Type checking Amit left her work at home • There is a type mismatch between her and Amit. Presumably Amit is a male. And they are not the same person. 16

- 46. अश्वत्थामा हतः इति नरो वा कुञ्जरो वा “Ashwathama hathaha iti, narova kunjarova” • Ashwathama is dead. But, I am not certain whether it was a human or an elephant • Example from the Mahabharat

- 47. Compiler structure once again 18 Compiler Front End Lexical Analysis Syntax Analysis Semantic Analysis (Language specific) Token stream Abstract Syntax tree Unambiguous Program representation Source Program Target Program Back End

- 49. Code Optimization • No strong counter part with English, but is similar to editing/précis writing • Automatically modify programs so that they –Run faster –Use less resources (memory, registers, space, fewer fetches etc.) 23

- 50. Code Optimization • Some common optimizations –Common sub-expression elimination –Copy propagation –Dead code elimination –Code motion –Strength reduction –Constant folding • Example: x = 15 * 3 is transformed to x = 45 24
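
Constant folding, the last optimization in the list, can be sketched on a small expression tree. The `Node` layout and the function name `fold` are assumptions made for this illustration; a real compiler would also have to respect overflow and the language's arithmetic rules, which this sketch ignores.

```c
#include <assert.h>
#include <stddef.h>

typedef enum { NODE_NUM, NODE_VAR, NODE_BINOP } NodeKind;

/* Hypothetical expression-tree node used only for this sketch. */
typedef struct Node {
    NodeKind kind;
    int value;              /* NODE_NUM: the constant */
    char name;              /* NODE_VAR: one-letter variable name */
    char op;                /* NODE_BINOP: '+', '-' or '*' */
    struct Node *left, *right;
} Node;

/* Folds constant subtrees in place; returns the (possibly rewritten) node. */
Node *fold(Node *n)
{
    assert(n != NULL);
    if (n->kind != NODE_BINOP)
        return n;                    /* constants and variables stay as is */
    n->left = fold(n->left);
    n->right = fold(n->right);
    if (n->left->kind == NODE_NUM && n->right->kind == NODE_NUM) {
        int a = n->left->value, b = n->right->value;
        switch (n->op) {
        case '+': n->value = a + b; break;
        case '-': n->value = a - b; break;
        case '*': n->value = a * b; break;
        default:  return n;          /* unknown operator: leave untouched */
        }
        n->kind = NODE_NUM;          /* the operator node becomes a constant */
        n->left = n->right = NULL;
    }
    return n;
}
```

Folding the tree for `15 * 3` turns the multiplication node into the constant 45, while a tree such as `y * (2 + 4)` keeps its multiplication and only the right operand becomes 6.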

- 51. Example of Optimizations • Cost notation: A = assignment, M = multiplication, D = division, E = exponent • Original: PI = 3.14159; Area = 4 * PI * R^2; Volume = (4/3) * PI * R^3; cost 3A+4M+1D+2E • Version 2: X = 3.14159 * R * R; Area = 4 * X; Volume = 1.33 * X * R; cost 3A+5M • Version 3: Area = 4 * 3.14159 * R * R; Volume = ( Area / 3 ) * R; cost 2A+4M+1D • Version 4: Area = 12.56636 * R * R; Volume = ( Area / 3 ) * R; cost 2A+3M+1D • Version 5: X = R * R; Area = 12.56636 * X; Volume = 4.18879 * X * R; cost 3A+4M

- 52. Code Generation • Usually a two step process –Generate intermediate code from the semantic representation of the program –Generate machine code from the intermediate code • The advantage is that each phase is simple • Requires design of intermediate language 26

- 53. Code Generation • Most compilers perform translation between successive intermediate representations • Intermediate languages are generally ordered in decreasing level of abstraction from highest (source) to lowest (machine) 27

- 54. Code Generation • Abstractions at the source level identifiers, operators, expressions, statements, conditionals, iteration, functions (user defined, system defined or libraries) • Abstraction at the target level memory locations, registers, stack, opcodes, addressing modes, system libraries, interface to the operating systems • Code generation is mapping from source level abstractions to target machine abstractions 28

- 55. Code Generation • Map identifiers to locations (memory/storage allocation) • Explicate variable accesses (change identifier reference to relocatable/absolute address) • Map source operators to opcodes or a sequence of opcodes 29

- 56. Code Generation • Convert conditionals and iterations to a test/jump or compare instructions • Layout parameter passing protocols: locations for parameters, return values, layout of activations frame etc. • Interface calls to library, runtime system, operating systems 30

- 57. Post translation Optimizations • Algebraic transformations and reordering –Remove/simplify operations like • Multiplication by 1 • Multiplication by 0 • Addition with 0 –Reorder instructions based on • Commutative properties of operators • For example x+y is same as y+x (always?) 31

- 58. Post translation Optimizations Instruction selection –Addressing mode selection –Opcode selection –Peephole optimization 32

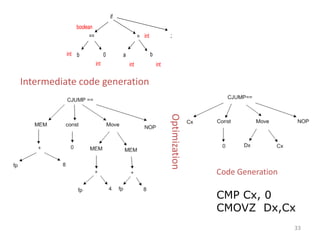

- 59. 33 if == = b 0 a b boolean int int int int int ; Intermediate code generation Optimization Code Generation CMP Cx, 0 CMOVZ Dx,Cx

- 60. Compiler structure 34 Compiler Front End Lexical Analysis Syntax Analysis Semantic Analysis (Language specific) Token stream Abstract Syntax tree Unambiguous Program representation Source Program Target Program Optimizer Optimized code Optional Phase IL code generator IL code Code generator Back End Machine specific

- 61. Something is missing • Information required about the program variables during compilation – Class of variable: keyword, identifier etc. – Type of variable: integer, float, array, function etc. – Amount of storage required – Address in the memory – Scope information • Location to store this information – Attributes with the variable (has obvious problems) – At a central repository and every phase refers to the repository whenever information is required • Normally the second approach is preferred – Use a data structure called symbol table 35

- 62. Final Compiler structure 36 Compiler Front End Lexical Analysis Syntax Analysis Semantic Analysis (Language specific) Token stream Abstract Syntax tree Unambiguous Program representation Source Program Target Program Optimizer Optimized code Optional Phase IL code generator IL code Code generator Back End Machine specific Symbol Table

- 63. Advantages of the model • Also known as Analysis-Synthesis model of compilation – Front end phases are known as analysis phases – Back end phases are known as synthesis phases • Each phase has a well defined work • Each phase handles a logical activity in the process of compilation 37

- 64. Advantages of the model … • Compiler is re-targetable • Source and machine independent code optimization is possible. • Optimization phase can be inserted after the front and back end phases have been developed and deployed 38

- 65. Issues in Compiler Design • Compilation appears to be very simple, but there are many pitfalls • How are erroneous programs handled? • Design of programming languages has a big impact on the complexity of the compiler • M*N vs. M+N problem – Compilers are required for all the languages and all the machines – For M languages and N machines we need to develop M*N compilers – However, there is a lot of repetition of work because of similar activities in the front ends and back ends – Can we design only M front ends and N back ends, and somehow link them to get all M*N compilers? 39

- 66. M*N vs M+N Problem 40 F1 F2 F3 FM B1 B2 B3 BN Requires M*N compilers F1 F2 F3 FM B1 B2 B3 BN Intermediate Language IL Requires M front ends And N back ends

- 67. Universal Intermediate Language • Impossible to design a single intermediate language to accommodate all programming languages – Mythical universal intermediate language sought since mid 1950s (Aho, Sethi, Ullman) • However, common IRs for similar languages, and similar machines have been designed, and are used for compiler development 41

- 68. How do we know compilers generate correct code? • Prove that the compiler is correct. • However, program proving techniques do not exist at a level where large and complex programs like compilers can be proven to be correct • In practice do a systematic testing to increase confidence level 42

- 69. • Regression testing – Maintain a suite of test programs – Expected behavior of each program is documented – All the test programs are compiled using the compiler and deviations are reported to the compiler writer • Design of test suite – Test programs should exercise every statement of the compiler at least once – Usually requires great ingenuity to design such a test suite – Exhaustive test suites have been constructed for some languages 43

- 70. How to reduce development and testing effort? • DO NOT WRITE COMPILERS • GENERATE compilers • A compiler generator should be able to “generate” compiler from the source language and target machine specifications 44 Compiler Compiler Generator Source Language Specification Target Machine Specification

- 71. Tool based Compiler Development 45 Lexical Analyzer Parser Semantic Analyzer Optimizer IL code generator Code generator Source Program Target Program Lexical Analyzer Generator Lexeme specs Parser Generator Parser specs Other phase Generators Phase Specifications Code Generator generator Machine specifications

- 72. Bootstrapping • Compiler is a complex program and should not be written in assembly language • How to write compiler for a language in the same language (first time!)? • First time this experiment was done for Lisp • Initially, Lisp was used as a notation for writing functions. • Functions were then hand translated into assembly language and executed • McCarthy wrote a function eval[e] in Lisp that took a Lisp expression e as an argument • The function was later hand translated and it became an interpreter for Lisp 46

- 73. Bootstrap Image By: No machine-readable author provided. Tarquin~commonswiki assumed (based on copyright claims). - No machine-readable source provided. Own work assumed (based on copyright claims)., CC BY-SA 3.0, https://commons.wikimedia.org/w/index.php?curid=105468

- 74. Bootstrapping: Example • Let's solve a simpler problem first • Existing architecture and C compiler: –gcc-x86 compiles C language to x86 • New architecture: –x335 • How to develop cc-x335? –runs on x335, generates code for x335 48

- 75. Bootstrapping: Example • How to develop cc-x335? • Write a C compiler in C that emits x335 code • Compile using gcc-x86 on x86 machine • We have a C compiler that emits x335 code –But runs on x86, not x335 49

- 76. Bootstrapping: Example • We have cc-x86-x335 • Compiler runs on x86, generated code runs on x335 • Compile the source code of C compiler with cc-x86-x335 • There it is • the output is a binary that runs on x335 • this binary is the desired compiler: cc-x335

- 77. Bootstrapping … • A compiler can be characterized by three languages: the source language (S), the target language (T), and the implementation language (I) • The three languages S, I, and T can be quite different. Such a compiler is called a cross-compiler • This is represented by a T-diagram, with S and T on the arms and I at the base • In textual form this can be represented as SIT

- 78. • Write a cross compiler for a language L in implementation language S to generate code for machine N • Existing compiler for S runs on a different machine M and generates code for M • When compiler LSN is run through SMM we get compiler LMN • Example: the EQN to TROFF translator written in C (EQN C TROFF), run through the C compiler on the PDP11 (C PDP11 PDP11), gives EQN PDP11 TROFF

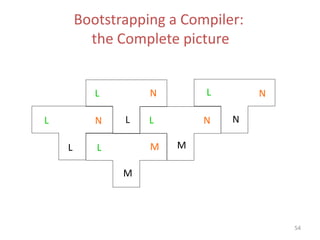

- 79. Bootstrapping a Compiler • Suppose LNN is to be developed on a machine M where LMM is available • Write the compiler LLN and compile it with LMM to get LMN • Compile LLN a second time using the generated compiler LMN to get LNN
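
The rule for running one compiler through another can be written down as a small C sketch over the textual SIT form. The `Compiler` struct and the function `run_through` are hypothetical names introduced here; the sketch only tracks the three language labels and does not check that the compiling compiler can actually run on the machine at hand.

```c
#include <assert.h>
#include <string.h>

/* A compiler in the textual SIT form: source, implementation, target. */
typedef struct {
    const char *source;
    const char *impl;
    const char *target;
} Compiler;

/*
 * Runs compiler `program` through compiler `by`.
 * `by` must accept the language `program` is written in. The result
 * translates the same source to the same target, but is now
 * implemented in whatever `by` emits.
 */
Compiler run_through(Compiler program, Compiler by)
{
    Compiler result;

    assert(strcmp(program.impl, by.source) == 0);
    result.source = program.source;
    result.impl = by.target;
    result.target = program.target;
    return result;
}
```

With this rule, LLN run through LMM gives LMN, and LLN run through that LMN gives LNN, which matches the two compilation steps of the bootstrap.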

- 80. 54 L N L L L L L L N M M M N N N Bootstrapping a Compiler: the Complete picture

- 81. Compilers of the 21st Century • Overall structure of almost all the compilers is similar to the structure we have discussed • The proportions of the effort have changed since the early days of compilation • Earlier front end phases were the most complex and expensive parts. • Today back end phases and optimization dominate all other phases. Front end phases are typically a smaller fraction of the total time 55

- 82. Lexical Analysis • Recognize tokens and ignore white spaces, comments • Generates token stream • Error reporting • Model using regular expressions • Recognize using Finite State Automata

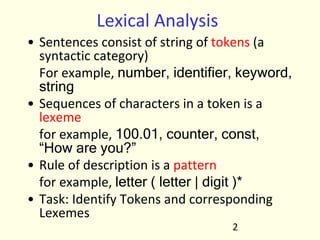

- 83. Lexical Analysis • Sentences consist of string of tokens (a syntactic category) For example, number, identifier, keyword, string • Sequences of characters in a token is a lexeme for example, 100.01, counter, const, “How are you?” • Rule of description is a pattern for example, letter ( letter | digit )* • Task: Identify Tokens and corresponding Lexemes 2

- 84. Lexical Analysis • Examples • Construct constants: for example, convert a number to token num and pass the value as its attribute, – 31 becomes <num, 31> • Recognize keyword and identifiers – counter = counter + increment becomes id = id + id – check that id here is not a keyword • Discard whatever does not contribute to parsing – white spaces (blanks, tabs, newlines) and comments 3

- 85. Interface to other phases • Why do we need Push back? • Required due to look-ahead for example, to recognize >= and > • Typically implemented through a buffer – Keep input in a buffer – Move pointers over the input 4 Lexical Analyzer Syntax Analyzer Input Ask for token Token Read characters Push back Extra characters

- 86. Approaches to implementation • Use assembly language Most efficient but most difficult to implement • Use high level languages like C Efficient but difficult to implement • Use tools like lex, flex Easy to implement but not as efficient as the first two cases 5

- 87. Symbol Table • Stores information for subsequent phases • Interface to the symbol table –Insert(s,t): save lexeme s and token t and return pointer –Lookup(s): return index of entry for lexeme s or 0 if s is not found 9

- 88. Implementation of Symbol Table • Fixed amount of space to store lexemes. –Not advisable as it wastes space. • Store lexemes in a separate array. –Each lexeme is terminated by eos. –Symbol table has pointers to lexemes. 10

- 89. Fixed space for lexemes Other attributes Usually 32 bytes lexeme1 lexeme2 eos eos lexeme3 …… Other attributes Usually 4 bytes 11

- 90. How to handle keywords? • Consider token DIV and MOD with lexemes div and mod. • Initialize symbol table with insert( “div” , DIV ) and insert( “mod” , MOD). • Any subsequent insert fails (unguarded insert) • Any subsequent lookup returns the keyword value, therefore, these cannot be used as an identifier. 12
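
A minimal C sketch of this scheme follows, assuming a linear-search table, lexemes stored in one shared array terminated by eos (`'\0'`), and entry 0 reserved so that `lookup` can return 0 for "not found". The token codes, the size limits and the helper `token_for` are hypothetical and chosen only for the illustration.

```c
#include <assert.h>
#include <string.h>

#define MAX_SYMBOLS 128
#define MAX_CHARS   1024

enum { TOK_ID = 256, TOK_DIV, TOK_MOD };   /* hypothetical token codes */

typedef struct {
    const char *lexeme;   /* points into the shared lexeme array */
    int token;
} Entry;

static char lexemes[MAX_CHARS];       /* lexeme1 eos lexeme2 eos ... */
static size_t next_char = 0;
static Entry table[MAX_SYMBOLS];      /* entry 0 is reserved for "not found" */
static int last_entry = 0;

/* Returns the index of the entry for s, or 0 if s is not in the table. */
int lookup(const char *s)
{
    int i;
    for (i = last_entry; i > 0; i--)
        if (strcmp(table[i].lexeme, s) == 0)
            return i;
    return 0;
}

/* Saves lexeme s with token t; returns its index, or 0 on failure. */
int insert(const char *s, int t)
{
    size_t len;

    assert(s != NULL);
    len = strlen(s);
    if (lookup(s) != 0)
        return 0;                      /* already present, e.g. a keyword */
    if (last_entry + 1 >= MAX_SYMBOLS || next_char + len + 1 > MAX_CHARS)
        return 0;                      /* table or lexeme array is full */
    last_entry++;
    table[last_entry].lexeme = &lexemes[next_char];
    table[last_entry].token = t;
    memcpy(&lexemes[next_char], s, len + 1);   /* copy including eos */
    next_char += len + 1;
    return last_entry;
}

/* Token class of lexeme s, entering it as an identifier on first sight. */
int token_for(const char *s)
{
    int i = lookup(s);
    if (i == 0)
        i = insert(s, TOK_ID);
    return i == 0 ? 0 : table[i].token;
}
```

After the table is initialized with `insert("div", TOK_DIV)` and `insert("mod", TOK_MOD)`, a later `token_for("div")` returns the keyword token, so `div` can never be entered as an identifier.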

- 91. Difficulties in the design of lexical analyzers 13 Is it as simple as it sounds?

- 92. Lexical analyzer: Challenges • Lexemes in a fixed position. Fixed format vs. free format languages • FORTRAN Fixed Format – 80 columns per line – Column 1-5 for the statement number/label column – Column 6 for continuation mark (?) – Column 7-72 for the program statements – Column 73-80 Ignored (Used for other purpose) – Letter C in Column 1 meant the current line is a comment 14

- 93. Lexical analyzer: Challenges • Handling of blanks – in C, blanks separate identifiers – in FORTRAN, blanks are important only in literal strings – variable counter is same as count er – Another example: DO 10 I = 1.25 is read as DO10I=1.25 and DO 10 I = 1,25 is read as DO10I=1,25

- 94. • The first line, DO10I=1.25, is a variable assignment • The second line, DO10I=1,25, is the beginning of a DO loop • Reading from left to right one cannot distinguish between the two until the “,” or “.” is reached

- 95. 17 Fortran white space and fixed format rules came into force due to punch cards and errors in punching

- 97. PL/1 Problems • Keywords are not reserved in PL/1 – if then then then = else else else = then – if if then then = then + 1 • PL/1 declarations – Declare(arg1,arg2,arg3,…….,argn) • Cannot tell whether Declare is a keyword or array reference until after “)” • Requires arbitrary lookahead and very large buffers. – Worse, the buffers may have to be reloaded.

- 98. Problem continues even today!! • C++ template syntax: Foo<Bar> • C++ stream syntax: cin >> var; • Nested templates: Foo<Bar<Bazz>> • Can these problems be resolved by lexical analyzers alone? 20

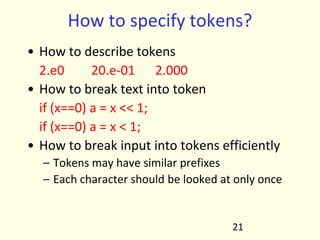

- 99. How to specify tokens? • How to describe tokens 2.e0 20.e-01 2.000 • How to break text into token if (x==0) a = x << 1; if (x==0) a = x < 1; • How to break input into tokens efficiently – Tokens may have similar prefixes – Each character should be looked at only once 21

- 100. How to describe tokens? • Programming language tokens can be described by regular languages • Regular languages – Are easy to understand – There is a well understood and useful theory – They have efficient implementation • Regular languages have been discussed in great detail in the “Theory of Computation” course 22

- 101. How to specify tokens • Regular definitions – Let ri be a regular expression and di be a distinct name – Regular definition is a sequence of definitions of the form d1 → r1, d2 → r2, ….., dn → rn – Where each ri is a regular expression over Σ ∪ {d1, d2, …, di-1}

- 102. Examples • My fax number 91-(512)-259-7586 • Σ = digit ∪ {-, (, ) } • country → digit+ (more precisely digit^2) • area → ‘(‘ digit+ ‘)’ (more precisely digit^3) • exchange → digit+ (more precisely digit^3) • phone → digit+ (more precisely digit^4) • number → country ‘-’ area ‘-’ exchange ‘-’ phone

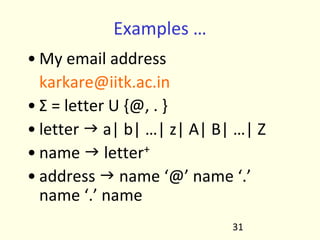

- 103. Examples … • My email address [email protected] • Σ = letter ∪ {@, . } • letter → a| b| …| z| A| B| …| Z • name → letter+ • address → name ‘@’ name ‘.’ name ‘.’ name

- 104. Examples … • Identifier – letter → a| b| …|z| A| B| …| Z – digit → 0| 1| …| 9 – identifier → letter(letter|digit)* • Unsigned number in C – digit → 0| 1| …|9 – digits → digit+ – fraction → ’.’ digits | ε – exponent → (E ( ‘+’ | ‘-’ | ε) digits) | ε – number → digits fraction exponent
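
The regular definition for unsigned numbers can be turned into a hand-written recognizer almost line by line. The sketch below is one possible reading, with hypothetical function names; it follows the definition literally, so the exponent marker must be an uppercase `E` and a fraction needs at least one digit after the dot.

```c
#include <assert.h>
#include <ctype.h>
#include <stddef.h>

/* digits -> digit+ : returns how many digits start at s (0 if none). */
static size_t match_digits(const char *s)
{
    size_t n = 0;
    while (isdigit((unsigned char)s[n]))
        n++;
    return n;
}

/*
 * number -> digits fraction exponent
 * Returns the length of the longest prefix of s that is a number,
 * or 0 if s does not start with one.
 */
size_t match_number(const char *s)
{
    size_t len, n, k;

    assert(s != NULL);
    len = match_digits(s);
    if (len == 0)
        return 0;

    /* fraction -> '.' digits | epsilon */
    if (s[len] == '.') {
        n = match_digits(s + len + 1);
        if (n > 0)
            len += 1 + n;           /* otherwise fraction is epsilon */
    }

    /* exponent -> (E ('+' | '-' | epsilon) digits) | epsilon */
    if (s[len] == 'E') {
        k = len + 1;
        if (s[k] == '+' || s[k] == '-')
            k++;
        n = match_digits(s + k);
        if (n > 0)
            len = k + n;            /* otherwise exponent is epsilon */
    }
    return len;
}
```

For the input `12.34E56` the whole string is consumed, while for `12.E5` only `12` is accepted because the fraction and exponent both fall back to ε.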

- 105. Regular expressions in specifications • Regular expressions describe many useful languages • Regular expressions are only specifications; implementation is still required • Given a string s and a regular expression R, does s ∈ L(R) ? • Solution to this problem is the basis of the lexical analyzers • However, just the yes/no answer is not sufficient • Goal: Partition the input into tokens 33

- 106. 1. Write a regular expression for lexemes of each token • number → digit+ • identifier → letter(letter|digit)* 2. Construct R matching all lexemes of all tokens • R = R1 + R2 + R3 + ….. 3. Let input be x1…xn • for 1 ≤ i ≤ n check x1…xi ∈ L(R) 4. x1…xi ∈ L(R) ⇒ x1…xi ∈ L(Rj) for some j • smallest such j is token class of x1…xi 5. Remove x1…xi from input; go to (3) 34

- 107. • The algorithm gives priority to tokens listed earlier – Treats “if” as keyword and not identifier • How much input is used? What if both – x1…xi ∈ L(R) and – x1…xj ∈ L(R) with i ≠ j? – Pick up the longest possible string in L(R) – The principle of “maximal munch” • Regular expressions provide a concise and useful notation for string patterns • Good algorithms require a single pass over the input 35

- 108. How to break up text • elsex=0 can be read as else x = 0 or as elsex = 0 • Regular expressions alone are not enough • Normally the longest match wins • Ties are resolved by prioritizing tokens • Lexical definitions consist of regular definitions, priority rules and maximal munch principle
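
The combination of longest match and priority can be sketched in C as follows. Instead of testing every prefix against one combined expression R, this simplified version asks each rule for its own longest match and keeps the longest; on a tie the rule listed first wins. The rule set, the token classes and all function names are assumptions made for the illustration.

```c
#include <assert.h>
#include <ctype.h>
#include <stddef.h>
#include <string.h>

typedef enum { T_ERROR, T_KEYWORD, T_ID, T_NUM, T_ASSIGN } TokenClass;

/* Each matcher returns the length of the longest prefix of s it accepts. */
static size_t match_keyword(const char *s)
{
    static const char *const keywords[] = { "if", "then", "else" };
    size_t i, best = 0;
    for (i = 0; i < sizeof keywords / sizeof keywords[0]; i++) {
        size_t n = strlen(keywords[i]);
        if (strncmp(s, keywords[i], n) == 0 && n > best)
            best = n;
    }
    return best;
}

static size_t match_id(const char *s)       /* letter(letter|digit)* */
{
    size_t n = 0;
    if (!isalpha((unsigned char)s[0]))
        return 0;
    while (isalnum((unsigned char)s[n]))
        n++;
    return n;
}

static size_t match_num(const char *s)      /* digit+ */
{
    size_t n = 0;
    while (isdigit((unsigned char)s[n]))
        n++;
    return n;
}

static size_t match_assign(const char *s)
{
    return s[0] == '=' ? 1 : 0;
}

typedef struct {
    TokenClass cls;
    size_t (*match)(const char *);
} Rule;

/* Rules in priority order: keywords come before identifiers. */
static const Rule rules[] = {
    { T_KEYWORD, match_keyword },
    { T_ID,      match_id },
    { T_NUM,     match_num },
    { T_ASSIGN,  match_assign },
};

/* Classifies the next token of s and stores its length in *len. */
TokenClass next_token(const char *s, size_t *len)
{
    TokenClass best_cls = T_ERROR;
    size_t i, best_len = 0;

    assert(s != NULL && len != NULL);
    for (i = 0; i < sizeof rules / sizeof rules[0]; i++) {
        size_t n = rules[i].match(s);
        if (n > best_len) {          /* strictly longer: earlier rule wins ties */
            best_len = n;
            best_cls = rules[i].cls;
        }
    }
    *len = best_len;
    return best_cls;
}
```

On `elsex=0` the identifier rule matches five characters against four for the keyword rule, so the first token is the identifier `elsex`; on `else x=0` both rules match four characters and the keyword wins by priority.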

- 109. Transition Diagrams • Regular expressions are declarative specifications • Transition diagram is an implementation • A transition diagram consists of – An input alphabet Σ – A set of states S – A set of transitions statei →(input) statej – A set of final states F – A start state n • Transition s1 →(a) s2 is read: in state s1 on input a go to state s2 • If end of input is reached in a final state then accept • Otherwise, reject 37

- 110. Pictorial notation • A state • A final state • Transition • Transition from state i to state j on an input a 38 i j a

- 111. How to recognize tokens • Consider – relop → < | <= | = | <> | >= | > – id → letter(letter|digit)* – num → digit+ (‘.’ digit+)? (E(‘+’|’-’)? digit+)? – delim → blank | tab | newline – ws → delim+ • Construct an analyzer that will return <token, attribute> pairs 39

- 112. Transition diagram for relops • From the start state on <: a following = gives token relop with lexeme <=, a following > gives lexeme <>, any other character gives lexeme < (*) • From the start state on =: token is relop, lexeme is = • From the start state on >: a following = gives lexeme >=, any other character gives lexeme > (*) • * marks final states reached on "other", where the extra character read must be pushed back 40
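
The relop diagram translates directly into a state-driven C function. This is a sketch with hypothetical names (`Relop`, `match_relop`); it works on a string rather than an input buffer, so "pushing back" the look-ahead character simply means not counting it in the lexeme length.

```c
#include <assert.h>
#include <stddef.h>

typedef enum { R_NONE, R_LT, R_LE, R_NE, R_EQ, R_GT, R_GE } Relop;

/*
 * Recognizes a relop at the start of s. *len receives the lexeme
 * length; the extra look-ahead character is never consumed, which
 * plays the role of the retract marked * in the diagram.
 */
Relop match_relop(const char *s, size_t *len)
{
    int state = 0;
    size_t pos = 0;

    assert(s != NULL && len != NULL);
    for (;;) {
        char c = s[pos];
        switch (state) {
        case 0:                                   /* start state */
            if (c == '<')      { state = 1; pos++; }
            else if (c == '=') { *len = 1; return R_EQ; }
            else if (c == '>') { state = 2; pos++; }
            else               { *len = 0; return R_NONE; }
            break;
        case 1:                                   /* seen '<' */
            if (c == '=') { *len = 2; return R_LE; }
            if (c == '>') { *len = 2; return R_NE; }
            *len = 1;                             /* other: retract */
            return R_LT;
        case 2:                                   /* seen '>' */
            if (c == '=') { *len = 2; return R_GE; }
            *len = 1;                             /* other: retract */
            return R_GT;
        }
    }
}
```

For the input `<a` the function reads `a` as look-ahead, reports `<` with length 1, and leaves `a` for the next token.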

- 113. Transition diagram for identifier letter digit other delim letter other delim * * Transition diagram for white spaces 41

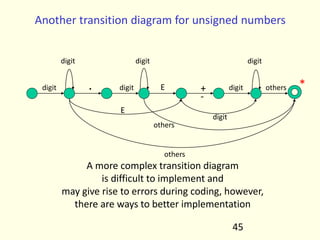

- 114. digit digit digit others * Transition diagram for unsigned numbers digit digit digit others * . digit digit digit digit digit digit digit . E E others * + - Integer number Real numbers 42

- 115. • The lexeme for a given token must be the longest possible • Assume input to be 12.34E56 • Starting in the third diagram the accept state will be reached after 12 • Therefore, the matching should always start with the first transition diagram • If failure occurs in one transition diagram then retract the forward pointer to the start state and activate the next diagram • If failure occurs in all diagrams then a lexical error has occurred 43

- 116. Implementation of transition diagrams Token nexttoken() { while(1) { switch (state) { …… case 10: c=nextchar(); if(isletter(c)) state=10; else if (isdigit(c)) state=10; else state=11; break; …… } } } 44

- 117. Another transition diagram for unsigned numbers digit digit digit digit digit digit digit . E E others * + - others others A more complex transition diagram is difficult to implement and may give rise to errors during coding, however, there are ways to better implementation 45

- 118. Lexical analyzer generator • Input to the generator – List of regular expressions in priority order – Associated actions for each of regular expression (generates kind of token and other book keeping information) • Output of the generator – Program that reads input character stream and breaks that into tokens – Reports lexical errors (unexpected characters), if any 46

- 119. LEX: A lexical analyzer generator • Token specifications → LEX → lex.yy.c (C code for lexical analyzer) • lex.yy.c → C compiler → object code (lexical analyzer) • Input program → lexical analyzer → tokens • Refer to LEX User's Manual

- 120. How does LEX work? • Regular expressions describe the languages that can be recognized by finite automata • Translate each token regular expression into a non deterministic finite automaton (NFA) • Convert the NFA into an equivalent DFA • Minimize the DFA to reduce number of states • Emit code driven by the DFA tables 48

- 121. Syntax Analysis • Check syntax and construct abstract syntax tree • Error reporting and recovery • Model using context free grammars • Recognize using push down automata/table driven parsers (figure: an example abstract syntax tree for an if statement)

- 122. Limitations of regular languages • How to describe language syntax precisely and conveniently. Can regular expressions be used? • Many languages are not regular, for example, string of balanced parentheses – ((((…)))) – { (^i )^i | i ≥ 0 } – There is no regular expression for this language • A finite automaton may repeat states; however, it cannot remember the number of times it has been to a particular state • A more powerful language is needed to describe a valid string of tokens

- 123. Syntax definition • Context free grammars <T, N, P, S> – T: a set of tokens (terminal symbols) – N: a set of non terminal symbols – P: a set of productions of the form nonterminal →String of terminals & non terminals – S: a start symbol • A grammar derives strings by beginning with a start symbol and repeatedly replacing a non terminal by the right hand side of a production for that non terminal. • The strings that can be derived from the start symbol of a grammar G form the language L(G) defined by the grammar. 3

- 124. Examples • String of balanced parentheses S → ( S ) S | ε • Grammar list → list + digit | list – digit | digit digit → 0 | 1 | … | 9 generates the language of lists of digits separated by + or -.

- 125. Derivation list ⇒ list + digit ⇒ list – digit + digit ⇒ digit – digit + digit ⇒ 9 – digit + digit ⇒ 9 – 5 + digit ⇒ 9 – 5 + 2 Therefore, the string 9-5+2 belongs to the language specified by the grammar • The name context free comes from the fact that use of a production X → … does not depend on the context of X

- 126. Examples … • Simplified Grammar for C block block → '{' decls statements '}' statements → stmt-list | ε stmt-list → stmt-list stmt ';' | stmt ';' decls → decls declaration | ε declaration → …

- 127. Syntax analyzers • Testing for membership whether w belongs to L(G) is just a “yes” or “no” answer • However the syntax analyzer – Must generate the parse tree – Handle errors gracefully if string is not in the language • Form of the grammar is important – Many grammars generate the same language – Tools are sensitive to the grammar 7

- 128. What syntax analysis cannot do! • To check whether variables are of types on which operations are allowed • To check whether a variable has been declared before use • To check whether a variable has been initialized • These issues will be handled in semantic analysis 8

- 129. Derivation • If there is a production A → α then we say that A derives α, denoted by A ⇒ α • αAβ ⇒ αγβ if A → γ is a production • If α1 ⇒ α2 ⇒ … ⇒ αn then α1 ⇒+ αn • Given a grammar G and a string w of terminals in L(G) we can write S ⇒+ w • If S ⇒* α where α is a string of terminals and non terminals of G then we say that α is a sentential form of G

- 130. Derivation … • If in a sentential form only the leftmost non terminal is replaced then it becomes a leftmost derivation • Every leftmost step can be written as wAγ ⇒lm wδγ where w is a string of terminals and A → δ is a production • Similarly, right most derivation can be defined • An ambiguous grammar is one that produces more than one leftmost (rightmost) derivation of a sentence

- 131. Parse tree • shows how the start symbol of a grammar derives a string in the language • root is labeled by the start symbol • leaf nodes are labeled by tokens • Each internal node is labeled by a non terminal • if A is the label of a node and x1, x2, … xn are labels of the children of that node then A → x1 x2 … xn is a production in the grammar

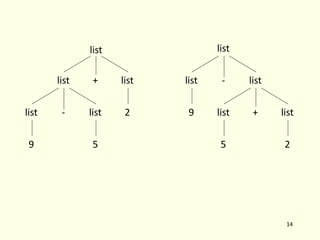

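- 132. Example: parse tree for 9-5+2 (figure) • The root list has children list, + and digit (2) • Its left child list has children list, - and digit (5) • The innermost list has the single child digit (9)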

- 133. Ambiguity • A grammar can have more than one parse tree for a string • Consider grammar list → list + list | list – list | 0 | 1 | … | 9 • String 9-5+2 has two parse trees

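- 134. (Figure: the two parse trees for 9-5+2. In one, the root is list + list and the left subtree derives 9 - 5, which groups the string as (9-5)+2. In the other, the root is list - list and the right subtree derives 5 + 2, which groups the string as 9-(5+2).)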

- 135. Ambiguity … • Ambiguity is problematic because meaning of the programs can be incorrect • Ambiguity can be handled in several ways – Enforce associativity and precedence – Rewrite the grammar (cleanest way) • There is no algorithm to convert automatically any ambiguous grammar to an unambiguous grammar accepting the same language • Worse, there are inherently ambiguous languages! 15
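
A short sketch of why ambiguity changes the meaning of a program: the two parse trees of 9-5+2 under the ambiguous list grammar evaluate to different values. The tuple encoding of trees and the function name `evaluate` are assumptions made for this illustration.

```python
# The two parse trees for 9-5+2 under list -> list + list | list - list | digit,
# written as nested tuples (operator, left subtree, right subtree).
left_grouped = ("+", ("-", 9, 5), 2)     # (9-5)+2
right_grouped = ("-", 9, ("+", 5, 2))    # 9-(5+2)


def evaluate(tree):
    """Evaluate a tree bottom-up; leaves are plain digits."""
    if isinstance(tree, int):
        return tree
    op, left, right = tree
    lhs, rhs = evaluate(left), evaluate(right)
    return lhs + rhs if op == "+" else lhs - rhs


print(evaluate(left_grouped))    # 6
print(evaluate(right_grouped))   # 2
```

Only the left-grouped tree matches the usual left-associative reading of - and +, which is why the grammar has to be rewritten or associativity enforced.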

- 136. Ambiguity in Programming Lang. • Dangling else problem stmt → if expr stmt | if expr stmt else stmt • For this grammar, the string if e1 if e2 s1 else s2 has two parse trees

- 137. (Figure: the two parse trees for if e1 if e2 s1 else s2. In one, else s2 is attached to the outer if e1; in the other, else s2 is attached to the inner if e2.)

- 138. Resolving dangling else problem • General rule: match each else with the closest previous unmatched if. The grammar can be rewritten as stmt → matched-stmt | unmatched-stmt matched-stmt → if expr matched-stmt else matched-stmt | others unmatched-stmt → if expr stmt | if expr matched-stmt else unmatched-stmt

- 139. Associativity • If an operand has operators on both sides, the side on which the operator takes this operand determines the associativity of that operator • In a+b+c, b is taken by the left + • +, -, *, / are left associative • ^, = are right associative • Grammar to generate strings with right associative operators right → letter = right | letter letter → a | b | … | z

- 140. Precedence • String a+5*2 has two possible interpretations because of two different parse trees corresponding to (a+5)*2 and a+(5*2) • Precedence determines the correct interpretation. • Next, an example of how precedence rules are encoded in a grammar 20

- 141. Precedence/Associativity in the Grammar for Arithmetic Expressions • Ambiguous: E → E + E | E * E | (E) | num | id (consider 3 + 2 + 5 and 3 + 2 * 5) • Unambiguous, with precedence and associativity rules honored: E → E + T | T T → T * F | F F → ( E ) | num | id

- 142. Parsing • Process of determining whether a string can be generated by a grammar • Parsing falls in two categories: – Top-down parsing: Construction of the parse tree starts at the root (from the start symbol) and proceeds towards leaves (token or terminals) – Bottom-up parsing: Construction of the parse tree starts from the leaf nodes (tokens or terminals of the grammar) and proceeds towards root (start symbol)

- 143. Top down Parsing • Following grammar generates types of Pascal type → simple | ↑ id | array [ simple ] of type simple → integer | char | num dotdot num

- 144. Example … • Construction of a parse tree is done by starting at the root, labeled by the start symbol • Repeat the following two steps – at a node labeled with non terminal A select one of the productions of A and construct children nodes (Which production?) – find the next node at which a subtree is to be constructed (Which node?)

- 145. • Parse array [ num dotdot num ] of integer • Suppose the start symbol type is expanded using the rule type → simple • Parsing cannot proceed, as non terminal "simple" never generates a string beginning with token "array". Therefore, this choice requires back-tracking. • Back-tracking is not desirable; therefore, take the help of a "look-ahead" token. The current token is treated as the look-ahead token. (This restricts the class of grammars.)

- 146. Parsing array [ num dotdot num ] of integer with a look-ahead token (figure: the parse tree grows as the look-ahead advances) • Start symbol type: expand using the rule type → array [ simple ] of type • Left most non terminal simple: expand using the rule simple → num dotdot num • Left most non terminal type: expand using the rule type → simple • Left most non terminal simple: expand using the rule simple → integer • All the tokens exhausted: parsing completed

- 147. Recursive descent parsing • First set: Let there be a production A → α; then First(α) is the set of tokens that appear as the first token in the strings generated from α • For example: First(simple) = {integer, char, num} First(num dotdot num) = {num}

- 148. Define a procedure for each non terminal procedure type; if lookahead in {integer, char, num} then simple else if lookahead = ↑ then begin match(↑); match(id) end else if lookahead = array then begin match(array); match([); simple; match(]); match(of); type end else error;

- 149. procedure simple; if lookahead = integer then match(integer) else if lookahead = char then match(char) else if lookahead = num then begin match(num); match(dotdot); match(num) end else error; procedure match(t:token); if lookahead = t then lookahead = next token else error; 7
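
The two procedures above translate almost line for line into a runnable sketch. The class name `TypeParser`, the helper `parse_type`, the end marker "$", and the spelling "^" for the pointer token are assumptions of this sketch; tokens are plain strings.

```python
class ParseError(Exception):
    pass


class TypeParser:
    def __init__(self, tokens):
        self.tokens = list(tokens) + ["$"]   # "$" marks end of input (assumed)
        self.pos = 0

    @property
    def lookahead(self):
        return self.tokens[self.pos]

    def match(self, t):
        if self.lookahead != t:
            raise ParseError(f"expected {t!r}, found {self.lookahead!r}")
        self.pos += 1

    def type_(self):
        # choose the production from the lookahead token alone
        if self.lookahead in ("integer", "char", "num"):
            self.simple()
        elif self.lookahead == "^":
            self.match("^")
            self.match("id")
        elif self.lookahead == "array":
            self.match("array")
            self.match("[")
            self.simple()
            self.match("]")
            self.match("of")
            self.type_()
        else:
            raise ParseError(f"unexpected token {self.lookahead!r}")

    def simple(self):
        if self.lookahead in ("integer", "char"):
            self.match(self.lookahead)
        elif self.lookahead == "num":
            self.match("num")
            self.match("dotdot")
            self.match("num")
        else:
            raise ParseError(f"unexpected token {self.lookahead!r}")


def parse_type(tokens):
    parser = TypeParser(tokens)
    parser.type_()
    parser.match("$")          # all input must be consumed
    return True


print(parse_type("array [ num dotdot num ] of integer".split()))
```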

- 150. Left recursion • A top down parser with production A → A α may loop forever • From the grammar A → A α | β left recursion may be eliminated by transforming the grammar to A → β R R → α R | ε

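- 151. (Figure: two parse trees. In the parse tree corresponding to the left recursive grammar, A has children A and α repeatedly, ending in β. In the parse tree corresponding to the modified grammar, A has children β and R, and each R has children α and R, ending in ε.) Both the trees generate string βα*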

- 152. Example • Consider grammar for arithmetic expressions E → E + T | T T → T * F | F F → ( E ) | id • After removal of left recursion the grammar becomes E → T E' E' → + T E' | ε T → F T' T' → * F T' | ε F → ( E ) | id

- 153. Removal of left recursion In general A → Aα1 | Aα2 | … | Aαm | β1 | β2 | … | βn transforms to A → β1A' | β2A' | … | βnA' A' → α1A' | α2A' | … | αmA' | ε
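
The general transformation can be sketched as a small function. The representation (each right-hand side is a list of symbols, with an empty list for ε) and the function name are assumptions of this sketch; it handles only immediate left recursion for a single non terminal.

```python
def remove_immediate_left_recursion(nonterminal, alternatives):
    """Split alternatives into A -> A alpha_i and A -> beta_j, then rewrite.

    Returns a dict mapping each non terminal to its new alternatives.
    """
    new_nt = nonterminal + "'"
    alphas = [alt[1:] for alt in alternatives if alt and alt[0] == nonterminal]
    betas = [alt for alt in alternatives if not alt or alt[0] != nonterminal]
    if not alphas:
        return {nonterminal: alternatives}       # nothing to do
    return {
        nonterminal: [beta + [new_nt] for beta in betas],
        new_nt: [alpha + [new_nt] for alpha in alphas] + [[]],   # [] is epsilon
    }


# E -> E + T | T
print(remove_immediate_left_recursion("E", [["E", "+", "T"], ["T"]]))
```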

- 154. Left recursion hidden due to many productions • Left recursion may also be introduced by two or more grammar rules. For example: S → Aa | b A → Ac | Sd | ε there is a left recursion because S ⇒ Aa ⇒ Sda • In such cases, left recursion is removed systematically – Starting from the first rule and replacing all the occurrences of the first non terminal symbol – Removing left recursion from the modified grammar

- 155. Removal of left recursion due to many productions … • After the first step (substitute S by its rhs in the rules) the grammar becomes S → Aa | b A → Ac | Aad | bd | ε • After the second step (removal of left recursion) the grammar becomes S → Aa | b A → bdA' | A' A' → cA' | adA' | ε

- 156. Left factoring • In top-down parsing, when it is not clear which production to choose for expansion of a symbol, defer the decision till we have seen enough input. In general, if A → αβ1 | αβ2, defer the decision by expanding A to αA'; we can then expand A' to β1 or β2 • Therefore A → α β1 | α β2 transforms to A → αA' A' → β1 | β2

- 157. Dangling else problem again Dangling else problem can be handled by left factoring stmt → if expr then stmt else stmt | if expr then stmt can be transformed to stmt → if expr then stmt S' S' → else stmt | ε

- 158. Predictive parsers • A non recursive top down parsing method • Parser “predicts” which production to use • It removes backtracking by fixing one production for every non-terminal and input token(s) • Predictive parsers accept LL(k) languages – First L stands for left to right scan of input – Second L stands for leftmost derivation – k stands for number of lookahead token • In practice LL(1) is used 16

- 159. Predictive parsing • Predictive parser can be implemented by maintaining an external stack (figure: the parser reads the input, uses the stack and the parse table, and produces the output) • Parse table is a two dimensional array M[X,a] where "X" is a non terminal and "a" is a terminal of the grammar

- 160. Example • Consider the grammar E → T E' E' → + T E' | ε T → F T' T' → * F T' | ε F → ( E ) | id

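- 161. Parse table for the grammar

  | | id | + | * | ( | ) | $ |
  |---|---|---|---|---|---|---|
  | E | E → TE' | | | E → TE' | | |
  | E' | | E' → +TE' | | | E' → ε | E' → ε |
  | T | T → FT' | | | T → FT' | | |
  | T' | | T' → ε | T' → *FT' | | T' → ε | T' → ε |
  | F | F → id | | | F → (E) | | |

  Blank entries are error states. For example, E cannot derive a string starting with '+'.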

- 162. Parsing algorithm • The parser considers 'X' the symbol on top of stack, and 'a' the current input symbol • These two symbols determine the action to be taken by the parser • Assume that '$' is a special token that is at the bottom of the stack and terminates the input string if X = a = $ then halt if X = a ≠ $ then pop(X) and ip++ if X is a non terminal then if M[X,a] = {X → UVW} then begin pop(X); push(W,V,U) end else error

- 163. Example

  | Stack | Input | Action |
  |---|---|---|
  | $E | id + id * id $ | expand by E → TE' |
  | $E'T | id + id * id $ | expand by T → FT' |
  | $E'T'F | id + id * id $ | expand by F → id |
  | $E'T'id | id + id * id $ | pop id and ip++ |
  | $E'T' | + id * id $ | expand by T' → ε |
  | $E' | + id * id $ | expand by E' → +TE' |
  | $E'T+ | + id * id $ | pop + and ip++ |
  | $E'T | id * id $ | expand by T → FT' |

- 164. Example …

  | Stack | Input | Action |
  |---|---|---|
  | $E'T'F | id * id $ | expand by F → id |
  | $E'T'id | id * id $ | pop id and ip++ |
  | $E'T' | * id $ | expand by T' → *FT' |
  | $E'T'F* | * id $ | pop * and ip++ |
  | $E'T'F | id $ | expand by F → id |
  | $E'T'id | id $ | pop id and ip++ |
  | $E'T' | $ | expand by T' → ε |
  | $E' | $ | expand by E' → ε |
  | $ | $ | halt |
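
The parsing algorithm and the trace above can be reproduced with a short table-driven sketch. The dictionary encoding of the parse table and the function name `ll1_parse` are assumptions of this sketch; an empty list stands for ε.

```python
# LL(1) parse table for the expression grammar: (non terminal, token) -> rhs
TABLE = {
    ("E", "id"): ["T", "E'"], ("E", "("): ["T", "E'"],
    ("E'", "+"): ["+", "T", "E'"], ("E'", ")"): [], ("E'", "$"): [],
    ("T", "id"): ["F", "T'"], ("T", "("): ["F", "T'"],
    ("T'", "+"): [], ("T'", "*"): ["*", "F", "T'"],
    ("T'", ")"): [], ("T'", "$"): [],
    ("F", "id"): ["id"], ("F", "("): ["(", "E", ")"],
}
NONTERMINALS = {"E", "E'", "T", "T'", "F"}


def ll1_parse(tokens):
    """Return the productions used, in order, or raise SyntaxError."""
    inp = list(tokens) + ["$"]
    stack = ["$", "E"]                 # top of stack is the end of the list
    ip, used = 0, []
    while True:
        X, a = stack[-1], inp[ip]
        if X == "$" and a == "$":
            return used                # halt
        if X not in NONTERMINALS:      # terminal (or "$") on top of stack
            if X != a:
                raise SyntaxError(f"expected {X!r}, found {a!r}")
            stack.pop()
            ip += 1
        else:
            rhs = TABLE.get((X, a))
            if rhs is None:            # blank entry: error state
                raise SyntaxError(f"no entry for M[{X},{a}]")
            stack.pop()
            stack.extend(reversed(rhs))    # push W, V, U for X -> UVW
            used.append((X, rhs))


for lhs, rhs in ll1_parse("id + id * id".split()):
    print(lhs, "->", " ".join(rhs) or "ε")
```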

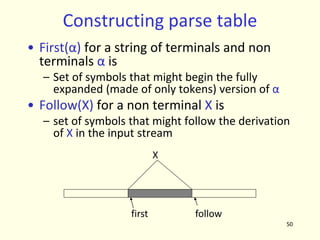

- 165. Constructing parse table • Table can be constructed if for every non terminal, every lookahead symbol can be handled by at most one production • First(α) for a string of terminals and non terminals α is – Set of symbols that might begin the fully expanded (made of only tokens) version of α • Follow(X) for a non terminal X is – set of symbols that might follow the derivation of X in the input stream (figure: first marks the symbols that can begin X, follow marks the symbols that can come right after X)

- 166. Compute first sets • If X is a terminal symbol then First(X) = {X} • If X → ε is a production then ε is in First(X) • If X is a non terminal and X → Y1Y2 … Yk is a production then if for some i, a is in First(Yi) and ε is in all of First(Yj) (such that j<i) then a is in First(X) • If ε is in First(Y1) … First(Yk) then ε is in First(X)

- 167. Example • For the expression grammar E → T E' E' → + T E' | ε T → F T' T' → * F T' | ε F → ( E ) | id First(E) = First(T) = First(F) = { (, id } First(E') = { +, ε } First(T') = { *, ε }

- 168. Compute follow sets 1. Place $ in follow(S) 2. If there is a production A → αBβ then everything in first(β) (except ε) is in follow(B) 3. If there is a production A → αB then everything in follow(A) is in follow(B) 4. If there is a production A → αBβ and First(β) contains ε then everything in follow(A) is in follow(B) Since follow sets are defined in terms of follow sets last two steps have to be repeated until follow sets converge 26

- 169. Example • For the expression grammar E → T E' E' → + T E' | ε T → F T' T' → * F T' | ε F → ( E ) | id follow(E) = follow(E') = { $, ) } follow(T) = follow(T') = { $, ), + } follow(F) = { $, ), +, * }
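
The first and follow rules are fixed-point computations, which a short sketch makes explicit. The grammar encoding (a dict from non terminal to a list of right-hand sides, with an empty list for ε) and the function names are assumptions of this sketch.

```python
EPS = "ε"
GRAMMAR = {
    "E": [["T", "E'"]],
    "E'": [["+", "T", "E'"], []],
    "T": [["F", "T'"]],
    "T'": [["*", "F", "T'"], []],
    "F": [["(", "E", ")"], ["id"]],
}


def first_of_string(symbols, first):
    """First set of a string of symbols; terminals are their own first set."""
    result = set()
    for sym in symbols:
        sym_first = first[sym] if sym in first else {sym}
        result |= sym_first - {EPS}
        if EPS not in sym_first:
            return result
    result.add(EPS)                    # every symbol can vanish
    return result


def compute_first(grammar):
    first = {nt: set() for nt in grammar}
    changed = True
    while changed:                     # repeat until the sets converge
        changed = False
        for nt, alternatives in grammar.items():
            for alt in alternatives:
                new = first_of_string(alt, first)
                if not new <= first[nt]:
                    first[nt] |= new
                    changed = True
    return first


def compute_follow(grammar, first, start):
    follow = {nt: set() for nt in grammar}
    follow[start].add("$")
    changed = True
    while changed:
        changed = False
        for A, alternatives in grammar.items():
            for alt in alternatives:
                for i, B in enumerate(alt):
                    if B not in grammar:
                        continue       # follow is defined for non terminals only
                    rest = first_of_string(alt[i + 1:], first)
                    new = rest - {EPS}
                    if EPS in rest:    # beta is empty or can derive epsilon
                        new |= follow[A]
                    if not new <= follow[B]:
                        follow[B] |= new
                        changed = True
    return follow


FIRST = compute_first(GRAMMAR)
FOLLOW = compute_follow(GRAMMAR, FIRST, "E")
print(FIRST)
print(FOLLOW)
```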

- 170. Construction of parse table • for each production A → α do – for each terminal 'a' in first(α) M[A,a] = A → α – If ε is in first(α) M[A,b] = A → α for each terminal b in follow(A) – If ε is in first(α) and $ is in follow(A) M[A,$] = A → α • A grammar whose parse table has no multiple entries is called LL(1)
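
A sketch of the table construction for the expression grammar follows. To keep it short, the first and follow sets are written out by hand from the earlier example slides rather than computed; the names `PRODUCTIONS`, `first_of` and `build_table` are assumptions of this sketch.

```python
EPS = "ε"
PRODUCTIONS = [
    ("E", ["T", "E'"]),
    ("E'", ["+", "T", "E'"]), ("E'", []),
    ("T", ["F", "T'"]),
    ("T'", ["*", "F", "T'"]), ("T'", []),
    ("F", ["(", "E", ")"]), ("F", ["id"]),
]
FIRST = {"E": {"(", "id"}, "E'": {"+", EPS}, "T": {"(", "id"},
         "T'": {"*", EPS}, "F": {"(", "id"}}
FOLLOW = {"E": {"$", ")"}, "E'": {"$", ")"}, "T": {"$", ")", "+"},
          "T'": {"$", ")", "+"}, "F": {"$", ")", "+", "*"}}


def first_of(symbols):
    """First set of a right-hand side, using the hand-written FIRST sets."""
    out = set()
    for sym in symbols:
        f = FIRST.get(sym, {sym})      # a terminal is its own first set
        out |= f - {EPS}
        if EPS not in f:
            return out
    out.add(EPS)
    return out


def build_table():
    """Map (non terminal, terminal) to the list of applicable right-hand sides."""
    table = {}
    for lhs, rhs in PRODUCTIONS:
        f = first_of(rhs)
        targets = f - {EPS}
        if EPS in f:
            targets |= FOLLOW[lhs]     # covers "$" when it is in follow(lhs)
        for a in targets:
            table.setdefault((lhs, a), []).append(rhs)
    return table


table = build_table()
is_ll1 = all(len(entries) == 1 for entries in table.values())
print(len(table), is_ll1)
```

Keeping a list per cell makes the LL(1) test direct: the grammar is LL(1) exactly when no cell holds more than one production.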

- 171. Practice Assignment • Construct LL(1) parse table for the expression grammar bexpr → bexpr or bterm | bterm bterm → bterm and bfactor | bfactor bfactor → not bfactor | ( bexpr ) | true | false • Steps to be followed – Remove left recursion – Compute first sets – Compute follow sets – Construct the parse table

- 172. Error handling • Stop at the first error and print a message – Compiler writer friendly – But not user friendly • Every reasonable compiler must recover from errors and identify as many errors as possible • However, multiple error messages due to a single fault must be avoided • Error recovery methods – Panic mode – Phrase level recovery – Error productions – Global correction 30

- 173. Panic mode • Simplest and the most popular method • Most tools provide for specifying panic mode recovery in the grammar • When an error is detected – Discard tokens one at a time until a set of tokens is found whose role is clear – Skip to the next token that can be placed reliably in the parse tree 31

- 174. Panic mode … • Consider following code begin a = b + c; x = p r ; h = x < 0; end; • The second expression has syntax error • Panic mode recovery for begin-end block skip ahead to next ‘;’ and try to parse the next expression • It discards one expression and tries to continue parsing • May fail if no further ‘;’ is found 32

- 175. Phrase level recovery • Make local correction to the input • Works only in limited situations – A common programming error which is easily detected – For example insert a ";" after closing "}" of a class definition • Does not work very well!

- 176. Error productions • Add erroneous constructs as productions in the grammar • Works only for most common mistakes which can be easily identified • Essentially makes common errors as part of the grammar • Complicates the grammar and does not work very well 34

- 177. Global corrections • Considering the program as a whole find a correct “nearby” program • Nearness may be measured using certain metric • PL/C compiler implemented this scheme: anything could be compiled! • It is complicated and not a very good idea! 35

- 178. Error Recovery in LL(1) parser • Error occurs when a parse table entry M[A,a] is empty • Skip symbols in the input until a token in a selected set (synch) appears • Place symbols in follow(A) in synch set. Skip tokens until an element in follow(A) is seen. Pop(A) and continue parsing • Add symbol in first(A) in synch set. Then it may be possible to resume parsing according to A if a symbol in first(A) appears in input. 36

- 179. Practice Assignment • Reading assignment: Read about error recovery in LL(1) parsers • Assignment to be submitted: – introduce synch symbols (using both follow and first sets) in the parse table created for the boolean expression grammar in the previous assignment – Parse “not (true and or false)” and show how error recovery works 37

- 180. Bottom up parsing • Construct a parse tree for an input string beginning at leaves and going towards root OR • Reduce a string w of input to start symbol of grammar • Consider a grammar S → aABe A → Abc | b B → d and the reduction of a string: a b b c d e, a A b c d e, a A d e, a A B e, S • The sentential forms happen to be a right most derivation in the reverse order: S ⇒ a A B e ⇒ a A d e ⇒ a A b c d e ⇒ a b b c d e

- 181. Shift reduce parsing • Split string being parsed into two parts – Two parts are separated by a special character "." – Left part is a string of terminals and non terminals – Right part is a string of terminals • Initially the input is .w

- 182. 3 Shift reduce parsing … • Bottom up parsing has two actions • Shift: move terminal symbol from right string to left string if string before shift is α.pqr then string after shift is αp.qr

- 183. Shift reduce parsing … • Reduce: immediately on the left of "." identify a string same as RHS of a production and replace it by LHS if string before reduce action is αβ.pqr and A → β is a production then string after reduction is αA.pqr

- 184. Example • Assume grammar is E → E+E | E*E | id • Parse id*id+id • Assume an oracle tells you when to shift / when to reduce

  | String | Action (by oracle) |
  |---|---|
  | .id*id+id | shift |
  | id.*id+id | reduce E → id |
  | E.*id+id | shift |
  | E*.id+id | shift |
  | E*id.+id | reduce E → id |
  | E*E.+id | reduce E → E*E |
  | E.+id | shift |
  | E+.id | shift |
  | E+id. | reduce E → id |
  | E+E. | reduce E → E+E |
  | E. | ACCEPT |

- 185. 6 Shift reduce parsing … • Symbols on the left of “.” are kept on a stack – Top of the stack is at “.” – Shift pushes a terminal on the stack – Reduce pops symbols (rhs of production) and pushes a non terminal (lhs of production) onto the stack • The most important issue: when to shift and when to reduce • Reduce action should be taken only if the result can be reduced to the start symbol

- 186. Issues in bottom up parsing • How do we know which action to take – whether to shift or reduce – Which production to use for reduction? • Sometimes parser can reduce but it should not: X → ε can always be used for reduction!

- 187. Issues in bottom up parsing • Sometimes parser can reduce in different ways! • Given stack δ and input symbol a, should the parser – Shift a onto stack (making it δa) – Reduce by some production A → β assuming that stack has form αβ (making it αA) – Stack can have many combinations of αβ – How to keep track of length of β?

- 188. Handles • The basic steps of a bottom-up parser are – to identify a substring within a rightmost sentential form which matches the RHS of a rule. – when this substring is replaced by the LHS of the matching rule, it must produce the previous rightmost-sentential form. • Such a substring is called a handle

- 189. 10 Handle • A handle of a right sentential form γ is – a production rule A→ β, and – an occurrence of a sub-string β in γ such that • when the occurrence of β is replaced by A in γ, we get the previous right sentential form in a rightmost derivation of γ.

- 190. Handle Formally, if S ⇒rm* αAw ⇒rm αβw, then • β in the position following α, • and the corresponding production A → β is a handle of αβw. • The string w consists of only terminal symbols

- 191. Handle • We only want to reduce handle and not any RHS • Handle pruning: If β is a handle and A → β is a production then replace β by A • A right most derivation in reverse can be obtained by handle pruning.

- 192. 13 Handle: Observation • Only terminal symbols can appear to the right of a handle in a rightmost sentential form. • Why?

- 193. 14 Handle: Observation Is this scenario possible: • 𝛼𝛽𝛾 is the content of the stack • 𝐴 → 𝛾 is a handle • The stack content reduces to 𝛼𝛽𝐴 • Now B → 𝛽 is the handle In other words, handle is not on top, but buried inside stack Not Possible! Why?

- 194. 15 Handles … • Consider two cases of right most derivation to understand the fact that handle appears on the top of the stack 𝑆 → 𝛼𝐴𝑧 → 𝛼𝛽𝐵𝑦𝑧 → 𝛼𝛽𝛾𝑦𝑧 𝑆 → 𝛼𝐵𝑥𝐴𝑧 → 𝛼𝐵𝑥𝑦𝑧 → 𝛼𝛾𝑥𝑦𝑧

- 195. Handle always appears on the top

  Case I: 𝑆 → 𝛼𝐴𝑧 → 𝛼𝛽𝐵𝑦𝑧 → 𝛼𝛽𝛾𝑦𝑧

  | stack | input | action |
  |---|---|---|
  | αβγ | yz | reduce by B → γ |
  | αβB | yz | shift y |
  | αβBy | z | reduce by A → βBy |
  | αA | z | |

  Case II: 𝑆 → 𝛼𝐵𝑥𝐴𝑧 → 𝛼𝐵𝑥𝑦𝑧 → 𝛼𝛾𝑥𝑦𝑧

  | stack | input | action |
  |---|---|---|
  | αγ | xyz | reduce by B → γ |
  | αB | xyz | shift x |
  | αBx | yz | shift y |
  | αBxy | z | reduce A → y |
  | αBxA | z | |

- 196. 17 Shift Reduce Parsers • The general shift-reduce technique is: – if there is no handle on the stack then shift – If there is a handle then reduce • Bottom up parsing is essentially the process of detecting handles and reducing them. • Different bottom-up parsers differ in the way they detect handles.

- 197. 18 Conflicts • What happens when there is a choice –What action to take in case both shift and reduce are valid? shift-reduce conflict –Which rule to use for reduction if reduction is possible by more than one rule? reduce-reduce conflict

- 198. 19 Conflicts • Conflicts come either because of ambiguous grammars or parsing method is not powerful enough

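- 199. Shift reduce conflict • Consider the grammar E → E+E | E*E | id and the input id+id*id

  If the parser reduces with E+E on the stack:

  | stack | input | action |
  |---|---|---|
  | E+E | *id | reduce by E → E+E |
  | E | *id | shift |
  | E* | id | shift |
  | E*id | | reduce by E → id |
  | E*E | | reduce by E → E*E |
  | E | | |

  If the parser shifts with E+E on the stack:

  | stack | input | action |
  |---|---|---|
  | E+E | *id | shift |
  | E+E* | id | shift |
  | E+E*id | | reduce by E → id |
  | E+E*E | | reduce by E → E*E |
  | E+E | | reduce by E → E+E |
  | E | | |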

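- 200. Reduce reduce conflict • Consider the grammar M → R+R | R+c | R R → c and the input c+c

  If the parser reduces the second c by R → c:

  | Stack | input | action |
  |---|---|---|
  | | c+c | shift |
  | c | +c | reduce by R → c |
  | R | +c | shift |
  | R+ | c | shift |
  | R+c | | reduce by R → c |
  | R+R | | reduce by M → R+R |
  | M | | |

  If the parser reduces R+c by M → R+c:

  | Stack | input | action |
  |---|---|---|
  | | c+c | shift |
  | c | +c | reduce by R → c |
  | R | +c | shift |
  | R+ | c | shift |
  | R+c | | reduce by M → R+c |
  | M | | |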

- 201. LR parsing • Input buffer contains the input string. • Stack contains a string of the form S0X1S1X2……XnSn where each Xi is a grammar symbol and each Si is a state. • Table contains action and goto parts. • action table is indexed by state and terminal symbols. • goto table is indexed by state and non terminal symbols. (Figure: the parser driver reads the input, maintains the stack, consults the action and goto parts of the parse table, and produces the output.)

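- 202. Example • Consider a grammar and its parse table. The reduce entries use rule numbers, taking the productions in the order listed: (1) E → E + T (2) E → T (3) T → T * F (4) T → F (5) F → ( E ) (6) F → id

  | State | id | + | * | ( | ) | $ | E | T | F |
  |---|---|---|---|---|---|---|---|---|---|
  | 0 | s5 | | | s4 | | | 1 | 2 | 3 |
  | 1 | | s6 | | | | acc | | | |
  | 2 | | r2 | s7 | | r2 | r2 | | | |
  | 3 | | r4 | r4 | | r4 | r4 | | | |
  | 4 | s5 | | | s4 | | | 8 | 2 | 3 |
  | 5 | | r6 | r6 | | r6 | r6 | | | |
  | 6 | s5 | | | s4 | | | | 9 | 3 |
  | 7 | s5 | | | s4 | | | | | 10 |
  | 8 | | s6 | | | s11 | | | | |
  | 9 | | r1 | s7 | | r1 | r1 | | | |
  | 10 | | r3 | r3 | | r3 | r3 | | | |
  | 11 | | r5 | r5 | | r5 | r5 | | | |

  Columns id to $ form the action part; columns E, T and F form the goto part.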

- 203. 24 Actions in an LR (shift reduce) parser • Assume Si is top of stack and ai is current input symbol • Action [Si,ai] can have four values 1. sj: shift ai to the stack, goto state Sj 2. rk: reduce by rule number k 3. acc: Accept 4. err: Error (empty cells in the table)

- 204. Driving the LR parser Stack: S0X1S1X2…XmSm Input: aiai+1…an$ • If action[Sm,ai] = shift S then the configuration becomes Stack: S0X1S1……XmSmaiS Input: ai+1…an$ • If action[Sm,ai] = reduce A → β then the configuration becomes Stack: S0X1S1…Xm-rSm-rAS Input: aiai+1…an$ where r = |β| and S = goto[Sm-r,A]

- 205. 26 Driving the LR parser Stack: S0X1S1X2…XmSm Input: aiai+1…an$ • If action[Sm,ai] = accept Then parsing is completed. HALT • If action[Sm,ai] = error (or empty cell) Then invoke error recovery routine.

- 206. Parse id + id * id

  | Stack | Input | Action |
  |---|---|---|
  | 0 | id+id*id$ | shift 5 |
  | 0 id 5 | +id*id$ | reduce by F → id |
  | 0 F 3 | +id*id$ | reduce by T → F |
  | 0 T 2 | +id*id$ | reduce by E → T |
  | 0 E 1 | +id*id$ | shift 6 |
  | 0 E 1 + 6 | id*id$ | shift 5 |
  | 0 E 1 + 6 id 5 | *id$ | reduce by F → id |
  | 0 E 1 + 6 F 3 | *id$ | reduce by T → F |
  | 0 E 1 + 6 T 9 | *id$ | shift 7 |
  | 0 E 1 + 6 T 9 * 7 | id$ | shift 5 |
  | 0 E 1 + 6 T 9 * 7 id 5 | $ | reduce by F → id |
  | 0 E 1 + 6 T 9 * 7 F 10 | $ | reduce by T → T*F |
  | 0 E 1 + 6 T 9 | $ | reduce by E → E+T |
  | 0 E 1 | $ | ACCEPT |

- 207. 28 Configuration of a LR parser • The tuple <Stack Contents, Remaining Input> defines a configuration of a LR parser • Initially the configuration is <S0 , a0a1…an$ > • Typical final configuration on a successful parse is < S0X1Si , $>

- 208. LR parsing Algorithm Initial state: Stack: S0 Input: w$ (S is the state on top of the stack and a is the current input symbol) while (1) { if (action[S,a] = shift S') { push(a); push(S'); ip++ } else if (action[S,a] = reduce A → β) { pop (2*|β|) symbols; push(A); push (goto[S'',A]) (S'' is the state at stack top after popping symbols) } else if (action[S,a] = accept) { exit } else { error } }
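
The driver loop can be sketched with the parse table of the expression grammar E → E + T | T, T → T * F | F, F → ( E ) | id, numbering the productions 1 to 6 in that order. One deliberate difference from the slide: this sketch keeps only states on the stack, so a reduce pops |β| entries instead of 2*|β|. The dictionary encoding and the name `lr_parse` are assumptions of this sketch.

```python
# rule number -> (left-hand side, length of right-hand side)
PRODUCTIONS = {1: ("E", 3), 2: ("E", 1), 3: ("T", 3),
               4: ("T", 1), 5: ("F", 3), 6: ("F", 1)}
ACTION = {
    0: {"id": "s5", "(": "s4"},
    1: {"+": "s6", "$": "acc"},
    2: {"+": "r2", "*": "s7", ")": "r2", "$": "r2"},
    3: {"+": "r4", "*": "r4", ")": "r4", "$": "r4"},
    4: {"id": "s5", "(": "s4"},
    5: {"+": "r6", "*": "r6", ")": "r6", "$": "r6"},
    6: {"id": "s5", "(": "s4"},
    7: {"id": "s5", "(": "s4"},
    8: {"+": "s6", ")": "s11"},
    9: {"+": "r1", "*": "s7", ")": "r1", "$": "r1"},
    10: {"+": "r3", "*": "r3", ")": "r3", "$": "r3"},
    11: {"+": "r5", "*": "r5", ")": "r5", "$": "r5"},
}
GOTO = {0: {"E": 1, "T": 2, "F": 3}, 4: {"E": 8, "T": 2, "F": 3},
        6: {"T": 9, "F": 3}, 7: {"F": 10}}


def lr_parse(tokens):
    """Return the rule numbers used for reductions, or raise SyntaxError."""
    inp = list(tokens) + ["$"]
    stack = [0]                        # states only (see the note above)
    ip, reductions = 0, []
    while True:
        act = ACTION[stack[-1]].get(inp[ip])
        if act is None:                # empty cell
            raise SyntaxError(f"unexpected {inp[ip]!r} in state {stack[-1]}")
        if act == "acc":
            return reductions
        if act[0] == "s":              # shift: push the new state, advance input
            stack.append(int(act[1:]))
            ip += 1
        else:                          # reduce by rule k
            k = int(act[1:])
            lhs, length = PRODUCTIONS[k]
            del stack[len(stack) - length:]
            stack.append(GOTO[stack[-1]][lhs])
            reductions.append(k)


print(lr_parse("id + id * id".split()))
```

The printed rule numbers, read in reverse, give a rightmost derivation of the input.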

- 209. Constructing parse table Augment the grammar • G is a grammar with start symbol S • The augmented grammar G' for G has a new start symbol S' and an additional production S' → S • When the parser reduces by this rule it will stop with accept

- 210. Production to Use for Reduction • How do we know which production to apply in a given configuration • We can guess! – May require backtracking • Keep track of “ALL” possible rules that can apply at a given point in the input string – But in general, there is no upper bound on the length of the input string – Is there a bound on number of applicable rules?

- 211. Some hands on! • 𝐸′ → 𝐸 • 𝐸 → 𝐸 + 𝑇 • 𝐸 → 𝑇 • 𝑇 → 𝑇 ∗ 𝐹 • 𝑇 → 𝐹 • 𝐹 → (𝐸) • 𝐹 → 𝑖𝑑 Strings to Parse • id + id + id + id • id * id * id * id • id * id + id * id • id * (id + id) * id

- 212. 33 Parser states • Goal is to know the valid reductions at any given point • Summarize all possible stack prefixes α as a parser state • Parser state is defined by a DFA state that reads in the stack α • Accept states of DFA are unique reductions

- 213. 34 Viable prefixes • α is a viable prefix of the grammar if – ∃w such that αw is a right sentential form – <α,w> is a configuration of the parser • As long as the parser has viable prefixes on the stack no parser error has been seen • The set of viable prefixes is a regular language • We can construct an automaton that accepts viable prefixes

- 214. LR(0) items • An LR(0) item of a grammar G is a production of G with a special symbol "." at some position of the right side • Thus production A → XYZ gives four LR(0) items A → .XYZ A → X.YZ A → XY.Z A → XYZ.

- 215. LR(0) items • An item indicates how much of a production has been seen at a point in the process of parsing – Symbols on the left of "." are already on the stack – Symbols on the right of "." are expected in the input

- 216. Start state • Start state of DFA is an empty stack corresponding to S' → .S item • This means no input has been seen • The parser expects to see a string derived from S

- 217. 38 Closure of a state • Closure of a state adds items for all productions whose LHS occurs in an item in the state, just after “.” –Set of possible productions to be reduced next –Added items have “.” located at the beginning –No symbol of these items is on the stack as yet

- 218. Closure operation • Let I be a set of items for a grammar G • closure(I) is a set constructed as follows: – Every item in I is in closure (I) – If A → α.Bβ is in closure(I) and B → γ is a production then B → .γ is in closure(I) • Intuitively A → α.Bβ indicates that we expect a string derivable from Bβ in input • If B → γ is a production then we might see a string derivable from γ at this point

- 219. Example For the grammar E' → E E → E + T | T T → T * F | F F → ( E ) | id If I is { E' → .E } then closure(I) is { E' → .E, E → .E + T, E → .T, T → .T * F, T → .F, F → .id, F → .(E) }

- 220. Goto operation • Goto(I,X), where I is a set of items and X is a grammar symbol, – is the closure of the set of items A → αX.β – such that A → α.Xβ is in I • Intuitively if I is a set of items for some viable prefix α then goto(I,X) is the set of valid items for prefix αX

- 221. Goto operation If I is { E' → E. , E → E. + T } then goto(I,+) is { E → E + .T, T → .T * F, T → .F, F → .(E), F → .id }

- 222. Sets of items C : Collection of sets of LR(0) items for grammar G' C = { closure ( { S' → .S } ) } repeat for each set of items I in C for each grammar symbol X if goto (I,X) is not empty and not in C ADD goto(I,X) to C until no more additions to C
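
Closure, goto and the sets-of-items loop fit in a short sketch for the augmented expression grammar. An item is encoded as a pair (production index, dot position); this encoding and the function names are assumptions of this sketch.

```python
GRAMMAR = [
    ("E'", ("E",)),                    # production 0: augmented start rule
    ("E", ("E", "+", "T")), ("E", ("T",)),
    ("T", ("T", "*", "F")), ("T", ("F",)),
    ("F", ("(", "E", ")")), ("F", ("id",)),
]
NONTERMINALS = {lhs for lhs, _ in GRAMMAR}


def closure(items):
    """Add B -> .gamma for every item with the dot just before B."""
    result = set(items)
    work = list(items)
    while work:
        prod, dot = work.pop()
        rhs = GRAMMAR[prod][1]
        if dot < len(rhs) and rhs[dot] in NONTERMINALS:
            for i, (lhs, _) in enumerate(GRAMMAR):
                if lhs == rhs[dot] and (i, 0) not in result:
                    result.add((i, 0))
                    work.append((i, 0))
    return frozenset(result)


def goto(items, symbol):
    """Move the dot over symbol in every item that allows it, then close."""
    moved = {(p, d + 1) for p, d in items
             if d < len(GRAMMAR[p][1]) and GRAMMAR[p][1][d] == symbol}
    return closure(moved) if moved else frozenset()


def canonical_collection():
    symbols = {s for _, rhs in GRAMMAR for s in rhs}
    start = closure({(0, 0)})
    collection, work = {start}, [start]
    while work:                        # until no more additions to C
        state = work.pop()
        for X in symbols:
            target = goto(state, X)
            if target and target not in collection:
                collection.add(target)
                work.append(target)
    return collection


I0 = closure({(0, 0)})
print(len(I0), len(canonical_collection()))
```

For this grammar the sketch yields 7 items in the start state and 12 sets of items in total, matching I0 to I11.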

- 223. Example • Grammar: E' → E E → E+T | T T → T*F | F F → (E) | id • I0: closure(E' → .E) = { E' → .E, E → .E + T, E → .T, T → .T * F, T → .F, F → .(E), F → .id } • I1: goto(I0,E) = { E' → E., E → E. + T } • I2: goto(I0,T) = { E → T., T → T. * F } • I3: goto(I0,F) = { T → F. } • I4: goto(I0,( ) = { F → (.E), E → .E + T, E → .T, T → .T * F, T → .F, F → .(E), F → .id } • I5: goto(I0,id) = { F → id. }

- 224. • I6: goto(I1,+) = { E → E + .T, T → .T * F, T → .F, F → .(E), F → .id } • I7: goto(I2,*) = { T → T * .F, F → .(E), F → .id } • I8: goto(I4,E) = { F → (E.), E → E. + T }; goto(I4,T) is I2, goto(I4,F) is I3, goto(I4,( ) is I4, goto(I4,id) is I5 • I9: goto(I6,T) = { E → E + T., T → T. * F }; goto(I6,F) is I3, goto(I6,( ) is I4, goto(I6,id) is I5 • I10: goto(I7,F) = { T → T * F. }; goto(I7,( ) is I4, goto(I7,id) is I5 • I11: goto(I8,) ) = { F → (E). }; goto(I8,+) is I6, goto(I9,*) is I7

- 225. (Figure: transition diagram of the DFA over the item sets I0 to I11, showing only the transitions on the terminals +, *, (, ) and id.)

- 226. (Figure: the same DFA over the item sets I0 to I11, showing only the transitions on the non terminals E, T and F.)

- 227. (Figure: the complete DFA over the item sets I0 to I11, with the transitions on both terminals and non terminals.)

- 228. LR(0) (?) Parse Table • The information is still not sufficient to help us resolve shift-reduce conflict. For example the state: I1: E' → E. E → E. + T • We need some more information to make decisions.

- 229. Constructing parse table • First(α) for a string of terminals and non terminals α is – Set of symbols that might begin the fully expanded (made of only tokens) version of α • Follow(X) for a non terminal X is – set of symbols that might follow the derivation of X in the input stream (figure: first marks the symbols that can begin X, follow marks the symbols that can come right after X)

- 230. Compute first sets • If X is a terminal symbol then first(X) = {X} • If X → ε is a production then ε is in first(X) • If X is a non terminal and X → Y1Y2 … Yk is a production, then if for some i, a is in first(Yi) and ε is in all of first(Yj) (such that j<i) then a is in first(X) • If ε is in first(Y1) … first(Yk) then ε is in first(X) • Now generalize to a string 𝛼 of terminals and non-terminals

- 231. Example • For the expression grammar E → T E' E' → + T E' | ε T → F T' T' → * F T' | ε F → ( E ) | id First(E) = First(T) = First(F) = { (, id } First(E') = { +, ε } First(T') = { *, ε }

- 232. 53 Compute follow sets 1. Place $ in follow(S) // S is the start symbol 2. If there is a production A → αBβ then everything in first(β) (except ε) is in follow(B) 3. If there is a production A → αBβ and first(β) contains ε then everything in follow(A) is in follow(B) 4. If there is a production A → αB then everything in follow(A) is in follow(B) Last two steps have to be repeated until the follow sets converge.

- 233. 54 Example • For the expression grammar
  - E → T E'
  - E' → + T E' | ε
  - T → F T'
  - T' → * F T' | ε
  - F → ( E ) | id
  - follow(E) = follow(E') = { $, ) }
  - follow(T) = follow(T') = { $, ), + }
  - follow(F) = { $, ), +, * }
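
The follow-set rules can be sketched the same way. This is an illustrative sketch under stated assumptions: the FIRST sets are copied from the earlier example slide rather than recomputed, and the names `GRAMMAR`, `FIRST`, `first_of_string` and `compute_follow` are hypothetical.

```python
EPS, END = "ε", "$"

# Expression grammar from the slide; an empty list stands for an ε-production.
GRAMMAR = {
    "E":  [["T", "E'"]],
    "E'": [["+", "T", "E'"], []],
    "T":  [["F", "T'"]],
    "T'": [["*", "F", "T'"], []],
    "F":  [["(", "E", ")"], ["id"]],
}

# FIRST sets as listed on the first-set example slide.
FIRST = {
    "E": {"(", "id"}, "T": {"(", "id"}, "F": {"(", "id"},
    "E'": {"+", EPS}, "T'": {"*", EPS},
}

def first_of_string(symbols):
    """FIRST of a string of grammar symbols (terminals map to themselves)."""
    result = set()
    for sym in symbols:
        sym_first = FIRST.get(sym, {sym})
        result |= sym_first - {EPS}
        if EPS not in sym_first:
            return result
    result.add(EPS)
    return result

def compute_follow(grammar, start):
    follow = {nt: set() for nt in grammar}
    follow[start].add(END)                      # rule 1
    changed = True
    while changed:                              # repeat until convergence
        changed = False
        for head, productions in grammar.items():
            for rhs in productions:
                for i, sym in enumerate(rhs):
                    if sym not in grammar:      # only non terminals get follow sets
                        continue
                    beta_first = first_of_string(rhs[i + 1:])
                    new = beta_first - {EPS}    # rule 2
                    if EPS in beta_first:       # rules 3 and 4 (β empty or nullable)
                        new |= follow[head]
                    if not new <= follow[sym]:
                        follow[sym] |= new
                        changed = True
    return follow

FOLLOW = compute_follow(GRAMMAR, "E")
for nt in GRAMMAR:
    print(nt, sorted(FOLLOW[nt]))
```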

- 234. 55 Construct SLR parse table • Construct C = {I0, …, In}, the collection of sets of LR(0) items • If A → α.aβ is in Ii and goto(Ii,a) = Ij then action[i,a] = shift j • If A → α. is in Ii (with A ≠ S') then action[i,a] = reduce A → α for all a in follow(A) • If S' → S. is in Ii then action[i,$] = accept • If goto(Ii,A) = Ij then goto[i,A] = j for all non terminals A • All entries not defined are errors

- 235. 56 Notes • This method of parsing is called SLR (Simple LR) • LR parsers accept LR(k) languages – L stands for left to right scan of input – R stands for rightmost derivation (constructed in reverse) – k stands for the number of lookahead tokens • SLR is the simplest of the LR parsing methods. SLR is too weak to handle most languages! • If an SLR parse table for a grammar does not have multiple entries in any cell then the grammar is unambiguous • All SLR grammars are unambiguous • Are all unambiguous grammars SLR?

- 236. 57 Practice Assignment • Construct the SLR parse table for the following grammar: E → E + E | E - E | E * E | E / E | ( E ) | digit • Show the steps in parsing the string 9*5+(2+3*7) • Steps to be followed – Augment the grammar – Construct the sets of LR(0) items – Construct the parse table – Show the states of the parser as the given string is parsed

- 237. 58 Example • Consider the following grammar and its SLR parse table:
  - Grammar: S' → S ; S → L = R ; S → R ; L → *R ; L → id ; R → L
  - I0 = { S' → .S ; S → .L=R ; S → .R ; L → .*R ; L → .id ; R → .L }
  - I1: goto(I0,S) = { S' → S. }
  - I2: goto(I0,L) = { S → L.=R ; R → L. }
  - Assignment (not to be submitted): Construct the rest of the item sets and the parse table.

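- 238. 59 SLR parse table for the grammar

  | State | = | * | id | $ | S | L | R |
  |---|---|---|---|---|---|---|---|
  | 0 | | s4 | s5 | | 1 | 2 | 3 |
  | 1 | | | | acc | | | |
  | 2 | s6, r6 | | | r6 | | | |
  | 3 | | | | r3 | | | |
  | 4 | | s4 | s5 | | | 8 | 7 |
  | 5 | r5 | | | r5 | | | |
  | 6 | | s4 | s5 | | | 8 | 9 |
  | 7 | r4 | | | r4 | | | |
  | 8 | r6 | | | r6 | | | |
  | 9 | | | | r2 | | | |

  The table has multiple entries in action[2,=]. The productions are numbered 1: S' → S, 2: S → L = R, 3: S → R, 4: L → *R, 5: L → id, 6: R → L.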

- 239. 60 • There is both a shift and a reduce entry in action[2,=]. Therefore state 2 has a shift-reduce conflict on symbol "=". However, the grammar is not ambiguous. • Parse id=id assuming the reduce action is taken in [2,=]:

  | Stack | Input | Action |
  |---|---|---|
  | 0 | id=id$ | shift 5 |
  | 0 id 5 | =id$ | reduce by L → id |
  | 0 L 2 | =id$ | reduce by R → L |
  | 0 R 3 | =id$ | error |

- 240. 61 • If the shift action is taken in [2,=]:

  | Stack | Input | Action |
  |---|---|---|
  | 0 | id=id$ | shift 5 |
  | 0 id 5 | =id$ | reduce by L → id |
  | 0 L 2 | =id$ | shift 6 |
  | 0 L 2 = 6 | id$ | shift 5 |
  | 0 L 2 = 6 id 5 | $ | reduce by L → id |
  | 0 L 2 = 6 L 8 | $ | reduce by R → L |
  | 0 L 2 = 6 R 9 | $ | reduce by S → L=R |
  | 0 S 1 | $ | ACCEPT |
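
The trace with the shift action can be reproduced with a small table-driven driver. This is a sketch under stated assumptions: the table is transcribed from the SLR parse table slide with the conflict in action[2,=] resolved in favour of shift, production numbers follow that table (2: S → L=R, 3: S → R, 4: L → *R, 5: L → id, 6: R → L), and the names `ACTION`, `GOTO`, `PRODUCTIONS` and `parse` are hypothetical. The stack holds states only.

```python
# Production number -> (left-hand side, length of right-hand side).
PRODUCTIONS = {2: ("S", 3), 3: ("S", 1), 4: ("L", 2), 5: ("L", 1), 6: ("R", 1)}

# ACTION table; action[2,=] is resolved to "shift 6" (assumption for this sketch).
ACTION = {
    (0, "*"): ("s", 4), (0, "id"): ("s", 5),
    (1, "$"): ("acc", None),
    (2, "="): ("s", 6), (2, "$"): ("r", 6),
    (3, "$"): ("r", 3),
    (4, "*"): ("s", 4), (4, "id"): ("s", 5),
    (5, "="): ("r", 5), (5, "$"): ("r", 5),
    (6, "*"): ("s", 4), (6, "id"): ("s", 5),
    (7, "="): ("r", 4), (7, "$"): ("r", 4),
    (8, "="): ("r", 6), (8, "$"): ("r", 6),
    (9, "$"): ("r", 2),
}

GOTO = {
    (0, "S"): 1, (0, "L"): 2, (0, "R"): 3,
    (4, "L"): 8, (4, "R"): 7,
    (6, "L"): 8, (6, "R"): 9,
}

def parse(tokens):
    """Run the LR driver; return (accepted, list of actions taken)."""
    stack, pos, trace = [0], 0, []
    tokens = list(tokens) + ["$"]
    while True:
        entry = ACTION.get((stack[-1], tokens[pos]))
        if entry is None:                 # empty table cell: syntax error
            trace.append("error")
            return False, trace
        kind, arg = entry
        if kind == "s":                   # shift: push the state, consume the token
            stack.append(arg)
            pos += 1
            trace.append(f"shift {arg}")
        elif kind == "r":                 # reduce: pop |rhs| states, then follow goto
            head, length = PRODUCTIONS[arg]
            del stack[len(stack) - length:]
            stack.append(GOTO[(stack[-1], head)])
            trace.append(f"reduce {arg}")
        else:
            trace.append("accept")
            return True, trace

accepted, trace = parse(["id", "=", "id"])
print(accepted, trace)
```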

- 241. 62 Problems in SLR parsing • No sentential form of this grammar can start with R=… • However, the reduce action in action[2,=] generates a sentential form starting with R= • Therefore, the reduce action is incorrect • In the SLR parsing method, state i calls for reduction on symbol "a" by rule A → α if Ii contains [A → α.] and "a" is in follow(A) • However, when state i appears on top of the stack, the viable prefix βα on the stack may be such that βA cannot be followed by symbol "a" in any right sentential form • Thus, the reduction by the rule A → α on symbol "a" is invalid • SLR parsers cannot remember the left context

- 242. 63 Canonical LR Parsing • Carry extra information in the state so that wrong reductions by A → α will be ruled out • Redefine LR items to include a terminal symbol as a second component (the lookahead symbol) • The general form of the item becomes [A → α.β, a], which is called an LR(1) item • Item [A → α., a] calls for reduction only if the next input is a. The set of such symbols "a" will be a subset of Follow(A).

- 243. 64 Closure(I)
  - repeat
    - for each item [A → α.Bβ, a] in I
      - for each production B → γ in G' and for each terminal b in First(βa)
        - add item [B → .γ, b] to I
  - until no more additions to I

- 244. 65 Example • Consider the following grammar: S' → S ; S → CC ; C → cC | d • Compute closure(I) where I = {[S' → .S, $]}:
  - [S' → .S, $]
  - [S → .CC, $]
  - [C → .cC, c]
  - [C → .cC, d]
  - [C → .d, c]
  - [C → .d, d]
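
The closure computation for this example can be sketched with a worklist. This is a minimal sketch, not a general implementation: it assumes the grammar has no ε-productions, so First(βa) is just the FIRST set of the first symbol of βa. Items are encoded as tuples (head, right-hand side, dot position, lookahead), and the names `GRAMMAR`, `FIRST`, `first_of` and `closure` are hypothetical.

```python
GRAMMAR = {
    "S'": [("S",)],
    "S":  [("C", "C")],
    "C":  [("c", "C"), ("d",)],
}

# FIRST sets of the non terminals (worked out by hand for this small grammar).
FIRST = {"S": {"c", "d"}, "C": {"c", "d"}}

def first_of(symbols):
    """FIRST of a non-empty symbol string; valid here because nothing derives ε."""
    head = symbols[0]
    return FIRST.get(head, {head})

def closure(items):
    """LR(1) closure of a set of items (head, rhs, dot, lookahead)."""
    items = set(items)
    work = list(items)
    while work:
        head, rhs, dot, look = work.pop()
        if dot == len(rhs) or rhs[dot] not in GRAMMAR:
            continue                      # dot at the end or before a terminal
        b_symbol = rhs[dot]
        beta_a = rhs[dot + 1:] + (look,)  # the string βa from the slide
        for gamma in GRAMMAR[b_symbol]:
            for b in first_of(beta_a):
                item = (b_symbol, gamma, 0, b)
                if item not in items:
                    items.add(item)
                    work.append(item)
    return items

I0 = closure({("S'", ("S",), 0, "$")})
for head, rhs, dot, look in sorted(I0):
    print(f"[{head} -> {' '.join(rhs[:dot])}.{' '.join(rhs[dot:])}, {look}]")
```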

- 245. 66 Example • Construct the sets of LR(1) items for the grammar on the previous slide
  - I0 = { [S' → .S, $] ; [S → .CC, $] ; [C → .cC, c/d] ; [C → .d, c/d] }
  - I1: goto(I0,S) = { [S' → S., $] }
  - I2: goto(I0,C) = { [S → C.C, $] ; [C → .cC, $] ; [C → .d, $] }
  - I3: goto(I0,c) = { [C → c.C, c/d] ; [C → .cC, c/d] ; [C → .d, c/d] }
  - I4: goto(I0,d) = { [C → d., c/d] }
  - I5: goto(I2,C) = { [S → CC., $] }
  - I6: goto(I2,c) = { [C → c.C, $] ; [C → .cC, $] ; [C → .d, $] }
  - I7: goto(I2,d) = { [C → d., $] }
  - I8: goto(I3,C) = { [C → cC., c/d] }
  - I9: goto(I6,C) = { [C → cC., $] }

- 246. 67 Construction of Canonical LR parse table • Construct C = {I0, …, In}, the sets of LR(1) items • If [A → α.aβ, b] is in Ii and goto(Ii,a) = Ij then action[i,a] = shift j • If [A → α., a] is in Ii (with A ≠ S') then action[i,a] = reduce A → α • If [S' → S., $] is in Ii then action[i,$] = accept • If goto(Ii,A) = Ij then goto[i,A] = j for all non terminals A

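- 247. 68 Parse table

  | State | c | d | $ | S | C |
  |---|---|---|---|---|---|
  | 0 | s3 | s4 | | 1 | 2 |
  | 1 | | | acc | | |
  | 2 | s6 | s7 | | | 5 |
  | 3 | s3 | s4 | | | 8 |
  | 4 | r3 | r3 | | | |
  | 5 | | | r1 | | |
  | 6 | s6 | s7 | | | 9 |
  | 7 | | | r3 | | |
  | 8 | r2 | r2 | | | |
  | 9 | | | r2 | | |

  The productions are numbered 1: S → CC, 2: C → cC, 3: C → d.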

- 248. 69 Notes on Canonical LR Parser • Consider the grammar discussed in the previous two slides. The language specified by the grammar is c*dc*d. • When reading input cc…dcc…d the parser shifts the c's onto the stack and then goes into state 4 after reading d. It then calls for reduction by C → d if the following symbol is c or d. • If $ follows the first d then the input string is c*d, which is not in the language; the parser declares an error • On an error, a canonical LR parser never makes a wrong shift/reduce move. It immediately declares an error • Problem: the canonical LR parse table has a large number of states

- 249. 70 LALR Parse table • Look Ahead LR parsers • Consider a pair of similar looking states (same kernel and different lookaheads) in the set of LR(1) items: I4 = { [C → d., c/d] } and I7 = { [C → d., $] } • Replace I4 and I7 by a new state I47 consisting of [C → d., c/d/$] • Similarly I3 & I6 and I8 & I9 form pairs • Merge LR(1) items having the same core

- 250. 71 Construct LALR parse table • Construct C = {I0, …, In}, the set of LR(1) items • For each core present in the LR(1) items, find all sets having the same core and replace these sets by their union • Let C' = {J0, …, Jm} be the resulting set of items • Construct the action table as was done earlier • Let J = I1 ∪ I2 ∪ … ∪ Ik. Since I1, I2, …, Ik have the same core, goto(I1,X), …, goto(Ik,X) also have the same core. Let K = goto(I1,X) ∪ goto(I2,X) ∪ … ∪ goto(Ik,X); then goto(J,X) = K

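- 251. 72 LALR parse table …

  | State | c | d | $ | S | C |
  |---|---|---|---|---|---|
  | 0 | s36 | s47 | | 1 | 2 |
  | 1 | | | acc | | |
  | 2 | s36 | s47 | | | 5 |
  | 36 | s36 | s47 | | | 89 |
  | 47 | r3 | r3 | r3 | | |
  | 5 | | | r1 | | |
  | 89 | r2 | r2 | r2 | | |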
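
The merging step that yields these seven states can be sketched as grouping the LR(1) item sets by core. In this sketch the item sets I0 to I9 for the grammar S → CC, C → cC | d are written out by hand as (LR(0) item, lookahead) pairs; the encoding and the names `LR1_STATES` and `merge_by_core` are assumptions made for illustration.

```python
from collections import defaultdict

# LR(1) item sets for S' -> S, S -> CC, C -> cC | d, as (LR(0) item, lookahead).
LR1_STATES = {
    0: {("S' -> .S", "$"), ("S -> .CC", "$"),
        ("C -> .cC", "c"), ("C -> .cC", "d"), ("C -> .d", "c"), ("C -> .d", "d")},
    1: {("S' -> S.", "$")},
    2: {("S -> C.C", "$"), ("C -> .cC", "$"), ("C -> .d", "$")},
    3: {("C -> c.C", "c"), ("C -> c.C", "d"),
        ("C -> .cC", "c"), ("C -> .cC", "d"), ("C -> .d", "c"), ("C -> .d", "d")},
    4: {("C -> d.", "c"), ("C -> d.", "d")},
    5: {("S -> CC.", "$")},
    6: {("C -> c.C", "$"), ("C -> .cC", "$"), ("C -> .d", "$")},
    7: {("C -> d.", "$")},
    8: {("C -> cC.", "c"), ("C -> cC.", "d")},
    9: {("C -> cC.", "$")},
}

def merge_by_core(states):
    """Union all LR(1) item sets that share the same set of LR(0) items (core)."""
    groups = defaultdict(list)
    for number in sorted(states):
        core = frozenset(item for item, _ in states[number])
        groups[core].append(number)
    merged = {}
    for members in groups.values():
        name = "".join(str(n) for n in members)   # e.g. states 4 and 7 become "47"
        merged[name] = set().union(*(states[n] for n in members))
    return merged

LALR_STATES = merge_by_core(LR1_STATES)
print(sorted(LALR_STATES))
print(sorted(LALR_STATES["47"]))
```

The merged names match the state labels 36, 47 and 89 used in the LALR parse table.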

- 252. 73 Notes on LALR parse table • The modified parser behaves like the original except that it will reduce by C → d on erroneous inputs like ccd. The error will still be caught before any more symbols are shifted. • In general, a core is a set of LR(0) items, and an LR(1) grammar may produce more than one set of items with the same core. • Merging items never produces shift/reduce conflicts but may produce reduce/reduce conflicts. • SLR and LALR parse tables have the same number of states.
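
The difference in error detection can be observed by running the same driver over both tables on the erroneous input ccd. This is a sketch with tables transcribed by hand from the canonical LR and LALR parse table slides; the merged states 36, 47 and 89 are encoded as integers, and the names `run`, `LR1_ACTION`, `LALR_ACTION` and so on are hypothetical.

```python
# Production number -> (left-hand side, length of right-hand side).
PRODUCTIONS = {1: ("S", 2), 2: ("C", 2), 3: ("C", 1)}

LR1_ACTION = {
    (0, "c"): ("s", 3), (0, "d"): ("s", 4), (1, "$"): ("acc", None),
    (2, "c"): ("s", 6), (2, "d"): ("s", 7),
    (3, "c"): ("s", 3), (3, "d"): ("s", 4),
    (4, "c"): ("r", 3), (4, "d"): ("r", 3),
    (5, "$"): ("r", 1),
    (6, "c"): ("s", 6), (6, "d"): ("s", 7),
    (7, "$"): ("r", 3),
    (8, "c"): ("r", 2), (8, "d"): ("r", 2),
    (9, "$"): ("r", 2),
}
LR1_GOTO = {(0, "S"): 1, (0, "C"): 2, (2, "C"): 5, (3, "C"): 8, (6, "C"): 9}

LALR_ACTION = {
    (0, "c"): ("s", 36), (0, "d"): ("s", 47), (1, "$"): ("acc", None),
    (2, "c"): ("s", 36), (2, "d"): ("s", 47),
    (36, "c"): ("s", 36), (36, "d"): ("s", 47),
    (47, "c"): ("r", 3), (47, "d"): ("r", 3), (47, "$"): ("r", 3),
    (5, "$"): ("r", 1),
    (89, "c"): ("r", 2), (89, "d"): ("r", 2), (89, "$"): ("r", 2),
}
LALR_GOTO = {(0, "S"): 1, (0, "C"): 2, (2, "C"): 5, (36, "C"): 89}

def run(action, goto, tokens):
    """Return (accepted, number of shifts, number of reductions)."""
    stack, pos = [0], 0
    tokens = list(tokens) + ["$"]
    shifts = reductions = 0
    while True:
        entry = action.get((stack[-1], tokens[pos]))
        if entry is None:                 # empty cell: error detected here
            return False, shifts, reductions
        kind, arg = entry
        if kind == "acc":
            return True, shifts, reductions
        if kind == "s":
            stack.append(arg)
            pos += 1
            shifts += 1
        else:
            head, length = PRODUCTIONS[arg]
            del stack[-length:]
            stack.append(goto[(stack[-1], head)])
            reductions += 1

print("LR(1):", run(LR1_ACTION, LR1_GOTO, "ccd"))
print("LALR :", run(LALR_ACTION, LALR_GOTO, "ccd"))
```

Both parsers reject ccd after the same three shifts; the LALR parser merely performs some reductions first, while the canonical LR parser reports the error with none.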

- 253. 74 Notes on LALR parse table… • Merging items may result in conflicts in LALR parsers which did not exist in LR parsers • New conflicts cannot be of the shift-reduce kind: – Assume there is a shift-reduce conflict in some state of the LALR parser with items {[X → α., a], [Y → γ.aβ, b]} – Then there must have been a state in the LR parser with the same core, containing [X → α., a] and an item [Y → γ.aβ, c] for some c, and hence the same conflict – Contradiction, because the LR parser did not have conflicts • An LALR parser can have new reduce-reduce conflicts – Assume states {[X → α., a], [Y → β., b]} and {[X → α., b], [Y → β., a]} – Merging the two states produces {[X → α., a/b], [Y → β., a/b]}

- 254. 75 Notes on LALR parse table… • LALR parsers are not built by first making canonical LR parse tables • There are direct, complicated but efficient algorithms to develop LALR parsers • Relative power of various classes – SLR(1) ≤ LALR(1) ≤ LR(1) – SLR(k) ≤ LALR(k) ≤ LR(k) – LL(k) ≤ LR(k)

- 255. 76 Error Recovery • An error is detected when an entry in the action table is found to be empty. • Panic mode error recovery can be implemented as follows: – scan down the stack until a state S with a goto on a particular nonterminal A is found. – discard zero or more input symbols until a symbol a is found that can legitimately follow A. – stack the state goto[S,A] and resume parsing. • Choice of A: Normally these are non terminals representing major program pieces such as an expression, statement or a block. For example if A is the nonterminal stmt, a might be semicolon or end.

- 256. 77 Parser Generator • Some common parser generators – YACC: Yet Another Compiler Compiler – Bison: GNU Software – ANTLR: ANother Tool for Language Recognition • Yacc/Bison source program specification (accepts LALR grammars):
  - declarations
  - %%
  - translation rules
  - %%
  - supporting C routines

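- 257. 78 Yacc and Lex schema [Figure: token specifications are fed to Lex, which produces lex.yy.c (C code for the lexical analyzer); grammar specifications are fed to Yacc, which produces y.tab.c (C code for the parser); the C compiler turns both into the object code of the parser, which reads the input program and produces an abstract syntax tree] Refer to YACC Manual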

- 258. 79 Bottom up parsing … • A more powerful parsing technique • LR grammars – more expensive than LL • Can handle left recursive grammars • Can handle virtually all the programming languages • Natural expression of programming language syntax • Automatic generation of parsers (Yacc, Bison etc.) • Detects errors as soon as possible • Allows better error recovery

- 259. Semantic Analysis • Static checking – Type checking – Control flow checking – Uniqueness checking – Name checks • Disambiguate overloaded operators • Type coercion • Error reporting 1

- 260. Beyond syntax analysis • Parser cannot catch all the program errors • There is a level of correctness that is deeper than syntax analysis • Some language features cannot be modeled using context free grammar formalism – Whether an identifier has been declared before use – This is the problem of identifying the language {wαw | w ∈ Σ*} – This language is not context free 2

- 261. Beyond syntax … • Examples – string x; int y; y = x + 3 (the use of x could be a type error) – int a, b; a = b + c (c is not declared) • An identifier may refer to different variables in different parts of the program • An identifier may be usable in one part of the program but not another 3

- 262. Compiler needs to know? • Whether a variable has been declared? • Are there variables which have not been declared? • What is the type of the variable? • Whether a variable is a scalar, an array, or a function? • What declaration of the variable does each reference use? • If an expression is type consistent? • If an array use like A[i,j,k] is consistent with the declaration? Does it have three dimensions? 4

- 263. • How many arguments does a function take? • Are all invocations of a function consistent with the declaration? • If an operator/function is overloaded, which function is being invoked? • Inheritance relationship • Classes not multiply defined • Methods in a class are not multiply defined • The exact requirements depend upon the language 5

- 264. How to answer these questions? • These issues are part of semantic analysis phase • Answers to these questions depend upon values like type information, number of parameters etc. • Compiler will have to do some computation to arrive at answers • The information required by computations may be non local in some cases 6

- 265. How to … ? • Use formal methods – Context sensitive grammars – Extended attribute grammars • Use ad-hoc techniques – Symbol table – Ad-hoc code • Something in between !!! – Use attributes – Do analysis along with parsing – Use code for attribute value computation – However, code is developed systematically 7

- 266. Why attributes ? • For lexical analysis and syntax analysis formal techniques were used. • However, we still had code in form of actions along with regular expressions and context free grammar • The attribute grammar formalism is important – However, it is very difficult to implement – But makes many points clear – Makes “ad-hoc” code more organized – Helps in doing non local computations 8

- 267. Attribute Grammar Framework • Generalization of CFG where each grammar symbol has an associated set of attributes • Values of attributes are computed by semantic rules 9

- 268. Attribute Grammar Framework • Two notations for associating semantic rules with productions • Syntax directed definition – high level specifications – hides implementation details – explicit order of evaluation is not specified • Translation scheme – indicates the order in which semantic rules are to be evaluated – allows some implementation details to be shown 10

- 269. Attribute Grammar Framework • Conceptually both: – parse input token stream – build parse tree – traverse the parse tree to evaluate the semantic rules at the parse tree nodes • Evaluation may: – save information in the symbol table – issue error messages – generate code – perform any other activity 11

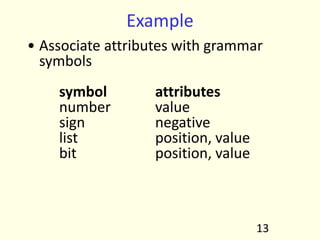

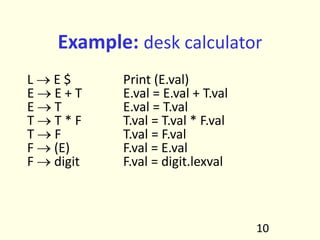

- 270. Example • Consider a grammar for signed binary numbers – number → sign list – sign → + | - – list → list bit | bit – bit → 0 | 1 • Build an attribute grammar that annotates number with the value it represents 12

- 271. Example • Associate attributes with grammar symbols

  | Symbol | Attributes |
  |---|---|
  | number | value |
  | sign | negative |
  | list | position, value |
  | bit | position, value |

- 272. Attribute rules for number and sign

  | Production | Attribute rule |
  |---|---|
  | number → sign list | list.position ← 0; if sign.negative then number.value ← -list.value else number.value ← list.value |
  | sign → + | sign.negative ← false |
  | sign → - | sign.negative ← true |

- 273. Attribute rules for list and bit

  | Production | Attribute rule |
  |---|---|
  | list → bit | bit.position ← list.position; list.value ← bit.value |
  | list0 → list1 bit | list1.position ← list0.position + 1; bit.position ← list0.position; list0.value ← list1.value + bit.value |
  | bit → 0 | bit.value ← 0 |
  | bit → 1 | bit.value ← 2^(bit.position) |

- 274. 16 [Figure: Parse tree and the dependence graph for the input -101. sign.negative = true; the three list nodes, from the root down, have positions 0, 1, 2 and values 5, 4, 4; the bits 1, 0, 1 have positions 2, 1, 0 and values 4, 0, 1; number.value = -5]
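
The attribute rules for signed binary numbers can be sketched as a recursive evaluator in which the inherited attribute position is passed down as a parameter and the synthesized attribute value is returned. This is an illustrative sketch only: the function names `eval_number`, `eval_list` and `eval_bit` are hypothetical, and the input is assumed to be a sign character followed by at least one bit.

```python
def eval_bit(bit, position):
    """bit -> 0 gives value 0; bit -> 1 gives value 2^position."""
    return 0 if bit == "0" else 2 ** position

def eval_list(bits, position):
    """Evaluate list.value given the inherited attribute list.position."""
    if len(bits) == 1:
        # list -> bit: bit.position = list.position, list.value = bit.value
        return eval_bit(bits[0], position)
    # list0 -> list1 bit:
    #   list1.position = list0.position + 1, bit.position = list0.position
    #   list0.value = list1.value + bit.value
    return eval_list(bits[:-1], position + 1) + eval_bit(bits[-1], position)

def eval_number(text):
    """number -> sign list: list.position = 0, then apply the sign."""
    sign, bits = text[0], text[1:]
    if sign not in "+-" or not bits or any(b not in "01" for b in bits):
        raise ValueError(f"not a signed binary number: {text!r}")
    negative = (sign == "-")          # sign.negative
    value = eval_list(bits, 0)        # list.value with list.position = 0
    return -value if negative else value

print(eval_number("-101"))  # -5, as in the annotated parse tree
```

Passing position as an argument and returning value mirrors the split between inherited and synthesized attributes.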

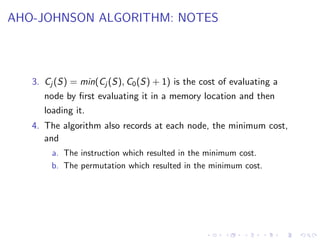

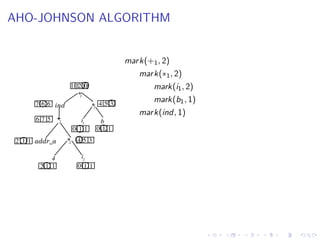

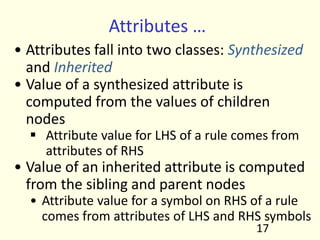

- 275. Attributes … • Attributes fall into two classes: Synthesized and Inherited • Value of a synthesized attribute is computed from the values of children nodes – Attribute value for the LHS of a rule comes from attributes of the RHS • Value of an inherited attribute is computed from the sibling and parent nodes – Attribute value for a symbol on the RHS of a rule comes from attributes of the LHS and RHS symbols 17