CS231n 2017 Lecture 12: Visualizing and Understanding

- 1. Lecture 12: Visualizing and Understanding. Fei-Fei Li & Justin Johnson & Serena Yeung Lecture 12 - May 16, 2017

- 2. Administrative: Milestones due tonight on Canvas, 11:59pm. Midterm grades released on Gradescope this week. A3 due next Friday, 5/26. HyperQuest deadline extended to Sunday 5/21, 11:59pm. Poster session is June 6.

- 3. Last Time: Lots of Computer Vision Tasks. Semantic Segmentation (GRASS, CAT, TREE, SKY; no objects, just pixels); Classification + Localization (CAT; single object); Object Detection (DOG, DOG, CAT; multiple objects); Instance Segmentation (DOG, DOG, CAT; multiple objects). These images are CC0 public domain.

- 4. What's going on inside ConvNets? Input image: 3 x 224 x 224. Class scores: 1000 numbers. What are the intermediate features looking for? Krizhevsky et al, “ImageNet Classification with Deep Convolutional Neural Networks”, NIPS 2012. Figure reproduced with permission. This image is CC0 public domain.

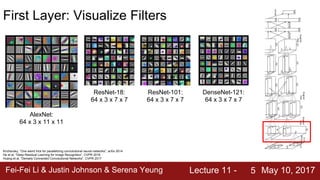

- 5. First Layer: Visualize Filters. AlexNet: 64 x 3 x 11 x 11; ResNet-18: 64 x 3 x 7 x 7; ResNet-101: 64 x 3 x 7 x 7; DenseNet-121: 64 x 3 x 7 x 7. Krizhevsky, “One weird trick for parallelizing convolutional neural networks”, arXiv 2014. He et al, “Deep Residual Learning for Image Recognition”, CVPR 2016. Huang et al, “Densely Connected Convolutional Networks”, CVPR 2017.
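
A minimal NumPy sketch of how first-layer filters, such as AlexNet's 64 x 3 x 11 x 11 weights, are commonly turned into viewable RGB tiles. It assumes the weights are already available as an array. `filters_to_images` is a hypothetical helper. Rescaling each filter independently to [0, 255] is one common display choice, not the only one.

```python
import numpy as np

def filters_to_images(weights):
    """Convert conv weights (F, 3, K, K) into F uint8 RGB images (F, K, K, 3).

    Each filter is min-max rescaled to [0, 255] on its own, so both its
    positive and negative weights remain visible.
    """
    imgs = weights.transpose(0, 2, 3, 1).astype(np.float64)  # (F, K, K, 3)
    lo = imgs.min(axis=(1, 2, 3), keepdims=True)
    hi = imgs.max(axis=(1, 2, 3), keepdims=True)
    scaled = (imgs - lo) / np.maximum(hi - lo, 1e-8)
    return (255 * scaled).round().astype(np.uint8)

rng = np.random.default_rng(0)
w = rng.standard_normal((64, 3, 11, 11))  # random stand-in for AlexNet conv1 weights
tiles = filters_to_images(w)
print(tiles.shape, tiles.dtype)  # (64, 11, 11, 3) uint8
```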

- 6. Visualize the filters/kernels (raw weights). We can visualize filters at higher layers, but they are not that interesting (these are taken from the ConvNetJS CIFAR-10 demo). Layer 1 weights: 16 x 3 x 7 x 7; layer 2 weights: 20 x 16 x 7 x 7; layer 3 weights: 20 x 20 x 7 x 7.

- 7. Last Layer. FC7 layer: 4096-dimensional feature vector for an image (layer immediately before the classifier). Run the network on many images and collect the feature vectors.

- 8. Last Layer: Nearest Neighbors. For a test image, find its L2 nearest neighbors in feature space (4096-dim vector). Recall: nearest neighbors in pixel space. Krizhevsky et al, “ImageNet Classification with Deep Convolutional Neural Networks”, NIPS 2012. Figures reproduced with permission.
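
The nearest-neighbor lookup in feature space can be sketched as follows, assuming the FC7 vectors have already been extracted into a NumPy array. `l2_nearest_neighbors` is a hypothetical helper, and the toy 2-D vectors only stand in for 4096-dimensional features.

```python
import numpy as np

def l2_nearest_neighbors(query, bank, k=5):
    """Indices of the k rows of `bank` closest to `query` in L2 distance.

    query: (D,) feature vector of the test image (D = 4096 for FC7).
    bank:  (N, D) feature vectors collected by running the network on N images.
    """
    dists = np.linalg.norm(bank - query, axis=1)  # (N,) L2 distances
    return np.argsort(dists)[:k]

# Toy 2-D "features" standing in for 4096-dim FC7 vectors.
bank = np.array([[0.0, 0.0], [1.0, 0.0], [5.0, 5.0], [0.9, 0.1]])
print(l2_nearest_neighbors(np.array([1.0, 0.0]), bank, k=2))  # [1 3]
```

The same function applied to raw pixel vectors gives the pixel-space baseline the slide recalls.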

- 9. Last Layer: Dimensionality Reduction. Visualize the “space” of FC7 feature vectors by reducing the dimensionality of the vectors from 4096 to 2 dimensions. Simple algorithm: Principal Component Analysis (PCA). More complex: t-SNE. Van der Maaten and Hinton, “Visualizing Data using t-SNE”, JMLR 2008. Figure copyright Laurens van der Maaten and Geoff Hinton, 2008. Reproduced with permission.
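
The "simple algorithm" can be sketched in a few lines of NumPy via the SVD of the centered feature matrix. This assumes features are stacked as an (N, 4096) array; the random data below is only a placeholder for real FC7 vectors, and `pca_2d` is a hypothetical name.

```python
import numpy as np

def pca_2d(features):
    """Project (N, D) feature vectors onto their top-2 principal components."""
    centered = features - features.mean(axis=0)
    # Rows of vt are principal directions, sorted by decreasing singular value.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:2].T  # (N, 2) coordinates for plotting

rng = np.random.default_rng(0)
feats = rng.standard_normal((100, 4096))  # placeholder for 100 FC7 vectors
coords = pca_2d(feats)
print(coords.shape)  # (100, 2)
```

For t-SNE, a library implementation such as scikit-learn's `sklearn.manifold.TSNE` is the usual route rather than a hand-written version.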

- 10. Last Layer: Dimensionality Reduction. Van der Maaten and Hinton, “Visualizing Data using t-SNE”, JMLR 2008. Krizhevsky et al, “ImageNet Classification with Deep Convolutional Neural Networks”, NIPS 2012. Figure reproduced with permission. See high-resolution versions at http://cs.stanford.edu/people/karpathy/cnnembed/

- 11. Visualizing Activations. conv5 feature map is 128x13x13; visualize as 128 13x13 grayscale images. Yosinski et al, “Understanding Neural Networks Through Deep Visualization”, ICML DL Workshop 2014. Figure copyright Jason Yosinski, 2014. Reproduced with permission.

- 12. Maximally Activating Patches. Pick a layer and a channel; e.g. conv5 is 128 x 13 x 13, pick channel 17/128. Run many images through the network and record the values of the chosen channel. Visualize the image patches that correspond to maximal activations. Springenberg et al, “Striving for Simplicity: The All Convolutional Net”, ICLR Workshop 2015. Figure copyright Jost Tobias Springenberg, Alexey Dosovitskiy, Thomas Brox, Martin Riedmiller, 2015; reproduced with permission.
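
The "record values, then pick the maxima" step can be sketched as follows, assuming the chosen layer's activations for a batch of images have already been stored as an (N, C, H, W) array. `top_activations` is a hypothetical helper.

```python
import numpy as np

def top_activations(acts, channel, k=3):
    """Find the k largest activations of one channel across a batch.

    acts: (N, C, H, W) feature maps recorded from N images.
    Returns a list of (value, image_index, row, col) in decreasing order.
    """
    chan = acts[:, channel]                  # (N, H, W)
    flat = chan.ravel()
    order = np.argsort(flat)[::-1][:k]       # flat indices of the largest values
    n, r, c = np.unravel_index(order, chan.shape)
    return [(float(flat[i]), int(a), int(b), int(d))
            for i, a, b, d in zip(order, n, r, c)]
```

Mapping each (row, col) back to an input-image patch requires the layer's receptive field size and stride, which depend on the architecture and are not computed here.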

- 13. Occlusion Experiments. Mask part of the image before feeding it to the CNN, and draw a heatmap of the class probability at each mask location. Zeiler and Fergus, “Visualizing and Understanding Convolutional Networks”, ECCV 2014. Boat image is CC0 public domain. Elephant image is CC0 public domain. Go-Karts image is CC0 public domain.
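
A minimal sketch of the occlusion loop. The probability function is a hypothetical callable; in practice it would be a CNN forward pass followed by a softmax, read at the class of interest. The patch size, stride, and fill value are illustrative assumptions.

```python
import numpy as np

def occlusion_heatmap(image, prob_fn, patch=8, stride=8, fill=0.0):
    """Slide a square occluder over `image` and record the class probability.

    image:   (C, H, W) array.
    prob_fn: callable mapping an image to the probability of the class of interest.
    Returns a (rows, cols) array with one probability per mask position.
    """
    _, H, W = image.shape
    rows = (H - patch) // stride + 1
    cols = (W - patch) // stride + 1
    heat = np.zeros((rows, cols))
    for i in range(rows):
        for j in range(cols):
            occluded = image.copy()
            y, x = i * stride, j * stride
            occluded[:, y:y + patch, x:x + patch] = fill  # mask this region
            heat[i, j] = prob_fn(occluded)
    return heat
```

Low values in the heatmap mark regions whose occlusion hurts the prediction most.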

- 14. Saliency Maps. Example image: dog. How can we tell which pixels matter for classification? Simonyan, Vedaldi, and Zisserman, “Deep Inside Convolutional Networks: Visualising Image Classification Models and Saliency Maps”, ICLR Workshop 2014. Figures copyright Karen Simonyan, Andrea Vedaldi, and Andrew Zisserman, 2014; reproduced with permission.

- 15. Saliency Maps. Example image: dog. How can we tell which pixels matter for classification? Compute the gradient of the (unnormalized) class score with respect to the image pixels, take the absolute value, and take the max over the RGB channels. Simonyan, Vedaldi, and Zisserman, “Deep Inside Convolutional Networks: Visualising Image Classification Models and Saliency Maps”, ICLR Workshop 2014. Figures copyright Karen Simonyan, Andrea Vedaldi, and Andrew Zisserman, 2014; reproduced with permission.
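
To make the recipe concrete without a deep learning framework, here is a sketch that uses a hypothetical two-layer toy score in place of a CNN and backpropagates by hand. Only the final line (absolute value, then max over RGB) is the saliency recipe itself; the rest just produces the gradient.

```python
import numpy as np

def saliency_map(image, W1, w2):
    """Saliency map for a toy score s(I) = w2 . relu(W1 @ vec(I)).

    image: (3, H, W); W1: (hidden, 3*H*W); w2: (hidden,).
    The toy network is a hypothetical stand-in for a real CNN.
    """
    h = W1 @ image.ravel()                   # forward: hidden pre-activations
    dh = w2 * (h > 0)                        # backprop the score through the ReLU
    grad = (W1.T @ dh).reshape(image.shape)  # d(score)/d(image), shape (3, H, W)
    return np.abs(grad).max(axis=0)          # (H, W) saliency map
```

With a real network, the gradient would come from the framework's autograd applied to the unnormalized class score.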

- 16. Saliency Maps. Simonyan, Vedaldi, and Zisserman, “Deep Inside Convolutional Networks: Visualising Image Classification Models and Saliency Maps”, ICLR Workshop 2014. Figures copyright Karen Simonyan, Andrea Vedaldi, and Andrew Zisserman, 2014; reproduced with permission.

- 17. Saliency Maps: Segmentation without supervision. Use GrabCut on the saliency map. Simonyan, Vedaldi, and Zisserman, “Deep Inside Convolutional Networks: Visualising Image Classification Models and Saliency Maps”, ICLR Workshop 2014. Figures copyright Karen Simonyan, Andrea Vedaldi, and Andrew Zisserman, 2014; reproduced with permission. Rother et al, “Grabcut: Interactive foreground extraction using iterated graph cuts”, ACM TOG 2004.

- 18. Intermediate Features via (guided) backprop. Pick a single intermediate neuron, e.g. one value in the 128 x 13 x 13 conv5 feature map. Compute the gradient of the neuron value with respect to the image pixels. Zeiler and Fergus, “Visualizing and Understanding Convolutional Networks”, ECCV 2014. Springenberg et al, “Striving for Simplicity: The All Convolutional Net”, ICLR Workshop 2015.

- 19. Intermediate features via (guided) backprop. Pick a single intermediate neuron, e.g. one value in the 128 x 13 x 13 conv5 feature map. Compute the gradient of the neuron value with respect to the image pixels. Images come out nicer if you only backprop positive gradients through each ReLU (guided backprop). Zeiler and Fergus, “Visualizing and Understanding Convolutional Networks”, ECCV 2014. Springenberg et al, “Striving for Simplicity: The All Convolutional Net”, ICLR Workshop 2015. Figure copyright Jost Tobias Springenberg, Alexey Dosovitskiy, Thomas Brox, Martin Riedmiller, 2015; reproduced with permission.
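
The only difference between ordinary and guided backprop is the rule used at each ReLU. The sketch below contrasts the two backward rules on plain NumPy arrays. `x` is the ReLU's forward input, and `grad_out` is the upstream gradient.

```python
import numpy as np

def relu_backward(x, grad_out):
    """Ordinary backprop through ReLU: pass the upstream gradient where x > 0."""
    return np.where(x > 0, grad_out, 0.0)

def guided_relu_backward(x, grad_out):
    """Guided backprop: additionally block negative upstream gradients."""
    return np.where((x > 0) & (grad_out > 0), grad_out, 0.0)

x = np.array([1.0, -2.0, 3.0, 4.0])
g = np.array([-1.0, 2.0, 5.0, 0.5])
```

For these inputs, ordinary backprop keeps the -1.0 from the first entry, while guided backprop zeroes it, leaving only positive signals that flow to the image.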

- 20. Intermediate features via (guided) backprop. Zeiler and Fergus, “Visualizing and Understanding Convolutional Networks”, ECCV 2014. Springenberg et al, “Striving for Simplicity: The All Convolutional Net”, ICLR Workshop 2015. Figure copyright Jost Tobias Springenberg, Alexey Dosovitskiy, Thomas Brox, Martin Riedmiller, 2015; reproduced with permission.

- 21. Visualizing CNN features: Gradient Ascent. (Guided) backprop: find the part of an image that a neuron responds to. Gradient ascent: generate a synthetic image that maximally activates a neuron, $I^* = \arg\max_I f(I) + R(I)$, where $f(I)$ is the neuron value and $R(I)$ is a natural image regularizer.

- 22. Visualizing CNN features: Gradient Ascent. Target: the score for class c (before softmax). 1. Initialize the image to zeros. Repeat: 2. Forward the image to compute the current scores. 3. Backprop to get the gradient of the neuron value with respect to the image pixels. 4. Make a small update to the image.

- 23. Visualizing CNN features: Gradient Ascent. Simple regularizer: penalize the L2 norm of the generated image, i.e. maximize $S_c(I) - \lambda \lVert I \rVert_2^2$, where $S_c(I)$ is the score for class c (before softmax). Simonyan, Vedaldi, and Zisserman, “Deep Inside Convolutional Networks: Visualising Image Classification Models and Saliency Maps”, ICLR Workshop 2014. Figures copyright Karen Simonyan, Andrea Vedaldi, and Andrew Zisserman, 2014; reproduced with permission.
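
A sketch of the gradient ascent loop with the L2 penalty. The `score_grad` callable is a hypothetical stand-in for one forward and one backward pass through a CNN. The toy linear score used below makes the answer checkable: with $S_c(I) = \sum w \cdot I$, the regularized optimum is $w / (2\lambda)$. The step size and iteration count are illustrative assumptions.

```python
import numpy as np

def class_visualization(score_grad, shape, lam=0.1, lr=0.5, steps=200):
    """Gradient ascent on S_c(I) - lam * ||I||_2^2, starting from a zero image.

    score_grad: hypothetical callable returning dS_c/dI for an image I.
    """
    img = np.zeros(shape)                    # 1. initialize image to zeros
    for _ in range(steps):
        g = score_grad(img) - 2 * lam * img  # 2-3. gradient of the regularized score
        img = img + lr * g                   # 4. small update to the image
    return img

# Toy linear score S_c(I) = sum(w * I); its gradient is w everywhere.
w = np.array([[1.0, -2.0], [0.5, 3.0]])
img = class_visualization(lambda I: w, w.shape)
```

Without the penalty, this toy objective would grow without bound. The L2 term is what keeps the synthesized image finite.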

- 25. Visualizing CNN features: Gradient Ascent. Simple regularizer: Penalize L2 norm of generated image. Yosinski et al, “Understanding Neural Networks Through Deep Visualization”, ICML DL Workshop 2014. Figure copyright Jason Yosinski, Jeff Clune, Anh Nguyen, Thomas Fuchs, and Hod Lipson, 2014. Reproduced with permission.

- 26. Visualizing CNN features: Gradient Ascent. Better regularizer: penalize the L2 norm of the image; also, during optimization, periodically (1) Gaussian blur the image, (2) clip pixels with small values to 0, and (3) clip pixels with small gradients to 0. Yosinski et al, “Understanding Neural Networks Through Deep Visualization”, ICML DL Workshop 2014.

- 27. Visualizing CNN features: Gradient Ascent. Better regularizer: penalize the L2 norm of the image; also, during optimization, periodically (1) Gaussian blur the image, (2) clip pixels with small values to 0, and (3) clip pixels with small gradients to 0. Yosinski et al, “Understanding Neural Networks Through Deep Visualization”, ICML DL Workshop 2014. Figure copyright Jason Yosinski, Jeff Clune, Anh Nguyen, Thomas Fuchs, and Hod Lipson, 2014. Reproduced with permission.
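
A simplified sketch of the three periodic regularization steps on NumPy arrays. Two substitutions are assumptions, not the paper's exact procedure. First, the blur uses a small separable [1, 2, 1] / 4 binomial kernel as a stand-in for a true Gaussian. Second, the "small value" and "small gradient" tests are per-element percentile thresholds (`value_pct` and `grad_pct` are hypothetical parameters), which simplifies the criteria Yosinski et al. describe.

```python
import numpy as np

def blur3(img):
    """Approximate Gaussian blur of a (C, H, W) image.

    Uses the separable binomial kernel [1, 2, 1] / 4 with edge replication.
    """
    p = np.pad(img, ((0, 0), (1, 1), (1, 1)), mode="edge")
    p = 0.25 * p[:, :-2, :] + 0.5 * p[:, 1:-1, :] + 0.25 * p[:, 2:, :]  # vertical pass
    p = 0.25 * p[:, :, :-2] + 0.5 * p[:, :, 1:-1] + 0.25 * p[:, :, 2:]  # horizontal pass
    return p

def periodic_regularize(img, grad, value_pct=20.0, grad_pct=20.0):
    """One round of the periodic steps: blur, then zero small values and small gradients.

    value_pct / grad_pct: hypothetical percentile thresholds below which
    pixels (or their gradients) count as "small".
    """
    img = blur3(img)                                                   # (1) blur
    small_val = np.abs(img) < np.percentile(np.abs(img), value_pct)    # (2) small values
    small_grad = np.abs(grad) < np.percentile(np.abs(grad), grad_pct)  # (3) small gradients
    return np.where(small_val | small_grad, 0.0, img)
```

In a full loop, this would be applied every few ascent steps, with the L2 penalty included in the gradient itself.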

- 29. Visualizing CNN features: Gradient Ascent. Use the same approach to visualize intermediate features. Yosinski et al, “Understanding Neural Networks Through Deep Visualization”, ICML DL Workshop 2014. Figure copyright Jason Yosinski, Jeff Clune, Anh Nguyen, Thomas Fuchs, and Hod Lipson, 2014. Reproduced with permission.

- 31. Visualizing CNN features: Gradient Ascent. Adding “multi-faceted” visualization gives even nicer results (plus more careful regularization and center bias). Nguyen et al, “Multifaceted Feature Visualization: Uncovering the Different Types of Features Learned By Each Neuron in Deep Neural Networks”, ICML Visualization for Deep Learning Workshop 2016. Figures copyright Anh Nguyen, Jason Yosinski, and Jeff Clune, 2016; reproduced with permission.

- 32. Visualizing CNN features: Gradient Ascent. Nguyen et al, “Multifaceted Feature Visualization: Uncovering the Different Types of Features Learned By Each Neuron in Deep Neural Networks”, ICML Visualization for Deep Learning Workshop 2016. Figures copyright Anh Nguyen, Jason Yosinski, and Jeff Clune, 2016; reproduced with permission.

- 33. Visualizing CNN features: Gradient Ascent. Optimize in FC6 latent space instead of pixel space. Nguyen et al, “Synthesizing the preferred inputs for neurons in neural networks via deep generator networks,” NIPS 2016. Figure copyright Nguyen et al, 2016; reproduced with permission.

- 34. Fooling Images / Adversarial Examples. (1) Start from an arbitrary image. (2) Pick an arbitrary class. (3) Modify the image to maximize the class. (4) Repeat until the network is fooled.
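
The four steps map onto a short loop. This sketch uses a hypothetical toy linear classifier (scores = W @ x) so that the gradient of the target score is simply the row `W[target]`. With a real CNN, that gradient would come from backprop instead. The step size and iteration cap are illustrative assumptions.

```python
import numpy as np

def fool(image, W, target, lr=0.1, max_steps=1000):
    """Nudge `image` until a toy linear classifier predicts class `target`.

    W: (num_classes, D) weights of a hypothetical model scores = W @ x.
    Returns the modified image and the number of update steps taken.
    """
    x = image.astype(np.float64).ravel().copy()  # (1) start from an arbitrary image
    for step in range(max_steps):
        if np.argmax(W @ x) == target:          # (4) stop once the network is fooled
            return x.reshape(image.shape), step
        x += lr * W[target]                      # (3) ascend the target class score
    return x.reshape(image.shape), max_steps
```

For this toy model, the loop is guaranteed to terminate only for well-behaved `W`, such as orthogonal rows. With real networks, the surprising finding is how small the required change usually is.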

- 35. Fooling Images / Adversarial Examples. Boat image is CC0 public domain. Elephant image is CC0 public domain.

- 36. Fooling Images / Adversarial Examples. What is going on? Ian Goodfellow will explain. Boat image is CC0 public domain. Elephant image is CC0 public domain.

- 37. DeepDream: Amplify existing features. Rather than synthesizing an image to maximize a specific neuron, instead try to amplify the neuron activations at some layer in the network. Choose an image and a layer in a CNN; repeat: 1. Forward: compute activations at the chosen layer. 2. Set the gradient of the chosen layer equal to its activation. 3. Backward: compute the gradient on the image. 4. Update the image. Mordvintsev, Olah, and Tyka, “Inceptionism: Going Deeper into Neural Networks”, Google Research Blog. Images are licensed under CC-BY 4.0.

- 38. DeepDream: Amplify existing features. Rather than synthesizing an image to maximize a specific neuron, instead try to amplify the neuron activations at some layer in the network. Choose an image and a layer in a CNN; repeat: 1. Forward: compute activations at the chosen layer. 2. Set the gradient of the chosen layer equal to its activation. 3. Backward: compute the gradient on the image. 4. Update the image. Equivalent to: $I^* = \arg\max_I \sum_i f_i(I)^2$ (setting the gradient equal to the activation is exactly the gradient of $\frac{1}{2}\sum_i f_i(I)^2$, which has the same maximizer). Mordvintsev, Olah, and Tyka, “Inceptionism: Going Deeper into Neural Networks”, Google Research Blog. Images are licensed under CC-BY 4.0.
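
The four-step loop can be sketched with a hypothetical toy "layer" f(x) = relu(W @ x) standing in for the chosen CNN layer. The backward pass is written by hand, so the effect of step 2 is visible: feeding the activation back as the gradient performs ascent on $\frac{1}{2}\sum_i f_i^2$. The step size is an illustrative assumption.

```python
import numpy as np

def deepdream_step(x, W, lr=0.01):
    """One DeepDream update for a toy layer f(x) = relu(W @ x).

    The toy layer is a hypothetical stand-in for the chosen CNN layer.
    """
    h = W @ x
    act = np.maximum(h, 0.0)       # 1. forward: activations at the chosen layer
    d_act = act                    # 2. gradient of the layer := its activation
    dx = W.T @ (d_act * (h > 0))   # 3. backward: through the ReLU and W to the image
    return x + lr * dx             # 4. update the image

W = np.array([[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]])
x = np.array([1.0, 2.0])
```

Each step increases the toy objective 0.5 * sum(relu(W @ x) ** 2), which is the "amplify what is already there" behavior.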

- 39. DeepDream: Amplify existing features. The code is very simple, but it uses a couple of tricks. (Code is licensed under Apache 2.0.)

- 40. DeepDream: Amplify existing features. The code is very simple, but it uses a couple of tricks: jitter the image. (Code is licensed under Apache 2.0.)

- 41. DeepDream: Amplify existing features. The code is very simple, but it uses a couple of tricks: jitter the image; L1-normalize the gradients. (Code is licensed under Apache 2.0.)

- 42. DeepDream: Amplify existing features. The code is very simple, but it uses a couple of tricks: jitter the image; L1-normalize the gradients; clip the pixel values. It also uses multiscale processing for a fractal effect (not shown). (Code is licensed under Apache 2.0.)
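
A framework-free sketch of the three tricks, not the original Apache-licensed code. `grad_fn` is a hypothetical callable that returns the image gradient (forward pass, set the layer gradient to its activation, backward pass). This version assumes pixels in [0, 255], whereas the original clips relative to the dataset mean. The default step size and jitter are chosen for illustration.

```python
import numpy as np

def deepdream_tricks_step(img, grad_fn, step_size=1.5, jitter=32, rng=None):
    """One DeepDream step using jitter, an L1-normalized step, and clipping.

    img:     (C, H, W) float image with values in [0, 255].
    grad_fn: hypothetical callable returning d(objective)/d(img).
    """
    rng = np.random.default_rng() if rng is None else rng
    ox, oy = rng.integers(-jitter, jitter + 1, size=2)
    img = np.roll(img, (oy, ox), axis=(1, 2))              # jitter: random circular shift
    g = grad_fn(img)
    img = img + step_size / (np.abs(g).mean() + 1e-8) * g  # L1-normalized gradient step
    img = np.roll(img, (-oy, -ox), axis=(1, 2))            # undo the shift
    return np.clip(img, 0.0, 255.0)                        # keep a valid pixel range
```

Normalizing by the mean absolute gradient makes the step size roughly independent of the layer's activation scale. The random shift discourages high-frequency artifacts that are tied to fixed pixel positions.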

- 43. Sky image is licensed under CC-BY SA 3.0

- 44. Image is licensed under CC-BY 4.0

- 45. Image is licensed under CC-BY 4.0

- 46. Image is licensed under CC-BY 3.0

- 47. Image is licensed under CC-BY 3.0

- 48. Image is licensed under CC-BY 4.0

- 49. Feature Inversion. Given a CNN feature vector $\Phi_0$ for an image, find a new image $x^*$ that (1) matches the given feature vector and (2) “looks natural” (image prior regularization): $x^* = \arg\min_{x} \lVert \Phi(x) - \Phi_0 \rVert^2 + \lambda \mathcal{R}_{V^\beta}(x)$, where $\Phi(x)$ are the features of the new image. The Total Variation regularizer $\mathcal{R}_{V^\beta}(x) = \sum_{i,j} \left( (x_{i,j+1} - x_{ij})^2 + (x_{i+1,j} - x_{ij})^2 \right)^{\beta/2}$ encourages spatial smoothness. Mahendran and Vedaldi, “Understanding Deep Image Representations by Inverting Them”, CVPR 2015.
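
The objective can be written directly in NumPy for a single-channel image. `phi` is a hypothetical feature extractor (a real one would be a CNN truncated at some layer), and `lam` and `beta` are illustrative values. Summing only over positions where both neighbors exist is one common way to discretize the TV sum at the borders.

```python
import numpy as np

def tv_regularizer(x, beta=2.0):
    """Total variation R_{V^beta}(x) for an (H, W) image.

    Sums ((x[i, j+1] - x[i, j])**2 + (x[i+1, j] - x[i, j])**2) ** (beta / 2)
    over positions where both neighbors exist.
    """
    dx = x[:-1, 1:] - x[:-1, :-1]  # horizontal differences x_{i,j+1} - x_{ij}
    dy = x[1:, :-1] - x[:-1, :-1]  # vertical differences x_{i+1,j} - x_{ij}
    return np.sum((dx ** 2 + dy ** 2) ** (beta / 2))

def inversion_objective(x, phi, phi0, lam=1e-2, beta=2.0):
    """||phi(x) - phi0||^2 + lam * R_{V^beta}(x); phi is a hypothetical feature extractor."""
    return np.sum((phi(x) - phi0) ** 2) + lam * tv_regularizer(x, beta)
```

Minimizing this objective with gradient descent on `x` gives the reconstructions; the TV term penalizes the noisy high-frequency patterns that otherwise match the features equally well.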

- 50. Feature Inversion. Reconstructing from different layers of VGG-16. Mahendran and Vedaldi, “Understanding Deep Image Representations by Inverting Them”, CVPR 2015. Figure from Johnson, Alahi, and Fei-Fei, “Perceptual Losses for Real-Time Style Transfer and Super-Resolution”, ECCV 2016. Copyright Springer, 2016. Reproduced for educational purposes.

- 51. Texture Synthesis. Given a sample patch of some texture, can we generate a bigger image of the same texture? (Input and output example.) Output image is licensed under the MIT license.

- 52. Texture Synthesis: Nearest Neighbor. Generate pixels one at a time in scanline order; form a neighborhood of already generated pixels and copy the nearest neighbor from the input. Wei and Levoy, “Fast Texture Synthesis using Tree-structured Vector Quantization”, SIGGRAPH 2000. Efros and Leung, “Texture Synthesis by Non-parametric Sampling”, ICCV 1999.
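
A simplified grayscale sketch of scanline nearest-neighbor synthesis, loosely in the spirit of Wei and Levoy. It uses a causal neighborhood, noise initialization, and toroidal borders, but replaces their tree-structured vector quantization with an exhaustive search. The neighborhood radius `n` and the function name are illustrative assumptions.

```python
import numpy as np

def synthesize_texture(sample, out_h, out_w, n=2, rng=None):
    """Scanline nearest-neighbor texture synthesis for a grayscale (h, w) sample.

    Each output pixel, in scanline order, copies the sample pixel whose causal
    neighborhood (rows above plus pixels to the left, within radius n) is
    closest in L2 distance to its own causal neighborhood.
    """
    rng = np.random.default_rng() if rng is None else rng
    h, w = sample.shape
    # Causal offsets: full rows above within radius n, plus pixels to the left.
    offs = [(dy, dx) for dy in range(-n, 1) for dx in range(-n, n + 1)
            if dy < 0 or dx < 0]
    # Candidate centers in the sample whose causal neighborhood fits inside it.
    ys, xs = np.mgrid[n:h, n:w - n]
    ys, xs = ys.ravel(), xs.ravel()
    cand = np.stack([sample[ys + dy, xs + dx] for dy, dx in offs], axis=1)
    centers = sample[ys, xs]
    # Start from random sample pixels (noise); borders wrap around (toroidal).
    out = rng.choice(sample.ravel(), size=(out_h, out_w))
    for y in range(out_h):
        for x in range(out_w):
            nb = np.array([out[(y + dy) % out_h, (x + dx) % out_w]
                           for dy, dx in offs])
            best = np.argmin(((cand - nb) ** 2).sum(axis=1))
            out[y, x] = centers[best]   # copy the nearest neighbor's pixel
    return out
```

The exhaustive search costs one full pass over the sample per output pixel, which is why tree-structured or approximate search matters for realistic image sizes.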

- 53. Texture Synthesis: Nearest Neighbor. Images licensed under the MIT license.

- 54. Neural Texture Synthesis: Gram Matrix. Each layer of a CNN gives a C x H x W tensor of features: an H x W grid of C-dimensional vectors. This image is in the public domain.

- 55. Neural Texture Synthesis: Gram Matrix. Each layer of a CNN gives a C x H x W tensor of features: an H x W grid of C-dimensional vectors. The outer product of two C-dimensional vectors gives a C x C matrix measuring co-occurrence. This image is in the public domain.

- 56. Neural Texture Synthesis: Gram Matrix. Each layer of a CNN gives a C x H x W tensor of features: an H x W grid of C-dimensional vectors. The outer product of two C-dimensional vectors gives a C x C matrix measuring co-occurrence. Average these outer products over all HW spatial positions, giving a Gram matrix of shape C x C. This image is in the public domain.

- 57. Neural Texture Synthesis: Gram Matrix. Each layer of a CNN gives a C x H x W tensor of features: an H x W grid of C-dimensional vectors. The outer product of two C-dimensional vectors gives a C x C matrix measuring co-occurrence. Average these outer products over all HW spatial positions, giving a Gram matrix of shape C x C. Efficient to compute: reshape the features from C x H x W to a matrix $F$ of shape C x HW, then compute $G = FF^T$ (dividing by HW gives the average). This image is in the public domain.
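
The reshape-and-multiply trick in NumPy, under the assumption that one layer's features are available as a (C, H, W) array. `gram_matrix` is a hypothetical helper; the test compares it against the explicit average of per-position outer products.

```python
import numpy as np

def gram_matrix(features, normalize=True):
    """Gram matrix of a (C, H, W) feature tensor.

    Reshape to F of shape (C, H*W) and compute G = F @ F.T, a (C, C) matrix
    whose entry (i, j) measures how strongly channels i and j co-occur.
    With normalize=True the sum is divided by H*W (an average over positions).
    """
    C, H, W = features.shape
    F = features.reshape(C, H * W)
    G = F @ F.T
    return G / (H * W) if normalize else G

f = np.random.default_rng(0).standard_normal((4, 3, 5))
G = gram_matrix(f)
```

Because the spatial positions are summed out, the Gram matrix keeps which features occur together but discards where they occur. That is why it works as a texture descriptor.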

- 58. Neural Texture Synthesis. 1. Pretrain a CNN on ImageNet (VGG-19). 2. Run the input texture forward through the CNN and record activations on every layer; layer $l$ gives a feature map of shape $C_l \times H_l \times W_l$. 3. At each layer compute the Gram matrix giving the outer product of features, $G^l_{ij} = \sum_k F^l_{ik} F^l_{jk}$ (shape $C_l \times C_l$), where $F^l$ is the feature map reshaped to $C_l \times H_l W_l$. 4. Initialize the generated image from random noise. 5. Pass the generated image through the CNN and compute the Gram matrix $\hat{G}^l$ on each layer. 6. Compute the loss: a weighted sum of L2 distances between Gram matrices, $\mathcal{L} = \sum_l w_l E_l$ with $E_l = \frac{1}{4 N_l^2 M_l^2} \sum_{i,j} \left( G^l_{ij} - \hat{G}^l_{ij} \right)^2$, where $N_l = C_l$ and $M_l = H_l W_l$. 7. Backprop to get the gradient on the image. 8. Make a gradient step on the image. 9. GOTO 5. Gatys, Ecker, and Bethge, “Texture Synthesis Using Convolutional Neural Networks”, NIPS 2015. Figure copyright Leon Gatys, Alexander S. Ecker, and Matthias Bethge, 2015. Reproduced with permission.
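
A single-layer sketch of steps 3 to 9, under a loudly hypothetical simplification: the "network" is the identity, so the features are the pixels themselves (shape C x HW), and the gradient on the features is the gradient on the image. A real implementation backprops through VGG-19 and sums the weighted $E_l$ over several layers. The gradient $dE/dF = \frac{1}{N^2 M^2}(G - \hat{G})F$ relies on the Gram difference being symmetric. The learning rate and step count are illustrative.

```python
import numpy as np

def gram_loss_and_grad(F, G_target):
    """E = sum((F @ F.T - G_target)**2) / (4 N^2 M^2) and its gradient dE/dF.

    F: (N, M) features (N channels, M = H*W positions); G_target symmetric (N, N).
    """
    N, M = F.shape
    diff = F @ F.T - G_target          # symmetric (N, N)
    scale = 1.0 / (4 * N**2 * M**2)
    loss = scale * np.sum(diff ** 2)
    grad = 4 * scale * diff @ F        # valid because diff is symmetric
    return loss, grad

def synthesize_from_gram(texture_feats, steps=500, lr=1.0, rng=None):
    """Gradient descent on the Gram loss with a hypothetical identity 'network'."""
    rng = np.random.default_rng() if rng is None else rng
    G_target = texture_feats @ texture_feats.T      # step 3: Gram of input texture
    x = rng.standard_normal(texture_feats.shape)    # step 4: random noise
    losses = []
    for _ in range(steps):
        loss, grad = gram_loss_and_grad(x, G_target)  # steps 5-7
        losses.append(loss)
        x = x - lr * grad                             # step 8; loop is step 9
    return x, losses
```

The returned loss history should decrease, which is a quick sanity check when replacing the identity features with real CNN activations.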

- 62. Neural Texture Synthesis. Reconstructing texture from higher layers recovers larger features from the input texture. Gatys, Ecker, and Bethge, “Texture Synthesis Using Convolutional Neural Networks”, NIPS 2015. Figure copyright Leon Gatys, Alexander S. Ecker, and Matthias Bethge, 2015. Reproduced with permission.

- 63. Neural Texture Synthesis: Texture = Artwork. Texture synthesis (Gram reconstruction). Figure from Johnson, Alahi, and Fei-Fei, “Perceptual Losses for Real-Time Style Transfer and Super-Resolution”, ECCV 2016. Copyright Springer, 2016. Reproduced for educational purposes.

- 64. Neural Style Transfer: Feature + Gram Reconstruction. Feature reconstruction; texture synthesis (Gram reconstruction). Figure from Johnson, Alahi, and Fei-Fei, “Perceptual Losses for Real-Time Style Transfer and Super-Resolution”, ECCV 2016. Copyright Springer, 2016. Reproduced for educational purposes.

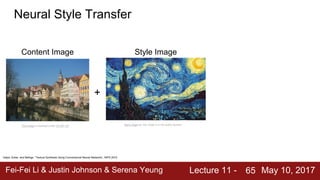

- 65. Neural Style Transfer. Content image + style image. This image is licensed under CC-BY 3.0. Starry Night by Van Gogh is in the public domain. Gatys, Ecker, and Bethge, “Texture Synthesis Using Convolutional Neural Networks”, NIPS 2015.

- 66. Neural Style Transfer. Content image + style image = style transfer! This image is licensed under CC-BY 3.0. Starry Night by Van Gogh is in the public domain. This image copyright Justin Johnson, 2015. Reproduced with permission. Gatys, Ecker, and Bethge, “Image style transfer using convolutional neural networks”, CVPR 2016.

- 67. Style image, content image, and output image (start with noise). Gatys, Ecker, and Bethge, “Image style transfer using convolutional neural networks”, CVPR 2016. Figure adapted from Johnson, Alahi, and Fei-Fei, “Perceptual Losses for Real-Time Style Transfer and Super-Resolution”, ECCV 2016. Copyright Springer, 2016. Reproduced for educational purposes.

- 68. Style image, content image, and output image. Gatys, Ecker, and Bethge, “Image style transfer using convolutional neural networks”, CVPR 2016. Figure adapted from Johnson, Alahi, and Fei-Fei, “Perceptual Losses for Real-Time Style Transfer and Super-Resolution”, ECCV 2016. Copyright Springer, 2016. Reproduced for educational purposes.
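
A sketch of the combined objective that the output image is optimized against. It has the form "alpha times a feature (content) reconstruction loss plus beta times a Gram (style) reconstruction loss", in the spirit of Gatys et al. Assumptions: features are precomputed as (C, H*W) arrays keyed by hypothetical layer names, style layers are weighted equally, and the default `alpha` and `beta` are illustrative. Gradients with respect to the image would come from backprop through the CNN and are not shown.

```python
import numpy as np

def gram(F):
    """Unnormalized Gram matrix G = F @ F.T for features F of shape (C, H*W)."""
    return F @ F.T

def style_transfer_loss(out_feats, content_feat, style_grams, content_layer,
                        alpha=1.0, beta=1e3):
    """alpha * content loss + beta * style loss for the image being optimized.

    out_feats:    dict layer name -> (C, H*W) features of the output image.
    content_feat: (C, H*W) features of the content image at content_layer.
    style_grams:  dict layer name -> (C, C) Gram matrix of the style image.
    """
    content = 0.5 * np.sum((out_feats[content_layer] - content_feat) ** 2)
    style = 0.0
    for layer, G_style in style_grams.items():
        F = out_feats[layer]
        C, M = F.shape
        style += np.sum((gram(F) - G_style) ** 2) / (4 * C**2 * M**2)
    style /= len(style_grams)          # equal weight per style layer (assumption)
    return alpha * content + beta * style
```

The ratio of `alpha` to `beta` is the knob behind the "more weight to content" versus "more weight to style" comparison on the following slides.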

- 69. Neural Style Transfer. Example outputs from my implementation (in Torch). Gatys, Ecker, and Bethge, “Image style transfer using convolutional neural networks”, CVPR 2016. Figure copyright Justin Johnson, 2015.

- 70. Neural Style Transfer. More weight to content loss vs. more weight to style loss.

- 71. Neural Style Transfer. Resizing the style image before running the style transfer algorithm can transfer different types of features (larger style image vs. smaller style image). Gatys, Ecker, and Bethge, “Image style transfer using convolutional neural networks”, CVPR 2016. Figure copyright Justin Johnson, 2015.

- 72. Neural Style Transfer: Multiple Style Images. Mix style from multiple images by taking a weighted average of Gram matrices. Gatys, Ecker, and Bethge, “Image style transfer using convolutional neural networks”, CVPR 2016. Figure copyright Justin Johnson, 2015.
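
Mixing styles only changes the style targets. This sketch computes the blended Gram matrix for one layer; the blended target would then replace the single-style Gram in the style loss. `blend_grams` is a hypothetical helper, and normalizing the weights to sum to 1 is an assumption.

```python
import numpy as np

def blend_grams(grams, weights):
    """Weighted average of per-style Gram matrices for one layer.

    grams:   list of (C, C) Gram matrices, one per style image.
    weights: nonnegative mixing weights, normalized here to sum to 1.
    """
    w = np.asarray(weights, dtype=np.float64)
    w = w / w.sum()
    return sum(wi * G for wi, G in zip(w, grams))
```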

- 76. Neural Style Transfer. Problem: style transfer requires many forward / backward passes through VGG; very slow!

- 77. Neural Style Transfer. Problem: style transfer requires many forward / backward passes through VGG; very slow! Solution: train another neural network to perform style transfer for us!

- 78. Fast Style Transfer. (1) Train a feedforward network for each style. (2) Use a pretrained CNN to compute the same losses as before. (3) After training, stylize images using a single forward pass. Johnson, Alahi, and Fei-Fei, “Perceptual Losses for Real-Time Style Transfer and Super-Resolution”, ECCV 2016. Figure copyright Springer, 2016. Reproduced for educational purposes.

- 79. Fast Style Transfer. Slow (optimization-based) vs. fast (feedforward) results. Johnson, Alahi, and Fei-Fei, “Perceptual Losses for Real-Time Style Transfer and Super-Resolution”, ECCV 2016. Figure copyright Springer, 2016. Reproduced for educational purposes. https://github.com/jcjohnson/fast-neural-style

- 80. Fast Style Transfer. Concurrent work from Ulyanov et al, with comparable results. Ulyanov et al, “Texture Networks: Feed-forward Synthesis of Textures and Stylized Images”, ICML 2016. Ulyanov et al, “Instance Normalization: The Missing Ingredient for Fast Stylization”, arXiv 2016. Figures copyright Dmitry Ulyanov, Vadim Lebedev, Andrea Vedaldi, and Victor Lempitsky, 2016. Reproduced with permission.

- 81. Fast Style Transfer. Replacing batch normalization with Instance Normalization improves results. Ulyanov et al, “Texture Networks: Feed-forward Synthesis of Textures and Stylized Images”, ICML 2016. Ulyanov et al, “Instance Normalization: The Missing Ingredient for Fast Stylization”, arXiv 2016. Figures copyright Dmitry Ulyanov, Vadim Lebedev, Andrea Vedaldi, and Victor Lempitsky, 2016. Reproduced with permission.
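
The two normalizations differ only in which axes the statistics are computed over. This NumPy sketch omits the learned scale and shift and uses an illustrative `eps`. Batch norm shares per-channel statistics across the batch, while instance norm normalizes each image's channels on their own, so one image's output does not depend on the other images in the batch.

```python
import numpy as np

def batch_norm(x, eps=1e-5):
    """Normalize (N, C, H, W) per channel using statistics over (N, H, W)."""
    mu = x.mean(axis=(0, 2, 3), keepdims=True)
    var = x.var(axis=(0, 2, 3), keepdims=True)
    return (x - mu) / np.sqrt(var + eps)

def instance_norm(x, eps=1e-5):
    """Normalize each image and channel separately: statistics over (H, W) only."""
    mu = x.mean(axis=(2, 3), keepdims=True)
    var = x.var(axis=(2, 3), keepdims=True)
    return (x - mu) / np.sqrt(var + eps)

x = np.random.default_rng(0).standard_normal((2, 3, 4, 4)) * 5 + 2
```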

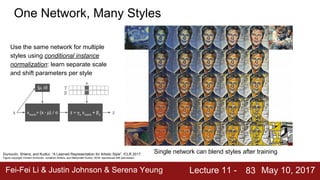

- 82. One Network, Many Styles. Dumoulin, Shlens, and Kudlur, “A Learned Representation for Artistic Style”, ICLR 2017. Figure copyright Vincent Dumoulin, Jonathon Shlens, and Manjunath Kudlur, 2016; reproduced with permission.

- 83. One Network, Many Styles. Use the same network for multiple styles using conditional instance normalization: learn separate scale and shift parameters per style. A single network can blend styles after training. Dumoulin, Shlens, and Kudlur, “A Learned Representation for Artistic Style”, ICLR 2017. Figure copyright Vincent Dumoulin, Jonathon Shlens, and Manjunath Kudlur, 2016; reproduced with permission.
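
Conditional instance normalization applies instance norm followed by a style-specific scale and shift. In this sketch, a vector of style weights selects or blends those parameters: a one-hot vector picks one style, and a convex combination blends styles. The array shapes and the `style_weights` interface are assumptions for illustration, not the paper's code.

```python
import numpy as np

def conditional_instance_norm(x, gammas, betas, style_weights, eps=1e-5):
    """Instance norm followed by a style-dependent scale and shift.

    x:             (N, C, H, W) activations.
    gammas, betas: (S, C) learned parameters, one row per style.
    style_weights: (S,) mixing weights; one-hot selects a single style.
    """
    mu = x.mean(axis=(2, 3), keepdims=True)
    var = x.var(axis=(2, 3), keepdims=True)
    x_hat = (x - mu) / np.sqrt(var + eps)
    w = np.asarray(style_weights, dtype=np.float64)
    gamma = (w @ gammas)[None, :, None, None]  # (1, C, 1, 1) blended scale
    beta = (w @ betas)[None, :, None, None]    # (1, C, 1, 1) blended shift
    return gamma * x_hat + beta
```

Because only the per-style `gammas` and `betas` differ, adding a style costs 2C parameters per normalization layer rather than a whole new network.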

- 84. Summary. Many methods for understanding CNN representations. Activations: nearest neighbors, dimensionality reduction, maximal patches, occlusion. Gradients: saliency maps, class visualization, fooling images, feature inversion. Fun: DeepDream, style transfer.

- 85. Next time: Unsupervised Learning. Autoencoders, Variational Autoencoders, Generative Adversarial Networks.