
CS3352-Foundations of Data Science Notes.pdf

- 1. UNIT I: Introduction
Syllabus
Data Science: Benefits and uses - Facets of data - Defining research goals - Retrieving data - Data preparation - Exploratory data analysis - Build the model - Presenting findings and building applications - Warehousing - Basic statistical descriptions of data.
Data Science
• Data is measurable units of information gathered or captured from the activity of people, places and things.
• Data science is an interdisciplinary field that seeks to extract knowledge or insights from various forms of data. At its core, data science aims to discover actionable knowledge that can be used to make sound business decisions and predictions. It combines math and statistics, specialized programming, advanced analytics, Artificial Intelligence (AI) and machine learning with subject matter expertise to uncover insights hidden in an organization's data.
• Data science uses advanced analytical theory and methods such as time series analysis to predict the future from historical data. Instead of only reporting how many products were sold in the previous quarter, data science helps forecast future product sales and revenue more accurately.
• Data science is devoted to extracting clean information from raw data to form actionable insights. Practitioners apply machine learning algorithms to numbers, text, images, video, audio and more to build artificial intelligence systems that perform tasks ordinarily requiring human intelligence.
• The data science field is growing rapidly and is changing many industries. It has benefits in business, research and everyday life.
• As a general rule, data scientists are skilled at detecting patterns hidden within large volumes of data. They often use advanced algorithms and machine learning models to help businesses and organizations make accurate assessments and predictions.
• Data science and big data evolved from statistics and traditional data management but are now considered distinct disciplines.
• Life cycle of data science:
1. Capture: Data acquisition, data entry, signal reception and data extraction.
2. Maintain: Data warehousing, data cleansing, data staging, data processing and data architecture.
3. Process: Data mining, clustering and classification, data modeling and data summarization.
4. Analyze: Exploratory and confirmatory analysis, predictive analysis, regression, text mining and qualitative analysis.
5. Communicate: Data reporting, data visualization, business intelligence and decision making.
Big Data
• Big data can be defined as very large volumes of data, available from various sources, with varying degrees of complexity and ambiguity and generated at different speeds (velocities), which cannot be processed using traditional technologies, processing methods, algorithms or commercial off-the-shelf solutions.

- 2. • "Big data" is a term for collections of data that are huge in size and still growing exponentially with time. Such data is so large and complex that traditional data management tools cannot store or process it efficiently.
Characteristics of Big Data
• The characteristics of big data are volume, velocity and variety. They are often referred to as the three V's.
1. Volume: The volume of data is larger than conventional relational database infrastructure can cope with. It consists of terabytes or petabytes of data.
2. Velocity: The term "velocity" refers to the speed at which data is generated. How fast the data is generated and processed to meet demand determines the real potential in the data. It is created in or near real time.
3. Variety: It refers to heterogeneous sources and the nature of data, both structured and unstructured.
• Two other characteristics of big data are veracity and value.
a) Veracity:
• Veracity refers to source reliability, information credibility and content validity, that is, the trustworthiness of the data. Can the manager rely on the data being representative? Every good manager knows that there are inherent discrepancies in all collected data.
• Spatial veracity: For vector data (imagery based on points, lines and polygons), the quality varies. It depends on whether the points were determined by GPS, entered manually or obtained from unknown origins. Resolution and projection issues can also alter veracity.
• For geo-coded points, there may be errors in the address tables and in the point location algorithms associated with addresses.
• For raster data (imagery based on pixels), veracity depends on the accuracy of the recording instruments in satellites or aerial devices and on timeliness.
b) Value:
• It represents the business value to be derived from big data.
• The ultimate objective of any big data project should be to generate some sort of value for the company doing the analysis. Otherwise, the user is just performing a technological task for technology's sake.

- 3. • For real-time spatial big data, decisions can be enhanced through visualization of dynamic change in spatial phenomena such as climate, traffic, social-media-based attitudes and massive inventory locations.
• Exploration of data trends can include spatial proximities and relationships.
• Once spatial big data is structured, formal spatial analytics can be applied, such as spatial autocorrelation, overlays, buffering, spatial cluster techniques and location quotients.
Difference between Data Science and Big Data
Comparison between Cloud Computing and Big Data

- 4. Benefits and Uses of Data Science
• Data science examples and applications:
a) Anomaly detection: Fraud, disease and crime
b) Classification: Background checks; an email server classifying emails as "important"
c) Forecasting: Sales, revenue and customer retention
d) Pattern detection: Weather patterns, financial market patterns
e) Recognition: Facial, voice and text
f) Recommendation: Based on learned preferences, recommendation engines can refer users to movies, restaurants and books
g) Regression: Predicting food delivery times, predicting home prices based on amenities
h) Optimization: Scheduling ride-share pickups and package deliveries
Benefits and Use of Big Data
• Benefits of big data:
1. Improved customer service
2. Businesses can use outside intelligence when making decisions
3. Reduced maintenance costs
4. Re-developing products: Big data can help us understand how others perceive our products so that we can adapt them, or our marketing, if need be
5. Early identification of risk to the products or services, if any
6. Better operational efficiency
• Some examples of big data are:
1. Social media: Social media is one of the biggest contributors to the flood of data we have today. Facebook generates around 500+ terabytes of data every day in the form of user-generated content such as status messages, photo and video uploads, messages and comments.
2. Stock exchange: Data generated by stock exchanges is also in terabytes per day. Most of this data is the trade data of users and companies.
3. Aviation industry: A single jet engine can generate around 10 terabytes of data during a 30-minute flight.
4. Survey data: Online or offline surveys conducted on various topics typically have hundreds or thousands of responses, which need to be processed for analysis and visualization by clustering the population and its associated responses.
5. Compliance data: Many organizations in healthcare, hospitals, life sciences, finance and similar sectors have to file compliance reports.

- 5. Facets of Data
• Big data and data science deal with very large amounts of data. This data comes in various types; the main categories are:
a) Structured
b) Natural language
c) Graph-based
d) Streaming
e) Unstructured
f) Machine-generated
g) Audio, video and images
Structured Data
• Structured data is arranged in a row and column format, which makes it easy for applications to retrieve and process. A database management system is used to store structured data.
• The term structured data refers to data that is identifiable because it is organized in a structure. The most common form of structured data is a database in which specific information is stored in columns and rows.
• Structured data is also searchable by data type within content. It is understood by computers and is also efficiently organized for human readers.
• An Excel table is an example of structured data.
Unstructured Data
• Unstructured data is data that does not follow a specified format. Rows and columns are not used, so it is difficult to retrieve the required information. Unstructured data has no identifiable structure.
• Unstructured data can take the form of text (documents, email messages, customer feedback), audio, video or images. Email is an example of unstructured data.
• Even today, in most organizations more than 80 % of the data is in unstructured form. It carries a lot of information, but extracting that information from these varied sources is a very big challenge.
• Characteristics of unstructured data:
1. There is no structural restriction or binding for the data.
2. Data can be of any type.
3. Unstructured data does not follow any structural rules.
4. There are no predefined formats, restrictions or sequences for unstructured data.
5. Since there is no structural binding for unstructured data, it is unpredictable in nature.

- 6. Natural Language
• Natural language is a special type of unstructured data.
• Natural language processing enables machines to recognize characters, words and sentences and then apply meaning and understanding to that information. This helps machines to understand language as humans do.
• Natural language processing is the driving force behind machine intelligence in many modern real-world applications. The natural language processing community has had success in entity recognition, topic recognition, summarization, text completion and sentiment analysis.
• For natural language processing to help machines understand human language, it must go through speech recognition, natural language understanding and machine translation. It is an iterative process comprising several layers of text analysis.
Machine-Generated Data
• Machine-generated data is information that is created without human interaction as a result of a computer process or application activity. Data entered manually by an end user is therefore not considered machine-generated.
• Machine data contains a definitive record of all activity and behavior of our customers, users, transactions, applications, servers, networks, factory machinery and so on.
• It includes configuration data, data from APIs and message queues, change events, the output of diagnostic commands, call detail records, sensor data from remote equipment and more.
• Examples of machine data are web server logs, call detail records, network event logs and telemetry.
• Both Machine-to-Machine (M2M) and Human-to-Machine (H2M) interactions generate machine data. Machine data is generated continuously by every processor-based system, as well as by many consumer-oriented systems.
• It can be either structured or unstructured. In recent years, the volume of machine data has surged. The expansion of mobile devices, virtual servers and desktops, as well as cloud-based services and RFID technologies, is making IT infrastructures more complex.
Graph-based or Network Data
• Graphs are data structures that describe relationships and interactions between entities in complex systems. In general, a graph contains a collection of entities called nodes and a collection of interactions between pairs of nodes called edges.
• Nodes represent entities, which can be of any object type relevant to our problem domain. By connecting nodes with edges, we end up with a graph (network) of nodes.
• A graph database stores nodes and relationships instead of tables or documents. Data is stored just as we might sketch ideas on a whiteboard. Our data is stored without restricting it to a predefined model, allowing a very flexible way of thinking about and using it.
• Graph databases are used to store graph-based data and are queried with specialized query languages such as SPARQL.
• Graph databases are capable of sophisticated fraud prevention. With graph databases, we can use relationships to process financial and purchase transactions in near real time. With fast graph queries,

- 7. we are able to detect that, for example, a potential purchaser is using the same email address and credit card as in a known fraud case.
• Graph databases can also help the user easily detect relationship patterns, such as multiple people associated with one personal email address or multiple people sharing the same IP address but residing at different physical addresses.
• Graph databases are a good choice for recommendation applications. With graph databases, we can store relationships between information categories such as customer interests, friends and purchase history. We can use a highly available graph database to make product recommendations to a user based on which products were purchased by others who follow the same sport and have a similar purchase history.
• Graph theory was probably the main method of social network analysis in the early history of the social network concept. The approach is applied to social network analysis in order to determine important features of the network, such as its nodes and links (for example, influencers and followers).
• Influencers on a social network have been identified as users who have an impact on the activities or opinions of other users, by way of followership or influence on decisions made by other users on the network, as shown in Fig. 1.2.1.
• Graph theory has proved to be very effective on large-scale datasets such as social network data. This is because it can bypass building an actual visual representation of the data and run directly on data matrices.
Audio, Image and Video
• Audio, image and video are data types that pose specific challenges to a data scientist. Tasks that are trivial for humans, such as recognizing objects in pictures, turn out to be challenging for computers.
• The terms audio and video commonly refer to the time-based media storage formats for sound/music and moving picture information. Digital audio and video recordings, which are encoded with audio and video codecs, can be uncompressed, lossless compressed or lossy compressed, depending on the desired quality and use case.

- 8. • Multimedia data is one of the most important sources of information and knowledge. The integration, transformation and indexing of multimedia data bring significant challenges in data management and analysis. Many challenges have to be addressed, including big data, the multidisciplinary nature of data science and heterogeneity.
• Data science plays an important role in addressing these challenges. Multimedia data usually contains various forms of media, such as text, image, video, geographic coordinates and even pulse waveforms, which come from multiple sources. Data science can be a key instrument, covering big data, machine learning and data mining solutions to store, handle and analyze such heterogeneous data.
Streaming Data
• Streaming data is data that is generated continuously by thousands of data sources, which typically send their data records simultaneously and in small sizes (on the order of kilobytes).
• Streaming data includes a wide variety of data, such as log files generated by customers using mobile or web applications, e-commerce purchases, in-game player activity, information from social networks, financial trading floors or geospatial services, and telemetry from connected devices or instrumentation in data centers.
Difference between Structured and Unstructured Data
Data Science Process
The data science process consists of six stages:
1. Discovery or setting the research goal
2. Retrieving data
3. Data preparation
4. Data exploration
5. Data modeling
6. Presentation and automation

- 9. • Fig. 1.3.1 shows the data science design process.
• Step 1: Discovery or defining the research goal
This step establishes what the organization expects from the project, why the work is valuable and how it fits into the bigger strategic picture, so that the business question is clear before any data is collected.
• Step 2: Retrieving data
This is the collection of the data required for the project. It involves acquiring data from all the identified internal and external sources that help to answer the business question, determining exactly what data is required and the best methods for obtaining it, and understanding what each piece of data means in terms of the company. If we are given a data set by a client, for example, we need to know what each column and row represents.
• Step 3: Data preparation
Data can have many inconsistencies, such as missing values, blank columns and incorrect data formats, which need to be cleaned. We need to process, explore and condition the data before modeling. Cleaner data gives better predictions.
• Step 4: Data exploration
Data exploration aims at a deeper understanding of the data. Try to understand how variables interact with each other, the distribution of the data and whether there are outliers. To achieve this, use descriptive statistics, visual techniques and simple modeling. This step is also called Exploratory Data Analysis.
• Step 5: Data modeling
In this step, the actual model building process starts. Here, the data scientist splits the dataset into training and testing sets. Techniques such as association, classification and clustering are applied to the training set. The model, once prepared, is tested against the testing set.
• Step 6: Presentation and automation
Deliver the final baselined model with reports, code and technical documents in this stage. The model is deployed into a real-time production environment after thorough testing. The key findings are communicated to all stakeholders. This helps to decide whether the project results are a success or a failure, based on the outputs of the model.
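Step 5 above splits the dataset into training and testing portions. A minimal sketch of such a split, assuming the records fit in a plain Python list; the function name `train_test_split`, the 25 % test fraction and the fixed seed are illustrative choices, not something prescribed by these notes:

```python
import random


def train_test_split(rows, test_fraction=0.25, seed=42):
    """Shuffle a copy of rows and return (train, test) lists."""
    if not 0 < test_fraction < 1:
        raise ValueError("test_fraction must be between 0 and 1")
    shuffled = list(rows)                   # copy, so the input is untouched
    random.Random(seed).shuffle(shuffled)   # seeded for reproducibility
    n_test = max(1, round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]


records = list(range(20))                   # stand-in for real observations
train, test = train_test_split(records)
print(len(train), len(test))
```

The model is fitted only on `train`; `test` is held back so that the evaluation reflects data the model has not seen.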

- 10. Defining Research Goals
• To understand the project, three questions must be answered: what, why and how.
a) What does the company or organization expect?
b) Why does the company's management see value in this research?
c) How is it part of a bigger strategic picture?
• The goal of the first phase is to answer these three questions.
• In this phase, the data science team must learn and investigate the problem, develop context and understanding, and learn about the data sources needed and available for the project.
1. Learning the business domain:
• Understanding the domain area of the problem is essential. In many cases, data scientists have deep computational and quantitative knowledge that can be broadly applied across many disciplines.
• Data scientists have deep knowledge of the methods, techniques and ways of applying heuristics to a variety of business and conceptual problems.
2. Resources:
• As part of the discovery phase, the team needs to assess the resources available to support the project. In this context, resources include technology, tools, systems, data and people.
3. Framing the problem:
• Framing is the process of stating the analytics problem to be solved. At this point, it is a best practice to write down the problem statement and share it with the key stakeholders.
• Each team member may hear slightly different things about the needs and the problem and have somewhat different ideas of possible solutions.
4. Identifying key stakeholders:
• The team can identify the success criteria, key risks and stakeholders, who should include anyone who will benefit from the project or be significantly impacted by it.
• When interviewing stakeholders, learn about the domain area and any relevant history from similar analytics projects.
5. Interviewing the analytics sponsor:
• The team should plan to collaborate with the stakeholders to clarify and frame the analytics problem.
• At the outset, project sponsors may have a predetermined solution that may not necessarily realize the desired outcome.
• In these cases, the team must use its knowledge and expertise to identify the true underlying problem and an appropriate solution.
• When interviewing the main stakeholders, the team needs to take time to thoroughly interview the project sponsor, who tends to be the one funding the project or providing the high-level requirements.
• This person understands the problem and usually has an idea of a potential working solution.

- 11. 6. Developing initial hypotheses:
• This step involves forming ideas that the team can test with data. Generally, it is best to come up with a few primary hypotheses to test and then be creative about developing several more.
• These initial hypotheses form the basis of the analytical tests the team will use in later phases and serve as the foundation for the findings.
7. Identifying potential data sources:
• Consider the volume, type and time span of the data needed to test the hypotheses. Ensure that the team can access more than simply aggregated data. In most cases, the team will need the raw data to avoid introducing bias into the downstream analysis.
Retrieving Data
• Retrieving the required data is the second phase of a data science project. Sometimes data scientists need to go into the field and design a data collection process. Many companies will have already collected and stored the data, and what they don't have can often be bought from third parties.
• A growing amount of high-quality data is freely available for public and commercial use. Data can be stored in various formats, ranging from text files to tables in a database. Data may be internal or external.
1. Start working on internal data, i.e. data stored within the company
• The first step for data scientists is to verify the internal data. Assess the relevance and quality of the data that is readily available in the company. Most companies have a program for maintaining key data, so much of the cleaning work may already be done. This data can be stored in official data repositories such as databases, data marts, data warehouses and data lakes maintained by a team of IT professionals.
• A data repository is also known as a data library or data archive. This is a general term for a data set isolated to be mined for data reporting and analysis. The data repository is a large database infrastructure, made up of several databases, that collects, manages and stores data sets for data analysis, sharing and reporting.
• The term data repository can describe several ways of collecting and storing data:
a) A data warehouse is a large data repository that aggregates data, usually from multiple sources or segments of a business, without the data necessarily being related.
b) A data lake is a large data repository that stores unstructured data that is classified and tagged with metadata.
c) Data marts are subsets of the data repository. They are more targeted to what the data user needs and are easier to use.
d) Metadata repositories store data about data and databases. The metadata explains where the data came from, how it was captured and what it represents.
e) Data cubes are lists of data with three or more dimensions stored as a table.
Advantages of data repositories:
i. Data is preserved and archived.
ii. Data isolation allows for easier and faster data reporting.
iii. Database administrators have an easier time tracking problems.
iv. There is value in storing and analyzing data.

- 12. Disadvantages of data repositories:
i. Growing data sets could slow down systems.
ii. A system crash could affect all the data.
iii. Unauthorized users can access all sensitive data more easily than if it were distributed across several locations.
2. Do not be afraid to shop around
• If the required data is not available within the company, turn to other companies that provide such data. For example, Nielsen and GfK provide data for the retail industry. Data scientists also use data from Twitter, LinkedIn and Facebook.
• Government organizations share their data for free with the world. This data can be of excellent quality, depending on the institution that creates and manages it. The information they share covers a broad range of topics, such as the number of accidents or the amount of drug abuse in a certain region and its demographics.
3. Perform data quality checks to avoid later problems
• Allocate some time for data correction and data cleaning. Collecting suitable, error-free data is key to the success of a data science project.
• Most of the errors encountered during the data gathering phase are easy to spot, but being too careless will make data scientists spend many hours solving data issues that could have been prevented during data import.
• Data scientists must investigate the data during the import, data preparation and exploratory phases. The difference lies in the goal and the depth of the investigation.
• In the data retrieval process, verify that the data has the right data type and is the same as in the source document.
• In the data preparation process, more elaborate checks are performed. Check whether any shorthand or inconsistent notation was used, for example in time and date formats.
• During the exploratory phase, the data scientist's focus shifts to what can be learned from the data. The data is now assumed to be clean, and the data scientist looks at statistical properties such as distributions, correlations and outliers.
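The import-time check described above (does each value have the right data type and format?) can be sketched as follows. This is only an illustration under stated assumptions: the column names `order_id`, `order_date` and `amount`, the sample rows and the expected `YYYY-MM-DD` date format are all hypothetical.

```python
import csv
import io
from datetime import datetime

# Hypothetical raw import; rows 1002 and 1003 contain deliberate errors.
RAW = """order_id,order_date,amount
1001,2023-01-15,250.00
1002,15/01/2023,99.50
1003,2023-02-01,abc
"""


def check_row(row):
    """Return a list of type/format problems found in one imported row."""
    problems = []
    if not row["order_id"].isdigit():
        problems.append("order_id is not an integer")
    try:
        datetime.strptime(row["order_date"], "%Y-%m-%d")
    except ValueError:
        problems.append("order_date is not in YYYY-MM-DD format")
    try:
        float(row["amount"])
    except ValueError:
        problems.append("amount is not numeric")
    return problems


report = {}
for row in csv.DictReader(io.StringIO(RAW)):
    problems = check_row(row)
    if problems:
        report[row["order_id"]] = problems

for order_id, problems in report.items():
    print(order_id, problems)
```

Running such a check at import time surfaces format problems before they reach the preparation and exploration phases.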
Data Preparation
• Data preparation means cleansing, integrating and transforming data.
Data Cleaning
• Data is cleansed through processes such as filling in missing values, smoothing noisy data and resolving inconsistencies in the data.
• Data cleaning tasks are as follows:
1. Data acquisition and metadata
2. Fill in missing values
3. Unified date format
4. Converting nominal to numeric
5. Identify outliers and smooth out noisy data

- 13. 6. Correct inconsistent data
• Data cleaning is the first step in data pre-processing. It is used to find missing values, smooth noisy data, recognize outliers and correct inconsistencies.
• Missing values: Dirty data affects the mining procedure and leads to unreliable and poor output, so data cleaning routines are important. For example, suppose that the average salary of staff is Rs. 65000/-. This value can be used to replace a missing salary value.
• Data entry errors: Data collection and data entry are error-prone processes. They often require human intervention, and because humans are only human, they make typos or lose their concentration for a second and introduce an error into the chain. Data collected by machines or computers isn't free from errors either. Some errors arise from human sloppiness, whereas others are due to machine or hardware failure. Examples of errors originating from machines are transmission errors and bugs in the extract, transform and load (ETL) phase.
• Whitespace errors: Whitespace tends to be hard to detect but causes errors just as other redundant characters would. To remove the spaces at the start and end of a string in Python, we can use the strip() method.
• Fixing capital letter mismatches: Capital letter mismatches are a common problem. Most programming languages make a distinction between "Chennai" and "chennai".
• Python provides the string methods lower() and upper() for case conversion. lower() returns the string converted to lowercase and upper() returns it converted to uppercase.
Outlier
• Outlier detection is the process of detecting and subsequently excluding outliers from a given set of data. The easiest way to find outliers is to use a plot or a table with the minimum and maximum values.
• Fig. 1.6.1 shows outlier detection. Here O1 and O2 appear to be outliers from the rest.
• An outlier may be defined as a piece of data or an observation that deviates drastically from the norm or average of the data set. An outlier may be caused simply by chance, but it may also indicate a measurement error or that the data set has a heavy-tailed distribution.
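The cleaning steps described above (stripping whitespace, fixing capital letter mismatches, replacing a missing salary with the mean, and a minimum/maximum check for outliers) can be sketched in Python. The records and readings below are made-up sample data chosen so that the mean salary matches the Rs. 65000 example; they are assumptions for illustration only.

```python
from statistics import mean

# Hypothetical staff records with messy city names and one missing salary.
staff = [
    {"name": "Asha", "city": "  Chennai ", "salary": 60000},
    {"name": "Ravi", "city": "chennai", "salary": None},
    {"name": "Meena", "city": "CHENNAI", "salary": 70000},
    {"name": "Kumar", "city": "Madurai\t", "salary": 65000},
]

# Whitespace and capitalization: strip() trims both ends, lower() unifies case.
for row in staff:
    row["city"] = row["city"].strip().lower()

# Missing value: replace None with the mean of the known salaries.
known = [row["salary"] for row in staff if row["salary"] is not None]
mean_salary = mean(known)
for row in staff:
    if row["salary"] is None:
        row["salary"] = mean_salary

# Outliers: a table of minimum and maximum values is the simplest check.
readings = [21.5, 22.0, 21.8, 95.0, 22.1]   # hypothetical sensor values
lowest, highest = min(readings), max(readings)

print(sorted({row["city"] for row in staff}))
print(mean_salary)
print(lowest, highest)
```

A maximum that sits far from the other values, as with 95.0 here, is a cue to inspect that observation before deciding whether to exclude it.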

- 14. • Outlier analysis and detection has applications in numerous fields, such as credit card fraud detection, discovering computer intrusion and criminal behaviour, medical and public health outlier detection, and industrial damage detection.
• The general idea in each application is to find data that deviates from the normal behaviour of the data set.
Dealing with Missing Value
• Dirty data affects the mining procedure and leads to unreliable and poor output, so data cleaning routines are important.
How to handle missing values in data mining?
• The following methods are used for handling missing values:
1. Ignore the tuple: Usually done when the class label is missing. This method is not effective unless the tuple contains several attributes with missing values.
2. Fill in the missing value manually: This is time-consuming and not suitable for a large data set with many missing values.
3. Use a global constant to fill in the missing value: Replace all missing attribute values with the same constant.
4. Use the attribute mean to fill in the missing value: For example, suppose that the average salary of staff is Rs. 65000/-. Use this value to replace the missing value for salary.
5. Use the attribute mean for all samples belonging to the same class as the given tuple.
6. Use the most probable value to fill in the missing value.
Correct Errors as Early as Possible
• If an error is not corrected at an early stage of the project, it creates problems in later stages, and much of the project time is then spent finding and correcting errors. Retrieving data is a difficult task, and organizations spend millions of dollars on it in the hope of making better decisions. The data collection process is error-prone, and in a big organization it involves many steps and teams.
• Data should be cleansed when acquired, for many reasons:
a) Not everyone spots the data anomalies. Decision-makers may make costly mistakes based on incorrect data from applications that fail to correct for the faulty data.
b) If errors are not corrected early in the process, the cleansing will have to be done for every project that uses that data.
c) Data errors may point to a business process that isn't working as designed.
d) Data errors may point to defective equipment, such as broken transmission lines and defective sensors.
e) Data errors can point to bugs in software, or in the integration of software, that may be critical to the company.
Combining Data from Different Data Sources
1. Joining tables
• Joining tables allows the user to combine the information about one observation found in one table with the information found in another table. The focus is on enriching a single observation.

- 15. • A primary key is a value that cannot be duplicated within a table: each value can appear only once in the primary key column. The same key can exist as a foreign key in another table, which creates the relationship between the two. A foreign key can have duplicate instances within a table.
• Fig. 1.6.2 shows joining two tables on the CountryID and CountryName keys.
2. Appending tables
• Appending tables is also called stacking tables. It effectively adds the observations from one table to another table. Fig. 1.6.3 shows appending tables. (See Fig. 1.6.3 on next page)
• Table 1 contains the x3 value 3 and Table 2 contains the x3 value 33. The result of appending these tables is a larger table with the observations from Table 1 as well as Table 2. The equivalent operation in set theory is the union, and this is also the command in SQL, the common language of relational databases. Other set operators, such as set difference and intersection, are also used in data science.
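Joining and appending can both be tried out with Python's built-in `sqlite3` module. The sketch below is an illustration only: the table and column names (`country`, `sales`, `table1`, `table2`) and the rows are hypothetical, apart from the x3 values 3 and 33 taken from the text. It uses `UNION ALL`, which keeps duplicate rows, whereas plain `UNION` would remove them.

```python
import sqlite3

conn = sqlite3.connect(":memory:")   # throwaway in-memory database
conn.executescript("""
CREATE TABLE country (
    country_id   INTEGER PRIMARY KEY,          -- unique per row
    country_name TEXT
);
CREATE TABLE sales (
    sale_id    INTEGER PRIMARY KEY,
    country_id INTEGER REFERENCES country(country_id),  -- foreign key, may repeat
    amount     REAL
);
INSERT INTO country VALUES (1, 'India'), (2, 'Japan');
INSERT INTO sales VALUES (10, 1, 250.0), (11, 1, 100.0), (12, 2, 75.0);

CREATE TABLE table1 (x1 INTEGER, x2 INTEGER, x3 INTEGER);
CREATE TABLE table2 (x1 INTEGER, x2 INTEGER, x3 INTEGER);
INSERT INTO table1 VALUES (1, 2, 3);
INSERT INTO table2 VALUES (11, 22, 33);
""")

# Join: enrich each sale with the country name via the shared key.
joined = conn.execute("""
    SELECT s.sale_id, c.country_name, s.amount
    FROM sales AS s
    JOIN country AS c ON c.country_id = s.country_id
    ORDER BY s.sale_id
""").fetchall()

# Append (stack): rows of table2 are added below the rows of table1.
appended = conn.execute("""
    SELECT x1, x2, x3 FROM table1
    UNION ALL
    SELECT x1, x2, x3 FROM table2
""").fetchall()

print(joined)
print(appended)
conn.close()
```

The join adds columns to each observation, while the append adds observations with the same columns.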

- 16. 3. Using views to simulate data joins and appends • Duplication of data is avoided by using a view instead of a physical join or append. A physically appended table requires extra storage space. If the tables hold terabytes of data, it becomes problematic to duplicate the data. For this reason, the concept of a view was invented. • Fig. 1.6.4 shows how the sales data from the different months is combined virtually into a yearly sales table instead of duplicating the data. Transforming Data • In data transformation, the data are transformed or consolidated into forms appropriate for mining. Relationships between an input variable and an output variable aren't always linear. • Reducing the number of variables: Having too many variables in the model makes the model difficult to handle and certain techniques don't perform well when the user overloads them with too many input variables. • As a rule of thumb, techniques based on a Euclidean distance perform well only up to about 10 variables. Data scientists use special methods to reduce the number of variables but retain the maximum amount of information. Euclidean distance: • Euclidean distance is used to measure the similarity between observations. It is calculated as the square root of the sum of the squared differences between the coordinates of two points. Euclidean distance = $\sqrt{(X_1 - X_2)^2 + (Y_1 - Y_2)^2}$ Turning variables into dummies: • Variables can be turned into dummy variables. Dummy variables can only take two values: true (1) or false (0). They're used to indicate the presence or absence of a categorical effect that may explain the observation.
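The Euclidean distance formula and the dummy-variable idea can both be illustrated with a short Python sketch. The function names and the sample values are hypothetical, and the `is_<category>` column naming is an assumption made for this example.

```python
import math


def euclidean_distance(p, q):
    """Square root of the sum of squared coordinate differences."""
    if len(p) != len(q):
        raise ValueError("points must have the same number of coordinates")
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))


def to_dummies(values):
    """Turn one categorical variable into 0/1 dummy variables, one per category."""
    categories = sorted(set(values))
    return [{f"is_{c}": int(v == c) for c in categories} for v in values]


distance = euclidean_distance((1, 2), (4, 6))       # sqrt(3^2 + 4^2)
dummies = to_dummies(["male", "female", "male"])
```

Here `distance` is 5.0, and each row in `dummies` has exactly one dummy set to 1, marking the category that is present for that observation.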

- 17. Exploratory Data Analysis • Exploratory Data Analysis (EDA) is a general approach to exploring datasets by means of simple summary statistics and graphic visualizations in order to gain a deeper understanding of data. • EDA is used by data scientists to analyze and investigate data sets and summarize their main characteristics, often employing data visualization methods. It helps determine how best to manipulate data sources to get the answers users need, making it easier for data scientists to discover patterns, spot anomalies, test a hypothesis or check assumptions. • EDA is an approach/philosophy for data analysis that employs a variety of techniques to: 1. Maximize insight into a data set; 2. Uncover underlying structure; 3. Extract important variables; 4. Detect outliers and anomalies; 5. Test underlying assumptions; 6. Develop parsimonious models; and 7. Determine optimal factor settings.

- 18. • With EDA, the following functions are performed: 1. Describe the user's data 2. Closely explore data distributions 3. Understand the relations between variables 4. Notice unusual or unexpected situations 5. Place the data into groups 6. Notice unexpected patterns within groups 7. Take note of group differences • Box plots are an excellent tool for conveying location and variation information in data sets, particularly for detecting and illustrating location and variation changes between different groups of data. • Exploratory data analysis is mainly performed using the following methods: 1. Univariate analysis: Provides summary statistics for each field in the raw data set (or) a summary of only one variable. Ex: CDF, PDF, box plot 2. Bivariate analysis: Performed to find the relationship between each variable in the dataset and the target variable of interest (or) using two variables and finding the relationship between them. Ex: Box plot, violin plot. 3. Multivariate analysis: Performed to understand interactions between different fields in the dataset (or) finding interactions between more than two variables. • A box plot is a type of chart often used in exploratory data analysis to visually show the distribution of numerical data and its skewness by displaying the data quartiles (or percentiles) and the median. 1. Minimum score: The lowest score, excluding outliers. 2. Lower quartile: 25% of scores fall below the lower quartile value. 3. Median: The median marks the mid-point of the data and is shown by the line that divides the box into two parts. 4. Upper quartile: 75% of the scores fall below the upper quartile value. 5. Maximum score: The highest score, excluding outliers. 6. Whiskers: The upper and lower whiskers represent scores outside the middle 50%. 7. The interquartile range: This is the box of the box plot, showing the middle 50% of scores.
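The seven box plot components listed above can be computed directly. The sketch below makes two assumptions that the notes do not state: quartiles are taken with the "inclusive" interpolation method of `statistics.quantiles`, and outliers are the points more than 1.5 times the interquartile range beyond the quartiles, which is a common convention. The scores are made up.

```python
from statistics import quantiles


def box_plot_summary(scores, whisker_factor=1.5):
    """Return the numbers a box plot is drawn from."""
    data = sorted(scores)
    q1, median, q3 = quantiles(data, n=4, method="inclusive")
    iqr = q3 - q1                                  # height of the box
    lower_fence = q1 - whisker_factor * iqr
    upper_fence = q3 + whisker_factor * iqr
    inside = [x for x in data if lower_fence <= x <= upper_fence]
    outliers = [x for x in data if x < lower_fence or x > upper_fence]
    return {
        "minimum": inside[0],     # lowest score, excluding outliers
        "q1": q1,
        "median": median,
        "q3": q3,
        "maximum": inside[-1],    # highest score, excluding outliers
        "iqr": iqr,
        "outliers": outliers,
    }


# Hypothetical scores with one unusually high value.
summary = box_plot_summary([52, 55, 58, 60, 61, 63, 65, 67, 70, 95])
```

Other quartile conventions give slightly different values for Q1 and Q3, so the exact box edges depend on the method chosen.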

- 19. • Boxplots are also extremely useful for visually checking group differences. Suppose we have four groups of scores and we want to compare them by teaching method. Teaching method is our categorical grouping variable and score is the continuous outcome variable that the researchers measured. Build the Models • To build the model, the data should be clean and its content should be properly understood. The components of model building are as follows: a) Selection of model and variables b) Execution of model c) Model diagnostics and model comparison • Building a model is an iterative process. Most models consist of the following main steps: 1. Selection of a modeling technique and variables to enter in the model 2. Execution of the model 3. Diagnosis and model comparison Model and Variable Selection • For this phase, consider model performance and whether the project meets all the requirements to use the model, as well as other factors: 1. Must the model be moved to a production environment and, if so, would it be easy to implement? 2. How difficult is the maintenance of the model: how long will it remain relevant if left untouched? 3. Does the model need to be easy to explain? Model Execution • Various programming languages can be used for implementing the model. For model execution, Python provides libraries like StatsModels or Scikit-learn. These packages implement several of the most popular techniques.

- 20. • Coding a model is a nontrivial task in most cases, so having these libraries available can speed up the process. Following are the remarks on output: a) Model fit: R-squared or adjusted R-squared is used. b) Predictor variables have a coefficient: For a linear model this is easy to interpret. c) Predictor significance: Coefficients are great, but sometimes not enough evidence exists to show that the influence is there. • Linear regression works if we want to predict a value, but to classify something, classification models are used. The k-nearest neighbors method is one of the best-known methods. • The following commercial tools are used: 1. SAS Enterprise Miner: This tool allows users to run predictive and descriptive models based on large volumes of data from across the enterprise. 2. SPSS Modeler: It offers methods to explore and analyze data through a GUI. 3. Matlab: Provides a high-level language for performing a variety of data analytics, algorithms and data exploration. 4. Alpine Miner: This tool provides a GUI front end for users to develop analytic workflows and interact with Big Data tools and platforms on the back end. • Open source tools: 1. R and PL/R: PL/R is a procedural language for PostgreSQL with R. 2. Octave: A free software programming language for computational modeling that has some of the functionality of Matlab. 3. WEKA: It is a free data mining software package with an analytic workbench. The functions created in WEKA can be executed within Java code. 4. Python: A programming language that provides toolkits for machine learning and analysis. 5. SQL in-database implementations, such as MADlib, provide an alternative to in-memory desktop analytical tools. Model Diagnostics and Model Comparison • Try to build multiple models and then select the best one based on multiple criteria. Working with a holdout sample helps the user pick the best-performing model.
• In the holdout method, the data is split into two different datasets labeled as a training and a testing dataset. This can be a 60/40, 70/30 or 80/20 split. This technique is called the hold-out validation technique. Suppose we have a database with house prices as the dependent variable and two independent variables showing the square footage of the house and the number of rooms. Now, imagine this dataset has 30 rows. The whole idea is to build a model that can predict house prices accurately. • To train our model and then see how well it performs, we randomly select 20 of those rows and fit the model on them. The second step is to predict the values of the 10 rows that we excluded and measure how good our predictions were. • As a rule of thumb, experts suggest randomly sampling 80% of the data into the training set and 20% into the test set.
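The 30-row holdout example can be sketched as follows. Everything concrete here is an assumption made for illustration: the house data are randomly generated, only one independent variable (square footage) is used to keep the code short, and the model is a simple least-squares line rather than any particular library's implementation.

```python
import random


def make_rows(n=30, seed=0):
    """Generate hypothetical (square_feet, price) rows; the numbers are made up."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        square_feet = rng.uniform(500, 3000)
        price = 50_000 + 120 * square_feet + rng.gauss(0, 10_000)
        rows.append((square_feet, price))
    return rows


def holdout_split(rows, n_train, seed=42):
    """Shuffle a copy of the rows, then cut it into training and test sets."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    return shuffled[:n_train], shuffled[n_train:]


def fit_line(train):
    """Least-squares fit of price = slope * square_feet + intercept."""
    n = len(train)
    mean_x = sum(x for x, _ in train) / n
    mean_y = sum(y for _, y in train) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in train)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in train)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def mean_squared_error(slope, intercept, rows):
    """Average squared prediction error on the given rows."""
    return sum((y - (slope * x + intercept)) ** 2 for x, y in rows) / len(rows)


rows = make_rows()
train, test = holdout_split(rows, n_train=20)   # 20 rows to fit, 10 held out
slope, intercept = fit_line(train)
test_mse = mean_squared_error(slope, intercept, test)
```

The error is measured only on the 10 held-out rows, which the model never saw during fitting. Changing `seed` in `holdout_split` gives a different split and usually a different error estimate.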

- 21. • The holdout method has two basic drawbacks: 1. It requires setting aside part of the data as an extra dataset for testing. 2. It is a single train-and-test experiment, so the holdout estimate of the error rate will be misleading if we happen to get an "unfortunate" split. Presenting Findings and Building Applications • The team delivers final reports, briefings, code and technical documents. • In addition, the team may run a pilot project to implement the models in a production environment. • The last stage of the data science process is where the user's soft skills will be most useful. • It consists of presenting your results to the stakeholders and industrializing your analysis process for repetitive reuse and integration with other tools. Data Mining • Data mining refers to extracting or mining knowledge from large amounts of data. It is a process of discovering interesting patterns or knowledge from a large amount of data stored either in databases, data warehouses or other information repositories. Reasons for using data mining: 1. Knowledge discovery: To identify invisible correlations and patterns in the database. 2. Data visualization: To find a sensible way of displaying data. 3. Data correction: To identify and correct incomplete and inconsistent data. Functions of Data Mining • Different functions of data mining are characterization, association and correlation analysis, classification, prediction, clustering analysis and evolution analysis. 1. Characterization is a summarization of the general characteristics or features of a target class of data. For example, the characteristics of students can be produced, generating a profile of all first-year engineering students in the university. 2. Association is the discovery of association rules showing attribute-value conditions that occur frequently together in a given set of data. 3. Classification differs from prediction. Classification constructs a set of models that describe and distinguish data classes, whereas prediction builds a model to predict some missing data values. 4.
Clustering can also support taxonomy formation, that is, the organization of observations into a hierarchy of classes that group similar events together. 5. Data evolution analysis describes and models regularities for objects whose behaviour changes over time. It may include characterization, discrimination, association, classification or clustering of time-related data. Data mining tasks can be classified into two categories: descriptive and predictive. Predictive Mining Tasks • To make predictions, predictive mining tasks perform inference on the current data. Predictive analysis answers questions about the future, using historical data as the main basis for decisions.
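The association function described on this slide looks for conditions that occur frequently together. A minimal sketch of how such a rule can be scored is shown below. It uses support and confidence, which are standard association-rule measures but are not defined in these notes, and the shopping-basket transactions are invented for the example.

```python
# Hypothetical transactions: each set holds the items bought together.
transactions = [
    {"bread", "milk"},
    {"bread", "butter"},
    {"bread", "milk", "butter"},
    {"milk"},
    {"bread", "milk"},
]


def count_containing(itemset, transactions):
    """Number of transactions that contain every item of the itemset."""
    itemset = set(itemset)
    return sum(1 for t in transactions if itemset <= t)


def support(itemset, transactions):
    """Fraction of all transactions that contain the itemset."""
    return count_containing(itemset, transactions) / len(transactions)


def confidence(antecedent, consequent, transactions):
    """Of the transactions containing the antecedent, the share that also
    contain the consequent (the rule: antecedent -> consequent)."""
    both = count_containing(set(antecedent) | set(consequent), transactions)
    return both / count_containing(antecedent, transactions)


rule_support = support({"bread", "milk"}, transactions)            # 3 of 5 baskets
rule_confidence = confidence({"bread"}, {"milk"}, transactions)    # 3 of 4 bread baskets
```

A rule such as bread -> milk is usually kept only if both values exceed thresholds chosen by the analyst.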

- 22. • It involves the supervised learning functions used for the prediction of the target value. The methods that fall under this mining category are classification, time-series analysis and regression. • Data modeling is a prerequisite of predictive analysis, which works by using some variables to anticipate the unknown future values of other variables. • It provides organizations with actionable insights based on data. It provides an estimate of the likelihood of a future outcome. • To do this, a variety of techniques are used, such as machine learning, data mining, modeling and game theory. • Predictive modeling can, for example, help to identify risks or opportunities in the future. • Predictive analytics can be used in all departments, from predicting customer behaviour in sales and marketing, to forecasting demand for operations or determining risk profiles for finance. • A very well-known application of predictive analytics is credit scoring, used by financial services to determine the likelihood of customers making future credit payments on time. Determining such a risk profile requires a vast amount of data, including public and social data. • Historical and transactional data are used to identify patterns, and statistical models and algorithms are used to capture relationships in various datasets. • Predictive analytics has taken off in the big data era and there are many tools available for organisations to predict future outcomes. Descriptive Mining Task • Descriptive analytics is the conventional form of business intelligence and data analysis. It seeks to provide a depiction or "summary view" of facts and figures in an understandable format, to either inform or prepare data for further analysis. • Two primary techniques are used for reporting past events: data aggregation and data mining. • It presents past data in an easily digestible format for the benefit of a wide business audience.
• It is a set of techniques for reviewing and examining the data set to understand the data and analyze business performance. • Descriptive analytics helps organisations to understand what happened in the past. It helps to understand the relationship between products and customers. • The objective of this analysis is to understand what approach to take in the future. If we learn from past behaviour, it helps us to influence future outcomes. • It also helps to describe and present data in a format that can be easily understood by a wide variety of business readers. Architecture of a Typical Data Mining System • Data mining is the computational process of discovering patterns in huge data sets stored in databases or data warehouses, involving methods at the intersection of AI, machine learning, statistics and database systems. • Fig. 1.10.1 (See on next page) shows the typical architecture of a data mining system.

- 23. • The components of a data mining system are the data source, data warehouse server, data mining engine, pattern evaluation module, graphical user interface and knowledge base. • Database, data warehouse, WWW or other information repository: This is a set of databases, data warehouses, spreadsheets or other kinds of data repositories. Data cleaning and data integration techniques may be applied to the data. • Data warehouse server: Based on the user's data request, the data warehouse server is responsible for fetching the relevant data. • The knowledge base is helpful in the whole data mining process. It might be useful for guiding the search or evaluating the interestingness of the result patterns. The knowledge base might even contain user beliefs and data from user experiences that can be useful in the process of data mining. • The data mining engine is the core component of any data mining system. It consists of a number of modules for performing data mining tasks including association, classification, characterization, clustering, prediction, time-series analysis etc. • The pattern evaluation module is mainly responsible for measuring the interestingness of a pattern by using a threshold value. It interacts with the data mining engine to focus the search towards interesting patterns. • The graphical user interface module communicates between the user and the data mining system. This module helps the user use the system easily and efficiently without knowing the real complexity behind the process. • When the user specifies a query or a task, this module interacts with the data mining system and displays the result in an easily understandable manner.

- 24. Classification of DM System • Data mining systems can be categorized according to various parameters. These are database technology, machine learning, statistics, information science, visualization and other disciplines. • Fig. 1.10.2 shows the classification of DM systems. • Multi-dimensional view of data mining classification. Data Warehousing • Data warehousing is the process of constructing and using a data warehouse. A data warehouse is constructed by integrating data from multiple heterogeneous sources and supports analytical reporting, structured and/or ad hoc queries and decision making. Data warehousing involves data cleaning, data integration and data consolidation. • A data warehouse is a subject-oriented, integrated, time-variant and non-volatile collection of data in support of management's decision-making process. A data warehouse stores historical data for purposes of decision support. • A database is an application-oriented collection of data that is organized, structured, coherent, with minimum and controlled redundancy, which may be accessed by several users in due time.

- 25. • Data warehousing provides architectures and tools for business executives to systematically organize, understand and use their data to make strategic decisions. • A data warehouse is a subject-oriented collection of data that is integrated, time-variant and non-volatile, which may be used to support the decision-making process. • Data warehouses are databases that store and maintain analytical data separately from transaction-oriented databases for the purpose of decision support. Data warehouses separate analysis workload from transaction workload and enable an organization to consolidate data from several sources. • Data organization in data warehouses is based on areas of interest, on the major subjects of the organization: customers, products, activities etc. Databases organize data based on the enterprise applications that result from its functions. • The main objective of a data warehouse is to support the decision-making system, focusing on the subjects of the organization. The objective of a database is to support the operational system, and information is organized around applications and processes. • A data warehouse usually stores many months or years of data to support historical analysis. The data in a data warehouse is typically loaded through an extraction, transformation and loading (ETL) process from multiple data sources. • Databases and data warehouses are related but not the same. • A database is a way to record and access information from a single source. A database often handles real-time data to support day-to-day business processes like transaction processing. • A data warehouse is a way to store historical information from multiple sources to allow you to analyse and report on related data (e.g., your sales transaction data, mobile app data and CRM data). Unlike a database, the information isn't updated in real time and is better suited to analysis of broader trends.
• Modern data warehouses are moving toward an Extract, Load, Transform (ELT) architecture in which all or most data transformation is performed on the database that hosts the data warehouse. • Goals of data warehousing: 1. To help reporting as well as analysis. 2. Maintain the organization's historical information. 3. Be the foundation for decision making. "How are organizations using the information from data warehouses?" • Most organizations make use of this information for taking business decisions like: a) Increasing customer focus: It is possible by performing analysis of customer buying. b) Repositioning products and managing product portfolios by comparing the performance of last year's sales. c) Analysing operations and looking for sources of profit. d) Managing customer relationships, making environmental corrections and managing the cost of corporate assets. Characteristics of Data Warehouse 1. Subject oriented: Data are organized based on how the users refer to them. A data warehouse can be used to analyse a particular subject area. For example, "sales" can be a particular subject.

- 26. 2. Integrated: All inconsistencies regarding naming conventions and value representations are removed. For example, source A and source B may have different ways of identifying a product, but in a data warehouse, there will be only a single way of identifying a product. 3. Non-volatile: Data are stored in read-only format and do not change over time. Typical activities such as deletes, inserts and changes that are performed in an operational application environment are completely non-existent in a DW environment. 4. Time variant: Data are not current but normally time series. Historical information is kept in a data warehouse. For example, one can retrieve files from 3 months, 6 months, 12 months or even earlier from a data warehouse. Key characteristics of a Data Warehouse 1. Data is structured for simplicity of access and high-speed query performance. 2. End users are time-sensitive and desire speed-of-thought response times. 3. Large amounts of historical data are used. 4. Queries often retrieve large amounts of data, perhaps many thousands of rows. 5. Both predefined and ad hoc queries are common. 6. The data load involves multiple sources and transformations. Multitier Architecture of Data Warehouse • Data warehouse architecture is the design of an organization's data storage framework. A data warehouse architecture takes information from raw sets of data and stores it in a structured and easily digestible format. • A data warehouse system can be constructed in three ways. These approaches are classified by the number of tiers in the architecture. a) Single-tier architecture. b) Two-tier architecture. c) Three-tier architecture (Multi-tier architecture). • Single-tier warehouse architecture focuses on creating a compact data set and minimizing the amount of data stored. While it is useful for removing redundancies, it is not effective for organizations with large data needs and multiple streams.
• Two-tier warehouse structures separate the resources physically available from the warehouse itself. This is most commonly used in small organizations where a server is used as a data mart. While it is more effective at storing and sorting data, two-tier architecture is not scalable and it supports only a minimal number of end-users. Three tier (Multi-tier) architecture: • Three-tier architecture creates a more structured flow for data from raw sets to actionable insights. It is the most widely used architecture for data warehouse systems. • Fig. 1.11.1 shows three-tier architecture. Three-tier architecture is sometimes called multi-tier architecture. • The bottom tier is the database of the warehouse, where the cleansed and transformed data is loaded. The bottom tier is a warehouse database server.

- 27. • The middle tier is the application layer giving an abstracted view of the database. It arranges the data to make it more suitable for analysis. This is done with an OLAP server, implemented using the ROLAP or MOLAP model. • OLAP servers can interact with both relational databases and multidimensional databases, which lets them collect data better based on broader parameters. • The top tier is the front-end of an organization's overall business intelligence suite. The top tier is where the user accesses and interacts with data via queries, data visualizations and data analytics tools. • The top tier represents the front-end client layer. This client level includes the tools and Application Programming Interfaces (APIs) used for high-level data analysis, querying and reporting. Users can use reporting, query, analysis or data mining tools. Needs of Data Warehouse 1) Business user: Business users require a data warehouse to view summarized data from the past. Since these people are non-technical, the data may be presented to them in an elementary form. 2) Store historical data: A data warehouse is required to store time-variant data from the past. This data is used for various purposes. 3) Make strategic decisions: Some strategies may depend upon the data in the data warehouse. So, the data warehouse contributes to making strategic decisions. 4) For data consistency and quality: By bringing the data from different sources to a common place, the user can effectively bring uniformity and consistency to the data.

- 28. 5) Quick response time: A data warehouse has to be ready for somewhat unexpected loads and types of queries, which demands a significant degree of flexibility and quick response time. Benefits of Data Warehouse a) Understand business trends and make better forecasting decisions. b) Data warehouses are designed to perform well with enormous amounts of data. c) The structure of data warehouses is more accessible for end-users to navigate, understand and query. d) Queries that would be complex in many normalized databases could be easier to build and maintain in data warehouses. e) Data warehousing is an efficient method to manage demand for lots of information from lots of users. f) Data warehousing provides the capabilities to analyze a large amount of historical data. Difference between ODS and Data Warehouse Metadata • Metadata is simply defined as data about data. The data that is used to represent other data is known as metadata. In data warehousing, metadata is one of the essential aspects. • We can define metadata as follows: a) Metadata is the road-map to a data warehouse. b) Metadata in a data warehouse defines the warehouse objects. c) Metadata acts as a directory. This directory helps the decision support system to locate the contents of a data warehouse. • In a data warehouse, we create metadata for the data names and definitions of a given data warehouse. Along with this metadata, additional metadata is also created for time-stamping any extracted data and recording the source of extracted data. Why is metadata necessary in a data warehouse? a) First, it acts as the glue that links all parts of the data warehouse. b) Next, it provides information about the contents and structures to the developers. c) Finally, it opens the doors to the end-users and makes the contents recognizable in their terms.

- 29. • Fig. 1.11.2 shows warehouse metadata. Basic Statistical Descriptions of Data • For data preprocessing to be successful, it is essential to have an overall picture of our data. Basic statistical descriptions can be used to identify properties of the data and highlight which data values should be treated as noise or outliers. • For data preprocessing tasks, we want to learn about data characteristics regarding both central tendency and dispersion of the data. • Measures of central tendency include mean, median, mode and midrange. • Measures of data dispersion include quartiles, interquartile range (IQR) and variance. • These descriptive statistics are of great help in understanding the distribution of the data. Measuring the Central Tendency • We look at various ways to measure the central tendency of data, including: Mean, Weighted mean, Trimmed mean, Median, Mode and Midrange. 1. Mean : • The mean of a data set is the average of all the data values. The sample mean $\bar{x}$ is the point estimator of the population mean $\mu$.

- 30. Sample mean: $\bar{x} = \frac{\sum x_i}{n} = \frac{\text{Sum of the values of the } n \text{ observations}}{\text{Number of observations in the sample}}$ Population mean: $\mu = \frac{\sum x_i}{N} = \frac{\text{Sum of the values of the } N \text{ observations}}{\text{Number of observations in the population}}$ 2. Median : • The median of a data set is the value in the middle when the data items are arranged in ascending order. Whenever a data set has extreme values, the median is the preferred measure of central location. • The median is the measure of location most often reported for annual income and property value data. A few extremely large incomes or property values can inflate the mean. • For an odd number of observations: 7 observations = 26, 18, 27, 12, 14, 29, 19. Numbers in ascending order = 12, 14, 18, 19, 26, 27, 29 • The median is the middle value. Median = 19 • For an even number of observations: 8 observations = 26, 18, 29, 12, 14, 27, 30, 19. Numbers in ascending order = 12, 14, 18, 19, 26, 27, 29, 30 • The median is the average of the middle two values. Median = (19 + 26)/2 = 22.5 3. Mode: • The mode of a data set is the value that occurs with greatest frequency. The greatest frequency can occur at two or more different values. If the data have exactly two modes, the data are bimodal. If the data have more than two modes, the data are multimodal. • Weighted mean: Sometimes, each value in a set may be associated with a weight. The weights reflect the significance, importance or occurrence frequency attached to their respective values. • Trimmed mean: A major problem with the mean is its sensitivity to extreme (e.g., outlier) values. Even a small number of extreme values can corrupt the mean. The trimmed mean is the mean obtained after cutting off values at the high and low extremes. • For example, we can sort the values and remove the top and bottom 2% before computing the mean. We should avoid trimming too large a portion (such as 20%) at both ends as this can result in the loss of valuable information. • Holistic measure is a measure that must be computed on the entire data set as a whole.
It cannot be computed by partitioning the given data into subsets and merging the values obtained for the measure in each subset. Measuring the Dispersion of Data • An outlier is an observation that lies an abnormal distance from other values in a random sample from a population. • First quartile (Q1): The first quartile is the value, where 25% of the values are smaller than Q1 and 75% are larger. • Third quartile (Q3): The third quartile is the value, where 75 % of the values are smaller than Q3 and 25% are larger.
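The measures of central tendency on this slide can be checked with Python's `statistics` module. The two observation lists are the ones used in the median example above. The helper functions `weighted_mean`, `trimmed_mean` and `midrange`, and the small lists passed to them, are hypothetical names and values written for this sketch.

```python
from statistics import mean, median, multimode

odd_sample = [26, 18, 27, 12, 14, 29, 19]        # 7 observations
even_sample = [26, 18, 29, 12, 14, 27, 30, 19]   # 8 observations


def weighted_mean(values, weights):
    """Each value counts in proportion to its weight."""
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)


def trimmed_mean(values, proportion):
    """Mean after dropping the given proportion of values at each extreme."""
    data = sorted(values)
    k = int(len(data) * proportion)   # number of values cut from each end
    return mean(data[k:len(data) - k])


def midrange(values):
    """Average of the smallest and largest values."""
    return (min(values) + max(values)) / 2


median_odd = median(odd_sample)      # middle value of the sorted data
median_even = median(even_sample)    # average of the two middle values
modes = multimode([1, 2, 2, 3, 3])   # two modes, so this data set is bimodal
robust = trimmed_mean([1, 2, 3, 4, 100], 0.2)
```

In the last line the plain mean of `[1, 2, 3, 4, 100]` is 22, while the trimmed mean is 3. That gap shows how a single extreme value can distort the mean.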

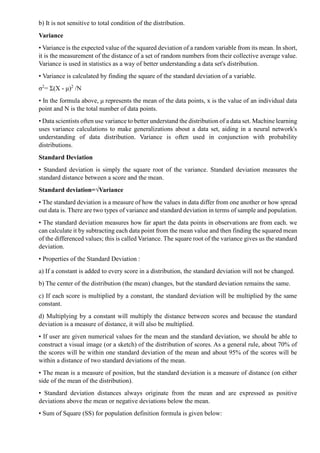

- 31. • The box plot is a useful graphical display for describing the behavior of the data in the middle as well as at the ends of the distributions. The box plot uses the median and the lower and upper quartiles. If the lower quartile is Q1 and the upper quartile is Q3, then the difference (Q3 - Q1) is called the interquartile range or IQR. • Range: Difference between the highest and lowest observed values. Variance : • The variance is a measure of variability that utilizes all the data. It is based on the difference between the value of each observation ($x_i$) and the mean ($\bar{x}$ for a sample, $\mu$ for a population). • The variance is the average of the squared differences between each data value and the mean. Standard Deviation : • The standard deviation of a data set is the positive square root of the variance. It is measured in the same units as the data, making it more easily interpreted than the variance. • The standard deviation is computed as follows: $s = \sqrt{s^2}$ for a sample and $\sigma = \sqrt{\sigma^2}$ for a population, where $s^2$ and $\sigma^2$ are the sample and population variances.
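The range, variance and standard deviation described on this slide can be computed by hand and compared with Python's `statistics` module. The sketch assumes the usual definitions, which the notes do not spell out: the population variance divides by N and the sample variance divides by n - 1. The data values are made up.

```python
import math
from statistics import pstdev, pvariance, stdev, variance

data = [2, 4, 4, 4, 5, 5, 7, 9]   # hypothetical observations, mean = 5


def population_variance(values):
    """Average squared difference from the mean (divide by N)."""
    mu = sum(values) / len(values)
    return sum((x - mu) ** 2 for x in values) / len(values)


def sample_variance(values):
    """Squared differences from the sample mean, divided by n - 1."""
    x_bar = sum(values) / len(values)
    return sum((x - x_bar) ** 2 for x in values) / (len(values) - 1)


value_range = max(data) - min(data)          # highest minus lowest value
pop_var = population_variance(data)          # 32 / 8
samp_var = sample_variance(data)             # 32 / 7
pop_sd = math.sqrt(pop_var)                  # positive square root of the variance
samp_sd = math.sqrt(samp_var)
```

The library functions `pvariance`, `variance`, `pstdev` and `stdev` give the same results and are the more convenient choice in practice.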

- 32. Difference between Standard Deviation and Variance Graphic Displays of Basic Statistical Descriptions • There are many types of graphs for the display of data summaries and distributions, such as bar charts, pie charts, line graphs, boxplots, histograms, quantile plots and scatter plots. 1. Scatter diagram • Also called scatter plot or X-Y graph. • While working with statistical data it is often observed that there are connections between sets of data. For example, the mass and height of persons are related: generally, the taller the person, the greater his/her mass. • To find out whether or not two sets of data are connected, scatter diagrams can be used. For example, a scatter diagram can show the relationship between children's age and height. • A scatter diagram is a tool for analyzing the relationship between two variables. One variable is plotted on the horizontal axis and the other is plotted on the vertical axis. • The pattern of their intersecting points can graphically show relationship patterns. Commonly a scatter diagram is used to look for evidence for or against a suspected cause-and-effect relationship. • While a scatter diagram shows relationships, it does not by itself prove that one variable causes the other. In addition to showing possible cause and effect relationships, a scatter diagram can show that two

- 33. variables stem from a common cause that is unknown, or that one variable can be used as a surrogate for the other. 2. Histogram • A histogram is used to summarize discrete or continuous data. In a histogram, the data are grouped into ranges (e.g. 10-19, 20-29) and then plotted as adjacent bars. Each bar represents a range of data. • To construct a histogram from a continuous variable, you first need to split the data into intervals, called bins. Each bin holds the number of scores in the data set that fall within that bin. • The width of each bar is proportional to the width of its category and the height is proportional to the frequency or percentage of that category. 3. Line graphs • Line graphs are also called stick graphs. They show relationships between variables. • Line graphs are usually used to show time series data, that is, how one or more variables vary over a continuous period of time. They can also be used to compare two different variables over time. • Typical examples of the types of data that can be presented using line graphs are monthly rainfall and annual unemployment rates. • Line graphs are particularly useful for identifying patterns and trends in the data such as seasonal effects, large changes and turning points. Fig. 1.12.1 shows a line graph. • As well as time series data, line graphs can also be appropriate for displaying data that are measured over other continuous variables such as distance. • For example, a line graph could be used to show how pollution levels vary with increasing distance from a source or how the level of a chemical varies with depth of soil. • In a line graph, the x-axis represents the continuous variable (for example year or distance from the initial measurement) whilst the y-axis has a scale and indicates the measurement. 
• Several data series can be plotted on the same line chart, which is particularly useful for analysing and comparing the trends in different datasets. • A line graph is often used to visualize the rate of change of a quantity. It is most useful when the given data has peaks and valleys. Line graphs are very simple to draw and quite convenient to interpret.
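The binning step described for histograms on this slide can be sketched as follows. This is a minimal illustration, assuming fixed-width bins that start at multiples of the bin width; the function name `bin_counts` and the ages are hypothetical. Bins with no observations are simply omitted here, whereas a plotted histogram would show them as empty.

```python
from collections import Counter


def bin_counts(values, bin_width=10):
    """Count how many values fall into each fixed-width bin.

    Each key is the lower edge of a bin, e.g. 20 stands for the range 20-29
    when bin_width is 10.
    """
    counts = Counter((v // bin_width) * bin_width for v in values)
    return dict(sorted(counts.items()))


# Hypothetical ages, chosen only for illustration.
ages = [12, 15, 19, 21, 24, 25, 28, 33, 37, 41]
for start, freq in bin_counts(ages).items():
    # One '#' per observation gives a simple text histogram.
    print(f"{start}-{start + 9}: {'#' * freq}")
```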

- 34. 4. Pie charts • A type of graph in which a circle is divided into sectors that each represent a proportion of the whole. Each sector shows the relative size of each value. • A pie chart displays data, information and statistics in an easy to read "pie slice" format, with varying slice sizes telling how much of one data element exists. • A pie chart is also known as a circle graph. The bigger the slice, the more of that particular data was gathered. The main use of a pie chart is to show how parts compare to the whole. Fig. 1.12.2 shows a pie chart. • Various applications of pie charts can be found in business, school and at home. In business, pie charts can be used to show the success or failure of certain products or services. • At school, pie chart applications include showing how much time is allotted to each subject. At home, pie charts can be useful to see how monthly income is spent on different needs. • Reading a pie chart is as easy as figuring out which slice of an actual pie is the biggest. Limitation of pie chart: • It is difficult to tell the difference between estimates of similar size. Error bars or confidence limits cannot be shown on a pie graph. Legends and labels on pie graphs are hard to align and read. • The human visual system is more efficient at perceiving and discriminating between lines and line lengths than two-dimensional areas and angles. • Pie graphs work poorly when precise comparisons between values are needed.
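To make the "proportion of the whole" idea concrete, the sketch below converts category totals into sector angles, using the fact that a full circle is 360 degrees. The function name `sector_angles` and the household expenses are assumptions made for this example.

```python
def sector_angles(amounts):
    """Map each category to the angle (in degrees) of its pie sector."""
    total = sum(amounts.values())
    if total <= 0:
        raise ValueError("total must be positive to form a pie chart")
    # Each sector's angle is its share of the total times 360 degrees.
    return {label: 360 * value / total for label, value in amounts.items()}


# Hypothetical monthly expenses, chosen only for illustration.
expenses = {"Rent": 500, "Food": 300, "Transport": 100, "Other": 100}
for label, angle in sector_angles(expenses).items():
    share = 100 * angle / 360
    print(f"{label}: {angle:.1f} degrees ({share:.0f}% of the whole)")
```

Note that "Transport" and "Other" receive equal angles, which is the situation where slices of similar size become hard to tell apart by eye.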

- 35. Two Marks Questions with Answers Q.1 What is data science? Ans. • Data science is an interdisciplinary field that seeks to extract knowledge or insights from various forms of data. • At its core, data science aims to discover and extract actionable knowledge from data that can be used to make sound business decisions and predictions. • Data science uses advanced analytical theory and various methods, such as time series analysis, for predicting the future. Q.2 Define structured data. Ans. Structured data is arranged in a row and column format, which makes it easy for applications to retrieve and process. A database management system is used for storing structured data. The term structured data refers to data that is identifiable because it is organized in a structure. Q.3 What is a data set? Ans. A data set is a collection of related records or information. The information may be on some entity or some subject area. Q.4 What is unstructured data? Ans. Unstructured data is data that does not follow a specified format. Rows and columns are not used for unstructured data. Therefore it is difficult to retrieve required information. Unstructured data has no identifiable structure. Q.5 What is machine-generated data? Ans. Machine-generated data is information that is created without human interaction as a result of a computer process or application activity. This means that data entered manually by an end-user is not considered machine-generated. Q.6 Define streaming data. Ans. Streaming data is data that is generated continuously by thousands of data sources, which typically send in the data records simultaneously and in small sizes (on the order of kilobytes). Q.7 List the stages of data science process. Ans. Stages of data science process are as follows: 1. Discovery or Setting the research goal 2. Retrieving data 3. Data preparation 4. Data exploration 5. Data modeling 6. Presentation and automation Q.8 What are the advantages of data repositories? 
Ans.: Advantages are as follows:

- 36. i. Data is preserved and archived. ii. Data isolation allows for easier and faster data reporting. iii. Database administrators have an easier time tracking problems. iv. There is value in storing and analyzing data. Q.9 What is data cleaning? Ans. Data cleaning means removing inconsistent data or noise and collecting the necessary information from a collection of interrelated data. Q.10 What is outlier detection? Ans. Outlier detection is the process of detecting and subsequently excluding outliers from a given set of data. The easiest way to find outliers is to use a plot or a table with the minimum and maximum values. Q.11 Explain exploratory data analysis. Ans. Exploratory Data Analysis (EDA) is a general approach to exploring datasets by means of simple summary statistics and graphic visualizations in order to gain a deeper understanding of data. EDA is used by data scientists to analyze and investigate data sets and summarize their main characteristics, often employing data visualization methods. Q.12 Define data mining. Ans. Data mining refers to extracting or mining knowledge from large amounts of data. It is a process of discovering interesting patterns or knowledge from a large amount of data stored in databases, data warehouses or other information repositories. Q.13 What are the three challenges to data mining regarding data mining methodology? Ans. Challenges to data mining regarding data mining methodology include the following: 1. Mining different kinds of knowledge in databases, 2. Interactive mining of knowledge at multiple levels of abstraction, 3. Incorporation of background knowledge. Q.14 What is predictive mining? Ans. Predictive mining tasks perform inference on the current data in order to make predictions. Predictive analysis answers questions about the future, using historical data as the main basis for decisions. Q.15 What is data cleaning? Ans. 
Data cleaning means removing inconsistent data or noise and collecting the necessary information from a collection of interrelated data. Q.16 List the five primitives for specifying a data mining task. Ans. 1. The set of task-relevant data to be mined 2. The kind of knowledge to be mined 3. The background knowledge to be used in the discovery process

- 37. 4. The interestingness measures and thresholds for pattern evaluation 5. The expected representation for visualizing the discovered pattern. Q.17 List the stages of data science process. Ans. Data science process consists of six stages: 1. Discovery or Setting the research goal 2. Retrieving data 3. Data preparation 4. Data exploration 5. Data modeling 6. Presentation and automation Q.18 What is data repository? Ans. A data repository is also known as a data library or data archive. This is a general term for a data set isolated to be mined for data reporting and analysis. The data repository is a large database infrastructure, made up of several databases that collect, manage and store data sets for data analysis, sharing and reporting. Q.19 List the data cleaning tasks? Ans. Data cleaning tasks are as follows: 1. Data acquisition and metadata 2. Fill in missing values 3. Unified date format 4. Converting nominal to numeric 5. Identify outliers and smooth out noisy data 6. Correct inconsistent data Q.20 What is Euclidean distance? Ans. Euclidean distance is used to measure the similarity between observations: the smaller the distance, the more similar the observations. It is calculated as the square root of the sum of the squared differences between the corresponding coordinates of two points.
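The Euclidean distance from Q.20 can be sketched in a few lines. This is an illustrative implementation, assuming each observation is a sequence of numeric coordinates of equal length; the function name `euclidean_distance` and the sample points are hypothetical.

```python
import math


def euclidean_distance(p, q):
    """Square root of the sum of squared coordinate differences."""
    if len(p) != len(q):
        raise ValueError("both points must have the same number of coordinates")
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))


# Hypothetical observations with two attributes each.
print(euclidean_distance((0, 0), (3, 4)))   # 5.0
print(euclidean_distance((1, 2), (1, 2)))   # 0.0, identical observations
```

Python 3.8 and later also provide `math.dist(p, q)`, which computes the same quantity.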

- 38. UNIT II : Describing Data Syllabus Types of Data - Types of Variables - Describing Data with Tables and Graphs - Describing Data with Averages - Describing Variability - Normal Distributions and Standard (z) Scores. Types of Data • Data is a collection of facts and figures which relay something specific, but which are not organized in any way. It can be numbers, words, measurements, observations or even just descriptions of things. We can say data is the raw material in the production of information. • A data set is a collection of related records or information. The information may be on some entity or some subject area. • A data set is a collection of data objects and their attributes. Attributes capture the basic characteristics of an object. • Each row of a data set is called a record. Each data set also has multiple attributes, each of which gives information on a specific characteristic. Qualitative and Quantitative Data • Data can broadly be divided into the following two types: Qualitative data and quantitative data. Qualitative data: • Qualitative data provides information about the quality of an object or information which cannot be measured. Qualitative data cannot be expressed as a number. Data that represent nominal scales such as gender, economic status, religious preference are usually considered to be qualitative data. • Qualitative data is data concerned with descriptions, which can be observed but cannot be computed. Qualitative data is also called categorical data. Qualitative data can be further subdivided into two types as follows: 1. Nominal data 2. Ordinal data Quantitative data: • Quantitative data focuses on numbers and mathematical calculations and can be calculated and computed. • Quantitative data are anything that can be expressed as a number or quantified. Examples of quantitative data are scores on achievement tests, number of hours of study or weight of a subject. 
These data may be represented by ordinal, interval or ratio scales and lend themselves to most statistical manipulation.

- 39. • There are two types of quantitative data: Interval data and ratio data. Difference between Qualitative and Quantitative Data Advantages and Disadvantages of Qualitative Data 1. Advantages: • It helps in-depth analysis • Qualitative data helps market researchers to understand the mindset of their customers. • Avoids pre-judgments 2. Disadvantages: • Time consuming • Not easy to generalize • Difficult to make systematic comparisons Advantages and Disadvantages of Quantitative Data 1. Advantages: • Easier to summarize and make comparisons. • It is often easier to obtain large sample sizes • It is less time consuming since it is based on statistical analysis. 2. Disadvantages: • The cost is relatively high. • The data the researcher receives cannot always be generalized accurately.

- 40. Ranked Data • Ranked data is a variable whose values are taken from an ordered set and recorded in order of magnitude. Ranked data is also called ordinal data. • Ordinal represents the "order." Ordinal data is a kind of qualitative or categorical data. It can be grouped, named and also ranked. • Characteristics of the Ranked data: a) The ordinal data shows the relative ranking of the variables b) It identifies and describes the magnitude of a variable c) Along with the information provided by the nominal scale, ordinal scales give the rankings of those variables d) The interval properties are not known e) The surveyors can quickly analyze the degree of agreement concerning the identified order of variables • Examples: a) University ranking : 1st , 9th , 87th ... b) Socioeconomic status: poor, middle class, rich. c) Level of agreement: yes, maybe, no. d) Time of day: dawn, morning, noon, afternoon, evening, night Scale of Measurement • Scales of measurement are also called levels of measurement. Each level of measurement scale has specific properties that determine which statistical analyses can be used. • There are four different scales of measurement. Any data can be defined as being on one of the four scales. The four types of scales are: Nominal, ordinal, interval and ratio. Nominal • Nominal data is the first level of measurement scale, in which the numbers serve as "tags" or "labels" to classify or identify the objects. • Nominal data usually deals with non-numeric variables or with numbers that do not have any numeric value. While developing statistical models, nominal data are usually transformed before building the model. • Nominal variables are also known as categorical variables. Characteristics of nominal data: 1. A nominal data variable is classified into two or more categories. In this measurement mechanism, the answer should fall into one of the classes. 2. It is qualitative. The numbers are used here to identify the objects. 3. 
The numbers don't define the object characteristics. The only permissible aspect of numbers in the nominal scale is "counting".