CURE Clustering Algorithm

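The document summarizes CURE (Clustering Using REpresentatives), a hierarchical clustering algorithm that represents each cluster by a constant number of well-scattered points, which are then shrunk toward the cluster centroid by a fixed fraction. This addresses the limitations of centroid-based methods, which tend to favor spherical clusters of similar size, and of all-points methods, which are sensitive to outliers and to chaining between clusters. To handle large datasets, CURE draws a random sample and partitions it, partially clustering each partition before clustering the results. Experimental results show that CURE detects non-spherical and variably sized clusters better than the methods it was compared against, and that its sampling approach gives faster execution times on large databases.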


OLAP

OLAPSlideshare OLAP provides multidimensional analysis of large datasets to help solve business problems. It uses a multidimensional data model to allow for drilling down and across different dimensions like students, exams, departments, and colleges. OLAP tools are classified as MOLAP, ROLAP, or HOLAP based on how they store and access multidimensional data. MOLAP uses a multidimensional database for fast performance while ROLAP accesses relational databases through metadata. HOLAP provides some analysis directly on relational data or through intermediate MOLAP storage. Web-enabled OLAP allows interactive querying over the internet.

Cure, Clustering Algorithm

- 1. CURE: An Efficient Clustering Algorithm for Large Databases. Possamai Lino, 800509, Department of Computer Science, University of Venice, www.possamai.it/lino. Data Mining Lecture, September 13th, 2006

- 2. Introduction The main clustering algorithms use either partitioning or hierarchical agglomerative techniques. They work in opposite directions: the former starts with one big cluster and, step by step, splits existing clusters until the desired number of clusters is reached; the latter starts with single-point clusters and, step by step, merges clusters until the desired number is reached. CURE uses the second (agglomerative) approach.

- 3. Drawbacks of Traditional Clustering Algorithms The result of the clustering process depends on how each cluster is represented. The centroid-based approach (using d_mean) considers only one point, the cluster centroid, as representative of a cluster. Other approaches, for example the all-points approach (based on d_min), use every point in the cluster to represent it. The all-points approach is extremely sensitive to outliers and to slight changes in the position of data points, while the centroid-based approach does not work well for non-spherical or arbitrarily shaped clusters.
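To make the two representations concrete, here is a minimal Python sketch of the two inter-cluster distances, assuming points are tuples of floats and Euclidean distance. The function names `centroid`, `d_mean` and `d_min` are illustrative, not taken from the paper's code, and the data are hypothetical.

```python
import math

def centroid(cluster):
    """Coordinate-wise mean of a list of points (tuples of floats)."""
    dim = len(cluster[0])
    return tuple(sum(p[i] for p in cluster) / len(cluster) for i in range(dim))

def d_mean(a, b):
    """Centroid-based distance: compares only the two cluster means."""
    return math.dist(centroid(a), centroid(b))

def d_min(a, b):
    """All-points distance: the closest pair of points, one from each cluster."""
    return min(math.dist(p, q) for p in a for q in b)

# Two elongated clusters lying end to end on the x-axis (hypothetical data).
left = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.0)]
right = [(4.0, 0.0), (5.0, 0.0), (6.0, 0.0), (7.0, 0.0)]
print(d_mean(left, right))  # 4.0: measured between the means (1.5, 0) and (5.5, 0)
print(d_min(left, right))   # 1.0: measured between (3, 0) and (4, 0)
```

A single stray point added between the two clusters would change d_min far more than d_mean, which is the sensitivity trade-off the slide describes.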

- 4. Contribution of CURE, ideas CURE employs a new hierarchical algorithm that adopts a middle ground between the centroid-based and all-points approaches. A constant number c of well-scattered points in a cluster are chosen as representatives; these points capture the shape and extent of the cluster. At each step of CURE, the two clusters with the closest pair of representative points are merged. Random sampling and partitioning are used to reduce the size of the input data set.

- 6. Random Sampling When the entire data set is used as input to the algorithm, execution time can be high because of I/O costs. Random sampling addresses this problem. The authors show that with a sample of only 2.5% of the original data set, CURE produces better results than traditional algorithms, runs faster, and preserves the geometry of the clusters. To speed up the algorithm, the random sample is sized to fit in main memory. The overhead of generating the random sample is very small compared to the time needed to cluster it.
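The slide does not say how the sample is drawn. One standard single-pass method, which does not need to know the data set size in advance, is reservoir sampling (Vitter's Algorithm R). The sketch below assumes the data arrive as any Python iterable and is an illustration of the idea, not CURE's own sampling code; the 100,000-record input is hypothetical.

```python
import random

def reservoir_sample(stream, k, rng=None):
    """Uniform random sample of k items from an iterable, in one pass.

    Algorithm R: keep the first k items, then let the i-th item (0-based)
    replace a random slot with probability k / (i + 1).
    """
    if rng is None:
        rng = random.Random()
    sample = []
    for i, item in enumerate(stream):
        if i < k:
            sample.append(item)
        else:
            j = rng.randint(0, i)  # uniform over the i + 1 items seen so far
            if j < k:
                sample[j] = item
    return sample

# Hypothetical example: a 2.5% sample of 100,000 record ids is 2,500 items.
sample = reservoir_sample(range(100_000), 2_500, random.Random(42))
print(len(sample))  # 2500
```

Because only the k sampled items are kept, memory use is fixed by the sample size, which matches the slide's requirement that the sample fit in main memory.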

- 7. Partitioning sample When the clusters in the data set become less dense, a small random sample is no longer enough, because it leads to poor clustering quality; the sample size must therefore be increased. The authors propose a simple partitioning scheme to speed up CURE on such larger samples. The scheme follows these steps: (1) partition the n sample points into p partitions of n/p points each; (2) partially cluster each partition until the number of clusters in it reduces to n/(p*q), for some q > 1; (3) run a second clustering pass over the n/q partial clusters produced by all partitions. The advantage of partitioning the input is reduced execution time. Each partition of n/p points should fit in main memory so that the partial clustering runs efficiently.
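The arithmetic of the scheme can be checked with a small Python sketch. The helper names are hypothetical and the parameter values are arbitrary, not those used in the paper's experiments. The sketch only computes the sizes and splits the sample; the partial clustering itself is the hierarchical procedure of slides 8 and 9.

```python
def split_into_partitions(points, p):
    """Split a list into p contiguous partitions of (almost) equal size."""
    size, extra = divmod(len(points), p)
    parts, start = [], 0
    for i in range(p):
        end = start + size + (1 if i < extra else 0)
        parts.append(points[start:end])
        start = end
    return parts

def partition_plan(n, p, q):
    """Sizes used by the two-pass partitioning scheme on a sample of n points.

    Returns (points per partition, clusters left per partition after the
    first pass, total clusters handed to the second pass).
    """
    if p < 1 or q <= 1:
        raise ValueError("need p >= 1 and q > 1")
    per_partition = n / p
    clusters_per_partition = n / (p * q)
    total_after_first_pass = p * clusters_per_partition  # equals n / q
    return per_partition, clusters_per_partition, total_after_first_pass

# Hypothetical parameters, chosen only to make the arithmetic easy to follow.
print(partition_plan(10_000, 10, 5))  # (1000.0, 200.0, 2000.0)
```

With q = 5, the second pass starts from one fifth as many clusters as there are sample points, which is where the time saving comes from.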

- 8. Hierarchical Clustering Algorithm A constant number c of well-scattered points in a cluster are chosen as representatives; these points capture the shape and extent of the cluster. The points are then shrunk toward the mean of the cluster by a fraction α. If α = 0, the algorithm behaves like the all-points representation; if α = 1, CURE reduces to the centroid-based approach. Outliers are typically farther away from the mean of the cluster, so shrinking moves them a larger distance and dampens their effect.
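The slide does not spell out how the well-scattered points are picked. The sketch below assumes a greedy farthest-point rule (first the point farthest from the mean, then repeatedly the point farthest from those already chosen) and Euclidean distance. The shrinking step applies the fraction α described above, moving each representative p to p + α(mean − p). Function names and data are hypothetical.

```python
import math

def mean(points):
    """Coordinate-wise mean of a list of points (tuples of floats)."""
    dim = len(points[0])
    return tuple(sum(p[i] for p in points) / len(points) for i in range(dim))

def spread(p, chosen, centre):
    """Greedy score: distance to the mean for the first pick, otherwise the
    distance to the nearest point already chosen."""
    if not chosen:
        return math.dist(p, centre)
    return min(math.dist(p, s) for s in chosen)

def scattered_points(points, c):
    """Pick up to c well-scattered points by greedy farthest-point selection."""
    centre = mean(points)
    remaining, chosen = list(points), []
    while remaining and len(chosen) < c:
        best = max(range(len(remaining)),
                   key=lambda i: spread(remaining[i], chosen, centre))
        chosen.append(remaining.pop(best))
    return chosen

def shrink(reps, centre, alpha):
    """Move each representative a fraction alpha of the way toward the mean."""
    return [tuple(r + alpha * (m - r) for r, m in zip(rep, centre)) for rep in reps]

# Hypothetical square cluster with one point in the middle.
square = [(0.0, 0.0), (4.0, 0.0), (0.0, 4.0), (4.0, 4.0), (2.0, 2.0)]
reps = scattered_points(square, c=4)  # the four corners
print(shrink(reps, mean(square), alpha=0.5))
# [(1.0, 1.0), (3.0, 3.0), (3.0, 1.0), (1.0, 3.0)]
```

With α = 0 the representatives stay on the cluster boundary (all-points-like behaviour); with α = 1 they all collapse onto the mean (centroid-like behaviour).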

- 9. Hierarchical Clustering Algorithm At each step of CURE, the two clusters with the closest pair of representative points are merged. As the number of points in each cluster grows, choosing c new representative points from all the points in the merged cluster can become very slow. For this reason, a faster procedure is proposed: instead of choosing c new points from among all the points in the merged cluster, CURE selects c points from the 2c scattered points of the two clusters being merged. The resulting points are still fairly well scattered.
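A minimal Python sketch of one merge step follows. It assumes each cluster is stored as a dict with hypothetical keys `mean`, `size` and `scattered` (its unshrunk scattered points), Euclidean distance, and the same greedy farthest-point rule as before. Clusters are compared through their shrunk representatives, and the merged cluster's new scattered points are picked only from the 2c old ones, as the slide describes. The merged mean is the size-weighted average of the two means, which equals the mean of the union.

```python
import math

def shrink(points, centre, alpha):
    """Move each point a fraction alpha of the way toward centre."""
    return [tuple(x + alpha * (m - x) for x, m in zip(p, centre)) for p in points]

def cluster_distance(u, v, alpha):
    """Closest pair of shrunk representatives, one from each cluster."""
    reps_u = shrink(u["scattered"], u["mean"], alpha)
    reps_v = shrink(v["scattered"], v["mean"], alpha)
    return min(math.dist(a, b) for a in reps_u for b in reps_v)

def spread(p, chosen, centre):
    """Farthest-point score: distance to the mean for the first pick,
    otherwise distance to the nearest point already chosen."""
    if not chosen:
        return math.dist(p, centre)
    return min(math.dist(p, s) for s in chosen)

def pick_scattered(pool, centre, c):
    """Greedily choose up to c well-scattered points from pool."""
    remaining, chosen = list(pool), []
    while remaining and len(chosen) < c:
        best = max(range(len(remaining)),
                   key=lambda i: spread(remaining[i], chosen, centre))
        chosen.append(remaining.pop(best))
    return chosen

def merge(u, v, c):
    """Merge two clusters; new scattered points come from the 2c old ones only."""
    size = u["size"] + v["size"]
    mean = tuple((u["size"] * a + v["size"] * b) / size
                 for a, b in zip(u["mean"], v["mean"]))
    pool = u["scattered"] + v["scattered"]  # at most 2c points, not the whole cluster
    return {"mean": mean, "size": size, "scattered": pick_scattered(pool, mean, c)}

def merge_closest(clusters, c, alpha):
    """One merge step: combine the two clusters with the closest representatives."""
    pairs = [(i, j) for i in range(len(clusters)) for j in range(i + 1, len(clusters))]
    i, j = min(pairs, key=lambda ij: cluster_distance(clusters[ij[0]], clusters[ij[1]], alpha))
    rest = [cl for k, cl in enumerate(clusters) if k not in (i, j)]
    return rest + [merge(clusters[i], clusters[j], c)]

# Hypothetical clusters, each with at most c = 2 unshrunk scattered points.
clusters = [
    {"mean": (0.5, 0.0), "size": 2, "scattered": [(0.0, 0.0), (1.0, 0.0)]},
    {"mean": (3.5, 0.0), "size": 2, "scattered": [(3.0, 0.0), (4.0, 0.0)]},
    {"mean": (20.0, 0.0), "size": 1, "scattered": [(20.0, 0.0)]},
]
clusters = merge_closest(clusters, c=2, alpha=0.5)
print(clusters[-1])  # {'mean': (2.0, 0.0), 'size': 4, 'scattered': [(0.0, 0.0), (4.0, 0.0)]}
```

Repeating `merge_closest` until the desired number of clusters remains gives the agglomerative loop; a real implementation would keep the pairwise distances in a heap instead of recomputing them every step.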

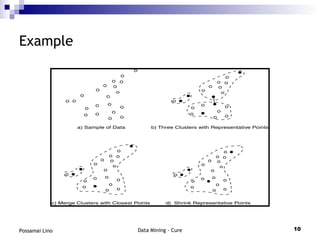

- 10. Example

- 11. Handling Outliers CURE deals with outliers at several stages. Random sampling filters out the majority of outliers. Because of their larger distance from other points, outliers tend to merge with other points less often and typically grow much more slowly than actual clusters, so a group of outliers usually contains far fewer points than a real cluster. Outliers are therefore removed in two phases: first, clusters that grow very slowly are identified and eliminated; second, at the end of the merging process, very small clusters are eliminated.
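The two elimination phases can be sketched in Python as below. The trigger (pruning once the number of clusters has dropped to a fraction of the initial count) and the cut-off values are assumptions chosen for illustration, not parameters stated on the slide; clusters are plain lists of points.

```python
def prune_slow_growers(clusters, initial_count, fraction=1/3, min_size=3):
    """Phase 1 (during merging): once the number of clusters has dropped to
    `fraction` of the initial count, drop clusters that are still tiny,
    since they have grown slowly. The defaults are assumed values."""
    if len(clusters) > fraction * initial_count:
        return clusters  # too early: keep merging first
    return [cl for cl in clusters if len(cl) >= min_size]

def drop_small_clusters(clusters, min_size):
    """Phase 2 (end of merging): discard clusters smaller than min_size."""
    return [cl for cl in clusters if len(cl) >= min_size]

# Hypothetical state after some merging: two real clusters, two outlier groups.
clusters = [[(0.0, 0.0)] * 10, [(9.0, 9.0)], [(5.0, 5.0), (5.0, 6.0)], [(1.0, 1.0)] * 5]
print([len(cl) for cl in prune_slow_growers(clusters, initial_count=20)])  # [10, 5]
```

Pruning too early would discard small real clusters that simply have not merged yet, which is why the first phase waits until many merges have already happened.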

- 12. Labeling Data on Disk Sampling the initial data set excludes the majority of data points, and these points must then be assigned to the clusters created in the earlier phases. Each cluster is represented by a fraction of randomly selected representative points, and each point excluded in the first phase is assigned to the cluster containing the closest representative point. This differs from BIRCH, which uses only the cluster centroids for “partitioning” the remaining points. Since the space defined by a single centroid is a sphere, BIRCH's labeling phase tends to split clusters that have non-spherical shapes or non-uniform sizes.
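The labeling rule is easy to sketch in Python. The example assumes Euclidean distance and a dict mapping hypothetical cluster ids to their representative points. Passing only centroids mimics the centroid-based labeling the slide attributes to BIRCH; this is a simplification for comparison, not BIRCH's actual implementation.

```python
import math

def label_point(point, reps_by_cluster):
    """Return the id of the cluster owning the representative nearest to point."""
    best_id, best_d = None, math.inf
    for cluster_id, reps in reps_by_cluster.items():
        for r in reps:
            d = math.dist(point, r)
            if d < best_d:
                best_id, best_d = cluster_id, d
    return best_id

def label_stream(points, reps_by_cluster):
    """Label points lazily, e.g. while reading them from disk one at a time."""
    for p in points:
        yield p, label_point(p, reps_by_cluster)

# Hypothetical clusters: A is elongated along the x-axis, B is compact.
reps = {"A": [(0.0, 0.0), (5.0, 0.0), (10.0, 0.0)], "B": [(12.0, 3.0)]}
centroids = {"A": [(5.0, 0.0)], "B": [(12.0, 3.0)]}
print(label_point((10.0, 1.0), reps))       # A: nearest representative is (10, 0)
print(label_point((10.0, 1.0), centroids))  # B: with centroids only, A's far end is lost
```

The point (10, 1) clearly belongs to the end of the elongated cluster A, but a single centroid at (5, 0) cannot "see" that end, so the centroid-only labeling hands it to B and splits A.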

- 13. Experimental Results In the experimental phase, CURE was compared with other clustering algorithms on the same data sets, and the results were plotted. The algorithms used for comparison are BIRCH and MST (Minimum Spanning Tree, which is equivalent to CURE with a shrink factor of 0). Data set 1 consists of one big circular cluster, two small circular clusters, and two ellipsoids connected by a dense chain of outliers. Data set 2 is used to compare execution times.

- 14. Experimental Results Quality of Clustering As the figure shows, both BIRCH and MST produce incorrect clusterings. BIRCH cannot distinguish between big and small clusters, so it splits the big one. MST merges the two ellipsoids because it cannot handle the chain of outliers connecting them.

- 15. Experimental Results Sensitivity to Parameters Another parameter to take into account is the shrink factor α. As the figure below shows, changing it can lead to good or poor clustering quality.

- 16. Experimental Results Execution Time To compare the execution times of the two algorithms, the authors chose data set 2, on which BIRCH and CURE produce the same clusters. Execution time is reported as the number of data points grows; each cluster becomes denser as points are added, but the geometry stays the same. CURE is more than 50% less expensive because BIRCH scans the entire data set, whereas CURE always clusters a sample of 2,500 points. For CURE, the only extra cost is the very small contribution of drawing the sample from the large data set.

- 17. Conclusion We have seen that CURE can detect clusters with non-spherical shapes and wide variance in size by using a set of representative points for each cluster. CURE also achieves good execution times on large databases thanks to random sampling and partitioning. Finally, CURE works well when the database contains outliers, which are detected and eliminated.

- 18. Index Introduction; Drawbacks of Traditional Clustering Algorithms; CURE algorithm; Contribution of CURE, ideas; CURE architecture; Random Sampling; Partitioning sample; Hierarchical Clustering Algorithm; Labeling Data on disk; Handling Outliers; Example; Experimental Results

- 19. References Sudipto Guha, Rajeev Rastogi, Kyuseok Shim. CURE: An Efficient Clustering Algorithm for Large Databases. Information Systems, Volume 26, Number 1, March 2001.