Dancing Elephants - Efficiently Working with Object Stores from Apache Spark and Apache Hive

- 1. © Hortonworks Inc. 2011 – 2017 All Rights Reserved Dancing Elephants: Working with Object Storage in Apache Spark and Hive Sanjay Radia June 2017

- 2. About the Speaker: Sanjay Radia. Chief Architect and Founder, Hortonworks. Part of the original Hadoop team at Yahoo! since 2007: Chief Architect of Hadoop Core at Yahoo!; Apache Hadoop PMC member and Committer. Prior: data center automation, virtualization, Java, HA, OSs, file systems (startup, Sun Microsystems, Inria …). Ph.D., University of Waterloo.

- 3. Why Cloud? • No upfront hardware costs: pay as you use • Elasticity • Often lower TCO • Natural for data ingress from IoT, mobile apps, … • Business agility

- 4. Key Architectural Considerations for Hadoop in the Cloud: • Shared Data & Storage • On-Demand Ephemeral Workloads • Elastic Resource Management • Shared Metadata, Security & Governance

- 5. Shared Data Requires Shared Metadata, Security, and Governance ⬢ Shared metadata across all workloads/clusters. Metadata considerations: • Tabular data metastore • Lineage and provenance metadata • Add upon ingest • Update as processing modifies data ⬢ Access/tag-based policies & audit logs. Key observation (figure): shared metadata and policies (classification, prohibition, time, location) span streams, pipelines, feeds, tables, files, and objects.

- 6. Shared Data and Cloud Storage ⬢ Cloud storage is the shared data lake – for both Hadoop and cloud-native (non-Hadoop) apps – lower cost • HDFS via EBS can get very, very expensive • HDFS's role changes – built-in geo-distribution and DR ⬢ Challenges – cloud storage is designed for scale, low cost and geo-distribution – performance is slower: it was not designed for data-intensive apps – cloud storage is segregated from compute – API and semantics are not like a filesystem, especially with respect to consistency

- 7. Making Object Stores Work for Big Data Apps ⬢ Focus areas – address cloud storage consistency – performance (changes in connectors and frameworks) – caching in memory and local storage ⬢ Other issues not covered in this talk – shared metastore, common governance, and security across multiple clusters – columnar access control to tabular data (see Hortonworks cloud offering)

- 8. Cloud Storage Integration: Evolution for Agility. Goal: evolution towards cloud storage as the persistent data lake. [Figure: three stages of an HDFS application's use of cloud storage: (1) backup/restore; (2) data uploaded to cloud storage for input and output; (3) input and output directly in cloud storage, with HDFS holding only tmp data. Markers show where Azure and AWS are today.]

- 9. Danger: Object stores are not hierarchical filesystems. Focus: cost & geo-distribution over consistency and performance.

- 10. A Filesystem: Directories, Files ⇒ Data. [Figure: directory tree / → work → pending (part-00, part-01) and complete; each file's blocks (00, 01) stored as three replicas.] rename("/work/pending/part-01", "/work/complete") moves part-01 into /work/complete.

- 11. Object Store: hash(name) ⇒ data. [Figure: the blocks of each object (00, 01) replicated across servers s01 to s04.] hash("/work/pending/part-00") ⇒ ["s01", "s02", "s04"]; hash("/work/pending/part-01") ⇒ ["s02", "s03", "s04"]. There is no rename, hence: copy("/work/pending/part-01", "/work/complete/part-01"), which writes new replicas, then delete("/work/pending/part-01").
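The copy-then-delete emulation of rename on slide 11 can be sketched with a toy in-memory store. This is a minimal illustration, assuming a flat key-to-bytes map; `ToyObjectStore`, `rename_prefix`, and the `fail_after` failure hook are hypothetical names, not S3 or Hadoop APIs. It shows the two costs the slides point out: every byte is physically copied, and a failure part-way leaves the "directory" split between source and destination.

```python
class ToyObjectStore:
    """Hypothetical flat object store: full key -> bytes, no real directories."""

    def __init__(self):
        self.objects = {}        # e.g. "/work/pending/part-00" -> b"..."
        self.bytes_copied = 0    # data moved by emulated renames

    def put(self, key, data):
        self.objects[key] = data

    def list_prefix(self, prefix):
        # "Listing a directory" is only a scan for keys sharing a prefix.
        return sorted(k for k in self.objects if k.startswith(prefix))

    def rename_prefix(self, src, dst, fail_after=None):
        """Emulate rename(src, dst) as per-object copy + delete.

        fail_after (hypothetical test hook) raises after that many objects
        have been moved, to show the operation is not atomic.
        """
        for i, key in enumerate(self.list_prefix(src)):
            if fail_after is not None and i == fail_after:
                raise RuntimeError("simulated failure part-way through rename")
            data = self.objects[key]
            self.objects[dst + key[len(src):]] = data   # copy: a new object is written
            self.bytes_copied += len(data)
            del self.objects[key]                       # then delete the original


store = ToyObjectStore()
store.put("/work/pending/part-00", b"a" * 100)
store.put("/work/pending/part-01", b"b" * 50)
try:
    store.rename_prefix("/work/pending/", "/work/complete/", fail_after=1)
except RuntimeError:
    pass
print(store.list_prefix("/work/"))
# ['/work/complete/part-00', '/work/pending/part-01']
```

After the simulated failure, one file sits in the destination and one in the source, which is exactly the partially committed state a filesystem rename never exposes.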

- 12. Often: Eventually Consistent. [Figure: servers s01 to s04 still holding replicas of part-00.] DELETE /work/pending/part-00 → 200; GET /work/pending/part-00 → 200; GET /work/pending/part-00 → 200.

- 13. Eventual Consistency Problems ⬢ When listing a directory – newly created files may not yet be visible, and deleted ones may still be present ⬢ After updating a file – opening and reading the file may still return the previous data ⬢ After deleting a file – opening the file may succeed, returning the data ⬢ While reading an object – the object may be updated or deleted during the read

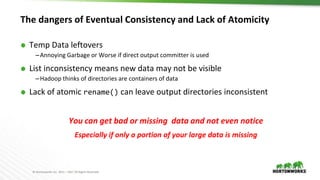
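The behaviors on slides 12 and 13 can be reproduced with a toy model in which every update becomes visible only a fixed number of ticks after it is issued. This is an assumption made for illustration, not how S3 is actually built; `ToyEventualStore` and its `lag`/`tick` mechanics are hypothetical. A GET after a successful DELETE still returns 200, and a newly written file is missing from listings until its update propagates.

```python
class ToyEventualStore:
    """Hypothetical store: each update becomes visible `lag` ticks after it is issued."""

    def __init__(self, lag):
        self.lag = lag
        self.now = 0
        self.log = []   # (visible_at, key, value); value None means "deleted"

    def tick(self, n=1):
        self.now += n

    def put(self, key, value):
        self.log.append((self.now + self.lag, key, value))

    def delete(self, key):
        self.log.append((self.now + self.lag, key, None))

    def get(self, key):
        # Replay, in issue order, the updates to `key` already visible by `now`.
        # With a constant lag, issue order equals visibility order.
        value = None
        for visible_at, k, v in self.log:
            if k == key and visible_at <= self.now:
                value = v
        return (200, value) if value is not None else (404, None)

    def list_prefix(self, prefix):
        keys = {k for _, k, _ in self.log if k.startswith(prefix)}
        return sorted(k for k in keys if self.get(k)[0] == 200)


s = ToyEventualStore(lag=2)
s.put("/work/pending/part-00", b"data")
s.tick(2)
s.delete("/work/pending/part-00")
print(s.get("/work/pending/part-00"))   # (200, b'data'): the delete is not visible yet
s.tick(2)
print(s.get("/work/pending/part-00"))   # (404, None)
```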

- 14. The Dangers of Eventual Consistency and Lack of Atomicity ⬢ Temp data leftovers – annoying garbage, or worse if a direct output committer is used ⬢ List inconsistency means new data may not be visible – Hadoop thinks of directories as containers of data ⬢ Lack of atomic rename() can leave output directories inconsistent. You can get bad or missing data and not even notice, especially if only a portion of your large data is missing.

- 15. org.apache.hadoop.fs.FileSystem, implemented by connectors for hdfs, s3a, wasb, adl, swift, gs

- 16. History of Object Storage Support (2006 to 2017): s3:// ("inode on S3"); s3n:// ("native" S3); s3:// (Amazon EMR S3, proprietary); swift:// (OpenStack); wasb:// (Azure WASB); s3a:// (replaces s3n); oss:// (Aliyun); gs:// (Google Cloud); adl:// (Azure Data Lake). S3A phases: Phase I: stabilize S3A; Phase II: speed & scale; Phase III: scale & consistency.

- 17. Cloud Storage Connectors. Azure: WASB ● Strongly consistent ● Good performance ● Well-tested on applications (incl. HBase); ADL ● Strongly consistent ● Tuned for big data analytics workloads. Amazon Web Services: S3A ● Eventually consistent; consistency work recently completed by Hortonworks, Cloudera, others ● Performance improvements made recently and in progress ● Active development in Apache; EMRFS ● Proprietary connector used in EMR ● Optional strong consistency for a cost. Google Cloud Platform: GCS ● Multiple configurable consistency policies ● Currently open source from Google ● Good performance ● Could improve test coverage

- 18. Make Apache Hadoop at home in the cloud. Step 1: Hadoop runs great on Azure. Step 2: Deliver the best performance on EC2 (i.e., beat proprietary solutions like EMR).

- 19. Problem: S3 Analytics Is Too Slow/Broken 1. Analyze benchmarks and bug reports 2. Optimize the non-IO metadata operations (very cheap on HDFS) 3. Fix the read path for columnar data 4. Fix the write path 5. Improve query partitioning (not covered in this talk) 6. The commitment problem

- 20. [Figure: flamegraph of LLAP (single node) on AWS running TPC-DS queries at 200 GB scale; labeled frames include getFileStatus(), read(), and readFully(pos).]

- 21. Hadoop 2.8/HDP 2.6 transforms I/O performance! // forward seek by skipping the stream: fs.s3a.readahead.range=256K // faster backward seek for columnar storage: fs.s3a.experimental.input.fadvise=random // write IO: enhanced data upload (parallel background uploads), with additional flags for memory vs. disk buffering: fs.s3a.fast.upload=true, fs.s3a.multipart.size=32M, fs.s3a.fast.upload.active.blocks=8 // additional per-bucket flags: see HADOOP-11694 for lots more!
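Slide 21 mentions per-bucket flags. In Hadoop 2.8 and later, S3A lets an option be overridden for a single bucket by setting `fs.s3a.bucket.<bucket>.<option>`, where `<option>` is the part of the key after `fs.s3a.`. The sketch below models only that lookup rule on a plain dict; `resolve_s3a_option` is a hypothetical helper, not Hadoop's `Configuration` code, and the bucket names are made up.

```python
S3A_PREFIX = "fs.s3a."


def resolve_s3a_option(conf, bucket, key):
    """Return the effective value of an fs.s3a.* option for one bucket.

    A per-bucket key fs.s3a.bucket.<bucket>.<suffix> wins over the global
    fs.s3a.<suffix>; returns None when neither is set.
    """
    if not key.startswith(S3A_PREFIX):
        raise ValueError(f"not an S3A option: {key}")
    suffix = key[len(S3A_PREFIX):]
    per_bucket_key = f"{S3A_PREFIX}bucket.{bucket}.{suffix}"
    if per_bucket_key in conf:
        return conf[per_bucket_key]
    return conf.get(key)


conf = {
    "fs.s3a.readahead.range": "256K",
    "fs.s3a.experimental.input.fadvise": "normal",
    # hypothetical bucket holding ORC tables: random IO for columnar reads
    "fs.s3a.bucket.orc-tables.experimental.input.fadvise": "random",
}
print(resolve_s3a_option(conf, "orc-tables", "fs.s3a.experimental.input.fadvise"))  # random
print(resolve_s3a_option(conf, "raw-logs", "fs.s3a.experimental.input.fadvise"))    # normal
```

Scoping `fadvise=random` to buckets that hold columnar data keeps whole-file scans of other buckets (gzip, CSV) on the default policy.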

- 22. Every HTTP request is precious ⬢ HADOOP-13162: Reduce number of getFileStatus calls in mkdirs() ⬢ HADOOP-13164: Optimize deleteUnnecessaryFakeDirectories() ⬢ HADOOP-13406: Consider reusing filestatus in delete() and mkdirs() ⬢ HADOOP-13145: DistCp to skip getFileStatus when not preserving metadata ⬢ HADOOP-13208: listFiles(recursive=true) to do a bulk listObjects. See HADOOP-11694.

- 23. Caching in memory or local disk (SSD) is even more relevant for slow cloud storage. Benchmarks != your queries, your data, your VMs, … but we think we've made a good start.

- 24. S3 Data Source, 1 TB TPC-DS: LLAP vs. Hive 1.x. [Figure: bar chart comparing LLAP-1TB-TPCDS and Hive-1-1TB-TPCDS on a 0 to 2,500 scale.] 1 TB TPC-DS ORC dataset; 3 x i2.4xlarge (16 CPU x 122 GB RAM x 4 SSD).

- 25. Rename Problem and Direct Output Committer

- 26. The S3 Commitment Problem: rename() is used for the atomic commitment transaction ⬢ Additional time: copy() + delete(), proportional to both the amount of data and the number of files – server-side copy is used to make this faster, but it is still a copy – non-atomic! ⬢ Alternative: a direct output committer can solve the performance problem ⬢ BOTH can give wrong results – intermediate data may be visible – failures (task or job) leave storage in an unknown state – speculative execution makes it worse ⬢ By the way, compared to Azure Storage, S3 is slow (6-10+ MB/s)
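The commitment cost on slide 26 grows with both data volume and file count. A back-of-envelope estimate, assuming a purely serial copy at a fixed rate plus a fixed per-file request overhead (a simplification: real committers may issue copies in parallel), makes the scale concrete. `estimate_rename_seconds` and all the inputs are hypothetical; 10 MB/s is simply the upper end of the 6-10+ MB/s figure on the slide.

```python
def estimate_rename_seconds(total_mb, num_files, copy_mb_per_s, per_file_overhead_s):
    """Rough time for a copy-then-delete 'rename' of a job's output.

    Assumed model: every byte is copied at copy_mb_per_s, and each file adds
    a fixed overhead for its COPY and DELETE round trips.
    """
    return total_mb / copy_mb_per_s + num_files * per_file_overhead_s


# 10 GB of output in 1,000 files, copied at 10 MB/s, 0.1 s overhead per file
print(estimate_rename_seconds(10 * 1024, 1000, 10, 0.1))  # 1124.0
```

Under these assumptions the "rename" alone takes nearly 19 minutes, during which the output is partially visible.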

- 27. Spark's Direct Output Committer? Risk of data corruption

- 28. Netflix Staging Committer 1. Saves output to file:// 2. Task commit: upload to S3A as a multipart PUT, but do not commit the PUT; just save the information about it to hdfs:// 3. The normal commit protocol manages task and job data promotion in HDFS 4. The final job committer reads the pending information and generates the final PUT, possibly from a different host (multiple files, hence not fully atomic, but the window is much, much smaller). Outcome: ⬢ No visible overwrite until final job commit: resilience and speculation ⬢ Task commit time = data/bandwidth ⬢ Job commit time = POST * #files
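The staging flow on slide 28 can be sketched as below. This is a simplified model, not the Netflix or Hadoop committer code: `ToyMultipartStore`, `task_commit`, `job_commit`, and `job_abort` are hypothetical, the pending records are kept in memory rather than in HDFS, and a real S3 completion request also carries the list of part ETags. What it illustrates is that task commit pays the upload cost (data/bandwidth) while nothing becomes visible until job commit issues one completion per file.

```python
import uuid


class ToyMultipartStore:
    """Hypothetical stand-in for S3 multipart uploads: uploaded parts stay
    invisible until the upload is completed."""

    def __init__(self):
        self.visible = {}   # key -> bytes that readers can see
        self.pending = {}   # upload_id -> (key, [parts])

    def initiate(self, key):
        upload_id = uuid.uuid4().hex
        self.pending[upload_id] = (key, [])
        return upload_id

    def upload_part(self, upload_id, data):
        self.pending[upload_id][1].append(data)

    def complete(self, upload_id):
        key, parts = self.pending.pop(upload_id)
        self.visible[key] = b"".join(parts)

    def abort(self, upload_id):
        self.pending.pop(upload_id, None)


def task_commit(store, staged_files, part_size):
    """Upload locally staged files without completing the uploads.

    Returns pending-commit records; the real committer persists equivalent
    information to HDFS so the job committer can find it later.
    """
    records = []
    for key, data in staged_files.items():
        upload_id = store.initiate(key)
        for start in range(0, len(data), part_size):
            store.upload_part(upload_id, data[start:start + part_size])
        records.append(upload_id)
    return records


def job_commit(store, committed_task_records):
    # Output becomes visible only here: one completion request per file.
    for records in committed_task_records:
        for upload_id in records:
            store.complete(upload_id)


def job_abort(store, task_records):
    # Failed or losing speculative attempts: discard uploads; nothing was visible.
    for records in task_records:
        for upload_id in records:
            store.abort(upload_id)


store = ToyMultipartStore()
t1 = task_commit(store, {"out/part-00": b"abcdef"}, part_size=4)
print(store.visible)   # {}: task committed, output not yet visible
job_commit(store, [t1])
print(store.visible)   # {'out/part-00': b'abcdef'}
```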

- 29. Use the Hive Metastore to Commit Atomically ⬢ Work in progress: use the Hive metastore to record the commit – Databricks seems to have done a similar thing for Databricks Spark (i.e., proprietary) ⬢ Fits into the Hive ACID work

- 30. S3Guard: Fast, Consistent S3 Metadata (HADOOP-13345)

- 31. S3Guard: Fast and Consistent S3 Metadata ⬢ Goals – provide consistent list and get-status operations on S3 objects written with S3Guard enabled • listStatus() after put and delete • getFileStatus() after put and delete – performance improvements that impact real workloads – provide tools to manage associated metadata and caching policies ⬢ Again, 100% open source in the Apache Hadoop community – Hortonworks, Cloudera, Western Digital, Disney … ⬢ Inspired by the Apache-licensed S3mper project from Netflix – note: apparently EMRFS's committer is also inspired by this, but was copied and kept proprietary ⬢ Seamless integration with S3AFileSystem

- 32. Use DynamoDB as a fast, consistent metadata store. [Figure: servers s01 to s04 holding replicas of part-00.] DELETE part-00 → 200; HEAD part-00 → 200; HEAD part-00 → 404; PUT part-00 → 200
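A rough sketch of the idea behind slide 32, assuming a consistent table that records every create and delete made through the S3A client (here a plain dict standing in for DynamoDB). `ToyMetadataStore` and `reconcile_listing` are hypothetical names; this is not S3Guard's actual schema or algorithm. It merges a possibly stale S3 listing with the recorded entries: recorded files are added, and recorded deletes (tombstones) are hidden.

```python
class ToyMetadataStore:
    """Hypothetical consistent record of creates/deletes made through the client."""

    def __init__(self):
        self.entries = {}   # path -> True (exists) or False (tombstone)

    def record_put(self, path):
        self.entries[path] = True

    def record_delete(self, path):
        self.entries[path] = False

    def reconcile_listing(self, prefix, s3_listing):
        """Correct a possibly stale S3 listing using the recorded entries."""
        listed = {p for p in s3_listing if p.startswith(prefix)}
        for path, exists in self.entries.items():
            if not path.startswith(prefix):
                continue
            if exists:
                listed.add(path)       # created, but S3 may not list it yet
            else:
                listed.discard(path)   # deleted, but S3 may still list it
        return sorted(listed)


ms = ToyMetadataStore()
ms.record_put("/work/complete/part-01")
ms.record_delete("/work/pending/part-01")
stale = ["/work/pending/part-00", "/work/pending/part-01"]
print(ms.reconcile_listing("/work/", stale))
# ['/work/complete/part-01', '/work/pending/part-00']
```

Files written out-of-band, without going through the metadata store, appear only if S3's own listing returns them; that is the out-of-band and source-of-truth question raised in the notes.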

- 33. Availability ⬢ Read + write improvements: in HDP 2.6 and Apache Hadoop 2.8 ⬢ S3Guard: preview of DynamoDB (DDB) caching soon ⬢ Zero-rename commit: work in progress

- 34. Summary ⬢ Cloud storage is the data lake on the cloud – HDFS plays a different role ⬢ Challenges: performance, consistency, correctness – output committer non-atomicity should not be ignored ⬢ We have made significant improvements – object store connectors – upper layers, such as Hive and ORC – S3Guard branch merged ⬢ LLAP as the cache for tabular data ⬢ Other considerations – shared metadata, security and governance (see HDP Cloud offerings)

- 35. Big thanks to: Rajesh Balamohan, Steve Loughran, Mingliang Liu, Chris Nauroth, Dominik Bialek, Ram Venkatesh, everyone in QE and RE, and everyone who reviewed and tested patches, added their own, filed bug reports and measured performance.

- 36. Questions? [email protected] @srr

Editor's Notes

- #5: Key architectural considerations for improving Hadoop in the cloud. Shared Data & Storage: the shared data lake is on cloud storage, not HDFS; memory and local storage also play a different role, that of caching. An important distinction in the cloud is On-Demand Ephemeral Workloads: this changes a number of things in fundamental ways. Shared Metadata, Security, and Governance remain important but need to be adjusted in the face of ephemeral clusters. And finally, Elastic Resource Management: we need to shift our thinking away from cluster resource management and more towards SLA-driven workloads.

- #6: Shared data requires a shared approach to metadata, security and governance. Each ephemeral cluster cannot have its own private copy of the metadata; in the cloud, metadata must be stored centrally, across all ephemeral clusters. The metadata is not just the classic Hive metadata that describes the tabular data; it is also about storing and tracking the lineage and provenance of data, and about details related to data pipeline processing and job management. Also, as data is ingested and processed, metadata needs to be created and adjusted. Governance and securing the data remain critical, and the associated metadata needs to be managed across all workloads. Projects such as Ranger and Atlas need to evolve to fit the cloud environment.

- #9: Stages in the figure: on-prem = 1, Azure at 3, AWS at 2. There are also some problems in using S3 to replace HDFS, especially for high-performance apps. For example, HDFS provides much larger read/write throughput and strong filesystem guarantees. We will talk about the practical problems shortly. Actually, they have different pros and cons for different use cases, and fortunately they are not mutually exclusive. We suggest our customers use the right storage in the right place, but the goal is to move toward cloud storage as the persistent data lake.

- #10: In all the examples, object stores take a role which replaces HDFS. But this is dangerous, because...

- #11: This is one of the simplest deployments in the cloud: scheduled/dynamic ETL. Incoming data sources save to an object store, and a Spark cluster is brought up for ETL: either a direct cleanup/filter or multistep operations, but either way an ETL pipeline. HDFS on the VMs is used for transient storage, and the object store is the destination for the data, now in a more efficient format such as ORC or Parquet.

- #12: Notebooks on demand: the notebook talks to Spark in the cloud, which then does the work against external and internal data. Your notebook itself can be saved to the object store for persistence and sharing.

- #13: Example: streaming on Azure; on the left-hand side, add streaming.

- #14: In all the examples, object stores take a role which replaces HDFS. But this is dangerous, because...

- #15: This picture is classic HDFS: 3 replicas and metadata. Here, in the rename, all you expect is that the data remains and only some metadata changes. That is what happens in HDFS, but not so in S3. Rename is important at both task level and job level: tasks may fail, jobs may fail, and there is speculative execution …

- #16: First of all, there are no real directories in S3: the directory names are part of the file name, and a cloud object store does not treat "/" as a special separator. Note the hash function: if you rename, the hash result changes, hence rename or output commitment is not simple. There is no rename operation; a copy and delete has to be done. The copied data is new (yellow); the deleted data is orange. So you might imagine: let's write directly to avoid the copy and delete. Many committers did this, and it was great for the benchmarks! EMR did this, Spark/Databricks did this … All soon realized that in production loads you run into problems.

- #18: You might say: big deal, the data in pending is deleted later. But imagine if you used a direct output committer and were deleting failed task output. The same problem can also occur for new data you added.

- #20: We will come back to the inconsistency problem and the new S3Guard

- #21: Everything uses the Hadoop APIs to talk to HDFS, Hadoop-compatible filesystems and object stores: the Hadoop FS API. There are actually two: the one with a clean split between client side and "driver side", and the older one, which is a direct connect. Most use the latter. HDFS is a "real" filesystem; WASB/Azure is close enough. What is "real"? Best test: whether it can support HBase.

- #22: This is the history of the connectors

- #24: Simple goal: make ASF Hadoop at home in cloud infrastructure. It's always been a bit of a mixed bag, and we need to address many things, because things fail differently. Folks are surprised that this wasn't already the case: we did the S3 connectors in 2008, 2009, 2010! Netflix was the first real production workload; it was open sourced, but integrated by the community and not stack tested by the community. EMR copied it but kept it proprietary. Step 1: Azure. That's the work with Microsoft on wasb://; you can use Azure storage as a drop-in replacement for HDFS in Azure. Step 2: EMR. More specifically, have the ASF Hadoop codebase get better performance than EMR.

- #29: So what kinds of problems can one run into? Initially we did a lot of performance analysis and benchmarks, and looked at bug reports, which showed consistency problems. We optimized many non-IO metadata operations; note these optimizations were not critical in HDFS, since HDFS's metadata operations are very cheap and no one bothered to optimize them. We worked on the read path and then on the write path. There is further work in query partitioning, which I will not cover in this talk. Then there is the output commitment and consistency problem, which I will touch on.

- #30: Here's a flamegraph of LLAP (single node) with AWS+HDC for a set of TPC-DS queries at 200 GB scale; we should put this up online. It shows the number of operations (not time): a small number of operations are IO, and the rest are metadata operations, which are expensive REST operations (getListing, seek, etc.). Only about 2% of the time (in the optimized code) is spent doing S3 IO. Something at the start is partitioning data.

- #32: Why so much seeking? It's the default implementation of read

- #34: These are things you will want for Hadoop 2.8. They are in HDP 2.5 and HDP 2.6, and possibly in the next CDH release. The first two reduce the cost of seeking. Seeking was originally implemented as reopening the connection and reading from the seeked position; however, it is sometimes cheaper to read ahead. The readahead setting indicates that hundreds of KB can be skipped before reconnecting (yes, it can take that long to reconnect). The experimental fadvise random feature speeds up reading optimized binary formats like ORC, which involve lots of random reads: the footer at the end of the file is read first, and then the actual data is read from different portions of the file. The last set supports fast uploads of data: there are parameters for buffering output as local files and then doing multipart uploads, which offers the potential of significantly more effective use of bandwidth. The new version in Hadoop 2.8 is an improvement over the previous one, which buffered in memory and conflicted with RDD caching.
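The readahead trade-off in note #34 reduces to a simple decision rule, sketched below under stated assumptions: a short forward seek is served by reading and discarding bytes from the already-open HTTP response, and anything else aborts the response and issues a new GET. `plan_seek` is a hypothetical function; the real S3A input stream also considers how much of the current request range remains.

```python
def plan_seek(current_pos, target_pos, readahead_range):
    """Decide how a toy S3 input stream reaches target_pos.

    Forward seeks within readahead_range are served by reading and
    discarding bytes from the open response; backward or long forward
    seeks abort it and reopen with a new GET starting at target_pos.
    """
    distance = target_pos - current_pos
    if 0 <= distance <= readahead_range:
        return ("skip", distance)
    return ("reopen", target_pos)


READAHEAD = 256 * 1024   # fs.s3a.readahead.range=256K from slide 21
print(plan_seek(0, 100_000, READAHEAD))    # ('skip', 100000)
print(plan_seek(0, 1_000_000, READAHEAD))  # ('reopen', 1000000)
print(plan_seek(500_000, 0, READAHEAD))    # ('reopen', 0)
```

A larger range trades wasted bytes read for fewer reconnects, which pays off when reconnecting costs more than streaming a few hundred KB.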

- #35: A big killer turned out to be the fact that if we had to break and re-open the connection on a large file, this would be done by closing the TCP connection and opening a new one. The fix: ask for data in smaller blocks, the max of (requested-length, min-request-len). Result: significantly lower cost for back-in-file seeking and very-long-distance forward seeks, at the expense of an increased cost in end-to-end reads of a file (gzip, CSV). It's an experimental option for this reason; I think I'd like to make it an API call that libraries like Parquet and ORC can explicitly request on their IO: it should apply to all blobstores.
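The fix in note #35 can be expressed as a choice of HTTP byte range per read. In this sketch, the `sequential` policy requests through end of file, while `random` requests only max(requested-length, min-request-len) bytes, capped at EOF. `get_range`, the policy strings as used here, and the 64 KB `MIN_REQUEST` value are hypothetical illustrations of the rule in the note, not S3A's internal code.

```python
def get_range(pos, requested_len, file_len, policy, min_request_len):
    """Byte range [start, end) a toy client would request for a read at pos.

    'sequential': request through end of file; good for whole-file scans
    (gzip, CSV), but costly to abandon on a backward seek.
    'random': request only max(requested_len, min_request_len) bytes, capped at EOF.
    """
    if policy == "random":
        end = min(file_len, pos + max(requested_len, min_request_len))
    elif policy == "sequential":
        end = file_len
    else:
        raise ValueError(f"unknown policy: {policy}")
    return (pos, end)


FILE_LEN = 10_000_000
MIN_REQUEST = 64 * 1024   # hypothetical minimum request size
print(get_range(1_000, 10, FILE_LEN, "random", MIN_REQUEST))      # (1000, 66536)
print(get_range(1_000, 10, FILE_LEN, "sequential", MIN_REQUEST))  # (1000, 10000000)
```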

- #36: If you look at what we've done, much of it (credit to Rajesh & Chris) is minimizing HTTP requests and the number of connections. Recall that metadata operations are cheap in HDFS but NOT in S3. Each one can take hundreds of milliseconds, sometimes even seconds due to load balancer issues (tip: reduce the DNS TTL on your clients to <30s). A lot of the work internal to S3A was culling those getFileStatus() calls by (a) caching results and (b) skipping calls that were not needed. Example: it is cheaper to issue a DELETE listing all parent paths than to check whether they exist, wait for the response, and then delete them. The one at the end, HADOOP-13208, replaces a slow recursive tree walk (many status calls, many lists) with a flat listing of all objects in a tree. This works only for the listFiles(path, true) call, so it is better to use that API call than to do your own treewalk.
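To see why HADOOP-13208 helps, compare request counts under a simple cost model. This is an assumption that ignores the extra getFileStatus() calls a real treewalk adds: a recursive treewalk issues one LIST per directory, while a flat listing of the whole prefix pages through keys, at most 1,000 per S3 ListObjects response. The partition layout and both function names are hypothetical.

```python
import math


def treewalk_list_requests(keys):
    """One LIST request per directory (including the root) in a recursive walk."""
    dirs = {""}
    for key in keys:
        parts = key.split("/")[:-1]          # directory components of the key
        for depth in range(1, len(parts) + 1):
            dirs.add("/".join(parts[:depth]))
    return len(dirs)


def flat_list_requests(keys, page_size=1000):
    """Paged flat listing of every key under the prefix."""
    return max(1, math.ceil(len(keys) / page_size))


keys = [f"year=2017/month={m:02d}/day={d:02d}/part-00000.orc"
        for m in range(1, 13) for d in range(1, 29)]
print(len(keys), treewalk_list_requests(keys), flat_list_requests(keys))  # 336 350 1
```

At hundreds of milliseconds per request, 350 sequential LIST calls versus one is the difference between minutes and a fraction of a second before any data is read.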

- #38: Note: much work remains. Data layout in cloud storage and its implications for applications need further study; we need to understand the implications of sharding and throttling in S3. What we do know is that deep/shallow trees are pathological for recursive treewalks, and they end up storing data in the same S3 nodes, so adjacent requests get throttled. Now let's talk about caching!

- #39: This benchmark shows the benefits of caching, especially with slow cloud storage. LLAP is the latest Hive, with two benefits (both on-prem and especially in the cloud): thread-based work allocation (instead of process-based) and caching. LLAP caches columnar data: only the columns that you need, and in an intermediate form. 26x faster!

- #43: Spark early on focused on the direct output committer, ignoring the problem of atomicity and the careful design of Hadoop's HDFS-centric file output committer. Performance was good on the benchmarks, and it helped Spark's adoption. But production problems surfaced and the direct output committer was removed. It was taken away because it can corrupt your data without you noticing. It is generally considered harmful.

- #44: Let's now turn our attention to Netflix's output committer. It writes output to local disk and uses a multipart PUT to upload to S3, with a final request to commit the job. This significantly narrows the window in which partial data is visible and in which a job committer failure can cause damage.

- #46: Recap: S3 is eventually consistent. Additional work we are doing uses a cloud database to cache the metadata to achieve consistency.

- #48: Let's look at this in a little detail. There is an additional metastore (e.g. DynamoDB) where all metadata updates are stored. So there is an additional operation, but some metadata operations are faster. However, note there are issues about out-of-band updates and the source of truth that need to be considered.

- #55: And this is the big one, as it spans the lot: Hadoop's own code (so far: distcp), Spark, Hive, Flink, and related tooling. If we can't speed up the object stores, we can tune the apps.

- #59: Hadoop 2.8 adds a lot of control here (credit: Netflix, plus later us and Cloudera). You can define a list of credential providers to use; the default is simple, env, instance, but you can add temporary and anonymous, choose which are unsupported, etc. Passwords/secrets can be encrypted in Hadoop credential files stored locally or in HDFS. IAM auth is what EC2 VMs need.
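Note #59 describes a configurable list of credential providers. The underlying pattern is a chain: try each provider in order and use the first one that yields credentials. The sketch below shows only that pattern. The provider functions and `first_credentials` are hypothetical stand-ins (in Hadoop 2.8 the real list is configured through `fs.s3a.aws.credentials.provider`), while `fs.s3a.access.key`/`fs.s3a.secret.key` and the `AWS_ACCESS_KEY_ID`/`AWS_SECRET_ACCESS_KEY` environment variables are the standard names.

```python
def config_provider(conf):
    # Hypothetical: credentials from fs.s3a.* keys in a config dict.
    def provider():
        key, secret = conf.get("fs.s3a.access.key"), conf.get("fs.s3a.secret.key")
        return (key, secret) if key and secret else None
    return provider


def env_provider(environ):
    # Hypothetical: credentials from the standard AWS environment variables.
    def provider():
        key = environ.get("AWS_ACCESS_KEY_ID")
        secret = environ.get("AWS_SECRET_ACCESS_KEY")
        return (key, secret) if key and secret else None
    return provider


def instance_provider_stub():
    # Hypothetical: a real provider would query the EC2 instance metadata service.
    raise ConnectionError("instance metadata not reachable outside EC2")


def first_credentials(providers):
    """Return credentials from the first provider that supplies them."""
    failures = []
    for provider in providers:
        try:
            creds = provider()
        except Exception as err:   # a failing provider falls through to the next
            failures.append(str(err))
            continue
        if creds is not None:
            return creds
    raise RuntimeError("no provider supplied credentials: " + "; ".join(failures))


chain = [config_provider({}),
         env_provider({"AWS_ACCESS_KEY_ID": "AKIDEXAMPLE", "AWS_SECRET_ACCESS_KEY": "secret"}),
         instance_provider_stub]
print(first_credentials(chain))   # ('AKIDEXAMPLE', 'secret')
```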
