Data Architectures for Robust Decision Making

The document discusses designing robust data architectures for decision making. It advocates building architectures that make it easy to add new data sources, improve and expand analytics, standardize metadata and storage for easy data access, and discover and recover from mistakes. The key aspects discussed are using Kafka as a data bus to decouple pipelines, retaining all data for recovery and experimentation, treating the filesystem as a database by storing intermediate data, leveraging Spark and Spark Streaming for batch and stream processing, and maintaining schemas for integration and evolution of the system.
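
The data-bus idea does not depend on Kafka's specifics. The following is a minimal in-memory sketch that assumes a single-partition topic held in one JVM. The names `AppendOnlyLog` and `Consumer` are hypothetical and are not Kafka's API. The sketch shows the two properties the summary relies on. First, producers and consumers are decoupled because each consumer tracks its own offset. Second, every record is retained, so a consumer can rewind and reprocess history after a bug is fixed.

```scala
import scala.collection.mutable.ArrayBuffer

// Hypothetical in-memory stand-in for a single-partition topic.
// Records are only appended, never deleted, so history stays replayable.
final class AppendOnlyLog[A] {
  private val records = ArrayBuffer.empty[A]

  // Append a record and return its offset (its position in the log).
  def append(record: A): Long = {
    records += record
    (records.size - 1).toLong
  }

  // Read up to maxRecords records starting at fromOffset.
  def read(fromOffset: Long, maxRecords: Int): Seq[A] =
    records.slice(fromOffset.toInt, fromOffset.toInt + maxRecords).toSeq

  def endOffset: Long = records.size.toLong
}

// Each consumer keeps its own position, so consumers never block each other.
final class Consumer[A](log: AppendOnlyLog[A]) {
  private var position = 0L

  def poll(maxRecords: Int = 100): Seq[A] = {
    val batch = log.read(position, maxRecords)
    position += batch.size
    batch
  }

  // Rewinding is the recovery path: fix the code, then replay retained data.
  def seek(offset: Long): Unit = position = offset
}
```

A real-time job and a nightly batch job could each hold their own `Consumer` over the same log without coordinating. In Kafka, the rough equivalent is separate consumer groups with independently committed offsets.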

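// errCountStream is assumed to be a DStream of (key, count) pairs, one pair per one-minute batch
errCountStream.foreachRDD { rdd =>
  // first() throws on an empty RDD, so skip batches that produced no pair
  if (!rdd.isEmpty()) {
    println("Errors this minute: %d".format(rdd.first()._2))
  }
}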
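
To make the snippet above concrete without a cluster, here is a plain-Scala sketch of what `errCountStream` is assumed to carry: one error count per one-minute batch. The `LogLine` type and the explicit minute bucketing are hypothetical. In Spark Streaming, the batching would come from the `StreamingContext` batch interval or a window operation, not from explicit grouping.

```scala
// Hypothetical log record: epoch milliseconds plus a severity level.
final case class LogLine(timestampMs: Long, level: String)

object ErrorCounts {
  private val MinuteMs = 60000L

  // Bucket lines by minute and count ERROR lines per bucket,
  // mirroring one ("ERROR", count) pair per batch in a DStream.
  def errorsPerMinute(lines: Seq[LogLine]): Map[Long, Long] =
    lines
      .filter(_.level == "ERROR")
      .groupBy(_.timestampMs / MinuteMs)
      .map { case (minute, errs) => minute -> errs.size.toLong }

  // Same message format as the Spark snippet. An empty minute reports 0.
  def report(minute: Long, counts: Map[Long, Long]): String =
    "Errors this minute: %d".format(counts.getOrElse(minute, 0L))
}
```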

Recommended

Twitter with hadoop for oow

Gwen (Chen) Shapira: "Analyzing Twitter Data with Hadoop - Live Demo", presented at Oracle Open World 2014. The repository for the slides is in https://github.com/cloudera/cdh-twitter-example

Have your cake and eat it too

Gwen (Chen) Shapira: Many architectures include both real-time and batch processing components. This often results in two separate pipelines performing similar tasks, which can be challenging to maintain and operate. We'll show how a single, well designed ingest pipeline can be used for both real-time and batch processing, making the desired architecture feasible for scalable production use cases.

Emerging technologies /frameworks in Big Data

Rahul Jain: A short overview presentation on emerging technologies/frameworks in Big Data, covering Apache Parquet, Apache Flink, and Apache Drill, with basic concepts of columnar storage and Dremel.
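
The core idea of columnar formats such as Parquet can be shown with plain collections. This sketch uses a hypothetical three-field `Event` record and pivots row-oriented records into one array per column. A query that aggregates a single field then reads only that field's array. Real Parquet also adds encodings, compression, and nested-data handling based on the Dremel technique, and this sketch omits all of them.

```scala
// Hypothetical row-oriented record.
final case class Event(userId: String, country: String, durationMs: Long)

// Column-oriented layout: one contiguous array per field.
final case class EventColumns(
    userId: Array[String],
    country: Array[String],
    durationMs: Array[Long]
)

object Columnar {
  // Pivot rows into columns. Index i in every array belongs to the same record.
  def toColumns(rows: Seq[Event]): EventColumns =
    EventColumns(
      rows.map(_.userId).toArray,
      rows.map(_.country).toArray,
      rows.map(_.durationMs).toArray
    )

  // An aggregate over one field touches only that field's array,
  // which is why columnar scans can skip unrelated data.
  def totalDuration(cols: EventColumns): Long = cols.durationMs.sum

  // Reassemble a full row only when every field is needed.
  def row(cols: EventColumns, i: Int): Event =
    Event(cols.userId(i), cols.country(i), cols.durationMs(i))
}
```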

Kafka connect-london-meetup-2016

Gwen (Chen) Shapira: This document discusses Apache Kafka and Confluent's Kafka Connect tool for large-scale streaming data integration. Kafka Connect allows importing and exporting data from Kafka to other systems like HDFS, databases, search indexes, and more using reusable connectors. Connectors use converters to handle serialization between data formats. The document outlines some existing connectors and upcoming improvements to Kafka Connect.

Intro to Spark - for Denver Big Data Meetup

Gwen (Chen) Shapira: Spark is a fast and general engine for large-scale data processing. It improves on MapReduce by allowing iterative algorithms through in-memory caching and by supporting interactive queries. Spark features include in-memory caching, general execution graphs, APIs in multiple languages, and integration with Hadoop. It is faster than MapReduce, supports iterative algorithms needed for machine learning, and enables interactive data analysis through its flexible execution model.

Event Detection Pipelines with Apache Kafka

DataWorks Summit: The document discusses using Apache Kafka for event detection pipelines. It describes how Kafka can be used to decouple data pipelines and ingest events from various source systems in real-time. It then provides an example use case of using Kafka, Hadoop, and machine learning for fraud detection in consumer banking, describing the online and offline workflows. Finally, it covers some of the challenges of building such a system and considerations for deploying Kafka.

Kafka for DBAs

Gwen (Chen) Shapira: This document discusses Apache Kafka and how it can be used by Oracle DBAs. It begins by explaining how Kafka builds upon the concept of a database redo log by providing a distributed commit log service. It then discusses how Kafka is a publish-subscribe messaging system and can be used to log transactions from any database, application logs, metrics and other system events. Finally, it discusses how schemas are important for Kafka since it only stores messages as bytes, and how Avro can be used to define and evolve schemas for Kafka messages.
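
Avro supports schema evolution by resolving the writer's schema against the reader's schema. Fields the reader does not declare are ignored, and reader fields the writer did not have are filled from the reader's defaults. The sketch below imitates only that resolution rule, using plain maps. `Field`, `resolve`, and the example fields are hypothetical. This is not the Avro library API, and it ignores type promotion and aliases.

```scala
// Hypothetical reader-schema field: a name plus an optional default value.
final case class Field(name: String, default: Option[Any] = None)

object SchemaResolution {
  // Resolve a record written under another schema version into the reader's schema.
  // Returns Left when a reader field is absent from the record and has no default.
  def resolve(written: Map[String, Any], readerSchema: Seq[Field]): Either[String, Map[String, Any]] = {
    val resolved: Seq[Either[String, (String, Any)]] = readerSchema.map { f =>
      written.get(f.name).orElse(f.default) match {
        case Some(v) => Right(f.name -> v)
        case None    => Left(s"missing field '${f.name}' with no default")
      }
    }
    // Report the first unresolved field. Otherwise keep only the reader's fields.
    resolved.collectFirst { case Left(err) => err } match {
      case Some(err) => Left(err)
      case None      => Right(resolved.collect { case Right(kv) => kv }.toMap)
    }
  }
}
```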

Securing Spark Applications by Kostas Sakellis and Marcelo Vanzin

Spark Summit: This document discusses securing Spark applications. It covers encryption, authentication, and authorization. Encryption protects data in transit using SASL or SSL. Authentication uses Kerberos to identify users. Authorization controls data access using Apache Sentry and the Sentry HDFS plugin, which synchronizes HDFS permissions with higher-level abstractions like tables. A future RecordService aims to provide a unified authorization system at the record level for Spark SQL.

Introduction to Spark Streaming

datamantra: An introduction to Spark Streaming. Presented at Bangalore Apache Spark Meetup by Madhukara Phatak on 25/04/2015.

Streaming Big Data with Spark, Kafka, Cassandra, Akka & Scala (from webinar)

Helena Edelson: This document provides an overview of streaming big data with Spark, Kafka, Cassandra, Akka, and Scala. It discusses delivering meaning in near-real time at high velocity and an overview of Spark Streaming, Kafka and Akka. It also covers Cassandra and the Spark Cassandra Connector as well as integration in big data applications. The presentation is given by Helena Edelson, a Spark Cassandra Connector committer and Akka contributor who is a Scala and big data conference speaker working as a senior software engineer at DataStax.

Developing Real-Time Data Pipelines with Apache Kafka

Joe Stein: Apache Kafka is a distributed streaming platform for building real-time data pipelines and streaming applications. It provides publish-subscribe messaging with persistence. Producers publish data to topics, which are divided into partitions, and consumers subscribe to topics and process the streaming data. The system handles scaling and data distribution to provide high throughput and fault tolerance.
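
Topics are split into partitions, and records with the same key go to the same partition, which preserves per-key ordering. The following is a minimal sketch of key-to-partition assignment that assumes hash-modulo partitioning. Kafka's default Java partitioner applies murmur2 to the serialized key bytes. This sketch uses Scala's `MurmurHash3.stringHash` purely for illustration, so its partition numbers will not match a real cluster.

```scala
import scala.util.hashing.MurmurHash3

object Partitioning {
  // Map a record key to a partition in [0, numPartitions).
  // Masking the sign bit keeps the hash non-negative, even for Int.MinValue.
  def partitionFor(key: String, numPartitions: Int): Int = {
    require(numPartitions > 0, "numPartitions must be positive")
    (MurmurHash3.stringHash(key) & Int.MaxValue) % numPartitions
  }

  // Group keyed records by partition. groupBy keeps arrival order within each group,
  // so records for one key stay in the order they were produced.
  def assign(records: Seq[(String, String)], numPartitions: Int): Map[Int, Seq[(String, String)]] =
    records.groupBy { case (key, _) => partitionFor(key, numPartitions) }
}
```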

Near Real Time Indexing Kafka Messages into Apache Blur: Presented by Dibyend...

Lucidworks: This document discusses Pearson's use of Apache Blur for distributed search and indexing of data from Kafka streams into Blur. It provides an overview of Pearson's learning platform and data architecture, describes the benefits of using Blur including its scalability, fault tolerance and query support. It also outlines the challenges of integrating Kafka streams with Blur using Spark and the solution developed to provide a reliable, low-level Kafka consumer within Spark that indexes messages from Kafka into Blur in near real-time.

Deploying Apache Flume to enable low-latency analytics

DataWorks Summit: The driving question behind redesigns of countless data collection architectures has often been, "how can we make the data available to our analytical systems faster?" Increasingly, the go-to solution for this data collection problem is Apache Flume. In this talk, architectures and techniques for designing a low-latency Flume-based data collection and delivery system to enable Hadoop-based analytics are explored. Techniques for getting the data into Flume, getting the data onto HDFS and HBase, and making the data available as quickly as possible are discussed. Best practices for scaling up collection, addressing de-duplication, and utilizing a combination streaming/batch model are described in the context of Flume and Hadoop ecosystem components.

Stream Processing using Apache Spark and Apache Kafka

Abhinav Singh: This document provides an agenda for a session on Apache Spark Streaming and Kafka integration. It includes an introduction to Spark Streaming, working with DStreams and RDDs, an example of word count streaming, and steps for integrating Spark Streaming with Kafka including creating topics and producers. The session will also include a hands-on demo of streaming word count from Kafka using CloudxLab.
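
Streaming word count in Spark Streaming treats the stream as a sequence of small batches. The plain-Scala sketch below imitates that micro-batch model and assumes each batch is just a `Seq[String]` of lines. It counts words per batch and folds each result into running totals, which is roughly the state `updateStateByKey` would maintain in a DStream job. The sketch uses neither Spark nor Kafka.

```scala
object MicroBatchWordCount {
  // Count words in one micro-batch of lines.
  def countBatch(lines: Seq[String]): Map[String, Int] =
    lines
      .flatMap(_.toLowerCase.split("\\s+"))
      .filter(_.nonEmpty)
      .groupBy(identity)
      .map { case (word, occurrences) => word -> occurrences.size }

  // Merge one batch's counts into the running state.
  def updateState(state: Map[String, Int], batch: Map[String, Int]): Map[String, Int] =
    batch.foldLeft(state) { case (acc, (word, n)) =>
      acc.updated(word, acc.getOrElse(word, 0) + n)
    }

  // Process batches in order and return the running totals after each batch.
  def run(batches: Seq[Seq[String]]): Seq[Map[String, Int]] =
    batches.scanLeft(Map.empty[String, Int]) { (state, batch) =>
      updateState(state, countBatch(batch))
    }.tail
}
```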

Faster, Faster, Faster: The True Story of a Mobile Analytics Data Mart on Hive

DataWorks Summit/Hadoop Summit: This document summarizes the work done by Yahoo engineers to optimize performance of queries on a mobile analytics data mart hosted on Apache Hive. They implemented several techniques like using Tez, vectorized query execution, map-side aggregations, and ORC file format, which provided significant performance boosts. For high cardinality partitioned tables, they leveraged sketching which reduced query times from over 100 seconds to under 25 seconds. They also implemented a data mart in a box solution for easier setup of custom data marts and funnels analysis using UDFs.
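
The summary mentions sketching for high-cardinality counts but does not describe the technique. As one illustration, here is a K-Minimum-Values (KMV) distinct-count sketch, which is the idea underlying theta sketches. It assumes a 32-bit `MurmurHash3.stringHash` and a fixed `k`, and it is not the implementation the Yahoo team used. The sketch keeps only the `k` smallest hash values, so memory stays bounded however many distinct items arrive. It estimates the count as `(k - 1) / U`, where `U` is the k-th smallest hash scaled to [0, 1).

```scala
import scala.collection.immutable.TreeSet
import scala.util.hashing.MurmurHash3

// K-Minimum-Values sketch: retains the k smallest distinct hash values seen so far.
final class KmvSketch(k: Int) {
  require(k >= 2, "k must be at least 2")
  private val HashSpace = 4294967296.0 // 2^32 possible unsigned 32-bit hash values
  private var mins = TreeSet.empty[Long]

  // Unsigned 32-bit hash of the item, held in a Long in [0, 2^32).
  private def hash(item: String): Long =
    MurmurHash3.stringHash(item).toLong & 0xffffffffL

  def update(item: String): Unit = {
    val h = hash(item)
    if (mins.size < k) mins += h
    else if (h < mins.last && !mins.contains(h)) {
      val grown = mins + h
      mins = grown - grown.last // evict the largest so exactly k values remain
    }
  }

  // Exact while fewer than k distinct hashes have been seen, estimated afterwards.
  def estimate: Double =
    if (mins.size < k) mins.size.toDouble
    else (k - 1) * HashSpace / mins.last.toDouble
}
```

Repeated items hash to the same value, so feeding duplicates does not change the estimate. This property is what makes the sketch useful for distinct counts.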

Real Time Data Processing Using Spark Streaming

Hari Shreedharan: Apache Spark has emerged over the past year as the imminent successor to Hadoop MapReduce. Spark can process data in memory at very high speed, while still being able to spill to disk if required. Spark’s powerful yet flexible API allows users to write complex applications very easily without worrying about its internal workings or how the data gets processed on the cluster.

Spark comes with an extremely powerful Streaming API to process data as it is ingested. Spark Streaming integrates with popular data ingest systems such as Apache Flume, Apache Kafka, and Amazon Kinesis, allowing users to process data as it comes in.

In this talk, Hari will discuss the basics of Spark Streaming, its API and its integration with Flume, Kafka and Kinesis. Hari will also discuss a real-world example of a Spark Streaming application, and how code can be shared between a Spark application and a Spark Streaming application. Each stage of the application execution will be presented, which can help illustrate good practices for writing such an application. Hari will finally discuss how to write a custom application and a custom receiver to receive data from other systems.

Apache Storm vs. Spark Streaming

P. Taylor Goetz: Slides for an upcoming talk about Apache Storm and Spark Streaming.

This is a draft and is subject to change. Comments welcome.

Near-realtime analytics with Kafka and HBase

dave_revell: A presentation at OSCON 2012 by Nate Putnam and Dave Revell about Urban Airship's analytics stack. It features Kafka, HBase, and Urban Airship's own open source projects statshtable and datacube.

How to deploy Apache Spark to Mesos/DCOS

Legacy Typesafe (now Lightbend): Since 2014, Typesafe has been actively contributing to the Apache Spark project and has become a certified development support partner of Databricks, the company started by the creators of Spark. Typesafe and Mesosphere have forged a partnership in which Typesafe is the official commercial support provider of Spark on Apache Mesos, along with Mesosphere’s Datacenter Operating System (DCOS).

In this webinar with Iulian Dragos, Spark team lead at Typesafe Inc., we reveal how Typesafe supports running Spark in various deployment modes, along with the improvements we made to Spark to help integrate backpressure signals into the underlying technologies, making it a better fit for Reactive Streams. He also shows you the functionality at work and how to deploy Spark on Mesos simply with Typesafe.

We will introduce:

Various deployment modes for Spark: Standalone, Spark on Mesos, and Spark with Mesosphere DCOS

Overview of Mesos and how it relates to Mesosphere DCOS

Deeper look at how Spark runs on Mesos

How to manage coarse-grained and fine-grained scheduling modes on Mesos

What to know about a client vs. cluster deployment

A demo running Spark on Mesos

Spark+flume seattle

Hari Shreedharan: This document provides an overview of Flume and Spark Streaming. It describes how Flume is used to reliably ingest streaming data into Hadoop using an agent-based architecture. Events are collected by sources, stored reliably in channels, and sent to sinks. The Flume connector allows ingested data to be processed in real-time using Spark Streaming's micro-batch architecture, where streams of data are processed through RDD transformations. This combined Flume + Spark Streaming approach provides a scalable and fault-tolerant way to reliably ingest and process streaming data.
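
The source, channel, and sink roles can be sketched as a producer and a consumer that share a bounded buffer. The following single-threaded sketch is hypothetical and uses `java.util.concurrent.ArrayBlockingQueue` as the channel. A real Flume channel, whether memory- or file-backed, uses transactions so an event leaves the channel only after the sink has delivered it. This sketch only approximates that by peeking at an event and removing it after a successful write.

```scala
import java.util.concurrent.ArrayBlockingQueue

// Hypothetical single-agent pipeline: a source fills a bounded channel and a sink drains it.
// Names are illustrative and do not correspond to Flume's API.
final class Agent(capacity: Int) {
  private val channel = new ArrayBlockingQueue[String](capacity)

  // Source side: offer() returns false when the channel is full,
  // which acts as a backpressure signal to the upstream sender.
  def receive(event: String): Boolean = channel.offer(event)

  // Sink side: peek first, and remove an event only after the write succeeds,
  // so a failed write leaves the event queued for a later retry.
  def drain(write: String => Boolean): Int = {
    var delivered = 0
    var keepGoing = true
    while (keepGoing && !channel.isEmpty) {
      val event = channel.peek()
      if (write(event)) {
        channel.poll() // "commit": drop the event now that it is stored downstream
        delivered += 1
      } else {
        keepGoing = false // stop and retry on the next drain call
      }
    }
    delivered
  }

  def pending: Int = channel.size
}
```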

Big Data Day LA 2015 - Introduction to Apache Kafka - The Big Data Message Bu...

Data Con LA: Big Data systems keep getting bigger. The types and numbers of components involved in getting critical answers for businesses keep increasing. It is like the growing number of houses and businesses in a metropolitan city: with more places people can be, more transportation is required. That need is met either by more cars or by a better public transportation system, and more individual vehicles add to the traffic chaos. Apache Kafka is like the public transportation system of the city of Big Data, or better still, of a distributed system. This talk gives a basic overview of Apache Kafka, the components involved in it, and the what and how of the problem it solves.

Scaling ETL with Hadoop - Avoiding Failure

Gwen (Chen) Shapira: This document discusses scaling extract, transform, load (ETL) processes with Apache Hadoop. It describes how data volumes and varieties have increased, challenging traditional ETL approaches. Hadoop offers a flexible way to store and process structured and unstructured data at scale. The document outlines best practices for extracting data from databases and files, transforming data using tools like MapReduce, Pig and Hive, and loading data into data warehouses or keeping it in Hadoop. It also discusses workflow management with tools like Oozie. The document cautions against several potential mistakes in ETL design and implementation with Hadoop.

Reactive app using actor model & apache spark

Rahul Kumar: Developing applications with Big Data is challenging work, and scaling, fault tolerance, and responsiveness are some of the biggest challenges. A real-time big data application with self-healing features is still a dream these days. Apache Spark is a fast in-memory data processing system that provides a good backend for real-time applications. In this talk I will show how to use a reactive platform, the actor model, and the Apache Spark stack to develop a system that is responsive, resilient, fault-tolerant, and message-driven.

Spark Streaming & Kafka-The Future of Stream Processing

Jack Gudenkauf: Hari Shreedharan/Cloudera @Playtika. With its easy-to-use interfaces and native integration with some of the most popular ingest tools, such as Kafka, Flume, and Kinesis, Spark Streaming has become the go-to tool for stream processing. Code sharing with Spark also makes it attractive. In this talk, we will discuss the latest features in Spark Streaming, how it integrates natively with Kafka with no data loss, and how it can even do exactly-once processing!

Stream your Operational Data with Apache Spark & Kafka into Hadoop using Couc...

Data Con LA

Abstract:

Tracking user events as they happen can challenge anyone providing real-time user interaction. It can demand both huge scale and a lot of processing to support dynamically adjusting how products and services are targeted. As the operational data store, Couchbase data services are capable of processing tens of millions of updates a day. By streaming through systems such as Apache Spark and Kafka into Hadoop, information about these key events can be turned into deeper knowledge. We will review Lambda architectures deployed at sites like PayPal, Live Person and LinkedIn that leverage a Couchbase Data Pipeline.

Bio:

Justin Michaels. With over 20 years of experience deploying mission-critical systems, Justin Michaels' industry experience covers capacity planning, architecture, and industry verticals. Justin brings his passion for architecting, implementing and improving Couchbase to the community as a Solution Architect. His expertise involves both conventional application platforms as well as distributed data management systems. He regularly engages with existing and new Couchbase customers in performance reviews, architecture planning and best practice guidance.

Cooperative Data Exploration with iPython Notebook

DataWorks Summit/Hadoop Summit: This document discusses a solution for cooperative data exploration using IPython Notebooks and a shared Spark application. The solution allows multiple users to access in-memory results from a single Spark application running on a cluster. Users can connect IPython Notebooks to the shared SparkContext and SQLContext via Py4J to collaborate on exploring big data in a transparent manner without data duplication.

Visualizing Kafka Security

DataWorks Summit: The document discusses security models in Apache Kafka. It describes the PLAINTEXT, SSL, SASL_PLAINTEXT, and SASL_SSL security protocols, covering their authentication, authorization, and encryption capabilities. It also provides tips on troubleshooting security issues, including enabling debug logs, and describes common errors seen with Kafka security.
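
The four protocols differ along two axes: whether clients authenticate through SASL, and whether traffic is encrypted with SSL/TLS. The sketch below records that matrix and builds client properties with real Kafka client configuration keys (`security.protocol`, `sasl.mechanism`, `ssl.truststore.location`). The truststore path and the default `GSSAPI` (Kerberos) mechanism are placeholder assumptions. A real setup also needs JAAS or keystore settings that are not shown here. Plain `SSL` can additionally authenticate clients with TLS certificates, and the simple table does not capture that.

```scala
import java.util.Properties

object KafkaSecurity {
  // SASL supplies authentication. SSL (TLS) supplies encryption in transit.
  final case class Traits(saslAuthentication: Boolean, encryption: Boolean)

  val protocols: Map[String, Traits] = Map(
    "PLAINTEXT"      -> Traits(saslAuthentication = false, encryption = false),
    "SSL"            -> Traits(saslAuthentication = false, encryption = true),
    "SASL_PLAINTEXT" -> Traits(saslAuthentication = true, encryption = false),
    "SASL_SSL"       -> Traits(saslAuthentication = true, encryption = true)
  )

  // Build a minimal client config for a protocol. Path and mechanism are placeholders.
  def clientProps(protocol: String, truststorePath: String, saslMechanism: String = "GSSAPI"): Properties = {
    val traits = protocols.getOrElse(protocol, throw new IllegalArgumentException(s"unknown protocol $protocol"))
    val props = new Properties()
    props.setProperty("security.protocol", protocol)
    if (traits.encryption) props.setProperty("ssl.truststore.location", truststorePath)
    if (traits.saslAuthentication) props.setProperty("sasl.mechanism", saslMechanism)
    props
  }
}
```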

Kafka blr-meetup-presentation - Kafka internals

Ayyappadas Ravindran (Appu): This document summarizes Kafka internals, including how ZooKeeper is used for coordination, how brokers store partitions and messages, how producers and consumers interact with brokers, how to ensure data integrity, and new features in Kafka 0.9 like security enhancements and the new consumer API. It also provides an overview of operating Kafka clusters, including adding and removing brokers through reassignment.

HBase: Just the Basics

HBaseCon. Speaker: Jesse Anderson (Cloudera)

As optional pre-conference prep for attendees who are new to HBase, this talk will offer a brief Cliff's Notes-level overview covering architecture, API, and schema design. The architecture section will cover the daemons and their functions; the API section will cover HBase's Get, Put, and Scan classes; and the schema design section will cover how HBase differs from an RDBMS and how much effort to place on schema and row-key design.
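
Row-key design matters in HBase because rows are stored sorted by key. Monotonically increasing keys, such as raw timestamps, therefore send all writes to one region. A common pattern, shown here as a hypothetical sketch and not taken from the talk, does two things. It prefixes a small hash-derived salt to spread writes across regions, and it stores a reversed timestamp so the newest rows for an entity sort first. The bucket count is an assumption, and plain strings stand in for the byte arrays HBase actually stores.

```scala
import scala.util.hashing.MurmurHash3

object RowKeys {
  // Number of salt buckets (an assumption; often chosen to match the region count).
  val Buckets = 16

  // Deterministic salt derived from the entity id, so readers can recompute it.
  def salt(entityId: String): Int =
    (MurmurHash3.stringHash(entityId) & Int.MaxValue) % Buckets

  // Layout: <salt>|<entityId>|<reversed timestamp>, zero-padded so that
  // lexicographic byte order (HBase's order) matches numeric order.
  def rowKey(entityId: String, timestampMs: Long): String = {
    val reversed = Long.MaxValue - timestampMs
    f"${salt(entityId)}%02d|$entityId|$reversed%019d"
  }
}
```

Because the salt is derived from the entity id, one entity's rows stay contiguous within a bucket. A scan for "latest events of user X" can then start at that user's prefix.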

HBase internals

Matteo Bertozzi: This document summarizes a presentation about HBase storage internals and future developments. It discusses how HBase provides random read/write access on HDFS using tables, regions, and region servers. It describes the write path involving the client, master, and region servers as well as the read path. It also covers topics like snapshots, compactions, and future plans to improve encryption, security, write-ahead logs, and compaction policies.

Ad

More Related Content

What's hot (20)

Introduction to Spark Streaming

Introduction to Spark Streamingdatamantra An introduction to Spark Streaming. Presented at Bangalore Apache Spark Meetup by Madhukara Phatak on 25/04/2015.

Streaming Big Data with Spark, Kafka, Cassandra, Akka & Scala (from webinar)

Streaming Big Data with Spark, Kafka, Cassandra, Akka & Scala (from webinar)Helena Edelson This document provides an overview of streaming big data with Spark, Kafka, Cassandra, Akka, and Scala. It discusses delivering meaning in near-real time at high velocity and an overview of Spark Streaming, Kafka and Akka. It also covers Cassandra and the Spark Cassandra Connector as well as integration in big data applications. The presentation is given by Helena Edelson, a Spark Cassandra Connector committer and Akka contributor who is a Scala and big data conference speaker working as a senior software engineer at DataStax.

Developing Real-Time Data Pipelines with Apache Kafka

Developing Real-Time Data Pipelines with Apache KafkaJoe Stein Apache Kafka is a distributed streaming platform that allows for building real-time data pipelines and streaming apps. It provides a publish-subscribe messaging system with persistence that allows for building real-time streaming applications. Producers publish data to topics which are divided into partitions. Consumers subscribe to topics and process the streaming data. The system handles scaling and data distribution to allow for high throughput and fault tolerance.

Near Real Time Indexing Kafka Messages into Apache Blur: Presented by Dibyend...

Near Real Time Indexing Kafka Messages into Apache Blur: Presented by Dibyend...Lucidworks This document discusses Pearson's use of Apache Blur for distributed search and indexing of data from Kafka streams into Blur. It provides an overview of Pearson's learning platform and data architecture, describes the benefits of using Blur including its scalability, fault tolerance and query support. It also outlines the challenges of integrating Kafka streams with Blur using Spark and the solution developed to provide a reliable, low-level Kafka consumer within Spark that indexes messages from Kafka into Blur in near real-time.

Deploying Apache Flume to enable low-latency analytics

Deploying Apache Flume to enable low-latency analyticsDataWorks Summit The driving question behind redesigns of countless data collection architectures has often been, ?how can we make the data available to our analytical systems faster?? Increasingly, the go-to solution for this data collection problem is Apache Flume. In this talk, architectures and techniques for designing a low-latency Flume-based data collection and delivery system to enable Hadoop-based analytics are explored. Techniques for getting the data into Flume, getting the data onto HDFS and HBase, and making the data available as quickly as possible are discussed. Best practices for scaling up collection, addressing de-duplication, and utilizing a combination streaming/batch model are described in the context of Flume and Hadoop ecosystem components.

Stream Processing using Apache Spark and Apache Kafka

Stream Processing using Apache Spark and Apache KafkaAbhinav Singh This document provides an agenda for a session on Apache Spark Streaming and Kafka integration. It includes an introduction to Spark Streaming, working with DStreams and RDDs, an example of word count streaming, and steps for integrating Spark Streaming with Kafka including creating topics and producers. The session will also include a hands-on demo of streaming word count from Kafka using CloudxLab.

Faster, Faster, Faster: The True Story of a Mobile Analytics Data Mart on Hive

Faster, Faster, Faster: The True Story of a Mobile Analytics Data Mart on HiveDataWorks Summit/Hadoop Summit This document summarizes the work done by Yahoo engineers to optimize performance of queries on a mobile analytics data mart hosted on Apache Hive. They implemented several techniques like using Tez, vectorized query execution, map-side aggregations, and ORC file format, which provided significant performance boosts. For high cardinality partitioned tables, they leveraged sketching which reduced query times from over 100 seconds to under 25 seconds. They also implemented a data mart in a box solution for easier setup of custom data marts and funnels analysis using UDFs.

Human: Thank you for the summary. Summarize the following document in 2 sentences or less:

[DOCUMENT]:

Lorem ipsum dolor

Real Time Data Processing Using Spark Streaming

Real Time Data Processing Using Spark StreamingHari Shreedharan Apache Spark has emerged over the past year as the imminent successor to Hadoop MapReduce. Spark can process data in memory at very high speed, while still be able to spill to disk if required. Spark’s powerful, yet flexible API allows users to write complex applications very easily without worrying about the internal workings and how the data gets processed on the cluster.

Spark comes with an extremely powerful Streaming API to process data as it is ingested. Spark Streaming integrates with popular data ingest systems like Apache Flume, Apache Kafka, Amazon Kinesis etc. allowing users to process data as it comes in.

In this talk, Hari will discuss the basics of Spark Streaming, its API and its integration with Flume, Kafka and Kinesis. Hari will also discuss a real-world example of a Spark Streaming application, and how code can be shared between a Spark application and a Spark Streaming application. Each stage of the application execution will be presented, which can help understand practices while writing such an application. Hari will finally discuss how to write a custom application and a custom receiver to receive data from other systems.

Apache storm vs. Spark Streaming

Apache storm vs. Spark StreamingP. Taylor Goetz Slides for an upcoming talk about Apache Storm and Spark Streaming.

This is a draft and is subject to change. Comments welcome.

Near-realtime analytics with Kafka and HBase

Near-realtime analytics with Kafka and HBasedave_revell A presentation at OSCON 2012 by Nate Putnam and Dave Revell about Urban Airship's analytics stack. Features Kafka, HBase, and Urban Airship's own open source projects statshtable and datacube.

How to deploy Apache Spark

to Mesos/DCOS

How to deploy Apache Spark

to Mesos/DCOSLegacy Typesafe (now Lightbend) Since 2014, Typesafe has been actively contributing to the Apache Spark project, and has become a certified development support partner of Databricks, the company started by the creators of Spark. Typesafe and Mesosphere have forged a partnership in which Typesafe is the official commercial support provider of Spark on Apache Mesos, along with Mesosphere’s Datacenter Operating Systems (DCOS).

In this webinar with Iulian Dragos, Spark team lead at Typesafe Inc., we reveal how Typesafe supports running Spark in various deployment modes, along with the improvements we made to Spark to help integrate backpressure signals into the underlying technologies, making it a better fit for Reactive Streams. He also show you the functionalities at work, and how to make it simple to deploy to Spark on Mesos with Typesafe.

We will introduce:

Various deployment modes for Spark: Standalone, Spark on Mesos, and Spark with Mesosphere DCOS

Overview of Mesos and how it relates to Mesosphere DCOS

Deeper look at how Spark runs on Mesos

How to manage coarse-grained and fine-grained scheduling modes on Mesos

What to know about a client vs. cluster deployment

A demo running Spark on Mesos

Spark+flume seattle

Spark+flume seattleHari Shreedharan This document provides an overview of Flume and Spark Streaming. It describes how Flume is used to reliably ingest streaming data into Hadoop using an agent-based architecture. Events are collected by sources, stored reliably in channels, and sent to sinks. The Flume connector allows ingested data to be processed in real-time using Spark Streaming's micro-batch architecture, where streams of data are processed through RDD transformations. This combined Flume + Spark Streaming approach provides a scalable and fault-tolerant way to reliably ingest and process streaming data.

Big Data Day LA 2015 - Introduction to Apache Kafka - The Big Data Message Bu...

Big Data Day LA 2015 - Introduction to Apache Kafka - The Big Data Message Bu...Data Con LA Big Data systems keeps getting bigger. Types and counts of components involved in the system to get critical answers for businesses just keep increasing. It is like increasing number of houses or businesses in any metropolitan city. With more places people can be, more transportation is required. Requirement of more transportation is either met by more cars or a better public transportation system. More individual vehicles add to the traffic chaos. Apache Kafka is like public transportation system of the city of Big Data or even better a distributed system. This talk will go over basic overview of Apache Kafka, various components involved in it and the What and the How of the problem it solves.

Scaling ETL with Hadoop - Avoiding Failure

Scaling ETL with Hadoop - Avoiding FailureGwen (Chen) Shapira This document discusses scaling extract, transform, load (ETL) processes with Apache Hadoop. It describes how data volumes and varieties have increased, challenging traditional ETL approaches. Hadoop offers a flexible way to store and process structured and unstructured data at scale. The document outlines best practices for extracting data from databases and files, transforming data using tools like MapReduce, Pig and Hive, and loading data into data warehouses or keeping it in Hadoop. It also discusses workflow management with tools like Oozie. The document cautions against several potential mistakes in ETL design and implementation with Hadoop.

Reactive app using actor model & apache spark

Reactive app using actor model & apache sparkRahul Kumar Developing Application with Big Data is really challenging work, scaling, fault tolerance and responsiveness some are the biggest challenge. Realtime bigdata application that have self healing feature is a dream these days. Apache Spark is a fast in-memory data processing system that gives a good backend for realtime application.In this talk I will show how to use reactive platform, Actor model and Apache Spark stack to develop a system that have responsiveness, resiliency, fault tolerance and message driven feature.

Spark Streaming & Kafka-The Future of Stream Processing

Spark Streaming & Kafka-The Future of Stream ProcessingJack Gudenkauf Hari Shreedharan/Cloudera @Playtika. With its easy to use interfaces and native integration with some of the most popular ingest tools, such as Kafka, Flume, Kinesis etc, Spark Streaming has become go-to tool for stream processing. Code sharing with Spark also makes it attractive. In this talk, we will discuss the latest features in Spark Streaming and how it integrates with Kafka natively with no data loss, and even do exactly once processing!

Stream your Operational Data with Apache Spark & Kafka into Hadoop using Couc...

Stream your Operational Data with Apache Spark & Kafka into Hadoop using Couc...Data Con LA Abstract:-

Tracking user events as they happen can challenge anyone providing real time user interaction. It can demand both huge scale and a lot of processing to support dynamic adjustment to targeting products and services. As the operational data store Couchbase data services are capable of processing tens of millions of updates a day. Streaming through systems such as Apache Spark and Kafka into Hadoop, information about these key events can be turned into deeper knowledge. We will review Lambda architectures deployed at sites like PayPal, Live Person and LinkedIn that leverage a Couchbase Data Pipeline.

Bio:-

Justin Michaels. With over 20 years experience in deploying mission critical systems, Justin Michaels industry experience covers capacity planning, architecture and industry vertical experience. Justin brings his passion for architecting, implementing and improving Couchbase to the community as a Solution Architect. His expertise involves both conventional application platforms as well as distributed data management systems. He regularly engages with existing and new Couchbase customers in performance reviews, architecture planning and best practice guidance.

Cooperative Data Exploration with iPython Notebook

By DataWorks Summit/Hadoop Summit. This document discusses a solution for cooperative data exploration using IPython Notebooks and a shared Spark application. The solution allows multiple users to access in-memory results from a single Spark application running on a cluster. Users can connect IPython Notebooks to the shared SparkContext and SQLContext via Py4J to explore big data collaboratively and transparently, without duplicating data.

Visualizing Kafka Security

By DataWorks Summit. The document discusses security in Apache Kafka. It describes the PLAINTEXT, SSL, SASL_PLAINTEXT, and SASL_SSL security protocols, covering authentication, authorization, and encryption capabilities. It also offers tips on troubleshooting security issues, such as enabling debug logs, and lists common errors seen with Kafka security.
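The four protocol names map onto two independent choices: whether traffic is encrypted in transit and whether clients authenticate via SASL. A minimal Scala sketch of that mapping, building client properties with the real `security.protocol` and `ssl.truststore.location` keys; the broker address and truststore path are hypothetical placeholders, and the talk may configure clients differently:

```scala
import java.util.Properties

// Minimal sketch: choose a Kafka security.protocol value from two requirements
// and build client properties. Broker address and truststore path are hypothetical.
object KafkaSecuritySketch {
  // SASL is assumed to be the authentication mechanism; SSL alone can also
  // authenticate clients with certificates, which this mapping ignores.
  def securityProtocol(encrypt: Boolean, saslAuth: Boolean): String =
    (encrypt, saslAuth) match {
      case (false, false) => "PLAINTEXT"
      case (true, false)  => "SSL"
      case (false, true)  => "SASL_PLAINTEXT"
      case (true, true)   => "SASL_SSL"
    }

  def clientProps(encrypt: Boolean, saslAuth: Boolean): Properties = {
    val props = new Properties()
    props.setProperty("bootstrap.servers", "broker1.example.com:9093") // hypothetical
    props.setProperty("security.protocol", securityProtocol(encrypt, saslAuth))
    if (encrypt) {
      // TLS clients need a truststore to verify the broker certificate.
      props.setProperty("ssl.truststore.location", "/path/to/client.truststore.jks") // hypothetical
    }
    props
  }

  def main(args: Array[String]): Unit =
    println(clientProps(encrypt = true, saslAuth = true).getProperty("security.protocol")) // SASL_SSL
}
```

Keeping the two concerns separate makes it easy to see that moving from SASL_PLAINTEXT to SASL_SSL only adds encryption; the authentication setup stays the same.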

Kafka blr-meetup-presentation - Kafka internals

By Ayyappadas Ravindran (Appu). This document summarizes Kafka internals, including how ZooKeeper is used for coordination, how brokers store partitions and messages, how producers and consumers interact with brokers, how to ensure data integrity, and new features in Kafka 0.9 such as security enhancements and the new consumer API. It also gives an overview of operating Kafka clusters, including adding and removing brokers through partition reassignment.

Faster, Faster, Faster: The True Story of a Mobile Analytics Data Mart on Hive

By DataWorks Summit/Hadoop Summit.

Viewers also liked (6)

HBase: Just the Basics

By HBaseCon. Speaker: Jesse Anderson (Cloudera)

As optional pre-conference prep for attendees who are new to HBase, this talk will offer a brief, Cliff's Notes-level overview of architecture, API, and schema design. The architecture section will cover the daemons and their functions; the API section will cover HBase's Get, Put, and Scan classes; and the schema design section will cover how HBase differs from an RDBMS and how much effort to put into schema and row-key design.

HBase internals

By Matteo Bertozzi. This document summarizes a presentation about HBase storage internals and future developments. It discusses how HBase provides random read/write access on HDFS using tables, regions, and region servers. It describes the write path involving the client, master, and region servers as well as the read path. It also covers topics like snapshots, compactions, and future plans to improve encryption, security, write-ahead logs, and compaction policies.

HBase in Practice

By DataWorks Summit/Hadoop Summit. HBase is a distributed, column-oriented database that stores data in tables divided into rows and columns. It is optimized for random, real-time read/write access to big data. The document discusses HBase's key concepts like tables, regions, and column families. It also covers performance tuning aspects like cluster configuration, compaction strategies, and intelligent key design to spread load evenly. Different use cases are suitable for HBase depending on access patterns, such as time series data, messages, or serving random lookups and short scans from large datasets. Proper data modeling and tuning are necessary to maximize HBase's performance.
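One widely used way to spread load evenly with a time-ordered row key is salting: prefixing each key with a small, deterministic bucket number so that sequential writes land in different regions instead of one hot region. The Scala sketch below illustrates that general technique, not necessarily the key design the talk recommends; the bucket count, the two-digit prefix, and the `|` separator are arbitrary assumptions, and no HBase client API is used:

```scala
// Sketch of row-key salting; RowKeySalting and its helpers are hypothetical names.
object RowKeySalting {
  // Prefix the key with a bucket derived from its hash, so monotonically increasing
  // keys (e.g. timestamps) are distributed across `buckets` key ranges.
  def saltedKey(key: String, buckets: Int): String = {
    require(buckets > 0 && buckets <= 100, "two-digit prefix supports 1..100 buckets")
    val bucket = Math.floorMod(key.hashCode, buckets) // always in [0, buckets)
    f"$bucket%02d|$key"
  }

  // Readers that need a contiguous range of original keys must scan every prefix.
  def scanPrefixes(buckets: Int): Seq[String] =
    (0 until buckets).map(b => f"$b%02d|")

  def main(args: Array[String]): Unit = {
    val keys = Seq("20150221-000001", "20150221-000002", "20150221-000003")
    keys.foreach(k => println(saltedKey(k, 8)))
  }
}
```

The trade-off is on the read side: a range scan over the original keys becomes one scan per bucket prefix, which `scanPrefixes` enumerates.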

Apache HBase - Just the Basics

By HBaseCon. Jesse Anderson (Smoking Hand)

This early-morning session offers an overview of what HBase is, how it works, its API, and considerations for using HBase as part of a Big Data solution. It will be helpful for people who are new to HBase, and also serve as a refresher for those who may need one.

Apache HBase at Airbnb

By HBaseCon. Jingwei Lu and Jason Zhang (Airbnb)

AirStream is a real-time stream computation framework built on top of Spark Streaming and HBase that allows our engineers and data scientists to easily leverage HBase to get real-time insights and build real-time feedback loops. In this talk, we will introduce AirStream and then go over a few production use cases.

Intro to HBase

By alexbaranau. This document introduces HBase, an open-source, non-relational, distributed database modeled after Google's Bigtable. It describes what HBase is, how it can be used, and when it is applicable. Key points include that HBase stores data in rows and columns accessed by row keys, integrates with Hadoop for MapReduce jobs, and is well suited for large datasets, fast random access, and write-heavy applications. Common use cases include log analytics, real-time analytics, and message-centered systems.


Similar to Data Architectures for Robust Decision Making (20)

Artur Borycki - Beyond Lambda - how to get from logical to physical - code.ta...

By AboutYouGmbH. Teradata believes in the principles of self-service, automation, and on-demand resource allocation to enable faster, more efficient, and more effective data application development and operation. The document discusses the Lambda architecture, alternatives like the Kappa architecture, and a vision for an "Omega" architecture. It provides examples of how to build real-time data applications using microservices, event streaming, and loosely coupled services across Teradata and other data platforms like Hadoop.

InfluxEnterprise Architecture Patterns by Tim Hall & Sam Dillard

By InfluxData.

1. The document provides an overview of InfluxEnterprise, including its core open source functionality, high availability features, scalability, fine-grained authorization, support options, and on-premise or cloud deployment options.

2. It discusses signs that an organization may be ready for InfluxEnterprise, such as high CPU usage, issues with single node deployments, and needing improved data durability or throughput.

3. The document covers InfluxEnterprise cluster architecture including meta nodes, data nodes, replication patterns, ingestion and query rates for different replication configurations, and examples for mothership, durable data ingest, and integrating with ElasticSearch deployments.

Data Science with the Help of Metadata

By Jim Dowling. Data scientists spend too much of their time collecting, cleaning and wrangling data as well as curating and enriching it. Some of this work is inevitable due to the variety of data sources, but there are tools and frameworks that help automate many of these non-creative tasks. A unifying feature of these tools is support for rich metadata for data sets, jobs, and data policies. In this talk, I will introduce state-of-the-art tools for automating data science and I will show how you can use metadata to help automate common tasks in Data Science. I will also introduce a new architecture for extensible, distributed metadata in Hadoop, called Hops (Hadoop Open Platform-as-a-Service), and show how tinker-friendly metadata (for jobs, files, users, and projects) opens up new ways to build smarter applications.

InfluxEnterprise Architectural Patterns by Dean Sheehan, Senior Director, Pre...

By InfluxData. Dean discusses architecture patterns with InfluxDB Enterprise, covering an overview of InfluxDB Enterprise, its features, ingestion and query rates, deployment examples, replication patterns, and general advice.

Realtime Detection of DDOS attacks using Apache Spark and MLLib

By Ryan Bosshart. In this talk we will show how Hadoop ecosystem tools like Apache Kafka, Spark, and MLlib can be used in various real-time architectures, and how they can be used to detect a DDoS attack in real time. We will explain some of the challenges in building real-time architectures, then walk through the DDoS detection example and a live demo. This talk is appropriate for anyone interested in security, IoT, Apache Kafka, Spark, or Hadoop.

Presenter: Ryan Bosshart is a Systems Engineer at Cloudera and the first three-time presenter at BigDataMadison!

What's new in Oracle Database 12c release 12.1.0.2

By Connor McDonald. This document provides an overview of new features in Oracle Database 12c Release 1 (12.1.0.2). It discusses Oracle Database In-Memory for accelerating analytics, improvements for developers like support for JSON and RESTful services, capabilities for accessing big data using SQL, enhancements to Oracle Multitenant for database consolidation, and other performance improvements. The document also briefly outlines features like Oracle Rapid Home Provisioning, Database Backup Logging Recovery Appliance, and Oracle Key Vault.

What's New in Apache Hive 3.0 - Tokyo

By DataWorks Summit. The document discusses new features and enhancements in Apache Hive 3.0, including:

1. Improved transactional capabilities with ACID v2 that provide faster performance compared to previous versions while also supporting non-bucketed tables and non-ORC formats.

2. New materialized view functionality that allows queries to be rewritten to improve performance by leveraging pre-computed results stored in materialized views.

3. Enhancements to LLAP workload management that improve query scheduling and enable better sharing of resources across users.

What's New in Apache Hive 3.0?

By DataWorks Summit. Apache Hive is a rapidly evolving project that is widely loved in the big data ecosystem. Hive continues to expand its support for analytics, reporting, and interactive queries, and the community is working to improve that support along with many other aspects and use cases. In this talk, we introduce the latest and greatest features and optimizations that appeared in the project over the last year. These include benchmarks covering LLAP, materialized views and Apache Druid integration, workload management, ACID improvements, using Hive in the cloud, and performance improvements. I will also say a little about what you can expect in the future.

Apache Eagle - Monitor Hadoop in Real Time

By DataWorks Summit/Hadoop Summit. Apache Eagle is a distributed real-time monitoring and alerting engine for Hadoop created by eBay to address limitations of existing tools in handling large volumes of metrics and logs from Hadoop clusters. It provides data activity monitoring, job performance monitoring, and unified monitoring. Eagle detects anomalies using machine learning algorithms and notifies users through alerts. It has been deployed across multiple eBay clusters with over 10,000 nodes and processes hundreds of thousands of events per day.

SplunkLive! Frankfurt 2018 - Data Onboarding Overview

By Splunk. Presented at SplunkLive! Frankfurt 2018:

Splunk Data Collection Architecture

Apps and Technology Add-ons

Demos / Examples

Best Practices

Resources and Q&A

Real time analytics

By Leandro Totino Pereira. Getting real-time analytics for device, application, and business monitoring from trillions of events and petabytes of data, as companies like Netflix, Uber, Alibaba, PayPal, eBay, and Metamarkets do.

Real Time Data Processing Using Spark Streaming

By Hari Shreedharan. This is the talk I gave at the Seattle Spark Meetup in March 2015. I discussed some Spark Streaming fundamentals and its integration points with Kafka, Flume, etc.

Taming Big Data with Big SQL 3.0

By Nicolas Morales. This document discusses Big SQL 3.0, a SQL query engine for analyzing large datasets in Hadoop. Big SQL 3.0 leverages an advanced SQL compiler and native runtime to provide high performance SQL queries without requiring data to be copied. It supports features like stored procedures, functions, and comprehensive security including row and column level access controls. The document provides an overview of Big SQL 3.0's architecture and how it integrates with and utilizes existing Hadoop components to analyze data stored in HDFS and Hive.

Tugdual Grall - Real World Use Cases: Hadoop and NoSQL in Production

By Codemotion. What’s important about a technology is what you can use it to do. I’ve looked at what a number of groups are doing with Apache Hadoop and NoSQL in production, and I will relay what worked well for them and what did not. Drawing from real-world use cases, I show how people who understand these new approaches can employ them well in conjunction with traditional approaches and existing applications. Threat detection, data warehouse optimization, marketing efficiency, and biometric databases are some of the examples presented.

Application Architectures with Hadoop | Data Day Texas 2015

By Cloudera, Inc. This document discusses application architectures using Hadoop. It begins with an introduction to the speaker and his book on Hadoop architectures. It then presents a case study on clickstream analysis, describing how web logs could be analyzed in Hadoop. The document discusses challenges of Hadoop implementation and various architectural considerations for data storage, modeling, ingestion, processing and more. It focuses on choices for storage layers, file formats, schema design and processing engines like MapReduce, Spark and Impala.

Application Architectures with Hadoop

By hadooparchbook. This document discusses application architectures using Hadoop. It provides an example case study of clickstream analysis. It covers challenges of Hadoop implementation and various architectural considerations for data storage and modeling, data ingestion, and data processing. For data processing, it discusses different processing engines like MapReduce, Pig, Hive, Spark and Impala. It also discusses the specific processing needed for the clickstream data, such as sessionization and filtering.
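Sessionization is typically done by grouping clicks per user, sorting them by time, and starting a new session whenever the gap between consecutive clicks exceeds a timeout. A minimal in-memory Scala sketch of that idea; the `Click` fields, the `"<user>-<n>"` session id format, and the 30-minute default are assumptions, and a production pipeline would run the same logic in MapReduce, Spark, or Hive rather than on a local `Seq`:

```scala
// In-memory sessionization sketch; names and the 30-minute timeout are assumptions.
object Sessionization {
  // One web-log click; field names are illustrative, not from the talk.
  final case class Click(user: String, timestampSec: Long, url: String)

  // Tag each click with "<user>-<n>", where n increments whenever the gap since
  // the user's previous click exceeds timeoutSec.
  def sessionize(clicks: Seq[Click], timeoutSec: Long = 30L * 60): Seq[(Click, String)] =
    clicks.groupBy(_.user).toSeq.sortBy(_._1).flatMap { case (user, userClicks) =>
      val sorted = userClicks.sortBy(_.timestampSec).toVector
      // true at position i if click i starts a new session
      val startsNew = sorted.indices.map { i =>
        i > 0 && sorted(i).timestampSec - sorted(i - 1).timestampSec > timeoutSec
      }
      // running count of session starts gives each click's session number
      val sessionNums = startsNew.scanLeft(0)((n, isNew) => if (isNew) n + 1 else n).tail
      sorted.zip(sessionNums).map { case (click, n) => (click, s"$user-$n") }
    }

  def main(args: Array[String]): Unit = {
    val clicks = Seq(
      Click("u1", 0L, "/home"),
      Click("u1", 600L, "/product"),
      Click("u1", 600L + 31 * 60, "/cart"), // 31-minute gap: new session
      Click("u2", 100L, "/home")
    )
    sessionize(clicks).foreach { case (c, id) => println(s"$id ${c.url}") }
  }
}
```

Grouping by user first mirrors how a distributed job would partition the data, so each user's clicks can be sorted and scanned independently.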

Databricks Platform.pptx

By Alex Ivy. The document provides an overview of the Databricks platform, which offers a unified environment for data engineering, analytics, and AI. It describes how Databricks addresses the complexity of managing data across siloed systems by providing a single "data lakehouse" platform where all data and analytics workloads can be run. Key features highlighted include Delta Lake for ACID transactions on data lakes, Auto Loader for streaming data ingestion, notebooks for interactive coding, and governance tools to securely share and catalog data and models.

SplunkLive! Munich 2018: Data Onboarding Overview

By Splunk. Presented at SplunkLive! Munich 2018:

- Splunk Data Collection Architecture

- Apps & Technology Add-ons

- Demos / Examples

- Best Practices

- Resources

10 Big Data Technologies you Didn't Know About

By Jesus Rodriguez. This document introduces several big data technologies that are less well known than traditional solutions like Hadoop and Spark. It discusses Apache Flink for stream processing, Apache Samza for processing real-time data from Kafka, Google Cloud Dataflow which provides a managed service for batch and stream data processing, and StreamSets Data Collector for collecting and processing data in real-time. It also covers machine learning technologies like TensorFlow for building dataflow graphs, and cognitive computing services from Microsoft. The document aims to think beyond traditional stacks and learn from companies building pipelines at scale.

Unifying your data management with Hadoop

By Jayant Shekhar. This document discusses using Hadoop to unify data management. It describes challenges with managing huge volumes of fast-moving machine data and outlines an overall architecture using Hadoop components like HDFS, HBase, Solr, Impala and OpenTSDB to store, search, analyze and build features from different types of data. Key aspects of the architecture include intelligent search, batch and real-time analytics, parsing, time series data and alerts.


More from Gwen (Chen) Shapira (20)

Velocity 2019 - Kafka Operations Deep Dive

By Gwen (Chen) Shapira. In which disk-related failure scenarios of Apache Kafka are discussed in an unprecedented level of detail.

Lies Enterprise Architects Tell - Data Day Texas 2018 Keynote

By Gwen (Chen) Shapira. The document discusses lies that architects sometimes tell and truths they avoid. It provides examples of six common lies: 1) saying a system is real-time or has big data when it really has specific requirements, 2) claiming a microservices architecture exists when the goal is still to migrate, 3) saying hybrid/multi-cloud architectures don't exist when the architecture is just copy-pasted, 4) using "best of breed" when really using only one of everything, 5) claiming something can't be done at an organization due to its nature when other similar organizations succeeded, and 6) avoiding risk or change by safely interpreting things in a non-threatening way. The document advocates defining responsibilities clearly, embracing change, taking measured

Gluecon - Kafka and the service mesh

By Gwen (Chen) Shapira. Exploring the problem of microservices communication and how both Kafka and service mesh solutions address it. We then look at some approaches for combining both.

Multi-Cluster and Failover for Apache Kafka - Kafka Summit SF 17

By Gwen (Chen) Shapira. This document discusses disaster recovery strategies for Apache Kafka clusters running across multiple data centers. It outlines several failure scenarios, such as an entire data center being demolished, and recommends solutions like running a single Kafka cluster across multiple nearby data centers. It then describes a "stretch cluster" approach that uses 3 data centers with replication between them to provide high availability. The document also discusses active-active replication between two data center clusters and the challenge that consumer offsets are not identical across data centers during a failover. It recommends approaches like tracking timestamps and failing over consumers based on time.
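The timestamp-based failover idea can be sketched as follows: because the same message has different offsets in each data center, take the last timestamp the consumer committed, subtract a safety margin, and resume from the earliest offset in the other cluster whose timestamp is at or after that point. This hedged Scala sketch models a partition as an in-memory `Seq` ordered by offset; `Entry` and `failoverOffset` are hypothetical names. Newer Kafka clients expose `KafkaConsumer.offsetsForTimes` for the lookup step against a real cluster:

```scala
// Timestamp-based consumer failover sketch; the partition is modeled in memory.
object TimestampFailover {
  // One record in the surviving cluster's copy of a partition.
  final case class Entry(offset: Long, timestampMs: Long)

  // `log` is assumed to be ordered by offset. Rewinding by marginMs trades
  // possible duplicates for a lower risk of skipping messages.
  def failoverOffset(log: Seq[Entry], lastCommittedTsMs: Long, marginMs: Long): Option[Long] = {
    val target = lastCommittedTsMs - marginMs
    // A linear scan finds the earliest offset at or after the target even if
    // timestamps are not perfectly monotonic across offsets.
    log.find(_.timestampMs >= target).map(_.offset)
  }

  def main(args: Array[String]): Unit = {
    val log = Seq(Entry(100L, 1000L), Entry(101L, 2000L), Entry(102L, 3000L))
    println(failoverOffset(log, lastCommittedTsMs = 3000L, marginMs = 1000L)) // Some(101)
  }
}
```

A larger margin means more duplicates after failover but less chance of skipping messages, so consumers that fail over this way should be idempotent.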

Papers we love realtime at facebook

By Gwen (Chen) Shapira. Presentation for Papers We Love at QCon NYC 17. I didn't write the paper; good people at Facebook did. But I sure enjoyed reading it and presenting it.

Kafka reliability velocity 17

By Gwen (Chen) Shapira. The document discusses reliability guarantees in Apache Kafka. It explains that Kafka provides reliability through replication of data across multiple brokers. As long as the minimum number of in-sync replicas (ISRs) is maintained, messages will not be lost even if individual brokers fail. It also discusses best practices for producers and consumers to ensure data is not lost such as using acks=all for producers, disabling unclean leader election, committing offsets only after processing is complete, and monitoring for errors, lag and reconciliation of message counts.
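The practices listed above can be collected as plain `java.util.Properties`, which is how Kafka clients accept configuration. In this hedged Scala sketch, `acks`, `unclean.leader.election.enable`, `min.insync.replicas`, and `enable.auto.commit` are real Kafka configuration keys, but the value `2` and the helpers `canAcceptWrites` and `processThenCommit` are illustrative assumptions that only model broker and consumer behavior, without a Kafka dependency:

```scala
import java.util.Properties

// Reliability settings sketch; helper names and the value "2" are assumptions.
object ReliableKafkaConfig {
  private def props(pairs: (String, String)*): Properties = {
    val p = new Properties()
    pairs.foreach { case (k, v) => p.setProperty(k, v) }
    p
  }

  // Producer: a write succeeds only once all in-sync replicas have it.
  val producer: Properties = props("acks" -> "all")

  // Topic level: never elect an out-of-sync replica as leader, and reject acks=all
  // writes when fewer than 2 replicas are in sync (2 is an illustrative choice).
  val topic: Properties = props(
    "unclean.leader.election.enable" -> "false",
    "min.insync.replicas" -> "2"
  )

  // Consumer: turn off auto-commit so offsets are committed only after processing.
  val consumer: Properties = props("enable.auto.commit" -> "false")

  // Models the broker rule: with acks=all, writes fail when ISR size < min.insync.replicas.
  def canAcceptWrites(inSyncReplicas: Int, minInSync: Int): Boolean =
    inSyncReplicas >= minInSync

  // Process every record first, then return the offset to commit, which by Kafka
  // convention is the next offset to read (last processed + 1). If `process`
  // throws, nothing is committed and the records will be re-delivered.
  def processThenCommit(records: Seq[(Long, String)])(process: String => Unit): Option[Long] = {
    records.foreach { case (_, value) => process(value) }
    records.lastOption.map { case (lastOffset, _) => lastOffset + 1 }
  }

  def main(args: Array[String]): Unit = {
    val next = processThenCommit(Seq(41L -> "a", 42L -> "b"))(v => println(s"processed $v"))
    println(s"commit offset: $next") // commit offset: Some(43)
  }
}
```

Committing after processing gives at-least-once delivery: a crash between processing and commit causes redelivery rather than loss.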

Multi-Datacenter Kafka - Strata San Jose 2017

By Gwen (Chen) Shapira. This document discusses strategies for building large-scale stream infrastructures across multiple data centers using Apache Kafka. It outlines common multi-data center patterns like stretched clusters, active/passive clusters, and active/active clusters. It also covers challenges like maintaining ordering and consumer offsets across data centers and potential solutions.

Streaming Data Integration - For Women in Big Data Meetup

By Gwen (Chen) Shapira. A stream processing platform is not an island unto itself; it must be connected to all of your existing data systems, applications, and sources. In this talk, we will provide different options for integrating systems and applications with Apache Kafka, with a focus on the Kafka Connect framework and the ecosystem of Kafka connectors. We will discuss the intended use cases for Kafka Connect and share our experience and best practices for building large-scale data pipelines using Apache Kafka.

Kafka at scale facebook israel

By Gwen (Chen) Shapira. This document provides guidance on scaling Apache Kafka clusters and tuning performance. It discusses expanding Kafka clusters horizontally across inexpensive servers for increased throughput and CPU utilization. Key aspects that impact performance like disk layout, OS tuning, Java settings, broker and topic monitoring, client tuning, and anticipating problems are covered. Application performance can be improved through configuration of batch size, compression, and request handling, while consumer performance relies on partitioning, fetch settings, and avoiding perpetual rebalances.

Fraud Detection for Israel BigThings Meetup

By Gwen (Chen) Shapira. Modern data systems don't just process massive amounts of data, they need to do it very fast. Using fraud detection as a convenient example, this session will include best practices on how to build real-time data processing applications using Apache Kafka. We'll explain how Kafka makes real-time processing almost trivial, discuss the pros and cons of the famous lambda architecture, help you choose a stream processing framework and even talk about deployment options.

Kafka Reliability - When it absolutely, positively has to be there

By Gwen (Chen) Shapira. Kafka provides reliability guarantees through replication and configuration settings. It replicates data across multiple brokers to protect against failures. Producers can ensure data is committed to all in-sync replicas through configuration settings like request.required.acks. Consumers maintain offsets and can commit after processing to prevent data loss. Monitoring is also important to detect any potential issues or data loss in the Kafka system.

Nyc kafka meetup 2015 - when bad things happen to good kafka clusters

By Gwen (Chen) Shapira. This document contains stories of things that went wrong with production Kafka clusters in an effort to provide lessons learned. Some examples include losing all data by deleting an important topic, running Kafka with an outdated version, improperly configuring replication factors, and running Kafka logs in a temporary directory which resulted in data loss. The goal is to share these stories so others can learn from mistakes and better configure their Kafka clusters for reliability.

Fraud Detection Architecture

By Gwen (Chen) Shapira. This session will go into best practices and detail on how to architect a near real-time application on Hadoop using an end-to-end fraud detection case study as an example. It will discuss various options available for ingest, schema design, processing frameworks, storage handlers and others, available for architecting this fraud detection application and walk through each of the architectural decisions among those choices.

Kafka and Hadoop at LinkedIn Meetup

By Gwen (Chen) Shapira. The document discusses several methods for getting data from Kafka into Hadoop, including batch tools like Camus, Sqoop2, and NiFi. It also covers streaming options like using Kafka as a source in Hive with the HiveKa storage handler, Spark Streaming, and Storm. The presenter is a software engineer and former consultant who now works at Cloudera on projects including Sqoop, Kafka, and Flume. They also maintain a blog on these topics and discuss setting up and using Kafka in Cloudera Manager.

Kafka & Hadoop - for NYC Kafka Meetup

By Gwen (Chen) Shapira. The document discusses various options for getting data from Kafka into Hadoop, including Camus, Flume, Spark Streaming, and Storm. It provides information on how each works and their advantages and disadvantages. The presenter has 15 years of experience moving data and is now a Cloudera engineer working on projects like Flume, Sqoop, and Kafka.

R for hadoopers

By Gwen (Chen) Shapira. This document provides an overview of using R, Hadoop, and RHadoop for scalable analytics. It begins with introductions to basic R concepts like data types, vectors, lists, and data frames. It then covers Hadoop basics like MapReduce. Next, it discusses libraries for data manipulation in R like reshape2 and plyr. Finally, it focuses on RHadoop projects like RMR for implementing MapReduce in R and considerations for using RMR effectively.

Incredible Impala

By Gwen (Chen) Shapira. Cloudera Impala: The Open Source, Distributed SQL Query Engine for Big Data. The Cloudera Impala project is pioneering the next generation of Hadoop capabilities: the convergence of fast SQL queries with the capacity, scalability, and flexibility of an Apache Hadoop cluster. With Impala, the Hadoop ecosystem now has an open-source codebase that helps users query data stored in Hadoop-based enterprise data hubs in real time, using familiar SQL syntax.

This talk will begin with an overview of the challenges organizations face as they collect and process more data than ever before, followed by an overview of Impala from the user's perspective and a dive into Impala's architecture. It concludes with stories of how Cloudera's customers are using Impala and the benefits they see.

Data Wrangling and Oracle Connectors for Hadoop

By Gwen (Chen) Shapira. This document discusses loading data from Hadoop into Oracle databases using Oracle connectors. It describes how the Oracle Loader for Hadoop and Oracle SQL Connector for HDFS can load data from HDFS into Oracle tables much faster than traditional methods like Sqoop by leveraging parallel processing in Hadoop. The connectors optimize the loading process by automatically partitioning, sorting, and formatting the data into Oracle blocks to achieve high performance loads. Measuring the CPU time needed per gigabyte loaded allows estimating how long full loads will take based on available resources.
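The CPU-time-per-gigabyte measurement turns into a simple estimate: total CPU work divided by the number of cores doing it in parallel. A hedged Scala sketch; `LoadTimeEstimate` is a hypothetical name, the figures in `main` are made up for illustration, and the formula assumes the load is CPU-bound and scales linearly, which real loads only approximate:

```scala
// Back-of-the-envelope load-time estimate; all numbers in main are hypothetical.
object LoadTimeEstimate {
  // Wall-clock seconds = data size * measured CPU seconds per GB / parallel cores.
  // Assumes a CPU-bound, linearly scaling load with no I/O or network bottleneck.
  def estimateSeconds(dataGb: Double, cpuSecondsPerGb: Double, parallelCores: Int): Double = {
    require(parallelCores > 0, "need at least one core")
    dataGb * cpuSecondsPerGb / parallelCores
  }

  def main(args: Array[String]): Unit =
    // e.g. 1,000 GB at a measured 60 CPU-seconds per GB, spread over 100 cores
    println(estimateSeconds(dataGb = 1000, cpuSecondsPerGb = 60, parallelCores = 100)) // 600.0
}
```

Measuring `cpuSecondsPerGb` on a small sample load and plugging in the cluster's available cores gives a first-order answer to "how long will the full load take?".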

Scaling etl with hadoop shapira 3

By Gwen (Chen) Shapira. This document discusses scaling ETL processes with Hadoop. It describes using Hadoop for extracting data from various structured and unstructured sources, transforming data using MapReduce and other tools, and loading data into data warehouses or other targets. Specific techniques covered include using Sqoop and Flume for extraction, partitioning and tuning data structures for transformation, and loading data in parallel for scaling. Workflow management with Oozie and monitoring with Cloudera Manager are also discussed.

Is hadoop for you

By Gwen (Chen) Shapira.

1) Hadoop is well-suited for organizations that have large amounts of non-relational or unstructured data from sources like logs, sensor data, or social media. It allows for the distributed storage and parallel processing of such large datasets across clusters of commodity hardware.

2) Hadoop uses the Hadoop Distributed File System (HDFS) to reliably store large files across nodes in a cluster and allows for the parallel processing of data using the MapReduce programming model. This architecture provides benefits like scalability, flexibility, reliability, and low costs compared to traditional database solutions.

3) To get started with Hadoop, organizations should run some initial proof-of-concept projects using freely available cloud resources


It uses steam extracted from the turbine to preheat the feed water.

This reduces the fuel required to convert water into steam in the boiler.

It supports Regenerative Rankine Cycle, increasing plant efficiency.

🔍 Types of Feed Water Heaters:

Open Feed Water Heater (Direct Contact)

Steam and water come into direct contact.

Mixing occurs, and heat is transferred directly.

Common in low-pressure stages.

Closed Feed Water Heater (Surface Type)

Steam and water are separated by tubes.

Heat is transferred through tube walls.

Common in high-pressure systems.

⚙️ Advantages:

Improves thermal efficiency.

Reduces fuel consumption.

Lowers thermal stress on boiler components.

Minimizes corrosion by removing dissolved gases.

Avnet Silica's PCIM 2025 Highlights Flyer

Avnet Silica's PCIM 2025 Highlights FlyerWillDavies22 See what you can expect to find on Avnet Silica's stand at PCIM 2025.

Artificial Intelligence (AI) basics.pptx

Artificial Intelligence (AI) basics.pptxaditichinar its all about Artificial Intelligence(Ai) and Machine Learning and not on advanced level you can study before the exam or can check for some information on Ai for project

π0.5: a Vision-Language-Action Model with Open-World Generalization

π0.5: a Vision-Language-Action Model with Open-World GeneralizationNABLAS株式会社 今回の資料「Transfusion / π0 / π0.5」は、画像・言語・アクションを統合するロボット基盤モデルについて紹介しています。

拡散×自己回帰を融合したTransformerをベースに、π0.5ではオープンワールドでの推論・計画も可能に。

This presentation introduces robot foundation models that integrate vision, language, and action.

Built on a Transformer combining diffusion and autoregression, π0.5 enables reasoning and planning in open-world settings.

Lidar for Autonomous Driving, LiDAR Mapping for Driverless Cars.pptx

Lidar for Autonomous Driving, LiDAR Mapping for Driverless Cars.pptxRishavKumar530754 LiDAR-Based System for Autonomous Cars

Autonomous Driving with LiDAR Tech

LiDAR Integration in Self-Driving Cars

Self-Driving Vehicles Using LiDAR

LiDAR Mapping for Driverless Cars

Value Stream Mapping Worskshops for Intelligent Continuous Security

Value Stream Mapping Worskshops for Intelligent Continuous SecurityMarc Hornbeek This presentation provides detailed guidance and tools for conducting Current State and Future State Value Stream Mapping workshops for Intelligent Continuous Security.

AI-assisted Software Testing (3-hours tutorial)

AI-assisted Software Testing (3-hours tutorial)Vəhid Gəruslu Invited tutorial at the Istanbul Software Testing Conference (ISTC) 2025 https://iststc.com/

ELectronics Boards & Product Testing_Shiju.pdf

ELectronics Boards & Product Testing_Shiju.pdfShiju Jacob This presentation provides a high level insight about DFT analysis and test coverage calculation, finalizing test strategy, and types of tests at different levels of the product.

Process Parameter Optimization for Minimizing Springback in Cold Drawing Proc...

Process Parameter Optimization for Minimizing Springback in Cold Drawing Proc...Journal of Soft Computing in Civil Engineering

Data Architectures for Robust Decision Making

- 1. Designing Data Architectures for Robust Decision Making Gwen Shapira / Software Engineer

- 2. About Me • 15 years of moving data around • Formerly a consultant • Now a Cloudera engineer: – Sqoop committer – Kafka – Flume • @gwenshap (©2014 Cloudera, Inc. All rights reserved.)

- 3. There’s a book on that!

- 4. About you: You know Hadoop

- 5. “Big Data” is stuck at The Lab.

- 6. We want to move to The Factory

- 8. What does it mean to “Systemize”? • Ability to easily add new data sources • Easily improve and expand analytics • Ease data access by standardizing metadata and storage • Ability to discover mistakes and to recover from them • Ability to safely experiment with new approaches

- 9. We will discuss: • Architectures • Patterns • Ingest • Storage • Schemas • Metadata • Streaming • Experimenting • Recovery. We will not discuss: • Actual decision making • Data science • Machine learning • Algorithms

- 10. So how do we build real data architectures?

- 11. The Data Bus

- 12. Data pipelines start like this. (Diagram: a Client and a Source.)

- 13. Then we reuse them. (Diagram: four Clients and one Source.)

- 14. Then we add consumers to the existing sources. (Diagram: four Clients, a Backend, and Another Backend.)

- 15. Then it starts to look like this. (Diagram: four Clients, a Backend, and three more backends.)

- 16. With maybe some of this. (Diagram: the same Clients and backends.)

- 17. Adding applications should be easier. We need: • Shared infrastructure for sending records • Infrastructure that scales • A set of agreed-upon record schemas

- 18. Kafka-Based Ingest Architecture: Kafka decouples data pipelines. (Diagram: four Source Systems send data through Kafka producers to the Kafka brokers; Kafka consumers deliver it to Hadoop, Security Systems, Real-time monitoring, and the Data Warehouse.)
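The decoupling on this slide can be illustrated without a running broker. The sketch below is a hypothetical in-memory stand-in, not the Kafka client API: producers only append records to a named topic, and every consumer tracks its own read position, so adding a new downstream system such as a data warehouse loader requires no change to the producers or to the existing consumers.

```scala
import scala.collection.mutable

// Hypothetical in-memory stand-in for a data bus such as Kafka (not the Kafka API).
// Producers only append; each consumer tracks its own offset per topic.
class DataBus {
  private val topics = mutable.Map.empty[String, mutable.ArrayBuffer[String]]
  // (consumer, topic) -> offset of the next record that consumer will read
  private val offsets = mutable.Map.empty[(String, String), Int]

  def publish(topic: String, record: String): Unit =
    topics.getOrElseUpdate(topic, mutable.ArrayBuffer.empty[String]) += record

  // Returns records this consumer has not seen yet and advances only its own offset.
  def poll(consumer: String, topic: String): Seq[String] = {
    val log = topics.getOrElse(topic, mutable.ArrayBuffer.empty[String])
    val from = offsets.getOrElse((consumer, topic), 0)
    offsets((consumer, topic)) = log.length
    log.slice(from, log.length).toList
  }
}

val bus = new DataBus
bus.publish("orders", "order-1")
bus.publish("orders", "order-2")
val hadoopBatch = bus.poll("hadoop", "orders")       // List(order-1, order-2)
bus.publish("orders", "order-3")
val warehouseBatch = bus.poll("warehouse", "orders") // a new consumer starts from the beginning
val hadoopNext = bus.poll("hadoop", "orders")        // List(order-3)
```

In real Kafka the per-consumer position corresponds to offsets committed per consumer group; this toy version keeps everything in one process and ignores partitions and retention limits.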

- 19. Retain All Data

- 20. Data Pipeline – Traditional View. (Diagram: raw data is cleaned, enriched, and aggregated; only the input and the output are treated as useful, and the intermediate stages are labeled “waste of disk space”.)

- 21. It is all valuable data. (Diagram: the same raw, clean, enriched, filtered, and aggregated data, each stage feeding a consumer such as a dashboard, a report, a data scientist, or alerts. “OMG”)

- 22. Hadoop-Based ETL – The FileSystem is the DB
  • /user/…
  • /user/gshapira/testdata/orders
  • /data/<database>/<table>/<partition>
  • /data/<biz unit>/<app>/<dataset>/partition
  • /data/pharmacy/fraud/orders/date=20131101
  • /etl/<biz unit>/<app>/<dataset>/<stage>
  • /etl/pharmacy/fraud/orders/validated

- 23. Store intermediate data
  • /etl/<biz unit>/<app>/<dataset>/<stage>/<dataset_id>
  • /etl/pharmacy/fraud/orders/raw/date=20131101
  • /etl/pharmacy/fraud/orders/deduped/date=20131101
  • /etl/pharmacy/fraud/orders/validated/date=20131101
  • /etl/pharmacy/fraud/orders_labs/merged/date=20131101
  • /etl/pharmacy/fraud/orders_labs/aggregated/date=20131101
  • /etl/pharmacy/fraud/orders_labs/ranked/date=20131101
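The naming convention above is regular enough to generate. A minimal sketch, assuming the `<dataset_id>` is a `date=YYYYMMDD` partition as in the slide's examples; `etlPath` and the `Stages` list are hypothetical names for illustration:

```scala
import java.time.LocalDate
import java.time.format.DateTimeFormatter

// Hypothetical stage list for the orders dataset, in pipeline order.
val Stages = List("raw", "deduped", "validated")

// Builds /etl/<biz unit>/<app>/<dataset>/<stage>/date=YYYYMMDD.
def etlPath(bizUnit: String, app: String, dataset: String, stage: String, date: LocalDate): String =
  s"/etl/$bizUnit/$app/$dataset/$stage/date=${date.format(DateTimeFormatter.BASIC_ISO_DATE)}"

val day = LocalDate.of(2013, 11, 1)
// One directory per stage, so every intermediate result stays available
// for debugging or for re-running a later stage.
val paths = Stages.map(stage => etlPath("pharmacy", "fraud", "orders", stage, day))
paths.foreach(println)
```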

- 24. Batch ETL is old news

- 25. Small Problem! • HDFS is optimized for large chunks of data • Don’t write individual events or micro-batches • Think 100 MB to 2 GB batches • What do we do with small events?
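One way to reconcile small events with HDFS's preference for large files is to buffer events and write them out only once a buffer is big enough. The sketch below is hypothetical: `SizeBatcher` is an illustrative name, and its `flush` callback stands in for whatever code would actually write one file to HDFS.

```scala
import scala.collection.mutable

// Hypothetical helper: accumulate small events and hand them to `flush` only when
// the buffered size reaches `thresholdBytes` (e.g. ~100 MB in production).
class SizeBatcher(thresholdBytes: Long, flush: Seq[Array[Byte]] => Unit) {
  private val buffer = mutable.ArrayBuffer.empty[Array[Byte]]
  private var bufferedBytes = 0L

  def add(event: Array[Byte]): Unit = {
    buffer += event
    bufferedBytes += event.length
    if (bufferedBytes >= thresholdBytes) flushNow()
  }

  // Writes out whatever is buffered, e.g. at shutdown or from a timer.
  def flushNow(): Unit =
    if (buffer.nonEmpty) {
      flush(buffer.toList) // copy before clearing the buffer
      buffer.clear()
      bufferedBytes = 0L
    }
}

// Usage with a tiny threshold so the behaviour is visible:
// seven 4-byte events and a 10-byte threshold give "files" of 3, 3 and 1 events.
val written = mutable.ArrayBuffer.empty[Int] // number of events per "file"
val batcher = new SizeBatcher(10, events => written += events.size)
(1 to 7).foreach(_ => batcher.add(Array.fill[Byte](4)(0)))
batcher.flushNow()
```

In practice a time-based flush is usually needed as well, so that a slow trickle of events does not sit in memory indefinitely; `flushNow()` is the hook for that.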

- 26. Well, we have this data bus… (Diagram: a topic split into Partition 1, Partition 2, and Partition 3, each an ordered log of numbered offsets starting at 0; writes are appended at the new end, and older records remain in place.)
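Each partition in the diagram is an append-only sequence of numbered offsets. A minimal model, assuming records are assigned to partitions by hashing their key: `PartitionedLog` is hypothetical, and hashing with `String.hashCode` is a simplification (Kafka's default partitioner uses a murmur2 hash for keyed records). Records with the same key land in the same partition, keep their relative order there, and stay readable from any earlier offset.

```scala
import scala.collection.mutable

// Hypothetical model of a topic with N partitions, each an append-only log.
class PartitionedLog(numPartitions: Int) {
  private val partitions =
    Vector.fill(numPartitions)(mutable.ArrayBuffer.empty[(String, String)])

  // Simplified partitioner: same key -> same partition (floorMod keeps the index non-negative).
  def partitionFor(key: String): Int = Math.floorMod(key.hashCode, numPartitions)

  // Appends at the "new" end and returns (partition, offset) of the written record.
  def append(key: String, value: String): (Int, Int) = {
    val p = partitionFor(key)
    partitions(p) += (key -> value)
    (p, partitions(p).length - 1)
  }

  // Nothing is deleted on write, so reading from offset 0 replays the whole partition.
  def read(partition: Int, fromOffset: Int): Seq[(String, String)] =
    partitions(partition).drop(fromOffset).toList
}

val log = new PartitionedLog(3)
val (p1, o1) = log.append("customer-42", "order-1")
val (p2, o2) = log.append("customer-42", "order-2")
```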

- 27. Kafka has topics. How about <biz unit>.<app>.<dataset>.<stage>? • pharmacy.fraud.orders.raw • pharmacy.fraud.orders.deduped • pharmacy.fraud.orders.validated • pharmacy.fraud.orders_labs.merged
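A helper can build and check names in this scheme. A sketch with hypothetical function names; the validation follows Kafka's topic-name rules (ASCII letters, digits, `.`, `_` and `-`, at most 249 characters).

```scala
// Hypothetical helper for the <biz unit>.<app>.<dataset>.<stage> convention.
def topicName(bizUnit: String, app: String, dataset: String, stage: String): String = {
  val parts = List(bizUnit, app, dataset, stage)
  // A dot inside a component would make the name ambiguous to parse back.
  require(parts.forall(p => p.nonEmpty && !p.contains('.')), s"bad component in $parts")
  val name = parts.mkString(".")
  // Character set and length limit from Kafka's topic-name rules.
  require(name.matches("[a-zA-Z0-9._-]{1,249}"), s"illegal Kafka topic name: $name")
  name
}

// Splits a conventional name back into its four components.
def parseTopic(name: String): Option[(String, String, String, String)] =
  name.split('.') match {
    case Array(biz, app, dataset, stage) => Some((biz, app, dataset, stage))
    case _                               => None
  }

val merged = topicName("pharmacy", "fraud", "orders_labs", "merged")
```

Kafka also warns that `.` and `_` can collide in metric names, so under this convention two topics should never differ only by `.` versus `_`.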

- 28. It’s (almost) all topics. (Diagram: the raw, clean, filtered, enriched, and aggregated data, feeding the dashboard, report, data scientist, and alerts. “OMG”)

- 29. Benefits • Recover from accidents • Debug suspicious results • Fix algorithm errors • Experiment with new algorithms • Expand pipelines • Jump-start expanded pipelines

- 30. Kinda Lambda

- 31. Lambda Architecture • Immutable events • Store intermediate stages • Combine batches and streams • Reprocessing
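The “combine batches and streams” step can be pictured as a query that merges two views at read time. In this hypothetical sketch, `batchView` holds counts computed by the batch layer up to its last run and `speedView` holds counts for events that arrived since then; both are plain maps with made-up numbers.

```scala
// Hypothetical Lambda-style serving query: the batch view (complete up to the last
// batch run) plus the speed view (events since then), merged by key at read time.
def mergeViews(batchView: Map[String, Long], speedView: Map[String, Long]): Map[String, Long] =
  (batchView.keySet ++ speedView.keySet).map { k =>
    k -> (batchView.getOrElse(k, 0L) + speedView.getOrElse(k, 0L))
  }.toMap

val batchView = Map("orders" -> 1000L, "refunds" -> 12L)
val speedView = Map("orders" -> 7L, "chargebacks" -> 1L)
val combined = mergeViews(batchView, speedView)
```

The two views must be produced by two separate code paths that compute the same thing, which is exactly the duplication the next slide complains about.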

- 32. What we don’t like: maintaining two applications, often in two languages, that do the same thing.

- 33. Pain Avoidance #1 – Use Spark + Spark Streaming • Spark is awesome for batch, so why not? – The New Kid that isn’t that New Anymore – Easily 10x less code – Extremely Easy and Powerful API – Very good for machine learning – Scala, Java, and Python – RDDs – DAG Engine

- 34. Spark Streaming • Calling Spark in a Loop • Extends RDDs with DStream • Very Little Code Changes from ETL to Streaming
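“Calling Spark in a loop” can be illustrated in plain Scala. In this sketch (no Spark APIs; `countErrors` and the `ERROR` marker are assumptions), the function that processes a whole dataset in batch is applied unchanged to each micro-batch. Grouping by a fixed number of records is a simplification: Spark Streaming cuts micro-batches by time interval.

```scala
// Hypothetical batch logic: count lines that contain an "ERROR" marker.
def countErrors(lines: Seq[String]): Int = lines.count(_.contains("ERROR"))

val events = Vector("INFO start", "ERROR disk", "INFO ok", "ERROR net", "ERROR disk", "INFO done")

// Batch: run once over everything that has arrived.
val batchTotal = countErrors(events)

// "Streaming": cut the input into micro-batches (here by record count, not by time)
// and run the very same function on each one in a loop.
val perBatch = events.grouped(3).map(countErrors).toList
```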

- 35. Spark Streaming (Diagram: before the first batch, a Receiver starts reading from the Source; in the first and second batches, the received data becomes RDDs that go through a single pass of Filter, Count, and Print.)

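- 36. Small Example

    import org.apache.spark.SparkConf
    import org.apache.spark.storage.StorageLevel
    import org.apache.spark.streaming.{Seconds, StreamingContext}
    // Older Spark versions need this import for pair-DStream operations such as updateStateByKey
    import org.apache.spark.streaming.StreamingContext._

    val sparkConf = new SparkConf()
      .setMaster(args(0)).setAppName(this.getClass.getCanonicalName)
    // 10-second micro-batches
    val ssc = new StreamingContext(sparkConf, Seconds(10))
    // updateStateByKey requires checkpointing; args(3) as the checkpoint directory is a hypothetical addition
    ssc.checkpoint(args(3))
    // Create the DStream from data sent over the network
    val dStream = ssc.socketTextStream(args(1), args(2).toInt, StorageLevel.MEMORY_AND_DISK_SER)
    // Counting the errors in each RDD in the stream (ErrorCount.countErrors is not shown on the slide)
    val errCountStream = dStream.transform(rdd => ErrorCount.countErrors(rdd))
    // Running total per key: this batch's counts plus the previous state
    val updateFunc = (values: Seq[Int], state: Option[Int]) => Option(values.sum + state.getOrElse(0))
    val stateStream = errCountStream.updateStateByKey[Int](updateFunc)
    errCountStream.foreachRDD(rdd => {
      // take(1) instead of first(), which throws on an empty batch
      rdd.take(1).foreach { case (_, count) => println("Errors this batch: %d".format(count)) }
    })
    stateStream.print()
    ssc.start()
    ssc.awaitTermination()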
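To see what the `updateStateByKey` step computes, the plain-Scala sketch below (no Spark dependency) reproduces its documented semantics: for each key, the update function receives the values that arrived in the current batch plus the previous state, keys already in the state are updated even when no new values arrived, and returning `None` drops the key. The `step` helper and the sample batches are hypothetical; the update function has the `(Seq[V], Option[S]) => Option[S]` shape that `updateStateByKey` expects.

```scala
// Running-total update function: (new values in this batch, previous state) => new state.
val updateFunc = (values: Seq[Int], state: Option[Int]) => Option(values.sum + state.getOrElse(0))

// Hypothetical helper applying one batch of (key, value) pairs to the state:
// every key already in the state is updated too (with an empty Seq if it had
// no new values), and a key is dropped if the function returns None.
def step(state: Map[String, Int], batch: Seq[(String, Int)],
         f: (Seq[Int], Option[Int]) => Option[Int]): Map[String, Int] = {
  val newValues = batch.groupBy(_._1).map { case (k, kvs) => k -> kvs.map(_._2) }
  val keys = state.keySet ++ newValues.keySet
  keys.flatMap(k => f(newValues.getOrElse(k, Seq.empty), state.get(k)).map(k -> _)).toMap
}

// Made-up per-batch error counts; the middle batch contains no errors.
val batches = List(Seq("ERROR" -> 2), Seq.empty[(String, Int)], Seq("ERROR" -> 3))
val states = batches.scanLeft(Map.empty[String, Int])((s, b) => step(s, b, updateFunc))
```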

- 37. Pain Avoidance #2 – Split the Stream. Why do we even need stream + batch? • Batch efficiencies • Re-process to fix errors • Re-process after delayed arrival. What if we could re-play data?

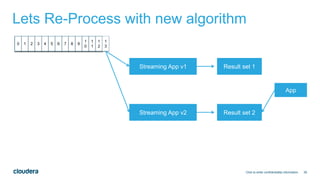

- 38. Let’s Re-Process with a New Algorithm (Diagram: a retained partition with offsets 0 to 13; Streaming App v1 writes Result set 1 while Streaming App v2 reads the same partition and writes Result set 2; the App reads from the result sets.)
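Re-processing works because the log is retained: a new version of the application simply reads the same partition again from offset 0 and writes to a separate result set. A hypothetical sketch, where the two “versions” are illustrative fraud rules that differ only in their threshold and the amounts are made up:

```scala
// Retained log of order amounts; the index of each element is its offset.
val retainedLog: Vector[Double] = Vector(12.0, 250.0, 8.5, 990.0, 40.0)

// Hypothetical v1 and v2 of a fraud rule: only the threshold differs.
def appV1(amount: Double): Boolean = amount > 500.0
def appV2(amount: Double): Boolean = amount > 200.0

// Reads the log from `fromOffset` and returns the offsets the rule flags.
def replay(log: Vector[Double], fromOffset: Int, rule: Double => Boolean): Vector[Int] =
  log.zipWithIndex.drop(fromOffset).collect { case (amount, offset) if rule(amount) => offset }

val resultSet1 = replay(retainedLog, 0, appV1)
val resultSet2 = replay(retainedLog, 0, appV2)
```

Once result set 2 has been checked, the application can switch to it and v1 can be stopped; the log itself is never modified.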


- 40. Oh no, we just got a bunch of data for yesterday! (Diagram: a partition with offsets 0 to 13 read by two Streaming App instances, one for Today and one for Yesterday.)
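Handling a late burst like this relies on knowing each record's event date, not just when it arrived. A minimal sketch, assuming each record carries its own event date (the `Event` class and `splitByDay` are hypothetical): arrivals are split so that a second instance of the streaming app can process the late records while the main instance keeps up with today.

```scala
import java.time.LocalDate

// Hypothetical event that carries its own event-time date.
final case class Event(id: String, eventDate: LocalDate)

// Routes arrivals by event date rather than arrival time:
// (records for today, late records for earlier days).
def splitByDay(arrivals: Seq[Event], today: LocalDate): (Seq[Event], Seq[Event]) =
  arrivals.partition(_.eventDate == today)

val today = LocalDate.of(2013, 11, 1)
val arrivals = Seq(Event("a", today), Event("b", today.minusDays(1)), Event("c", today.minusDays(1)))
val (forToday, late) = splitByDay(arrivals, today)
```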

- 41. Note: No need to choose between the approaches. There are good reasons to do both.

- 42. Prediction: Batch vs. Streaming distinction is going away.

- 43. Yes, you really need a Schema

- 44. Schema is a MUST HAVE for data integration

- 46. Remember that we want this? (Diagram: four Source Systems send data through Kafka producers and brokers; Kafka consumers deliver it to Hadoop, Security Systems, Real-time monitoring, and the Data Warehouse.)

- 47. This means we need this: (Diagram: the same Source Systems, Kafka, Hadoop, Security Systems, Real-time monitoring, and Data Warehouse, plus a Schema Repository.)

- 48. We can do it in a few ways: • People go around asking each other: “So, what does the 5th field of the messages in topic Blah contain?” • There’s utility code for reading/writing messages that everyone reuses • Schema embedded in the message • A centralized repository for schemas – Each message has a schema ID – Each topic has a schema ID
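With a centralized repository, each message only needs to carry a small schema ID. The sketch below assumes a hypothetical framing of one magic byte, then a 4-byte big-endian schema ID, then the payload. This layout resembles the wire format used by Confluent's Schema Registry, but here the repository is just an in-memory map and the schema strings are placeholders.

```scala
import java.nio.ByteBuffer
import java.nio.charset.StandardCharsets.UTF_8

// Hypothetical in-memory schema repository: schema ID -> schema definition.
val registry: Map[Int, String] = Map(1 -> "orders-v1 schema", 2 -> "orders-v2 schema")

val MagicByte: Byte = 0

// Frame = magic byte | 4-byte big-endian schema ID | payload bytes.
def encode(schemaId: Int, payload: Array[Byte]): Array[Byte] =
  ByteBuffer.allocate(1 + 4 + payload.length).put(MagicByte).putInt(schemaId).put(payload).array()

// Reads the schema ID, looks the schema up, and returns it with the raw payload.
def decode(frame: Array[Byte]): (String, Array[Byte]) = {
  val buf = ByteBuffer.wrap(frame) // ByteBuffer is big-endian by default
  require(buf.get() == MagicByte, "unknown framing")
  val schemaId = buf.getInt()
  val schema = registry.getOrElse(schemaId, throw new NoSuchElementException(s"no schema $schemaId"))
  val payload = new Array[Byte](buf.remaining())
  buf.get(payload)
  (schema, payload)
}

val frame = encode(2, "order-7".getBytes(UTF_8))
val (schema, payload) = decode(frame)
```

Consumers no longer need to ask what the 5th field contains; they look the schema up by ID, which also lets different messages in one topic use different schema versions.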