Data Integration through Data Virtualization (SQL Server Konferenz 2019)

Data Integration through Data Virtualization - PolyBase and new SQL Server 2019 Features (Presented at SQL Server Konferenz 2019 on February 21st, 2019)

![Create External Table

CREATE EXTERNAL TABLE [SchemaName].[TableName] (

[ColumnName] INT NOT NULL

) WITH (

LOCATION = '<FileName>',

DATA_SOURCE = <DataSourceName>,

FILE_FORMAT = <FileFormatName>

)](https://image.slidesharecdn.com/sqlkonferenz-cathrinewilhelmsen-datavirtualization-190221100634/85/Data-Integration-through-Data-Virtualization-SQL-Server-Konferenz-2019-78-320.jpg)
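As a rough illustration of how the placeholders in the template above get filled in, the following Python sketch renders the statement from concrete values. The helper names (`quote_ident`, `quote_literal`, `render_external_table`) and the example values (`ext`, `Orders`, `/data/orders.csv`, `HadoopSource`, `CsvFormat`) are hypothetical and do not come from the talk. The sketch only builds the statement text; it does not connect to SQL Server.

```python
def quote_ident(name: str) -> str:
    """Bracket-quote a T-SQL identifier, doubling any closing bracket."""
    return "[" + name.replace("]", "]]") + "]"


def quote_literal(value: str) -> str:
    """Single-quote a T-SQL string literal, doubling embedded quotes."""
    return "'" + value.replace("'", "''") + "'"


def render_external_table(schema, table, columns, location, data_source, file_format):
    """Render a CREATE EXTERNAL TABLE statement following the slide's template.

    columns is a list of (name, type_sql) pairs. type_sql is passed through
    unchanged, so it must come from trusted input.
    """
    column_lines = ",\n".join(
        f"    {quote_ident(name)} {type_sql}" for name, type_sql in columns
    )
    return (
        f"CREATE EXTERNAL TABLE {quote_ident(schema)}.{quote_ident(table)} (\n"
        f"{column_lines}\n"
        ") WITH (\n"
        f"    LOCATION = {quote_literal(location)},\n"
        f"    DATA_SOURCE = {quote_ident(data_source)},\n"
        f"    FILE_FORMAT = {quote_ident(file_format)}\n"
        ")"
    )


if __name__ == "__main__":
    # Hypothetical example values, chosen only to show the filled-in template.
    print(render_external_table(
        "ext", "Orders",
        [("OrderId", "INT NOT NULL"), ("Comment", "NVARCHAR(4000) NULL")],
        "/data/orders.csv", "HadoopSource", "CsvFormat",
    ))
```

The location is rendered as a quoted string literal, while the data source and file format are rendered as identifiers, mirroring the three `WITH` options on the slide.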

![Unexpected error encountered filling record

reader buffer: HadoopExecutionException:

Too long string in column [-1]:

Actual len = [4242]. MaxLEN=[4000]](https://image.slidesharecdn.com/sqlkonferenz-cathrinewilhelmsen-datavirtualization-190221100634/85/Data-Integration-through-Data-Virtualization-SQL-Server-Konferenz-2019-92-320.jpg)
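The error above reports a value of length 4242 against a maximum of 4000. One way to catch such values before querying is a pre-load scan of the source file. The sketch below assumes the source is a delimited text file and that the limit counts characters; both are assumptions for illustration, as are the function name `find_long_fields` and the sample data. It only reports offending fields and is not part of PolyBase.

```python
import csv
import io

MAX_LEN = 4000  # limit quoted in the error message (MaxLEN=[4000])


def find_long_fields(lines, max_len=MAX_LEN, delimiter=","):
    """Yield (row_number, column_index, length) for each field longer than max_len.

    lines is any iterable of text lines, for example an open file object.
    Row numbers are 1-based; column indexes are 0-based.
    """
    for row_number, row in enumerate(csv.reader(lines, delimiter=delimiter), start=1):
        for column_index, field in enumerate(row):
            if len(field) > max_len:
                yield row_number, column_index, len(field)


if __name__ == "__main__":
    # Hypothetical sample: the second row carries a 4242-character field.
    sample = io.StringIO("1,short\n2," + "x" * 4242 + "\n")
    for row_number, column_index, length in find_long_fields(sample):
        print(f"row {row_number}, column {column_index}: length {length} exceeds {MAX_LEN}")
```

Rows flagged this way can then be truncated, split, or excluded at the source, depending on what the data allows.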

Recommended

Data virtualization, Data Federation & IaaS with Jboss Teiid

Anil Allewar: Enterprises have always grappled with the problem of information silos that needed to be merged using multiple data warehouses (DWs) and business intelligence (BI) tools so that enterprises could mine this disparate data for business decisions and strategy. Traditionally, this data integration was done with ETL by consolidating multiple DBMSs into a single data storage facility.

Data virtualization enables abstraction, transformation, federation, and delivery of data taken from a variety of heterogeneous data sources as if it were a single virtual data source, without the need to physically copy the data for integration. It allows consuming applications or users to access data from these various sources via a request to a single access point and delivers information-as-a-service (IaaS).

In this presentation, we will explore what data virtualization is and how it differs from the traditional data integration architecture. We’ll also look at validating the data virtualization and federation concepts by working through an example (see videos at the GitHub repo) that federates data across two heterogeneous data sources, MySQL and MongoDB, using the JBoss Teiid data virtualization platform.

Data Platform Overview

Hamid J. Fard: The document discusses how businesses need to build a data strategy and modernize their data platforms to harness the power of data from diverse and growing sources. It provides examples of how organizations like healthcare and energy companies are using technologies like machine learning, real-time analytics, and predictive modeling on data from various sources to improve outcomes, predict trends, and drive business decisions. The Microsoft data platform is positioned as helping businesses manage both traditional and new forms of data, gain insights faster, and transform into data-driven organizations through offerings like SQL Server, Azure, Power BI, and the Internet of Things.

Introduction to Azure Data Lake

Antonios Chatzipavlis: This document provides an introduction and overview of Azure Data Lake. It describes Azure Data Lake as a single store of all data ranging from raw to processed that can be used for reporting, analytics and machine learning. It discusses key Azure Data Lake components like Data Lake Store, Data Lake Analytics, HDInsight and the U-SQL language. It compares Data Lakes to data warehouses and explains how Azure Data Lake Store, Analytics and U-SQL process and transform data at scale.

Azure Lowlands: An intro to Azure Data Lake

Rick van den Bosch: These are the slides for my talk "An intro to Azure Data Lake" at Azure Lowlands 2019. The session was held on Friday January 25th from 14:20 - 15:05 in room Santander.

Azure Data Factory V2; The Data Flows

Thomas Sykes: The document discusses Azure Data Factory V2 data flows. It will provide an introduction to Azure Data Factory, discuss data flows, and have attendees build a simple data flow to demonstrate how they work. The speaker will introduce Azure Data Factory and data flows, explain concepts like pipelines, linked services, and data flows, and guide a hands-on demo where attendees build a data flow to join customer data to postal district data to add matching postal towns.

Choosing technologies for a big data solution in the cloud

James Serra: Has your company been building data warehouses for years using SQL Server? And are you now tasked with creating or moving your data warehouse to the cloud and modernizing it to support “Big Data”? What technologies and tools should you use? That is what this presentation will help you answer. First we will cover what questions to ask concerning data (type, size, frequency), reporting, performance needs, on-prem vs cloud, staff technology skills, OSS requirements, cost, and MDM needs. Then we will show you common big data architecture solutions and help you to answer questions such as: Where do I store the data? Should I use a data lake? Do I still need a cube? What about Hadoop/NoSQL? Do I need the power of MPP? Should I build a "logical data warehouse"? What is this lambda architecture? Can I use Hadoop for my DW? Finally, we’ll show some architectures of real-world customer big data solutions. Come to this session to get started down the path to making the proper technology choices in moving to the cloud.

Data Virtualization Primer - Introduction

Kenneth Peeples: This document provides an introduction to data virtualization and JBoss Data Virtualization. It discusses key concepts like data services, connectors, models, and virtual databases. It describes the architecture and components of JBoss Data Virtualization including tools for creating data views, the runtime environment, and repository. Use cases like business intelligence, 360-degree views, cloud data integration, and big data integration are also covered.

Microsoft Data Platform - What's included

James Serra: This document provides an overview of a speaker and their upcoming presentation on Microsoft's data platform. The speaker is a 30-year IT veteran who has worked in various roles including BI architect, developer, and consultant. Their presentation will cover collecting and managing data, transforming and analyzing data, and visualizing and making decisions from data. It will also discuss Microsoft's various product offerings for data warehousing and big data solutions.

Data Modeling on Azure for Analytics

Ike Ellis: When you model data you are making two decisions:

* The location where data will be stored

* How the data will be organized for ease of use

Azure Synapse Analytics Overview (r1)

James Serra: Azure Synapse Analytics is Azure SQL Data Warehouse evolved: a limitless analytics service that brings together enterprise data warehousing and Big Data analytics into a single service. It gives you the freedom to query data on your terms, using either serverless on-demand or provisioned resources, at scale. Azure Synapse brings these two worlds together with a unified experience to ingest, prepare, manage, and serve data for immediate business intelligence and machine learning needs. This is a huge deck with lots of screenshots so you can see exactly how it works.

Data Virtualization and ETL

Lily Luo: This document discusses the limitations of traditional ETL processes and how data virtualization can help address these issues. Specifically:

- ETL processes are complex, involve costly data movement between systems, and can result in data inconsistencies due to latency.

- Data virtualization eliminates data movement by providing unified access to data across different systems. It enables queries to retrieve integrated data in real-time without physical replication.

- Rocket Data Virtualization is a mainframe-resident solution that reduces TCO by offloading up to 99% of data integration processing to specialty engines, simplifying access to mainframe data via SQL.

Encompassing Information Integration

nguyenfilip: This document provides an overview of Teiid, an open source data integration platform. It begins with an agenda that covers what Teiid is, basic usage, tooling, demonstrations, internals, heterogeneous data sources, enterprise data services, and how attendees can get involved. The document then delves into explanations of Teiid's history, architecture, modeling tooling, dynamic and static virtual databases, tooling like the console and admin shell, and how it works internally with parsing, optimization, and federation. It concludes by discussing how developers can contribute to Teiid and opportunities for students.

Introducing Azure SQL Data Warehouse

James Serra: The new Microsoft Azure SQL Data Warehouse (SQL DW) is an elastic data warehouse-as-a-service and is a Massively Parallel Processing (MPP) solution for "big data" with true enterprise class features. The SQL DW service is built for data warehouse workloads from a few hundred gigabytes to petabytes of data with truly unique features like disaggregated compute and storage allowing for customers to be able to utilize the service to match their needs. In this presentation, we take an in-depth look at implementing a SQL DW, elastic scale (grow, shrink, and pause), and hybrid data clouds with Hadoop integration via Polybase allowing for a true SQL experience across structured and unstructured data.

SQL Server 2019 Big Data Cluster

Maximiliano Accotto: Here are a few questions I have after the material covered so far:

1. What is the difference between the compute pool and app pool in a SQL Server 2019 big data cluster?

2. How does Polybase work differently in a SQL Server 2019 big data cluster compared to a traditional Polybase setup?

3. What components make up the storage pool in a SQL Server 2019 big data cluster?

Azure SQL Database Managed Instance

James Serra: Azure SQL Database Managed Instance is a new flavor of Azure SQL Database that is a game changer. It offers near-complete SQL Server compatibility and network isolation to easily lift and shift databases to Azure (you can literally back up an on-premises database and restore it into an Azure SQL Database Managed Instance). Think of it as an enhancement to Azure SQL Database that is built on the same PaaS infrastructure and maintains all its features (e.g. active geo-replication, high availability, automatic backups, database advisor, threat detection, intelligent insights, vulnerability assessment, etc.) but adds support for databases up to 35TB, VNET, SQL Agent, cross-database querying, replication, etc. So, you can migrate your databases from on-prem to Azure with very little migration effort, which is a big improvement over the current Singleton or Elastic Pool flavors, which can require substantial changes.

Microsoft cloud big data strategy

James Serra: Think of big data as all data, no matter what the volume, velocity, or variety. The simple truth is a traditional on-prem data warehouse will not handle big data. So what is Microsoft’s strategy for building a big data solution? And why is it best to have this solution in the cloud? That is what this presentation will cover. Be prepared to discover all the various Microsoft technologies and products from collecting data, transforming it, storing it, to visualizing it. My goal is to help you not only understand each product but understand how they all fit together, so you can be the hero who builds your company's big data solution.

Azure data platform overview

Alessandro Melchiori: Azure SQL Database is a relational database-as-a-service hosted in the Azure cloud that reduces costs by eliminating the need to manage virtual machines, operating systems, or database software. It provides automatic backups, high availability through geo-replication, and the ability to scale performance by changing service tiers. Azure Cosmos DB is a globally distributed, multi-model database that supports automatic indexing, multiple data models via different APIs, and configurable consistency levels with strong performance guarantees. Azure Redis Cache uses the open-source Redis data structure store with managed caching instances in Azure for improved application performance.

Designing a modern data warehouse in azure

Antonios Chatzipavlis: This document discusses designing a modern data warehouse in Azure. It provides an overview of traditional vs. self-service data warehouses and their limitations. It also outlines challenges with current data warehouses around timeliness, flexibility, quality and findability. The document then discusses why organizations need a modern data warehouse based on criteria like customer experience, quality assurance and operational efficiency. It covers various approaches to ingesting, storing, preparing, modeling and serving data on Azure. Finally, it discusses architectures like the lambda architecture and common data models.

What's new in SQL Server 2016

James Serra: The document summarizes new features in SQL Server 2016 SP1, organized into three categories: performance enhancements, security improvements, and hybrid data capabilities. It highlights key features such as in-memory technologies for faster queries, Always Encrypted for data security, and PolyBase for querying relational and non-relational data. New editions like Express and Standard provide more built-in capabilities. The document also reviews SQL Server 2016 SP1 features by edition, showing advanced features are now more accessible across more editions.

JBoss Enterprise Data Services (Data Virtualization)

plarsen67: The document discusses how JBoss Enterprise Data Services and the JBoss Enterprise SOA Platform can be used to address common business challenges related to data, decision making, inflexible systems, and manual processes. It presents five solution patterns - data foundation, information delivery, externalizing knowledge, automating decision making, and codifying business processes - and how the technologies in the JBoss platforms map to implementing these patterns to generate new business value from existing assets.

Jboss Teiid - The data you have on the place you need

Jackson dos Santos Olveira: Jboss Teiid is a data virtualization and federation system that provides a uniform API for accessing data. It allows for data from different sources like SQL and NoSQL databases, unstructured data, and web services to be virtually integrated. Teiid extracts metadata from multiple data sources through virtual databases (VDBs), enabling federation. The consumer API is simple JDBC usage. Teiid is fully integrated with Jboss and customizable for performance and extensible through custom binders.

How to Build Modern Data Architectures Both On Premises and in the Cloud

VMware Tanzu: Enterprises are beginning to consider the deployment of data science and data warehouse platforms on hybrid (public cloud, private cloud, and on premises) infrastructure. This delivers the flexibility and freedom of choice to deploy your analytics anywhere you need it and to create an adaptable and agile analytics platform.

But the market is conspiring against customer desire for innovation...

Leading public cloud vendors are interested in pushing their new, but proprietary, analytic stacks, locking customers into subpar Analytics as a Service (AaaS) for years to come.

In tandem, Legacy Data Warehouse vendors are trying to extend the lifecycle of their costly and aging appliances with new features of marginal value, simply imitating the same limiting models of public cloud vendors.

New vendors are coming up with interesting ideas, but these ideas are often lacking critical features that don’t provide support for hybrid solutions, limiting the immediate value to users.

It is 2017—you can, in fact, have your analytics cake and eat it too! Solve your short term costs and capabilities challenges, and establish a long term hybrid data strategy by running the same open source analytics platform on your infrastructure as it exists today.

In this webinar you will learn how Pivotal can help you build a modern analytical architecture able to run on your public, private cloud, or on-premises platform of your choice, while fully leveraging proven open source technologies and supporting the needs of diverse analytical users.

Let’s have a productive discussion about how to deploy a solid cloud analytics strategy.

Presenter : Jacque Istok, Head of Data Technical Field for Pivotal

https://content.pivotal.io/webinars/jul-20-how-to-build-modern-data-architectures-both-on-premises-and-in-the-cloud

Azure data bricks by Eugene Polonichko

Alex Tumanoff: This document provides an overview of Azure Databricks, including:

- Azure Databricks is an Apache Spark-based analytics platform optimized for Microsoft Azure cloud services. It includes Spark SQL, streaming, machine learning libraries, and integrates fully with Azure services.

- Clusters in Azure Databricks provide a unified platform for various analytics use cases. The workspace stores notebooks, libraries, dashboards, and folders. Notebooks provide a code environment with visualizations. Jobs and alerts can run and notify on notebooks.

- The Databricks File System (DBFS) stores files in Azure Blob storage in a distributed file system accessible from notebooks. Business intelligence tools can connect to Databricks clusters via JDBC

Overview of Microsoft Appliances: Scaling SQL Server to Hundreds of Terabytes

James Serra: Learn how SQL Server can scale to HUNDREDS of terabytes for BI solutions. This session will focus on Fast Track Solutions and Appliances, Reference Architectures, and Parallel Data Warehousing (PDW). Included will be performance numbers and lessons learned on a PDW implementation and how a successful BI solution was built on top of it using SSAS.

Azure data factory

BizTalk360: What are the evolving approaches to analytics? What is Azure Data Factory? Capabilities of Azure Data Factory

Azure - Data Platform

giventocode: This document summarizes key components of Microsoft Azure's data platform, including SQL Database, NoSQL options like Azure Tables, Blob Storage, and Azure Files. It provides an overview of each service, how they work, common use cases, and demos of creating resources and accessing data. The document is aimed at helping readers understand Azure's database and data storage options for building cloud applications.

SQL Server 2017 Enhancements You Need To Know

Quest: In this session, database experts Pini Dibask and Jason Hall reveal the lesser-known features that’ll help you improve database performance in record time.

How to Achieve Fast Data Performance in Big Data, Logical Data Warehouse, and...

Denodo: Performance is a key consideration for organizations looking to implement big data, logical data warehouse, and operational use cases. In this presentation, the technology expert demonstrates the performance aspects of using data virtualization to accelerate the delivery of fast data to end consumers.

This presentation is part of the Fast Data Strategy Conference, and you can watch the video here goo.gl/YMPhvE.

Microsoft ignite 2018 SQL server 2019 big data clusters - deep dive session

Travis Wright: SQL Server 2019 big data clusters bring HDFS and Spark into SQL Server for scalable compute and storage.

Andriy Zrobok "MS SQL 2019 - new for Big Data Processing"

Lviv Startup Club: This document discusses MS SQL Server 2019's capabilities for big data processing through PolyBase and Big Data Clusters. PolyBase allows SQL queries to join data stored externally in sources like HDFS, Oracle and MongoDB. Big Data Clusters deploy SQL Server on Linux in Kubernetes containers with separate control, compute and storage planes to provide scalable analytics on large datasets. Examples of using these technologies include data virtualization across sources, building data lakes in HDFS, distributed data marts for analysis, and integrated AI/ML tasks on HDFS and SQL data.


SQL Server 2017 Enhancements You Need To Know

SQL Server 2017 Enhancements You Need To KnowQuest In this session, database experts Pini Dibask and Jason Hall reveal the lesser-known features that’ll help you improve database performance in record time.

How to Achieve Fast Data Performance in Big Data, Logical Data Warehouse, and...

How to Achieve Fast Data Performance in Big Data, Logical Data Warehouse, and...Denodo Performance is a key consideration for organizations looking to implement big data, logical data warehouse, and operational use cases. In this presentation, the technology expert demonstrates the performance aspects of using data virtualization to accelerate the delivery of fast data to end consumers.

This presentation is part of the Fast Data Strategy Conference, and you can watch the video here goo.gl/YMPhvE.




Data Integration through Data Virtualization (SQL Server Konferenz 2019)

- 1. Data Integration through Data Virtualization. Cathrine Wilhelmsen, Inmeta. @cathrinew | cathrinew.net. February 21st, 2019

- 2. Abstract: Data virtualization is an alternative to Extract, Transform and Load (ETL) processes. It handles the complexity of integrating different data sources and formats without requiring you to replicate or move the data itself. Save time, minimize effort, and eliminate duplicate data by creating a virtual data layer using PolyBase in SQL Server. In this session, we will first go through fundamental PolyBase concepts such as external data sources and external tables. Then, we will look at the PolyBase improvements in SQL Server 2019. Finally, we will create a virtual data layer that accesses and integrates both structured and unstructured data from different sources. Along the way, we will cover lessons learned, best practices, and known limitations.

- 4. …the next 60 minutes… PolyBase, Virtual Data Layer, Data Virtualization, Data Integration

- 6. Combine Data in Different Formats from Separate Sources into Useful and Valuable Information

- 7. Combine Data in Different Formats from Separate Sources into Useful and Valuable Information

- 8. Combine Data: Extract Transform Load, Extract Load Transform, Data Ingestion, Data Preparation, Data Wrangling

- 9. Combine Data in Different Formats from Separate Sources into Useful and Valuable Information

- 11. Combine Data in Different Formats from Separate Sources into Useful and Valuable Information

- 12. Separate Sources SQL Server Oracle Teradata MongoDB Hadoop Azure Blob Storage Azure Data Lake Local File System

- 13. Combine Data in Different Formats from Separate Sources into Useful and Valuable Information

- 15. Combine Data in Different Formats from Separate Sources into Useful and Valuable Information

- 16. Valuable Information What you need Answer questions Solve problems Timesaving Reduce effort Improve efficiency

- 17. Combine Data in Different Formats from Separate Sources into Useful and Valuable Information

- 20. ETL – Extract Transform Load ELT – Extract Load Transform

- 21. ETL – Extract Transform Load ELT – Extract Load Transform = data movement

- 22. Data Movement: Costs Duplicated storage costs Need resources to build and maintain

- 23. Data Movement: Speed Takes time to build and maintain Delays before data can be used

- 24. Data Movement: Security Increased attack surface area Inconsistent security models

- 25. Data Movement: Data Quality More storage layers and pipelines Higher complexity

- 26. Data movement is a barrier to faster insights - Microsoft

- 28. Data Virtualization Logical Layers and Abstractions (Near) Real-Time View of Data Store in separate locations View in one location

- 29. Data Virtualization: Costs Lower storage costs Fewer resources to build and maintain

- 30. Data Virtualization: Speed No data latency Rapid iterations and prototypes

- 31. Data Virtualization: Security Smaller attack surface area Consistent security models

- 32. Data Virtualization: Data Quality Fewer storage layers and pipelines Less complexity

- 33. Data virtualization creates solutions - Microsoft

- 34. Data Movement = Bad ? Data Virtualization = Good ?

- 35. Data Movement = Bad ? Data Virtualization = Good ? no, just different use cases!

- 36. PolyBase

- 37. PolyBase Feature in SQL Server 2016 and later Query tables and files using T-SQL Used to query, import, and export data
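
The three uses named on this slide can be sketched as follows. This is a minimal illustration, assuming an external table `ext.SalesArchive` and a local table `dbo.Sales` with matching columns already exist; both names and all column names are hypothetical. Export needs the `allow polybase export` server option and, to my knowledge, only works against Hadoop and Azure Blob Storage targets.

```sql
-- Query: an external table is read like any other table.
SELECT TOP (10) OrderId, Amount
FROM ext.SalesArchive
WHERE OrderDate >= '2018-01-01';

-- Import: materialize the external data into a new local table.
SELECT OrderId, OrderDate, Amount
INTO dbo.SalesArchive_Local
FROM ext.SalesArchive;

-- Export: write local rows out through the external table.
-- The server option has to be enabled first.
EXEC sp_configure 'allow polybase export', 1;
RECONFIGURE;

INSERT INTO ext.SalesArchive
SELECT OrderId, OrderDate, Amount
FROM dbo.Sales
WHERE OrderDate < '2018-01-01';
```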

- 39. PolyBase in SQL Server 2016 / 2017 Hadoop Azure Blob Storage Azure Data Lake

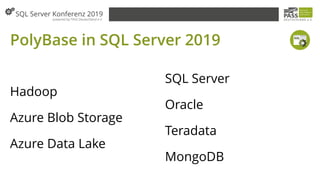

- 40. PolyBase in SQL Server 2019 Hadoop Azure Blob Storage Azure Data Lake SQL Server Oracle Teradata MongoDB

- 41. ODBC NoSQL Relational Databases Big Data PolyBase

- 42. How to use PolyBase? 1. Install PolyBase 2. Configure PolyBase Connectivity 3. Create Database Master Key 4. Create Database Scoped Credential 5. ...

- 43. How to use PolyBase? 4. ... 5. Create External Data Sources 6. Create External File Formats 7. Create External Tables 8. Create Statistics

- 44. Install PolyBase

- 45. 1. Install Prerequisites Microsoft .NET Framework 4.5 Oracle Java SE Runtime Environment (JRE) 7 or 8 2. Install PolyBase Single Node or Scale-Out Group 3. Enable PolyBase

- 46. Install Prerequisites Microsoft .NET Framework 4.5 https://www.microsoft.com/nl-nl/download/details.aspx?id=30653 Oracle Java SE Runtime Environment (JRE) 7 or 8 https://www.oracle.com/technetwork/java/javase/downloads/jre8-downloads-2133155.html

- 47. Install PolyBase Note: PolyBase can be installed on only one SQL Server instance per machine. Note: After you install PolyBase either standalone or in a scale-out group, you have to uninstall and reinstall to change it. . . . Ask me how I know : )

- 48. Enable PolyBase sp_configure 'polybase enabled', 1; RECONFIGURE;
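
Before enabling the feature it can help to confirm that the installation step actually succeeded. A small sketch, using the built-in `SERVERPROPERTY` function; the column alias is only illustrative.

```sql
-- Returns 1 if the PolyBase feature is installed on this instance, 0 if not.
SELECT SERVERPROPERTY('IsPolyBaseInstalled') AS IsPolyBaseInstalled;

-- Enable the feature and apply the change.
EXEC sp_configure 'polybase enabled', 1;
RECONFIGURE;
```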

- 51. 1. Configure PolyBase Connectivity 2. Restart Services SQL Server SQL Server PolyBase Engine SQL Server PolyBase Data Movement

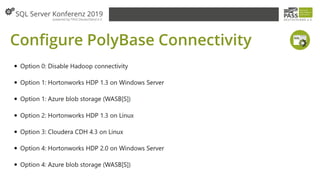

- 52. Configure PolyBase Connectivity sp_configure 'hadoop connectivity', 7; RECONFIGURE;

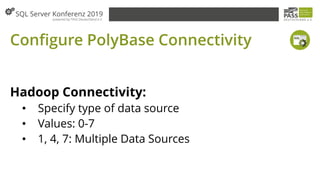

- 53. Configure PolyBase Connectivity Hadoop Connectivity: • Specify type of data source • Values: 0-7 • 1, 4, 7: Multiple Data Sources
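
One way to check which connectivity value is configured and which one is actually in effect is to read `sys.configurations`. This is a sketch, not a required step; the value 7 is the one used on the slide above.

```sql
-- value is the configured setting, value_in_use is what the instance currently runs with.
SELECT name, value, value_in_use
FROM sys.configurations
WHERE name = 'hadoop connectivity';

-- Set the connectivity type, then restart SQL Server and both PolyBase services.
EXEC sp_configure 'hadoop connectivity', 7;
RECONFIGURE;
```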

- 56. Restart Services

- 57. Restart Services

- 59. Create Database Master Key CREATE MASTER KEY ENCRYPTION BY PASSWORD = '<password>';

- 61. Create Database Scoped Credential CREATE DATABASE SCOPED CREDENTIAL <CredentialName> WITH IDENTITY = '<identity>', SECRET = '<secret>';
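
What goes into `IDENTITY` and `SECRET` depends on the source. The sketch below assumes two hypothetical credential names, `AzureBlobCredential` and `OracleCredential`, and keeps all secrets as placeholders. It also guards the master key creation so the script can be rerun.

```sql
-- Create the database master key only if the database does not have one yet.
IF NOT EXISTS (SELECT 1 FROM sys.symmetric_keys WHERE name = '##MS_DatabaseMasterKey##')
    CREATE MASTER KEY ENCRYPTION BY PASSWORD = '<password>';

-- Azure Blob Storage: IDENTITY is an arbitrary label, SECRET is the storage account key.
CREATE DATABASE SCOPED CREDENTIAL AzureBlobCredential
WITH IDENTITY = 'user', SECRET = '<storage account key>';

-- Oracle: IDENTITY and SECRET are the database login and its password.
CREATE DATABASE SCOPED CREDENTIAL OracleCredential
WITH IDENTITY = '<oracle user>', SECRET = '<oracle password>';
```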

- 63. Create External Data Source

- 64. Create External Data Source

- 65. Create External Data Source

- 66. Create External Data Source CREATE EXTERNAL DATA SOURCE <HadoopName> WITH ( TYPE = HADOOP, LOCATION ='<hdfs://...>', CREDENTIAL = <CredentialName>, RESOURCE_MANAGER_LOCATION = '<ip>' );

- 67. Create External Data Source CREATE EXTERNAL DATA SOURCE <AzureBlobName> WITH ( TYPE = HADOOP, LOCATION ='<wasbs://...>', CREDENTIAL = <CredentialName> );

- 68. Create External Data Source CREATE EXTERNAL DATA SOURCE <OracleName> WITH ( LOCATION ='<oracle://...>', CREDENTIAL = <CredentialName> );
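
The relational sources added in SQL Server 2019 follow the same pattern as the Oracle example: no `TYPE`, and a location prefix that names the source. A sketch for a remote SQL Server, assuming a hypothetical credential `SqlServerCredential` already exists; the source name, table name, and columns are also made up for illustration.

```sql
-- Data source pointing at another SQL Server instance.
-- PUSHDOWN = ON lets PolyBase push computation to the remote server where possible.
CREATE EXTERNAL DATA SOURCE SqlServerSource
WITH (
    LOCATION = 'sqlserver://<host>:1433',
    PUSHDOWN = ON,
    CREDENTIAL = SqlServerCredential
);

-- External tables on relational sources need no file format.
-- LOCATION is the three-part name of the remote table.
CREATE EXTERNAL TABLE ext.Customers (
    CustomerId INT NOT NULL,
    CustomerName NVARCHAR(100) NULL
)
WITH (
    LOCATION = '<Database>.<schema>.<table>',
    DATA_SOURCE = SqlServerSource
);
```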

- 70. Create External File Format

- 71. Create External File Format

- 72. Create External File Format

- 73. Create External File Format CREATE EXTERNAL FILE FORMAT <FileFormatName> WITH ( FORMAT_TYPE = DELIMITEDTEXT, FORMAT_OPTIONS ( FIELD_TERMINATOR = ';', USE_TYPE_DEFAULT = TRUE ) );
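
For files with quoted values and dates, a few more format options are usually needed. The sketch below uses a hypothetical format name, `CsvQuotedFormat`, and assumes semicolon-separated files with double-quoted strings and ISO dates.

```sql
CREATE EXTERNAL FILE FORMAT CsvQuotedFormat
WITH (
    FORMAT_TYPE = DELIMITEDTEXT,
    FORMAT_OPTIONS (
        FIELD_TERMINATOR = ';',
        -- Strip the surrounding quotes from string values.
        STRING_DELIMITER = '"',
        -- Pattern used to parse date values in the file.
        DATE_FORMAT = 'yyyy-MM-dd',
        -- FALSE: missing values become NULL instead of a type default (0, empty string, ...).
        USE_TYPE_DEFAULT = FALSE
    )
);
```

Whether `USE_TYPE_DEFAULT` should be `TRUE` or `FALSE` depends on whether a missing value and a default value mean the same thing in your data.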

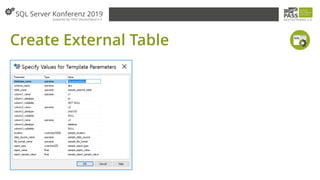

- 78. Create External Table CREATE EXTERNAL TABLE [SchemaName].[TableName] ( [ColumnName] INT NOT NULL ) WITH ( LOCATION = '<FileName>', DATA_SOURCE = <DataSourceName>, FILE_FORMAT = <FileFormatName> );
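
A slightly fuller sketch of an external table over delimited files, with reject options added so that a few bad rows do not fail the whole query. The table, its columns, the folder `/orders/`, the data source `AzureBlobSource`, and the file format `CsvQuotedFormat` are all hypothetical and assumed to exist.

```sql
CREATE EXTERNAL TABLE ext.Orders (
    OrderId INT NOT NULL,
    OrderDate DATE NULL,
    Comment NVARCHAR(4000) NULL
)
WITH (
    -- A folder location reads all files in that folder.
    LOCATION = '/orders/',
    DATA_SOURCE = AzureBlobSource,
    FILE_FORMAT = CsvQuotedFormat,
    -- Tolerate up to 10 rows that do not match the column definitions.
    REJECT_TYPE = VALUE,
    REJECT_VALUE = 10
);

-- The definition is only validated against the files when the table is queried.
SELECT COUNT(*) AS OrderCount
FROM ext.Orders;
```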

- 80. Create Statistics Note: To create statistics, SQL Server first imports the external data into a temp table. Remember to choose sampling or full scan. Note: Updating statistics is not supported. Drop and re-create instead.

- 81. Create Statistics CREATE STATISTICS <StatName> ON <TableName>(<ColumnName>); CREATE STATISTICS <StatName> ON <TableName>(<ColumnName>) WITH FULLSCAN;
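
Since statistics on external tables cannot be updated, a refresh is a drop followed by a create. A sketch, assuming a hypothetical external table `ext.Orders` with an `OrderDate` column; the statistics name and the sample percentage are arbitrary.

```sql
-- Sampled statistics: only part of the external data is imported.
CREATE STATISTICS Stat_Orders_OrderDate
ON ext.Orders (OrderDate)
WITH SAMPLE 20 PERCENT;

-- "Update" by dropping and re-creating, here with a full scan.
DROP STATISTICS ext.Orders.Stat_Orders_OrderDate;

CREATE STATISTICS Stat_Orders_OrderDate
ON ext.Orders (OrderDate)
WITH FULLSCAN;
```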

- 82. All Done

- 83. Verify using Catalog Views SELECT * FROM sys.external_data_sources; SELECT * FROM sys.external_file_formats; SELECT * FROM sys.external_tables;
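
The three catalog views can also be joined to get one row per external table with its data source and file format. A sketch; the column aliases are only illustrative.

```sql
SELECT
    s.name       AS SchemaName,
    t.name       AS TableName,
    t.location   AS TableLocation,
    ds.name      AS DataSourceName,
    ds.location  AS DataSourceLocation,
    ff.name      AS FileFormatName,
    ff.format_type AS FormatType
FROM sys.external_tables AS t
INNER JOIN sys.schemas AS s
    ON s.schema_id = t.schema_id
INNER JOIN sys.external_data_sources AS ds
    ON ds.data_source_id = t.data_source_id
-- LEFT JOIN: external tables on relational sources have no file format.
LEFT JOIN sys.external_file_formats AS ff
    ON ff.file_format_id = t.file_format_id
ORDER BY s.name, t.name;
```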

- 84. T-SQL All The Things :)

- 85. …or…?

- 86. PolyBase can be grumpy :(

- 87. Unexpected error encountered filling record reader buffer: HadoopExecutionException: Not enough columns in this line.

- 88. Unexpected error encountered filling record reader buffer: HadoopExecutionException: Too many columns in the line.

- 89. Unexpected error encountered filling record reader buffer: HadoopExecutionException: Could not find a delimiter after string delimiter.

- 90. Unexpected error encountered filling record reader buffer: HadoopExecutionException: Error converting data type NVARCHAR to INT.

- 91. Unexpected error encountered filling record reader buffer: HadoopExecutionException: Conversion failed when converting the NVARCHAR value '"0"' to data type BIT.

- 92. Unexpected error encountered filling record reader buffer: HadoopExecutionException: Too long string in column [-1]: Actual len = [4242]. MaxLEN=[4000]

- 93. Msg 46518, Level 16, State 12, Line 1: The type 'nvarchar(max)' is not supported with external tables.

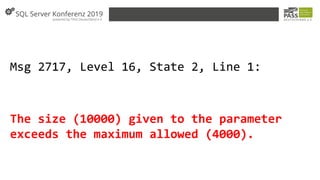

- 94. Msg 2717, Level 16, State 2, Line 1: The size (10000) given to the parameter exceeds the maximum allowed (4000).

- 95. Msg 131, Level 15, State 2, Line 1: The size (10000) given to the column exceeds the maximum allowed for any data type (8000).

- 96. = Know your data :)
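
One way to get to know the data before committing to strict column types is to read everything as text first and look for values that would not convert. This is a sketch of that idea, not something shown in the talk. The table `ext.Orders_Raw`, its columns, the folder, the data source `AzureBlobSource`, and the file format `CsvQuotedFormat` are hypothetical.

```sql
-- Every column is read as text, so type conversion cannot fail during the read.
-- NVARCHAR(4000) is the widest string type external tables accept.
CREATE EXTERNAL TABLE ext.Orders_Raw (
    OrderId NVARCHAR(4000) NULL,
    IsPaid  NVARCHAR(4000) NULL
)
WITH (
    LOCATION = '/orders/',
    DATA_SOURCE = AzureBlobSource,
    FILE_FORMAT = CsvQuotedFormat
);

-- Rows whose values would not convert to the intended types.
-- TRY_CAST returns NULL instead of raising a conversion error.
SELECT OrderId, IsPaid
FROM ext.Orders_Raw
WHERE (OrderId IS NOT NULL AND TRY_CAST(OrderId AS INT) IS NULL)
   OR (IsPaid IS NOT NULL AND TRY_CAST(IsPaid AS BIT) IS NULL);
```

Values longer than 4000 characters still fail with the "Too long string" error, so those have to be found in the source files themselves.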

- 97. SQL Server 2019 Big Data Clusters

- 98. SQL Server 2019 Big Data Clusters SQL Server, Spark, and HDFS Scalable clusters of containers Runs on Kubernetes

- 99. Kubernetes Pod Kubernetes Pod Kubernetes Pod Kubernetes Pod SQL Server Master Instance SQL Server HDFS Data Node Spark SQL Server HDFS Data Node Spark SQL Server HDFS Data Node Spark SQL Server HDFS Data Node Spark

- 101. Build Virtual Data Layer Scenarios: 1. Text Files in Azure Blob Storage 2. Tables in Oracle Database

- 102. Text Files in Azure Blob Storage

- 103. Tables in Oracle Database

- 104. DEMO Build Virtual Data Layer in SSMS

- 105. It's as easy as 1, 2, 3!

- 106. …4, 5, 6, 7, 8, 9, 10…

- 107. Is there an easier way?

- 109. Biml 💚 PolyBase Ben Weissman: Using Biml to automagically keep your external polybase tables in sync! https://www.solisyon.de/biml-polybase-external-tables/

- 111. Azure Data Studio 1. Install Azure Data Studio docs.microsoft.com/en-us/sql/azure-data-studio/download 2. Install Extension: SQL Server 2019 (Preview) docs.microsoft.com/en-us/sql/azure-data-studio/sql-server-2019-extension

- 112. Extension: SQL Server 2019 (Preview)

- 113. Extension: SQL Server 2019 (Preview) Double-clicking the .vsix file doesn't work…

- 114. Extension: SQL Server 2019 (Preview) …install preview extensions from Azure Data Studio

- 116. Azure Data Studio Wizard: CSV Files

- 124. Azure Data Studio Wizard: Oracle

- 134. DEMO Build Virtual Data Layer in ADS

- 135. Next Steps

- 136. Where can I learn more? Microsoft SQL Docs: docs.microsoft.com/sql

- 137. Where can I learn more? Kevin Feasel: 36chambers.wordpress.com/polybase

- 138. How can I try PolyBase? Microsoft Hands-on Labs: microsoft.com/handsonlabs

- 139. How can I try SQL Server 2019? For Windows, Linux, and containers: aka.ms/trysqlserver2019

- 140. How can I try Big Data Clusters? SQL Server 2019 Early Adoption Program: aka.ms/eapsignup

- 142. Thank you very much for your attention. Vielen Dank für Eure Aufmerksamkeit.