Data Mining and Analytics

- 1. Robert M. Shapiro, Senior Vice President, Global 360, Inc. Session title: Analytics and Data Mining. Welcome to Transformation and Innovation 2007: The Business Transformation Conference.

- 2. Agenda: Analytics, Simulation, Optimization, Data Mining, Summary

- 3. Why Care About Analytics and Data Mining? When workflow management systems first began to proliferate in the 1990s, little attention was paid to the data generated by the running processes. Most people thought of it as an audit trail, not as a source of information for process improvement. We now understand that the historical record contains valuable information essential to a well-orchestrated continuous process improvement program. Correctly designed analytics is the starting point for business process intelligence: it drives both real-time monitoring and predictive optimization of the executing Business Process Management System (BPMS).

- 4. Overview (diagram). Components: Business Operations Control; Event Detection & Correlation; Predictive Simulation; Data Mining; Optimization; Historical Analytics; Real-Time Dashboards; Alerts & Actions. Event sources connected through the Event Bus: ERP, BPM, ECM, Legacy, EAI and Custom systems.

- 6. Events

| Event group | Event types |
|---|---|
| Process | WORKFLOWCREATE, WORKFLOWTERMINATE, CHILDCREATE, CHILDTERMINATE |
| Activity | ARRIVEACTIVITY, BEGINACTIVITY, COMPLETEACTIVITY, SUSPENDACTIVITY, CANCELACTIVITY, CONTINUEACTIVITY |
| Timed Sequence | BEGINTIMEDSEQUENCE, COMPLETETIMEDSEQUENCE |
| Logged Event | LOGGEDEVENT |

Sample log event:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<XPDLogEvents>
  <XPDLogEvent System="MortgageDemo" Scenario="Mortgage Lending AsIs" Run="8/28/2006 6:29:01 PM"
      InstanceId="6505" ParentInstanceId="" WorkflowInstanceId="6505"
      Timestamp="2006-08-01T07:00:00Z" SequenceId="281010"
      ProcessId="Mortgage Lending AsIs" ProcessVersion="1" EventType="WORKFLOWCREATE"
      ActivitySetId="1" ActivityId="15" QueueId="-1"
      ElapsedTimeDays="0" ElapsedBusinessHours="0" ElapsedBusinessDays="0"
      AccruedWaitDays="0" AccruedWaitBusinessDays="0" AccruedWaitBusinessHours="0"
      AccruedProcessingDays="0" AccruedProcessingBusinessDays="0" AccruedProcessingBusinessHours="0">
    <Participants>
      <Participant ParticipantId="System" />
    </Participants>
    <DataFields>
      <DataField Name="SIM_Cost" Type="FLOAT">0</DataField>
    </DataFields>
  </XPDLogEvent>
</XPDLogEvents>
```
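A log in this format can be read with Python's standard `xml.etree.ElementTree`. The sketch below is an illustration, not the product's loader: it keeps only a few of the attributes shown in the sample, converts `FLOAT` data fields to numbers, and assumes every `Timestamp` is ISO 8601 UTC ending in `Z`. The function name `parse_events` and the output dictionary keys are hypothetical.

```python
import xml.etree.ElementTree as ET
from datetime import datetime

# Trimmed copy of the sample event (most attributes omitted).
SAMPLE = """<?xml version="1.0" encoding="UTF-8"?>
<XPDLogEvents>
  <XPDLogEvent InstanceId="6505" WorkflowInstanceId="6505" ProcessId="Mortgage Lending AsIs"
      EventType="WORKFLOWCREATE" ActivityId="15" Timestamp="2006-08-01T07:00:00Z">
    <Participants><Participant ParticipantId="System" /></Participants>
    <DataFields><DataField Name="SIM_Cost" Type="FLOAT">0</DataField></DataFields>
  </XPDLogEvent>
</XPDLogEvents>"""


def parse_events(xml_bytes):
    """Turn an XPDLogEvents document into a list of plain dictionaries (hypothetical shape)."""
    root = ET.fromstring(xml_bytes)
    events = []
    for ev in root.iter("XPDLogEvent"):
        fields = {}
        for df in ev.iter("DataField"):
            text = df.text or ""
            # Only FLOAT is converted in this sketch; other types stay strings.
            fields[df.get("Name")] = float(text) if df.get("Type") == "FLOAT" else text
        events.append({
            "instance_id": ev.get("InstanceId"),
            "process_id": ev.get("ProcessId"),
            "event_type": ev.get("EventType"),
            "activity_id": ev.get("ActivityId"),
            # Assumes UTC with a trailing "Z"; fromisoformat only accepts "Z" from Python 3.11.
            "timestamp": datetime.fromisoformat(ev.get("Timestamp").replace("Z", "+00:00")),
            "participants": [p.get("ParticipantId") for p in ev.iter("Participant")],
            "data_fields": fields,
        })
    return events


events = parse_events(SAMPLE.encode("utf-8"))
```

Parsing to plain dictionaries keeps the later steps (timing, staging, aggregation) independent of the XML layout.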

- 7. Timing Data from Workflow Log Events

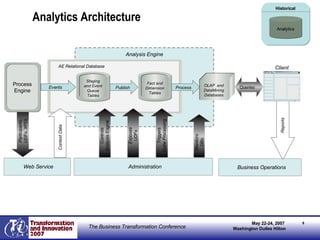
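The log sample's separate accrued wait and accrued processing attributes suggest how timing data can be derived from activity events. A minimal sketch, assuming wait time runs from `ARRIVEACTIVITY` to `BEGINACTIVITY` and processing time from `BEGINACTIVITY` to `COMPLETEACTIVITY`, with suspensions ignored. The input is a list of dictionaries with hypothetical keys `instance_id`, `activity_id`, `event_type` and `timestamp`.

```python
from datetime import datetime, timedelta

NEEDED = ("ARRIVEACTIVITY", "BEGINACTIVITY", "COMPLETEACTIVITY")


def activity_timings(events):
    """Map (instance_id, activity_id) -> (wait, processing) as timedeltas.

    Assumes wait = ARRIVEACTIVITY -> BEGINACTIVITY and
    processing = BEGINACTIVITY -> COMPLETEACTIVITY. Suspensions are ignored,
    and if an activity is visited twice, the later events overwrite the earlier ones.
    """
    marks = {}
    for ev in sorted(events, key=lambda e: e["timestamp"]):
        key = (ev["instance_id"], ev["activity_id"])
        marks.setdefault(key, {})[ev["event_type"]] = ev["timestamp"]
    timings = {}
    for key, seen in marks.items():
        if all(t in seen for t in NEEDED):  # skip activities still in progress
            timings[key] = (seen["BEGINACTIVITY"] - seen["ARRIVEACTIVITY"],
                            seen["COMPLETEACTIVITY"] - seen["BEGINACTIVITY"])
    return timings


# Hypothetical events for one activity of one work item, deliberately out of order.
t0 = datetime(2006, 8, 1, 7, 0)
sample = [
    {"instance_id": "6505", "activity_id": "15", "event_type": "COMPLETEACTIVITY", "timestamp": t0 + timedelta(hours=3)},
    {"instance_id": "6505", "activity_id": "15", "event_type": "ARRIVEACTIVITY", "timestamp": t0},
    {"instance_id": "6505", "activity_id": "15", "event_type": "BEGINACTIVITY", "timestamp": t0 + timedelta(hours=1)},
]
timings = activity_timings(sample)
```

Business-hour variants (like the `...BusinessHours` attributes in the log) would additionally need a working calendar, which this sketch does not model.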

- 8. Analytics Architecture (diagram). Labels shown: Process Engine publishing events to the Analysis Engine (AE); context data (participants, UDFs, XPDL); Relational Database (staging and event queue tables); OLAP and Data Mining Databases (fact and dimension tables); Process Analysis Engine; Administration (controls the Analysis Engine, exposes UDFs, triggers cube processing, monitors DBs); Web Service; client queries and reports; Business Operations; Historical Analytics.

- 9. Process Analytics
  - Features: fast analysis of process, activity and SLA statistics, quality and labor information; drill-down and slice-and-dice to explore data from different perspectives.
  - Benefits: business process intelligence; identification of process improvement areas; end-to-end process visibility.
  - Problem: you have to know where to look in the hypercube.
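Drill-down and slice-and-dice amount to grouping the same fact table by different dimensions while filtering on others. The sketch below uses an in-memory SQLite table in place of an OLAP cube; the table `activity_fact`, its columns and the sample rows are hypothetical.

```python
import sqlite3

DIMENSIONS = {"activity", "region", "month"}  # columns we allow grouping by


def build_fact_table(rows):
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE activity_fact ("
        "activity TEXT, region TEXT, month TEXT, wait_hours REAL, processing_hours REAL)"
    )
    conn.executemany("INSERT INTO activity_fact VALUES (?, ?, ?, ?, ?)", rows)
    return conn


def slice_and_dice(conn, dimension, region=None):
    """Average wait/processing hours per value of `dimension`, optionally for one region."""
    if dimension not in DIMENSIONS:  # whitelist: column names cannot be bound as parameters
        raise ValueError(f"unknown dimension: {dimension}")
    sql = (f"SELECT {dimension}, AVG(wait_hours), AVG(processing_hours), COUNT(*) "
           "FROM activity_fact")
    params = ()
    if region is not None:
        sql += " WHERE region = ?"
        params = (region,)
    sql += f" GROUP BY {dimension} ORDER BY {dimension}"
    return conn.execute(sql, params).fetchall()


rows = [
    ("Underwriting", "Tulsa", "2006-08", 4.0, 2.0),
    ("Underwriting", "Boston", "2006-08", 2.0, 2.0),
    ("Appraisal", "Tulsa", "2006-08", 6.0, 1.0),
]
conn = build_fact_table(rows)
by_region = slice_and_dice(conn, "region")                 # top-level view
tulsa_by_activity = slice_and_dice(conn, "activity", "Tulsa")  # drill down into one region
```

The "know where to look" problem is visible even here: the caller has to choose which dimension and filter to try next.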

- 10. BAM Dashboards: status indicators, queue counts, counters, and goal/KPI status and trends.

- 11. Actions & Alerts (diagram). A Rules Engine evaluates process metrics, process event triggers and an action schedule against goals and thresholds (KPI evaluation, risk mitigation), and fires actions: email and cellphone notifications, web service calls, or script execution.
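The core of such a rules engine can be sketched as threshold checks over current KPI values. In this illustration the `Rule` fields, KPI names and action labels are hypothetical; a real engine would also handle schedules, event triggers and the actual notification or web service calls.

```python
from dataclasses import dataclass


@dataclass
class Rule:
    kpi: str            # name of the KPI to watch (hypothetical names below)
    threshold: float
    action: str         # hypothetical action label, e.g. "email" or "web_service"
    above: bool = True  # True: fire when KPI > threshold; False: fire when KPI < threshold


def evaluate(rules, kpis):
    """Return (action, kpi, value) for every rule whose threshold is breached."""
    alerts = []
    for rule in rules:
        value = kpis.get(rule.kpi)
        if value is None:  # KPI not reported in this cycle
            continue
        breached = value > rule.threshold if rule.above else value < rule.threshold
        if breached:
            alerts.append((rule.action, rule.kpi, value))
    return alerts


rules = [
    Rule("queue_count", 100, "email"),
    Rule("sla_met_pct", 0.9, "web_service", above=False),
]
```

For example, `evaluate(rules, {"queue_count": 150, "sla_met_pct": 0.95})` reports only the queue-count breach.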

- 12. Simulation. Why would you want to build simulation models? A simulation model lets you ask what-if questions:
  - What if I changed my staff schedules?
  - What if I bought a faster check sorter?
  - What if the number of applications increased dramatically because of a marketing campaign?

  The simulation results predict the effect on critical KPIs such as end-to-end cycle time and cost per processed application. Hence simulation plays an important role in continuous process improvement.
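As a minimal what-if sketch, the code below simulates a single activity served first-come, first-served by `staff` interchangeable performers, with exponentially distributed inter-arrival and service times. The distributions and parameter names are assumptions for illustration; a real model would draw on historical data. Running two scenarios with the same seed reuses the same random draws, so the difference comes from the staffing change rather than from noise.

```python
import heapq
import random


def simulate(staff, arrivals_per_hour, mean_service_hours, n_items=5000, seed=1):
    """Mean cycle time (hours) for one FIFO activity with `staff` performers."""
    rng = random.Random(seed)
    free_at = [0.0] * staff  # time at which each performer next becomes free
    heapq.heapify(free_at)
    t = 0.0
    total_cycle = 0.0
    for _ in range(n_items):
        t += rng.expovariate(arrivals_per_hour)         # next arrival
        start = max(t, heapq.heappop(free_at))          # earliest free performer
        finish = start + rng.expovariate(1.0 / mean_service_hours)
        heapq.heappush(free_at, finish)
        total_cycle += finish - t                       # wait + processing
    return total_cycle / n_items


base = simulate(staff=3, arrivals_per_hour=2.0, mean_service_hours=1.0)
fewer_staff = simulate(staff=2, arrivals_per_hour=2.0, mean_service_hours=1.0)
```

Comparing `base` with `fewer_staff` answers a "what if I changed my staffing" question for this toy model.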

- 13. Key Simulation Factors
  - Options: time frame, animation update frequency, exposed fields
  - Activities: duration, performers, decisions, pre- and post-assignments, pre- and post-scripts; use of historical data for decisions and durations
  - Arrivals: process, start activity, batch, field values, pattern and repeat; use of historical data for workload distributions over time
  - Data fields
  - Participants: schedules, roles played, details
  - Roles
  - Schedule definitions
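Using historical data for decisions and durations can be as simple as estimating each branch's probability from observed outcome counts, and resampling observed durations instead of assuming a textbook distribution. The function names and outcome labels below are hypothetical.

```python
import random
from collections import Counter


def branch_probabilities(decisions):
    """decisions: outcome labels observed at one decision point in the log."""
    counts = Counter(decisions)
    total = sum(counts.values())
    return {outcome: n / total for outcome, n in counts.items()}


def empirical_sampler(history, seed=0):
    """Return a function that draws durations by resampling observed values."""
    rng = random.Random(seed)
    observed = list(history)
    return lambda: rng.choice(observed)


probs = branch_probabilities(["approve", "approve", "reject", "approve"])
draw_duration = empirical_sampler([1.5, 2.0, 4.0])  # e.g. hours, from past work items
```

Resampling reproduces skew and outliers present in the history, but it can never produce a duration that was not observed.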

- 14. Review of Analytics, BAM and Simulation. A stream of events produced by a variety of business process engines (ERP, supply chain management, BPMS enactment) is fed to an analytics engine, which transforms the event data into usable information. A Business Activity Monitoring (BAM) module updates a set of KPIs in real time and applies a rules engine to them; the resulting alerts and actions inform managers of critical situations and alter the behavior of the running processes. A simulation tool, using the historical data, provides what-if analysis in support of continuous process improvement, and when integrated with a workforce management system it enables optimization of staff schedules. But designing the what-if scenarios can be a challenging and labor-intensive task for a specialist.

- 15. Automatic Optimization. Automatic optimization uses analytics and simulation to generate and evaluate proposals for achieving a set of goals. Analyzing the process structure together with historical data about processing delays and resource availability permits an intelligent exploration of improvement strategies. Coupled with workforce management technology, this approach helps optimize staff schedules.

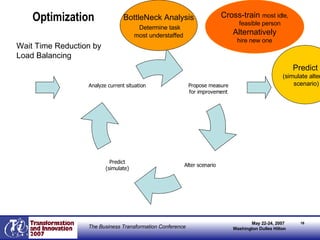

- 16. Optimization
  - Bottleneck analysis: determine the most understaffed task; cross-train the most idle feasible person, or alternatively hire a new one; predict the outcome by simulating the altered scenario.
  - Wait-time reduction by load balancing: analyze the current situation; predict (simulate); alter the scenario; propose a measure for improvement.
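The bottleneck-analysis step can be sketched as: rank tasks by utilization (daily load divided by assigned capacity), then pick the most idle person who could feasibly be cross-trained for the worst task, falling back to "hire" when nobody qualifies. The utilization measure, the 8-hour day and all names are assumptions for illustration. The sketch does not check whether moving that person creates a new bottleneck; that is what the "predict (simulate)" step is for.

```python
def bottleneck_proposal(load_hours, staff, feasible, idle_hours, hours_per_person=8.0):
    """Return (task, person) to cross-train, or (task, None) meaning "hire".

    load_hours: {task: hours of work arriving per day}
    staff:      {task: [people currently assigned]}
    feasible:   {person: set of tasks they could be trained for}
    idle_hours: {person: idle hours per day}
    """
    def utilization(task):
        people = staff.get(task, [])
        if not people:
            return float("inf")  # unstaffed task with work is the worst case
        return load_hours[task] / (hours_per_person * len(people))

    task = max(load_hours, key=utilization)  # most understaffed task
    candidates = [p for p, tasks in feasible.items()
                  if task in tasks and p not in staff.get(task, [])]
    if not candidates:
        return task, None  # no feasible person: propose hiring instead
    person = max(candidates, key=lambda p: idle_hours.get(p, 0.0))
    return task, person


load = {"Underwriting": 40.0, "Appraisal": 8.0}
staff = {"Underwriting": ["ann"], "Appraisal": ["bob", "cy"]}
feasible = {"ann": {"Underwriting"}, "bob": {"Underwriting"}, "cy": {"Underwriting", "Appraisal"}}
idle = {"ann": 0.0, "bob": 3.0, "cy": 5.0}
```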

- 18. Throughput: Unresolved Work Objects
  - Before load balancing: work objects pile up as long as work items keep arriving, so cycle times rise continually; one region in particular is understaffed.
  - After load balancing: the number of unresolved work objects is limited, which puts an upper bound on cycle times.
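The piling-up behavior follows from a simple balance: if more work arrives per day than the staff can finish, the backlog grows every day and so do cycle times. The sketch is a fluid approximation (an assumption) that ignores variability within a day; the daily figures are hypothetical.

```python
def backlog_over_time(daily_arrivals, daily_capacity, start=0):
    """End-of-day backlog: yesterday's backlog plus arrivals minus what staff can finish."""
    backlog = start
    history = []
    for arrivals in daily_arrivals:
        backlog = max(0, backlog + arrivals - daily_capacity)
        history.append(backlog)
    return history


understaffed = backlog_over_time([100, 100, 100], daily_capacity=90)  # grows every day
balanced = backlog_over_time([120, 80, 100], daily_capacity=100)      # stays bounded
```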

- 19. Resource Utilization (Idle Times): quite unbalanced before load balancing, more balanced after load balancing.

- 20. Judging the Effect: Productivity Index (charts: initially and after load balancing)

- 21. Judging the Effect: Throughput Analysis (charts: initially, after resource addition, and after role addition, e.g. cross-training)

- 22. Review of Automated Optimization Technology. Optimization, using goals formulated as KPIs, can analyze historical information and propose changes that are likely to help attain these goals. It can systematically evaluate the proposed changes, using the simulation tool as a component. This can be done in a fully automated manner, terminating when the goal is satisfied or when no proposed change yields further improvement. Staff optimization, with end-to-end cycle time and processing cost as the KPIs, is one example of this technology's application.
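The propose-simulate-terminate cycle described above can be sketched as a greedy loop (hill climbing, our choice of search strategy, not necessarily the product's). `propose`, `simulate` and `goal_met` are caller-supplied placeholders, and lower KPI values are assumed to be better.

```python
def optimize(scenario, propose, simulate, goal_met, max_rounds=20):
    """Apply the best proposed change each round until the goal is met
    or no proposal improves the simulated KPI (lower is better)."""
    best_kpi = simulate(scenario)
    for _ in range(max_rounds):
        if goal_met(best_kpi):
            return scenario, best_kpi, "goal met"
        candidates = [(simulate(s), s) for s in propose(scenario)]
        if not candidates:
            return scenario, best_kpi, "no further improvement"
        kpi, s = min(candidates, key=lambda c: c[0])  # compare KPIs only
        if kpi >= best_kpi:
            return scenario, best_kpi, "no further improvement"
        scenario, best_kpi = s, kpi
    status = "goal met" if goal_met(best_kpi) else "round limit reached"
    return scenario, best_kpi, status


# Toy stand-ins: the scenario is a headcount, the KPI a cycle time of 100 / headcount.
toy_simulate = lambda staff: 100 / staff
toy_propose = lambda staff: [staff + 1] if staff < 10 else []
```

In practice `simulate` would be a full simulation run, so each round is expensive; the greedy strategy keeps the number of runs small at the risk of stopping at a local optimum.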

- 23. Data Mining. There are three stages: explore the data, build a mining model, and deploy it, applying the discovered patterns to new data to make predictions.

- 24. Process versus Content Data Mining. We focus on the data generated by typical computer-based business processes, using Process Intelligence as the lens through which to view the data. This process view is critical in developing a mining structure and mining models that expose correlations between Key Performance Indicators and other factors such as work item attributes, resource schedules, arrival patterns and external business factors.

- 25. Building a Mining Structure A Mining Structure is built by selecting tables and views from a relational database or OLAP cube and specifying the input and predictable columns (variables/attributes) in the selected tables/views. Choosing the input columns requires an analysis of the data. Here is an example based on the Microsoft Data Mining software.
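Independently of any particular product, a mining structure boils down to a list of input columns and a predictable column, used to project source rows into training cases. The class below is a hypothetical stand-in for that idea, not the Microsoft API, and the column names are invented for illustration.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class MiningStructure:
    input_columns: Tuple[str, ...]
    predictable_column: str

    def cases(self, rows):
        """Project source rows (dicts) onto (inputs, target) training cases."""
        out = []
        for row in rows:
            wanted = (*self.input_columns, self.predictable_column)
            missing = [c for c in wanted if c not in row]
            if missing:
                raise KeyError(f"row lacks columns: {missing}")
            out.append((tuple(row[c] for c in self.input_columns), row[self.predictable_column]))
        return out


structure = MiningStructure(("loan_type", "region"), "cycle_time_days")
rows = [{"loan_type": "jumbo", "region": "Tulsa", "cycle_time_days": 12.0, "id": 1}]
training_cases = structure.cases(rows)  # the unused "id" column is dropped
```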

- 26. Choosing Columns from a Subset of the Data Source View

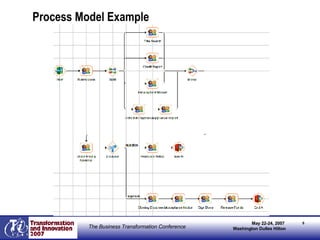

- 27. Process Model Example (previous example)

- 28. Mining Structure

- 29. A Tornado has knocked out a Processing Center

- 30. Can Data Mining Technology Help Us? A tornado has knocked out the application processing center in Tulsa. The event stream provides the staff change info to the BAM component. How can we use a trained data mining model to rapidly determine how the temporary loss of staff will impact the end-to-end processing time for applications? What will the cost be for processing a loan application under these circumstances?

- 31. Predicting Cycle Time We can tell customers the expected wait time for a loan.

- 32. Predicting Cost

- 33. Can Data Mining Technology Help Us? A marketing campaign is expected to increase the number of low-end loan applications next month. Simulation-based forecasting could be used to optimize workforce management, but the simulation model must have accurate information about how long each step in the process takes; average duration values based on history will not do. How can data mining provide better estimates of durations based on the line-of-business attributes of the applications?
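The simplest way to condition durations on an application attribute is a per-value average, which can then be reweighted by the forecast mix of applications. This is a deliberately basic stand-in for a trained mining model (which would also learn which attributes matter); the attribute name `loan_class` and all numbers are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def conditional_mean_durations(history, attribute):
    """Average 'duration' per value of `attribute` in the historical rows."""
    groups = defaultdict(list)
    for row in history:
        groups[row[attribute]].append(row["duration"])
    return {value: mean(durations) for value, durations in groups.items()}


def expected_duration(estimates, mix):
    """Duration expected under a forecast mix, e.g. a shift toward low-end loans."""
    return sum(share * estimates[cls] for cls, share in mix.items())


history = [
    {"loan_class": "low_end", "duration": 1.0},
    {"loan_class": "low_end", "duration": 2.0},
    {"loan_class": "high_end", "duration": 6.0},
]
estimates = conditional_mean_durations(history, "loan_class")
overall_average = mean(r["duration"] for r in history)
campaign_forecast = expected_duration(estimates, {"low_end": 0.9, "high_end": 0.1})
```

Here the overall average (3.0) overstates the duration expected once the mix shifts toward low-end applications, which is exactly why a single historical average "will not do".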

- 35. Branching

- 36. Cluster Analysis
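The slide does not name a clustering algorithm; k-means is one common choice and is used here only for illustration, with a deterministic farthest-first initialization (an assumption; k-means++ randomizes this step). Points could be, for example, hypothetical (cycle time, cost) pairs per work item. Requires Python 3.8+ for `math.dist`.

```python
import math


def farthest_first(points, k):
    # Start at the first point, then repeatedly add the point farthest from all chosen centers.
    centers = [points[0]]
    while len(centers) < k:
        centers.append(max(points, key=lambda p: min(math.dist(p, c) for c in centers)))
    return centers


def assign(points, centers):
    # Put each point in the cluster of its nearest center (Euclidean distance).
    clusters = [[] for _ in centers]
    for p in points:
        nearest = min(range(len(centers)), key=lambda i: math.dist(p, centers[i]))
        clusters[nearest].append(p)
    return clusters


def kmeans(points, k, iterations=100):
    centers = farthest_first(points, k)
    for _ in range(iterations):
        clusters = assign(points, centers)
        # Move each center to the mean of its cluster; an empty cluster keeps its center.
        new_centers = [
            tuple(sum(coord) / len(cl) for coord in zip(*cl)) if cl else centers[i]
            for i, cl in enumerate(clusters)
        ]
        if new_centers == centers:  # converged
            break
        centers = new_centers
    return centers, assign(points, centers)


points = [(1.0, 1.0), (1.2, 0.8), (0.9, 1.1), (10.0, 10.0), (10.2, 9.9), (9.8, 10.1)]
centers, clusters = kmeans(points, 2)
```

In practice the features should be scaled to comparable ranges first, otherwise the larger-valued column dominates the distance.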

- 37. Making Predictions using Simulation and Data Mining. Simulation and data mining can both be used to make predictions. Are they competing or complementary technologies? We have already discussed the role of data mining in preparing the information required for accurate simulations. Apart from this, the two differ in important ways. The simulation model must be a sufficiently accurate representation of the collection of processes being executed; it can make predictions for situations not previously encountered, so long as the underlying processes have not changed. Data mining predictions are based on a statistical analysis of what has already happened, so a trained mining model assumes the historical patterns are still valid. They also differ markedly in performance. Simulation is computationally intensive, and it takes significant time to obtain predictions. In data mining, the training is computationally intensive, but once a model is trained, predictions are extremely fast. Periodic retraining may be required to keep the model accurate.

- 38. Summary. BPMSs generate event streams that provide the analytics data needed for real-time Business Activity Monitoring and Continuous Process Improvement. A customizable Optimizer, employing data mining and simulation tool kits, derives from the analytics data a stream of recommendations for improving business operations, including:
  - redeployment of resources
  - process changes
  - optimization of business rules

  The Data Mining component supports an alternative approach to prediction under changing business circumstances and generates critical information for use by the Simulator. It also provides process discovery capabilities useful in process redesign.

- 39. Thank You Robert M. Shapiro Senior Vice President Global 360, Inc. Contact Information: 617-823-1055 [email_address]
