Data Mining: Cluster Analysis

- 1. Assignment Report, CSE 4239: Data Mining. Cluster Analysis. Submitted by: Suman Mia (Roll: 1607045). Submitted to: Animesh Kumar Paul, Assistant Professor, Dept. of Computer Science and Engineering, Khulna University of Engineering & Technology.

- 2. INTRODUCTION Background: Clustering is the process of grouping a set of abstract objects into classes of similar objects. Points to Remember • A cluster of data objects can be treated as one group. • While doing cluster analysis, we first partition the set of data into groups based on data similarity and then assign labels to the groups. • The main advantage of clustering over classification is that it is adaptable to changes and helps single out useful features that distinguish different groups. Clustering splits data into several subsets. The data in each subset are similar to each other, and these subsets are called clusters. For example, once the data from a customer base is divided into clusters, we can make an informed decision about which customers are best suited for a given product. PURPOSES 1. Discover structures and patterns in high-dimensional data. 2. Group data with similar patterns together. 3. Reduce complexity and facilitate interpretation. 4. Deal with different types of attributes. 5. Discover clusters of arbitrary shape. 6. Provide interpretability and scalability.

- 3. Clustering Methods Common clustering algorithms include the following: 1. Balanced Iterative Reducing and Clustering using Hierarchies (BIRCH) 2. Density-based spatial clustering of applications with noise (DBSCAN) 3. Agglomerative Hierarchical Clustering 4. K-means Clustering 5. K-Medoids clustering 6. Chameleon clustering BIRCH Clustering: Balanced Iterative Reducing and Clustering using Hierarchies (BIRCH) is a clustering algorithm that can cluster large datasets by first generating a small, compact summary of the large dataset that retains as much information as possible. This summary is stored as a clustering feature (CF) tree, and it is the summary, rather than the full dataset, that is then clustered. Parameters of the BIRCH algorithm: • threshold: the maximum radius of a subcluster in a leaf node of the CF tree. A new data point is merged into the closest subcluster only if the radius of the merged subcluster stays below the threshold; otherwise a new subcluster is started. • branching_factor: the maximum number of CF subclusters in each node. If adding a subcluster would exceed it, the node is split into two nodes. • n_clusters: the number of clusters returned after the entire BIRCH algorithm is complete, i.e., the number of clusters after the final clustering step. If set to None, the final clustering step is not performed and the intermediate subclusters are returned. Output Plot By Implementing BIRCH:
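The three parameters above can be illustrated with a minimal sketch, assuming scikit-learn's `sklearn.cluster.Birch` estimator (its parameter names match the list) and a small synthetic dataset from `make_blobs`. The blob centers, `cluster_std`, and parameter values are illustrative assumptions, not the data or settings used in the report's notebook.

```python
import numpy as np
from sklearn.cluster import Birch
from sklearn.datasets import make_blobs

# Illustrative data (assumption): three well-separated 2-D blobs.
X, _ = make_blobs(n_samples=600, centers=[[0, 0], [5, 5], [0, 10]],
                  cluster_std=0.5, random_state=0)

# Phase 1 builds the CF tree (threshold, branching_factor);
# phase 2 clusters the leaf subclusters into n_clusters final groups.
birch = Birch(threshold=0.5, branching_factor=50, n_clusters=3)
labels = birch.fit_predict(X)

# The compact summary: far fewer subcluster centroids than input points.
n_sub = len(birch.subcluster_centers_)
print(f"{len(X)} points summarised by {n_sub} leaf subclusters")
print("points per final cluster:", np.bincount(labels))

# With n_clusters=None the final step is skipped, and each point is
# labelled with its intermediate leaf subcluster instead.
raw = Birch(threshold=0.5, n_clusters=None).fit(X)
print("intermediate clusters:", len(raw.subcluster_centers_))
```

Lowering `threshold` produces more, smaller leaf subclusters (a less aggressive summary), while raising it compresses the data further at the cost of detail.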

- 4. Density-based spatial clustering of applications with noise (DBSCAN): DBSCAN is a well-known data clustering algorithm that is commonly used in data mining and machine learning. Given a set of points (for instance in a two-dimensional space, as in the figure), DBSCAN groups together points that are close to each other according to a distance measure (usually Euclidean distance) and a minimum number of points. It also marks points that lie in low-density regions as outliers. The DBSCAN algorithm requires two parameters: 1. eps: defines the neighborhood around a data point, i.e., if the distance between two points is less than or equal to eps, they are considered neighbors. If eps is chosen too small, a large part of the data will be considered outliers. If it is chosen too large, clusters will merge and the majority of the data points will end up in the same cluster. One way to choose eps is the k-distance graph: compute each point's distance to its k-th nearest neighbor (k is typically set to MinPts), sort these distances, and pick eps near the point where the curve bends sharply (the "elbow"). 2. MinPts: the minimum number of neighbors (data points) within the eps radius. The larger the dataset, the larger the value of MinPts that should be chosen. As a general rule, a minimum MinPts can be derived from the number of dimensions D in the dataset as MinPts >= D+1. The minimum value of MinPts must be chosen at least … Output Plot By Implementing DBSCAN:
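To make the roles of eps and MinPts concrete, here is a from-scratch sketch in plain Python. It is written for illustration and is not the report's notebook code; the helper names `region_query`, `dbscan`, and `sorted_k_distances` and the toy points are hypothetical. As with scikit-learn's `min_samples`, `min_pts` here counts the point itself, and the brute-force neighbor search is O(n²), so it only suits small examples.

```python
import math
from collections import deque

NOISE = -1  # label used for outliers (points in low-density regions)

def region_query(points, i, eps):
    """Indices of all points within distance eps of points[i], including i itself."""
    return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]

def dbscan(points, eps, min_pts):
    """Return one cluster label per point; NOISE (-1) marks outliers."""
    labels = [None] * len(points)          # None = not yet visited
    cluster_id = 0
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        neighbors = region_query(points, i, eps)
        if len(neighbors) < min_pts:       # not a core point
            labels[i] = NOISE              # may later become a border point
            continue
        labels[i] = cluster_id             # core point: start a new cluster
        queue = deque(neighbors)
        while queue:
            j = queue.popleft()
            if labels[j] == NOISE:         # border point: reachable but not core
                labels[j] = cluster_id
            if labels[j] is not None:      # already assigned, do not expand
                continue
            labels[j] = cluster_id
            j_neighbors = region_query(points, j, eps)
            if len(j_neighbors) >= min_pts:
                queue.extend(j_neighbors)  # j is also core: keep expanding
        cluster_id += 1
    return labels

def sorted_k_distances(points, k):
    """Sorted distance from each point to its k-th nearest other point (k-distance graph)."""
    kd = []
    for i, p in enumerate(points):
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        kd.append(dists[k - 1])
    return sorted(kd)

# Toy data (assumption): two unit squares and one isolated point.
points = [(0, 0), (0, 1), (1, 0), (1, 1),
          (10, 10), (10, 11), (11, 10), (11, 11),
          (50, 50)]
labels = dbscan(points, eps=1.5, min_pts=3)
kd = sorted_k_distances(points, k=2)
print(labels)
print([round(d, 2) for d in kd])
```

Here the two squares become clusters 0 and 1 and the isolated point (50, 50) is labelled -1. In the k-distance list, eight values equal 1.0 and the last jumps to about 55.87; that jump is the "elbow", so on this toy data an eps just above 1.0 separates dense regions from noise.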

- 5. Agglomerative Hierarchical Clustering: Also known as the bottom-up approach or hierarchical agglomerative clustering (HAC). It produces a hierarchy of clusters (a dendrogram), a structure that is more informative than the unstructured set of clusters returned by flat clustering. This clustering algorithm does not require us to prespecify the number of clusters, because the hierarchy can be cut at any level. Bottom-up algorithms treat each data point as a singleton cluster at the outset and then successively merge (agglomerate) the closest pair of clusters, as measured by the chosen linkage, until all clusters have been merged into a single cluster that contains all the data. Parameters (as in scikit-learn's AgglomerativeClustering): 1. n_clusters: int or None, default=2 2. affinity: str or callable, default='euclidean' (deprecated in favor of metric since scikit-learn 1.2) 3. memory: str or object with the joblib.Memory interface, default=None 4. connectivity: array-like or callable, default=None 5. compute_full_tree: 'auto' or bool, default='auto' 6. linkage: {'ward', 'complete', 'average', 'single'}, default='ward' Output plot By Implementing Agglomerative Hierarchical Clustering:
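A minimal sketch of both ways of using the hierarchy, assuming scikit-learn's `AgglomerativeClustering` and a hypothetical six-point dataset. The first model fixes `n_clusters`; the second leaves it as `None` and instead cuts the tree with `distance_threshold`, which shows why the number of clusters need not be specified in advance. Neither call passes `affinity`/`metric`, so the code avoids the renamed parameter and Ward linkage uses Euclidean distances.

```python
import numpy as np
from sklearn.cluster import AgglomerativeClustering

# Illustrative data (assumption): two small, well-separated groups.
X = np.array([[0, 0], [0, 1], [1, 0],
              [10, 10], [10, 11], [11, 10]], dtype=float)

# Fixed number of clusters with the default Ward linkage.
model = AgglomerativeClustering(n_clusters=2, linkage="ward")
labels = model.fit_predict(X)
print("labels:", labels)

# children_ records the bottom-up merges: each row merges two clusters;
# indices < n_samples are original points, larger ones are earlier merges.
print("merges:\n", model.children_)

# No prespecified k: cut the hierarchy where the merge distance exceeds 5.
cut = AgglomerativeClustering(n_clusters=None, distance_threshold=5.0).fit(X)
print("clusters found:", cut.n_clusters_)
```

The threshold of 5.0 is an arbitrary choice for this toy data: every merge inside a group is short, while the final merge between the two groups is much longer, so the cut yields two clusters.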

- 6. K-means Clustering: K-means clustering is a type of unsupervised learning, which is used when you have unlabeled data (i.e., data without defined categories or groups). ... The algorithm works iteratively to assign each data point to one of k groups based on the features that are provided, so that items in the same group are similar. To measure that similarity, we use the Euclidean distance. The algorithm works as follows: 1. First, initialize k points, called means, randomly. 2. Assign each item to its closest mean and update that mean's coordinates, which are the averages of the items assigned to it so far. 3. Repeat the process for a given number of iterations (or until the assignments stop changing); at the end, we have our clusters. Output plot By Implementing K-means Clustering:

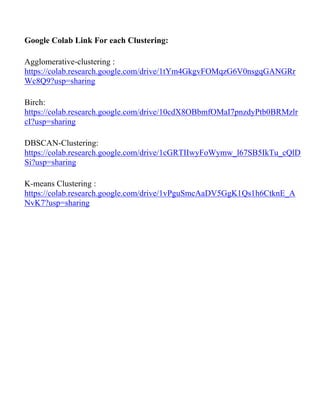
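The K-means steps above can be sketched in NumPy as follows. Note that this is the batch (Lloyd-style) variant: all items are assigned before the means are recomputed, whereas step 2 above describes updating a mean incrementally as items are added. The function names `assign` and `kmeans` and the toy data are hypothetical, and initialization picks k distinct data points as the random starting means.

```python
import numpy as np

def assign(X, means):
    """Index of the closest mean (Euclidean distance) for every row of X."""
    dists = np.linalg.norm(X[:, None, :] - means[None, :, :], axis=2)
    return dists.argmin(axis=1)

def kmeans(X, k, max_iter=100, seed=0):
    """Batch (Lloyd-style) k-means: returns (labels, means)."""
    rng = np.random.default_rng(seed)
    # Step 1: use k distinct, randomly chosen data points as the initial means.
    means = X[rng.choice(len(X), size=k, replace=False)].astype(float)
    for _ in range(max_iter):
        # Step 2a: assign every item to its closest mean.
        labels = assign(X, means)
        # Step 2b: move each mean to the average of its assigned items
        # (an empty cluster keeps its previous mean).
        new_means = np.array([X[labels == j].mean(axis=0) if np.any(labels == j)
                              else means[j] for j in range(k)])
        # Step 3: stop once the means no longer move.
        if np.allclose(new_means, means):
            break
        means = new_means
    # Final assignment against the final means.
    return assign(X, means), means

# Toy data (assumption): two well-separated groups of three points.
X = np.array([[0, 0], [0, 1], [1, 0],
              [10, 10], [10, 11], [11, 10]], dtype=float)
labels, means = kmeans(X, k=2)
print(labels)
print(means)
```

Because the result depends on the random starting means, practical implementations usually run several initializations and keep the solution with the smallest within-cluster sum of squared distances.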

- 7. Google Colab Links for Each Clustering Method:
  - Agglomerative-clustering: https://colab.research.google.com/drive/1tYm4GkgvFOMqzG6V0nsgqGANGRrWc8Q9?usp=sharing
  - Birch: https://colab.research.google.com/drive/10cdX8OBbmfOMaI7pnzdyPtb0BRMzlrcI?usp=sharing
  - DBSCAN-Clustering: https://colab.research.google.com/drive/1cGRTIIwyFoWymw_l67SB5IkTu_cQlDSi?usp=sharing
  - K-means Clustering: https://colab.research.google.com/drive/1vPguSmcAaDV5GgK1Qs1h6CtknE_ANvK7?usp=sharing