Data mining with weka

This document describes building classifiers for a census income dataset with the WEKA data mining tool. It covers preprocessing steps such as handling missing values, removing outliers, and balancing the class distribution, then evaluates classifiers including J48 decision trees and k-nearest neighbors (IBk) on the preprocessed data. The best-performing model was an ensemble "vote" classifier that combined the predictions of J48, IBk, and logistic regression models, achieving 87.3% accuracy and an ROC area of 0.947.
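To make the ensemble idea concrete, here is a minimal sketch of how a vote classifier can combine three base models by averaging their per-class probabilities. This is an illustration in plain Python, not the WEKA implementation. The summary does not say which combination rule was used, so averaging is an assumption, and the class labels and probability values below are hypothetical.

```python
from typing import List, Sequence, Tuple


def average_vote(distributions: Sequence[Sequence[float]]) -> List[float]:
    """Average the per-class probabilities produced by several base classifiers."""
    if not distributions:
        raise ValueError("need at least one base classifier")
    n_classes = len(distributions[0])
    if any(len(d) != n_classes for d in distributions):
        raise ValueError("all classifiers must score the same set of classes")
    n_models = len(distributions)
    return [sum(d[c] for d in distributions) / n_models for c in range(n_classes)]


def predict(distributions: Sequence[Sequence[float]],
            labels: Sequence[str]) -> Tuple[str, List[float]]:
    """Return the label with the highest averaged probability, plus the averages."""
    combined = average_vote(distributions)
    best = max(range(len(labels)), key=lambda i: combined[i])
    return labels[best], combined


# Hypothetical class labels and per-model outputs for one instance (assumptions,
# not values taken from the slides).
LABELS = ("low", "high")
j48_probs = (0.70, 0.30)       # decision tree
ibk_probs = (0.40, 0.60)       # k-nearest neighbors
logistic_probs = (0.35, 0.65)  # logistic regression

label, combined = predict([j48_probs, ibk_probs, logistic_probs], LABELS)
print(label, [round(p, 3) for p in combined])
```

In this made-up instance the decision tree favors "low" but is outweighed by the other two models, which is the behavior an ensemble relies on: individual errors can be corrected when the base classifiers disagree.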


PROJECT_REPORT_FINAL

PROJECT_REPORT_FINALJason Warnstaff This document discusses the data cleaning process for a dataset combining Reddit news headlines and Dow Jones Industrial Average (DJIA) values. The dataset contained issues like special characters in headlines and multiple word forms referring to the same concept. To address this, the data was cleaned by removing special characters, stemming words to their root forms, and removing stopwords. Keywords were identified by creating a corpus from the cleaned headlines and calculating term frequencies. Two frequency ranges containing about 50 terms each were selected for further analysis against the DJIA rise/fall classification attribute. The goal of the data cleaning was to extract keyword counts that could help predict the DJIA classification.

HCI - Individual Report for Metrolink App

HCI - Individual Report for Metrolink AppDarran Mottershead The document provides details on developing a new mobile application for Metrolink transit. It discusses weaknesses in the current application and outlines features and justifications for the new application being developed. The key points are:

1) The new application aims to remove unnecessary features from the current Metrolink app and add useful new functionality like ticket purchasing that users want.

2) Features like real-time updates, multiple ticket purchasing, QR codes for tickets, and alarms for arrival times are described and their benefits outlined.

3) Making the app free, reducing time spent on tasks, and providing relevant location-based information are emphasized as ways to enhance usability and encourage more users.

Data Mining Techniques using WEKA (Ankit Pandey-10BM60012)

Data Mining Techniques using WEKA (Ankit Pandey-10BM60012)Ankit Pandey This term paper contains a brief introduction of a powerful data mining tool WEKA along with a hands-on guide to two data mining techniques namely Clustering (k-means) and Linear Regression using WEKA.

P2P: Simulations and Real world Networks

P2P: Simulations and Real world NetworksMatilda Rhode This document describes simulations of peer-to-peer (P2P) networks using different protocols, configurations, and parameters. It discusses running many simulations to analyze the results using Tableau. Key aspects studied include average, maximum, and minimum aggregation functions, network size and topology, value distributions, and static versus dynamic network configurations. Real-world P2P networks tend to be either structured, unstructured, or hybrid depending on their topology and search capabilities.

Group7_Datamining_Project_Report_Final

Group7_Datamining_Project_Report_FinalManikandan Sundarapandian This document describes a data mining project to analyze bank marketing data using classification algorithms. The goal is to predict whether a client will subscribe to a term deposit based on their attributes. The dataset contains information on over 61,000 customers. Pre-processing steps like data cleaning, handling missing values, removing outliers, and scaling are performed. Algorithms like Naive Bayes, J48 decision trees, and PART are applied and their results compared to determine the best predictor of subscriptions.

Project_702

Project_702Sreelakshmi Dodderi This document summarizes the results of hierarchical cluster analysis performed on several gene expression datasets:

1. Cluster analysis was conducted on subsets of the NCI60 cancer gene expression data, showing close relatedness between breast and ovarian cancers, and clear clustering of colon and prostate cancers and colon and renal cancers.

2. Neighbor-joining cluster analysis of kinase genes in the Golub leukemia data grouped two tyrosine kinase genes closely together, which could inform drug design.

3. Biochemical validation methods like DNA sequencing and multiple sequence alignment were proposed to validate the computational cluster analysis results.

Data mining assignment 4

Data mining assignment 4BarryK88 The document discusses various concepts in data mining and decision trees including:

1) Pruning trees to address overfitting and improve generalization,

2) Separating data into training, development and test sets to evaluate model performance,

3) Information gain favoring attributes with many values by having less entropy,

4) Strategies for dealing with missing attribute values such as predicting values or focusing on other attributes/classes,

5) Changing stopping conditions for regression trees to use standard deviation thresholds rather than discrete classes.

Data mining assignment 5

Data mining assignment 5BarryK88 The document discusses perceptrons and gradient descent algorithms for training perceptrons on classification tasks. It contains 4 exercises:

1) Explains the role of the learning rate in perceptron training and which Boolean functions can/cannot be modeled with perceptrons.

2) Applies a perceptron to a sample dataset, calculates outputs, and determines the accuracy.

3) Performs one iteration of gradient descent on the same dataset, computing weight updates with a learning rate of 0.2.

4) Performs one iteration of stochastic gradient descent on the dataset, recomputing outputs and updating weights after each instance.

Tree pruning

Tree pruningpriya_kalia Tree pruning removes parts of a decision tree that overfit the training data due to noise or outliers, making the tree smaller and less complex. There are two pruning strategies: postpruning removes subtrees after full tree growth, while prepruning stops growing branches when information becomes unreliable. Decision tree algorithms are efficient for small datasets but have performance issues for very large real-world datasets that may not fit in memory.

Steps to Converting Exisiting Visitors to Customers Using Data, Testing and P...

Steps to Converting Exisiting Visitors to Customers Using Data, Testing and P...Triangle American Marketing Association Steps to Converting Exisiting Visitors to Customers Using Data, Testing and Personalization presented by Greg Ng, CXO, Brooks Bell Interactive, at Triangle AMA Digital Marketing Camp on Feb 29, 2102. www.triangleama.org

Naïve Bayes and J48 Classification Algorithms on Swahili Tweets: Performance ...

Naïve Bayes and J48 Classification Algorithms on Swahili Tweets: Performance ...IJCSIS Research Publications

Steps to Converting Exisiting Visitors to Customers Using Data, Testing and P...

Steps to Converting Exisiting Visitors to Customers Using Data, Testing and P...Triangle American Marketing Association

Ad

Data mining with weka

- 1. Data Mining with WEKA Census Income Dataset (UCI Machine Learning Repository) Hein and Maneshka

- 2. Data Mining ● non-trivial extraction of previously unknown and potentially useful information from data by means of computers. ● part of the machine learning field. ● two types of machine learning: ○ supervised learning: to learn a mapping from labelled examples to an output ■ regression: to find real value(s) as output ■ classification: to map an instance of data to one of the predefined classes ○ unsupervised learning: to discover an internal representation of the data ■ clustering: to group instances of data together based on some characteristics ■ association rule mining: to find relationships between attribute values that occur together in the data

- 3. Aim ● Perform data mining using WEKA ○ understanding the dataset ○ preprocessing ○ task: classification

- 4. Dataset - Census Income Dataset ● from UCI machine learning repository ● 32,561 instances ● attributes: 14 ○ continuous: age, fnlwgt, education-num, capital-gain, capital-loss, hours-per-week ○ nominal: workclass, education, marital-status, occupation, relationship, race, sex, native-country ● salary - classes: 2 (<= 50K and > 50K) ● missing values: ○ workclass & occupation: 1836 (6%) ○ native-country: 583 (2%) ● imbalanced distribution of values ○ age, capital-gain, capital-loss, native-country

- 5. Dataset - Census Income Dataset ● imbalanced distributions of attributes ● No strong separation of classes Blue: <=50K Red: >50K

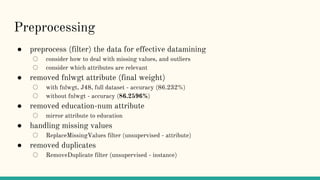

- 6. Preprocessing ● preprocess (filter) the data for effective data mining ○ consider how to deal with missing values and outliers ○ consider which attributes are relevant ● removed fnlwgt attribute (final weight) ○ with fnlwgt, J48, full dataset - accuracy (86.232%) ○ without fnlwgt - accuracy (86.2596%) ● removed education-num attribute ○ mirror attribute to education ● handling missing values ○ ReplaceMissingValues filter (unsupervised - attribute) ● removed duplicates ○ RemoveDuplicates filter (unsupervised - instance)
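
As a rough illustration of what a ReplaceMissingValues-style filter does, the sketch below fills gaps with the column mean for numeric attributes and the mode for nominal ones. It is plain Python rather than WEKA code; the function name `replace_missing`, the use of `None` as the missing marker, and the tiny sample rows are assumptions made for the example.

```python
from collections import Counter
from statistics import mean

def replace_missing(rows, numeric_cols):
    """Fill None entries with the column mean (numeric) or mode (nominal)."""
    fill = []
    for c in range(len(rows[0])):
        present = [r[c] for r in rows if r[c] is not None]
        if c in numeric_cols:
            fill.append(mean(present))                          # numeric: mean
        else:
            fill.append(Counter(present).most_common(1)[0][0])  # nominal: mode
    return [[fill[c] if v is None else v for c, v in enumerate(r)] for r in rows]

# Hypothetical (age, workclass) rows with two gaps.
rows = [
    [39, "Private"],
    [50, None],
    [None, "Private"],
    [28, "State-gov"],
]
filled = replace_missing(rows, numeric_cols={0})
print(filled)
```

Mean and mode imputation keeps every instance, which matters here because about 6% of the workclass and occupation values are missing.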

- 7. Preprocessing ● grouped education attribute values ○ 16 values → 9 values ○ before: HS-graduate, Some-college, Bachelors, Prof-School, Masters, Doctorate, Assoc-acdm, Assoc-voc, Pre-school, 1st-4th, 5th-6th, 7th-8th, 9th, 10th, 11th, 12th ○ grouping: Pre-school, 1st-4th, 5th-6th, 7th-8th, 9th, 10th, 11th, 12th → HS-not-finished ○ after: HS-graduate, Some-college, Bachelors, Prof-School, Masters, Doctorate, Assoc-acdm, Assoc-voc, HS-not-finished
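
The grouping on this slide amounts to a simple value mapping. A minimal sketch, assuming the labels are spelled as on the slide (the raw dataset spells some of them slightly differently) and using a hypothetical helper `group_education`:

```python
# Education levels below high-school graduation, spelled as on the slide.
LOW_LEVELS = {"Pre-school", "1st-4th", "5th-6th", "7th-8th",
              "9th", "10th", "11th", "12th"}

def group_education(value):
    """Collapse every pre-graduation level into one HS-not-finished value."""
    return "HS-not-finished" if value in LOW_LEVELS else value

original = LOW_LEVELS | {"HS-graduate", "Some-college", "Bachelors", "Prof-School",
                         "Masters", "Doctorate", "Assoc-acdm", "Assoc-voc"}
grouped = {group_education(v) for v in original}
print(len(original), "->", len(grouped))
```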

- 8. Preprocessing - Balancing Class Distribution ● without balancing the class distribution, the classifiers perform badly on the class with fewer instances

- 9. Preprocessing - Balancing Class Distribution Step 1: Apply the Resample filter Filters→supervised→instance→Resample Step 2: Set the biasToUniformClass parameter of the Resample Filter to 1.0 and click ‘Apply Filter’
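
To make the effect of biasing a resample towards a uniform class distribution concrete, here is a small sketch that draws instances with replacement so that every class ends up with the same share. It is not WEKA's implementation; `resample_uniform`, the seed, and the 75/25 toy split are assumptions for illustration.

```python
import random
from collections import Counter

def resample_uniform(rows, labels, seed=1):
    """Sample with replacement so that each class gets an equal share."""
    rng = random.Random(seed)
    by_class = {}
    for row, label in zip(rows, labels):
        by_class.setdefault(label, []).append(row)
    per_class = len(rows) // len(by_class)   # same quota for every class
    out_rows, out_labels = [], []
    for label, members in by_class.items():
        for _ in range(per_class):
            out_rows.append(rng.choice(members))
            out_labels.append(label)
    return out_rows, out_labels

# Toy data: 75 instances of the majority class, 25 of the minority class.
labels = ["<=50K"] * 75 + [">50K"] * 25
rows = list(range(100))
new_rows, new_labels = resample_uniform(rows, labels)
counts = Counter(new_labels)
print(counts)
```

Because the minority class is sampled with replacement, some of its instances appear more than once, while part of the majority class is dropped.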

- 10. Preprocessing - Outliers ● Outliers in data can skew and mislead the processing of algorithms. ● Outliers can be removed in the following manner

- 11. Preprocessing - Removing Outliers Step 1: Select the InterquartileRange filter Filters→unsupervised→attribute→InterquartileRange → Apply Result: Creates two attributes, outlier and extreme value, with attribute numbers 14 and 15 respectively

- 12. Preprocessing - Removing Outliers Step 2: a) Select another filter, RemoveWithValues Filters→unsupervised→instance→RemoveWithValues b) Click on the filter to get its parameters. Set attributeIndex to 14 and nominalIndices to 2, since only the instances whose outlier value is yes need to be removed.

- 13. Preprocessing - Removing Outliers Result: Removes all outliers from the dataset Step 3: Remove the outlier and extreme value attributes from the dataset
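
The idea behind interquartile-range outlier detection can be sketched in a few lines: a value is flagged when it lies more than some multiple of the IQR outside the first or third quartile. The function name `iqr_flags`, the multiplier of 3.0, and the sample hours-per-week values are assumptions for illustration, not settings taken from the slides.

```python
from statistics import quantiles

def iqr_flags(values, factor=3.0):
    """Flag values lying more than factor * IQR outside the quartiles."""
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - factor * iqr, q3 + factor * iqr
    return [v < low or v > high for v in values]

# Hypothetical hours-per-week values with one extreme entry.
hours = [40, 38, 45, 40, 42, 35, 50, 40, 99]
flags = iqr_flags(hours)
kept = [v for v, flagged in zip(hours, flags) if not flagged]
print(kept)
```

Flagging first and filtering second mirrors the two-step procedure above: one pass marks the instances, a second pass removes the marked ones.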

- 14. Preprocessing - Impact of Removing Outliers ● With outliers in the dataset: 85.3302% correctly classified instances ● Without outliers in the dataset: 84.3549% correctly classified instances Since the percentage of correctly classified instances was greater for the dataset with outliers, that dataset was selected. The reduced accuracy is due to the nature of our dataset (very skewed distributions in attributes such as capital-gain).

- 15. Preprocessing ● Our preprocessing recap ○ removed fnlwgt, edu-num attributes ○ removed duplicate instances ○ filled in missing values ○ grouped some attribute values for education ○ rebalanced class distribution ● size of dataset: 14356 instances

- 16. Performance of Classifiers ● simplest measure: rate of correct predictions ● confusion matrix: ● Precision: how many positive predictions are correct (TP/(TP + FP)) ● Recall: how many actual positives are caught (TP/(TP + FN)) ● F Measure: considers both precision and recall (2 * precision * recall / (precision + recall))
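
The three formulas on this slide can be checked with a short worked example. The counts below (80 true positives, 20 false positives, 40 false negatives) are made up for illustration.

```python
def precision_recall_f(tp, fp, fn):
    """Compute precision, recall and F-measure from confusion-matrix counts."""
    precision = tp / (tp + fp)   # share of positive predictions that are correct
    recall = tp / (tp + fn)      # share of actual positives that are found
    f_measure = 2 * precision * recall / (precision + recall)
    return precision, recall, f_measure

p, r, f = precision_recall_f(tp=80, fp=20, fn=40)
print(round(p, 3), round(r, 3), round(f, 3))
```

Note the parentheses around `precision + recall`: without them the expression would divide by precision only and then add recall.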

- 17. Performance of Classifiers ● kappa statistic: chance-corrected accuracy measure (must be bigger than 0) ● ROC Area: the bigger the area, the better the result (must be bigger than 0.5) ● Error rates: useful for regression ○ predicting real values ○ predictions are not just right or wrong ○ these reflect the magnitude of errors
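
As a sketch of what "chance-corrected" means, the kappa statistic compares the observed accuracy with the agreement expected if predictions were assigned at random with the same row and column totals. The confusion matrix below is invented for the example, and the function name `kappa` is an assumption.

```python
def kappa(matrix):
    """Cohen's kappa for a square confusion matrix (rows: actual, cols: predicted)."""
    size = len(matrix)
    total = sum(sum(row) for row in matrix)
    observed = sum(matrix[i][i] for i in range(size)) / total
    # Chance agreement: product of row and column totals for each class.
    expected = sum(
        sum(matrix[i]) * sum(row[i] for row in matrix) for i in range(size)
    ) / total ** 2
    return (observed - expected) / (1 - expected)

cm = [[40, 10],
      [20, 30]]
k = kappa(cm)
print(round(k, 3))
```

Here the accuracy is 0.7 but the chance agreement is 0.5, so kappa is 0.4: only 40% of the possible improvement over chance is achieved.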

- 18. Developing Classifiers ● ran algorithms with default parameters ● test parameter: 10-fold cross-validation ● preprocessed dataset

  | Algorithm | Accuracy |
  | --- | --- |
  | J48 | 83.6305 % |
  | JRip | 82.0075 % |
  | NaiveBayes | 76.5464 % |
  | IBk | 84.9401 % |
  | Logistic | 82.3837 % |

  ● chose J48 and IBk classifiers to develop further. ● IBk is the best performing. ● J48 is very fast, second best, and very popular.
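
The 10-fold cross-validation used for these numbers splits the data into ten parts and tests on each part in turn while training on the other nine. A minimal sketch of the index bookkeeping follows; `k_fold_indices` is a hypothetical helper, and a real run would typically shuffle and stratify the instances first, which this sketch omits.

```python
def k_fold_indices(n, k=10):
    """Yield (train, test) index lists for k folds of near-equal size."""
    indices = list(range(n))
    # The first n % k folds get one extra instance.
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, test
        start += size

folds = list(k_fold_indices(25, k=10))
for train, test in folds[:2]:
    print(len(train), len(test))
```

Every instance is used for testing exactly once, so the reported accuracy is an average over predictions on data the model has not seen during training.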

- 19. J48 Algorithm ● Open source Java implementation of the C4.5 algorithm in the Weka data mining tools ● It creates a decision tree based on labelled input data ● The trees generated can be used for classification, and for this reason J48 is called a statistical classifier
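
C4.5 chooses the attribute to split on using the gain ratio, that is, the information gain divided by the entropy of the split itself. The sketch below computes it for a single nominal attribute; the function names and the six toy instances are assumptions, and numeric attributes, missing values, and pruning are left out.

```python
from collections import Counter
from math import log2

def entropy(labels):
    """Shannon entropy (in bits) of a list of nominal values."""
    n = len(labels)
    return -sum(c / n * log2(c / n) for c in Counter(labels).values())

def gain_ratio(values, labels):
    """Information gain of splitting on `values`, normalised by split entropy."""
    n = len(labels)
    groups = {}
    for v, y in zip(values, labels):
        groups.setdefault(v, []).append(y)
    remainder = sum(len(g) / n * entropy(g) for g in groups.values())
    info_gain = entropy(labels) - remainder
    split_info = entropy(values)
    return info_gain / split_info if split_info > 0 else 0.0

# Toy data: one nominal attribute and the class label.
sex = ["M", "M", "M", "F", "F", "F"]
income = [">50K", ">50K", "<=50K", "<=50K", "<=50K", "<=50K"]
gr = gain_ratio(sex, income)
print(round(gr, 4))
```

Dividing by the split entropy penalises attributes with many distinct values, which plain information gain tends to favour.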

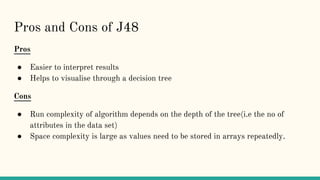

- 20. Pros and Cons of J48 Pros ● Easier to interpret results ● Helps to visualise through a decision tree Cons ● Run-time complexity of the algorithm depends on the depth of the tree (which is related to the number of attributes in the dataset) ● Space complexity is large, as values need to be stored in arrays repeatedly.

- 21. J48 - Using Default Parameters Number of Leaves : 811 Size of the tree : 1046

- 22. J48 - Setting binarySplits parameter to True

- 23. J48 - Setting unpruned parameter to True Number of Leaves : 3479 Size of the tree : 4214

- 25. J48 - Setting unpruned and binarySplits parameters to True

- 26. J48 - Observations ● we initially thought Education would be the most important factor in classifying income. ● The J48 tree (without binarization) has CapitalGain as its root node, instead of Education. ● This means CapitalGain contributes more to the income classification than we initially thought.

- 27. IBk Classifier ● instance-based classifier ● k-nearest neighbors algorithm ● takes the nearest k neighbors to make decisions ● uses distance measures to find the nearest neighbors ○ chi-square distance, Euclidean distance (used by IBk) ● can use distance weighting ○ to give more influence to nearer neighbors ○ 1/distance and 1-distance ● can be used for classification and regression ○ classification - the output is the class most common among the neighbors ○ regression - the output is the average of the neighbors' values

- 28. Pros and Cons of IBk Pros ● easy to understand / implement ● performs well when the data is representative enough ● choice between attributes and distance measures Cons ● large search space ○ have to search the whole dataset to get the nearest neighbors ● curse of dimensionality ● must choose a meaningful distance measure
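
A minimal sketch of k-nearest-neighbor classification with optional 1/distance weighting, written in plain Python rather than taken from IBk. The function `knn_predict`, the two numeric features, and the five training points are assumptions chosen so that weighting changes the outcome.

```python
from collections import defaultdict
from math import dist

def knn_predict(train, query, k=3, weighted=True):
    """Classify `query` by a (distance-weighted) vote of its k nearest points."""
    neighbours = sorted(train, key=lambda item: dist(item[0], query))[:k]
    votes = defaultdict(float)
    for point, label in neighbours:
        d = dist(point, query)                       # Euclidean distance
        # 1/distance weighting; the small constant avoids division by zero.
        votes[label] += 1.0 / (d + 1e-9) if weighted else 1.0
    return max(votes, key=votes.get)

# Hypothetical training points: (features, class label).
train = [
    ((1.0, 1.0), "<=50K"),
    ((1.2, 0.9), "<=50K"),
    ((0.9, 1.1), "<=50K"),
    ((3.0, 3.0), ">50K"),
    ((3.1, 2.9), ">50K"),
]
query = (2.8, 2.8)
print(knn_predict(train, query, k=5, weighted=False))
print(knn_predict(train, query, k=5, weighted=True))
```

With k=5 the unweighted vote is won by the three distant points, while the weighted vote follows the two points that lie close to the query, which is the intuition behind giving nearer neighbors more influence.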

- 29. Improving IBk ● ran the KNN algorithm with different combinations of parameters

  | Parameters | Correct Prediction | ROC Area |
  | --- | --- | --- |
  | KNN (k=1, no weight), default | 84.9401 % | 0.860 |
  | KNN (k=5, no weight) | 80.691 % | 0.882 |
  | KNN (k=5, inverse-distance-weight) | 85.978 % | 0.929 |
  | KNN (k=10, no weight) | 81.0323 % | 0.887 |
  | KNN (k=10, inverse-distance-weight) | 86.5422 % | 0.939 |
  | KNN (k=10, similarity-weighted) | 81.6244 % | 0.892 |
  | KNN (k=50, inverse-distance-weight) | 86.8905 % | 0.948 |
  | KNN (k=100, inverse-distance-weight) | 86.6397 % | 0.947 |

- 30. IBk - Observations ● larger k gives better classification ○ up to a certain value of k (50) ○ using inverse-distance weighting improves accuracy greatly ● limitations ○ we used Euclidean distance (not the best for nominal values in the dataset)

- 31. Vote Classifier ● we combined our classifiers -> Meta ○ used average of probabilities

  | Classifier | Accuracy | ROC Area |
  | --- | --- | --- |
  | J48 | 85.3998 % | 0.879 |
  | KNN (k=50, inverse-distance-weight) | 86.8905 % | 0.948 |
  | Logistic | 82.3837 % | 0.905 |
  | Vote | 87.3084 % | 0.947 |

- 32. What We Have Done ● Developed a classifier for the Census Income Dataset ○ a lot of preprocessing ○ learned in detail about the J48 and KNN classifiers ● Developed a classifier with 87.3084 % accuracy and 0.947 ROC area. ○ using VOTE
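
Combining classifiers by the average of probabilities can be sketched as follows: each model outputs a class distribution for an instance, the distributions are averaged, and the class with the highest average wins. The function name `vote_average` and the three probability distributions are invented for illustration and are not outputs of the models above.

```python
def vote_average(distributions):
    """Average per-class probabilities and return the winning class."""
    n = len(distributions)
    classes = distributions[0].keys()
    averaged = {c: sum(d[c] for d in distributions) / n for c in classes}
    return max(averaged, key=averaged.get), averaged

# Hypothetical class distributions from three base classifiers for one instance.
j48 = {"<=50K": 0.60, ">50K": 0.40}
ibk = {"<=50K": 0.30, ">50K": 0.70}
logistic = {"<=50K": 0.45, ">50K": 0.55}

label, avg = vote_average([j48, ibk, logistic])
print(label, avg)
```

Averaging lets a confident model outweigh a hesitant one: here a single tree-style prediction for <=50K is overruled because the other two models lean towards >50K with more combined weight.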

- 33. Thank You.