Data Science and Deep Learning on Spark with 1/10th of the Code with Roope Astala and Sudarshan Ragunathan ()

- 1. Data science and deep learning on Spark with 1/10th of the code Roope Astala, Sudarshan Ragunathan Microsoft Corporation

- 2. Agenda • User story: Snow Leopard Conservation • Introducing Microsoft Machine Learning Library for Apache Spark – Vision: Productivity, Scalability, State-of-Art Algorithms, Open Source – Deep Learning and Image Processing – Text Analytics Disclaimer: Any roadmap items are subject to change without notice. Apache®, Apache Spark, and Spark® are either registered trademarks or trademarks of the Apache Software Foundation in the United States and/or other countries.

- 3. Rhetick Sengupta President, Board of Directors

- 4. Snow leopards • 3,900-6,500 individuals left in the wild • Variety of Threats – Poaching – Retribution killing – Loss of prey – Loss of habitat (mining) • Little known about their ecology, behavior, movement patterns, survival rates • More data required to influence survival

- 5. Range spread across 1.5 million km2

- 8. Camera trapping since 2009 • 1,700 sq km • 42 camera traps • 8,490 trap nights • 4 primary sampling periods • 56 secondary sampling periods • ~1.3 mil images

- 9. Camera Trap Images Manually classifying images averages 300 hours per survey

- 10. Automated Image Classification Benefits Short term • Thousands of hours of researcher and volunteer time saved • Resources redeployed to science and conservation vs image sorting • Much more accurate data on range and population Long term • Population numbers that can be accurately monitored • Influence governments on protected areas • Enhance community based conservation programs • Predict threats before they happen

- 11. www.snowleopard.org How can you help? We need more camera surveys! • 1,700 sq km surveyed of 1,500,000 • $500 will buy an additional camera • $5,000 will fund a researcher • Any amount helps Contact me directly at [email protected] or donate online.

- 12. Microsoft Machine Learning Library for Apache Spark (MMLSpark) GitHub Repo: https://github.com/Azure/mmlspark Get started now using Docker image: docker run -it -p 8888:8888 -e ACCEPT_EULA=yes microsoft/mmlspark Navigate to http://localhost:8888 to view example Jupyter notebooks

- 13. Challenges when using Spark for ML • User needs to write lots of “ceremonial” code to prepare features for ML algorithms. – Coerce types and data layout to that what’s expected by learner – Use different conventions for different learners • Lack of domain-specific libraries: computer vision or text analytics… • Limited capabilities for model evaluation & model management

- 14. Vision of Microsoft Machine Learning Library for Apache Spark • Enable you to solve ML problems dealing with large amounts of data. • Make you as a professional data scientist more productive on Spark, so you can focus on ML problems, not software engineering problems. • Provide cutting edge ML algorithms on Spark: deep learning, computer vision, text analytics… • Open Source at GitHub

- 15. Example: Hello MMLSpark Predict income from US Census data

- 19. Using MMLSpark from mmlspark import TrainClassifier, ComputeModelStatistics from pyspark.ml.classification import LogisticRegression model = TrainClassifier(model=LogisticRegression(), labelCol=" income").fit(train) prediction = model.transform(test) metrics = ComputeModelStatistics().transform(prediction)

- 20. Algorithms • Deep learning through Microsoft Cognitive Toolkit (CNTK) – Scale-out DNN featurization and scoring. Take an existing DNN model or train locally on big GPU machine, and deploy it to Spark cluster to score large data. – Scale-up training on a GPU VM. Preprocess large data on Spark cluster workers and feed to GPU to train the DNN. • Scale-out algorithms for “traditional” ML through SparkML

- 21. Design Principles • Run on every platform and on language supported by Spark • Follow SparkML pipeline model for composability. MML-Spark consists of Estimators and Transforms that can be combined with existing SparkML components into pipelines. • Use SparkML DataFrames as common format. You can use existing capabilities in Spark for reading in the data to your model. • Consistent handling of different datatypes – text, categoricals, images – for different algorithms. No need for low-level type coercion, encoding or vector assembly.

- 22. Deep Neural Net Featurization • Basic idea: Interior layers of pre-trained DNN models have high-order information about features • Using “headless” pre-trained DNNs allows us to extract really good set of features from images that can in turn be used to train more “traditional” models like random forests, SVM, logistic regression, etc. – Pre-trained DNNs are typically state-of-the-art models on datasets like ImageNet, MSCoco or CIFAR, for example ResNet (Microsoft), GoogLeNet (Google), Inception (Google), VGG, etc. • Transfer learning enables us to train effective models where we don’t have enough data, computational power or domain expertise to train a new DNN from scratch • Performance scales with executors High-order features Predictions

- 23. DNN Featurization using MML-Spark cntkModel = CNTKModel().setInputCol("images"). setOutputCol("features").setModelLocation(resnetModel). setOutputNode("z.x") featurizedImages = cntkModel.transform(imagesWithLabels). select(['labels','features']) model = TrainClassifier(model=LogisticRegression(),labelCol="labels"). fit(featurizedImages) The DNN featurization is incorporated as SparkML pipeline stage. The evaluation happens directly on JVM from Scala: no Python UDF overhead!

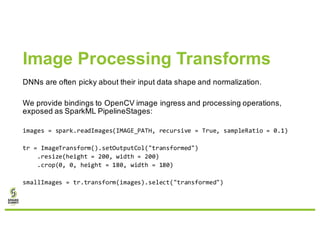

- 24. Image Processing Transforms DNNs are often picky about their input data shape and normalization. We provide bindings to OpenCV image ingress and processing operations, exposed as SparkML PipelineStages: images = spark.readImages(IMAGE_PATH, recursive = True, sampleRatio = 0.1) tr = ImageTransform().setOutputCol("transformed") .resize(height = 200, width = 200) .crop(0, 0, height = 180, width = 180) smallImages = tr.transform(images).select("transformed")

- 25. Image Pipeline for Object Detection and Classification • In real data, objects are often not framed and 1 per image. There can be manycandidate sub- images. • You could extract candidate sub-images using flatMapsand UDFs, but this gets cumbersome quickly. • We plan to provide simplified pipeline components to support generic object detection workflow. Detect candidate ROI Extract sub-image Classify sub-images Prediction: Cloud, None, Sun, Smiley, None …

- 26. Virtual network Training of DNNs on GPU node • GPUs are very powerful for training DNNs. However, running an entire cluster of GPUs is often too expensive and unnecessary. • Instead, load and prep large data on CPU Spark cluster, then feed the prepped data to GPU node on virtual network for training. Once DNN is trained, broadcast the model to CPU nodes for evaluation. learner = CNTKLearner(brainScript=brainscriptText, dataTransfer='hdfs-mount', gpuMachines=‘my-gpu-vm’, workingDir=‘file:/tmp/’).fit(trainData) predictions = learner.setOutputNode(‘z’).transform(testData) Raw data Processed data as DataFrame Trained DNN as PipelineStage

- 27. Text Analytics • Goal: provide one-step text featurization capability that lets you take free-form text columns and turn them into feature vectors for ML algorithms. – Tokenization, stop-word removal, case normalization – N-Gram creation, feature hashing, IDF weighing. • Future: multi-language support, more advanced text preprocessing and DNN featurization capabilities.

- 28. Example: Text analytics for document classification by sentiment from pyspark.ml import Pipeline from mmlspark import TextFeaturizer, SelectColumns, TrainClassifier from pyspark.ml.classification import LogisticRegression textFeaturizer = TextFeaturizer(inputCol="text", outputCol = "features", useStopWordsRemover=True, useIDF=True, minDocFreq=5, numFeatures=2**16) columnSelector = SelectColumns(cols=["features","label"]) classifier = TrainClassifier(model = LogisticRegression(), labelCol='label') textPipeline = Pipeline(stages= [textFeaturizer,columnSelector,classifier])

- 29. Easy to get started GitHub Repo: https://github.com/Azure/mmlspark Get started now using Docker image: docker run -it -p 8888:8888 -e ACCEPT_EULA=yes microsoft/mmlspark Navigate to http://localhost:8888 for example Jupyter notebooks Spark package installable for generic Spark 2.1 clusters Script Action installation on Azure HDInsight cluster

![DNN Featurization using MML-Spark

cntkModel = CNTKModel().setInputCol("images").

setOutputCol("features").setModelLocation(resnetModel).

setOutputNode("z.x")

featurizedImages = cntkModel.transform(imagesWithLabels).

select(['labels','features'])

model = TrainClassifier(model=LogisticRegression(),labelCol="labels").

fit(featurizedImages)

The DNN featurization is incorporated as SparkML pipeline stage. The evaluation

happens directly on JVM from Scala: no Python UDF overhead!](https://image.slidesharecdn.com/144astalaragunathan-170616132849/85/Data-Science-and-Deep-Learning-on-Spark-with-1-10th-of-the-Code-with-Roope-Astala-and-Sudarshan-Ragunathan-23-320.jpg)

![Example: Text analytics for document

classification by sentiment

from pyspark.ml import Pipeline

from mmlspark import TextFeaturizer, SelectColumns, TrainClassifier

from pyspark.ml.classification import LogisticRegression

textFeaturizer = TextFeaturizer(inputCol="text", outputCol = "features",

useStopWordsRemover=True, useIDF=True, minDocFreq=5,

numFeatures=2**16)

columnSelector = SelectColumns(cols=["features","label"])

classifier = TrainClassifier(model = LogisticRegression(), labelCol='label')

textPipeline = Pipeline(stages= [textFeaturizer,columnSelector,classifier])](https://image.slidesharecdn.com/144astalaragunathan-170616132849/85/Data-Science-and-Deep-Learning-on-Spark-with-1-10th-of-the-Code-with-Roope-Astala-and-Sudarshan-Ragunathan-28-320.jpg)