Ad

Data Structures and Algorithm Analysis

- 1. Algorithm Analysis Chapter 2 Mary Margarat Valentine

- 2. Objectives This Chapter introduces students to the analysis and design of computer algorithms. Upon completion of this chapter, students will be able to do the following: Analyze the asymptotic performance of algorithms. Demonstrate a familiarity with major algorithms and data structures. Apply important algorithmic design paradigms and methods of analysis. Synthesize efficient algorithms in common engineering design situations. 2

- 3. What is an Algorithm? 3

- 4. What is an Algorithm? Algorithms are the ideas behind computer programs. Algorithm is any well-defined computational procedure that takes some value, or set of values, as input and produces some value, or set of values, as output. Algorithm is thus a sequence of computational steps that transform the input into the output. 4

- 5. What is an Algorithm? Example: Directions to somebody’s house is an algorithm. A recipe for cooking a cake is an algorithm. 5

- 6. What is a Program? A program is a set of instructions which the computer will follow to solve a problem 6

- 7. Introduction Why we need algorithm analysis ? Writing a working program is not good enough. The program may be inefficient! If the program is run on a large data set, then the running time becomes an issue. 7

- 8. What to Analyze ? Correctness Does the input/output relation match algorithm requirement? Amount of work done Basic operations to do task finite amount of time Amount of space used Memory used 8

- 9. What to Analyze ? Simplicity, clarity Verification and implementation. Optimality Is it impossible to do better? 9

- 10. What to Analyze ? Time Complexity Amount of computer time it needs to execute the program to get the intended result. Space Complexity Memory requirements based on the problem size. 10

- 11. 11 Properties of algorithms Input: what the algorithm takes in as input Output: what the algorithm produces as output Definiteness: the steps are defined precisely Correctness: should produce the correct output Finiteness: the steps required should be finite Effectiveness: each step must be able to be performed in a finite amount of time.

- 12. What is DS & Algorithm ? Data Structure is a systematic way of organizing and accessing data. Algorithm is a step-by-step procedure for performing some task in a finite amount of time.

- 13. Types of DS Linear data structures: Array Linked list Stack Queue Non-linear data structures: Graphs Trees

- 14. Linear & nonlinear Data Structures Main difference between linear & nonlinear data structures lie in the way they organize data elements. In linear data structures, data elements are organized sequentially and therefore they are easy to implement in the computer’s memory. In nonlinear data structures, a data element can be attached to several other data elements to represent specific relationships that exist among them. 18

- 15. Linear & nonlinear Data Structures Due to this nonlinear structure, they might be difficult to be implemented in computer’s linear memory compared to implementing linear data structures. Selecting one data structure type over the other should be done carefully by considering the relationship among the data elements that needs to be stored. 19

- 16. Array An array is a collection of homogeneous type of data elements. An array is consisting of a collection of elements . 20

- 17. Stack A Stack is a list of elements in which an element may be inserted or deleted at one end which is known as TOP of the stack. 21

- 18. 22

- 19. STACKS A stack is a restricted linear list in which all additions and deletions are made at one end, the top. If we insert a series of data items into a stack and then remove them, the order of the data is reversed. This reversing attribute is why stacks are known as last in, first out (LIFO) data structures. Figure:-Three representations of stacks

- 20. Applications Data Structures are applied extensively in Operating System, Database Management System, Statistical analysis package, Numerical Analysis Graphics, Artificial Intelligence, Simulation

- 22. Asymptotic Notation (O, , ) 26 Asymptotic notations are the terminology that enables meaningful statements about time & space complexity. To Calculate Running Time of an algorithm

- 23. Asymptotic Notation (O, , ) 27 The time required by the given algorithm falls under three types 1. Best-case time or the minimum time required in executing the program. 2. Average case time or the average time required in executing program. 3. Worst-case time or the maximum time required in executing program.

- 24. Asymptotic Notation (O, , ) 28 Big “oh” (O) upper bound Omega () lower bound Theta () upper and lower bound

- 25. Time Complexity ---- For Loop Statement for(i=0; i <n; i++) Total time=time(stmt1)+time(stmt2)+….+time(stmt n) Note: For declarations, time count is 0 29 n+11 n Total : 1+n+1+n = 2n+2 O(n)

- 26. Time Complexity ---- If-Else Statement if(condition) { stmt 1; } else { stmt2; } 30 Either Stmt1 or Stmt2 will execute. So, Worst case is Max(time(stmt1, stmt2) Max (O(n), O(1)) O(n)

- 27. Nested For loops for(i=0; i <n; i++) { for(i=0; i <n; i++) { Stmts; } } Note: For all the “for loop” statement multiplied by the product. 31 Total : n *n O(n2)

- 28. Tabular Method 32 Iterative function to sum a list of numbers Statement Frequency float sum(float list[ ], int n) { float tempsum = 0; int i; for(i=0; i <n; i++) tempsum += list[i]; return tempsum; } 0 0 1 0 n+1 n 1 0 Total 2n+3 steps/execution

- 29. 33 Statement s/e Frequency Total steps float rsum(float list[ ], int n) { if (n) return rsum(list, n-1)+list[n-1]; return list[0]; } 0 0 0 0 0 0 1 n+1 n+1 1 n n 1 1 1 0 0 0 Total 2n+2 Step count table for recursive summing function

- 30. Examples Examples of Big Oh (O) Notation 34 O(1) Constant O(n) Linear O(n2) Quadratic O(n3) Cubic O(2n) Exponential O(log n) Logarithm

- 31. 35 Analysis of Algorithms An algorithm is a finite set of precise instructions for performing a computation or for solving a problem. What is the goal of analysis of algorithms? To compare algorithms mainly in terms of running time but also in terms of other factors (e.g., memory requirements, programmer's effort etc.) What do we mean by running time analysis? Determine how running time increases as the size of the problem increases.

- 32. 36 How do we compare algorithms? We need to define a number of objective measures. (1) Compare execution times? Not good: times are specific to a particular computer !! (2) Count the number of statements executed? Not good: number of statements vary with the programming language as well as the style of the individual programmer.

- 33. 37 Ideal Solution Express running time as a function of the input size n (i.e., f(n)). Compare different functions corresponding to running times. Such an analysis is independent of machine time, programming style, etc.

- 34. Asymptotic Notation (O, , ) 39 Big “oh” (O) upper bound Omega () lower bound Theta () upper and lower bound

- 35. 40 Asymptotic Notation O notation: asymptotic “less than”: f(n)=O(g(n)) implies: f(n) “≤” g(n) notation: asymptotic “greater than”: f(n)= (g(n)) implies: f(n) “≥” g(n) notation: asymptotic “equality”: f(n)= (g(n)) implies: f(n) “=” g(n)

- 36. Big Oh- Notation Denoted by “O”. Using this we can compute the max possible amount of time that an algorithm will take for its application. Consider f(n) and g(n) to be two positive function of “n” where the “n” is the input size. f(n) = Og(n) iff f(n)<=c.g(n) C>0 ; n0<n 41

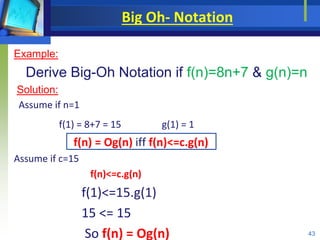

- 38. Big Oh- Notation Example: Derive Big-Oh Notation if f(n)=8n+7 & g(n)=n Solution: Assume if n=1 f(1) = 8+7 = 15 g(1) = 1 f(n) = Og(n) iff f(n)<=c.g(n) Assume if c=15 f(n)<=c.g(n) f(1)<=15.g(1) 15 <= 15 So f(n) = Og(n) 43

- 39. Big Oh- Notation Step- II Assume if n=2 f(2) = 16+7 = 23 g(2) = 2 f(2)<=15.g(2) 23 <= 30 So f(n) = Og(n) 44

- 40. Big Oh- Notation Step- III Assume if n=3 f(3) = 24+7 = 31 g(3) = 3 f(2)<=15.g(2) 31 <= 45 So f(n) = Og(n) Conclusion: f(n)<=c.g(n); for c=15 & n0 = 1 45

- 41. Omega Notation Denoted by “”. Using this we can compute the minimum amount of time that an algorithm will take for its computation. Consider f(n) and g(n) to be two positive function of “n” where the “n” is the input size. f(n) = g(n) iff f(n)>=c.g(n) C>0 ; n0<n 46

- 42. 47 Asymptotic notations (cont.) - notation

- 43. Theta Notation Denoted by “”. Using this we can compute the average amount of time that an algorithm will take for its computation. f(n) = g(n) iff c1.g(n) <= f(n) <=c2.g(n) 48

- 45. University Questions – Ch 1 & 2 Distinguish between datatype and data structure. Define O notation. What is recursive function? State its advantages What are linear and non-linear Data Structures What are Asymptotic Notation Why is it necessary to analyze an algorithm What is Data Structure and Abstract Data Type? Explain the Asymptotic notation to measure the time complexity of an algorithm? 50

- 46. University Questions – Ch 1 & 2 What is Recursion? Give disadvantages of recursion. Write a program to implement Towers of Hanoi .10 M Explain Asymptotic Notations and write the properties of asymptotic notations 10 M 51

![Tabular Method

32

Iterative function to sum a list of numbers

Statement Frequency

float sum(float list[ ], int n)

{

float tempsum = 0;

int i;

for(i=0; i <n; i++)

tempsum += list[i];

return tempsum;

}

0

0

1

0

n+1

n

1

0

Total 2n+3

steps/execution](https://image.slidesharecdn.com/dsaach2algorithmanalysis-200108114844/85/Data-Structures-and-Algorithm-Analysis-28-320.jpg)

![33

Statement s/e Frequency Total steps

float rsum(float list[ ], int n)

{

if (n)

return rsum(list, n-1)+list[n-1];

return list[0];

}

0 0 0

0 0 0

1 n+1 n+1

1 n n

1 1 1

0 0 0

Total 2n+2

Step count table for recursive summing function](https://image.slidesharecdn.com/dsaach2algorithmanalysis-200108114844/85/Data-Structures-and-Algorithm-Analysis-29-320.jpg)

![Data Structures - Lecture 7 [Linked List]](https://cdn.slidesharecdn.com/ss_thumbnails/lecture-7linkedlists-150121011916-conversion-gate02-thumbnail.jpg?width=560&fit=bounds)