DEBS 2011: Pattern Rewriting for Event Processing Optimization

- 1. Pattern Rewriting Framework for Event Processing Optimization
  - Ella Rabinovich, Opher Etzion, Avigdor Gal

- 2. Motivation
  - Previous studies indicate that there is a major performance degradation as application complexity increases.
  - Goal: optimize complex scenarios.
  - References:
    - Adi A., Etzion O. Amit - the situation manager. The VLDB Journal: The International Journal on Very Large Data Bases, Volume 13, Issue 2, 2004.
    - Mendes M., Bizarro P., Marques P. Benchmarking event processing systems: current state and future directions. WOSP/SIPEW 2010: 259-260.

- 3. Optimization tools
  - Blackbox optimizations: distribution, parallelism, scheduling, load balancing, load shedding
  - Whitebox optimizations: implementation selection, implementation optimization, pattern rewriting (our focus)

- 4. An example of a complex scenario
  - A process has 16 steps (E1, E2, E3, ..., E15, E16) that have to be executed in a predefined order; termination of each step creates an event with a status code (SC).
  - The process is reported as committed when the 16 steps have completed in the correct order (sequence pattern) and the pattern assertion is satisfied.
  - The assertion may look like: E1.SC == E2.SC OR E3.SC < 4
  - For this scenario we achieved a more than tenfold decrease in latency, or a more than 20% increase in throughput.

- 5. Pattern Rewriting Approach
  - The goal: create an equivalent pattern that provides better performance.
  - Rewriting techniques exist in other domains, such as rule systems and SQL queries.
  - Due to the inherent complexity of event processing patterns, there are some unique challenges.
  - Subsumption of common logic: seq(E1,E2,E3,E4) and seq(E1,E2,E3,E5,E6) are rewritten as seq(E1,E2,E3), which produces the derived event DE, followed by seq(DE,E4) and seq(DE,E5,E6).
  - Splitting for parallel execution: all(E1,E2,E3,E4) is rewritten as all(E1,E2), producing DE1, and all(E3,E4), producing DE2, followed by all(DE1,DE2).

- 6. Challenges: Assertion Split
  - A pattern assertion (PA) is a predicate that an event collection needs to satisfy for the pattern to be matched.
  - The direct connection of the two patterns implies an “AND” operator between PA’ and PA’’.
    - Example: seq(E1,E2,E3) with PA is rewritten as seq(E1,E2) with PA’, producing DE, followed by seq(DE,E3) with PA’’.
    - Pattern assertion: E1.SC == E2.SC OR E3.SC < 4; candidate parts: E1.SC == E2.SC and E3.SC < 4.
  - The assertion should be separable in terms of its variables.
    - Example: seq(E1,E2,E3) with PA is rewritten as seq(E1,E3) with PA’, producing DE, followed by seq(DE,E2) with PA’’.
    - Pattern assertion: E1.SC == E3.SC AND E2.SC = 0; candidate parts: E1.SC == E3.SC and E2.SC = 0.

- 7. Assertion Split – Solution
  - Convert the pattern assertion expression into conjunctive normal form (CNF).
    - Original: (E1.SC > E2.SC) AND (E4.SC > E5.SC) AND NOT ((E5.SC==E6.SC) AND (E3.SC==77))
    - CNF: (E1.SC > E2.SC) AND (E4.SC > E5.SC) AND (NOT(E5.SC==E6.SC) OR NOT(E3.SC==77))
  - Identify independent sub-groups of participants by generating the dependency graph of the assertion variables (E1, ..., E6).
  - The maximal number of independent partitions implies the finest granulation of the assertion expression.
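
The partitioning step can be sketched in a few lines of Python. This is only an illustration, not the authors' implementation: the clause encoding (each CNF clause as a list of literals, each literal as the set of events it reads) and the function name `independent_groups` are assumptions made for this sketch. It links every pair of variables that share a literal or an OR clause and returns the connected components of the resulting dependency graph.

```python
# CNF assertion from the slide. Encoding is hypothetical: one list per OR clause,
# one set of event names per literal.
cnf = [
    [{"E1", "E2"}],           # (E1.SC > E2.SC)
    [{"E4", "E5"}],           # (E4.SC > E5.SC)
    [{"E5", "E6"}, {"E3"}],   # (NOT(E5.SC==E6.SC) OR NOT(E3.SC==77))
]


def independent_groups(clauses):
    """Return the connected components of the variable dependency graph."""
    parent = {}

    def find(x):
        # Union-find lookup with path halving.
        parent.setdefault(x, x)
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    def union(a, b):
        parent[find(a)] = find(b)

    for clause in clauses:
        # Variables in the same literal or the same OR clause depend on each other.
        variables = sorted(set().union(*clause))
        find(variables[0])
        for other in variables[1:]:
            union(variables[0], other)

    groups = {}
    for variable in parent:
        groups.setdefault(find(variable), set()).add(variable)
    return sorted(sorted(group) for group in groups.values())


print(independent_groups(cnf))
# → [['E1', 'E2'], ['E3', 'E4', 'E5', 'E6']]
```

E5 appears in both the second and the third clause, so those two clauses end up in the same component and the assertion splits into two independent parts rather than three.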

- 8. Pattern Matching - Policies
  - Pattern: seq(PG, ATM-W) within 10 minutes
  - Instance selection policy (events: PG1, PG2, ATM-W1)
  - Cardinality policy (events: PG1, PG2, ATM-W1, ATM-W2): after the first detection, is there an additional detection?
  - Consumption policy (events: PG1, PG2, ATM-W1, ATM-W2): after the first detection, are instances consumed?

- 9. Challenges: Policies Mapping
  - A naïve pattern split that keeps the original policies in the rewritten version will result in incorrect matching.
  - Original: seq(E1,E2,E3) {single, last, …}
  - Rewritten: seq(E1,E2) {single, last, …} followed by seq(DE,E3) {single, last, …}
  - Example events (shown for both versions, with their detection points): e1.1, e2.1 (blood pressure measure), e2.2 (blood pressure measure), e3.1

- 10. Policies Mapping – Solution
  - Mapping of policies in the rewritten alternative (f2’ + f2’’), based on the original pattern (f1), where f1 is seq(E1,E2,E3), f2’ is seq(E1,E2) and f2’’ is seq(DE,E3).
  - Single cardinality:

    | policy | original (f1) | rewritten (f2’) | rewritten (f2’’) |
    |---|---|---|---|
    | Cardinality | single | unrestricted | single |
    | Instance selection | last | last | last |
    | Consumption | - | reuse | - |

  - Unrestricted cardinality (+ pattern assertion extensions):

    | policy | original (f1) | rewritten (f2’) | rewritten (f2’’) |
    |---|---|---|---|
    | Cardinality | unrestricted | unrestricted | unrestricted |
    | Instance selection | last | each | last |
    | Consumption | consume | reuse | consume |

- 11. Equivalence assurance
  - Denotational semantics approach: an event processing pattern is a function (f) mapping the pattern’s input (participant set, PS) into its output (matching set, MS).
  - We formally demonstrate that for the same PS both alternatives produce the identical MS: f1(PS, …) == f2’( (f2’’(PS’, …) PS’’) , …)
  - Original: seq(E1, …, EN) with PA, Policies
  - Rewritten: seq(E1, …, EK) with PA’, Policies’ followed by seq(DE, EK+1, …, EN) with PA’’, Policies’’

- 12. Throughput vs. Latency Tradeoff
  - Pattern throughput is the average rate of events the pattern can process.
  - Detecting event latency is the delay between the last input event that causes a pattern detection and the detection itself, which results in the derivation of an output event.
  - Example: seq(E1,E2,E3) produces derived event DE
    - Detecting event latency = DE.detection_time - E3.detection_time
    - DE.detection_time: time DE was detected by the system
    - E3.detection_time: time E3 arrived at the system
  - Lazy evaluation: seq(E1, …, EN)
  - Eager evaluation: seq(E1, …, EN-2) followed by seq(DE, EN-1, EN)
  - Each alternative is annotated with its throughput and latency.

- 13. Bi-objective Performance Optimization
  - Define a bi-objective performance function.
  - Assign a scalar weight to each objective to be optimized:
    - weight a to the detecting event latency (lt)
    - complementary weight (1 - a) to pattern throughput (th)
  - Minimize a goal function of the form: g = a * lt + C * (1 - a) * (1 / th)
  - Use a simulation-based approach to select the optimal rewriting alternative (the one minimizing the goal function g).
  - For a set of rewriting alternatives A = {A1, …, AK}, find the Ai that minimizes g (argmin).
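
As a rough illustration of the selection step, the sketch below evaluates the goal function for each rewriting alternative and picks the minimum. It is not the authors' simulator: the measurements are read from the results table on slide 14 (assuming the column order rewriting, throughput, latency), the weight is called `a` as in the speaker notes, and the scaling constant `C = 10000` as well as the function names are hypothetical choices made only for this example.

```python
# (rewriting, throughput in events/s, latency in ms), as read from slide 14.
results = [
    ("0:8", 140, 260), ("1:7", 110, 189), ("2:6", 142, 174), ("3:5", 155, 147),
    ("4:4", 165, 95), ("5:3", 172, 63), ("6:2", 163, 32), ("7:1", 95, 15),
]


def goal(a, latency_ms, throughput, c=10_000.0):
    """g = a * lt + C * (1 - a) * (1 / th); c is a hypothetical scaling constant."""
    return a * latency_ms + c * (1.0 - a) * (1.0 / throughput)


def best_rewriting(a, c=10_000.0):
    """Return the label of the alternative that minimizes the goal function."""
    return min(results, key=lambda r: goal(a, r[2], r[1], c))[0]


for a in (0.0, 0.5, 1.0):
    print(a, best_rewriting(a))
```

With `a = 0` only throughput matters and the 5:3 split is selected; with `a = 1` only latency matters and the 7:1 split is selected. Intermediate choices depend on the value assumed for `C`.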

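- 14. Experimental Results
  - Simulation results for seq(E1, …, E16), split into pairs. Rows run from lazy evaluation (row 1) to eager evaluation (row 8).

    | # | rewriting | throughput (event/s) | detected event latency (ms) |
    |---|---|---|---|
    | 1 | 0 : 8 | 140 | 260 |
    | 2 | 1 : 7 | 110 | 189 |
    | 3 | 2 : 6 | 142 | 174 |
    | 4 | 3 : 5 | 155 | 147 |
    | 5 | 4 : 4 | 165 | 95 |
    | 6 | 5 : 3 | 172 | 63 |
    | 7 | 6 : 2 | 163 | 32 |
    | 8 | 7 : 1 | 95 | 15 |

  - Slide annotations: the Pareto frontier, min latency, max throughput, the base pattern, not in the Pareto frontier, rewritten pattern.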
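
The Pareto frontier mentioned on this slide can be recomputed from the table with a short Python sketch. The reading of the flattened table (rewriting, throughput, latency per row) and the helper names `dominates` and `pareto_frontier` are assumptions of this example; an alternative is kept when no other alternative is at least as good on both objectives and strictly better on one.

```python
# (rewriting, throughput in events/s, latency in ms), as read from the table.
results = [
    ("0:8", 140, 260), ("1:7", 110, 189), ("2:6", 142, 174), ("3:5", 155, 147),
    ("4:4", 165, 95), ("5:3", 172, 63), ("6:2", 163, 32), ("7:1", 95, 15),
]


def dominates(p, q):
    """True if p has throughput >= q and latency <= q, and is strictly better on one."""
    no_worse = p[1] >= q[1] and p[2] <= q[2]
    strictly_better = p[1] > q[1] or p[2] < q[2]
    return no_worse and strictly_better


def pareto_frontier(points):
    """Keep the points that no other point dominates."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


print([p[0] for p in pareto_frontier(results)])
# → ['5:3', '6:2', '7:1']
```

Under this reading, the 5:3 split gives the maximum throughput, the 7:1 split gives the minimum latency, and the unsplit 0:8 pattern is dominated.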

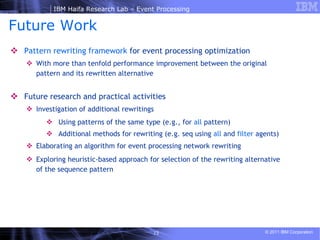

- 15. Future Work
  - Pattern rewriting framework for event processing optimization, with more than tenfold performance improvement between the original pattern and its rewritten alternative.
  - Future research and practical activities:
    - Investigation of additional rewritings
      - Using patterns of the same type (e.g., for the all pattern)
      - Additional methods for rewriting (e.g., seq using all and filter agents)
    - Elaborating an algorithm for event processing network rewriting
    - Exploring a heuristic-based approach for selecting the rewriting alternative of the sequence pattern

Editor's Notes

- #3: That was a bit of background on event processing; now let's explain the motivation for this optimization work. Performance studies of event processing systems demonstrate that there is significant performance degradation as an application’s complexity increases. On the left we can see that there is a major decrease in the system’s throughput as we make our scenario more complex: starting with simple filtering, going through aggregations, and ending with pattern detection, which has the worst performance with respect to system throughput. On the right we can see another example of a system performing well on standby world (...) but running into worse throughput (the upper one) and latency (the bottom one) as scenario complexity increases.

- #6: In this work we define a framework for pattern rewriting, in order to obtain an optimized yet logically equivalent alternative. The rewriting capability exists in most declarative languages, and we’ve explored using this technique for event processing patterns. What can such a rewriting be used for? We can see two examples here. The first one extracts a piece of common logic from two patterns, reusing its result twice and saving CPU time for the whole system. The second example demonstrates how a pattern of type all (a conjunction of several events where the order is not important) can be rewritten into three all patterns, such that the first two can be executed in parallel when we are running in a distributed environment or on a multi-core platform. Our basic technique is splitting a single pattern into two patterns of the same type, where the first one produces a derived event which in turn is used as an input to the second one. The split of a pattern into two (more or less independent) parts gives rise to some challenges, and I will go into detail on the next couple of slides.

- #7: As specified here, a pattern assertion is the condition events need to meet in order to satisfy the pattern. As an example, in the speculative customer scenario we are looking for six events, in this order, where each pair indicates buy and sell transactions of the same stock. So the pattern assertion will have this form … When splitting the single pattern with six participants into two consecutive patterns with, let’s say, two and four participants respectively, we also need to split the pattern assertion, which originally refers to all six participants in a single statement. The most intuitive thing to do is to separate the assertion into two parts, where the first one refers to the participants of the first pattern and the other to those of the second pattern. The intuition is pretty much correct; however, we need to make sure that the assertion after the split satisfies two requirements: (1) both parts of the split condition are connected by the “AND” operator, due to the nature of this connection (both assertion parts will necessarily be satisfied by the final detection), and (2) the assertion split generates two parts that are independent in terms of participants (we cannot have the same participant on both the left and the right side of the split). These two requirements make the pattern assertion split a non-trivial task, and an informal algorithm for it is presented on the next slide…

- #8: First we convert the pattern assertion, which is an expression with mathematical and logical operators, into CNF, in order to generate an expression with sub-expressions connected by “AND”, which is required by the split. We can see an example here: the original expression is not in CNF and this one is, since NOT now appears before a literal and not before a complex clause. Now we can visually separate the assertion into three parts; however, it’s still incorrect, because we have the event type E appearing both in this clause and in this one as well, which creates a dependency between these two clauses. In order to go ahead and identify independent components we need to analyze the dependencies between the clauses of the rewritten assertion, and this can be done by generating a variable dependency graph, as demonstrated. Two variables are connected if they appear in the same literal or if they appear in the same OR clause. The mutually unconnected components of such a graph imply the finest granulation of the pattern assertion, and in turn the maximal number of interconnected sub-patterns in the rewritten version. Note that the presented technique is valid for both all and sequence patterns; however, for the sequence pattern it is only valid if the order of events is preserved after the assertion split, since the occurrence time is of importance.

- #10: Pattern policies, which we mentioned at the beginning of this presentation, pose another challenge for rewriting. A naïve pattern split preserving the original policies does not provide a sufficient solution, as illustrated on this slide - … The reason for the incorrect matching is that the rewritten pattern version, with two separate parts, lost the synchronization that existed inherently in the original version, which was one single piece. The solution to this anomaly is to modify the pattern policies of the rewritten version (of its left part, actually), so that it works harder but produces sufficient input for the right-hand pattern, which eventually compensates for the lack of synchronization caused by the split. In this example our intuition about the first pattern in the rewritten version is that it should report matching sets in an unrestricted manner and not just a single one, in order to finally report the one that is actually the last one before the entire detection. Since we would like to get the entire picture on this last and correct report, we should keep instances and not consume them on particular detections before the critical last one. The next slide demonstrates a policies mapping between the original and the rewritten version that leads to the correct detection and the desired solution from our perspective.

- #11: The upper table demonstrates the mapping done for the rewriting we talked about on the previous slide. While this example is quite a simple one (due to the single cardinality policy), the bottom table demonstrates the mapping of a more complex case, where we report matching sets in an unrestricted manner, i.e. multiple times within a single time window. As you can see, the last instance selection policy is not sufficient any more; we need to use “each” instead, and consumption switches to reuse as in the previous case. Due to the multiple reports, the reuse policy used here raises a problem, since we don’t need to keep instances in the left pattern of the rewritten version forever, but only until they are consumed by the final detection. The extended pattern assertion assures, among other things, that instances that participated in the final matching set are not reported any more. The policies mapping and pattern assertion here are a schematic solution; there can be several implementations following this schema.

- #12: We’ve supported our intuition about rewriting correctness with a formal proof, where we formally defined pattern types (sequence, all) and the patterns’ split, and demonstrated with formal statements that the original alternative is equivalent to the rewritten one. Using denotational semantics we show that for the same input the original and the rewritten pattern forms produce identical output. Once we knew how to split sequence and all patterns, we leveraged this technique to balance different performance objectives of the sequence pattern, as demonstrated on the next slide.

- #13: Pattern throughput is … Pattern latency is… We can see that in the blue alternative we gain pretty good throughput, since we only do work on the arrival of EN instances. All the rest are pushed into the pattern’s internal state, which makes the throughput of this original pattern very high. However, this high throughput comes at the cost of latency, since the amount of work we have to perform on the arrival of an EN instance is large. To summarize, the left alternative provides good throughput but quite poor latency. The green rewriting, where as much weight as possible is on the left side and only the necessary remainder is on the right side, provides much better latency, since we perform as much preprocessing as possible in advance; the DE represents the result of this preprocessing, and only a small delta is executed here upon the arrival of an EN instance. This rewriting provides good latency along with poor throughput; the latter is caused by the hard work this pattern is required to perform, including the generation of the derived events and their transmission. It’s easy to notice that the throughput versus latency tradeoff of the sequence pattern mirrors the eager versus lazy evaluation of the pattern, similar to the different evaluation modes of database queries.

- #14: Once we know how throughput and latency are affected by different rewritings, the event processing application developer can configure their preference regarding the balance between these two. We can assign weight a to pattern latency and complementary weight (1-a) to pattern throughput, and find the rewriting alternative minimizing our combined goal function g using a simulation-based approach. Experimental results are presented on the next slide.

- #15: On this slide we demonstrate the results of the basic experiment, where a sequence pattern with 8 participants was split in 3 different manners. For example, in the second line two participants (a pair) were left on this side and six participants were moved to the right side; this is denoted by 1:3. The last rewriting left as few participants as possible on the right side (a pair), and all the rest are on the left side; this is 3:1. Not surprisingly, the first line demonstrates the best throughput and the worst latency, and the last line the opposite: worst throughput and best latency. You can see that the second line in this table is dominated by the third line in terms of both throughput and latency, so the remaining three results actually form a set of Pareto-optimal solutions. The second table shows values of the g function based on the values assigned to a in the goal function. We can see that, following the previous observation regarding the second line, it is never chosen as an optimal solution.

- #16: We believe that this work leaves much room for further exploratory and practical activities. Among our future plans are …
