Deep learning for detecting anomalies and software vulnerabilities

- 1. 17/1/17 1 Source: rdn consulting Hanoi, Jan 17th 2017 Trần Thế Truyền Deakin University @truyenoz prada-research.net/~truyen [email protected] letdataspeak.blogspot.com goo.gl/3jJ1O0 DEEP LEARNING FOR DETECTING ANOMALIES AND SOFTWARE VULNERABILITIES tranthetruyen

- 2. REAL-WORLD FAILURE OF ANOMALY DETECTION London, July 7, 2005 17/1/17 2

- 3. Real world - what are the operators monitoring?17/1/17 3

- 4. PRADA @ DEAKIN, MELBOURNE 17/1/17 4

- 5. OUR APPROACH TO SECURITY: (DEEP) MACHINE LEARNING Usual detection of attacks are based on profiling and human skills But attacking tactics change overtime, creating zero-day attacks Systems are very complex now, and no humans can cover all à It is best to use machine to learn continuously and automatically. à Humans can provide feedbacks for the machine to correct itself. à Deep learning is on the rise. For now: It is best for human and machine to co-operate. 17/1/17 5

- 6. SOLVING REAL WORLD PROBLEMS IS REWARDING 17/1/17 6 Startups Contracts

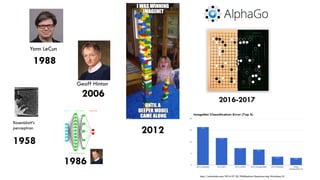

- 7. AGENDA Part I: Introduction to deep learning A brief history Top 3 architectures Unsupervised learning Part II: Anomaly detection Part III: Software vulnerabilities 17/1/17 7

- 9. DEEP LEARNING IS SUPER HOT 17/1/17 9 “deep learning” + data “deep learning” + intelligence Dec, 2016

- 10. 2016 DEEP LEARNING IS NEURAL NETS, BUT … http://blog.refu.co/wp-content/uploads/2009/05/mlp.png 1986 17/1/17 10

- 11. TWO LEARNING PARADIGMS Supervised learning (mostly machine) A à B Unsupervised learning (mostly human) Will be quickly solved for “easy” problems (Andrew Ng) 17/1/17 11

- 12. KEY IN MACHINE LEARNING: FEATURE ENGINEERING In typical machine learning projects, 80-90% effort is on feature engineering A right feature representation doesn’t need much work. Simple linear methods often work well. Text: BOW, n-gram, POS, topics, stemming, tf-idf, etc. Software: token, LOC, API calls, #loops, developer reputation, team complexity, report readability, discussion length, etc. Try yourself on Kaggle.com! 17/1/17 12

- 13. FEEDFORWARD NETS: FEATURE DETECTION Integrate-and-fire neuron andreykurenkov.com Feature detector Block representation17/1/17 13

- 14. RECURRENT NEURAL NETWORKS: TEMPORAL DYNAMICS Classification Image captioning Sentence classification Neural machine translation Sequence labelling Source: http://karpathy.github.io/assets/rnn/diags.jpeg 17/1/17 14

- 15. CONVOLUTIONAL NETS: MOTIF DETECTION adeshpande3.github.io 17/1/17 15

- 16. 17/1/17 16 Slide from Yann LeCun

- 17. UNSUPERVISED LEARNING 17/1/17 17 Photo credit: Brandon/Flickr

- 18. WE WILL BRIEFLY COVER Word embedding Deep autoencoder RBM à DBN à DBM Generative Adversarial Net (GAN) 17/1/17 18

- 19. WORD EMBEDDING (Mikolov et al, 2013)

- 20. DEEP AUTOENCODER – SELF RECONSTRUCTION OF DATA 17/1/17 20 Auto-encoderFeature detector Representation Raw data Reconstruction Deep Auto-encoder Encoder Decoder

- 21. GENERATIVE MODELS 17/1/17 21 Many applications: • Text to speech • Simulate data that are hard to obtain/share in real life (e.g., healthcare) • Generate meaningful sentences conditioned on some input (foreign language, image, video) • Semi-supervised learning • Planning

- 22. A FAMILY: RBM à DBN à DBM 17/1/17 22 energy Restricted Boltzmann Machine (~1994, 2001) Deep Belief Net (2006) Deep Boltzmann Machine (2009)

- 23. GAN: GENERATIVE ADVERSARIAL NETS (GOODFELLOW ET AL, 2014) Yann LeCun: GAN is one of best idea in past 10 years! Instead of modeling the entire distribution of data, learns to map ANY random distribution into the region of data, so that there is no discriminator that can distinguish sampled data from real data. Any random distribution in any space Binary discriminator, usually a neural classifier Neural net that maps z à x

- 24. GAN: GENERATED SAMPLES The best quality pictures generated thus far! 17/1/17 24 Real Generated http://kvfrans.com/generative-adversial-networks-explained/

- 25. DEEP LEARNING IN COGNITIVE DOMAINS 17/1/17 25 http://media.npr.org/ http://cdn.cultofmac.com/ Where human can recognise, act or answer accurately within seconds blogs.technet.microsoft.com

- 26. dbta.com DEEP LEARNING IN NON-COGNITIVE DOMAINS Where humans need extensive training to do well Domains that demand transparency & interpretability. … healthcare … security … genetics, foods, water … 17/1/17 26 TEKsystems

- 27. END OF PART I 17/1/17 27

- 28. ANOMALY DETECTION USING UNSUPERVISED LEARNING 17/1/17 28 dbta.com This work is partially supported by the Telstra-Deakin Centre of Excellence in Big Data and Machine Learning

- 29. AGENDA Part I: Introduction to deep learning Part II: Anomaly detection Multichannel Unusual mixed-data co-occurrence Object lifetime model Part III: Software vulnerabilities 17/1/17 29

- 30. BUT – we cannot define anomaly apriori Strategy: learn normality, anything does not fit in is abnormal 17/1/17 30

- 31. PROJECT: DISCOVERY IN TELSTRA SECURITY OPERATIONS We use smart people and smart tools to discover unknown malicious or risky behaviour to inform and protect Telstra and its customers. Discovery workflow:

- 32. SOFTWARE: SNAPSHOT A) Anomaly detection systems B) The main screen showing the residual signal, and the threshold for anomaly detection C) Top anomalies D) Event details for selected anomaly

- 33. MULTICHANNEL FRAMEWORK Detect common anomalous events that happen across multiple information channels 1. Cross-channel Autoencoder (CC-AE) General framework channel 1 channel N anomaly detection anomaly detection … … anomalies 1 anomalies N anomaly detection common cross- channel anomalies

- 34. DETECTION METHOD: AUTOENCODER 17/1/17 34 External outlier detector? Reconstruction error

- 35. METHOD: CROSS-CHANNEL AUTOENCODER 1. Single channel anomaly detection For each channel, model the data with an autoencoder Determine the anomalies by analysing the reconstruction errors 2. Augmenting the reconstruction errors Augment the reconstruction errors across channels Model the reconstruction errors with an autoencoder 3. Cross-channel anomaly detection Determine the cross-channel anomalies by analysing the reconstruction errors

- 36. RESULTS 1 – NEWS DATA A channel is defined to be the stream of articles about a specific topic published by a news agency, e.g. economy-related articles from BBC 3 news agencies: BBC, Reuters, and CNN 9 predefined topics: politics, sports, health, entertainment, world-news, technology, and Asian news Free-form text data Feature extraction: Bag of words representation Anomaly injection: Breastfeeding articles

- 37. RESULTS 1 – NEWS DATA

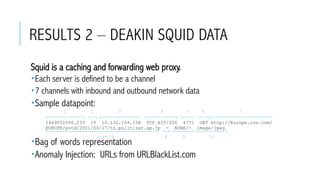

- 38. RESULTS 2 – DEAKIN SQUID DATA Squid is a caching and forwarding web proxy. Each server is defined to be a channel 7 channels with inbound and outbound network data Sample datapoint: Bag of words representation Anomaly Injection: URLs from URLBlackList.com 1469032590.233 19 10.132.169.158 TCP_HIT/200 4771 GET http://Europe.cnn.com/ EUROPE/potd/2001/04/17/tz.pulltizer.ap.jp - NONE/- image/jpeg 1 32 4 5 6 7 7 cont’d 8 9 10

- 39. RESULTS 2 – DEAKIN SQUID DATA

- 40. AGENDA Part I: Introduction to deep learning Part II: Anomaly detection Multichannel Unusual mixed-data co-occurrence Object lifetime model Part III: Software vulnerabilities 17/1/17 40

- 42. ENERGY-BASED METHOD 17/1/17 42 Restricted Boltzmann Machine Detection threshold Fee energy surface

- 43. MIXED-VARIATE RBM (TRAN ET AL, 2011) 17/1/17 43 þ ý þ ý ¤ ¡ ¡ ¤ ¡ ¡ 1 2 3

- 44. RESULTS OVER REAL DATASETS 44

- 45. ABNORMALITY ACROSS ABSTRACTIONS 17/1/17 45 F1(x1) F2(x2) Rank 1 Rank 2 F3(x3) Rank 3 Rank aggregation Mv.RBM Mv.DBN-L2 Mv.DBN-L3 WA1 WA1 WA2 WD1 WD2 WD3

- 46. 17/1/17 46

- 47. AGENDA Part I: Introduction to deep learning Part II: Anomaly detection Multichannel Unusual mixed-data co-occurrence Object lifetime model Part III: Software vulnerabilities 17/1/17 47

- 48. OBJECT LIFETIME MODELS Objects with a life User Devices Account Detect unusual behavior at a given time given object’s history I.e., (Low) conditional probability of next event/action/observation given the history Two properties: Irregular time by internal activities Intervention by external agents 17/1/17 48

- 49. DEEPEVOLVE: A MODEL OF EVOLVING BODY States are a dynamic memory process → LSTM moderated by time and intervention Discrete observations → vector embedding Time and previous intervention → “forgetting” of old states Current intervention → controlling the current states 17/1/17 49

- 50. DEEPEVOLVE: DYNAMICS 17/1/17 50 memory * input gate forget gate prev. memory output gate * * input aggregation over time → prediction previous intervention history states current data time gap current intervention current state New in DeepEvolve

- 51. END OF PART II 17/1/17 51

- 52. AGENDA Part I: Introduction to deep learning Part II: Anomaly detection Part III: Software vulnerabilities Malicious URL detection Unusual source code Code vulnerabilities 17/1/17 52

- 55. TRADITIONAL METHOD: FEATURE ENGINEERING + CLASSIFIER Protocols Domains, countries IP-based analysis Lexical analysis Query analysis 17/1/17 55 Handling shortening & dynamically-generated queries N-grams Special characters Blacklist

- 56. NEW METHOD: LEARNABLE CONVOLUTION AS FEATURE DETECTOR 17/1/17 56 http://colah.github.io/posts/2015-09-NN-Types-FP/ Learnable kernels andreykurenkov.com Feature detector, often many

- 57. END-TO-END MODEL OF MALICIOUS URLS 17/1/17 57 Safe/Unsafe max-pooling convolution -- motif detection Embedding (may be one-hot) Prediction with FFN 1 2 3 4 record vector char vector h t t p : / / w w w . s Train on 900K malicious URLs 1,000K good URLs Accuracy: 96% No feature engineering!

- 58. AGENDA Part I: Introduction to deep learning Part II: Anomaly detection Part III: Software vulnerabilities Malicious URL detection Unusual source code Code vulnerabilities 17/1/17 58

- 59. 17/1/17 59http://www.bentoaktechnologies.com/Images/code_scrn.jpg SOFTWARE ANALYTICS DATA-DRIVEN SOFTWARE ENGINEERING PROJECT: SAMSUNG GLOBAL REACH

- 60. MOTIVATIONS Software is eating the world. IoT development is exploding. Software security is an extremely critical issue Vulerable source files: 0.3-5%, depending on code review policy & quality ot code. General approach: Machine learning instead of human manual effort and programming heuristics Many software metrics have been found: Bugs, code complexity, churn rate, developer network activity metrics, fault history metrics, Question: can machine learn all of these by itself? 17/1/17 60

- 61. APPROACH: CODE MODELING Open source code is massive Bad coding is often the sign of bugs and security holes Malicious code may be different from the safe code Ideas: A code model assigns probability to a piece of code Given the code context, if conditional probability of a code piece per token is low compared to the rest à unusual code à more likely to contain defects or security vulnerability 17/1/17 61

- 62. A DEEP LANGUAGE MODEL FOR SOFTWARE CODE (DAM ET AL, FSE’16 SE+NL) A good language model for source code would capture the long-term dependencies The model can be used for various prediction tasks, e.g. defect prediction, code duplication, bug localization, etc. 17/1/17 62 Slide by Hoa Khanh Dam

- 63. CHARACTERISTICS OF SOFTWARE CODE Repetitiveness E.g. for (int i = 0; i < n; i++) Localness E.g. for (int size may appear more often that for (int i in some source files. Rich and explicit structural information E.g. nested loops, inheritance hierarchies Long-term dependencies try and catch (in Java) or file open and close are not immediately followed each other. 63 Slide by Hoa Khanh Dam

- 64. A LANGUAGE MODEL FOR SOFTWARE CODE Given a code sequence s= <w1, …, wk>, a language model estimate the probability distribution P(s): 64 Slide by Hoa Khanh Dam

- 65. TRADITIONAL MODEL: N-GRAMS Truncates the history length to n-1 words (usually 2 to 5 in practice) Useful and intuitive in making use of repetitive sequential patterns in code Context limited to a few code elements Not sufficient in complex SE prediction tasks. As we read a piece of code, we understand each code token based on our understanding of previous code tokens, i.e. the information persists. 65 Slide by Hoa Khanh Dam

- 66. NEW METHOD: LONG SHORT-TERM MEMORY (LSTM) 17/1/17 66 ct it ft Input

- 67. CODE LANGUAGE MODEL 67 Previous work has applied RNNs to model software code (White et al, MSR 2015) RNNs however do not capture the long-term dependencies in code Slide by Hoa Khanh Dam

- 68. EXPERIMENTS Built dataset of 10 Java projects: Ant, Batik, Cassandra, Eclipse-E4, Log4J, Lucene, Maven2, Maven3, Xalan-J, and Xerces. Comments and blank lines removed. Each source code file is tokenized to produce a sequence of code tokens. Integers, real numbers, exponential notation, hexadecimal numbers replaced with <num> token, and constant strings replaced with <str> token. Replaced less “popular” tokens with <unk> Code corpus of 6,103,191 code tokens, with a vocabulary of 81,213 unique tokens. 68 Slide by Hoa Khanh Dam

- 69. EXPERIMENTS (CONT.) 69 Both RNN and LSTM improve with more training data (whose size grows with sequence length). LSTM consistently performs better than RNN: 4.7% improvement to 27.2% (varying sequence length), 10.7% to 37.9% (varying embedding size). Slide by Hoa Khanh Dam

- 70. AGENDA Part I: Introduction to deep learning Part II: Anomaly detection Part III: Software vulnerabilities Malicious URL detection Unusual source code Code vulnerabilities 17/1/17 70

- 71. METHOD-1: LD-RNN FOR SEQUENCE CLASSIFICATION (CHOETKIERTIKUL ET AL, WORK IN PROGRESS) LD = Long Deep LSTM for document representation Highway-net with tied parameters for vulnerability score 17/1/17 71 pooling Embed LSTM Vulnerability score W1 W2 W3 W4 W5 W6 Recurrent Highway NetRegression Standardize XD logging to align with document representation h1 h2 h3 h4 h5 h6 …. …. …. ….

- 72. METHOD-2: DEEP SEQUENTIAL MULTI- INSTANCE LEARNING Code file as a bag Methods as instances Data are sequential 17/1/17 72 Headers Method 1 Method 2 Method N Vulnerability level . . .

- 73. COLUMN BUNDLE FOR N-TO-1 MAPPING (PHAM ET AL, WORK IN PROGRESS) 17/1/17 73 Function A Function B output Column representation

- 75. The popular On the rise The black sheep The good old • Representation learning (RBM, DBN, DBM, DDAE) • Ensemble • Back-propagation • Adaptive stochastic gradient • Dropouts & batch-norm • Rectifier linear transforms & skip-connections • Highway nets, LSTM & CNN • Differential Turing machines • Memory, attention & reasoning • Reinforcement learning & planning • Lifelong learning • Group theory (Lie algebra, renormalisation group, spin- class)

- 76. WHY DEEP LEARNING WORKS: PRINCIPLES Expressiveness Can represent the complexity of the world à Feedforward nets are universal function approximator Can compute anything computable à Recurrent nets are Turing-complete Learnability Have mechanism to learn from the training signals à Neural nets are highly trainable Generalizability Work on unseen data à Deep nets systems work in the wild (Self-driving cars, Google Translate/Voice, AlphaGo) 17/1/17 76

- 77. WHEN DEEP LEARNING WORKS Lots of data (e.g., millions) Strong, clean training signals (e.g., when human can provide correct labels – cognitive domains). Andrew Ng of Baidu: When humans do well within sub-second. Data structures are well-defined (e.g., image, speech, NLP, video) Data is compositional (luckily, most data are like this) The more primitive (raw) the data, the more benefit of using deep learning. 17/1/17 77

- 78. BONUS: HOW TO POSITION 17/1/17 78 “[…] the dynamics of the game will evolve. In the long run, the right way of playing football is to position yourself intelligently and to wait for the ball to come to you. You’ll need to run up and down a bit, either to respond to how the play is evolving or to get out of the way of the scrum when it looks like it might flatten you.” (Neil Lawrence, 7/2015, now with Amazon) http://inverseprobability.com/2015/07/12/Thoughts-on-ICML-2015/

- 79. THE ROOM IS WIDE OPEN Architecture engineering Non-cognitive apps Unsupervised learning Graphs Learning while preserving privacy Modelling of domain invariance Better data efficiency Multimodality Learning under adversarial stress Better optimization Going Bayesian http://smerity.com/articles/2016/architectures_are_the_new_feature_engineering.html

![BONUS: HOW TO POSITION

17/1/17 78

“[…] the dynamics of the game will evolve. In the long

run, the right way of playing football is to position yourself

intelligently and to wait for the ball to come to you. You’ll

need to run up and down a bit, either to respond to how

the play is evolving or to get out of the way of the scrum

when it looks like it might flatten you.” (Neil Lawrence,

7/2015, now with Amazon)

http://inverseprobability.com/2015/07/12/Thoughts-on-ICML-2015/](https://image.slidesharecdn.com/deep-anomaly-jan-2017-191027010405/85/Deep-learning-for-detecting-anomalies-and-software-vulnerabilities-78-320.jpg)

![Glary Utilities Pro 5.157.0.183 Crack + Key Download [Latest]](https://cdn.slidesharecdn.com/ss_thumbnails/artificialintelligence17-250529071922-ef6fe98e-thumbnail.jpg?width=560&fit=bounds)