Deep learning presentation

- 4. Figure 1: Subsets of AI (Adapted from: www.edureka.co)

- 5. Figure 2: Some Applications of Artificial Intelligence

- 6. Figure 3: AI Technologies Timeline (Adapted from: www.edureka.co)

- 7. Figure 4: Process Involved in Machine Learning (Adapted from: www.edureka.co)

- 8. Figure 5: Limitation of ML (Source: www.edureka.co)

- 12. Figure 6: Artificial Neural Networks (Adapted from: www.edureka.co)

- 13. (Deng & Yu, 2014).

- 16. Figure 7: Biological and Artificial Neuron (Adapted from: www.edureka.co)

- 20. Figure 8: Pipeline of the general CNN architecture (Source: Guo et al., 2016)

- 23. Figure 11: DBN, DBM and DEM (Source: Guo et al., 2016).

- 24. Figure 12: The pipeline of an autoencoder (Source: Guo et al., 2016).

- 26. (1) Learning Algorithms. Table 1: A categorization of the basic deep NN learning algorithms and related approaches (Source: Guo et al., 2016).

  | CNN | RBM | Autoencoder | Sparse Coding |
  | --- | --- | --- | --- |
  | AlexNet (Krizhevsky et al., 2012) | Deep Belief Net (Hinton et al., 2006) | Sparse Autoencoder (Poultney et al., 2006) | Sparse Coding (Yang et al., 2009) |
  | Clarifai (Zeiler et al., 2014) | Deep Boltzmann Machine (Salakhutdinov et al., 2009) | Denoising Autoencoder (Vincent et al., 2008) | Laplacian Sparse Coding (Gao et al., 2010) |
  | SPP (He et al., 2014) | Deep Energy Models (Ngiam et al., 2011) | Contractive Autoencoder (Rifai et al., 2011) | Local Coordinate Coding (Yu et al., 2009) |
  | VGG (Simonyan et al., 2014) | | | Super-Vector Coding (Zhou et al., 2010) |
  | GoogLeNet (Szegedy et al., 2015) | | | |

- 28. Related Works

  | S/N | Research Focus | Contribution |
  | --- | --- | --- |
  | 1 | To discover a fast and more efficient way of initializing weights for effective learning of low-dimensional codes from high-dimensional data in multi-layer neural networks (Hinton et al., 2006; Salakhutdinov et al., 2009; Vincent et al., 2010; Cho et al., 2011). | Implementation of a novel learning algorithm for initializing weights that allows deep AE networks and deep Boltzmann machines to learn useful higher-level representations. |
  | 2 | To explore the possibility of letting hashing function learning (learning of efficient binary codes that preserve neighborhood structure in the original data space) and feature learning occur simultaneously (Salakhutdinov et al., 2009; Erin et al., 2015; Zhong et al., 2016). | Introduction of state-of-the-art deep hashing, supervised deep hashing and semantic hashing methods for large-scale visual search, image retrieval and text mining. |
  | 3 | To bridge the gap between the success of CNNs for supervised learning and unsupervised learning (Springenberg et al., 2014; Radford et al., 2015). | Introduction of a class of CNNs called deep convolutional generative adversarial networks (DCGANs) for unsupervised learning. |

- 30. Related Works

  | S/N | Research Focus | Contribution |
  | --- | --- | --- |
  | 1 | To mitigate the problem of overfitting in large neural networks with sparse datasets (Zeiler et al., 2013; Srivastava et al., 2014; Pasupa et al., 2016). | Implementation of several regularization techniques such as dropout, stochastic pooling, weight decay and flipped image augmentation, amongst others, for ensuring stability in DNNs. |
  | 2 | To investigate how to automatically rank source CNNs for transfer learning and use transfer learning to improve a Sum-Product Network for probabilistic inference when using sparse datasets (Afridi et al., 2017; Zhao et al., 2017). | Design of a reliable theoretical framework that performs zero-shot ranking of CNNs for transfer learning for a given target task in Sum-Product Networks. |
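Two of the regularization techniques named on this slide, dropout and weight decay, can be sketched in a few lines. This is a minimal illustration in plain Python, assuming the common "inverted dropout" formulation and L2 weight decay folded into an SGD step; the function names and default values are hypothetical and not taken from the cited papers.

```python
import random


def dropout(activations, drop_prob=0.5, training=True, rng=random):
    """Inverted dropout: zero each unit with probability drop_prob while training."""
    if not 0.0 <= drop_prob < 1.0:
        raise ValueError("drop_prob must be in [0, 1)")
    if not training or drop_prob == 0.0:
        # At test time the layer is the identity.
        return list(activations)
    keep_prob = 1.0 - drop_prob
    # Surviving units are scaled by 1 / keep_prob so the expected activation is unchanged.
    return [a / keep_prob if rng.random() < keep_prob else 0.0 for a in activations]


def weight_decay_step(weights, grads, lr=0.01, decay=1e-4):
    """One SGD step with L2 weight decay: w <- w - lr * (g + decay * w)."""
    return [w - lr * (g + decay * w) for w, g in zip(weights, grads)]
```

Dropout is applied only during training; at inference time the activations pass through unchanged, which is why the surviving units are rescaled during training.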

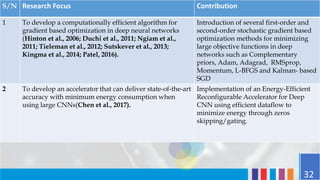

- 32. Related Works

  | S/N | Research Focus | Contribution |
  | --- | --- | --- |
  | 1 | To develop a computationally efficient algorithm for gradient-based optimization in deep neural networks (Hinton et al., 2006; Duchi et al., 2011; Ngiam et al., 2011; Tieleman et al., 2012; Sutskever et al., 2013; Kingma et al., 2014; Patel, 2016). | Introduction of several first-order and second-order stochastic gradient-based optimization methods for minimizing large objective functions in deep networks, such as Complementary priors, Adam, Adagrad, RMSprop, Momentum, L-BFGS and Kalman-based SGD. |
  | 2 | To develop an accelerator that can deliver state-of-the-art accuracy with minimum energy consumption when using large CNNs (Chen et al., 2017). | Implementation of an energy-efficient reconfigurable accelerator for deep CNNs that uses an efficient dataflow to minimize energy through zero skipping/gating. |
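To make one of the listed optimizers concrete, here is a minimal sketch of the Adam update rule (Kingma & Ba, 2014) for a list of scalar parameters. The helper names `init_adam_state` and `adam_step` and the toy objective are assumptions made for illustration; the hyperparameter defaults are the ones commonly quoted for Adam.

```python
import math


def init_adam_state(n):
    """Create the step counter and zeroed first/second moment estimates."""
    return {"t": 0, "m": [0.0] * n, "v": [0.0] * n}


def adam_step(params, grads, state, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """Apply one Adam update to a list of scalar parameters."""
    state["t"] += 1
    t = state["t"]
    new_params = []
    for i, (p, g) in enumerate(zip(params, grads)):
        # Exponential moving averages of the gradient and the squared gradient.
        state["m"][i] = beta1 * state["m"][i] + (1 - beta1) * g
        state["v"][i] = beta2 * state["v"][i] + (1 - beta2) * g * g
        # Bias correction compensates for the zero initialisation.
        m_hat = state["m"][i] / (1 - beta1 ** t)
        v_hat = state["v"][i] / (1 - beta2 ** t)
        new_params.append(p - lr * m_hat / (math.sqrt(v_hat) + eps))
    return new_params


# Usage: minimise the toy objective f(x) = (x - 3)^2, whose gradient is 2 * (x - 3).
x = [0.0]
state = init_adam_state(1)
for _ in range(2000):
    grad = [2.0 * (x[0] - 3.0)]
    x = adam_step(x, grad, state, lr=0.05)
```

Because the update divides by the root of the second-moment estimate, the very first step has magnitude close to `lr` regardless of the gradient scale.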

- 33. 5. Deep Learning Variants

- 34. Related Works

  | S/N | Research Focus | Contribution |
  | --- | --- | --- |
  | 1 | To demonstrate the advantage of combining deep neural networks with support vector machines (Zhong et al., 2000; Nagi et al., 2012; Tang et al., 2013; Li et al., 2017). | Introduction of a novel classifier architecture that combines two heterogeneous supervised classification techniques: a CNN for feature extraction and an SVM for classification. |
  | 2 | To exploit the power of deep neural networks in optimizing the performance of nearest neighbor classifiers in kNN classification tasks (Min et al., 2009; Ren et al., 2014). | Presentation of a framework for learning convolutional nonlinear features for k nearest neighbor (kNN) classification. |

- 35. Application Literature Review. Deep learning has been applied in many ways to solve real-life problems, including: (1) Methodology Application of DL; (2) Domain Application of DL.

- 36. (1) Methodology Application of DL
  - Author and Title: Araque et al. (2017). Enhancing deep learning sentiment analysis with ensemble techniques in social applications.
  - Objective: To improve the performance of sentiment analysis in social applications by integrating deep learning techniques with traditional approaches based on hand-crafted or manually extracted features.
  - Methodology: A word embeddings model and a linear machine learning algorithm were used to develop a deep learning based sentiment classifier (the baseline), together with two ensemble techniques, namely an ensemble of classifiers (CEM) and an ensemble of features (MSG and MGA).
  - Contribution: Development of ensemble models for sentiment analysis whose performance surpasses that of the original baseline classifier.

- 37. Methodology Application of DL (Contd)
  - Author and Title: Betru et al. (2017). A Survey of State-of-the-art: Deep Learning Methods on Recommender System.
  - Objective: To distinguish between the various traditional recommendation techniques and to introduce deep learning collaborative and content-based approaches.
  - Methodology: As pointed out by the authors, the methodology adopted in (Wang, Wang, & Yeung, 2015) integrated a Bayesian Stacked Denoising Autoencoder (SDAE) and Collaborative Topic Regression to perform collaborative deep learning.
  - Contribution: The implementation of a novel collaborative deep learning approach, the first of its kind to learn from review texts and ratings.

- 38. Methodology Application of DL (Contd)
  - Author and Title: Luo et al. (2016). A deep learning approach for credit scoring using credit default swaps.
  - Objective: To implement a novel method that leverages a DBN model for credit scoring in credit default swaps (CDS) markets.
  - Methodology: The researchers compared the results of MLR, MLP and SVM with those of a Deep Belief Network (DBN) built on Restricted Boltzmann Machines, applying 10-fold cross-validation on a dataset.
  - Contribution: An investigation of the performance of DBNs in corporate credit scoring. The results demonstrate that the deep learning algorithm significantly outperforms the baselines.
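The 10-fold cross-validation protocol mentioned in the methodology can be sketched as follows. This is a generic illustration in plain Python, not the authors' experimental code; `k_fold_indices` and `cross_validate` are hypothetical helper names, and `evaluate` stands for any user-supplied function that trains on one index set and scores on the other.

```python
import random


def k_fold_indices(n_samples, k=10, seed=0):
    """Yield (train_idx, test_idx) pairs for k-fold cross-validation."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Fold sizes differ by at most one when n_samples is not divisible by k.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, test_idx
        start += size


def cross_validate(n_samples, evaluate, k=10, seed=0):
    """Average a user-supplied evaluate(train_idx, test_idx) score over k folds."""
    scores = [evaluate(tr, te) for tr, te in k_fold_indices(n_samples, k, seed)]
    return sum(scores) / len(scores)
```

Every sample is used for testing exactly once, so each model being compared is scored on the same held-out splits when the same `seed` is used.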

- 39. Methodology Application of DL (Contd)
  - Author and Title: Grinblat et al. (2016). Deep learning for plant identification using vein morphological patterns.
  - Objective: To eliminate the use of handcrafted feature extractors by proposing a deep convolutional network for the problem of plant identification from leaf vein patterns.
  - Methodology: Three plant species (white bean, red bean and soybean) were classified using a dataset of leaf images and a 6-layer CNN trained with SGD, using mini-batches of 20 samples and 50% dropout for regularization.
  - Contribution: Demonstrated the relevance of deep learning to agriculture, using a CNN as a model for plant identification based on vein morphological patterns.
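The training setup above relies on mini-batch SGD with batches of 20 samples. The sketch below shows only the batching and update mechanics, and it deliberately swaps the 6-layer CNN for a 1-D linear model with squared error so that it stays short; all function names are hypothetical.

```python
import random


def minibatches(samples, batch_size=20, seed=0):
    """Yield shuffled mini-batches; the last batch may be smaller."""
    order = list(range(len(samples)))
    random.Random(seed).shuffle(order)
    for start in range(0, len(order), batch_size):
        yield [samples[i] for i in order[start:start + batch_size]]


def sgd_epoch(w, b, data, lr=0.01, batch_size=20, seed=0):
    """One epoch of mini-batch SGD for the model y = w * x + b with squared error."""
    for batch in minibatches(data, batch_size, seed):
        # Gradients of the mean squared error over the current mini-batch.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in batch) / len(batch)
        grad_b = sum(2 * (w * x + b - y) for x, y in batch) / len(batch)
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b
```

Passing a different `seed` on each epoch reshuffles the data, so the model sees the samples in a different order every pass.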

- 40. Methodology Application of DL (Contd)
  - Author and Title: Evermann et al. (2017). Predicting process behaviour using deep learning.
  - Objective: To develop a novel method of carrying out process prediction with deep learning, without the use of explicit models.
  - Methodology: The approach used the TensorFlow framework, which provides RNN functionality with LSTM cells and can run on high-performance parallel, cluster and GPU platforms.
  - Contribution: An improvement on the state of the art in process prediction, the removal of the need for explicit models, and the inherent advantages of using an artificial intelligence approach.

- 41. Methodology Application of DL (Contd)
  - Author and Title: Kang et al. (2016). A deep-learning-based emergency alert system.
  - Objective: To propose a deep learning emergency alert system that overcomes the limitations of traditional emergency alert systems.
  - Methodology: A heuristic-based machine learning technique was used to generate descriptors for labels in the problem domain, together with an API analyzer that used a convolutional neural network for object detection and parsing to generate compositional models.
  - Contribution: The research shows that the emergency alert system (EAS) can be adapted to monitoring devices other than CCTV.

- 42. (2) Domain Applications of Deep Learning

  | Domain | Deep learning is applied to perform | Topic & Reference |
  | --- | --- | --- |
  | Recommender System | Sentiment analysis/opinion mining | Collaborative Deep Learning for Recommender Systems (Wang, Wang, & Yeung, 2015) |
  | Social Applications | Sentiment analysis/opinion mining/facial recognition | Enhancing deep learning sentiment analysis with ensemble techniques in social applications (Araque et al., 2017) |
  | Medicine | Medical diagnosis | A survey on deep learning in medical image analysis (Litjens et al., 2017) |
  | Finance | Credit scoring, stock market prediction | A deep learning approach for credit scoring using credit default swaps (Luo et al., 2016) |

- 43. Domain Applications of Deep Learning (Contd)

  | Domain | Deep learning is applied to perform | Topic & Reference |
  | --- | --- | --- |
  | Transportation | Traffic flow prediction | Deep learning for short-term traffic flow prediction (Polson et al., 2017) |
  | Business | Process prediction | Predicting Process Behaviour Using Deep Learning (Evermann et al., 2017) |
  | Emergency | Emergency alert | A Deep-Learning-Based Emergency Alert System (Kang et al., 2016) |
  | Agriculture | Plant identification | Deep Learning for Plant Identification Using Vein Morphological Patterns (Grinblat et al., 2016) |

- 44. Figure 13: Face Recognition (Adapted from www.edureka.co)

- 45. (Contd) Figure 14: Google Lens (Adapted from www.edureka.co)

- 46. (Contd) Figure 15: Machine Translation (Adapted from www.edureka.co)

- 47. Figure 16: Instant Visual Translation (Adapted from www.edureka.co)

- 48. Figure 17: Self Driving Cars (Adapted from www.edureka.co)

- 49. Figure 18: Machine Translation (Adapted from www.edureka.co)

- 50. Trends in Deep Learning Research
  1. Design of more powerful deep models that learn from less training data (Guo et al., 2016; Pasupa et al., 2016; Li et al., 2017).
  2. Use of better optimization algorithms to adjust network parameters, i.e. regularization techniques (Zeng et al., 2016; Li et al., 2017).
  3. Implementation of deep learning algorithms on mobile devices (Li et al., 2017).
  4. Stability analysis of deep neural networks (Li et al., 2017).

- 51. Trends in Deep Learning Research (Contd)
  5. Combining probabilistic, auto-encoder and manifold learning models (Bengio et al., 2013).
  6. Applications of deep neural networks in nonlinear networked control systems (NCSs) (Li et al., 2017).
  7. Applications of unsupervised, semi-supervised and reinforcement learning approaches to DNNs for complex systems (Li et al., 2017).
  8. Learning deep networks for other machine learning techniques, e.g. deep kNN (Zoran et al., 2017) and deep SVM (Li et al., 2017).

- 52. Research Issues/Challenges in Deep Learning
  1. High computational cost in the training phase (Pasupa et al., 2016).
  2. Overfitting when the dataset is small (Pasupa et al., 2016; Guo et al., 2016).
  3. Optimization issues due to local minima or the use of first-order methods (Pasupa et al., 2016).
  4. Little or no clear understanding of the underlying theoretical foundation that determines which deep learning architecture should perform well or outperform other approaches (Guo et al., 2016).
  5. Time complexity (Guo et al., 2016).

- 53. Deep Learning Use Case: Let's look at a use case where we can use DL for image recognition.

- 54. Practical Application of Deep Learning in Facial Recognition. Problem scenario: Suppose we want to create a system that can recognize the faces of different people in an image. How do we solve this as a typical machine learning problem, and how do we solve it with a deep learning approach?

- 55. Classical Machine Learning Approach: We define facial features such as eyes, nose and ears, and the system then identifies on its own which features are most important for each person.

- 56. Deep Learning Approach: Deep learning takes this one step further. Deep neural networks automatically discover the features that are important for classification, whereas in classical machine learning we had to define these features manually.

- 57. Practical Application of Deep Learning: Facial Recognition (Contd). Figure 19: Face Recognition Using Deep Networks (Source: www.edureka.co)

- 58. Deep Face Recognition. Face recognition applications have two phases:
  1. Phase I, enrollment: The system is trained using millions of prototype face images, producing a trained model. The generated face features are stored in a database.
  2. Phase II, recognition: A query face image is given as input to the model generated in Phase I so that it can be recognised.

  Figure 20: Face Recognition Architecture (Source: aiehive.com)

- 59. Deep Face Recognition (Contd)
  - Steps within the enrollment phase:
    1. Face detection
    2. Feature extraction
    3. Storing the model and extracted features in the database
  - Steps within the recognition (query) phase:
    1. Face detection
    2. Preprocessing
    3. Feature extraction
    4. Recognition

- 60. Step 1: Face Detection (Enrollment Phase). The face needs to be located and the region of interest computed.
  - Histogram of Oriented Gradients (HOG) is a fast and simple technique for face detection.
  - Detected faces are passed to the next step, feature extraction.

  Figure 21: Multiple Face Detection (Source: aiehive.com)
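The core idea behind HOG is to summarise each small image cell by a histogram of its gradient directions, weighted by gradient strength. The sketch below computes that histogram for a single grayscale cell in plain Python. It is a simplification made for illustration: a full HOG detector also normalises histograms over blocks of cells and feeds the resulting descriptor to a classifier, and the function name and 9-bin default are assumptions.

```python
import math


def cell_orientation_histogram(cell, n_bins=9):
    """Histogram of unsigned gradient orientations (0 to 180 degrees) for one cell.

    `cell` is a 2D list of grayscale intensities; border pixels are skipped.
    """
    rows, cols = len(cell), len(cell[0])
    hist = [0.0] * n_bins
    bin_width = 180.0 / n_bins
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            gx = cell[r][c + 1] - cell[r][c - 1]  # horizontal central difference
            gy = cell[r + 1][c] - cell[r - 1][c]  # vertical central difference
            magnitude = math.hypot(gx, gy)
            if magnitude == 0.0:
                continue  # flat region: no orientation to vote for
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            # Each pixel votes for its orientation bin, weighted by gradient magnitude.
            hist[int(angle // bin_width) % n_bins] += magnitude
    return hist
```

A cell whose intensity changes only from left to right puts all of its weight in the first bin, while a cell that changes only from top to bottom puts it in the bin containing 90 degrees.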

- 61. Step 2: Feature Extraction (Enrollment Phase). Which feature measure best represents a human face? Deep learning can determine which parts of a face are important to measure: a Deep Convolutional Neural Network (DCNN) can be trained to learn the important features (Simonyan et al., 2014).

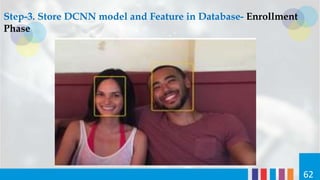

- 62. Step 3: Store the DCNN Model and Features in the Database (Enrollment Phase)

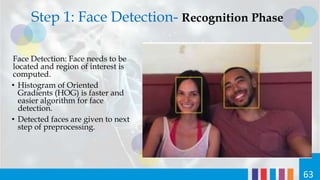

- 63. Step 1: Face Detection (Recognition Phase). The face needs to be located and the region of interest computed.
  - Histogram of Oriented Gradients (HOG) is a fast and simple technique for face detection.
  - Detected faces are passed to the next step, preprocessing.

- 64. Step 2: Preprocessing (Recognition Phase)
  - Preprocess the image to overcome issues such as noise and illumination, using any suitable filter (Kalman filter, Adaptive Retinex (AR), Multi-Scale Self Quotient Image (SQI), Gabor filter, etc.).
  - Pose and rotation can be accounted for by using a 3D transformation, an affine transformation or face landmark estimation.
  - Determine 68 landmark points on every face: the top of the chin, the outside edge of each eye, the inner edge of each eyebrow, etc.

  Figure 22: Landmark point estimation (Source: aiehive.com)
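As one example of pose correction from landmarks, the sketch below rotates a set of 2D landmark points so that the line through the two eye centres becomes horizontal. A pure rotation is a special case of the affine transformation mentioned above; choosing the eyes as the reference and the name `align_by_eyes` are assumptions made for illustration, with `left_eye` taken to be the eye with the smaller x coordinate in image space.

```python
import math


def align_by_eyes(landmarks, left_eye, right_eye):
    """Rotate 2D landmark points so the line through the eye centres is horizontal."""
    dx = right_eye[0] - left_eye[0]
    dy = right_eye[1] - left_eye[1]
    angle = math.atan2(dy, dx)  # current tilt of the eye line
    # Rotate about the midpoint between the eyes so the face stays in place.
    cx = (left_eye[0] + right_eye[0]) / 2.0
    cy = (left_eye[1] + right_eye[1]) / 2.0
    cos_a, sin_a = math.cos(-angle), math.sin(-angle)
    aligned = []
    for x, y in landmarks:
        tx, ty = x - cx, y - cy
        aligned.append((cx + cos_a * tx - sin_a * ty,
                        cy + sin_a * tx + cos_a * ty))
    return aligned
```

The same rotation would then be applied to the image pixels; distances between landmarks are preserved because no scaling or shearing is involved.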

- 65. Step 3: Feature Extraction (Recognition Phase)
  - In this third step of deep face recognition, we use the trained DCNN model that was generated during the feature extraction step of the enrollment phase.
  - A query image is given as input.
  - The DCNN generates 128 feature values.
  - This feature vector is then compared with the feature vectors stored in the database.

  Step 4: Recognition (Recognition Phase)
  - The query feature vector can be matched against the database feature vectors with any basic machine learning classifier, such as an SVM classifier, a Bayesian classifier or a Euclidean distance classifier.
  - The ID of the best-matching face image in the database is returned as the recognition output.
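The Euclidean distance classifier mentioned in Step 4 amounts to a nearest-neighbour search over the stored feature vectors. Here is a minimal sketch, assuming the database is a dictionary from face ID to a 128-value feature list; the function name `recognise` and the rejection threshold of 0.6 are hypothetical choices, not values from the slides.

```python
import math


def recognise(query, database, threshold=0.6):
    """Return (face_id, distance) for the closest stored vector.

    face_id is None when even the nearest stored face is farther than `threshold`.
    """
    best_id, best_dist = None, float("inf")
    for face_id, features in database.items():
        dist = math.dist(query, features)  # Euclidean distance (Python 3.8+)
        if dist < best_dist:
            best_id, best_dist = face_id, dist
    # Reject the match when the nearest stored face is too far away.
    if best_dist > threshold:
        return None, best_dist
    return best_id, best_dist
```

The threshold lets the system report an unknown face instead of always returning the nearest enrolled identity; a suitable value depends on how the feature vectors were trained.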

- 66. Conclusion
  - Deep learning is a representation learning method and the new state-of-the-art technique for performing automatic feature extraction on large unlabeled data.
  - Various categories of deep learning architectures and basic algorithms, together with their related approaches, have been discussed.
  - Several theoretical concepts and practical application areas have been presented.
  - It is a promising research area for tackling feature extraction for complex real-world problems without having to undergo the process of manual feature engineering.
  - With the rapid development of hardware resources and computation technologies, it is certain that deep neural networks will receive wider attention and find broader applications in the future.

- 67. References
  - Afridi, M. J., Ross, A., & Shapiro, E. M. (2017). On automated source selection for transfer learning in convolutional neural networks. Pattern Recognition.
  - Araque, O., Corcuera-Platas, I., Sánchez-Rada, J. F., & Iglesias, C. A. (2017). Enhancing deep learning sentiment analysis with ensemble techniques in social applications. Expert Systems with Applications, 77, 236-246.
  - Betru, B. T., Onana, C. A., & Batchakui, B. (2017). A Survey of State-of-the-art: Deep Learning Methods on Recommender System. International Journal of Computer Applications, 162(10).
  - Chen, Y. H., Krishna, T., Emer, J. S., & Sze, V. (2017). Eyeriss: An energy-efficient reconfigurable accelerator for deep convolutional neural networks. IEEE Journal of Solid-State Circuits, 52(1), 127-138.
  - Cho, K., Raiko, T., & Ihler, A. T. (2011). Enhanced gradient and adaptive learning rate for training restricted Boltzmann machines. In Proceedings of the 28th International Conference on Machine Learning (ICML-11) (pp. 105-112).

- 68. References (Contd)
  - Deng, L., & Yu, D. (2014). Deep learning: methods and applications. Foundations and Trends® in Signal Processing, 7(3–4), 197-387.
  - Duchi, J., Hazan, E., & Singer, Y. (2011). Adaptive subgradient methods for online learning and stochastic optimization. Journal of Machine Learning Research, 12(Jul), 2121-2159.
  - Erin Liong, V., Lu, J., Wang, G., Moulin, P., & Zhou, J. (2015). Deep hashing for compact binary codes learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (pp. 2475-2483).
  - Evermann, J., Rehse, J. R., & Fettke, P. (2017). Predicting process behaviour using deep learning. Decision Support Systems.
  - Gao, S., Tsang, I. W. H., Chia, L. T., & Zhao, P. (2010, June). Local features are not lonely – Laplacian sparse coding for image classification. In Computer Vision and Pattern Recognition (CVPR), 2010 IEEE Conference on (pp. 3555-3561). IEEE.

- 69. References (Contd)
  - Grinblat, G. L., Uzal, L. C., Larese, M. G., & Granitto, P. M. (2016). Deep learning for plant identification using vein morphological patterns. Computers and Electronics in Agriculture, 127, 418-424.
  - Guo, Y., Liu, Y., Oerlemans, A., Lao, S., Wu, S., & Lew, M. S. (2016). Deep learning for visual understanding: A review. Neurocomputing, 187, 27-48.
  - He, K., Zhang, X., Ren, S., & Sun, J. (2014, September). Spatial pyramid pooling in deep convolutional networks for visual recognition. In European Conference on Computer Vision (pp. 346-361). Springer, Cham.
  - Hinton, G. E., Osindero, S., & Teh, Y. W. (2006). A fast learning algorithm for deep belief nets. Neural Computation, 18(7), 1527-1554.
  - Kang, B., & Choo, H. (2016). A deep-learning-based emergency alert system. ICT Express, 2(2), 67-70.

- 70. References (Contd)
  - Kingma, D., & Ba, J. (2014). Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980.
  - Krizhevsky, A., Sutskever, I., & Hinton, G. E. (2012). Imagenet classification with deep convolutional neural networks. In Advances in Neural Information Processing Systems (pp. 1097-1105).
  - LeCun, Y., Bengio, Y., & Hinton, G. (2015). Deep learning. Nature, 521(7553), 436-444.
  - LeCun, Y., Bottou, L., Bengio, Y., & Haffner, P. (1998). Gradient-based learning applied to document recognition. Proceedings of the IEEE, 86(11), 2278-2324.
  - Lee, H., Grosse, R., Ranganath, R., & Ng, A. Y. (2011). Unsupervised learning of hierarchical representations with convolutional deep belief networks. Communications of the ACM, 54(10), 95-103.

- 71. References (Contd)
  - Li, Y., & Zhang, T. (2017). Deep neural mapping support vector machines. Neural Networks, 93, 185-194.
  - Litjens, G., Kooi, T., Bejnordi, B. E., Setio, A. A. A., Ciompi, F., Ghafoorian, M., & Sánchez, C. I. (2017). A survey on deep learning in medical image analysis. arXiv preprint arXiv:1702.05747.
  - Luo, C., Wu, D., & Wu, D. (2016). A deep learning approach for credit scoring using credit default swaps. Engineering Applications of Artificial Intelligence.
  - Makwana, M. A. (2016, December). Deep Face Recognition Using Deep Convolutional Neural Network. Retrieved from http://aiehive.com
  - Min, R., Stanley, D. A., Yuan, Z., Bonner, A., & Zhang, Z. (2009, December). A deep non-linear feature mapping for large-margin kNN classification. In Data Mining, 2009. ICDM'09. Ninth IEEE International Conference on (pp. 357-366). IEEE.
  - Nagi, J., Di Caro, G. A., Giusti, A., Nagi, F., & Gambardella, L. M. (2012, December). Convolutional neural support vector machines: hybrid visual pattern classifiers for multi-robot systems. In Machine Learning and Applications (ICMLA), 2012 11th International Conference on (Vol. 1, pp.

- 72. References (Contd)
  - Ngiam, J., Chen, Z., Koh, P. W., & Ng, A. Y. (2011). Learning deep energy models. In Proceedings of the 28th International Conference on Machine Learning (ICML-11) (pp. 1105-1112).
  - Pasupa, K., & Sunhem, W. (2016, October). A comparison between shallow and deep architecture classifiers on small dataset. In Information Technology and Electrical Engineering (ICITEE), 2016 8th International Conference on (pp. 1-6). IEEE.
  - Patel, V. (2016). Kalman-based stochastic gradient method with stop condition and insensitivity to conditioning. SIAM Journal on Optimization, 26(4), 2620-2648.
  - Polson, N. G., & Sokolov, V. O. (2017). Deep learning for short-term traffic flow prediction. Transportation Research Part C: Emerging Technologies, 79, 1-17.
  - Poultney, C., Chopra, S., & Cun, Y. L. (2007). Efficient learning of sparse representations with an energy-based model. In Advances in Neural Information Processing Systems (pp. 1137-1144).

- 73. References (Contd)
  - Radford, A., Metz, L., & Chintala, S. (2015). Unsupervised representation learning with deep convolutional generative adversarial networks. arXiv preprint arXiv:1511.06434.
  - Ren, W., Yu, Y., Zhang, J., & Huang, K. (2014, August). Learning convolutional nonlinear features for k nearest neighbor image classification. In Pattern Recognition (ICPR), 2014 22nd International Conference on (pp. 4358-4363). IEEE.
  - Rifai, S., Vincent, P., Muller, X., Glorot, X., & Bengio, Y. (2011). Contractive auto-encoders: Explicit invariance during feature extraction. In Proceedings of the 28th International Conference on Machine Learning (ICML-11) (pp. 833-840).
  - Salakhutdinov, R., & Hinton, G. (2009). Semantic hashing. International Journal of Approximate Reasoning, 50(7), 969-978.
  - Salakhutdinov, R., & Hinton, G. (2009, April). Deep Boltzmann machines. In Artificial Intelligence and Statistics (pp. 448-455).

- 74. References (Contd)
  - Simonyan, K., & Zisserman, A. (2014). Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556.
  - Springenberg, J. T., Dosovitskiy, A., Brox, T., & Riedmiller, M. (2014). Striving for simplicity: The all convolutional net. arXiv preprint arXiv:1412.6806.
  - Srivastava, N., Hinton, G. E., Krizhevsky, A., Sutskever, I., & Salakhutdinov, R. (2014). Dropout: a simple way to prevent neural networks from overfitting. Journal of Machine Learning Research, 15(1), 1929-1958.
  - Sutskever, I., Martens, J., Dahl, G., & Hinton, G. (2013, February). On the importance of initialization and momentum in deep learning. In International Conference on Machine Learning (pp. 1139-1147).
  - Szegedy, C., Liu, W., Jia, Y., Sermanet, P., Reed, S., Anguelov, D., ... & Rabinovich, A. (2015). Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (pp. 1-9).

- 75. References (Contd)
  - Tang, Y. (2013). Deep learning using support vector machines. CoRR, abs/1306.0239, 2.
  - Tieleman, T., & Hinton, G. (2012). Lecture 6.5-rmsprop: Divide the gradient by a running average of its recent magnitude. COURSERA: Neural Networks for Machine Learning, 4(2), 26-31.
  - Vincent, P., Larochelle, H., Bengio, Y., & Manzagol, P. A. (2008, July). Extracting and composing robust features with denoising autoencoders. In Proceedings of the 25th International Conference on Machine Learning (pp. 1096-1103). ACM.
  - Vincent, P., Larochelle, H., Lajoie, I., Bengio, Y., & Manzagol, P. A. (2010). Stacked denoising autoencoders: Learning useful representations in a deep network with a local denoising criterion. Journal of Machine Learning Research, 11(Dec), 3371-3408.
  - Wang, H., Wang, N., & Yeung, D. Y. (2015, August). Collaborative deep learning for recommender systems. In Proceedings of the 21th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (pp. 1235-1244). ACM.
  - What is deep learning. (Web log post). Retrieved September 6, 2017 from

- 76. References (Contd)
  - Yang, J., Yu, K., Gong, Y., & Huang, T. (2009, June). Linear spatial pyramid matching using sparse coding for image classification. In Computer Vision and Pattern Recognition, 2009. CVPR 2009. IEEE Conference on (pp. 1794-1801). IEEE.
  - Yu, K., Zhang, T., & Gong, Y. (2009). Nonlinear learning using local coordinate coding. In Advances in Neural Information Processing Systems (pp. 2223-2231).
  - Zeiler, M. D., & Fergus, R. (2014, September). Visualizing and understanding convolutional networks. In European Conference on Computer Vision (pp. 818-833). Springer, Cham.
  - Zhao, J., & Ho, S. S. (2017). Structural knowledge transfer for learning Sum-Product Networks. Knowledge-Based Systems, 122, 159-166.
  - Zhong, G., Xu, H., Yang, P., Wang, S., & Dong, J. (2016, July). Deep hashing learning networks. In Neural Networks (IJCNN), 2016 International Joint Conference on (pp. 2236-2243). IEEE.
  - Zhong, S., & Ghosh, J. (2000). Decision boundary focused neural network classifier.

- 77. References (Contd)
  - Zhou, X., Yu, K., Zhang, T., & Huang, T. S. (2010, September). Image classification using super-vector coding of local image descriptors. In European Conference on Computer Vision (pp. 141-154). Springer, Berlin, Heidelberg.
  - Zoran, D., Lakshminarayanan, B., & Blundell, C. (2017). Learning Deep Nearest Neighbor Representations Using Differentiable Boundary Trees. arXiv preprint arXiv:1702.08833.

- 78. Acknowledgement
  - Almighty God for His sufficient grace.
  - I would like to appreciate the HOD, Dr. Osamor V.C., and the PG Coordinator, Dr. Azeta, for their contribution toward the reality of the presentation today.
  - Special recognition to my mentor, Dr. Olufunke Oladipupo, who gave this work the depth of knowledge it possesses.
  - I also appreciate all the faculty members in the department for their support.

Editor's Notes

- #2: Here is an outline of my presentation

- #4: By way of a gentle, informal introduction, deep learning can be viewed as a subset of AI by way of machine learning, as depicted in the figure. DL is currently the hottest trend in AI and ML, so a quick reminder of what AI and ML entail is necessary.

- #6: To trace the history of AI, ML and DL, here is a technology timeline showing the evolution of these three concepts and how dominant they have remained over the years.

- #7: Next we look at ML, which is a kind of AI.

- #8: But machine learning faces a major limitation: feature extraction for complex problems such as object recognition. This is where DL comes to the rescue.

- #9: The aim of this seminar is to review the concept, architecture

- #10: Objectives include

- #11: What is Deep Learning?

- #12: A class of machine learning techniques that exploit many layers of non-linear information processing for supervised or unsupervised feature extraction and transformation, and for pattern analysis and classification (Deng & Yu, 2014)

- #13: DL can also be seen as a subfield of ML

- #14: Why is deep learning happening now?

- #15: Let's take a look at how DL works.

- #18: Here is a list of some deep learning tools used to perform deep learning or train very deep neural networks.

- #19: Let's have a look at the categories of deep architecture.

- #23: Of the three variants, the DEM are the most common

- #25: Due to the peculiarities of deep learning, we chose to divide the literature review into two aspects, viz. theoretical and application.

- #26: Under learning algorithms, the basic learning algorithms or techniques used for deep learning are

- #27: What is the best feature measure to use for performing detection or classification?

- #29: Usually when deep networks are trained with sparse datasets, overfitting occurs

- #50: Deep learning research is currently undergoing an upward trend in terms of the following

- #52: Some research issues faced in deep learning include

- #53: Let's look at a scenario where we can use deep learning for facial recognition.

- #54: Suppose we are faced with a problem scenario

- #57: I'd like to give a brief overview of how deep learning works in facial recognition and go into details afterwards.

- #58: Now for a detailed explanation of deep face recognition
