
Introduction to Deep State Space Models for Time Series Forecasting

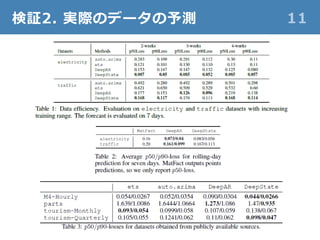

- 1. NeurIPS2018 paper introduction: Deep State Space Models for Time Series Forecasting. Cross-series parameter estimation with an RNN for state space models of multiple time series. Presented by 三原 千尋, January 26, 2019. Source: Syama Sundar Rangapuram, Matthias Seeger, Jan Gasthaus, Lorenzo Stella, Yuyang Wang, Tim Januschowski. Deep State Space Models for Time Series Forecasting. In Advances in Neural Information Processing Systems 31, 2018. https://papers.nips.cc /paper/8004-deep-state-space-models...

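- 2. Time series we want to forecast. Example 1: electricity consumption of each customer at 15-minute intervals. Source: UCI Machine Learning Repository: ElectricityLoadDiagrams20112014 Data Set, https://archive.ics.uci.edu/ml/datasets/ElectricityLoadDiagrams20112014
  - Electricity consumption (kW) of 370 customers, measured every 15 minutes, over 3 years.
  - The figure on the slide plots only about one week of data for 5 of the customers.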

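- 3. Time series we want to forecast. Example 2: congestion rate at each point on a road network at 10-minute intervals. Source: UCI Machine Learning Repository: PEMS-SF Data Set, https://archive.ics.uci.edu/ml/datasets/PEMS-SF
  - Readings from 963 sensors, measured every 10 minutes, over 15 months. Each day is identified by a day-of-week label rather than by a date.
  - The figure on the slide plots only 2 days of data for 3 of the sensors.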

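- 4. Characteristics of the time series we want to forecast.
  - They have seasonality (here, seasonality means patterns by time of day, such as morning, daytime, and night, and patterns by day of week). → We want to model this explicitly.
  - There are many similar time series (many households, many road sensors). → To forecast one household's future consumption, the data observed so far for other households is likely to help, not only that household's own data.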

- 5. What we want to do. $N$ time series have been observed, where series $i$ has length $T_i$:
  - Observed: $z_1^{(i)}, z_2^{(i)}, z_3^{(i)}, \dots, z_{T_i}^{(i)}$ for $i = 1, 2, \dots, N$.
  - To forecast: $z_{T_i+1}^{(i)}, \dots, z_{T_i+\tau}^{(i)}$ for $i = 1, 2, \dots, N$, that is, the future of each series up to $\tau$ steps ahead.
  - We want a box that takes the observed values as input and outputs the forecasts.

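- 6. Possible approaches.
  - State space model (SSM): assume that behind each observation $z_t^{(i)}$ there is an unobservable "state" $l_t^{(i)}$. Specify the initial distribution, the time-evolution model, and the observation model. Then compute the posterior distribution of the forecasts given the observations. If the distributions are Gaussian and the model is linear, this can be solved analytically.
  - Deep neural network (DNN): feed the observations from a suitable input window (customer 1, customer 2, customer 3, ...) into a DNN such as a chain of RNN cells, and read off forecasts for the desired forecast window. The forecasting may be done recursively.
  - [Figure: left, an SSM graph with initial distribution, states $l_0^{(i)}, l_1^{(i)}, l_2^{(i)}$, observations $z_1^{(i)}, z_2^{(i)}$, and labels $F_t$, $a_t$; right, a DNN/RNN mapping observed values to forecasts.]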
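The "solved analytically" remark on slide 6 refers to Kalman filtering. The following is a minimal NumPy sketch, assuming a time-invariant model with scalar observations, $l_t = F l_{t-1} + w_t$ and $z_t = a^\top l_t + v_t$. The noise terms `Q` and `r`, the prior `mu0`, `P0`, and the function name `kalman_filter` are illustrative assumptions, not notation from the slides.

```python
import numpy as np

def kalman_filter(z, F, a, Q, r, mu0, P0):
    """Filter a linear-Gaussian SSM with scalar observations (illustrative).

    State:       l_t = F l_{t-1} + w_t,  w_t ~ N(0, Q)
    Observation: z_t = a^T l_t + v_t,    v_t ~ N(0, r)
    Prior:       l_0 ~ N(mu0, P0)
    Returns filtered means, filtered covariances, and log p(z_1:T).
    """
    mu = np.asarray(mu0, dtype=float)
    P = np.asarray(P0, dtype=float)
    means, covs, loglik = [], [], 0.0
    for z_t in z:
        # Predict the next state from the transition model.
        mu = F @ mu
        P = F @ P @ F.T + Q
        # One-step-ahead predictive distribution of the observation.
        z_hat = a @ mu
        s = a @ P @ a + r
        loglik += -0.5 * (np.log(2 * np.pi * s) + (z_t - z_hat) ** 2 / s)
        # Update the state with the new observation (Kalman gain k).
        k = P @ a / s
        mu = mu + k * (z_t - z_hat)
        P = P - np.outer(k, a @ P)
        means.append(mu)
        covs.append(P)
    return np.array(means), np.array(covs), loglik

# Local-level example: one-dimensional state, no process noise, vague prior.
means, covs, loglik = kalman_filter(
    z=[1.0, 2.0, 3.0],
    F=np.eye(1), a=np.ones(1),
    Q=np.zeros((1, 1)), r=1.0,
    mu0=np.zeros(1), P0=np.array([[1e6]]),
)
```

With no process noise and a vague prior, the filtered mean in this example tracks the running average of the observations, which is a quick sanity check on the recursion.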

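- 7. Possible approaches: pros (○) and cons (×).
  - State space model (SSM)
    - ○ Assumptions about the time evolution (such as seasonality) can be built into the model.
    - ○ The learned model is easy to interpret.
    - × Designing a model that captures complex features is hard.
    - × There is no established way to share information across multiple similar time series when their correlation structure is not well understood and cannot be modeled.
  - Deep neural network (DNN)
    - ○ Should extract complex features automatically.
    - ○ Should also learn the correlation structure among multiple time series.
    - × Leaves little room to build in the structure we assume.
    - × The learned model cannot be interpreted.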

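- 8. The proposed approach. The SSM parameters of every time series are learned by a single shared RNN.
  - Input: the feature vector sequence $x_t^{(i)}$ associated with time series $i$. Examples: customer ID, customer attributes, day of week, time of day.
  - RNN (shared by all time series): maps $x_1^{(i)}, x_2^{(i)}, \dots$ to the SSM parameters $\Theta_1^{(i)}, \Theta_2^{(i)}, \dots$ of time series $i$, one parameter set per time step.
  - SSM (one per time series): the distributions are Gaussian and the model is linear.
  - [Figure: a chain of RNN cells takes $x_1^{(i)}, \dots, x_5^{(i)}$ and emits $\Theta_1^{(i)}, \dots, \Theta_5^{(i)}$, which parameterize an SSM with initial distribution, states $l_0^{(i)}, \dots, l_5^{(i)}$, observations $z_1^{(i)}, \dots, z_5^{(i)}$, and labels $F_t$, $a_t$.]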
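The mapping on slide 8, from a feature sequence to per-step SSM parameters through one network shared by all series, can be sketched as below. This is only an illustration under several assumptions: it uses a plain tanh (Elman) recurrent cell rather than an LSTM, random untrained weights, and a hypothetical parameter set of two noise variances `(q_t, r_t)` kept positive with a softplus. The class name `SharedParamRNN` is made up for this example.

```python
import numpy as np

def softplus(u):
    # Smooth map from the real line to positive values.
    return np.logaddexp(0.0, u)

class SharedParamRNN:
    """Toy recurrent net: features x_t -> positive SSM parameters (q_t, r_t)."""

    def __init__(self, n_features, n_hidden, seed=0):
        rng = np.random.default_rng(seed)
        self.W_x = rng.normal(0.0, 0.1, (n_hidden, n_features))
        self.W_h = rng.normal(0.0, 0.1, (n_hidden, n_hidden))
        self.b = np.zeros(n_hidden)
        self.W_out = rng.normal(0.0, 0.1, (2, n_hidden))
        self.b_out = np.zeros(2)

    def __call__(self, x):
        """x has shape (T, n_features); returns an array of shape (T, 2)."""
        h = np.zeros(self.b.shape[0])
        params = []
        for x_t in x:
            # The same weights are applied at every step and for every series.
            h = np.tanh(self.W_x @ x_t + self.W_h @ h + self.b)
            params.append(softplus(self.W_out @ h + self.b_out))
        return np.array(params)

# One network instance serves two series with different lengths and features.
rnn = SharedParamRNN(n_features=3, n_hidden=8)
theta_a = rnn(np.ones((5, 3)))    # parameters for a hypothetical series A
theta_b = rnn(np.zeros((7, 3)))   # parameters for a hypothetical series B
```

The point of the design is that only the weights are shared; each series still gets its own parameter sequence because its features differ.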

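- 9. The proposed approach: training and forecasting.
  - Training: train the RNN (an LSTM in this work) so that it outputs SSM parameters that maximize the sum of the log-likelihoods of all time series: $L(\Phi) = \sum_{i=1}^{N} \log p\left(z_{1:T_i}^{(i)} \mid x_{1:T_i}^{(i)}, \Phi\right)$
  - Forecasting: once the SSM parameters have been learned, use the Kalman filter to compute the posterior distribution of the forecasts given the observations.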
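The objective and the forecasting step on slide 9 can be illustrated with a scalar local-level SSM. This is a sketch under assumptions: the per-step variances `q` and `r` stand in for the parameters an RNN would output, the function names are hypothetical, and the gradient-based maximization over the RNN weights $\Phi$ is not shown (in practice an automatic differentiation framework would handle it).

```python
import numpy as np

def local_level_loglik(z, q, r, mu0=0.0, p0=1e3):
    """Log-likelihood of one series under l_t = l_{t-1} + N(0, q_t),
    z_t = l_t + N(0, r_t). Also returns the final filtered mean and variance."""
    mu, p, ll = mu0, p0, 0.0
    for z_t, q_t, r_t in zip(z, q, r):
        p = p + q_t                      # predict the state variance
        s = p + r_t                      # predictive variance of z_t
        ll += -0.5 * (np.log(2 * np.pi * s) + (z_t - mu) ** 2 / s)
        k = p / s                        # Kalman gain
        mu = mu + k * (z_t - mu)         # update the state mean
        p = (1.0 - k) * p                # update the state variance
    return ll, mu, p

def total_loglik(series, params):
    """L = sum over series i of log p(z^(i) | parameters of series i)."""
    return sum(local_level_loglik(z, q, r)[0]
               for z, (q, r) in zip(series, params))

def forecast(mu, p, q_future, r_future):
    """Predictive mean and variance for each future step, from the last
    filtered state (mu, p) and the future per-step parameters."""
    means, variances = [], []
    for q_t, r_t in zip(q_future, r_future):
        p = p + q_t
        means.append(mu)
        variances.append(p + r_t)
    return np.array(means), np.array(variances)

# Two short series with hand-picked parameters standing in for RNN outputs.
series = [[1.0, 1.2, 0.9], [5.0, 5.5]]
params = [([0.1] * 3, [0.5] * 3), ([0.2] * 2, [0.5] * 2)]
L = total_loglik(series, params)
_, mu_T, p_T = local_level_loglik(series[0], *params[0])
f_mean, f_var = forecast(mu_T, p_T, q_future=[0.1, 0.1], r_future=[0.5, 0.5])
```

For a local-level model the forecast mean stays flat while the forecast variance grows with the horizon, which is why the output is a distribution rather than a point forecast.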

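- 12. Summary.
  - Multiple time series that have assumed structure such as seasonality, and that are likely to inform each other's forecasts, were forecast effectively by learning the parameters of each individual state space model with a shared RNN.
    - The base is a state space model, so any desired seasonality or similar structure can be built in.
    - The parameters are learned by an RNN from each series' feature vectors, so information can be extracted effectively from any kind of feature.
    - All time series share the RNN, so information is used across series (for example, noise that occurs in only one series should be compensated for).
    - The RNN outputs are the parameters of a model the user designed, so they are interpretable.
  - Future work: extension to state space models with non-Gaussian distributions.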

- 13. Prior work on SSM × DNN.
  - Deep Markov Model (DMM) [Krishnan2017, Krishnan2015]
  - Stochastic RNNs [Fraccaro2016]
  - Variational RNNs [Chung2015]
  - Latent LSTM Allocation [Zaheer2017]
  - State-Space LSTM [Zheng2017]
  - Unsupervised learning of state space models [Karl2017]
  - Kalman Variational Auto-Encoder (KVAE) [Fraccaro2017]: the closest to the method proposed in this paper.
  - An idea similar to the above [Johnson2016].

- 14. DeepAR. Valentin Flunkert, David Salinas, Jan Gasthaus. DeepAR: Probabilistic Forecasting with Autoregressive Recurrent Networks. CoRR, abs/1704.04110, 2017. https://arxiv.org/abs/1704.04110
  - Published by the same Amazon group as this paper.
  - Roughly the same as the figure on slide 8, except that here the RNN estimates the parameters of an autoregressive (AR) model for each time series.

