DevFestMN 2017 - Learning Docker and Kubernetes with Openshift

- 1. LEARNING DOCKER AND KUBERNETES WITH OPENSHIFT: A Hands-on Lab Exclusively for DevFestMN. Keith Resar, Container PaaS Solution Architect. February 4th, 2017. @KeithResar [email protected]

- 3. @KeithResar
  - 1: GETTING TO CONTAINERS: THE BASICS, WHERE WE EXPLORE “WHY CONTAINERS?” AND “WHY ORCHESTRATION?”
  - 2: ARCHITECTURE AND DISCOVERY LAB: DIVE INTO KUBERNETES, OPENSHIFT
  - 3: SOURCE TO IMAGE AND APP LAB: FROM SOURCE CODE TO RUNNING APP

- 4. Keith Resar: Bio. Wear many hats: Coder, Open Source Contributor and Advocate, Infrastructure Architect. @KeithResar [email protected]

- 6. CHALLENGE #1 INFRASTRUCTURE LIMITS YOUR APPS

- 9. CONTAINERS MAKE MICROSERVICES COST EFFECTIVE

- 10. CHALLENGE #2 CONTAINER MANAGEMENT IS HARD

- 11. For those of you not in the room, this is where I saved you from cliche images of container ships in disaster. You’re Welcome!

- 12. CHALLENGE #2 CONTAINER MANAGEMENT IS HARD

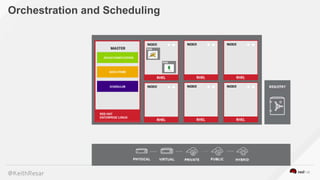

- 18. OpenShift runs on your choice of infrastructure

- 19. Nodes are instances of Linux where apps will run

- 20. Apps and components run in containers (diagram labels: Container Image, Container, Pod)

- 21. Pods are the orchestrated unit in OpenShift

- 22. Masters are the Control Plane

- 24. Desired and Current State

- 27. Services connect application components

- 29. What about unhealthy Pods?

- 30. The Master remediates Pod failures

- 31. What about app data?

- 32. Routing layer for external accessibility

- 33. Access via Web UI, CLI, IDE, API

- 34. SOURCE TO IMAGE

- 35. Source 2 Image Walk Through: Code. Developers can leverage existing development tools and then access the OpenShift Web, CLI or IDE interfaces to create new application services and push source code via Git. OpenShift can also accept binary deployments or be fully integrated with a customer’s existing CI/CD environment.

- 36. Source 2 Image Walk Through (Container Image Registry): Build. OpenShift automates the Docker image build process with Source-to-Image (S2I). S2I combines source code with a corresponding Builder image from the integrated Docker registry. Builds can also be triggered manually or automatically by setting a Git webhook. Add in Build pipelines.

- 37. Source 2 Image Walk Through (Container Image Registry): Deploy. OpenShift automates the deployment of application containers across multiple Node hosts via the Kubernetes scheduler. Users can automatically trigger deployments on application changes and do rollbacks, configure A/B deployments and other custom deployment types.

- 38. Community Callouts: DOCKER MEETUP, KUBERNETES MEETUP, OPENSHIFT MEETUP, ANSIBLE MEETUP

Editor's Notes

- #3: New release of OCP / OpenShift origin 1.4/3.4 last week.

- #8: IT organizations must evolve to meet organizational demands. This is driving many of the conversations we are having with our customers about DevOps and more. Many of these customers are looking to shift their development and deployment processes from traditional Waterfall development and Agile methods to DevOps, and they want to learn how to enable that. We are also seeing customers asking about Microservices as they switch their application architectures away from existing monolithic and n-tier applications to highly distributed, componentized, services-based apps. On the infrastructure side, we are seeing a lot of interest in Linux Containers, driven by the popularity of Docker, as an alternative to existing virtualization technologies. Containers can be a key enabler of DevOps and Microservices. And finally, we are seeing customers increasingly wanting to deploy their applications across a hybrid cloud environment.

- #10: By applying it to commodity hardware, this makes it cost-effective.

- #14: New release of OCP / OpenShift origin 1.4/3.4 last week.

- #16: OpenShift Commons is an interactive community for OpenShift Users, Customers, Contributors, Partners, Service Providers and Developers. Commons participants collaborate with Red Hat and other participants to share ideas, code, best practices, and experiences. Get more information at http://origin.openshift.com/commons

- #18:
  - Speaker:
    - This is a high level architecture diagram of the OpenShift 3 platform. On the subsequent slides we will dive down and investigate how these components interact within an OpenShift infrastructure.
  - Discussion:
    - Set the stage for describing the OpenShift architecture.
  - Transcript: OpenShift has a complex multi-component architecture. This presentation is usable to help prospects understand how the components work together.

- #19:
  - Speaker:
    - From bare metal physical machines to virtualized infrastructure, or in private or certified public clouds, OpenShift is supported anywhere that Red Hat Enterprise Linux is.
    - This includes all the supported virtualization platforms - RHEV, vSphere or Hyper-V.
    - Red Hat’s OpenStack platform and certified public cloud providers like Amazon, Google and more are supported, too.
    - You can even take a hybrid approach and deploy instances of OpenShift Enterprise across all of these infrastructures.
  - Discussion:
    - Show the flexibility of deploying OpenShift
    - Technically only x86 platforms are supported.
  - Transcript: OpenShift is fully supported anywhere Red Hat Enterprise Linux is. Hybrid deployments across multiple infrastructures can be achieved, but many customers are still adopting OpenShift inside their existing, traditional virtualized environments.

- #20:
  - Speaker:
    - OpenShift has two types of systems. The first are called nodes.
    - Nodes are instances of RHEL 7 or RHEL Atomic with the OpenShift software installed.
    - Ultimately, Nodes are where end-user applications are run.
  - Discussion:
    - The Nodes are what the Masters will orchestrate - you will learn about Masters shortly.
    - Nodes are just instances of RHEL or Atomic.
    - OpenShift’s node daemon and other software runs on a node.
  - Transcript: OpenShift can run on either RHEL or RHEL Atomic. Nodes are just instances of RHEL or Atomic that will ultimately host application instances in containers.

- #21:
  - Speaker:
    - Application instances and application components run in Docker containers
    - Each OpenShift Node can run many containers
  - Discussion:
    - A node’s capacity is related to the memory and CPU capabilities of the underlying “hardware”
  - Transcript: All of the end-user application instances and application components will run inside Docker containers on the nodes. RHEL instances with bigger CPU and memory footprints can run more applications.

- #22:
  - Speaker:
    - While app components run in Docker containers, the “unit” that OpenShift is orchestrating and managing is called a Pod
    - In this case, a Docker image is the executable or runnable component, and the container is the actual running instance with runtime and environment parameters
    - One or more containers make up a Pod, and OpenShift will schedule and run all containers in a Pod together
    - Complex applications are made up of many Pods each with their own containers, all interacting with one another inside an OpenShift environment
  - Discussion:
    - OpenShift consumes Docker Images and runs them in containers wrapped by the meta object called a Pod.
    - The Pod is what OpenShift schedules, manages and runs.
    - Pods can have multiple containers but there are not many well-defined use cases
    - Services, described soon, are how different application components are “wired” together. Different application components (e.g. app server, db) are not placed in a single Pod
  - Transcript: OpenShift can use any native Docker image, and schedules and runs containers in a unit called a Pod. While Pods can have multiple containers, generally a Pod should provide a single function, like an app server, as opposed to multiple functions, like a database and an app server. This allows for individual application components to be easily scaled horizontally.
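As a rough illustration of the image / container / Pod relationship described in the notes above, here is a minimal Python sketch. The class names, the image names (`example/app-server`, `example/postgres`), and the `place` helper are hypothetical and only model the idea; they are not OpenShift or Kubernetes APIs.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Image:
    """The runnable artifact (hypothetical model of a Docker image)."""
    name: str


@dataclass
class Container:
    """A running instance of an image plus its runtime parameters."""
    image: Image
    env: dict = field(default_factory=dict)


@dataclass
class Pod:
    """The scheduled unit: all containers in a Pod are placed together."""
    name: str
    containers: list


def place(pod, node_name):
    """Every container of the Pod lands on the same node."""
    return [(node_name, c.image.name) for c in pod.containers]


# One function per Pod: the app server and the database are separate Pods,
# so each can be scaled horizontally on its own.
app = Pod("app-server-1", [Container(Image("example/app-server"), {"PORT": "8080"})])
db = Pod("db-1", [Container(Image("example/postgres"))])

placements = place(app, "node-a") + place(db, "node-b")
print(placements)
```

Keeping the app server and the database in separate Pods mirrors the guidance in the notes that a Pod should provide a single function.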

- #23:
  - Speaker:
    - The other type of OpenShift system is the Master
    - Masters keep and understand the state of the environment and orchestrate all activities on the Nodes
    - Masters are also instances of RHEL or Atomic, and multiple masters can be used in an environment for high availability
    - Masters have four primary jobs or functions
  - Discussion:
    - The OpenShift Master is the orchestrator of the entire OpenShift environment.
    - The OpenShift Master knows about and maintains state within the OpenShift environment.
    - Just like Nodes, Masters run on RHEL or Atomic
    - You will discuss the four primary functions of the Master in the next slides
  - Transcript: Just like Nodes, the OpenShift Master is installed on RHEL or Atomic. The Master is the orchestration and scheduling engine for OpenShift, and is responsible for knowing and maintaining the state of the OpenShift environment. The Master has four primary functions which you will now describe.

- #24:
  - Speaker:
    - The Master provides the single API that all tooling and systems interact with. Everything must go through this API.
    - All API requests are SSL-encrypted and authenticated. Authorizations are handled via fine-grained role-based access control (RBAC)
    - The Master can be tied into external identity management systems, from LDAP and AD to OAuth providers like GitHub and Google
  - Discussion:
    - All requests go through the Master’s API
    - The Master evaluates requests for both AuthenticatioN (AuthN - you are who you say you are) and AuthoriZation (AuthZ - you’re allowed to do what you requested)
    - The Master can be tied into external identity management systems like LDAP/AD, OAuth, and more
    - Apache modules can also be used for authentication in front of the API
  - Transcript: The Master is the gateway to the OpenShift environment. All requests must go through the Master and must be both authenticated and authorized. A wide array of external identity management systems can be the source of authentication information in an OpenShift environment.
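The AuthN-then-AuthZ order described above can be sketched as a two-step check. This is only a toy model: the token table, role bindings, verb sets, and the `handle_request` function are all invented for illustration and do not reflect the real OpenShift API or its RBAC objects.

```python
# Hypothetical in-memory identity and RBAC tables (assumptions for illustration).
TOKENS = {"token-alice": "alice", "token-bob": "bob"}
ROLE_BINDINGS = {("alice", "myproject"): "admin", ("bob", "myproject"): "view"}
ROLE_VERBS = {"admin": {"get", "create", "delete"}, "view": {"get"}}


def handle_request(token, project, verb):
    """Return an HTTP-like status code for an API request."""
    # AuthN: are you who you say you are?
    user = TOKENS.get(token)
    if user is None:
        return 401
    # AuthZ: are you allowed to do what you requested?
    role = ROLE_BINDINGS.get((user, project))
    if role is None or verb not in ROLE_VERBS[role]:
        return 403
    return 200


print(handle_request("token-alice", "myproject", "delete"))
print(handle_request("token-bob", "myproject", "delete"))
print(handle_request("bad-token", "myproject", "get"))
```

In a real deployment the identity lookup would be delegated to an external provider such as LDAP or OAuth, as the notes describe; the dictionary here just stands in for that step.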

- #25:
  - Speaker:
    - The desired and current state of the OpenShift environment is held in the data store
    - The data store uses etcd, a distributed key-value store
    - Other things like the RBAC rules, application environment information, and non-application-user-data are kept in the data store
  - Discussion:
    - etcd holds information about the desired and current state of OpenShift
    - Additionally, user account info, RBAC rules, environment variables, secrets, and many other bits of OpenShift information are held in the data store
    - More generally, any OpenShift data object is stored in etcd
  - Transcript: Etcd is another of the critical components of the OpenShift architecture. It is the distributed key-value data store for state and other information within the OpenShift environment. Reads and writes of information generally are going to hit the data store.
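To make the "desired versus current state" idea concrete, here is a small sketch of a reconciliation step over a key-value store. A plain dictionary stands in for etcd, and the `reconcile` function, the app names, and the action tuples are assumptions made for this example rather than anything taken from OpenShift.

```python
def reconcile(desired, current):
    """Compute the actions needed to move current replica counts toward desired."""
    actions = []
    for app in sorted(set(desired) | set(current)):
        want = desired.get(app, 0)
        have = current.get(app, 0)
        if want > have:
            actions.append(("start", app, want - have))
        elif want < have:
            actions.append(("stop", app, have - want))
    return actions


# A plain dict standing in for the distributed key-value store.
store = {
    "desired": {"web": 3, "db": 1},
    "current": {"web": 1, "db": 1, "old-job": 2},
}

actions = reconcile(store["desired"], store["current"])
print(actions)
```

The point of the sketch is that the orchestrator never needs to be told what to do step by step: it compares the two recorded states and derives the work itself.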

- #26:
  - Speaker:
    - The scheduler portion of the Master is the specific component that is responsible for determining Pod placement
    - The scheduler takes the current memory, CPU and other environment utilization into account when placing Pods on the various nodes
  - Discussion:
    - The scheduler is responsible for Pod placement
    - Current CPU, Memory and other environment utilization is considered during the scheduling process
  - Transcript: The OpenShift scheduler uses a combination of configuration and environment state to determine the best fit for running Pods across the Nodes in the environment.

- #27:
  - Speaker:
    - The real-world topology of the OpenShift deployment (regions, zones, etc.) is used to inform the configuration of the scheduler
    - Administrators can configure complex scenarios for scheduling workloads
  - Discussion:
    - The scheduler is configured with a simple JSON file in combination with node labels to carve up the OpenShift environment to make it look like the real world topology
  - Transcript: The topology of the real-world environment is used by platform administrators to determine how to configure the scheduler and the Node labels.
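The two scheduler notes above (resource-aware placement plus topology labels) can be approximated with a filter-then-score sketch. The node records, the `node_selector` field, and the "most free CPU wins" scoring rule are assumptions chosen for brevity; the real scheduler's policy is configurable and more involved.

```python
# Hypothetical cluster inventory with topology labels.
NODES = [
    {"name": "node-a", "labels": {"region": "east"}, "free_cpu": 2.0, "free_mem": 4096},
    {"name": "node-b", "labels": {"region": "east"}, "free_cpu": 0.5, "free_mem": 8192},
    {"name": "node-c", "labels": {"region": "west"}, "free_cpu": 4.0, "free_mem": 8192},
]


def schedule(pod, nodes):
    """Filter nodes by labels and free resources, then pick the least loaded one."""
    selector = pod.get("node_selector", {})
    candidates = [
        n for n in nodes
        if all(n["labels"].get(k) == v for k, v in selector.items())
        and n["free_cpu"] >= pod["cpu"]
        and n["free_mem"] >= pod["mem"]
    ]
    if not candidates:
        return None  # the Pod stays pending
    # Scoring rule is an assumption: prefer the node with the most free CPU, then memory.
    best = max(candidates, key=lambda n: (n["free_cpu"], n["free_mem"]))
    return best["name"]


print(schedule({"cpu": 1.0, "mem": 1024, "node_selector": {"region": "east"}}, NODES))
print(schedule({"cpu": 1.0, "mem": 1024}, NODES))
print(schedule({"cpu": 8.0, "mem": 1024}, NODES))
```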

- #28:
  - Speaker:
    - OpenShift’s service layer enables application components to easily communicate with one another
    - The service layer provides for internal load balancing as well as discovery and code reusability
  - Discussion:
    - The service layer is used to connect application components together
    - OpenShift automatically injects some service information into running containers to provide for ease of discovery
    - Services provide for simple internal load balancing across application components
  - Transcript: OpenShift’s service layer is how application components communicate with one another. A front-end web service would connect to database instances by communicating with the database service. OpenShift would automatically handle load balancing across the database instances. Service information is injected into running containers and provides for ease of application
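A small model may help show how a service gives clients one stable name while spreading requests over several backing Pods. The `Service` class, the IP addresses, and the round-robin policy are simplifications assumed for this sketch. The `<NAME>_SERVICE_HOST` / `<NAME>_SERVICE_PORT` variable naming follows the convention Kubernetes documents for service environment variables.

```python
import itertools


class Service:
    """Toy model of a service: a stable address in front of changing endpoints."""

    def __init__(self, name, cluster_ip, port, endpoints):
        self.name = name
        self.cluster_ip = cluster_ip
        self.port = port
        self._cycle = itertools.cycle(endpoints)

    def pick_endpoint(self):
        """Round-robin over endpoints (a simplification of real load balancing)."""
        return next(self._cycle)

    def injected_env(self):
        """Environment variables a container could use to discover this service."""
        prefix = self.name.upper().replace("-", "_")
        return {
            f"{prefix}_SERVICE_HOST": self.cluster_ip,
            f"{prefix}_SERVICE_PORT": str(self.port),
        }


db = Service("database", "172.30.0.10", 5432, ["10.1.0.5:5432", "10.1.1.7:5432"])
picks = [db.pick_endpoint() for _ in range(3)]
env = db.injected_env()
print(picks)
print(env)
```

A front-end container would read the injected variables and connect to that single address, leaving the choice of database instance to the service layer.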

- #29:
  - Speaker:
    - The Master is responsible for monitoring the health of Pods, and for automatically scaling them as desired
    - Users configure Pod probes for liveness and readiness
    - Currently pods may be automatically scaled based on CPU utilization
  - Discussion:
    - The Master handles checking the health of pods by executing the defined liveness and readiness probes
    - Probes can be easily defined by users
    - Autoscaling is available today against CPU only
    - The next slides will depict a health event for you to describe
  - Transcript: The OpenShift Master is capable of monitoring application health via user-defined Pod probes. The Master is also capable of scaling out Pods based on CPU utilization metrics.
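The CPU-based autoscaling mentioned above can be sketched with the ratio rule documented for the Kubernetes Horizontal Pod Autoscaler: scale the replica count by observed utilization over target utilization and round up. Whether the OpenShift release discussed in this talk applies exactly this rule is an assumption, and the function name and the default bounds are invented for the example.

```python
import math


def desired_replicas(current_replicas, current_cpu_pct, target_cpu_pct,
                     min_replicas=1, max_replicas=10):
    """Scale replicas in proportion to observed vs. target CPU utilization."""
    raw = math.ceil(current_replicas * current_cpu_pct / target_cpu_pct)
    # Clamp to the configured bounds (the default bounds here are illustrative).
    return max(min_replicas, min(max_replicas, raw))


print(desired_replicas(2, 90, 60))   # load above target: scale out
print(desired_replicas(4, 20, 80))   # load below target: scale in
print(desired_replicas(5, 200, 50))  # capped by max_replicas
```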

- #30:
  - Speaker:
    - What happens when the Master sees that a Pod is failing its probes?
    - What happens if containers inside the Pod exited because of a crash or other issue?
  - Discussion:
    - Here you are describing the beginning of how the Master detects failures and remediates them
  - Transcript: This first slide allows you to ask the question “What happens when…?”. The next slide will describe the remediation process for failed Pods.

- #31:
  - Speaker:
    - The Master will automatically restart Pods that have failed probes or possibly exited due to container crashes.
    - Pods that fail too often are marked as bad and are temporarily not restarted.
    - OpenShift’s service layer makes sure that it only sends traffic to healthy Pods, maintaining component availability -- all orchestrated by the Master automatically.
  - Discussion:
    - The Master will restart Pods that are determined to be failing, for whatever reason.
    - The Service layer is automatically updated with only healthy Pods as endpoints.
    - All of this is done automatically.
    - Pods that continually fail are not restarted for a while. This is a “crash loop back-off”.
  - Transcript: The OpenShift Master is capable of remediating Pod failures automatically. It manages the traffic through the Service layer to ensure application availability and handles restarting Pods, all automatically and without any user intervention.
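The two remediation behaviours described above, backing off on repeatedly failing Pods and keeping only healthy Pods behind the service, can be sketched as follows. The exponential schedule, the 10-second base, the 300-second cap, and the `ready` flag are illustrative assumptions, not values taken from the talk.

```python
def restart_delay(failures, base=10, cap=300):
    """Seconds to wait before the next restart: doubles per failure, up to a cap."""
    if failures <= 0:
        return 0
    return min(cap, base * 2 ** (failures - 1))


def healthy_endpoints(pods):
    """Only Pods passing their readiness probe receive service traffic."""
    return [p["ip"] for p in pods if p["ready"]]


delays = [restart_delay(n) for n in range(1, 7)]
print(delays)

pods = [
    {"ip": "10.1.0.5", "ready": True},
    {"ip": "10.1.1.7", "ready": False},  # failing its probe, so it is skipped
    {"ip": "10.1.2.9", "ready": True},
]
print(healthy_endpoints(pods))
```

The growing delay is what the notes call a "crash loop back-off": a Pod that keeps failing is retried less and less often instead of being restarted in a tight loop.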

- #32:
  - Speaker:
    - Applications are only as useful as the data they can manipulate.
    - Docker containers are natively ephemeral - data is not saved when containers are restarted or created.
    - OpenShift provides a persistent storage subsystem that will automatically connect real-world storage to the right Pods, allowing for stateful applications to be used on the platform.
    - A wide array of persistent storage types are usable, from raw devices (iSCSI, FC) to enterprise storage (NFS) to cloud-type options (Gluster/Ceph, EBS, pDisk, etc)
  - Discussion:
    - OpenShift’s persistent volume system automatically connects storage to Pods
    - The persistent volume system allows stateful apps to be used on the platform
    - OpenShift provides flexibility in the types of storage that Pods can consume
  - Transcript: OpenShift’s persistent volume system allows end users to consume a wide array of storage types to enable stateful applications to be run on the platform. Whether OpenShift is running locally in the datacenter or in the cloud, there are storage options that can be used.
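One way to picture how storage is "automatically connected" to Pods is a matching step between a storage request and a pool of pre-provisioned volumes. The volume records, the access-mode strings, and the smallest-fit rule in `bind` are assumptions made for this sketch and should not be read as the platform's actual binding algorithm.

```python
def bind(claim, volumes):
    """Bind a claim to the smallest unbound volume that satisfies it."""
    fits = [
        v for v in volumes
        if not v["bound"]
        and v["size_gb"] >= claim["size_gb"]
        and claim["mode"] in v["modes"]
    ]
    if not fits:
        return None  # the claim stays pending until suitable storage appears
    chosen = min(fits, key=lambda v: v["size_gb"])
    chosen["bound"] = True
    return chosen["name"]


# Hypothetical pool backed by different storage types.
volumes = [
    {"name": "nfs-100", "size_gb": 100, "modes": {"ReadWriteMany"}, "bound": False},
    {"name": "ebs-20", "size_gb": 20, "modes": {"ReadWriteOnce"}, "bound": False},
    {"name": "ebs-50", "size_gb": 50, "modes": {"ReadWriteOnce"}, "bound": False},
]

first = bind({"size_gb": 10, "mode": "ReadWriteOnce"}, volumes)
second = bind({"size_gb": 10, "mode": "ReadWriteOnce"}, volumes)
third = bind({"size_gb": 500, "mode": "ReadWriteMany"}, volumes)
print(first, second, third)
```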

- #33:
  - Speaker:
    - Not every consumer of applications exists inside the OpenShift platform. External clients need to be able to access things running inside OpenShift
    - The routing layer is a close partner to the service layer, providing automated load balancing to Pods for external clients
    - The routing layer is pluggable and extensible if a hardware or non-OpenShift software router is desired
  - Discussion:
    - The OpenShift router runs in pods on the platform itself, but receives traffic from the outside world and proxies it to the right pods
    - The router uses the service endpoint information to determine where to load balance traffic, but it does not send traffic through the service layer
    - The router is built with HAProxy, but is a pluggable solution. Red Hat currently supports F5 integration as another option.
  - Transcript: OpenShift’s routing layer provides access for external clients to reach applications running on the platform. The routing layer runs in Pods inside OpenShift, and features similar load balancing and auto-routing around unhealthy Pods as the Service layer.
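The router behaviour described above (match an external hostname, look up the service's endpoints, then proxy straight to a healthy Pod) can be modelled in a few lines. The hostnames, tables, and `route` function are hypothetical; the real router is HAProxy-based and configured very differently.

```python
# Hypothetical routing table: external hostname -> service name.
ROUTES = {"app.example.com": "web"}

# Endpoint information the router reads from the service.
ENDPOINTS = {
    "web": [
        {"ip": "10.1.1.7", "ready": False},
        {"ip": "10.1.0.5", "ready": True},
    ],
}


def route(host):
    """Return the Pod IP an external request for `host` would be proxied to."""
    service = ROUTES.get(host)
    if service is None:
        return None  # no route defined for this hostname
    # Use the service's endpoint list, but send traffic directly to a Pod.
    ready = [e["ip"] for e in ENDPOINTS.get(service, []) if e["ready"]]
    return ready[0] if ready else None


print(route("app.example.com"))
print(route("unknown.example.com"))
```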

- #34:
  - Speaker:
    - All users, either operators, developers, or application administrators, access OpenShift through the same standard interfaces
    - Ultimately, the Web UI, CLI and IDEs all go through the authenticated and RBAC-controlled API
    - Users do not need system-level access to any of the OpenShift hosts -- even for complicated debugging and troubleshooting.
    - Continuous Integration (CI) and Continuous Deployment (CD) systems can be easily integrated with OpenShift through these interfaces, too.
    - Operators and Administrators can utilize existing management and monitoring tooling in many ways.
  - Discussion:
    - All interaction with the OpenShift environment and its tools goes through the API and is controlled by the defined RBAC settings.
    - The tools and the API provide for ways to access application instances -- even for things like shells and terminals for debugging
    - Existing RHEL management tooling and many existing monitoring suites can be integrated with OpenShift
    - CI/CD solutions can be integrated with OpenShift to provide for complete automated lifecycle management.
  - Transcript: Interacting with OpenShift boils down to interacting with the API, no matter what tools are being used. CI, CD, management, monitoring and other tooling can all go through the OpenShift API for automation purposes. And, since OpenShift is built on top of RHEL, existing systems management and systems monitoring tools can be used, too.

- #39: OCP Meetup: Grant Shipley - director of OCP platform; Burr Sutter - director of developer experience

- #40: DevFestMN Link Traffic Stats: http://bit.do/devfestmn-
