DF1 - Py - Kalaidin - Introduction to Word Embeddings with Python


Presentation from Moscow Data Fest #1, September 12. Moscow Data Fest is a free one-day event that brings together Data Scientists for sessions on both theory and practice. Link: http://www.meetup.com/Moscow-Data-Fest/


![credits: [x]](https://image.slidesharecdn.com/df1-py-2-kalaidin-introductiontowordembeddingswithpython-150921141354-lva1-app6891/85/DF1-Py-Kalaidin-Introduction-to-Word-Embeddings-with-Python-15-320.jpg)

![here comes word2vec

Distributed Representations of Words and Phrases and their Compositionality, Mikolov et al: [paper]](https://image.slidesharecdn.com/df1-py-2-kalaidin-introductiontowordembeddingswithpython-150921141354-lva1-app6891/85/DF1-Py-Kalaidin-Introduction-to-Word-Embeddings-with-Python-24-320.jpg)

![word2vec Explained: Deriving Mikolov et al.’s Negative-Sampling

Word-Embedding Method, Goldberg et al, 2014 [arxiv]](https://image.slidesharecdn.com/df1-py-2-kalaidin-introductiontowordembeddingswithpython-150921141354-lva1-app6891/85/DF1-Py-Kalaidin-Introduction-to-Word-Embeddings-with-Python-38-320.jpg)

![deep walk:

DeepWalk: Online Learning of Social

Representations [link]](https://image.slidesharecdn.com/df1-py-2-kalaidin-introductiontowordembeddingswithpython-150921141354-lva1-app6891/85/DF1-Py-Kalaidin-Introduction-to-Word-Embeddings-with-Python-67-320.jpg)

![predicting hashtags

interesting read: #TAGSPACE: Semantic

Embeddings from Hashtags [link]](https://image.slidesharecdn.com/df1-py-2-kalaidin-introductiontowordembeddingswithpython-150921141354-lva1-app6891/85/DF1-Py-Kalaidin-Introduction-to-Word-Embeddings-with-Python-69-320.jpg)

DF1 - Py - Kalaidin - Introduction to Word Embeddings with Python

- 1. Introduction to word embeddings. Pavel Kalaidin, @facultyofwonder. Moscow Data Fest, September 12th, 2015

- 5. лойс

- 6. "decent, лойс" / "лойс for the song" / "I won't give a лойс on principle" / "mutual лойсы" / "лойс if you agree". What is the meaning of лойс?

- 8. кек

- 9. "кек, or what?" / "кек)))))))" / "well, you're a кек". What is the meaning of кек?

- 12. simple and flexible platform for understanding text and probably not messing up

- 13. one-hot encoding? [1 0 0 0 ... 0] (a single 1 followed by a long run of zeros)
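To make the one-hot idea concrete, here is a minimal sketch. The four-word vocabulary and the `one_hot` helper are assumptions for illustration, not taken from the slides. It shows why the question mark on the slide is warranted: any two different words get a dot product of zero, so the encoding carries no notion of similarity.

```python
def one_hot(word, vocab):
    """Return a list with a single 1 at the word's vocabulary index."""
    vec = [0] * len(vocab)
    vec[vocab.index(word)] = 1
    return vec

# Hypothetical toy vocabulary; a real one has tens of thousands of entries.
vocab = ["king", "queen", "man", "woman"]

king = one_hot("king", vocab)
queen = one_hot("queen", vocab)

# Dot product between two different one-hot vectors is always zero,
# so "king" is exactly as far from "queen" as from any other word.
dot = sum(a * b for a, b in zip(king, queen))
```

The vector length equals the vocabulary size, which is what makes this representation large and sparse.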

- 14. co-occurrence matrix (recall: word-document co-occurrence matrix for LSA)

- 15. credits: [x]

- 16. from entire document to window (length 5-10)
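A window-based co-occurrence count could be sketched roughly as follows. The function name `cooccurrence_counts`, the symmetric window, and the example sentence are assumptions for illustration; the slides only state that the context shrinks from the whole document to a window of 5-10 words.

```python
from collections import Counter

def cooccurrence_counts(tokens, window=5):
    """Count how often each (word, context) pair appears within `window` positions."""
    counts = Counter()
    for i, word in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:  # a word is not its own context
                counts[(word, tokens[j])] += 1
    return counts

# Hypothetical toy corpus with a small window so the counts are easy to check.
tokens = "the cat sat on the mat".split()
counts = cooccurrence_counts(tokens, window=2)
```

Because the window is symmetric, the resulting counts are symmetric too: `counts[(a, b)]` equals `counts[(b, a)]`.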

- 17. still seems suboptimal -> big, sparse, etc.

- 18. lower dimensions, we want dense vectors (say, 25-1000)

- 19. How?

- 22. lots of memory?

- 23. idea: directly learn low-dimensional vectors

- 24. here comes word2vec Distributed Representations of Words and Phrases and their Compositionality, Mikolov et al: [paper]

- 25. idea: instead of capturing co-occurrence counts, predict surrounding words

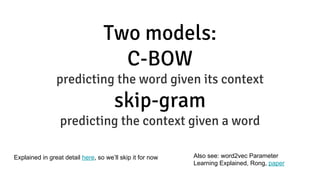

- 26. Two models: CBOW (predicting the word given its context) and skip-gram (predicting the context given a word). Explained in great detail here, so we’ll skip it for now. Also see: word2vec Parameter Learning Explained, Rong, paper
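The difference between the two models shows up in how training examples are built from a sentence. The sketch below only generates those examples, not the neural network itself; the helper names and the toy sentence are assumptions for illustration.

```python
def skipgram_pairs(tokens, window=2):
    """Yield (center, context) pairs: skip-gram predicts the context from the center word."""
    for i, center in enumerate(tokens):
        for j in range(max(0, i - window), min(len(tokens), i + window + 1)):
            if j != i:
                yield center, tokens[j]

def cbow_examples(tokens, window=2):
    """Yield (context_words, center): CBOW predicts the center word from its context."""
    for i, center in enumerate(tokens):
        context = [tokens[j]
                   for j in range(max(0, i - window), min(len(tokens), i + window + 1))
                   if j != i]
        yield context, center

# Hypothetical toy sentence.
tokens = "we want dense vectors".split()
pairs = list(skipgram_pairs(tokens, window=1))
examples = list(cbow_examples(tokens, window=1))
```

Skip-gram produces one training pair per context word, while CBOW produces one example per position with the whole context grouped together.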

- 28. CBOW: several times faster than skip-gram, slightly better accuracy for frequent words. Skip-gram: works well with a small amount of data, represents rare words or phrases well.

- 29. Examples?

- 36. $W_{\text{woman}} - W_{\text{man}} = W_{\text{queen}} - W_{\text{king}}$ (classic example)
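The analogy can be checked mechanically by solving for the fourth word: take king + woman - man and look for the nearest remaining vector by cosine similarity. The three-dimensional vectors below are hand-made assumptions (roughly "person", "royalty", "female"), chosen so the arithmetic is easy to follow; learned embeddings satisfy the relation only approximately.

```python
import math

def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

# Hypothetical hand-made vectors, not output of any trained model.
vectors = {
    "man":    [1.0, 0.0, 0.0],
    "woman":  [1.0, 0.0, 1.0],
    "king":   [1.0, 1.0, 0.0],
    "queen":  [1.0, 1.0, 1.0],
    "prince": [1.0, 0.5, 0.0],
}

def analogy(a, b, c, vectors):
    """Return the word d that best satisfies b - a = d - c, excluding the query words."""
    target = [vb - va + vc for va, vb, vc in zip(vectors[a], vectors[b], vectors[c])]
    candidates = [w for w in vectors if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(vectors[w], target))

answer = analogy("man", "woman", "king", vectors)
```

Excluding the query words from the candidates is a common convention, since the nearest neighbour of the target is otherwise often one of the inputs.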

- 38. word2vec Explained: Deriving Mikolov et al.’s Negative-Sampling Word-Embedding Method, Goldberg and Levy, 2014 [arxiv]

- 39. all done with gensim: github.com/piskvorky/gensim/

- 40. ...failing to take advantage of the vast amount of repetition in the data

- 41. so back to co-occurrences

- 42. GloVe, for Global Vectors. Pennington et al, 2014: nlp.stanford.edu/pubs/glove.pdf

- 43. Ratios seem to cancel noise

- 44. The gist: model ratios with vectors
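A small sketch of what "ratios" means here: compare the conditional probability of a context word given two different target words. The counts below are hypothetical numbers made up for illustration, not corpus statistics, and the helper names are assumptions.

```python
# Hypothetical co-occurrence counts X[word][context]; not taken from a real corpus.
X = {
    "ice":   {"solid": 80, "gas": 5,  "water": 300, "fashion": 2},
    "steam": {"solid": 8,  "gas": 60, "water": 290, "fashion": 2},
}

def cond_prob(word, context):
    """P(context | word), estimated from the counts."""
    return X[word][context] / sum(X[word].values())

def ratio(context, a="ice", b="steam"):
    """P(context | a) / P(context | b)."""
    return cond_prob(a, context) / cond_prob(b, context)

ratios = {context: ratio(context) for context in X["ice"]}
```

With these toy counts the ratio is well above 1 for "solid", well below 1 for "gas", and close to 1 for "water" and "fashion": context words that relate to both targets or to neither cancel out, and only the discriminative ones stand out.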

- 45. The model

- 49. recall:

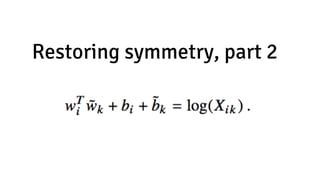

- 51. Restoring symmetry, part 2

- 52. Least squares problem it is now
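The least squares objective from the GloVe paper sums, over nonzero co-occurrence counts, a weighted squared difference between the dot product of the two vectors (plus biases) and the log count. Below is a minimal sketch of one term of that sum; the function names are assumptions, while `x_max=100` and `alpha=0.75` are the values reported by Pennington et al.

```python
import math

def weight(x, x_max=100.0, alpha=0.75):
    """GloVe weighting f(x): damps rare pairs and caps frequent ones at 1."""
    return (x / x_max) ** alpha if x < x_max else 1.0

def pair_loss(w_i, w_j, b_i, b_j, x_ij):
    """Weighted squared error for one nonzero co-occurrence count x_ij."""
    dot = sum(a * b for a, b in zip(w_i, w_j))
    diff = dot + b_i + b_j - math.log(x_ij)
    return weight(x_ij) * diff ** 2

# Hypothetical vectors and count, for illustration only.
loss = pair_loss([0.1, 0.2], [0.3, 0.4], 0.0, 0.0, 10)
```

The full objective is the sum of `pair_loss` over all word pairs with a nonzero count, so zero entries of the sparse matrix never need to be visited.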

- 53. SGD->AdaGrad
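For readers who have not met AdaGrad: it is SGD with a per-parameter learning rate that shrinks as squared gradients accumulate. The sketch below applies it to a simple quadratic rather than to the GloVe objective; the function name, the test function, and the hyperparameters are assumptions for illustration.

```python
import math

def adagrad_step(params, grads, cache, lr=0.1, eps=1e-8):
    """Update params in place; cache holds the running sum of squared gradients."""
    for k in range(len(params)):
        cache[k] += grads[k] ** 2
        params[k] -= lr * grads[k] / (math.sqrt(cache[k]) + eps)

# Minimise f(x, y) = x^2 + 10 * y^2, whose two coordinates have very different curvature.
params = [1.0, 1.0]
cache = [0.0, 0.0]
for _ in range(100):
    grads = [2 * params[0], 20 * params[1]]
    adagrad_step(params, grads, cache, lr=0.5)
```

Dividing by the accumulated gradient magnitude rescales each coordinate separately, so the steep and the shallow direction make similar progress. This is helpful when some words (and hence some parameters) are updated far more often than others.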

- 54. ok, Python code

- 56. two sets of vectors (input and context) + bias: average/sum/drop

- 57. complexity $|V|^2$

- 65. Abusing models

- 67. deep walk: DeepWalk: Online Learning of Social Representations [link]

- 68. user interests. Paragraph vectors: cs.stanford.edu/~quocle/paragraph_vector.pdf

- 69. predicting hashtags interesting read: #TAGSPACE: Semantic Embeddings from Hashtags [link]

- 70. RusVectōrēs: distributional semantic models for Russian: ling.go.mail.ru/dsm/en/

- 72. corpus matters

- 73. building block for bigger models ╰(*´︶`*)╯

- 74. </slides>
