Dimension reduction techniques [Feature Selection]

- 1. Dimension Reduction Techniques. By: Ms. Aakanksha Jain. Email: [email protected]

- 3. Content: What is dimensionality reduction?; Feature selection and feature extraction; Techniques to achieve dimension reduction; Backward feature elimination and forward feature selection technique; Hands-on session on feature selection; Why dimension reduction is important?; Basic understanding of feature selection; Python implementation on JupyterLab

- 4. What is dimensionality reduction? Dimensionality reduction, or dimension reduction, is the transformation of data from a high-dimensional space into a low-dimensional space so that the low-dimensional representation retains some meaningful properties of the original data. Source: Internet

- 5. Curse of Dimensionality (diagram labels: data collection for ML model, internet resources, heterogeneous data, data ingestion, data storage, data pre-processing, feature engineering, data for model training)

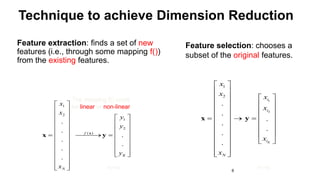

- 6. Techniques to achieve Dimension Reduction. Feature selection: chooses a subset of the original features, $\mathbf{x} = [x_1, x_2, \ldots, x_N]^T \rightarrow \mathbf{y} = [x_{i_1}, x_{i_2}, \ldots, x_{i_K}]^T$, with $K \ll N$. Feature extraction: finds a set of new features (i.e., through some mapping $f()$) from the existing features, $\mathbf{x} = [x_1, x_2, \ldots, x_N]^T \xrightarrow{f(\mathbf{x})} \mathbf{y} = [y_1, y_2, \ldots, y_K]^T$, with $K \ll N$. The mapping $f()$ could be linear or non-linear.
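
The difference between the two approaches can be sketched in a few lines of plain Python. This is only an illustration: the input vector, the chosen indices, and the weight matrix `W` are made-up values, and the linear map stands in for whatever mapping f() a real extraction method would learn.

```python
# Toy illustration (made-up numbers): N = 4 original features, K = 2.
x = [5.0, 1.0, 3.0, 2.0]

# Feature selection: keep a subset of the original features, unchanged.
selected_idx = [0, 2]  # hypothetical choice of the indices i_1, i_2
y_selected = [x[i] for i in selected_idx]

# Feature extraction: build K new features through a mapping f().
# Here f() is linear, y = W x, with a hypothetical K x N weight matrix W.
W = [
    [0.5, 0.5, 0.0, 0.0],
    [0.0, 0.0, 0.5, 0.5],
]
y_extracted = [sum(w * xi for w, xi in zip(row, x)) for row in W]

print(y_selected)   # [5.0, 3.0]
print(y_extracted)  # [3.0, 2.5]
```

Selected features keep their original meaning, while extracted features are combinations of the originals.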

- 7. Feature Selection Techniques. Filter method: features are selected based on their scores in various statistical tests. Wrapper method: selects the combination of features that produces the best model result. Embedded method: combines the qualities of the filter and wrapper methods.
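
As a rough sketch of the filter idea, the snippet below ranks features by the absolute Pearson correlation with the target and keeps the top k. The choice of correlation as the statistical score, the helper names `pearson` and `filter_select`, and the toy data are all assumptions made for illustration; other scores (chi-square, mutual information, and so on) are used in the same way.

```python
from math import sqrt

def pearson(a, b):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((p - mean_a) * (q - mean_b) for p, q in zip(a, b))
    var_a = sum((p - mean_a) ** 2 for p in a)
    var_b = sum((q - mean_b) ** 2 for q in b)
    return cov / sqrt(var_a * var_b)

def filter_select(features, target, k):
    """Score each feature independently of any model and keep the k best."""
    scores = {name: abs(pearson(col, target)) for name, col in features.items()}
    ranked = sorted(scores, key=scores.get, reverse=True)
    return ranked[:k], scores

# Made-up toy data: two informative features and one uninformative feature.
features = {
    "f_strong": [1, 2, 3, 4, 5],
    "f_weak": [2, 1, 2, 1, 2],
    "f_inverse": [5, 4, 3, 2, 1.5],
}
target = [2, 4, 6, 8, 10]

selected, scores = filter_select(features, target, k=2)
print(selected)  # ['f_strong', 'f_inverse']
```

No model is trained here, which is what separates a filter method from a wrapper method such as backward feature elimination.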

- 8. Backward Feature Elimination. Initially we start with the complete dataset (all features and the dependent variable). The significance of each feature is then checked iteratively: a feature is removed, the model is retrained, and the impact on model performance is checked. The most significant features are kept.

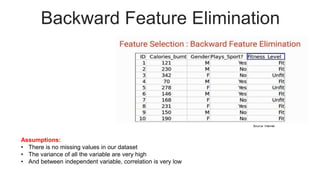

- 9. Backward Feature Elimination Assumptions: • There are no missing values in the dataset • The variance of all the variables is very high • The correlation between the independent variables is very low
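
These assumptions can be checked before running the elimination. The sketch below is one possible way to do it with pandas; the helper `check_assumptions`, the 0.8 correlation threshold, and the toy columns `x1`, `x2`, `x3` are hypothetical and not taken from the slides.

```python
import pandas as pd

def check_assumptions(df, corr_threshold=0.8):
    """Report missing values, per-column variance, and highly correlated pairs."""
    missing = int(df.isna().sum().sum())   # total number of missing cells
    variances = df.var().to_dict()         # sample variance of each column
    corr = df.corr().abs()                 # absolute pairwise correlations
    cols = list(df.columns)
    correlated_pairs = [
        (a, b)
        for i, a in enumerate(cols)
        for b in cols[i + 1:]
        if corr.loc[a, b] > corr_threshold
    ]
    return missing, variances, correlated_pairs

# Made-up toy data: x2 is almost a multiple of x1, x3 is unrelated.
toy = pd.DataFrame({
    "x1": [1, 2, 3, 4, 5],
    "x2": [2, 4, 6, 8, 11],
    "x3": [5, 1, 4, 2, 3],
})

missing, variances, correlated_pairs = check_assumptions(toy)
print(missing)            # 0
print(correlated_pairs)   # [('x1', 'x2')]
```

A non-empty list of correlated pairs or a non-zero missing count suggests the data needs cleaning before the elimination steps are applied.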

- 10. Backward Feature Elimination Step-I: First, train the model using all the variables, say n. Step-II: Next, calculate the performance of the model. Accuracy: 92%

- 11. Backward Feature Elimination Step-III: Next, eliminate one variable (Calories_brunt) and train the model with the remaining n-1 variables. Accuracy: 90%

- 12. Backward Feature Elimination Step-IV: Again, restore the previously eliminated variable, eliminate a different variable (Gender), and train the model with the remaining n-1 variables. Accuracy: 91.6%

- 13. Backward Feature Elimination Step-V: Again, restore the previously eliminated variable, eliminate a different variable (Play_Sport?), and train the model with the remaining n-1 variables. Accuracy: 88%

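- 14. Backward Feature Elimination Step-VI: When done, identify the variable whose elimination had the least impact on the model's performance (here Gender, with 91.6% against the 92% baseline) and drop it. The procedure is then repeated on the remaining variables until no more variables can be dropped.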
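
One round of the steps above can be sketched as follows, using the accuracies quoted on the slides. The helper name `least_impactful_feature` is hypothetical; in practice each accuracy would come from retraining the model without the corresponding variable.

```python
def least_impactful_feature(baseline, score_without):
    """Return the feature whose removal lowers the score the least."""
    drops = {name: baseline - score for name, score in score_without.items()}
    return min(drops, key=drops.get), drops

# Accuracy with all n variables (Step-II).
baseline_accuracy = 0.92

# Accuracy after removing one variable at a time (Steps III to V).
accuracy_without = {
    "Calories_brunt": 0.90,
    "Gender": 0.916,
    "Play_Sport?": 0.88,
}

feature_to_drop, drops = least_impactful_feature(baseline_accuracy, accuracy_without)
print(feature_to_drop)  # Gender
```

Removing Gender costs the least accuracy, so it is the variable eliminated in this round.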

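- 15. Hands-on

| ID | season | holiday | workingday | weather | temp | humidity | windspeed | count |
|---|---|---|---|---|---|---|---|---|
| AB101 | 1 | 0 | 0 | 1 | 9.84 | 81 | 0 | 16 |
| AB102 | 1 | 0 | 0 | 1 | 9.02 | 80 | 0 | 40 |
| AB103 | 1 | 0 | 0 | 1 | 9.02 | 80 | 0 | 32 |
| AB104 | 1 | 0 | 0 | 1 | 9.84 | 75 | 0 | 13 |
| AB105 | 1 | 0 | 0 | 1 | 9.84 | 75 | 0 | 1 |
| AB106 | 1 | 0 | 0 | 2 | 9.84 | 75 | 6.0032 | 1 |
| AB107 | 1 | 0 | 0 | 1 | 9.02 | 80 | 0 | 2 |
| AB108 | 1 | 0 | 0 | 1 | 8.2 | 86 | 0 | 3 |
| AB109 | 1 | 0 | 0 | 1 | 9.84 | 75 | 0 | 8 |
| AB110 | 1 | 0 | 0 | 1 | 13.12 | 76 | 0 | 14 |
| AB111 | 1 | 0 | 0 | 1 | 15.58 | 76 | 16.9979 | 36 |
| AB112 | 1 | 0 | 0 | 1 | 14.76 | 81 | 19.0012 | 56 |
| AB113 | 1 | 0 | 0 | 1 | 17.22 | 77 | 19.0012 | 84 |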

- 16. Python Code

```python
# importing the libraries
import pandas as pd

# reading the file
data = pd.read_csv('backward_feature_elimination.csv')

# first 5 rows of the data
data.head()

# shape of the data
data.shape

# creating the training data
X = data.drop(['ID', 'count'], axis=1)
y = data['count']

# checking shape
X.shape, y.shape

# installation of mlxtend (notebook shell command)
!pip install mlxtend
```

- 17. Python Code

```python
# importing the libraries
from mlxtend.feature_selection import SequentialFeatureSelector as sfs
from sklearn.linear_model import LinearRegression

# setting parameters to apply Backward Feature Elimination
lreg = LinearRegression()
sfs1 = sfs(lreg, k_features=4, forward=False, verbose=1, scoring='neg_mean_squared_error')

# apply Backward Feature Elimination
sfs1 = sfs1.fit(X, y)

# checking selected features
feat_names = list(sfs1.k_feature_names_)
print(feat_names)

# setting new dataframe (copy to avoid pandas' SettingWithCopyWarning)
new_data = data[feat_names].copy()
new_data['count'] = data['count']
```
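
For readers without mlxtend, scikit-learn (version 0.24 or later) ships its own `SequentialFeatureSelector` with a `direction="backward"` option. The sketch below is an alternative to the slide's code, not the author's method, and it runs on synthetic data generated here (the arrays `X_toy` and `y_toy` are made up) so that the expected outcome is known in advance.

```python
import numpy as np
from sklearn.feature_selection import SequentialFeatureSelector
from sklearn.linear_model import LinearRegression

# Synthetic data: only columns 0 and 2 influence the target.
rng = np.random.default_rng(0)
X_toy = rng.normal(size=(200, 4))
y_toy = 3.0 * X_toy[:, 0] - 2.0 * X_toy[:, 2] + 0.1 * rng.normal(size=200)

# Backward elimination: start from all 4 features and drop them one at a
# time, keeping the subset with the best cross-validated score.
selector = SequentialFeatureSelector(
    LinearRegression(),
    n_features_to_select=2,
    direction="backward",
    scoring="neg_mean_squared_error",
    cv=5,
)
selector.fit(X_toy, y_toy)

# Boolean mask over the original columns: True means the feature is kept.
selected_mask = selector.get_support()
print(selected_mask)
```

The mask plays the same role as `k_feature_names_` in the mlxtend version: it identifies which original columns survive the elimination.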

- 18. Python Code

```python
# first five rows of the new data
new_data.head()

# shape of new and original data
new_data.shape, data.shape
```

Congratulations! We have successfully implemented backward feature elimination.

- 26. Final Output
