Distributed-ness: Distributed computing & the clouds

A discussion of distributed apps and the cloud resources available to support them, including some discussion of the XMPP/Jabber-based messaging system we use at Koordinates. Part of the seminar series for the Wellington Summer of Code programme.

Recommended

App Engine On Air: Munich

By dion. This is the presentation that I gave on the European On Air tour in Munich, hence the footy pieces. Much of the presentation walked through a live application, a port of the addressbook app to App Engine, which lives on Google Code.

Devfest SouthWest, Nigeria - Firebase

By Moyinoluwa Adeyemi. This presentation contains an introduction to Firebase and walks through a code lab for creating a real-time Android chat application with offline functionality.

Firebase Adventures - Real time platform for your apps

By Juarez Filho. Firebase is a powerful platform for your projects, with built-in support for web and native apps. Features include real-time data, user authentication, static hosting, mobile offline support, a REST API, integrations with Zapier, and much more.

Check out this presentation for a short getting-started guide to this platform, and let's create extraordinary real-time apps with Firebase. \o/

[AWS Dev Day] Hands-on Workshop | Following Along with GluonCV, a Computer Vision Deep Learning Toolkit for Everyone

By Amazon Web Services Korea. GluonCV is Apache MXNet's deep learning toolkit specialized for computer vision. In this hands-on session, you use pre-trained models of the latest computer vision algorithms provided by GluonCV to solve various problems such as image recognition, object detection, and segmentation. You can follow the entire process, from installing GluonCV through model training and deployment.

"How to optimize the architecture of your platform" by Julien Simon

By TheFamily. You want to launch your online platform, and from a technical perspective you are wondering where to start and how to optimize your architecture?

Cloud computing offers several advantages, such as scaling your app or your website whenever you want. The hardest part is knowing where to begin!

During this 45-minute workshop, Julien Simon will share best practices for scaling your platform from zero to millions of users. He will present:

- How to efficiently combine the tools Amazon Web Services provides

- How to set up the best architecture for your platform

- How to scale your infrastructure in the Cloud

Before joining AWS, Julien was CTO of Viadeo and Aldebaran Robotics. He also spent more than 3 years as VP Engineering at Criteo. He is particularly interested in architecture, performance, deployment, scalability and data.

2011 august-gdd-mexico-city-rest-json-oauth

By ikailan. This document discusses REST, JSON, and OAuth. It provides an overview of each technology and how they work together. REST uses HTTP verbs like GET, POST, PUT, and DELETE to invoke remote methods. JSON is a lightweight data format that is easy to parse and generate. OAuth allows third-party applications to access user data held by another service, such as Google, without needing the user's password. It works by having the user grant the application access during an "OAuth dance."
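To make the REST/JSON pairing concrete, here is a minimal sketch (not taken from the talk) of how HTTP verbs on a resource map onto create/read/update/delete operations, with JSON as the payload format. The `BookResource` class and the `/books` path are hypothetical, and requests are simulated as plain method calls rather than going through a real HTTP server.

```python
import json


class BookResource:
    """Hypothetical REST resource: maps HTTP verbs on /books to CRUD operations."""

    def __init__(self) -> None:
        self._items: dict[int, dict] = {}
        self._next_id = 1

    def handle(self, method: str, path: str, body: str = "") -> tuple[int, str]:
        # Paths look like "/books" (the collection) or "/books/<id>" (one item).
        parts = path.strip("/").split("/")
        if parts[0] != "books":
            return 404, json.dumps({"error": "not found"})
        item_id = int(parts[1]) if len(parts) > 1 else None

        if method == "POST" and item_id is None:
            # Create: the server assigns the new identifier.
            data = json.loads(body)
            data["id"] = self._next_id
            self._items[self._next_id] = data
            self._next_id += 1
            return 201, json.dumps(data)
        if method == "GET" and item_id is None:
            # Read the whole collection.
            return 200, json.dumps(list(self._items.values()))
        if item_id not in self._items:
            return 404, json.dumps({"error": "not found"})
        if method == "GET":
            return 200, json.dumps(self._items[item_id])
        if method == "PUT":
            # Update: PUT sends the full new representation of the resource.
            data = json.loads(body)
            data["id"] = item_id
            self._items[item_id] = data
            return 200, json.dumps(data)
        if method == "DELETE":
            del self._items[item_id]
            return 204, ""
        return 405, json.dumps({"error": "method not allowed"})
```

For example, `BookResource().handle("POST", "/books", json.dumps({"title": "Dune"}))` returns status 201 together with the created item, including its server-assigned `id`.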

Cloud computing infrastructure

By sinhhn. Cloud computing infrastructure provides on-demand computing resources and platforms through utility computing models. The document defines public and private clouds and compares two major cloud platforms, Amazon EC2 and Google AppEngine. Both platforms provide scalable, reliable computing resources on demand but differ in their abstraction levels and programming models. While cloud computing offers benefits like reduced costs and maintenance, adoption challenges include availability, data security, and software licensing issues.

2011 aug-gdd-mexico-city-high-replication-datastore

By ikailan. Ikai Lan presented Google App Engine's High Replication Datastore. He began with his background and an overview of App Engine. He then discussed how the High Replication Datastore provides strongly consistent reads for ancestor queries and eventually consistent reads for other queries. He explained how entity groups and transactions allow for strong consistency, discussing concepts like optimistic locking, distributed writes, and catching local data stores up to the latest changes. He concluded with advice on entity group design and took questions.
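The optimistic-locking idea mentioned above can be sketched as a versioned read-modify-write loop: read an entity together with its version, compute the new value, and commit only if the version is still unchanged, otherwise re-read and retry. This is a generic illustration assuming a hypothetical in-memory `VersionedStore`; it is not the App Engine Datastore API, which applies the idea to entity groups in replicated storage.

```python
import threading
from typing import Any, Callable


class ConcurrentModification(Exception):
    """Raised when an entity changed between our read and our commit."""


class VersionedStore:
    """Hypothetical key-value store keeping a version number per key."""

    def __init__(self) -> None:
        self._data: dict[str, tuple[int, Any]] = {}
        self._lock = threading.Lock()  # guards only the short check-and-write step

    def read(self, key: str) -> tuple[int, Any]:
        with self._lock:
            return self._data.get(key, (0, None))

    def commit(self, key: str, expected_version: int, value: Any) -> None:
        # Optimistic check: succeed only if nobody else committed since our read.
        with self._lock:
            current_version, _ = self._data.get(key, (0, None))
            if current_version != expected_version:
                raise ConcurrentModification(key)
            self._data[key] = (current_version + 1, value)


def run_in_transaction(
    store: VersionedStore, key: str, update: Callable[[Any], Any], retries: int = 3
) -> Any:
    """Read-modify-write with retry on conflict; no lock is held while `update` runs."""
    for _ in range(retries):
        version, value = store.read(key)
        new_value = update(value)
        try:
            store.commit(key, version, new_value)
        except ConcurrentModification:
            continue  # another writer won the race: re-read and try again
        return new_value
    raise ConcurrentModification(f"{key}: gave up after {retries} attempts")
```

The design choice is the usual optimistic trade-off: writers never block each other while computing, at the cost of redoing work when a conflict is detected at commit time.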

Firebase - realtime backend for mobile app and IoT

By Andri Yadi. Source code: https://github.com/andriyadi/FireSmartLamp

Introduction to Firebase showing how Firebase can be a realtime backend for web apps and IoT devices. I used this deck for the GDG DevFest 2015 events in Surabaya and Jakarta.

What is App Engine? O

By ikailan. This document provides an overview and introduction to Google App Engine. It discusses how App Engine addresses the scalability challenges of traditional web application stacks and allows applications to automatically scale on Google's infrastructure. It outlines the core App Engine services and APIs for data storage, caching, mail, messaging and background tasks. Finally, it covers getting started with App Engine, including downloading the SDK, writing a simple application, deploying locally and live, and next steps for learning more.

Waking the Data Scientist at 2am: Detect Model Degradation on Production Mod...

By Chris Fregly. The document discusses Amazon SageMaker Model Monitor and Debugger for monitoring machine learning models in production. SageMaker Model Monitor collects prediction data from endpoints, creates a baseline, and runs scheduled monitoring jobs to detect deviations from the baseline. It generates reports and metrics in CloudWatch. SageMaker Debugger helps debug training issues by capturing debug data with no code changes and providing real-time alerts and visualizations in Studio. Both services help detect model degradation and take corrective actions like retraining.

Firebase Tech Talk By Atlogys

By Atlogys Technical Consulting. Learn from our hands-on experience using and working with Firebase. It is great for building quick proofs of concept (POCs) of apps that need real-time updates, and for quickly building cross-platform web and mobile products.

Firebase Dev Day Bangkok: Keynote

By Sittiphol Phanvilai. The document appears to be a presentation about Firebase given by Sittiphol Phanvilai at a Firebase Dev Day event. It introduces Firebase and its key features like realtime database, authentication, hosting, storage, cloud messaging and remote config. It provides examples of how to use these features in code. It also discusses how Firebase can help reduce development costs and time to market by eliminating the need to manage backend servers.

Data normalization across API interactions

By Cloud Elements. The document discusses the importance of data normalization when integrating APIs. It notes that APIs often have inconsistent data formats and endpoints that are not standardized, which can make them difficult to reuse and maintain. The document proposes normalizing data into a common structure, using configuration-based transformations to map different APIs and data formats onto this normalized structure. This gives applications a unified view of data across APIs and makes the APIs more reusable and interchangeable. It also discusses using asynchronous interactions and message buses, rather than application-level logic, to link normalized data across APIs.
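A minimal sketch of configuration-based normalization, assuming two hypothetical source APIs (`crm_a`, `crm_b`) with different field layouts: a mapping table, rather than per-API code, says where each field of a common "contact" structure comes from. The field names and the dotted-path convention are illustrative assumptions, not Cloud Elements' actual format.

```python
from typing import Any

# Hypothetical configuration: for each source API, the path of the value
# that feeds each field of the normalized "contact" structure.
MAPPINGS: dict[str, dict[str, str]] = {
    "crm_a": {"name": "FullName", "email": "EmailAddress", "phone": "Phone"},
    "crm_b": {"name": "contact.name", "email": "contact.emails.0", "phone": "phone_number"},
}


def get_path(record: dict, dotted: str) -> Any:
    """Follow a dotted path such as 'contact.emails.0' through nested dicts and lists."""
    value: Any = record
    for part in dotted.split("."):
        if isinstance(value, dict):
            value = value.get(part)
        elif isinstance(value, list) and part.isdigit() and int(part) < len(value):
            value = value[int(part)]
        else:
            return None  # missing or malformed path: normalize to None
    return value


def normalize(source: str, record: dict) -> dict[str, Any]:
    """Translate one source record into the common structure using only the config."""
    return {field: get_path(record, path) for field, path in MAPPINGS[source].items()}
```

Supporting a new API then means adding an entry to `MAPPINGS` rather than writing new transformation code.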

AI on a PI

By Julien SIMON. Talk @ CodeMotion Berlin, 12/10/2017

Amazon AI

Amazon Polly

Amazon Rekognition

Apache MXNet

Raspberry Pi robot

Deep Learning on AWS (November 2016)

By Julien SIMON. Talk @ AWS Loft Munich, 22/11/16

Neural networks, Recommendation @ Amazon.com, GPU instances & Nvidia CUDA, Amazon DSSTNE, Deep Learning AMI

Big data - Apache Hadoop for Beginner's

By senthil0809. The document provides an introduction to building big data analytics with Apache Hadoop for beginners. It outlines the roadmap, target audience, and definitions of big data and Hadoop. It describes sources of big data and the characteristics of volume, velocity, variety and veracity. It also gives examples of structured, semi-structured and unstructured data sources. Finally, it compares traditional and big data analytics approaches and provides a quiz on Hadoop concepts.

Google Cloud Platform - Cloud-Native Roadshow Stuttgart

By VMware Tanzu. This document summarizes a Cloud Native Roadshow presentation in Munich by Marcus Johansson of Google. The presentation covered why cloud infrastructure matters, Google's global infrastructure including data centers and networking, and Google Cloud Platform products and services like Compute Engine, Kubernetes Engine, Cloud Spanner, Cloud ML, and AI/ML APIs for vision, speech, translation, and more. It also discussed advantages of running Cloud Foundry on Google Cloud Platform.

Boot camp 2010_app_engine_101

By ikailan. Slides for the talk I gave at the Google I/O 2010 App Engine Bootcamp session. Thanks to everyone who came out!

Adopting Java for the Serverless world at Serverless Meetup New York and Boston

By Vadym Kazulkin. Java has for many years been one of the most popular programming languages, but it has had a hard time in the serverless community. Java is known for its high cold-start times and high memory footprint, and you pay your cloud provider for both. That's why most developers avoided Java for such use cases. But times change: the community and cloud providers are steadily improving things for Java developers. In this talk we look at the features and possibilities AWS offers Java developers, and at how the most popular Java frameworks, such as Micronaut, Quarkus and Spring (Boot), address serverless challenges (with the AOT compiler and GraalVM native images playing a huge role) and enable broad use of Java in the serverless world.

Building Rich Mobile Apps with HTML5, CSS3 and JavaScript

By Sencha. Michael Mullany presented on Sencha Touch HTML5 mobile web applications at the Silicon Valley User Group in April 2011.

Scalable Deep Learning on AWS with Apache MXNet

By Julien SIMON. The document discusses deep learning and Apache MXNet. It provides an overview of deep learning applications and describes how Apache MXNet works and its advantages over other frameworks. It also demonstrates MXNet through code examples for training a neural network on MNIST data and performing object detection with a Raspberry Pi. Resources for learning more about MXNet on AWS are provided.

Grid'5000: Running a Large Instrument for Parallel and Distributed Computing ...

By Frederic Desprez. The increasing complexity of available infrastructures (hierarchical, parallel, distributed, etc.) with specific features (caches, hyper-threading, dual core, etc.) makes it extremely difficult to build analytical models that allow for satisfactory prediction. This raises the question of how to validate algorithms and software systems when a realistic analytic study is not possible. As in many other sciences, one answer is experimental validation. However, such experiments rely on the availability of an instrument able to validate every level of the software stack and offering a range of hardware and software facilities for compute, storage, and network resources.

Almost ten years after its beginnings, the Grid'5000 testbed has become one of the most complete testbeds for designing or evaluating large-scale distributed systems. Initially dedicated to the study of large HPC facilities, Grid'5000 has evolved to address wider concerns related to Desktop Computing, the Internet of Services and, more recently, the Cloud Computing paradigm. We now target new processor features such as hyperthreading, turbo boost, and power management, as well as large applications managing big data. In this keynote we will address both the issue of experiments in HPC and computer science and the design and usage of the Grid'5000 platform for various kinds of applications.

International Journal of Distributed Computing and Technology vol 2 issue 1

By JournalsPub. www.journalspub.com

International Journal of Distributed Computing and Technology is a peer-reviewed journal that accepts original research articles and review papers within the journal's scope. The journal aims to create a barrier-free communication link between research communities.

Grid – Distributed Computing at Scale

By royans. Grid computing provides a distributed computing platform that allows resources to be shared and utilized across organizational boundaries at scale. It abstracts applications and services from underlying infrastructure through virtualization, automation, and service-oriented architectures. While grid adoption began in scientific and technical fields, grids are now being used in commercial enterprises for shared infrastructures, regional collaborations, and emerging "cloud" utility providers. Widespread adoption of grid computing will require addressing social and standards barriers as grids move from early deployments to broader use across different industries.

Distributed Computing & MapReduce

By coolmirza143. Shared by Mansoor Mirza

Distributed Computing

What is it?

Why & when we need it?

Comparison with centralized computing

‘MapReduce’ (MR) Framework

Theory and practice

‘MapReduce’ in Action

Using Hadoop

Lab exercises

Consensus in distributed computing

By Ruben Tan. A talk about the basics of consensus in distributed computing. It touches on the Byzantine Generals Problem and gives a quick introduction to two-phase commit and basic Paxos.
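A toy, single-process sketch of two-phase commit, assuming hypothetical `Participant` objects: in phase 1 the coordinator asks every participant to prepare and vote, and in phase 2 it commits everywhere only on a unanimous yes, otherwise it aborts everywhere. It deliberately leaves out networking, timeouts, and durable logging.

```python
from dataclasses import dataclass, field


@dataclass
class Participant:
    """Hypothetical resource manager taking part in a distributed transaction."""
    name: str
    will_vote_yes: bool = True
    state: str = "init"
    log: list = field(default_factory=list)

    def prepare(self, txn_id: str) -> bool:
        # Phase 1: get ready to commit (in practice: persist the change), then vote.
        if self.will_vote_yes:
            self.state = "prepared"
            self.log.append(("prepared", txn_id))
            return True
        self.state = "aborted"
        return False

    def commit(self, txn_id: str) -> None:
        self.state = "committed"
        self.log.append(("commit", txn_id))

    def abort(self, txn_id: str) -> None:
        self.state = "aborted"
        self.log.append(("abort", txn_id))


def two_phase_commit(txn_id: str, participants: list[Participant]) -> bool:
    """Coordinator: returns True if the transaction committed everywhere."""
    # Phase 1 (voting): collect a prepare vote from every participant.
    votes = [p.prepare(txn_id) for p in participants]
    # Phase 2 (completion): commit only on a unanimous yes, otherwise abort everywhere.
    if all(votes):
        for p in participants:
            p.commit(txn_id)
        return True
    for p in participants:
        p.abort(txn_id)
    return False
```

In a real deployment a participant that has voted yes must wait for the coordinator's decision, so two-phase commit can block if the coordinator fails; that blocking behaviour is one motivation for consensus protocols such as Paxos.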

Distributed computing the Google way

By Eduard Hildebrandt. The document provides an overview of distributed computing using Apache Hadoop. It discusses how Hadoop uses the MapReduce programming model to parallelize tasks across large clusters of commodity hardware. Specifically, it breaks jobs down into map and reduce phases to distribute the processing of large amounts of data. The document also notes that Hadoop is an open source framework used by many large companies to solve problems involving petabytes of data through fault-tolerant batch processing.
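The map and reduce phases can be illustrated with the classic word-count example. This is a single-process simulation in plain Python, not the Hadoop API: `map_phase`, `shuffle`, and `reduce_phase` are hypothetical names, and in Hadoop the map calls would run in parallel on different nodes while the framework performs the shuffle.

```python
from collections import defaultdict
from collections.abc import Iterable
from itertools import chain


def map_phase(document: str) -> list[tuple[str, int]]:
    # Map: emit (word, 1) for every word in one input split.
    return [(word.lower(), 1) for word in document.split()]


def shuffle(pairs: Iterable[tuple[str, int]]) -> dict[str, list[int]]:
    # Shuffle: group all intermediate values by key (the framework's job in Hadoop).
    groups: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key: str, values: list[int]) -> tuple[str, int]:
    # Reduce: combine all values that share one key.
    return key, sum(values)


def word_count(documents: list[str]) -> dict[str, int]:
    # Each map_phase call is independent, so on a cluster they could run on different nodes.
    mapped = chain.from_iterable(map_phase(d) for d in documents)
    grouped = shuffle(mapped)
    return dict(reduce_phase(k, v) for k, v in grouped.items())
```

Because neither `map_phase` nor `reduce_phase` shares state between calls, the same logic can be spread across many machines, which is the property MapReduce frameworks exploit.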

BitCoin, P2P, Distributed Computing

By Michelle Davies (Hryvnak). Presentation prepared for the 4/17/13 episode of ComputerWise on Blue Ridge TV.

You can also watch the video of the interview here: http://www.youtube.com/watch?v=xxY-E-ETFiM

High performance data center computing using manageable distributed computing

By Juniper Networks. Terrapin Trading Show Chicago, Thursday, June 4

Andy Bach, FSI Architect, Juniper Networks

Distributed computing concepts (QFX5100-AA)

Scale and performance enhancements (QFX10000 Series)

Automation capabilities (tie in QFX-PFA)

Larry Van Deusen, Director of the Network Integration Business Unit, Dimension Data

Automation

Value Added Partner Services

Viewers also liked

Introduction to OpenDaylight & Application Development

By Michelle Holley. This document provides an introduction to OpenDaylight, an open source platform for Software-Defined Networking (SDN). It outlines what OpenDaylight is, its community and releases, the components within OpenDaylight including northbound and southbound interfaces, and some example network applications that can be built on OpenDaylight. It also provides an overview of how to develop applications using OpenDaylight, covering technologies like OSGi, MD-SAL, and the Yang modeling language.

Concepts of Distributed Computing & Cloud Computing

By Hitesh Kumar Markam. This document provides an introduction to distributed computing, including definitions, history, goals, characteristics, examples of applications, and scenarios. It discusses advantages like improved performance and reliability, as well as challenges like complexity, network problems, security, and heterogeneity. Key issues addressed are transparency, openness, scalability, and the need to handle differences across hardware, software, and developers when designing distributed systems.

Load Balancing In Distributed Computing

By Richa Singh.

The goal of load-balancing algorithms is to distribute load across the processing elements so that none of them is either overloaded or idle. Ideally, each processing element carries an equal load at every moment during execution, which yields the maximum performance (minimum execution time) of the system.
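One simple way to approximate the even-load goal is a greedy heuristic: hand out tasks largest-first, always to the currently least-loaded processing element. This sketch assumes task costs are known up front and performs a static, one-shot assignment (the longest-processing-time-first heuristic); it is not one of the specific algorithms from the talk, and it does not guarantee perfectly equal loads.

```python
import heapq


def assign_tasks(task_costs: list[float], n_workers: int) -> list[list[int]]:
    """Greedy balancing: give each task (largest first) to the least-loaded worker."""
    # Min-heap of (current_load, worker_index): the least-loaded worker is always on top.
    heap = [(0.0, w) for w in range(n_workers)]
    heapq.heapify(heap)
    assignment: list[list[int]] = [[] for _ in range(n_workers)]
    # Placing large tasks first tends to even out the final loads.
    order = sorted(range(len(task_costs)), key=lambda t: task_costs[t], reverse=True)
    for task in order:
        load, worker = heapq.heappop(heap)
        assignment[worker].append(task)
        heapq.heappush(heap, (load + task_costs[task], worker))
    return assignment
```

With costs `[5, 4, 3, 3, 3]` on two workers it produces loads of 8 and 10, whereas the best split is 9 and 9, which shows that it is only a heuristic.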

Grid computing notes

By Syed Mustafa.

1. Grid computing is a distributed computing approach that allows users to access computational resources over a network. It aims to dynamically allocate resources like processing power, storage, or software according to user demands.

2. Grid computing provides a utility-like model for accessing computing resources. Users can access resources from a grid in the same way users access utilities like power or water grids.

3. Key benefits of grid computing include maximizing resource utilization, providing fast and cheap computing services, and enabling collaboration through secure resource sharing across organizations. Grid computing has applications in scientific research, businesses, and e-governance.

Distributed Computing

By Sudarsun Santhiappan. The document discusses various models of parallel and distributed computing, including symmetric multiprocessing (SMP), cluster computing, distributed computing, grid computing, and cloud computing. It provides definitions and examples of each model. It also covers parallel processing techniques like vector processing and pipelined processing, and the differences between shared-memory and distributed-memory MIMD (multiple instruction, multiple data) architectures.

OpenStack and OpenDaylight: An Integrated IaaS for SDN/NFV

By Cloud Native Day Tel Aviv. OpenStack is a free and open-source software platform for cloud computing, mostly deployed as infrastructure-as-a-service (IaaS). OpenDaylight is an open source project under the Linux Foundation with the goal of furthering the adoption and innovation of SDN through the creation of a common industry-supported platform.

In this session, I will talk about how OpenStack and OpenDaylight can be combined together to solve real world business cases and networking needs. We will cover:

- What is OpenDaylight

- Use cases for OpenDaylight with OpenStack

- The OpenDaylight NetVirt project

- How OpenDaylight interacts with OpenStack

- The future of OpenDaylight, and how we see it help solving challenges in the networking industry such as NFV, container networking and physical network fabric management -- the open source way.

Distributed Computing

Distributed ComputingPrashant Tiwari Distributed computing deals with hardware and software systems containing more than one processing element or storage element, concurrent processes, or multiple programs, running under a loosely or tightly controlled regime. In distributed computing a program is split up into parts that run simultaneously on multiple computers communicating over a network. Distributed computing is a form of parallel computing, but parallel computing is most commonly used to describe program parts running simultaneously on multiple processors in the same computer. Both types of processing require dividing a program into parts that can run simultaneously, but distributed programs often must deal with heterogeneous environments, network links of varying latencies, and unpredictable failures in the network or the computers.

International Journal of Distributed Computing and Technology vol 2 issue 1

International Journal of Distributed Computing and Technology vol 2 issue 1JournalsPub www.journalspub.com


More from Robert Coup (9)

Curtailing Crustaceans with Geeky Enthusiasm

As a young and time-poor yachtie, I need to get a leg up over the old barnacles who spend every afternoon out racing on the harbour. Can my two friends, technology & data, help me kick ass and take home the prizes?

Presented as a lightning talk at the Mix & Mash competition launch. Nov 2010, Wellington. http://www.mixandmash.org.nz/

Monitoring and Debugging your Live Applications

Some ideas about debugging and monitoring live applications: logging, remote shells using Twisted (even in non-Twisted apps), Python debuggers, and creating IM bots so your apps can talk to you.

Presented at Kiwi PyCon 2009

/me wants it. Scraping sites to get data.

Building scrapers for grabbing data from websites: tools, techniques, and tips.

A presentation at Kiwi PyCon 2009

Map Analytics - Ignite Spatial

Web maps are everywhere, but what do people really want to see? We need to start doing analytics on our maps in the same way we do with our other pages.

Ignite Spatial presentation, Oct 2009, FOSS4G Sydney

Twisted: a quick introduction

A quick introduction to Python's Twisted networking library, given at an NZPUG meeting in April 2009.

Covers some of the stuff you can do with Twisted really easily, like an XMPP bot and an SSH/Telnet shell into your running applications.

Django 101

The document introduces Django, an open-source web framework written in Python. It discusses key features of Django, including object-relational mapping, forms, templates, an admin interface, and more. It then provides an overview of how to set up a Django project, including creating models, views, templates, and using the development server. Examples are given of building a sample application to manage yacht racing crews and races. Resources for learning and getting support with Django are also listed.

Geo-Processing in the Clouds

A presentation at the GeoCart 2008 Conference in Auckland, explaining some of the new things that we're exploring at Koordinates.

Maps are Fun - Why not on the web?

The document discusses how maps on the web have evolved from static images to interactive slippy maps to 3D views. It argues that real estate and housing search websites currently fail to utilize the full potential of web maps by not making the map the primary interface. Lists of addresses are ineffective for spatial searches; the map should be used to filter results, add useful location-based data, and reduce the number of clicks needed to evaluate options. Web maps could be improved by allowing user annotations and printed versions to mirror real-world searching.

Fame and Fortune from Open Source

Robert Coup will discuss how to get involved in open source projects and potentially gain fame and fortune. Open source refers to software that is collaboratively created by a community. Major companies and organizations use and develop open source software. Getting involved can help your career and skills as a developer.


Editor's Notes

- #5: image: http://www.flickr.com/photos/erichews/2639564244/

- #8: image: http://www.flickr.com/photos/anshul/2313406717/

- #9: image: http://www.flickr.com/photos/sarkasmo/428860683/

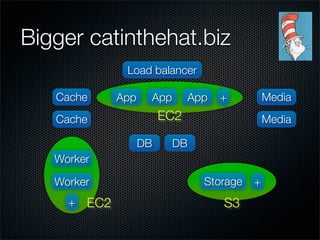

- #11: Awesome Web 2.0 site selling hats for cats. In addition to the store...

- #12: Facebook app, online games, design-your-own hats, story writing with automatic creation of cat videos from your story, forums, blogs - you name it…

- #13: image: http://www.bride.net/wp-content/uploads/2008/02/cat-in-the-hat.gif

- #14: image: http://www.flickr.com/photos/abear23/1444321123/

- #16: What happens when we get a bit bigger, and we start wanting more than one of anything? When we get load spikes and need 6 or 12 App servers, or 10 Workers rather than 2?

- #17: One worker or 20 workers should look the same to the client, and everything should just keep working if a worker dies mid-process.

- #18: What components? Background tasks, sessions, …

- #19: REST services are a great example here, e.g. search.

- #20: image: http://www.flickr.com/photos/ysgellery/3103708893/

- #21: Polling is a concern with many systems

- #22: image: http://www.flickr.com/photos/90001203@N00/172506278/

- #24: image: http://www.flickr.com/photos/75166820@N00/221373872/

- #25: image: http://www.flickr.com/photos/livinginmonrovia/85868861/

- #26: image: http://www.businessballs.com/project.htm

- #28: image: http://www.flickr.com/photos/donsolo/166981992/

- #29: It's really easy with Twisted to add an SSH/Telnet shell.

- #30: image: http://www.flickr.com/photos/dwstucke/6045801/

- #31: What happens when we get a bit bigger, and we start wanting more than one of anything? When we get load spikes and need 6 or 12 App servers, or 10 Workers rather than 2?

- #32: image: http://www.flickr.com/photos/whatknot/12974821/

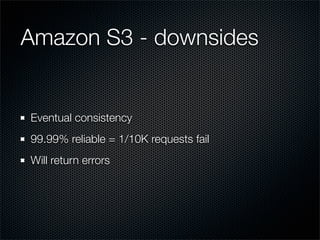

- #33: $1.80/GB/year

- #34: Keys can be up to 1 KB, and values (objects) up to 5 GB.

- #35: Having APIs for every language means it's easy to incorporate into offline applications as well.

- #36: Clever access control allows you to delegate authorization

- #37: Eventual consistency means that when your PUT request returns, the object is stored in at least 2 datacenters. But it might not be replicated across all of S3 yet, so an immediate GET request might return a not-found error. Likewise, with 2 concurrent writes it takes a while for one of them (an arbitrary one) to win.

- #39: What happens when we get a bit bigger, and we start wanting more than one of anything? When we get load spikes and need 6 or 12 App servers, or 10 Workers rather than 2?

- #40: image: http://www.flickr.com/photos/phantomkitty/259379993/

- #42: Generating 60 million map tiles in a few hours for $40

- #43: Video encoding

- #44: Facebook apps with 300K users signing up in 24 hours

- #47: So now we can have as many App servers as needed, and as many Workers as needed

- #51: Used for indexing the web.

- #52: Geo-example: finding the closest servo (service station) for any point on a road network.
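Slide #17's requirement (1 or 20 workers look the same, and a worker dying mid-task is harmless) is usually met with an at-least-once work queue. A handed-out task is hidden rather than deleted, and it reappears if the worker doesn't acknowledge it within a visibility timeout, the model used by services such as Amazon SQS. This is a minimal in-memory sketch under that assumption. `WorkQueue`, `put`, `get` and `ack` are hypothetical names, not the API of the XMPP-based system the talk describes.

```python
import itertools
from collections import deque


class WorkQueue:
    """In-memory sketch of an at-least-once work queue (hypothetical API).

    A task given to a worker is hidden, not deleted. If the worker does not
    ack it within `visibility_timeout` seconds (e.g. because it crashed),
    the task becomes visible again and any other worker can pick it up.
    """

    def __init__(self, visibility_timeout, clock):
        self.visibility_timeout = visibility_timeout
        self.clock = clock            # callable returning the current time in seconds
        self._ready = deque()         # tasks waiting for a worker
        self._in_flight = {}          # receipt -> (task, deadline)
        self._receipts = itertools.count()

    def put(self, task):
        self._ready.append(task)

    def _requeue_expired(self):
        # Any task whose worker missed its deadline goes back on the ready queue
        now = self.clock()
        for receipt, (task, deadline) in list(self._in_flight.items()):
            if now >= deadline:
                del self._in_flight[receipt]
                self._ready.append(task)

    def get(self):
        """Return (receipt, task), or None if nothing is ready."""
        self._requeue_expired()
        if not self._ready:
            return None
        task = self._ready.popleft()
        receipt = next(self._receipts)
        self._in_flight[receipt] = (task, self.clock() + self.visibility_timeout)
        return receipt, task

    def ack(self, receipt):
        """Mark a task as finished so it is never redelivered."""
        self._in_flight.pop(receipt, None)
```

Because the queue, not the worker, owns the task until it is acknowledged, adding workers is just a matter of calling `get` from more processes. The trade-off is that a slow but alive worker can cause a task to run twice, so tasks should be safe to repeat.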
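Slide #21 flags polling as a concern, and the talk's own messaging system is built on XMPP, which pushes messages to clients instead. A toy cost model makes the trade-off concrete. A polling client pays one request per interval whether or not anything happened, and each event waits for the next poll, roughly half an interval on average if events arrive at random times. The function names, the assumption that all events fall within `duration`, and the zero-latency push model are simplifications for illustration.

```python
import math


def polling_cost(event_times, interval, duration):
    """Model a client polling every `interval` seconds for `duration` seconds.

    Polls happen at interval, 2*interval, ... An event at time t is noticed
    at the first poll at or after t. Returns (requests_made, mean_delay).
    """
    requests = int(duration // interval)
    if not event_times:
        return requests, 0.0
    delays = []
    for t in event_times:
        first_poll = max(1, math.ceil(t / interval)) * interval
        delays.append(first_poll - t)
    return requests, sum(delays) / len(delays)


def push_cost(event_times):
    """With push, the server sends one message per event.

    Delivery delay is then just network latency, modelled here as zero.
    """
    return len(event_times), 0.0
```

For example, three events over a minute with a 10-second poll cost six requests and an average delay of five seconds, while push costs three messages. Shortening the interval cuts the delay but multiplies the wasted requests across every client.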
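The price in slide #33's note converts to a monthly rate and scales linearly with the amount stored. The 100 GB figure is only an illustration. This covers storage alone, since S3 bills requests and data transfer separately.

```latex
\begin{aligned}
\frac{\$1.80\ \text{per GB per year}}{12\ \text{months}} &= \$0.15\ \text{per GB per month} \\
100\ \text{GB} \times \$1.80\ \text{per GB per year} &= \$180\ \text{per year}
\end{aligned}
```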
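The key limit in slide #34's note is easy to trip over because it is counted in UTF-8 bytes, not characters. The following is a minimal client-side validation sketch that uses the note's 1 KB (1,024-byte) key limit and assumes "5 GB" means 5 × 1024³ bytes. S3 today allows objects up to 5 TB through multipart upload, but a single PUT is still capped at 5 GB, and that is the limit checked here. `check_put` is a hypothetical helper, not part of any AWS SDK.

```python
MAX_KEY_BYTES = 1024                   # key limit from the note: 1 KB of UTF-8
MAX_SINGLE_PUT_BYTES = 5 * 1024 ** 3   # 5 GB, assumed to mean 5 GiB


def check_put(key: str, size: int) -> None:
    """Raise ValueError if `key` or `size` falls outside the limits above."""
    key_bytes = len(key.encode("utf-8"))  # bytes, not characters
    if not 1 <= key_bytes <= MAX_KEY_BYTES:
        raise ValueError(f"key is {key_bytes} bytes; must be 1..{MAX_KEY_BYTES}")
    if not 0 <= size <= MAX_SINGLE_PUT_BYTES:
        raise ValueError(
            f"object is {size} bytes; single PUT limit is {MAX_SINGLE_PUT_BYTES}"
        )
```

For instance, a key of 513 copies of "é" is only 513 characters long but 1,026 bytes, so it is rejected.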
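Slide #36 doesn't say which mechanism it means. One common way S3 lets you delegate access is a pre-signed URL. You sign a request with your secret key and hand the URL to someone else, who can then fetch that one object until an expiry time without ever seeing your credentials. The sketch follows the legacy Signature Version 2 query-string scheme from the talk's era. The function name and example values are hypothetical. New code should use Signature Version 4, for example through an AWS SDK's presigning helper.

```python
import base64
import hashlib
import hmac
from urllib.parse import quote


def presign_get_v2(access_key: str, secret_key: str, bucket: str,
                   key: str, expires: int) -> str:
    """Build a time-limited GET URL with legacy S3 query-string auth (SigV2).

    `expires` is a Unix timestamp; after it passes, S3 rejects the URL.
    """
    path = quote(key)  # percent-encode the key, keeping '/' separators
    # StringToSign = Verb \n Content-MD5 \n Content-Type \n Expires \n Resource
    # (MD5 and type are empty for a plain GET; no x-amz-* headers are signed)
    string_to_sign = f"GET\n\n\n{expires}\n/{bucket}/{path}"
    digest = hmac.new(secret_key.encode("utf-8"),
                      string_to_sign.encode("utf-8"), hashlib.sha1).digest()
    # Base64 output can contain '+', '/' and '=', so URL-encode it as well
    signature = quote(base64.b64encode(digest).decode("ascii"), safe="")
    return (f"https://{bucket}.s3.amazonaws.com/{path}"
            f"?AWSAccessKeyId={access_key}&Expires={expires}&Signature={signature}")
```

The delegation comes from the fact that the signature covers the verb, resource and expiry. The recipient can't change any of them without invalidating the URL, and the secret key never leaves your server.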
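Slide #37's note describes S3's behaviour at the time. Since December 2020, S3 has provided strong read-after-write consistency, so the pattern below now matters mainly for other eventually consistent stores. A common client-side workaround for the "PUT then immediate GET returns not-found" case is to retry with exponential backoff. The sketch assumes a `fetch(key)` callable that raises a hypothetical `NotFound` exception. The retry count and delays are arbitrary illustration values.

```python
import time


class NotFound(Exception):
    """Hypothetical error a store client raises when a key is not (yet) visible."""


def get_with_retry(fetch, key, attempts=5, base_delay=0.1, sleep=time.sleep):
    """Call fetch(key), retrying NotFound with exponential backoff.

    Waits base_delay, 2*base_delay, 4*base_delay, ... between attempts and
    re-raises NotFound if the object still isn't visible after `attempts` tries.
    """
    for attempt in range(attempts):
        try:
            return fetch(key)
        except NotFound:
            if attempt == attempts - 1:
                raise  # give up: the key may genuinely not exist
            sleep(base_delay * 2 ** attempt)
```

Retrying only addresses the read-after-write case. It does nothing for the concurrent-write case in the note, where the store decides which write wins.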
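Slide #42's figure works out to roughly 67 cents per million tiles ($40 / 60). Tile rendering is embarrassingly parallel, which is what makes that kind of price possible: each machine can render its own slice of tiles independently. This sketch shows one way to slice the work. It assumes a square 2^z × 2^z tile pyramid numbered in row-major order, which may not match how Koordinates actually laid out its tiles. All function names are hypothetical.

```python
def tiles_at_zoom(z):
    """Number of tiles at zoom level z in a square 2**z x 2**z pyramid."""
    return 4 ** z


def tile_xy(index, z):
    """Map a linear tile index back to (x, y) at zoom z (row-major order)."""
    y, x = divmod(index, 2 ** z)
    return x, y


def split_work(total, workers):
    """Split tile indices 0..total-1 into `workers` contiguous (start, stop)
    ranges whose sizes differ by at most one."""
    base, extra = divmod(total, workers)
    ranges, start = [], 0
    for i in range(workers):
        stop = start + base + (1 if i < extra else 0)
        ranges.append((start, stop))
        start = stop
    return ranges
```

Each worker would receive one `(start, stop)` range, for example as a queued task, and render `tile_xy(i, z)` for every `i` in it. Because the ranges don't overlap, no coordination is needed until the results are collected.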
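Averaged over a full day, the figure in slide #44's note is a modest rate. The real pressure presumably comes from peaks well above this average, which is where adding servers on demand helps. That peak-versus-average point is an inference, not a figure from the talk.

```latex
\frac{300\,000\ \text{sign-ups}}{24 \times 3600\ \text{s}}
  = \frac{300\,000}{86\,400\ \text{s}}
  \approx 3.5\ \text{sign-ups per second}
```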
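Slide #51's note ("Used for indexing the web") presumably refers to MapReduce, as popularised by Google and Hadoop. That is an assumption, since the slide itself isn't reproduced here. The following is a single-process sketch of the three phases (map, shuffle, reduce) building a small inverted index. The function names are hypothetical, and in a real cluster the map and reduce calls would run on many machines.

```python
from itertools import groupby
from operator import itemgetter


def map_phase(doc_id, text):
    """Map: emit (word, doc_id) once for every distinct word in a document."""
    for word in set(text.lower().split()):
        yield word, doc_id


def reduce_phase(word, doc_ids):
    """Reduce: collapse all doc ids emitted for one word into a sorted list."""
    return word, sorted(doc_ids)


def build_index(documents):
    """Run map, shuffle and reduce in one process over {doc_id: text}."""
    # Map: on a cluster, each document (or split) would go to a different mapper
    pairs = [pair for doc_id, text in documents.items()
             for pair in map_phase(doc_id, text)]
    # Shuffle: sort by key so all values for the same word are adjacent
    pairs.sort(key=itemgetter(0))
    # Reduce: one call per distinct word; these could also run in parallel
    return dict(reduce_phase(word, [doc_id for _, doc_id in group])
                for word, group in groupby(pairs, key=itemgetter(0)))
```

The framework's job is everything between the two user functions: splitting the input, running mappers near the data, and routing every value for a key to the same reducer.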
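For slide #52's geo-example, one way to find the closest servo from every point at once is a multi-source Dijkstra search. Seed the priority queue with every station at distance 0; the first time a node is settled, the station that reached it is its nearest one by road. This sketch works on road-network nodes only; points along a road would first be snapped to a node. The graph format and function name are assumptions, and the talk may well have used a different, distributed approach.

```python
import heapq


def nearest_station(graph, stations):
    """For every reachable node, find the closest station by road distance.

    graph: {node: [(neighbour, length), ...]}, with two-way roads listed
    in both directions. Returns {node: (station, distance)}.
    """
    best = {}
    heap = [(0.0, s, s) for s in stations]  # (distance, node, station)
    heapq.heapify(heap)
    while heap:
        dist, node, station = heapq.heappop(heap)
        if node in best:
            continue  # already settled from a closer (or equally close) station
        best[node] = (station, dist)
        for neighbour, length in graph.get(node, ()):
            if neighbour not in best:
                heapq.heappush(heap, (dist + length, neighbour, station))
    return best
```

A single search from all stations costs about the same as one ordinary Dijkstra run. By contrast, searching separately from each query point would repeat the work for every point on the network.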