[DL Reading Group] Drag Your GAN: Interactive Point-based Manipulation on the Generative Image Manifold

- 1. DEEP LEARNING JP [DL Papers] http://deeplearning.jp/ Drag Your GAN: Interactive Point-based Manipulation on the Generative Image Manifold. Yuki Sato, University of Tsukuba, M2

- 2. Bibliographic information: Drag Your GAN: Interactive Point-based Manipulation on the Generative Image Manifold. Xingang Pan (1,2), Ayush Tewari (3), Thomas Leimkühler (1), Lingjie Liu (1,4), Abhimitra Meka (5), Christian Theobalt (1,2). Affiliations: (1) Max Planck Institute, (2) Saarbrücken Research Center, (3) MIT, (4) University of Pennsylvania, (5) Google AR/VR • Venue: SIGGRAPH 2023 • Project page: https://vcai.mpi-inf.mpg.de/projects/DragGAN/ • Reasons for selection ➢ By directly optimizing the latent code behind a GAN-generated image, it needs no additional network training and runs in a short time ➢ It enables high-quality image editing through interactive manipulation

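- 3. Overview • Proposes an image editing method for GAN-generated images that iteratively moves arbitrary handle points in the image toward their target points • Instead of training an additional network, it directly optimizes the StyleGAN latent code, supervised by StyleGAN's feature maps, which makes editing fast • Can deform multiple points simultaneously while preserving image regions unrelated to the handle points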

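- 4. Background Goal: precise control over the pose, shape, expression, and layout of arbitrary generated images. Existing approaches: • Methods using 3D representations, or supervised learning with annotated data → they depend on the training data, so the editable object categories are limited • Conditioning on natural language → limited precision, and it is hard to control different attributes such as position, shape, and layout independently. Proposed: interactively manipulate multiple handle points in the image • Because it optimizes the GAN latent code directly using the GAN's own feature maps, no additional training is needed and it is not restricted to particular object categories • Point-based manipulation enables precise control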

- 5. StyleGAN StyleGAN[1] • Uses a mapping network to obtain a disentangled intermediate latent code and applies normalization (AdaIN) at each resolution, enabling high-resolution image generation with fine-grained control over features StyleGAN2[2] • Replaces AdaIN with a normalization that uses only the standard deviation (weight demodulation) and improves the generator and discriminator architectures, raising the quality of generated images (Figure from [1]) 1. Karras, Tero, Samuli Laine, and Timo Aila. "A Style-Based Generator Architecture for Generative Adversarial Networks." Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2019. 2. Karras, Tero, et al. "Analyzing and Improving the Image Quality of StyleGAN." Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2020.

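- 6. Controllability of GANs Editing the latent vector • Relies on supervised learning with annotated data or 3D models • Precise control is difficult; for example, objects cannot be moved to exact positions Point-based methods • Allow independent, precise manipulation of image features • GANWarping[3]: a point-based editing method, but some tasks such as controlling 3D pose remain difficult • UserControllableLT[4]: edits the image by transforming the GAN latent code according to user input, but only allows dragging in a single direction per image, so multiple points cannot be moved in different directions at the same time 3. Wang, Sheng-Yu, David Bau, and Jun-Yan Zhu. "Rewriting Geometric Rules of a GAN." ACM Transactions on Graphics (TOG) 41.4 (2022): 1-16. 4. Endo, Yuki. "User-Controllable Latent Transformer for StyleGAN Image Layout Editing." arXiv preprint arXiv:2208.12408 (2022).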

- 7. Point tracking Goal: estimate the motion of corresponding points between consecutive images • Optical flow estimation between consecutive frames RAFT[5] • Extracts per-pixel features, computes their correlations, and estimates the flow by iterative refinement with a recurrent network PIPs[6] • Tracks arbitrary pixels across multiple frames to infer their trajectories Both methods require separately training a model for flow prediction 5. Teed, Zachary, and Jia Deng. "RAFT: Recurrent All-Pairs Field Transforms for Optical Flow." Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part II. Springer International Publishing, 2020. 6. Harley, Adam W., Zhaoyuan Fang, and Katerina Fragkiadaki. "Particle Video Revisited: Tracking Through Occlusions Using Point Trajectories." Computer Vision–ECCV 2022: 17th European Conference, Tel Aviv, Israel, October 23–27, 2022, Proceedings, Part XXII. Cham: Springer Nature Switzerland, 2022.

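- 8. DragGAN: Overview Goal: optimize the intermediate latent code $w$ so that the $n$ handle points $p_i$ reach their corresponding target points $t_i$ → a point-based editing method that requires no additional network training Input: • Handle points $\{p_i = (x_{p,i}, y_{p,i}) \mid i = 1, 2, \dots, n\}$ • Target points $\{t_i = (x_{t,i}, y_{t,i}) \mid i = 1, 2, \dots, n\}$ • Binary mask $M$ (optional) Output: • The edited image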

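- 9. DragGAN: Overview StyleGAN2 • The feature map $F$ is the output of the 6th block of StyleGAN2 – In experiments this gave the best trade-off between resolution and discriminability (how accurately handle points can be tracked with an L1 loss on the feature map) – The intermediate latent code $w$ being optimized is likewise limited to the inputs up to the 6th block • $F$ is resized to the resolution of the generated image by bilinear interpolation Iterative process 1. Motion supervision: update $w$ so that $p_i$ moves toward $t_i$, yielding a new feature map $F'$ 2. Point tracking: update $p_i$ using $F'$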

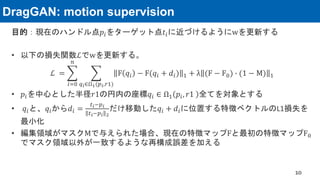
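Because $F$ is upsampled to image resolution and motion supervision reads features at sub-pixel positions such as $q_i + d_i$, bilinear sampling is the basic operation underneath both steps. The following is a minimal pure-Python sketch, not the DragGAN code: the feature map is assumed to be a nested list `F[row][col]` of channel vectors, coordinates are clamped at the border, and the corner-aligned mapping used in the hypothetical `upsample` helper is an assumption.

```python
import math


def bilinear_sample(F, x, y):
    """Sample feature map F (F[row][col] is a list of C floats) at real-valued (x, y).

    x is the column coordinate and y the row coordinate. Coordinates are clamped
    to the map border (an assumption; the slide does not specify padding).
    """
    h, w = len(F), len(F[0])
    x = min(max(x, 0.0), w - 1.0)
    y = min(max(y, 0.0), h - 1.0)
    x0, y0 = math.floor(x), math.floor(y)
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    ax, ay = x - x0, y - y0  # fractional offsets act as interpolation weights
    out = []
    for c in range(len(F[0][0])):
        top = (1 - ax) * F[y0][x0][c] + ax * F[y0][x1][c]
        bottom = (1 - ax) * F[y1][x0][c] + ax * F[y1][x1][c]
        out.append((1 - ay) * top + ay * bottom)
    return out


def upsample(F, H, W):
    """Resize F to H x W, mapping corner pixels onto corner pixels (assumed convention)."""
    h, w = len(F), len(F[0])
    sy = (h - 1) / (H - 1) if H > 1 else 0.0
    sx = (w - 1) / (W - 1) if W > 1 else 0.0
    return [[bilinear_sample(F, X * sx, Y * sy) for X in range(W)] for Y in range(H)]


F = [[[0.0], [2.0]],
     [[4.0], [6.0]]]
print(bilinear_sample(F, 0.5, 0.5))  # [3.0]
print(upsample(F, 3, 3)[1][1])       # [3.0]
```

In a real implementation this would be a single tensor operation on the GPU; the loop form is only meant to show what "bilinear interpolation of $F$" computes.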

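- 10. DragGAN: Motion supervision Goal: update $w$ so that the current handle points $p_i$ move toward the target points $t_i$ • $w$ is updated with the loss $\mathcal{L} = \sum_{i=1}^{n} \sum_{q_i \in \Omega_1(p_i, r_1)} \| F(q_i) - F(q_i + d_i) \|_1 + \lambda \| (F - F_0) \cdot (1 - M) \|_1$ • All coordinates $q_i \in \Omega_1(p_i, r_1)$ inside the circle of radius $r_1$ centered at $p_i$ are included • The loss minimizes the L1 distance between the feature vector at $q_i$ and the one at $q_i + d_i$, shifted by the unit vector $d_i = \frac{t_i - p_i}{\| t_i - p_i \|_2}$; in the paper, gradients are not backpropagated through $F(q_i)$, so the features are pulled toward the target rather than the reverse • When the editable region is given by a mask $M$, a reconstruction term is added so that the current feature map $F$ matches the initial feature map $F_0$ outside the masked region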
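To make the two terms of $\mathcal{L}$ concrete, here is a minimal pure-Python sketch that only *evaluates* the loss for given feature maps; it is not the authors' implementation. Actually optimizing $w$ would need an autodiff framework, with $F(q_i)$ treated as a constant (stop-gradient). The helper names `bilinear_sample`, `disk`, `l1`, and `motion_supervision_loss` are hypothetical, and the strict inequality in $\Omega_1$, border clamping, and skipping a handle that already sits on its target are assumptions.

```python
import math


def bilinear_sample(F, x, y):
    """Bilinearly sample F[row][col] (a list of C floats) at (x, y), clamping at the border."""
    h, w = len(F), len(F[0])
    x = min(max(x, 0.0), w - 1.0)
    y = min(max(y, 0.0), h - 1.0)
    x0, y0 = math.floor(x), math.floor(y)
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    ax, ay = x - x0, y - y0
    return [(1 - ay) * ((1 - ax) * F[y0][x0][c] + ax * F[y0][x1][c])
            + ay * ((1 - ax) * F[y1][x0][c] + ax * F[y1][x1][c])
            for c in range(len(F[0][0]))]


def disk(px, py, r):
    """Integer pixels q with ||q - p||_2 < r, i.e. Omega_1(p, r) (strict '<' is an assumption)."""
    ri = math.ceil(r)
    return [(px + ox, py + oy)
            for oy in range(-ri, ri + 1)
            for ox in range(-ri, ri + 1)
            if ox * ox + oy * oy < r * r]


def l1(a, b):
    """L1 distance between two feature vectors."""
    return sum(abs(u - v) for u, v in zip(a, b))


def motion_supervision_loss(F, F0, handles, targets, r1=3, lam=20.0, mask=None):
    """Value of L for handle points p_i and target points t_i given as (x, y) tuples."""
    loss = 0.0
    for (px, py), (tx, ty) in zip(handles, targets):
        norm = math.hypot(tx - px, ty - py)
        if norm == 0:
            continue  # handle already on its target: d_i is undefined (assumption: skip it)
        dx, dy = (tx - px) / norm, (ty - py) / norm  # unit vector d_i
        for qx, qy in disk(px, py, r1):
            f_q = bilinear_sample(F, qx, qy)  # would be detached in a real optimizer
            f_shifted = bilinear_sample(F, qx + dx, qy + dy)
            loss += l1(f_q, f_shifted)
    if mask is not None:
        # mask[row][col] == 1 marks the editable region; penalize changes outside it
        for y, row in enumerate(F):
            for x, feat in enumerate(row):
                if mask[y][x] == 0:
                    loss += lam * l1(feat, F0[y][x])
    return loss


# Toy 5x5 map whose single channel equals the column index.
F = [[[float(x)] for x in range(5)] for _ in range(5)]
print(motion_supervision_loss(F, F, [(2, 2)], [(4, 2)], r1=1))  # 1.0
```

With $r_1 = 1$ the disk contains only the handle pixel itself, so the loss is the feature difference between column 2 and column 3 of the ramp.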

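- 11. DragGAN: Point tracking Goal: locate the handle point $p_i$ in the new feature map $F'$ and update it • Apply the update $p_i := \arg\min_{q_i \in \Omega_2(p_i, r_2)} \| F'(q_i) - F_0(p_i) \|_1$, where $F_0(p_i)$ is the feature at the initial handle position • All coordinates $q_i$ in the square patch $\Omega_2(p_i, r_2) = \{(x, y) \mid |x - x_{p,i}| < r_2,\ |y - y_{p,i}| < r_2\}$ are considered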

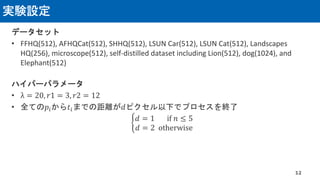
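The point-tracking update is a nearest-neighbor search in feature space over a square patch. A minimal pure-Python sketch under the same assumptions as a toy (nested-list feature maps, integer handle coordinates, pixels outside the image skipped); `track_point` is a hypothetical name, and `f_init` stands for the initial handle feature $F_0(p_i)$.

```python
def track_point(F_new, f_init, p, r2):
    """Return the pixel q in Omega_2(p, r2) whose feature F'(q) is closest in L1 to f_init.

    Omega_2 is the square patch |x - x_p| < r2, |y - y_p| < r2 around the current handle p.
    """
    px, py = p
    h, w = len(F_new), len(F_new[0])
    best, best_dist = p, float("inf")
    for qy in range(py - r2 + 1, py + r2):      # integer y with |y - y_p| < r2
        for qx in range(px - r2 + 1, px + r2):  # integer x with |x - x_p| < r2
            if 0 <= qx < w and 0 <= qy < h:     # skip pixels outside the image
                dist = sum(abs(a - b) for a, b in zip(F_new[qy][qx], f_init))
                if dist < best_dist:
                    best, best_dist = (qx, qy), dist
    return best


# Toy example: the handle's feature [1.0] has moved to (6, 5) in the new map.
F_new = [[[0.0] for _ in range(10)] for _ in range(10)]
F_new[5][6] = [1.0]
print(track_point(F_new, [1.0], (4, 4), r2=3))  # (6, 5)
```

With $r_2 = 12$ the patch spans 23 × 23 pixels, so each tracking step is cheap compared with the generator forward pass.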

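- 12. Experimental setup Datasets (image resolution in parentheses) • FFHQ (512), AFHQCat (512), SHHQ (512), LSUN Car (512), LSUN Cat (512), Landscapes HQ (256), microscope (512), and self-distilled datasets including Lion (512), Dog (1024), and Elephant (512) Hyperparameters • $\lambda = 20$, $r_1 = 3$, $r_2 = 12$ • The process terminates once every $p_i$ is within $d$ pixels of its $t_i$, where $d = \begin{cases} 1 & \text{if } n \le 5 \\ 2 & \text{otherwise} \end{cases}$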
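The stopping rule can be written as a small check. This is a sketch with hypothetical function names; measuring the handle-to-target distance as Euclidean distance in pixels is an assumption.

```python
import math


def stop_threshold(n):
    """Threshold d in pixels: 1 when there are at most 5 handle points, otherwise 2."""
    return 1 if n <= 5 else 2


def should_stop(handles, targets):
    """True once every handle point p_i is within d pixels of its target t_i."""
    d = stop_threshold(len(handles))
    return all(math.dist(p, t) <= d for p, t in zip(handles, targets))


print(should_stop([(10, 10)], [(11, 10)]))      # True  (n = 1, d = 1)
print(should_stop([(10, 10)], [(12, 10)]))      # False (distance 2 > 1)
print(should_stop([(0, 0)] * 6, [(2, 0)] * 6))  # True  (n = 6, d = 2)
```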

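- 13. Experiments Types of experiments • Editing generated images • Editing real images: the latent code is obtained by GAN inversion and then edited • Two face images are generated and their landmarks detected; the landmarks of the input image are edited for up to 300 iterations so that they match the target's landmarks • Two images A and B are generated, the flow from A to B is computed, random points in the flow are used as handle points, and A is edited for up to 100 iterations so that it matches B • (Ablation study) Varying the StyleGAN2 block used / varying $r_1$ Evaluation metrics • Generation quality: FID • Reconstruction error: MSE, LPIPS, MD (mean distance between the target points and the final handle points) • Processing time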
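MD, as described here, is the mean over handle points of the distance between each target point and the corresponding final handle point. A one-function sketch, assuming Euclidean distance in pixels; `mean_distance` is a hypothetical name.

```python
import math


def mean_distance(final_handles, targets):
    """MD: mean Euclidean distance between final handle points and their target points."""
    return sum(math.dist(p, t) for p, t in zip(final_handles, targets)) / len(targets)


print(mean_distance([(0, 0), (5, 5)], [(3, 4), (5, 6)]))  # 3.0
```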

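- 14. Baselines Interactive point-based editing • UserControllableLT is used as the baseline • In experiments with a mask, since UserControllableLT does not accept a mask as input, the points of a 16 × 16 grid that lie outside the mask are used as fixed points Comparison of point tracking methods • DragGAN's point tracking is replaced with the existing methods RAFT and PIPs to compare accuracy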
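One way to build the 16 × 16 fixed-point grid for the UserControllableLT baseline is sketched below. Placing grid points at cell centers, and the name `fixed_points_outside_mask`, are assumptions; the slide only states that grid points outside the mask are kept fixed.

```python
def fixed_points_outside_mask(mask, grid=16):
    """Return (x, y) grid points that fall outside the editable mask.

    mask[row][col] == 1 marks the editable region; points are placed at the
    centers of a grid x grid partition of the image (an assumption).
    """
    h, w = len(mask), len(mask[0])
    points = []
    for gy in range(grid):
        for gx in range(grid):
            x = int((gx + 0.5) * w / grid)
            y = int((gy + 0.5) * h / grid)
            if mask[y][x] == 0:
                points.append((x, y))
    return points


# Toy 32x32 image whose left half (x < 16) is editable.
mask = [[1 if x < 16 else 0 for x in range(32)] for _ in range(32)]
print(len(fixed_points_outside_mask(mask)))  # 128
```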

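- 16. Results: Comparison of point tracking methods • With RAFT and PIPs, the handle point drifted to a different point during manipulation and could not be moved correctly • Without point tracking, the handle point stuck to the background and the object was not edited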

- 17. Results: Editing real images • Using PTI[7] to obtain latent codes from real images and then editing them, expression, pose, and shape were edited with high accuracy 7. Roich, Daniel, et al. "Pivotal Tuning for Latent-based Editing of Real Images." ACM Transactions on Graphics (TOG) 42.1 (2022): 1-13.

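- 18. Results: Handle point tracking accuracy • The experiment was run 1000 times and the results averaged • DragGAN opened the mouth to match the target, and the jaw shape ended up close to the target points • Thanks to accurate tracking, DragGAN was more accurate than the existing methods • In terms of runtime, UserControllableLT was the fastest (Slide figures: MD for each number of handle points; results with a single handle point)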

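- 19. Results: Reconstruction accuracy • Image pairs were formed from the image generated from latent code $w_1$ and the image generated from $w_2$, a randomly perturbed copy of $w_1$, and the flow between them was computed • The experiment was run 1000 times and the results averaged • On the reconstruction task, DragGAN outperformed existing methods • For point tracking as well, the method proposed in DragGAN achieved the best accuracy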

- 20. Results: Ablation study • The feature map after the 6th block of StyleGAN performed best • Performance was not sensitive to $r_1$, but $r_1 = 3$ was slightly more accurate

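- 21. Discussion Effectiveness of the mask • Adding a mask makes it possible to edit while preserving the region outside the mask Out-of-distribution content • The method can generate content outside the data distribution, such as the inside of a mouth, but this likely depends on the training data Limitations • Artifacts appear when trying to generate images that do not follow the data distribution • Tracking fails when a point without texture is used as a handle point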

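- 23. DragDiffusion[8] Overview: an interactive editing model that covers a wide range of domains by using a large-scale diffusion model; it applies DragGAN's method to the diffusion model's latent at a specific timestep (A) Fine-tune the pretrained model with LoRA[9] to obtain parameters that can reconstruct the input image (B) Run the diffusion process with DDIM and edit the noisy latent at a specific timestep $t$ of the reverse process 1. Take the noisy latent $z_t$ at timestep $t$ as $z_t^0$; apply DragGAN's motion supervision to $z_t^k$, compute the loss with the resulting $\hat{z}_t^k$, and obtain $z_t^{k+1}$ 2. Run DragGAN's point tracking with the updated $z_t^{k+1}$ and $z_t^0$ to update the handle points 8. Shi, Yujun, et al. "DragDiffusion: Harnessing Diffusion Models for Interactive Point-based Image Editing." arXiv preprint arXiv:2306.14435 (2023). 9. Hu, Edward J., et al. "LoRA: Low-Rank Adaptation of Large Language Models." arXiv preprint arXiv:2106.09685 (2021). (Figure from [8])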

- 24. DragDiffusion Experimental setup • Diffusion model: Stable Diffusion 1.5[10], LoRA: 200 steps, DDIM: 50 steps • The edit is applied at the 40th DDIM step Results • Qualitatively, the edits look natural Open questions • Results of editing images that follow the dataset distribution without LoRA • Runtime • Experimental results on the issues raised in DragGAN's limitations 10. Rombach, Robin, et al. "High-Resolution Image Synthesis with Latent Diffusion Models." Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2022.

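- 25. Impressions • Using a GAN keeps the runtime of the iterative process short, so applications such as interactive tools look promising – Compared with text conditioning, edits can be specified explicitly, so the intended image can be produced – DragDiffusion includes LoRA fine-tuning, so it is presumably slower than DragGAN • Extending the approach to the 3D representations of 3D generative models would broaden the range of possible edits • I wonder whether the same approach works on the decoder of Latent Diffusion – Could the high expressive power of diffusion models be combined with DragGAN's fast editing? • I wonder whether the same approach works for models covering broad domains, such as StyleGAN-XL – DragDiffusion's LoRA fine-tuning likely addresses this to some extent • In terms of the quality of the edited images, diffusion-model-based methods are probably superior – Since a diffusion model's noisy latent has the same resolution as the generated image, the edit locations can be specified more precisely than in DragGAN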
