
[DL Reading Group] Model soups: averaging weights of multiple fine-tuned models improves accuracy without increasing inference time

- 1. http://deeplearning.jp/ Model soups: averaging weights of multiple fine-tuned models improves accuracy without increasing inference time. 小林 範久, Present Square Co., Ltd. DEEP LEARNING JP [DL Papers]

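- 2. Copyright (C) Present Square Co., Ltd. All Rights Reserved. Bibliographic information. Title: Model soups: averaging weights of multiple fine-tuned models improves accuracy without increasing inference time (https://arxiv.org/abs/2203.05482). Authors: Mitchell Wortsman, Gabriel Ilharco, Samir Yitzhak Gadre, Rebecca Roelofs, Raphael Gontijo-Lopes, Ari S. Morcos, Hongseok Namkoong, Ali Farhadi, Yair Carmon, Simon Kornblith, Ludwig Schmidt. Summary: • Proposes "Model soups", a method that improves both accuracy and robustness by averaging the weights of multiple models fine-tuned with different hyperparameter configurations. • Unlike a conventional ensemble, many models can be averaged without adding inference or memory cost. • When fine-tuning large pre-trained models such as CLIP, ALIGN, and a ViT-G pre-trained on JFT, soups substantially improve on the best single model on ImageNet; the ViT-G soup reaches 90.94% top-1 accuracy. • The approach is further shown to extend to multiple image classification and natural language processing tasks, to improve out-of-distribution performance, and to improve zero-shot performance on new downstream tasks.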

- 3. Agenda: 1. Introduction 2. Related work 3. Method 4. Experiments 5. Conclusion

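- 4. 1. Introduction: Background • Conventionally, model accuracy is maximized by (1) fine-tuning models with a variety of hyperparameter configurations and (2) selecting the best-performing model and discarding the rest. This work starts from the question of whether the discarded models (and their weights) can be put to use. • Selecting one model and discarding the others has several drawbacks: (1) The selected model is not necessarily the best achievable; in particular, an ensemble that combines the outputs of many models can outperform the best single model, albeit at a high inference-time computational cost. (2) Fine-tuning a model on a downstream task can reduce its performance, notably out of distribution.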

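- 5. 2. Related work: Weight averaging • Averaging model weights is a common approach in convex optimization and deep learning. • Most applications study models along the same optimization trajectory. • Frankle et al. found that when a pair of models is trained from scratch with the same hyperparameter configuration but different data orders, interpolating their weights achieves no better than random accuracy. However, if the two models share part of their optimization trajectory, accuracy does not drop when their weights are interpolated (averaged). • Neyshabur et al. showed that when two models are fine-tuned from the same pre-trained model, the interpolated model attains at least the accuracy of the endpoints. How model soups differ • Model soups average the weights of models that share an initialization but are optimized independently. • Unlike Frankle et al. and Neyshabur et al., model soups consider averaging many models with diverse hyperparameter configurations.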

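- 6. 2. Related work: Averaging Weights Leads to Wider Optima and Better Generalization (source: https://arxiv.org/pdf/1803.05407.pdf) • "Because neural networks can be highly nonlinear, with potentially many solutions in different loss basins, it may seem surprising that averaging the weights of independently fine-tuned models achieves high performance." • Here, the models share the same initialization, within the same basin of the loss landscape. • Averaging weights along a single training trajectory has been shown to improve the performance of models trained from random initialization.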

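- 7. 2. Related work: Pre-training and fine-tuning • In computer vision and natural language processing, the best models are often pre-trained on a large dataset and then fine-tuned on data from the target task; this is also called transfer learning. • In computer vision, image-text pre-training has recently become an increasingly popular pre-training task. How model soups differ • Prior work has tried to improve transfer learning by regularizing the model toward its initialization, choosing which layers to tune, re-initializing layers during training, or combining multiple pre-trained models with data-dependent gating; model soups instead study standard end-to-end fine-tuned models.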

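- 8. 2. Related work: Ensembles • Combining the outputs of many models is a foundational technique for improving accuracy, and it also makes machine learning models more robust. • Ovadia et al. showed that ensembles achieve high accuracy under distribution shift. • Mustafa et al. proposed identifying a subset of pre-trained models, fine-tuning them, and then ensembling them, finding strong in-distribution accuracy and robustness to distribution shift. • Gontijo-Lopes et al. conducted a large-scale study of ensembles and found that greater divergence in training methodology leads to uncorrelated errors and better ensemble accuracy. • Prior work has also built ensembles of models produced by hyperparameter search; because each model must be run separately at inference, the computational cost grows with the number of models. How model soups differ • Unlike ensembles, model soups require no extra computation at inference time.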

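- 9. 3. Method: Model soups • Proposes a more accurate and robust alternative recipe for fine-tuning large pre-trained models. • Rather than selecting the most accurate of the individually fine-tuned models, the method averages their weights; the result is called a model soup. • Given the models produced by a hyperparameter sweep over fine-tuning, averaging several of them to form a model soup requires no additional training and adds no inference cost. • Averaging weights along a single training trajectory was previously shown to improve model performance. This work extends weight averaging to the fine-tuning setting and finds it effective across many datasets, models, and tasks.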

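- 10. 3. Method: Model soup formulation and algorithm • Neural network model function: $f(x, \theta)$ (input $x$, parameters $\theta$). • Fine-tuned parameters: $\theta = \mathrm{FineTune}(\theta_0, h)$ (initialization $\theta_0$, hyperparameters $h$). • Fine-tuned parameters for each hyperparameter configuration $h_1, \dots, h_k$: $\theta_i = \mathrm{FineTune}(\theta_0, h_i)$. • Model soup: $f(x, \theta_S)$, where $\theta_S = \frac{1}{|S|} \sum_{i \in S} \theta_i$ averages the parameters of a subset $S \subseteq \{1, \dots, k\}$ of the fine-tuned models.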
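As a minimal sketch of the soup definition $\theta_S = \frac{1}{|S|}\sum_{i \in S}\theta_i$, the function below averages models parameter by parameter. It assumes each model is a plain dictionary from parameter name to a flat list of floats; this `StateDict` type and the name `uniform_soup` are illustrative assumptions, not the paper's code. In PyTorch the same key-by-key loop would typically run over the tensors returned by each model's `state_dict()`.

```python
from typing import Dict, List

# Simplified "state dict": parameter name -> flat list of floats.
# Real frameworks store tensors; plain lists keep this sketch dependency-free.
StateDict = Dict[str, List[float]]


def uniform_soup(models: List[StateDict]) -> StateDict:
    """theta_S = (1/|S|) * sum_{i in S} theta_i, computed parameter by parameter."""
    if not models:
        raise ValueError("need at least one fine-tuned model")
    names = models[0].keys()
    for m in models[1:]:
        # Every ingredient must share the architecture (and start from the same theta_0).
        if m.keys() != names or any(len(m[k]) != len(models[0][k]) for k in names):
            raise ValueError("models must have identical parameter names and shapes")
    n = len(models)
    # zip(*...) walks the same coordinate of a parameter across all models.
    return {k: [sum(vals) / n for vals in zip(*(m[k] for m in models))] for k in names}
```

For example, `uniform_soup([{"w": [1.0, 2.0]}, {"w": [3.0, 4.0]}])` yields `{"w": [2.0, 3.0]}`; the result is a single set of weights, so inference runs one forward pass regardless of how many models went into the soup.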

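- 11. 3. Method: Fine-tuning assumptions • All models start from the same pre-trained weights. Hyperparameters that are varied: 1. Optimizer 2. Data augmentation 3. Number of training iterations 4. Random seed (which determines the data order) Types of model soup: 1. Uniform soup: average all fine-tuned models with equal weight. 2. Greedy soup: sort the models by held-out validation accuracy and add each one to the soup only if doing so does not reduce the soup's validation accuracy. 3. Learned soup: learn the interpolation coefficients of the models with gradient-based minibatch optimization. (This requires loading all models into memory at once, which is effectively like running one huge model.)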
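The greedy soup recipe described above can be sketched as follows. This is a minimal illustration, not the paper's implementation: models are plain dictionaries of float lists, and `val_acc` is a hypothetical callback that scores a set of weights on held-out validation data.

```python
from typing import Callable, Dict, List, Sequence

# Simplified "state dict": parameter name -> flat list of floats (illustrative assumption).
StateDict = Dict[str, List[float]]


def average_weights(models: Sequence[StateDict]) -> StateDict:
    """Uniform average of the given models' parameters, key by key."""
    n = len(models)
    return {k: [sum(v) / n for v in zip(*(m[k] for m in models))] for k in models[0]}


def greedy_soup(models: Sequence[StateDict],
                val_acc: Callable[[StateDict], float]) -> List[int]:
    """Return the indices of the models kept as soup ingredients.

    `val_acc` is a hypothetical callback scoring weights on held-out validation data.
    """
    if not models:
        raise ValueError("need at least one fine-tuned model")
    # Rank the fine-tuned models by their own validation accuracy, best first.
    order = sorted(range(len(models)), key=lambda i: val_acc(models[i]), reverse=True)
    ingredients = [order[0]]
    best_acc = val_acc(models[order[0]])
    for i in order[1:]:
        # Tentatively add model i and re-evaluate the averaged weights.
        acc = val_acc(average_weights([models[j] for j in ingredients + [i]]))
        if acc >= best_acc:  # keep it only if the soup does not get worse
            ingredients.append(i)
            best_acc = acc
    return ingredients
```

The final soup is `average_weights([models[i] for i in ingredients])`. Because the search starts from the best single model and only accepts non-decreasing steps, the soup is never worse than that model on the held-out validation data used for selection.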

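- 12. 4. Experiments: Setup • Fine-tuning: all layers are retrained, not just the final layer. • Models: CLIP, ALIGN, and ViT-G/14 (CLIP ViT-B/32 unless otherwise stated). • Classifier initialization: LP initialization. (The classifier can be initialized before fine-tuning either by LP initialization or by zero-shot initialization; since both showed similar trends, the former is used.) • LP initialization: initialize the model from a linear probe. • Zero-shot initialization: initialize the classifier using the text tower of CLIP or ALIGN. • Ensemble baseline: ensemble the unnormalized outputs (logits). • Loss function: cross-entropy loss.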

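- 13. 4. Experiments: Results • When a ViT-G/14 model pre-trained on JFT-3B is fine-tuned on ImageNet, the soup improves accuracy over the best individual model. • To evaluate performance under distribution shift, the average accuracy on ImageNet-V2, ImageNet-R, ImageNet-Sketch, ObjectNet, and ImageNet-A is compared, confirming that the greedy soup achieves higher accuracy. • The uniform soup (blue circle) averages all fine-tuned models (green diamonds) from a random hyperparameter search over learning rate, weight decay, iterations, data augmentation, mixup, and label smoothing. • When CLIP ViT-B/32 is fine-tuned on ImageNet with a large random hyperparameter search, model soups improve accuracy over the best individual model.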

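- 14. 4. Experiments: Error landscape • Results of fine-tuning CLIP on ImageNet. • The figure shows the training loss, test error, and average error for models fine-tuned with different random seeds and learning rates. • The highest accuracy lies between the fine-tuned models rather than at the models themselves. • These results suggest that (1) interpolating the weights of two fine-tuned solutions can improve accuracy compared with the individual models, and (2) less correlated solutions (models whose difference vectors form an angle closer to 90 degrees) can make linear interpolation more accurate. ※An orthonormal basis u1, u2 of the plane spanned by the three models is computed, and the x- and y-axes show movement in parameter space along these two directions.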
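The plane construction in the note above can be sketched with Gram-Schmidt on flattened weight vectors. This is a minimal illustration under stated assumptions: the three models are labeled θ0, θ1, θ2 with θ0 placed at the origin, and the function names are hypothetical. Drawing the landscape would mean evaluating the model (a hypothetical step not shown) at `plane_point(...)` for a grid of (x, y) values.

```python
import math
from typing import List, Tuple

Vector = List[float]  # flattened model weights


def _dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def plane_basis(theta0: Vector, theta1: Vector, theta2: Vector) -> Tuple[Vector, Vector]:
    """Orthonormal basis (u1, u2) of the plane through three weight vectors (Gram-Schmidt)."""
    d1 = [a - b for a, b in zip(theta1, theta0)]
    d2 = [a - b for a, b in zip(theta2, theta0)]
    n1 = math.sqrt(_dot(d1, d1))
    u1 = [x / n1 for x in d1]
    # Remove the u1 component from d2; what remains is orthogonal to u1.
    p = _dot(d2, u1)
    r = [x - p * u for x, u in zip(d2, u1)]
    n2 = math.sqrt(_dot(r, r))
    u2 = [x / n2 for x in r]
    return u1, u2


def plane_point(theta0: Vector, u1: Vector, u2: Vector, x: float, y: float) -> Vector:
    """Weights at plane coordinates (x, y); evaluating the model here gives one grid cell."""
    return [t + x * a + y * b for t, a, b in zip(theta0, u1, u2)]


def plane_coords(theta: Vector, theta0: Vector, u1: Vector, u2: Vector) -> Tuple[float, float]:
    """Project a weight vector onto the plane, with theta0 at the origin."""
    d = [a - b for a, b in zip(theta, theta0)]
    return _dot(d, u1), _dot(d, u2)
```

`plane_coords` gives where each fine-tuned model sits on the plot, so the axis units are distances in parameter space along u1 and u2.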

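- 15. 4. Experiments: Accuracy and angle • To study the correlation between accuracy and the angle φ between θ1 − θ0 and θ2 − θ0, a set of models trained with different seeds, learning rates, and data augmentations is considered. • For θ1 and θ2, the accuracy of their midpoint ½(θ1 + θ2) is compared with the mean accuracy of the two endpoints; this difference is called the interpolation advantage. • The figure on the right shows that the interpolation advantage is correlated with the angle φ, and that varying the learning rate, seed, and data augmentation yields more orthogonal solutions.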
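Both quantities on this slide can be sketched directly on flattened weights. This assumes the interpolation advantage is Acc(½θ1 + ½θ2) − ½(Acc(θ1) + Acc(θ2)); `acc` is a hypothetical callback returning a model's accuracy, and the function names are illustrative.

```python
import math
from typing import Callable, List

Vector = List[float]  # flattened model weights


def angle_deg(theta0: Vector, theta1: Vector, theta2: Vector) -> float:
    """Angle phi (degrees) between the fine-tuning updates theta1 - theta0 and theta2 - theta0."""
    d1 = [a - b for a, b in zip(theta1, theta0)]
    d2 = [a - b for a, b in zip(theta2, theta0)]
    dot = sum(x * y for x, y in zip(d1, d2))
    norm = math.sqrt(sum(x * x for x in d1)) * math.sqrt(sum(y * y for y in d2))
    # Clamp to [-1, 1] so rounding error cannot push acos outside its domain.
    cos_phi = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_phi))


def interpolation_advantage(theta1: Vector, theta2: Vector,
                            acc: Callable[[Vector], float]) -> float:
    """Accuracy of the midpoint minus the mean accuracy of the two endpoints."""
    midpoint = [(a + b) / 2 for a, b in zip(theta1, theta2)]
    return acc(midpoint) - (acc(theta1) + acc(theta2)) / 2
```

A positive advantage means the averaged weights beat the average of the two models; per the slide, this tends to grow as φ approaches 90 degrees.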

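- 16. 4. Experiments: Fine-tuning CLIP and ALIGN • The x-axis is the number of models and the y-axis is accuracy. • The figure shows the accuracy of the uniform soup and the greedy soup, together with the best single model so far and the ensemble, as a function of the number of models. • On ImageNet, the greedy soup outperforms the uniform soup; out of distribution, it performs comparably to the uniform soup. • The ensemble outperforms the greedy soup on ImageNet but underperforms it out of distribution. • All methods require the same amount of training and the same inference-time compute, except the ensemble, which must run each model separately.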

- 17. 4. Experiments: Ablation on multiple methods (Fine-tuning CLIP and ALIGN) • Ablation when fine-tuning CLIP ViT-B/32 with a random hyperparameter search. • For the greedy soup (random order), the mean and standard deviation over three random orderings are reported. • Ensembles improved model calibration, whereas model soups did not.
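To make "calibration" concrete, here is a minimal sketch of expected calibration error (ECE), a common calibration metric. It assumes equal-width confidence bins (10 by default), where each confidence is the predicted class's softmax probability; whether the paper uses exactly this setup is an assumption not stated on the slide.

```python
from typing import Sequence


def expected_calibration_error(confidences: Sequence[float],
                               correct: Sequence[bool], n_bins: int = 10) -> float:
    """ECE with equal-width confidence bins.

    ECE = sum over bins b of (|B_b| / N) * |accuracy(B_b) - mean_confidence(B_b)|.
    """
    if not confidences or len(confidences) != len(correct):
        raise ValueError("need equally long, non-empty inputs")
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        # Confidence 1.0 would map to index n_bins, so clamp it into the last bin.
        bins[min(int(conf * n_bins), n_bins - 1)].append((conf, ok))
    n = len(confidences)
    ece = 0.0
    for b in bins:
        if b:
            mean_conf = sum(c for c, _ in b) / len(b)
            accuracy = sum(1 for _, ok in b if ok) / len(b)
            ece += len(b) / n * abs(accuracy - mean_conf)
    return ece
```

A perfectly calibrated model scores 0: among predictions made with 90% confidence, 90% are correct. A lower ECE for the ensemble than for the soup would match the slide's observation.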

- 18. 4. Experiments: Fine-tuning CLIP and ALIGN • To check the generality of model soups, the method is also evaluated on the classification tasks WILDS-FMoW and WILDS-iWildCam. • Model soups improve accuracy here as well. These results confirm the following: (1) The greedy soup outperforms the best individual model, producing a better model without additional training or extra computation at inference. (2) The uniform soup can outperform the best individual model, but only when all of the individual models achieve high accuracy.

- 19. 4. Experiments: Fine-tuning a ViT-G model pre-trained on JFT-3B • Results are reported on the ImageNet validation set, five distribution-shift datasets, and two relabeled ImageNet validation sets, ReaL and multilabel. • On all datasets except ObjectNet, this soup selects 14 of the 58 fine-tuned models. • Only on ReaL and ObjectNet is there an individual model that performs statistically significantly better than the soup, and the best model differs between these two datasets. • The greedy ensemble performs similarly to the greedy soup on ImageNet top-1 and multilabel accuracy and is slightly better on ReaL, but the greedy soup is significantly better on all distribution-shift datasets except ImageNet-V2.

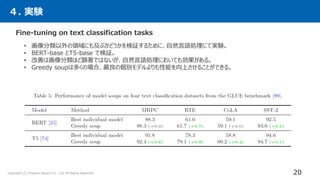

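- 20. 4. Experiments: Fine-tuning on text classification tasks • To test whether the gains extend beyond image classification, experiments are run on natural language processing. • BERT-base and T5-base are evaluated. • The improvements are less pronounced than for image classification, but model soups are effective in natural language processing as well. • In many cases, the greedy soup improves on the best individual model.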

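- 21. 4. Experiments: Robust fine-tuning • Model soups compared with a robust fine-tuning baseline. • WiSE-FT improves robustness by interpolating the weights of a model θ1, fine-tuned from the initialization θ0, back toward θ0 (i.e., taking weights between θ1 and θ0). • The figure shows model accuracy along these interpolation curves for both standard fine-tuned models and model soups (left: random hyperparameter search with LP initialization; right: grid search with zero-shot initialization). • Model soups lie beyond the WiSE-FT curves produced by the individual models, and applying WiSE-FT to a model soup further improves accuracy under distribution shift.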
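The WiSE-FT interpolation curve can be sketched as a sweep over the mixing coefficient α in $(1 - \alpha)\theta_0 + \alpha\theta_1$. This is a minimal illustration: models are plain dictionaries of float lists, `evaluate` is a hypothetical callback (e.g. accuracy under distribution shift), and the function names are not from WiSE-FT's code. Applying WiSE-FT to a soup amounts to passing the soup's weights as `theta1`.

```python
from typing import Callable, Dict, List, Sequence, Tuple

# Simplified "state dict": parameter name -> flat list of floats (illustrative assumption).
StateDict = Dict[str, List[float]]


def interpolate(theta0: StateDict, theta1: StateDict, alpha: float) -> StateDict:
    """(1 - alpha) * theta0 + alpha * theta1: alpha = 0 is the initialization, 1 the fine-tuned model."""
    return {k: [(1 - alpha) * a + alpha * b for a, b in zip(theta0[k], theta1[k])]
            for k in theta0}


def wise_ft_curve(theta0: StateDict, theta1: StateDict,
                  evaluate: Callable[[StateDict], float],
                  alphas: Sequence[float]) -> List[Tuple[float, float]]:
    """Score the interpolated weights at each mixing coefficient alpha."""
    return [(alpha, evaluate(interpolate(theta0, theta1, alpha))) for alpha in alphas]
```

Each point on the returned curve corresponds to one point on the slide's interpolation curves; every interpolated model still costs a single forward pass at inference.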

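- 22. 4. Experiments: Cross-dataset soups • The experiments so far examined model soups built from models fine-tuned on the same dataset with different hyperparameters. • This section instead builds model soups from models fine-tuned on different datasets, and evaluates the resulting soup on a held-out dataset without using its labeled training data (i.e., zero-shot evaluation). • Soups are formed from the CLIP zero-shot initialization together with six models fine-tuned separately on CIFAR-10, Describable Textures, Food-101, SUN397, Stanford Cars, and ImageNet. • Evaluation is on CIFAR-100, which does not share classes with CIFAR-10. • Because each task has a different set of classes, the final layer cannot be part of the soup. Therefore, during fine-tuning the linear head produced by CLIP's text tower is frozen, so that task-specific learning is captured only in the backbone weights. • At test time, the "backbone soup" is combined with a zero-shot head built from CLIP's text tower and the CIFAR-100 class names, using the prompt ensemble that Radford et al. used for ImageNet.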
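A backbone soup can be sketched as a uniform average that skips the task-specific head, whose shape differs across tasks. This assumes head parameters can be recognized by a name prefix; the `"head."` prefix, the dictionary-of-lists model format, and both function names are hypothetical.

```python
from typing import Dict, List, Sequence

# Simplified "state dict": parameter name -> flat list of floats (illustrative assumption).
StateDict = Dict[str, List[float]]


def backbone_soup(models: Sequence[StateDict], head_prefix: str = "head.") -> StateDict:
    """Uniformly average every parameter except the task-specific head.

    `head_prefix` is a hypothetical naming convention; heads have different shapes
    across tasks (different class sets), so they are excluded from the average.
    """
    n = len(models)
    names = [k for k in models[0] if not k.startswith(head_prefix)]
    return {k: [sum(v) / n for v in zip(*(m[k] for m in models))] for k in names}


def attach_head(backbone: StateDict, head: StateDict) -> StateDict:
    """Combine the backbone soup with a head for the evaluation task (e.g. a zero-shot head)."""
    model = dict(backbone)
    model.update(head)
    return model
```

Keeping the head out of the average mirrors the slide's design choice: task-specific knowledge lives only in the shared backbone, so any task's head can be attached afterwards.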

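- 23. 4. Experiments: Comparing soups and ensembles • Starting from zero-shot CLIP, models fine-tuned on ImageNet, CIFAR-10, Food101, SUN397, DTD, and Cars are added to form soups, which are evaluated on CIFAR-100. • Different orders of adding the models are shown as thin lines. • A model soup containing the models trained on each of these datasets plus the zero-shot model improves zero-shot CIFAR-100 accuracy by 6.4 percentage points over the CLIP baseline. • The other panel shows the average change in CIFAR-100 accuracy when a model fine-tuned on the dataset on the y-axis is added to the model soup. • This shows that the choice of which fine-tuned models to include has a large effect on the accuracy of the resulting soup.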

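- 24. 4. Experiments: Validating an analytic approximation of the performance gap between a 2-model soup and an ensemble • Consider a soup consisting of only two models, with parameters θ0 and θ1. • With a weight parameter $\alpha \in [0, 1]$, the soup is $\theta_\alpha = (1 - \alpha)\theta_0 + \alpha\theta_1$. • Ensemble model: $f^{\mathrm{ens}}_\alpha(x) = (1 - \alpha) f(x, \theta_0) + \alpha f(x, \theta_1)$; its error $\mathrm{err}^{\mathrm{ens}}_\alpha$ is typically smaller than $\min\{\mathrm{err}_0, \mathrm{err}_1\}$. • Question: when does the soup's error $\mathrm{err}_\alpha$ fall below $\min\{\mathrm{err}_0, \mathrm{err}_1\}$, the better of the two endpoints? • Loss: cross-entropy. • From the above, an approximation of the loss difference between the soup and the ensemble is derived.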
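The difference between the two objects compared on this slide can be sketched on a toy network. This assumes a hypothetical two-layer ReLU network as a stand-in for $f(x, \theta)$; the soup interpolates weights and runs one forward pass, while the ensemble runs both models and interpolates their logits, and both are scored with cross-entropy.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]
Params = Tuple[Matrix, Matrix]  # (W1: hidden x inputs, W2: classes x hidden)


def _matvec(W: Matrix, x: List[float]) -> List[float]:
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def forward(params: Params, x: List[float]) -> List[float]:
    """Logits of a tiny two-layer ReLU network: a hypothetical stand-in for f(x, theta)."""
    W1, W2 = params
    hidden = [max(0.0, v) for v in _matvec(W1, x)]
    return _matvec(W2, hidden)


def _lerp(A: Matrix, B: Matrix, alpha: float) -> Matrix:
    return [[(1 - alpha) * a + alpha * b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def soup_logits(p0: Params, p1: Params, x: List[float], alpha: float) -> List[float]:
    """f(x, theta_alpha): interpolate the weights first, then run a single forward pass."""
    theta_alpha = (_lerp(p0[0], p1[0], alpha), _lerp(p0[1], p1[1], alpha))
    return forward(theta_alpha, x)


def ensemble_logits(p0: Params, p1: Params, x: List[float], alpha: float) -> List[float]:
    """f_alpha^ens(x): run both models, then interpolate their logits."""
    z0, z1 = forward(p0, x), forward(p1, x)
    return [(1 - alpha) * a + alpha * b for a, b in zip(z0, z1)]


def cross_entropy(logits: List[float], label: int) -> float:
    """-log softmax(logits)[label], computed stably with the log-sum-exp trick."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_z - logits[label]
```

If $f$ were linear in $\theta$ (a single linear layer), the soup and the ensemble would produce identical logits; the gap the slide approximates arises because real networks are nonlinear in their parameters, as the ReLU here makes visible.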

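- 25. 4. Experiments: Validating the analytic approximation of the performance gap between a 2-model soup and an ensemble • To test the approximation, it was evaluated on a set of fine-tuned models with different learning rates, data augmentations, random seeds, and α values. Setting β to calibrate the soup model was found to improve the approximation's ability to predict the difference between soup and ensemble error. • When the high learning rate of $10^{-4}$ is excluded, the approximation correlates strongly not only with the error difference but also with the true loss difference, and the approximation and the true loss difference generally agree in sign. Panels: all learning rates (loss); learning rate below $10^{-4}$ (loss); learning rate below $10^{-4}$ (error). • Each marker in the scatter plots represents a different choice of (θ0, θ1) and interpolation weight α. • The vertical axis shows the true performance gap between the soup and the ensemble (loss in the left and center panels, error in the right panel); positive values mean the ensemble is better. The horizontal axis shows the approximation of the loss difference. • Top row: the calibration parameter β is tuned. Bottom row: β is fixed to 1.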

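- 26. 5. Conclusion • Proposed "Model soups", a method that improves accuracy and robustness by averaging the weights of multiple models fine-tuned with different hyperparameters. • Unlike ensembles, model soups require no extra computation at inference time. • Achieved higher accuracy than the best single model with CLIP, ALIGN, and ViT-G. • Confirmed improved zero-shot transfer performance. • Confirmed applicability beyond images, to tasks such as natural language processing. • Analyzed the relationship between weight averaging and logit ensembling, and validated the analysis experimentally.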

- 27. Appendix: References • [32] Jonathan Frankle, Gintare Karolina Dziugaite, Daniel Roy, and Michael Carbin. Linear mode connectivity and the lottery ticket hypothesis. In International Conference on Machine Learning (ICML), 2020. https://arxiv.org/abs/1912.05671. • [46] Pavel Izmailov, Dmitrii Podoprikhin, Timur Garipov, Dmitry Vetrov, and Andrew Gordon Wilson. Averaging weights leads to wider optima and better generalization. In Conference on Uncertainty in Artificial Intelligence (UAI), 2018. https://arxiv.org/abs/1803.05407. • [47] Chao Jia, Yinfei Yang, Ye Xia, Yi-Ting Chen, Zarana Parekh, Hieu Pham, Quoc V Le, Yunhsuan Sung, Zhen Li, and Tom Duerig. Scaling up visual and vision-language representation learning with noisy text supervision. In International Conference on Machine Learning (ICML), 2021. https://arxiv.org/abs/2102.05918. • [72] Alec Radford, Jong Wook Kim, Chris Hallacy, Aditya Ramesh, Gabriel Goh, Sandhini Agarwal, Girish Sastry, Amanda Askell, Pamela Mishkin, Jack Clark, Gretchen Krueger, and Ilya Sutskever. Learning transferable visual models from natural language supervision. In International Conference on Machine Learning (ICML), 2021. https://arxiv.org/abs/2103.00020. • [102] Xiaohua Zhai, Alexander Kolesnikov, Neil Houlsby, and Lucas Beyer. Scaling vision transformers, 2021. https://arxiv.org/abs/2106.04560.
