[DL Reading Group] Swin Transformer: Hierarchical Vision Transformer using Shifted Windows

2021/05/14 Deep Learning JP: http://deeplearning.jp/seminar-2/

![DEEP LEARNING JP

[DL Papers]

http://deeplearning.jp/

Swin Transformer: Hierarchical Vision Transformer using Shifted Windows

Kazuki Fujikawa](https://image.slidesharecdn.com/swintransformer-210514020542/85/DL-Swin-Transformer-Hierarchical-Vision-Transformer-using-Shifted-Windows-1-320.jpg)

![Related work

• Vision Transformer [Dosovitskiy+, ICLR2021]

– Splits the input image into patches (image fragments) and feeds them to a Transformer

• Patch Embedding: flatten the pixels in each patch into a 1-D vector, then apply a linear projection

• Adding a Positional Encoding to the Patch Embedding represents each patch's position in the original image](https://image.slidesharecdn.com/swintransformer-210514020542/85/DL-Swin-Transformer-Hierarchical-Vision-Transformer-using-Shifted-Windows-7-320.jpg)
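The patch-embedding step on the slide above can be sketched in a few lines of NumPy. This is a minimal illustration, not the ViT reference implementation: the function name `patch_embed`, the 224x224 input, the 16-pixel patch size, and the 768-dimensional embedding are assumptions chosen for the example, the weights are random rather than learned, and the bias term and class token are omitted.

```python
import numpy as np

def patch_embed(image, patch_size, weight, pos_embed):
    """Split an (H, W, C) image into non-overlapping patches, flatten each
    patch to a 1-D vector, project it linearly, and add a position embedding."""
    h, w, c = image.shape
    p = patch_size
    assert h % p == 0 and w % p == 0, "image size must be divisible by patch size"
    gh, gw = h // p, w // p
    # (H, W, C) -> (gh, p, gw, p, C) -> (gh, gw, p, p, C) -> (gh*gw, p*p*C)
    patches = (
        image.reshape(gh, p, gw, p, c)
        .transpose(0, 2, 1, 3, 4)
        .reshape(gh * gw, p * p * c)
    )
    tokens = patches @ weight   # linear projection: (N, p*p*C) @ (p*p*C, D)
    return tokens + pos_embed   # one position embedding per patch

# Hypothetical sizes for illustration only.
rng = np.random.default_rng(0)
image = rng.standard_normal((224, 224, 3))
patch_size, dim = 16, 768
weight = rng.standard_normal((patch_size * patch_size * 3, dim)) * 0.02
pos_embed = rng.standard_normal(((224 // patch_size) ** 2, dim)) * 0.02

tokens = patch_embed(image, patch_size, weight, pos_embed)
print(tokens.shape)  # (196, 768)
```

With these sizes the image becomes a sequence of 14 x 14 = 196 tokens, which is what the Transformer consumes.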

![Related work

• Vision Transformer [Dosovitskiy+, ICLR2021]

– Challenge: the computational cost of Self-Attention

• Because attention is computed between all patches in the image, the computational cost grows quadratically with image size (that is, with the number of patches)](https://image.slidesharecdn.com/swintransformer-210514020542/85/DL-Swin-Transformer-Hierarchical-Vision-Transformer-using-Shifted-Windows-8-320.jpg)
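A short arithmetic sketch makes the quadratic growth concrete. It only counts query-key pairs in global self-attention, ignoring the channel dimension and constant factors; the helper names and the 16-pixel patch size are assumptions for illustration.

```python
def num_patches(height, width, patch_size):
    """Number of non-overlapping patches (tokens) for a given image size."""
    return (height // patch_size) * (width // patch_size)

def attention_pairs(height, width, patch_size):
    """Number of query-key pairs when every patch attends to every patch."""
    n = num_patches(height, width, patch_size)
    return n * n

# Doubling the side length quadruples the token count
# and multiplies the number of attention pairs by 16.
for side in (224, 448, 896):
    n = num_patches(side, side, 16)
    print(side, n, attention_pairs(side, side, 16))
```

Going from 224 to 448 pixels per side takes the token count from 196 to 784 and the number of attention pairs from 38,416 to 614,656, which is why global attention becomes expensive for high-resolution inputs such as those used in detection.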

![Experiments: Object Detection

• Experimental setup

– Task

• COCO Object Detection

• Swin Transformer is used as the backbone of four major object detection frameworks

– Cascade Mask R-CNN [Cai+, 2018]

– ATSS [Zhang+, 2020]

– RepPoints v2 [Chen+, 2020]

– Sparse RCNN [Sun+, 2020]](https://image.slidesharecdn.com/swintransformer-210514020542/85/DL-Swin-Transformer-Hierarchical-Vision-Transformer-using-Shifted-Windows-20-320.jpg)


![[DL輪読会]EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks](https://cdn.slidesharecdn.com/ss_thumbnails/yokota20190621dlhack-190621022108-thumbnail.jpg?width=560&fit=bounds)

![[DL輪読会]EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks](https://cdn.slidesharecdn.com/ss_thumbnails/yokota20190621dlhack-190621022108-thumbnail.jpg?width=560&fit=bounds)

[DL輪読会]EfficientNet: Rethinking Model Scaling for Convolutional Neural NetworksDeep Learning JP 2019/06/21

Deep Learning JP:

http://deeplearning.jp/seminar-2/

三次元表現まとめ(深層学習を中心に)

三次元表現まとめ(深層学習を中心に)Tomohiro Motoda 最近の三次元表現やこれをニューラルネットワークのような深層学習モデルに入力,出力する際の工夫についてまとめています.気まぐれに一晩で作成したので,多少の粗さはお許しください.

Deep Learningによる超解像の進歩

Deep Learningによる超解像の進歩Hiroto Honda This document summarizes recent advances in single image super-resolution (SISR) using deep learning methods. It discusses early SISR networks like SRCNN, VDSR and ESPCN. SRResNet is presented as a baseline method, incorporating residual blocks and pixel shuffle upsampling. SRGAN and EDSR are also introduced, with EDSR achieving state-of-the-art PSNR results. The relationship between reconstruction loss, perceptual quality and distortion is examined. While PSNR improves yearly, a perception-distortion tradeoff remains. Developments are ongoing to produce outputs that are both accurately restored and naturally perceived.

[DL輪読会]A Higher-Dimensional Representation for Topologically Varying Neural R...![[DL輪読会]A Higher-Dimensional Representation for Topologically Varying Neural R...](https://cdn.slidesharecdn.com/ss_thumbnails/ahigher-dimensionalrepresentationfortopologicallyvaryingneuralradiancefields1-210924021911-thumbnail.jpg?width=560&fit=bounds)

![[DL輪読会]A Higher-Dimensional Representation for Topologically Varying Neural R...](https://cdn.slidesharecdn.com/ss_thumbnails/ahigher-dimensionalrepresentationfortopologicallyvaryingneuralradiancefields1-210924021911-thumbnail.jpg?width=560&fit=bounds)

![[DL輪読会]A Higher-Dimensional Representation for Topologically Varying Neural R...](https://cdn.slidesharecdn.com/ss_thumbnails/ahigher-dimensionalrepresentationfortopologicallyvaryingneuralradiancefields1-210924021911-thumbnail.jpg?width=560&fit=bounds)

![[DL輪読会]A Higher-Dimensional Representation for Topologically Varying Neural R...](https://cdn.slidesharecdn.com/ss_thumbnails/ahigher-dimensionalrepresentationfortopologicallyvaryingneuralradiancefields1-210924021911-thumbnail.jpg?width=560&fit=bounds)

[DL輪読会]A Higher-Dimensional Representation for Topologically Varying Neural R...Deep Learning JP 2021/09/24

Deep Learning JP:

http://deeplearning.jp/seminar-2/

[DL Paper Reading Group] Swin Transformer: Hierarchical Vision Transformer using Shifted Windows

- 1. DEEP LEARNING JP [DL Papers] http://deeplearning.jp/ Swin Transformer: Hierarchical Vision Transformer using Shifted Windows. Kazuki Fujikawa

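- 2. Summary
  - Bibliographic information
    - Swin Transformer: Hierarchical Vision Transformer using Shifted Windows
      - arXiv:2103.14030
      - Ze Liu, Yutong Lin, Yue Cao, Han Hu, Yixuan Wei, Zheng Zhang, Stephen Lin, Baining Guo (Microsoft Research Asia)
  - Overview
    - Proposes Swin Transformer, a general-purpose backbone for computer vision
      - When Transformers are applied to images, the computation grows quadratically with image size; Swin Transformer reduces this to linear growth
    - Shows a favorable trade-off between model complexity and speed
      - State of the art (SoTA) on Object Detection and Semantic Segmentation tasks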

- 3. Outline
  - Background
  - Related work
  - Proposed method
  - Experiments and results

- 4. Outline
  - Background
  - Related work
  - Proposed method
  - Experiments and results

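- 5. Background
  - Transformer-based architectures have become the de facto standard in NLP, and they have been reported to work in CV as well
  - Resolution is a key obstacle when moving Transformers from language to images
    - Image resolution varies over a much wider range of scales than the number of tokens in natural-language text
    - The cost of Self-Attention is quadratic in the number of patches, and therefore in image size
  - Goal: a Transformer architecture that scales with image size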

- 6. Outline
  - Background
  - Related work
  - Proposed method
  - Experiments and results

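- 7. Related work
  - Vision Transformer [Dosovitskiy+, ICLR2021]
    - Splits the input image into patches (image fragments) and feeds them to a Transformer
      - Patch Embedding: the pixels in each patch are flattened into a 1-D vector, followed by a linear projection
      - Adding a Positional Encoding to the Patch Embedding encodes where each patch sits in the original image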
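The Patch Embedding step described above can be sketched in plain Python. This is a minimal illustration rather than the Vision Transformer implementation: the function names `patchify`, `linear`, and `add_position` are hypothetical, images are nested lists of shape H x W x C, and the weights and position table are supplied by the caller instead of being learned.

```python
from typing import List

Vector = List[float]


def patchify(image: List[List[Vector]], patch: int) -> List[Vector]:
    """Split an H x W x C image into non-overlapping patch x patch tiles and
    flatten each tile into one vector of length patch * patch * C."""
    height, width = len(image), len(image[0])
    assert height % patch == 0 and width % patch == 0, "image must tile evenly"
    tokens = []
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            flat: Vector = []
            for y in range(top, top + patch):
                for x in range(left, left + patch):
                    flat.extend(image[y][x])  # append all channels of one pixel
            tokens.append(flat)
    return tokens


def linear(tokens: List[Vector], weight: List[Vector], bias: Vector) -> List[Vector]:
    """Apply y = W x + b to every token; weight has shape (out_dim, in_dim)."""
    return [
        [sum(w * v for w, v in zip(row, tok)) + b for row, b in zip(weight, bias)]
        for tok in tokens
    ]


def add_position(tokens: List[Vector], pos_table: List[Vector]) -> List[Vector]:
    """Add one position vector per token (assumption: a simple lookup table)."""
    return [[t + p for t, p in zip(tok, pos)] for tok, pos in zip(tokens, pos_table)]


# Toy usage: a 4 x 4 single-channel image, 2 x 2 patches, 1-D embedding.
image = [[[float(y * 4 + x)] for x in range(4)] for y in range(4)]
tokens = patchify(image, 2)
embedded = linear(tokens, weight=[[1.0, 1.0, 1.0, 1.0]], bias=[0.0])
with_pos = add_position(embedded, [[0.0], [1.0], [2.0], [3.0]])
```

Each token here is just the raster-ordered pixels of one tile, so the only spatial information the Transformer receives is what the position table adds.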

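- 8. Related work
  - Vision Transformer [Dosovitskiy+, ICLR2021]
    - Issue: the computational cost of Self-Attention
      - Attention is computed between every pair of patches in the image, so the cost grows quadratically with image size (that is, with the number of patches)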

- 9. Outline
  - Background
  - Related work
  - Proposed method
  - Experiments and results

- 10. Proposed method: Swin Transformer
  - Built from the following three modules
    - Patch Partition + Linear Embedding
    - Swin Transformer Block
      - Window-based Multi-head Self-Attention (W-MSA)
      - Shifted-window-based Multi-head Self-Attention (SW-MSA)
    - Patch Merging

- 11. Proposed method: Swin Transformer
  - Patch Partition + Linear Embedding
    - The Patch Embedding is computed in the same way as in Vision Transformer
      - Split into patches → linear projection

- 12. Proposed method: Swin Transformer
  - Window-based Multi-head Self-Attention (W-MSA)
    - After the image is split into patches, windows are defined as groups of patches
    - Self-Attention attends only to patches inside the same window
      - → The cost of Self-Attention grows linearly with image size
  - Figure labels: Patch (e.g. 4x4 pixels), Window (e.g. 4x4 patches), Swin Transformer Block
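The linear-versus-quadratic claim can be checked numerically. The sketch below uses the complexity expressions from the cited Swin Transformer paper, which do not appear on the slide: for an h x w grid of patches with C channels and M x M windows, global attention costs 4hwC² + 2(hw)²C and window attention costs 4hwC² + 2M²hwC. The function names are hypothetical, and the values M = 7 and C = 96 in the usage lines are assumptions chosen for illustration.

```python
from typing import List, Tuple


def msa_flops(h: int, w: int, c: int) -> int:
    """Global multi-head self-attention: quadratic in the patch count h * w."""
    return 4 * h * w * c * c + 2 * (h * w) ** 2 * c


def wmsa_flops(h: int, w: int, c: int, m: int) -> int:
    """Window attention with m x m windows: linear in h * w for fixed m."""
    return 4 * h * w * c * c + 2 * m * m * h * w * c


def window_partition(h: int, w: int, m: int) -> List[List[Tuple[int, int]]]:
    """Group the patch grid into non-overlapping m x m windows.

    Returns one list of (row, col) patch coordinates per window; attention
    is only ever computed among the coordinates inside a single list.
    """
    assert h % m == 0 and w % m == 0, "grid must tile evenly into windows"
    return [
        [(y, x) for y in range(top, top + m) for x in range(left, left + m)]
        for top in range(0, h, m)
        for left in range(0, w, m)
    ]


# Doubling the side length quadruples the number of patches.
small = (56, 56)
large = (112, 112)
global_ratio = msa_flops(*large, 96) / msa_flops(*small, 96)
window_ratio = wmsa_flops(*large, 96, 7) / wmsa_flops(*small, 96, 7)
windows = window_partition(8, 8, 4)  # 4 windows of 16 patches each
```

With four times as many patches, `window_ratio` is exactly 4 while `global_ratio` is far larger, because the (hw)² term dominates global attention.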

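- 13. Proposed method: Swin Transformer
  - Shifted-window-based Multi-head Self-Attention (SW-MSA)
    - W-MSA alone cannot model relationships between windows
      - → The windows are shifted so that relationships across windows can be modeled
      - (Figure below: the windows are shifted by 2 patches vertically and 2 patches horizontally)
  - Figure label: Swin Transformer Block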

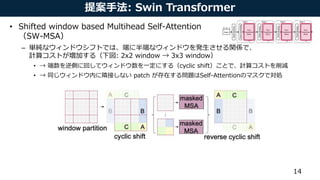

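- 14. Proposed method: Swin Transformer
  - Shifted-window-based Multi-head Self-Attention (SW-MSA)
    - A naive window shift leaves partial windows along the borders, which increases the number of windows and therefore the computational cost (figure below: 2x2 windows → 3x3 windows)
      - → The leftover strips are wrapped around to the opposite side (cyclic shift), which keeps the number of windows constant and reduces the cost
      - → After wrapping, a window can contain patches that are not adjacent in the original image; this is handled with a Self-Attention mask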
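The cyclic shift and the attention mask can be sketched as follows. This is an illustrative reconstruction under stated assumptions, not the authors' code: the names `cyclic_shift`, `region_ids`, and `window_mask` are hypothetical, the grid is 8 x 8 patches with 4 x 4 windows and a shift of 2 patches (matching the figure description), and the mask is a boolean table rather than the additive mask a real attention layer would use.

```python
from typing import List


def cyclic_shift(grid: List[list], shift: int) -> List[list]:
    """Roll a 2-D grid up and to the left by `shift` cells with wrap-around."""
    h, w = len(grid), len(grid[0])
    return [[grid[(y + shift) % h][(x + shift) % w] for x in range(w)]
            for y in range(h)]


def region_ids(h: int, w: int, m: int, shift: int) -> List[List[int]]:
    """Label each cell of the shifted grid by the image region it came from.

    Along each axis there are three bands: every window except the last one,
    the unwrapped part of the last window, and the strip that was wrapped
    around from the opposite edge.
    """
    def band(i: int, n: int) -> int:
        if i < n - m:
            return 0
        if i < n - shift:
            return 1
        return 2

    return [[3 * band(y, h) + band(x, w) for x in range(w)] for y in range(h)]


def window_mask(ids: List[List[int]], top: int, left: int, m: int) -> List[List[bool]]:
    """Mask for one m x m window: True where two patches may attend to each
    other, i.e. where they come from the same region of the original image."""
    cells = [ids[y][x] for y in range(top, top + m) for x in range(left, left + m)]
    return [[a == b for b in cells] for a in cells]


# Assumed toy setting: 8 x 8 patches, 4 x 4 windows, shift of 2 patches.
grid = [[(y, x) for x in range(8)] for y in range(8)]
shifted = cyclic_shift(grid, 2)
ids = region_ids(8, 8, 4, 2)
top_left = window_mask(ids, 0, 0, 4)      # one region: nothing is masked
bottom_right = window_mask(ids, 4, 4, 4)  # four 2 x 2 regions
```

The window count stays at 2 x 2 after the shift. In the bottom-right window, each patch may attend only to the 4 patches from its own region, because the other 12 were wrapped in from different corners of the image.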

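- 15. Proposed method: Swin Transformer
  - Patch Merging
    - After several stacked Swin Transformer Blocks, each group of adjacent 2x2 patches is merged
      - As in Patch Embedding, the 2x2 patches are flattened into a 1-D vector and passed through a linear projection
      - The following Swin Transformer Blocks keep the number of patches per window fixed, so Self-Attention covers a wider area of the image at the same cost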
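A minimal sketch of Patch Merging on a nested-list feature map follows. The function names are hypothetical and the order in which the four neighbours are concatenated is an arbitrary choice made here. The slide does not state the projection's output width; the cited paper projects the concatenated 4C features down to 2C, and the toy weight below follows that ratio.

```python
from typing import List

Vector = List[float]
FeatureMap = List[List[Vector]]  # H x W x C


def merge_patches(feat: FeatureMap) -> FeatureMap:
    """Concatenate each 2 x 2 group of neighbouring patches along channels.

    An H x W x C map becomes (H/2) x (W/2) x 4C.
    """
    h, w = len(feat), len(feat[0])
    assert h % 2 == 0 and w % 2 == 0, "grid must have even height and width"
    return [
        [feat[y][x] + feat[y][x + 1] + feat[y + 1][x] + feat[y + 1][x + 1]
         for x in range(0, w, 2)]
        for y in range(0, h, 2)
    ]


def project(feat: FeatureMap, weight: List[Vector]) -> FeatureMap:
    """Apply a bias-free linear map to every position; weight is (out, in)."""
    return [
        [[sum(wi * v for wi, v in zip(row, vec)) for row in weight] for vec in line]
        for line in feat
    ]


# Toy usage: 4 x 4 grid with C = 1, merged to 2 x 2 with 4C = 4 channels,
# then projected to 2C = 2 channels (assumed ratio, see the note above).
feat = [[[float(y * 4 + x)] for x in range(4)] for y in range(4)]
merged = merge_patches(feat)
reduced = project(merged, [[1.0, 0.0, 0.0, 0.0], [0.0, 0.0, 0.0, 1.0]])
```

Because the grid halves in each direction while the window size stays fixed, one window in the next stage spans four times the image area.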

- 16. Outline
  - Background
  - Related work
  - Proposed method
  - Experiments and results

- 17. Experiments: Image Classification
  - Setup
    - Architecture: several models with different layer counts and channel widths
      - Swin-T: C = 96, layer numbers = {2, 2, 6, 2}
      - Swin-S: C = 96, layer numbers = {2, 2, 18, 2}
      - Swin-B: C = 128, layer numbers = {2, 2, 18, 2}
      - Swin-L: C = 192, layer numbers = {2, 2, 18, 2}
    - Task
      - ImageNet 1000-class classification (train: 1.28M images), trained from scratch
      - Evaluated against other models of similar complexity (parameter count and speed)
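The four variants can be written down as a small configuration table, from which the feature-map size at each stage follows. This is a sketch under assumptions not stated on this slide: a 224 x 224 input, 4 x 4 pixel patches (the patch size given as an example on an earlier slide), and channel width doubling at every Patch Merging step as in the cited paper. `SwinConfig` and `stage_shapes` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class SwinConfig:
    name: str
    channels: int                      # C, the width of the first stage
    depths: Tuple[int, int, int, int]  # number of blocks in each stage


CONFIGS = [
    SwinConfig("Swin-T", 96, (2, 2, 6, 2)),
    SwinConfig("Swin-S", 96, (2, 2, 18, 2)),
    SwinConfig("Swin-B", 128, (2, 2, 18, 2)),
    SwinConfig("Swin-L", 192, (2, 2, 18, 2)),
]


def stage_shapes(cfg: SwinConfig, image_size: int = 224,
                 patch_size: int = 4) -> List[Tuple[int, int, int, int]]:
    """Return (height, width, channels, depth) for each of the four stages.

    Assumption: every stage after the first starts with Patch Merging, which
    halves the grid side and doubles the channel width.
    """
    side = image_size // patch_size
    dim = cfg.channels
    shapes = []
    for index, depth in enumerate(cfg.depths):
        if index > 0:
            side //= 2
            dim *= 2
        shapes.append((side, side, dim, depth))
    return shapes


swin_t_shapes = stage_shapes(CONFIGS[0])
```

Under these assumptions Swin-T goes from a 56 x 56 grid with 96 channels to a 7 x 7 grid with 768 channels, the same pyramid of resolutions that CNN backbones such as ResNet produce.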

- 18. Experiments: Image Classification
  - Results
    - Outperforms the SoTA Transformer-based architecture (DeiT) at similar complexity
    - Gives a slightly better speed-accuracy trade-off than SoTA CNN-based architectures (RegNet, EfficientNet)

- 19. Experiments: Image Classification
  - Results
    - Outperforms the SoTA Transformer-based architecture (DeiT) at similar complexity
    - Gives a slightly better speed-accuracy trade-off than SoTA CNN-based architectures (RegNet, EfficientNet)

- 20. Experiments: Object Detection
  - Setup
    - Task
      - COCO Object Detection
      - Swin Transformer is used as the backbone of four major object detection frameworks
        - Cascade Mask R-CNN [Cai+, 2018]
        - ATSS [Zhang+, 2020]
        - RepPoints v2 [Chen+, 2020]
        - Sparse R-CNN [Sun+, 2020]

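- 21. Experiments: Object Detection
  - Results
    - Improves on the baseline (ResNet50) in every object detection framework
    - Compared with the Transformer-based backbone DeiT, both accuracy and speed improve
    - Also improves on SoTA models

- 22. Experiments: Object Detection
  - Results
    - Improves on the baseline (ResNet50) in every object detection framework
    - Compared with the Transformer-based backbone DeiT, both accuracy and speed improve
    - Also improves on SoTA models

- 23. Experiments: Object Detection
  - Results
    - Improves on the baseline (ResNet50) in every object detection framework
    - Compared with the Transformer-based backbone DeiT, both accuracy and speed improve
    - Also improves on SoTA models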

- 24. Experiments: Semantic Segmentation
  - Setup
    - Task: ADE20K
  - Results
    - Faster and more accurate than a DeiT model of comparable complexity
    - More accurate than the SoTA model (SETR) with fewer parameters

- 25. Experiments: Semantic Segmentation
  - Setup
    - Task: ADE20K
  - Results
    - Faster and more accurate than a DeiT model of comparable complexity
    - More accurate than the SoTA model (SETR) with fewer parameters

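- 26. Conclusion
  - Proposed Swin Transformer, a general-purpose backbone for computer vision
    - When Transformers are applied to images, the computation grows quadratically as image size increases; Swin Transformer reduces this to linear growth
  - Showed a favorable trade-off between model complexity and speed
    - SoTA on Object Detection and Semantic Segmentation tasks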

- 27. References
  - Liu, Ze, et al. "Swin Transformer: Hierarchical Vision Transformer using Shifted Windows." arXiv preprint arXiv:2103.14030 (2021).
  - Dosovitskiy, Alexey, et al. "An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale." In ICLR 2021.
