Drupal as a Semantic Web platform - ISWC 2012

This presentation describes some use cases and deployments of Drupal for building bio-medical platforms powered by semantic web technologies such as RDF, SPARQL, JSON-LD.

Recommended

Data strategies - Drupal Decision Makers training

By scorlosquet. This document discusses data strategies in Drupal, including using structured data like Schema.org to enhance search engine results. It explains how to describe content types and their properties to help machines understand web pages. The document also introduces RDF extensions that allow Drupal to expose structured data as RDF and JSON-LD, and through a SPARQL endpoint, to integrate with the semantic web.

Slides semantic web and Drupal 7 NYCCamp 2012

By scorlosquet. This document summarizes a presentation about using semantic web technologies like RDFa, schema.org, and JSON-LD with Drupal 7. It discusses how Drupal 7 outputs RDFa by default and can be extended through contributed modules to support additional RDF formats, a SPARQL endpoint, schema.org mapping, and JSON-LD. Examples of semantic markup for events and people are provided.

The Semantic Web and Drupal 7 - Loja 2013

By scorlosquet. Presentation given at Drupal Summit Latino 2013 in Loja, Ecuador, covering the Semantic Web and Drupal 7.

Drupal and the semantic web - SemTechBiz 2012

By scorlosquet. This document summarizes a presentation on leveraging the semantic web with Drupal 7. The presentation introduces Drupal and its uses as a content management system. It discusses Drupal 7's integration with the semantic web through its built-in RDFa support and contributed modules that add further semantic web capabilities such as SPARQL querying and JSON-LD serialization. The presentation demonstrates these features in Drupal through examples and demos. It also introduces Domeo, a web-based tool for semantically annotating online documents that can integrate with Drupal.

Apache Marmotta (incubating)

By Sergio Fernández. Sergio Fernández gave a presentation on Marmotta, an open platform for linked data. He discussed Marmotta's main features, such as support for read-write linked data and SPARQL/LDPath querying. He also covered Marmotta's architecture, its timeline, including joining the Apache Incubator in 2012, and how its team of 11 committers from 6 organizations works using the Apache Way process. Fernández encouraged participation to help contribute code and documentation to the project.

Solr in drupal 7 index and search more entities

By Biglazy. This document discusses using Apache Solr for search in Drupal 7. It notes that in Drupal 7, everything is an entity, including content, users, taxonomy terms, and files. It recommends using Apache Solr modules like apachesolr, apachesolr_user_indexer, and apachesolr_file to index these various entity types for full-text search. Finally, it provides examples of sites using Solr search and links to a tutorial on setting up Solr with Drupal 7.

Intro ror

By tim_tang. Ruby on Rails is a full-stack web application framework used by companies like Twitter, GitHub, and Groupon. It favors convention over configuration, following typical directory structures and naming conventions. Ruby on Rails promotes agile development through rapid prototyping, built-in generators, and plugins and libraries.

RDFauthor (EKAW)

By Norman Heino. My presentation on RDFauthor at EKAW2010, Lisbon. For more information on RDFauthor visit http://aksw.org/Projects/RDFauthor; for the code visit http://code.google.com/p/rdfauthor/.

Drupal 7 and schema.org module (Jan 2012)

By scorlosquet. Overview of the current implementation of schema.org and Drupal 7 - http://drupal.org/project/schemaorg

Drupal 7 and schema.org module

By scorlosquet. Overview of the current implementation of schema.org and Drupal 7 - http://drupal.org/project/schemaorg

Semantic Media Management with Apache Marmotta

By Thomas Kurz. Thomas Kurz gives a presentation on semantic media management using Apache Marmotta. He plans to create a new Marmotta module that supports storing images, annotating image fragments, and retrieving images and fragments based on annotations. This will make use of the Linked Data Platform (LDP), Media Fragment URIs, the Open Annotation model, and SPARQL-MM. The goal is to create a Marmotta module and webapp that extends LDP for image fragments and provides a UI for image annotation and retrieval.

Enabling access to Linked Media with SPARQL-MM

By Thomas Kurz. The amount of audio, video and image data on the web is growing immensely, which leads to data management problems arising from the hidden character of multimedia. Interlinking semantic concepts and media data, with the aim of bridging the gap between the document web and the Web of Data, has therefore become common practice and is known as Linked Media. However, the value of connecting media to its semantic metadata is limited by a lack of access methods specialized for media assets and fragments, as well as by the variety of description models in use. With SPARQL-MM we extend SPARQL, the standard query language for the Semantic Web, with media-specific concepts and functions to unify access to Linked Media. In this paper we describe the motivation for SPARQL-MM, present the state of the art of Linked Media description formats and multimedia query languages, and outline the specification and implementation of the SPARQL-MM function set.

Linked Media Management with Apache Marmotta

By Thomas Kurz. The document introduces Apache Marmotta, an open source linked data platform. It provides a linked data server, SPARQL endpoint, and libraries for building linked data applications. Marmotta allows users to easily publish and query RDF data on the web. It also includes features for multimedia management such as semantic annotation of media and extensions for querying over media fragments.

How to create your own ODF

By Rob Snelders. This document discusses how to create OpenDocument Format (ODF) files in 3 main ways: 1) using LibreOffice macros or the command line interface to generate files programmatically, 2) converting existing files to ODF using converters, or 3) directly writing an ODF file using its underlying XML structure and required elements. It then provides more detail on the typical file structure of ODF files, both as created in LibreOffice and in minimal form, and goes on to explain how to handle different content types like text, spreadsheets, presentations and images in ODF files.

Custom Drupal Development, Secure and Performant

By Doug Green. This document discusses concepts and best practices for custom Drupal development including:

- Optimizing performance through caching, database indexing, and reducing page assets

- Addressing scalability, threads, and potential DDoS concerns

- Leveraging APIs, infrastructure like CDNs, and front-end techniques for improved user experience

Linked Media and Data Using Apache Marmotta

By Sebastian Schaffert. Overview of the history of Linked Media in the context of Apache Marmotta. Invited talk at the LIME2014 workshop at ESWC2014.

Fedora 4 Deep Dive

By David Wilcox. This document provides an overview of Fedora 4 including its goals, features, and roadmap. The key points are:

- Fedora 4 aims to improve performance, support flexible storage options, research data management, linked open data, and be an improved platform for developers.

- Fedora 4 beta was released in 2014 and featured the same capabilities as the upcoming production release. Acceptance testing, beta pilots, and community feedback will inform the production release.

- Fedora 4 highlights include content modeling, authorization, versioning, scaling to large files and objects, integrated and external search, and linked data/RDF support.

Web Development

By Shivakrishna Gannu. This document provides an overview of the web development skills and tools included in the author's portfolio. It describes HTML as the language that defines the structure of web pages using tags, CSS as the language used to describe page presentation and styles, JavaScript as an essential programming language for interactive web pages, and Bootstrap as a popular front-end framework for designing responsive websites and applications. Screenshots of the author's portfolio are also included.

Open source data_warehousing_overview

By Alex Meadows. This document summarizes different approaches to data warehousing including Inmon's 3NF model, Kimball's conformed dimensions model, Linstedt's data vault model, and Rönnbäck's anchor model. It discusses the challenges of data warehousing and provides examples of open source software that can be used to implement each approach including MySQL, PostgreSQL, Greenplum, Infobright, and Hadoop. Cautions are also noted for each methodology.

Dynamic websites

By Jason Castellano. A dynamic website changes automatically, for example daily. It can use client-side scripting, server-side scripting, or a combination of both to generate the changing content. These sites also include HTML for the basic structure.

Steam Learn: An introduction to Redis

By inovia. This document provides an introduction and overview of Redis. Redis is described as an in-memory non-relational database and data structure server. It is simple to use with no schema or user required. Redis supports a variety of data types including strings, hashes, lists, sets, sorted sets, and more. It is flexible and can be configured for caching, persistence, custom functions, transactions, and publishing/subscribing. Redis is scalable through replication and partitioning. It is widely adopted by companies like GitHub, Instagram, and Twitter for uses like caching, queues, and leaderboards.

Arango DB

By NexThoughts Technologies. ArangoDB is a native multi-model database system developed by triAGENS GmbH. The database system supports three important data models (key/value, documents, graphs) with one database core and a unified query language, AQL (ArangoDB Query Language). ArangoDB is a NoSQL database system, but AQL is similar in many ways to SQL.

Redis

By Ramon Wartala. Redis is an advanced key-value store that is similar to memcached but supports different value types like strings, lists, sets, and sorted sets. It has master-slave replication, expiration of keys, and can be accessed from Ruby through libraries like redis-rb. The Redis server was written in C and supports semi and fully persistent modes.

Web Development Intro

By Cindy Royal. The document discusses the history and categories of web development. It begins with early technologies like HTML, CSS, and JavaScript. It then discusses how PHP and ASP allowed programming concepts and connecting to databases. Now there are many ways to have an online presence without knowing every technology, including blog platforms, content management systems, and web frameworks. Data visualization is highlighted as an important future area, with open source tools mentioned. Challenges of learning new skills and innovating are also noted.

DOC Presentation by DOC Contractor Alison McCauley

By Federal Communicators Network. The document discusses the goals and plan for building a new content management system (CMS) platform to manage multiple Department of Commerce websites. The key goals were to move to Drupal 7, have a responsive design, and create shared functionality across sites for a cohesive experience. The plan was to build reusable features like content types, galleries, and taxonomies that could be enabled or disabled on each site as needed from a single code repository. While complex, this allows for easier development, maintenance, and a more cohesive user experience across sites.

Mongo DB for Java, Python and PHP Developers

By Rick Hightower. Getting started with MongoDB. Covers the basics: why Mongo, features, architecture (replica sets, map reduce, aggregation framework), and code examples in JavaScript, Java, Python and PHP.

Drupal 7 and RDF

By scorlosquet. This document discusses Drupal 7 and its new capabilities for representing content as Resource Description Framework (RDF) data. It provides an overview of Drupal's history with RDF and semantic technologies. It describes how Drupal 7 core is now RDFa enabled out of the box and how contributed modules can import vocabularies and provide SPARQL endpoints. The document advocates experimenting with the new RDF features in Drupal 7.

Embedding Linked Data Invisibly into Web Pages: Strategies and Workflows for ...

By National Information Standards Organization (NISO). As described in the April NISO/DCMI webinar by Dan Brickley, schema.org is a search-engine initiative aimed at helping webmasters use structured data markup to improve the discovery and display of search results. Drupal 7 makes it easy to mark up HTML pages with schema.org terms, allowing users to quickly build websites with structured data that can be understood by Google and displayed as Rich Snippets.

Improved search results are only part of the story, however. Data-bearing documents become machine-processable once you find them. The subject matter, important facts, calendar events, authorship, licensing, and whatever else you might like to share are there for the taking. Sales reports, RSS feeds, industry analysis, maps, diagrams and process artifacts can now connect back to other data sets to provide linkage to context and related content. The key to this is the adoption of standards for both the data model (RDF) and the means of weaving it into documents (RDFa). Drupal 7 has become the leading content platform to adopt these standards.

This webinar will describe how RDFa and Drupal 7 can improve how organizations publish information and data on the Web for both internal and external consumption. It will discuss what is required to use these features and how they impact publication workflow. The talk will focus on high-level and accessible demonstrations of what is possible. Technical people should learn how to proceed while non-technical people will learn what is possible.

Drupal and RDF

By scorlosquet. Drupal is an open source content management system that has been exposing its data in RDF format since 2006 through contributed modules. For Drupal 7, RDF support is being built directly into the core, allowing Drupal sites to natively publish structured data using vocabularies like FOAF, SIOC, and Dublin Core. This will empower Drupal users and site builders to more directly participate in the Web of Linked Data and help create new types of semantic applications.

When Drupal and RDF meet

By scorlosquet. This document discusses integrating RDF and semantic web technologies with the Drupal content management system. It provides an overview of Drupal, describes how its data model of content types and fields can be mapped to RDF classes and properties, and details an experiment exposing Drupal data in RDF format. It notes that Drupal 7 will natively support RDFa and help expose more linked data on the web through its large user base of over 227,000 sites.

Drupal and the Semantic Web - ESIP Webinar

By scorlosquet. This document summarizes a presentation about using semantic web technologies like the Resource Description Framework (RDF) and Linked Data with Drupal 7. It discusses how Drupal 7 maps content types and fields to RDF vocabularies by default and how additional modules can add features like mapping to Schema.org and exposing SPARQL and JSON-LD endpoints. The presentation also covers how Drupal integrates with the larger Semantic Web through technologies like Linked Open Data.

Linked data enhanced publishing for special collections (with Drupal)

By Joachim Neubert. This document discusses using Drupal 7 as a content management system for publishing special collections as linked open data. It provides an overview of how Drupal allows customizing content types and fields for mapping to RDF properties. While Drupal 7 provides basic RDFa support out of the box, there are some limitations around nested RDF structures and multiple entities per page that may require custom code. The document outlines some additional linked data modules for Drupal 7 and highlights improved RDF support anticipated in Drupal 8.

Drupal in-depth

By Kathryn Carruthers. Drupal is an open-source content management system (CMS) that allows users to build and manage websites. It provides features like blogs, galleries, and the ability to restrict content by user roles. Drupal is highly customizable through modules and themes and supports moving sites between development, test, and production environments. While it uses some technical terms like "nodes" and "taxonomy," Drupal is accessible to non-developers and can be installed on common web hosting with Apache, MySQL, and PHP. Resources for learning Drupal include books, training videos, online communities, and conferences.

Doctrine Project

By Daniel Lima. Doctrine is a PHP library that provides persistence services and related functionality. It includes an object relational mapper (ORM) for mapping database records to PHP objects, and a database abstraction layer (DBAL). Other libraries include an object document mapper (ODM) for NoSQL databases, common annotations, caching, data fixtures, and migrations. The presentation provides an overview of each library and how to use Doctrine for common tasks like mapping classes, saving and retrieving objects, and schema migrations. Help and contribution opportunities are available on Google Groups, IRC channels, and the project's GitHub page.

Introduction to drupal

By Pedro Cambra. Slides from an introduction to Drupal training: basic concepts and examples for a better understanding of Drupal.

Introduction to Apache Spark

By datamantra. Apache Spark is a fast, general engine for large-scale data processing. It provides a unified analytics engine for batch, interactive, and stream processing using an in-memory abstraction called resilient distributed datasets (RDDs). Spark's speed comes from its ability to run computations directly on data stored in cluster memory and to optimize performance through caching. It also integrates well with other big data technologies like HDFS, Hive, and HBase. Many large companies are using Spark for its speed, ease of use, and support for multiple workloads and languages.

Lupus Decoupled Drupal - Drupal Austria Meetup - 2023-04.pdf

Lupus Decoupled Drupal - Drupal Austria Meetup - 2023-04.pdfWolfgangZiegler6 Wolfgang Ziegler presented on Lupus Decoupled Drupal, a component-oriented decoupled Drupal stack built with Nuxt.js. It provides a complete, integrated solution for building decoupled Drupal applications with out-of-the-box features like API routing and CORS headers. Components render each Drupal page into reusable frontend components. The stack allows for performance benefits like caching while retaining Drupal features like content editing and authentication. Current work includes finishing JSON views support, automated testing, and documentation to stabilize the beta release.

[HKDUG] #20151017 - BarCamp 2015 - Drupal 8 is Coming! Are You Ready?![[HKDUG] #20151017 - BarCamp 2015 - Drupal 8 is Coming! Are You Ready?](https://cdn.slidesharecdn.com/ss_thumbnails/hkdug20151017-barcamp2015-drupal8iscomingareyouready-151017054334-lva1-app6891-thumbnail.jpg?width=560&fit=bounds)

![[HKDUG] #20151017 - BarCamp 2015 - Drupal 8 is Coming! Are You Ready?](https://cdn.slidesharecdn.com/ss_thumbnails/hkdug20151017-barcamp2015-drupal8iscomingareyouready-151017054334-lva1-app6891-thumbnail.jpg?width=560&fit=bounds)

![[HKDUG] #20151017 - BarCamp 2015 - Drupal 8 is Coming! Are You Ready?](https://cdn.slidesharecdn.com/ss_thumbnails/hkdug20151017-barcamp2015-drupal8iscomingareyouready-151017054334-lva1-app6891-thumbnail.jpg?width=560&fit=bounds)

![[HKDUG] #20151017 - BarCamp 2015 - Drupal 8 is Coming! Are You Ready?](https://cdn.slidesharecdn.com/ss_thumbnails/hkdug20151017-barcamp2015-drupal8iscomingareyouready-151017054334-lva1-app6891-thumbnail.jpg?width=560&fit=bounds)

[HKDUG] #20151017 - BarCamp 2015 - Drupal 8 is Coming! Are You Ready?Wong Hoi Sing Edison The document is about a BarCamp event on Drupal 8 and readiness for it. It provides an agenda that includes an introduction to Drupal, what's new in Drupal 8 like its focus on mobile and multilingual capabilities, why upgrade to Drupal 8 for improvements, the release timeline and status, and next steps and resources for learning more. The speaker is introduced as a Drupal developer and contributor since 2005 who also co-founded the Hong Kong Drupal User Group.

Bio2RDF presentation at Combine 2012

Bio2RDF presentation at Combine 2012François Belleau This document discusses how semantic web technologies like RDF and SPARQL can help navigate complex bioinformatics databases. It describes a three step method for building a semantic mashup: 1) transform data from sources into RDF, 2) load the RDF into a triplestore, and 3) explore and query the dataset. As an example, it details how Bio2RDF transformed various database cross-reference resources into RDF and loaded them into Virtuoso to answer questions about namespace usage.

Drupal in 5mins + Previewing Drupal 8.x

Drupal in 5mins + Previewing Drupal 8.xWong Hoi Sing Edison This document provides an overview of Drupal and previews Drupal 8 features from a presentation given at BarCamp Hong Kong 2013. It introduces Drupal as an open-source CMS, outlines the presentation topics which include popular Drupal modules, a Drupal 7 demo installation, creating a new dummy site, and reviewing new features in Drupal 8. Key new features highlighted for Drupal 8 include Views and configurable being included in the core, improved support for HTML5, configuration management, web services, layouts, and multilingual capabilities.

Publishing Linked Data using Schema.org

Publishing Linked Data using Schema.orgDESTIN-Informatique.com An introduction to Linked Data publishing, starting from the "Why?" and giving the outline of the "How?"

Using schema.org to improve SEO

Using schema.org to improve SEOscorlosquet Using schema.org to improve SEO presented at DrupalCamp Asheville in August 2014.

http://drupalasheville.com/drupal-camp-asheville-2014/sessions/using-schemaorg-improve-seo

Linked Data Publishing with Drupal (SWIB13 workshop)

Linked Data Publishing with Drupal (SWIB13 workshop)Joachim Neubert Publishing Linked Open Data in a user-appealing way is still a challenge: Generic solutions to convert arbitrary RDF structures to HTML out-of-the-box are available, but leave users perplexed. Custom-built web applications to enrich web pages with semantic tags "under the hood" require high efforts in programming. Given this dilemma, content management systems (CMS) could be a natural enhancement point for data on the web. In the case of Drupal, one of the most popular CMS nowadays, Semantic Web enrichment is provided as part of the CMS core. In a simple declarative approach, classes and properties from arbitrary vocabularies can be added to Drupal content types and fields, and are turned into Linked Data on the web pages automagically. The embedded RDFa marked-up data can be easily extracted by other applications. This makes the pages part of the emerging Web of Data, and in the same course helps discoverability with the major search engines.

In the workshop, you will learn how to make use of the built-in Drupal 7 features to produce RDFa enriched pages. You will build new content types, add custom fields and enhance them with RDF markup from mixed vocabularies. The gory details of providing LOD-compatible "cool" URIs will not be skipped, and current limitations of RDF support in Drupal will be explained. Exposing the data in a REST-ful application programming interface or as a SPARQL endpoint are additional options provided by Drupal modules. The workshop will also introduce modules such as Web Taxonomy, which allows linking to thesauri or authority files on the web via simple JSON-based autocomplete lookup. Finally, we will touch the upcoming Drupal 8 version. (Workshop announcement)

DrupalCamp NJ 2014 Solr and Schema.org

DrupalCamp NJ 2014 Solr and Schema.orgscorlosquet The document discusses how search engines are incorporating knowledge graphs and rich snippets to provide more detailed information to users. It describes Google's Knowledge Graph and how search engines like Bing are implementing similar features. The document then outlines how the Schema.org standard and modules like Schema.org and Rich Snippets for Drupal can help structure Drupal content to be understood by search engines and displayed as rich snippets in search results. Integrating these can provide benefits like a consistent search experience across public and private Drupal content.

Beginners Guide to Drupal

Beginners Guide to DrupalGerald Villorente This document provides an overview of Drupal, an open-source content management framework (CMS) written in PHP. Drupal allows for rapid website development, has a large community and support network, and is used by thousands of sites including whitehouse.gov and cnn.com. The document outlines Drupal's modular architecture and installation process, and provides resources for learning more about using and customizing Drupal.

The Future of Search and SEO in Drupal

The Future of Search and SEO in Drupalscorlosquet Stéphane Corlosquet and Nick Veenhof presented on the future of search and SEO. They discussed how search engines like Google are moving towards knowledge graphs that understand relationships between entities rather than just keyword matching. They explained how the Schema.org standard and modules like Schema.org and Rich Snippets for Drupal help structure Drupal content to be understood by search engines and display rich snippets in search results. The presentation demonstrated how these techniques improve search and allow Drupal sites to integrate with non-Drupal data.

Embedding Linked Data Invisibly into Web Pages: Strategies and Workflows for ...

Embedding Linked Data Invisibly into Web Pages: Strategies and Workflows for ...National Information Standards Organization (NISO)

Drupal as a Semantic Web platform - ISWC 2012

- 1. Drupal as a Semantic Web platform
  - Stéphane Corlosquet, Sudeshna Das, Emily Merrill, Paolo Ciccarese, and Tim Clark
  - Massachusetts General Hospital
  - ISWC 2012, Boston, USA – Nov 14th, 2012

- 2. Drupal
  - Dries Buytaert - small news site in 2000
  - Open Source - 2001
  - Content Management System
  - LAMP stack
  - Non-developers can build sites and publish content
  - Control panels instead of code
  - http://www.flickr.com/photos/funkyah/2400889778

- 10. Who uses Drupal?

- 11. Who uses Drupal?

- 12. Who uses Drupal?
  - http://buytaert.net/tag/drupal-sites

- 13. Drupal
  - Open & modular architecture
  - Extensible by modules
  - Standards-based
  - Low resource hosting
  - Scalable
  - http://drupal.org/getting-started/before/overview

- 14. Building a Drupal site
  - http://www.flickr.com/photos/toomuchdew/3792159077/

- 15. Building a Drupal site
  - Create the content types you need
    - Blog, article, wiki, forum, polls, image, video, podcast, e-commerce... (be creative)
  - http://www.flickr.com/photos/georgivar/4795856532/

- 16. Building a Drupal site
  - Enable the features you want
    - Comments, tags, voting/rating, location, translations, revisions, search...
  - http://www.flickr.com/photos/skip/42288941/

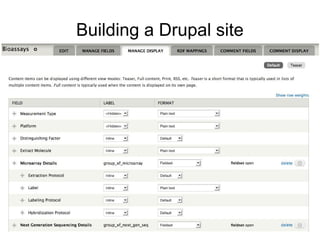

- 17. Building a Drupal site

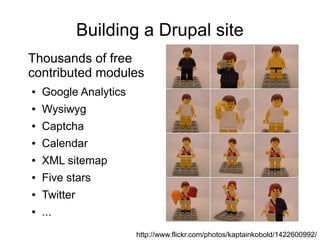

- 18. Building a Drupal site
  - Thousands of free contributed modules
    - Google Analytics
    - Wysiwyg
    - Captcha
    - Calendar
    - XML sitemap
    - Five stars
    - Twitter
    - ...
  - http://www.flickr.com/photos/kaptainkobold/1422600992/

- 19. The Drupal Community
  - http://www.flickr.com/photos/x-foto/4923221504/

- 20. Use Case #1: Stem Cell Commons
  - http://stemcellcommons.org

- 21. Repository
  - New repository for stem cell data as part of Stem Cell Commons
  - Harvard Stem Cell Institute (HSCI): Blood and Cancer program system
  - Designed to incorporate
    - multiple stem cell types
    - multiple assay types
    - user requested features
  - Integrated with analytical tools
  - Enhanced search and browsing capabilities

- 26. Content types

- 29. Integrated with Analysis tools

- 30. What about RDF?

- 31. Drupal 7 default RDF Schema

- 32. SCC RDF Schema

- 34. Modules used
  - Contributed modules for more features
  - RDF Extensions
    - Serialization formats: RDF/XML, Turtle, N-Triples
  - SPARQL
    - Expose Drupal RDF data in a SPARQL Endpoint
  - Features and packaging
    - Build distributions / deployment workflow

- 35. SPARQL Endpoint
  - SPARQL Endpoint available at /sparql

- 36. SPARQL Endpoint
  - Need to query Drupal data across different classes from R
  - Need a standard query language
  - SQL?
  - Query Drupal data with SPARQL
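
To make the idea of a standard query interface concrete, here is a minimal sketch of how a client might address the endpoint. It assumes the endpoint follows the SPARQL Protocol convention of accepting the query text in a `query` parameter on an HTTP GET. Only the `/sparql` path comes from the slides; the site URL `http://example.org`, the function name `buildSparqlRequest`, and the sample query are hypothetical, and a given endpoint may or may not honor the `Accept` header shown.

```javascript
// Build the pieces of a SPARQL Protocol GET request for a site's /sparql endpoint.
// The site base URL is an assumption for illustration, not a real deployment.
function buildSparqlRequest(siteBaseUrl, query) {
  const url = new URL('/sparql', siteBaseUrl);
  // The query text travels URL-encoded in the `query` parameter.
  url.searchParams.set('query', query);
  return {
    url: url.toString(),
    // Ask for the JSON results format; the endpoint may ignore this header.
    headers: { Accept: 'application/sparql-results+json' },
  };
}

const request = buildSparqlRequest(
  'http://example.org',
  'SELECT ?s WHERE { ?s ?p ?o } LIMIT 5'
);
console.log(request.url);
```

The same URL can be fetched from any HTTP-capable environment (R, a browser, a command-line tool), which is the practical advantage over issuing SQL against the Drupal database.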

- 39. SPARQL query

      PREFIX obo: <http://purl.obolibrary.org/obo/>
      PREFIX mged: <http://mged.sourceforge.net/ontologies/MGEDontology.php#>
      PREFIX dc: <http://purl.org/dc/terms/>
      SELECT ?bioassay_title WHERE {
        ?experiment obo:OBI_0000070 ?bioassay;
                    dc:title ?bioassay_title .
        ?bioassay mged:LabelCompound <http://exframe-dev.sciencecollaboration.org/taxonomy/term/588> .
      }
      GROUP BY ?bioassay_title
      ORDER BY ASC(dc:date)
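
A client that runs a SELECT query like this one typically receives a table of variable bindings. The sketch below assumes the endpoint returns the standard SPARQL JSON results layout (`head.vars` plus `results.bindings`) and flattens it into plain objects. The function name `bindingsToRows` and the sample titles are made up for illustration; they are not results from the Stem Cell Commons endpoint.

```javascript
// Flatten a SPARQL JSON results document into plain objects keyed by variable name.
function bindingsToRows(resultsJson) {
  const vars = resultsJson.head.vars;
  return resultsJson.results.bindings.map((binding) => {
    const row = {};
    for (const name of vars) {
      // A variable can be unbound in a given solution, so fall back to null.
      row[name] = name in binding ? binding[name].value : null;
    }
    return row;
  });
}

// Hypothetical response body; the titles are placeholders, not real data.
const sampleResponse = {
  head: { vars: ['bioassay_title'] },
  results: {
    bindings: [
      { bioassay_title: { type: 'literal', value: 'Example bioassay A' } },
      { bioassay_title: { type: 'literal', value: 'Example bioassay B' } },
    ],
  },
};

const rows = bindingsToRows(sampleResponse);
console.log(rows.map((row) => row.bioassay_title));
```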

- 40. Wrap up use case #1
  - Drupal is a good fit for building web frontends
  - Editing User Interfaces out of the box
  - Querying Data in SQL:
    - not very friendly
    - may not be appropriate / performant
  - Querying with SPARQL:
    - Use the backend that matches your needs
    - ARC2 can be sufficient for prototyping and lightweight use cases

- 41. Use Case #2: Data Layers Domeo + Drupal

- 42. Domeo
  - Annotation Tool developed by MIND Informatics, Massachusetts General Hospital
  - Annotate HTML documents
  - Share annotations
  - Annotation Ontology (AO), provenance, ACL
  - JSON-LD Service to retrieve annotations
  - http://annotationframework.org/

- 43. Domeo

- 44. Domeo

- 45. Domeo

- 46. Domeo

- 47. JSON-LD
  - JSON for Linked Data
  - Client side as well as server side friendly
  - Browser Scripting:
    - Native JavaScript format
    - RDFa API in the DOM
  - Data can be fetched from anywhere:
    - Cross-Origin Resource Sharing (CORS) required
  - Clients can mash data
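
As a small illustration of why JSON-LD is described as a native JavaScript format, the sketch below shows a JSON-LD document as an ordinary object whose `@context` maps short keys to full IRIs. The helper `expandTerms` is a deliberately simplified stand-in that only handles flat, string-valued term definitions; it is not the full JSON-LD expansion algorithm. The publication data and the `http://example.org` identifier are hypothetical.

```javascript
// A JSON-LD document is plain JSON: the @context maps short keys to IRIs.
const publication = {
  '@context': {
    name: 'http://schema.org/name',
    author: 'http://schema.org/author',
  },
  '@id': 'http://example.org/node/1',
  '@type': 'http://schema.org/ScholarlyArticle',
  name: 'An example publication',
  author: 'Jane Doe',
};

// Simplified expansion: replace each known term with its IRI from the context.
// Keywords (@id, @type) and unknown keys are kept unchanged.
function expandTerms(jsonld) {
  const context = jsonld['@context'] || {};
  const expanded = {};
  for (const [key, value] of Object.entries(jsonld)) {
    if (key === '@context') continue;
    const iri = key.startsWith('@') ? key : context[key] || key;
    expanded[iri] = value;
  }
  return expanded;
}

console.log(expandTerms(publication));
```

A script can read `publication.name` directly like any other object, while a Linked Data consumer can resolve the same key to `http://schema.org/name`.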

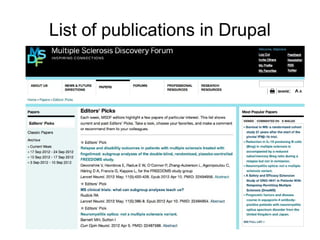

- 48. List of publications in Drupal

- 49. Can we layer personal annotations on top?

- 50. What do we have?
  - RDFa markup for each publication

- 51. RDFa API
  - Extract structured data from RDFa documents
  - Green Turtle: RDFa 1.1 library in JavaScript
    - document.getElementsByType('http://schema.org/ScholarlyArticle');
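
The call on this slide can be wrapped in a small helper, sketched below. It assumes that an RDFa library such as Green Turtle has already extended the page's `document` with `getElementsByType`, as the slide shows. The helper name `describeElementsByType` and the `stubDocument` object are hypothetical; the stub only stands in for a browser document so the sketch can run outside a page.

```javascript
// Summarize the elements that an RDFa-aware document reports for a given type.
// `doc` is expected to provide getElementsByType, as in the slide's example.
function describeElementsByType(doc, typeIri) {
  const elements = Array.from(doc.getElementsByType(typeIri));
  return elements.map((element) => ({
    tag: element.tagName.toLowerCase(),
    // Collapse whitespace so the text is easier to display in a list.
    text: element.textContent.trim().replace(/\s+/g, ' '),
  }));
}

// Stand-in for a browser `document` with an RDFa library loaded (assumption).
const stubDocument = {
  getElementsByType(typeIri) {
    return typeIri === 'http://schema.org/ScholarlyArticle'
      ? [{ tagName: 'DIV', textContent: '  An example   publication ' }]
      : [];
  },
};

const articles = describeElementsByType(
  stubDocument,
  'http://schema.org/ScholarlyArticle'
);
console.log(articles);
```

In a real page the first argument would be `document` itself.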

- 52. RDFa API

- 53. Domeo + Drupal
  - Data mash up from independent sources
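
One way to picture the mash-up is as a join between two independently fetched data sets: publications read from the page's RDFa and annotations retrieved from a JSON-LD service. The sketch below is only an illustration of that join. The field names `url` and `target`, the function `layerAnnotations`, and all sample records are assumptions, not the actual Domeo or Drupal data model.

```javascript
// Attach to each publication the annotations whose target matches its URL.
function layerAnnotations(publications, annotations) {
  // Index annotations by the resource they point at.
  const byTarget = new Map();
  for (const annotation of annotations) {
    const list = byTarget.get(annotation.target) || [];
    list.push(annotation);
    byTarget.set(annotation.target, list);
  }
  // Return copies of the publications with an `annotations` array added.
  return publications.map((pub) => ({
    ...pub,
    annotations: byTarget.get(pub.url) || [],
  }));
}

// Hypothetical data from the two independent sources.
const publicationsFromRdfa = [
  { url: 'http://example.org/paper/1', title: 'Example paper one' },
  { url: 'http://example.org/paper/2', title: 'Example paper two' },
];
const annotationsFromService = [
  { target: 'http://example.org/paper/1', note: 'Check the methods section' },
];

const layered = layerAnnotations(publicationsFromRdfa, annotationsFromService);
console.log(layered.map((pub) => [pub.title, pub.annotations.length]));
```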

- 54. Domeo + Drupal

- 55. Wrap up use case #2
  - Another use case for exposing data as RDFa
  - RDFa and JSON-LD fit well together
    - HTML → RDFa
    - JSON → JSON-LD
  - CORS support not yet available everywhere
    - Grails didn't have it
    - Use JSONP instead
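
For readers unfamiliar with the JSONP fallback, the sketch below shows the general technique: the client adds a script element whose URL names a callback, and the server replies with JavaScript that calls that function with the data. This is a generic illustration, not the code used in the Domeo integration. The function `fetchJsonp`, the `callback` parameter name, and the `http://example.org/annotations` URL are assumptions; `doc` and `globalScope` are injected so the sketch can run outside a browser, where they would be `document` and `window`.

```javascript
// Load JSON via JSONP by injecting a script element that calls a named callback.
function fetchJsonp(url, doc, globalScope, callbackParam = 'callback') {
  return new Promise((resolve, reject) => {
    const callbackName =
      `jsonpCallback${Date.now()}${Math.floor(Math.random() * 1e6)}`;
    const script = doc.createElement('script');

    // Remove the temporary global function and the script element.
    const cleanup = () => {
      delete globalScope[callbackName];
      if (script.parentNode) script.parentNode.removeChild(script);
    };

    // The server's response is expected to call this function with the data.
    globalScope[callbackName] = (data) => {
      cleanup();
      resolve(data);
    };
    script.onerror = () => {
      cleanup();
      reject(new Error(`JSONP request failed: ${url}`));
    };

    const separator = url.includes('?') ? '&' : '?';
    script.src = `${url}${separator}${callbackParam}=${callbackName}`;
    doc.head.appendChild(script);
  });
}

// Demo with stand-ins for `document` and `window`: the fake "server" answers
// as soon as the script element is appended.
const fakeWindow = {};
const fakeDocument = {
  createElement: () => ({}),
  head: {
    appendChild(script) {
      const name = new URL(script.src).searchParams.get('callback');
      fakeWindow[name]({ annotations: [] });
    },
  },
};

fetchJsonp('http://example.org/annotations', fakeDocument, fakeWindow)
  .then((data) => console.log(data));
```

Because JSONP executes whatever script the remote server returns, it is only suitable for services the page already trusts; CORS avoids that exposure where it is available.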

- 56. Thanks!
  - Stéphane Corlosquet
    - [email protected]
    - @scorlosquet
    - http://openspring.net/
  - MIND Informatics
    - http://www.mindinformatics.org/