Dynamic programming


The document notes that in dynamic programming, "programming" refers to planning, not computer programming.


Matrix Chain Multiplication

• Given: a chain of matrices {A1, A2, …, An}.

• Once the product is fully parenthesized, it can be computed by multiplying pairs of matrices, using the standard algorithm as a subroutine.

• A product of matrices is fully parenthesized if it is either a single matrix or the product of two fully parenthesized matrix products, surrounded by parentheses. [Note: since matrix multiplication is associative, all parenthesizations yield the same product, although their costs can differ greatly.]

![Matrix Chain Multiplication](https://image.slidesharecdn.com/dynamicprogramming-160512234533/85/Dynamic-programming-20-320.jpg)
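The recursive definition of a fully parenthesized product leads directly to a count of the possibilities. The outermost pair of parentheses splits the chain after some matrix Ak, and each side is then parenthesized independently. The short Python sketch below follows that recurrence. The function name `count_parenthesizations` is our own.

```python
from functools import lru_cache

@lru_cache(maxsize=None)
def count_parenthesizations(n: int) -> int:
    """Number of ways to fully parenthesize a chain of n matrices."""
    if n == 1:
        return 1  # a single matrix is already fully parenthesized
    # Outermost split: (A1..Ak)(Ak+1..An) for every k; the two sides are independent.
    return sum(count_parenthesizations(k) * count_parenthesizations(n - k)
               for k in range(1, n))

print([count_parenthesizations(n) for n in range(1, 7)])  # [1, 1, 2, 5, 14, 42]
```

These counts are the Catalan numbers. They grow roughly like 4^n / n^{3/2}, which is why checking every parenthesization by brute force quickly becomes impractical.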

Matrix Chain Multiplication: Optimal Parenthesization

• Example: A is 30×35, B is 35×15 and C is 15×5. Find the parenthesization of A*B*C that needs the fewest scalar multiplications:

A*(B*C) = 30*35*5 + 35*15*5 = 5,250 + 2,625 = 7,875

(A*B)*C = 30*35*15 + 30*15*5 = 15,750 + 2,250 = 18,000

• How to optimize:

– Brute force: look at every possible way to parenthesize. There are $\Omega(4^n/n^{3/2})$ of them.

– Dynamic programming: $\Theta(n^3)$ time and $\Theta(n^2)$ space.

![Matrix Chain Multiplication Optimal Parenthesization](https://image.slidesharecdn.com/dynamicprogramming-160512234533/85/Dynamic-programming-22-320.jpg)
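Below is a minimal bottom-up sketch of the dynamic-programming approach in Python, based on the usual textbook recurrence. Suppose matrix Ai has dimensions dims[i-1] × dims[i]. Then the cheapest cost m[i][j] of computing Ai..Aj is the minimum, over split points k, of m[i][k] + m[k+1][j] + dims[i-1]·dims[k]·dims[j]. The names `matrix_chain_order`, `parenthesize` and `dims` are our own. The table has about n² entries, and filling each one takes a loop over k, which gives the cubic running time.

```python
import math

def matrix_chain_order(dims):
    """Return (min_cost, s) for the chain A1..An, where Ai is dims[i-1] x dims[i].
    min_cost counts scalar multiplications; s[i][j] is the best split point k."""
    n = len(dims) - 1                          # number of matrices
    # m[i][j] = cheapest cost of computing Ai..Aj (1-indexed; row/col 0 unused)
    m = [[0] * (n + 1) for _ in range(n + 1)]
    s = [[0] * (n + 1) for _ in range(n + 1)]
    for length in range(2, n + 1):             # solve shorter chains first
        for i in range(1, n - length + 2):
            j = i + length - 1
            m[i][j] = math.inf
            for k in range(i, j):              # split as (Ai..Ak)(Ak+1..Aj)
                cost = m[i][k] + m[k + 1][j] + dims[i - 1] * dims[k] * dims[j]
                if cost < m[i][j]:
                    m[i][j], s[i][j] = cost, k
    return m[1][n], s

def parenthesize(s, i, j, names):
    """Rebuild the optimal parenthesization of Ai..Aj from the split table s."""
    if i == j:
        return names[i - 1]
    k = s[i][j]
    return f"({parenthesize(s, i, k, names)}*{parenthesize(s, k + 1, j, names)})"

cost, s = matrix_chain_order([30, 35, 15, 5])  # A: 30x35, B: 35x15, C: 15x5
print(cost, parenthesize(s, 1, 3, "ABC"))      # 7875 (A*(B*C))
```

The split table `s` is kept alongside the costs so that the optimal order can be reconstructed afterwards, rather than only its cost.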


Recommended

Dynamic Programming

By Bharat Bhushan.

Dynamic programming is a technique for solving problems by breaking them down into smaller subproblems, solving each subproblem once, and storing the solution to each subproblem so that it can be reused in the future. Some characteristics of dynamic programming include:

Optimal substructure: Dynamic programming problems typically have an optimal substructure, meaning that the optimal solution to the problem can be obtained by solving the subproblems optimally and combining their solutions.

Overlapping subproblems: Dynamic programming problems often involve overlapping subproblems, meaning that a naive recursive solution would solve the same subproblems many times. To avoid this, dynamic programming algorithms store the solutions to the subproblems in a table or array so that they can be reused later.

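Bottom-up approach: Dynamic programming algorithms often solve problems bottom-up, starting with the smallest subproblems and working their way up to the larger ones. The common alternative is top-down memoization, in which a recursive solution caches each subproblem's answer the first time it is computed.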

Efficiency: Dynamic programming algorithms can be very efficient, especially when the subproblems overlap significantly. Storing and reusing subproblem solutions avoids redundant computation. This often turns an exponential-time recursive solution into a polynomial-time one, at the cost of extra memory for the table.

Applicability: Dynamic programming is applicable to a wide range of problems, including optimization problems, decision problems, and problems that involve sequential decisions. It is often used to solve problems in computer science, operations research, and economics.
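The two styles described above can be compared on Fibonacci numbers. This example is our own choice of illustration; any problem with overlapping subproblems would do. Naive recursion computes fib(n - 2) once directly and again inside fib(n - 1), and that duplication compounds into exponential time. Both sketches below solve each subproblem only once, in O(n) time. The function names are our own.

```python
from functools import lru_cache

@lru_cache(maxsize=None)
def fib_memo(n: int) -> int:
    """Top-down: plain recursion plus a cache, so each fib(k) is computed once."""
    if n < 2:
        return n
    return fib_memo(n - 1) + fib_memo(n - 2)

def fib_bottom_up(n: int) -> int:
    """Bottom-up: start from the smallest subproblems, keeping only the last two values."""
    prev, curr = 0, 1          # fib(0), fib(1)
    for _ in range(n):
        prev, curr = curr, prev + curr
    return prev

print(fib_memo(40), fib_bottom_up(40))  # 102334155 102334155
```

The bottom-up version also shows a common space optimization: only the last two entries of the table are needed, so the rest can be discarded.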

Algorithm Design Techniques

Iterative techniques, Divide and Conquer, Dynamic Programming, Greedy Algorithms.

Greedy Algorithm

By Muhammad Amjad Rana. This document outlines greedy algorithms, their characteristics, and examples of their use. Greedy algorithms make locally optimal choices at each step in the hope of finding a global optimum. They are simple to implement and fast, but may not always reach the true optimal solution. Examples discussed include coin changing, traveling salesman, minimum spanning trees using Kruskal's and Prim's algorithms, and Huffman coding.
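Coin changing, the first example listed, shows both the appeal and the risk of the greedy choice. In the minimal Python sketch below, the greedy rule "take the largest coin that fits" is compared with a small dynamic-programming baseline. The function names and the coin system {1, 3, 4} are our own illustrative choices.

```python
import math

def greedy_change(amount, coins):
    """Greedy: repeatedly take the largest coin that still fits.
    Returns the list of coins used, or None if greedy cannot reach exactly 0."""
    used = []
    for c in sorted(coins, reverse=True):
        while amount >= c:
            amount -= c
            used.append(c)
    return used if amount == 0 else None

def min_coins(amount, coins):
    """Dynamic programming: best[v] = fewest coins summing to v (inf if impossible)."""
    best = [0] + [math.inf] * amount
    for v in range(1, amount + 1):
        for c in coins:
            if c <= v and best[v - c] + 1 < best[v]:
                best[v] = best[v - c] + 1
    return best[amount]

print(greedy_change(6, [1, 3, 4]))  # [4, 1, 1]: three coins
print(min_coins(6, [1, 3, 4]))      # 2: 3 + 3
```

With US-style coins {1, 5, 10, 25}, greedy happens to be optimal. With {1, 3, 4} and amount 6, however, it uses three coins where two suffice.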

Game theory (Operation Research)

By kashif ayaz. Game theory is a mathematical approach that analyzes strategic interactions between parties. It is used to understand situations where decision-makers are impacted by others' choices. A game has players, strategies, payoffs, and information. The Nash equilibrium predicts outcomes as the strategies where no player benefits by changing alone given others' choices. For example, in the Prisoner's Dilemma game about two suspects, confessing dominates remaining silent no matter what the other does, leading both to confess for a worse joint outcome than remaining silent.

Algorithms Lecture 6: Searching Algorithms

By Mohamed Loey. We will discuss the following: search algorithms, linear search, binary search, jump search, and interpolation search.

Dynamic programming

By Melaku Bayih Demessie. Dynamic programming is a method for solving a complex problem by breaking it down into a collection of simpler subproblems, solving each of those subproblems just once, and storing their solutions in a memory-based data structure (array, map, etc.).

Data Manipulation Language

By Jas Singh Bhasin. The DBMS provides a set of operations, or a language, called the data manipulation language (DML) for modifying the data.

Data manipulation can be performed either by typing SQL statements or by using a graphical interface, typically called Query-By-Example (QBE).

Travelling salesman dynamic programming

By maharajdey. This document discusses the traveling salesman problem and a dynamic programming approach to solving it. It was presented by Maharaj Dey, a 6th semester CSE student with university roll number 11500117099, for the paper CS-681 (SEMINAR). The document concludes with a thank you.

Divide and Conquer

By Dr Shashikant Athawale. The document discusses the divide and conquer algorithm design paradigm. It begins by defining divide and conquer as recursively breaking down a problem into smaller sub-problems, solving the sub-problems, and then combining the solutions to solve the original problem. Some examples of problems that can be solved using divide and conquer include binary search, quicksort, merge sort, and the fast Fourier transform algorithm. The document then discusses control abstraction, efficiency analysis, and uses divide and conquer to provide algorithms for large integer multiplication and merge sort. It concludes by defining the convex hull problem and providing an example input and output.

Greedy Algorithm - Knapsack Problem

By Madhu Bala. The document discusses the knapsack problem and greedy algorithms. It defines the knapsack problem as an optimization problem: given constraints and an objective function, the goal is to find the feasible solution that maximizes or minimizes the objective. It describes the knapsack problem as having two versions: 0-1, where items are indivisible, and fractional, where items can be divided. The fractional knapsack problem can be solved with a greedy approach by sorting items by value-to-weight ratio and filling the knapsack in that order until it is full.
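The greedy strategy for the fractional version fits in a few lines of Python. This is a minimal sketch. The function name and the (value, weight) item format are our own assumptions.

```python
def fractional_knapsack(items, capacity):
    """items: list of (value, weight) pairs with weight > 0.
    Returns the maximum total value when items may be split."""
    total = 0.0
    # Greedy choice: consider items in decreasing value-to-weight ratio.
    for value, weight in sorted(items, key=lambda vw: vw[0] / vw[1], reverse=True):
        if capacity <= 0:
            break
        take = min(weight, capacity)       # the whole item, or the fraction that fits
        total += value * take / weight
        capacity -= take
    return total

print(fractional_knapsack([(60, 10), (100, 20), (120, 30)], 50))  # 240.0
```

For the sample items in the call, greedy takes the first two items whole and two thirds of the third.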

Divide and conquer algorithm

By Mohd Arif. The document discusses the divide and conquer algorithm design technique. It begins by explaining the basic approach of divide and conquer, which is to (1) divide the problem into subproblems, (2) conquer the subproblems by solving them recursively, and (3) combine the solutions to the subproblems into a solution for the original problem. It then presents merge sort as a specific divide and conquer algorithm for sorting a sequence. Merge sort divides the sequence in half recursively until individual elements remain, then merges the sorted halves back together to produce the fully sorted sequence.
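The three steps map directly onto a short merge sort. This is a minimal Python sketch with our own function name. It returns a new list rather than sorting in place.

```python
def merge_sort(seq):
    """Sort seq with merge sort and return a new list."""
    if len(seq) <= 1:                      # base case: already sorted
        return list(seq)
    mid = len(seq) // 2
    # Divide and conquer: sort each half recursively.
    left = merge_sort(seq[:mid])
    right = merge_sort(seq[mid:])
    # Combine: merge the two sorted halves.
    merged = []
    i = j = 0
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:            # "<=" keeps equal items in order (stable)
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])                # at most one of these is non-empty
    merged.extend(right[j:])
    return merged

print(merge_sort([38, 27, 43, 3, 9, 82, 10]))  # [3, 9, 10, 27, 38, 43, 82]
```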

Longest Common Subsequence

By Krishma Parekh. After a long break, I bring you a new presentation that gives a brief idea of a dynamic programming problem called the "Longest Common Subsequence". I hope this presentation helps all my viewers.

Knapsack problem using greedy approach

By padmeshagrekar. In this PPT we discuss the knapsack problem using the greedy approach, and its two types, i.e., fractional and 0-1.

Bruteforce algorithm

By Rezwan Siam. These PowerPoint slides are for educational purposes only.

We have done our best to teach people the basic brute-force algorithm. Best of luck.

Single source Shortest path algorithm with example

By VINITACHAUHAN21. The document discusses greedy algorithms and their application to solving optimization problems. It provides an overview of greedy algorithms and explains that they make locally optimal choices at each step in the hope of finding a globally optimal solution. One application discussed is the single source shortest path problem, which can be solved using Dijkstra's algorithm. Dijkstra's algorithm is presented as a greedy approach that runs in $O(V^2)$ time for a graph with V vertices. An example of applying Dijkstra's algorithm to find shortest paths from a source node in a graph is provided.
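Here is a minimal Python sketch of the quadratic-time version. It assumes the graph is an adjacency matrix with non-negative weights and None for missing edges; that representation and the function name are our own. The outer loop runs V times, and each pass scans all vertices to find the closest unfinished one. That scan is where the $O(V^2)$ bound comes from.

```python
import math

def dijkstra(adj, source):
    """Shortest distances from source. adj[u][v] is the non-negative weight of
    edge u->v, or None if there is no such edge."""
    n = len(adj)
    dist = [math.inf] * n
    dist[source] = 0
    done = [False] * n                     # vertices whose distance is final
    for _ in range(n):
        # Greedy choice: the unfinished vertex with the smallest tentative distance.
        u = min((v for v in range(n) if not done[v]), key=lambda v: dist[v])
        if dist[u] == math.inf:
            break                          # everything left is unreachable
        done[u] = True
        for v in range(n):                 # relax every edge leaving u
            w = adj[u][v]
            if w is not None and not done[v] and dist[u] + w < dist[v]:
                dist[v] = dist[u] + w
    return dist

graph = [
    [None, 4, 1, None],
    [None, None, None, 1],
    [None, 2, None, 5],
    [None, None, None, None],
]
print(dijkstra(graph, 0))  # [0, 3, 1, 4]
```

A binary-heap priority queue replaces the linear scan in the usual $O((V+E)\log V)$ variant. That variant is preferable for sparse graphs.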

Introduction to Dynamic Programming, Principle of Optimality

By Bhavin Darji. Contents:

Introduction

Dynamic Programming

How Dynamic Programming reduces computation

Steps in Dynamic Programming

Dynamic Programming Properties

Principle of Optimality

Problem solving using Dynamic Programming

BackTracking Algorithm: Technique and Examples

By Fahim Ferdous. These slides give a strong overview of the backtracking algorithm: how it came about, the general approaches of the technique, and some well-known problems solved with backtracking.

AI 7 | Constraint Satisfaction Problem

By Mohammad Imam Hossain. The document provides an overview of constraint satisfaction problems (CSPs). It defines a CSP as consisting of variables with domains of possible values, and constraints specifying allowed value combinations. CSPs can represent many problems using variables and constraints rather than explicit state representations. Backtracking search is commonly used to solve CSPs by trying value assignments and backtracking when constraints are violated.

Binary Search

By kunj desai. This document describes binary search and provides an example of how it works. It begins with an introduction to binary search, noting that it can only be used on sorted lists and involves comparing the search key to the middle element. It then provides pseudocode for the binary search algorithm. The document analyzes the time complexity of binary search as O(log n) in the average and worst cases. It notes that the main advantage of binary search is its efficiency, while the disadvantage is that the list must be sorted. Applications mentioned include database searching and solving equations.
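An iterative binary search is only a few lines. The sketch below uses our own function name and returns -1 for a missing key. For production code, Python's standard `bisect` module (for example `bisect.bisect_left`) provides the same halving search.

```python
def binary_search(a, key):
    """Return an index of key in the sorted list a, or -1 if it is absent."""
    lo, hi = 0, len(a) - 1
    while lo <= hi:
        mid = (lo + hi) // 2               # compare the key with the middle element
        if a[mid] == key:
            return mid
        if a[mid] < key:
            lo = mid + 1                   # key can only be in the right half
        else:
            hi = mid - 1                   # key can only be in the left half
    return -1

data = [2, 5, 8, 12, 16, 23, 38, 56, 72, 91]
print(binary_search(data, 23), binary_search(data, 7))  # 5 -1
```

Each iteration halves the range [lo, hi], which is where the O(log n) bound comes from.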

Breadth First Search & Depth First Search

By Kevin Jadiya. The slides describe how breadth-first search and depth-first search are used to traverse a graph or tree, with algorithms and simple code snippets.

Divide and conquer

By Dr Shashikant Athawale. The document discusses divide and conquer algorithms. It describes divide and conquer as a design strategy that involves dividing a problem into smaller subproblems, solving the subproblems recursively, and combining the solutions. It provides examples of divide and conquer algorithms like merge sort, quicksort, and binary search. Merge sort works by recursively sorting halves of an array until it is fully sorted. Quicksort selects a pivot element and partitions the array into subarrays of smaller and larger elements, recursively sorting the subarrays. Binary search recursively searches half-intervals of a sorted array to find a target value.

Intermediate code generation (Compiler Design)

By Tasif Tanzim. Compiler Design. Topics discussed: intermediate code generation, three-address code generation, quadruples, triples, indirect triples, etc.

Greedy algorithm

By International Islamic University. The document discusses greedy algorithms and their application to optimization problems. It provides examples of problems that can be solved using greedy approaches, such as fractional knapsack and making change. However, it notes that some problems, like 0-1 knapsack and shortest paths on multi-stage graphs, cannot be solved optimally with greedy algorithms. The document also describes various greedy algorithms for minimum spanning trees, single-source shortest paths, and fractional knapsack problems.

Heuristic Search Techniques {Artificial Intelligence}

By FellowBuddy.com, a platform that brings students together to share notes, exam papers, study guides, project reports and presentations for upcoming exams.


Graph coloring using backtracking

By shashidharPapishetty. This document summarizes graph coloring using backtracking. It defines graph coloring as assigning colors to the vertices of a graph so that no two adjacent vertices share a color, using as few colors as possible. The chromatic number is the fewest colors needed. Deciding whether a graph can be colored with k colors is NP-complete for k ≥ 3. The document outlines a backtracking algorithm that tries assigning colors to vertices, checks whether the assignment is valid (no adjacent vertices have the same color), and backtracks if not. It provides pseudocode for the algorithm and lists applications like scheduling, Sudoku, and map coloring.
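Here is a minimal backtracking sketch in Python for the m-coloring decision problem, which asks whether the graph can be colored with at most m colors. The adjacency-list format and the function names are our own. To find the chromatic number, one could try m = 1, 2, 3, … until the search succeeds.

```python
def color_graph(adj, m):
    """Color vertices 0..n-1 with colors 0..m-1 so that no edge joins two
    vertices of the same color. adj[v] is the set of neighbours of v.
    Returns the list of colors, or None if m colors are not enough."""
    n = len(adj)
    colors = [None] * n

    def safe(v, c):
        # Valid if no already-colored neighbour uses color c.
        return all(colors[u] != c for u in adj[v])

    def assign(v):
        if v == n:
            return True                    # every vertex is colored
        for c in range(m):
            if safe(v, c):
                colors[v] = c
                if assign(v + 1):
                    return True
                colors[v] = None           # backtrack: undo and try the next color
        return False

    return colors if assign(0) else None

triangle_plus_tail = [{1, 2}, {0, 2}, {0, 1, 3}, {2}]
print(color_graph(triangle_plus_tail, 2))  # None: triangle 0-1-2 needs 3 colors
print(color_graph(triangle_plus_tail, 3))  # [0, 1, 2, 0]
```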

Knapsack Problem

By Jenny Galino. The document discusses the knapsack problem, which involves selecting a subset of items that fit within a knapsack of limited capacity to maximize the total value. There are two versions: the 0-1 knapsack problem, where items can only be selected entirely or not at all, and the fractional knapsack problem, where items can be partially selected. Solutions include brute force, greedy algorithms, and dynamic programming. Dynamic programming builds up the optimal solution by considering all sub-problems.
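Below is a compact dynamic-programming sketch for the 0-1 version in Python, assuming integer weights. It keeps only one row of the usual items × capacity table. This is a common space-saving variant, not necessarily the formulation in the slides. The function name is our own.

```python
def knapsack_01(values, weights, capacity):
    """Maximum total value with total weight <= capacity, each item used at most once.
    best[w] = best value achievable with weight limit w using the items seen so far."""
    best = [0] * (capacity + 1)
    for v, wt in zip(values, weights):
        # Go through capacities downwards so this item is not counted twice.
        for w in range(capacity, wt - 1, -1):
            best[w] = max(best[w], best[w - wt] + v)
    return best[capacity]

print(knapsack_01([60, 100, 120], [10, 20, 30], 50))  # 220
```

For these items, the optimum takes the second and third items. Picking items greedily by value-to-weight ratio would reach only 160, because the third item no longer fits.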

B trees in Data Structure

By Anuj Modi. B-Trees are tree data structures used to store data on disk storage. Because of their reduced height, they allow more efficient data retrieval than binary trees when the data is on disk. B-Trees group data into nodes that can have multiple children, reducing the height needed compared to binary trees. Keys are inserted by adding to leaf nodes or splitting nodes and promoting middle keys. Deletion involves removing from leaf nodes, borrowing/promoting keys, or joining nodes.

I. AO* SEARCH ALGORITHM

By vikas dhakane. Artificial Intelligence: Introduction, Typical Applications. State Space Search: Depth Bounded DFS, Depth First Iterative Deepening. Heuristic Search: Heuristic Functions, Best First Search, Hill Climbing, Variable Neighborhood Descent, Beam Search, Tabu Search. Optimal Search: A* algorithm, Iterative Deepening A*, Recursive Best First Search, Pruning the CLOSED and OPEN Lists.

A* Search Algorithm

By vikas dhakane. Artificial Intelligence: Introduction, Typical Applications. State Space Search: Depth Bounded DFS, Depth First Iterative Deepening. Heuristic Search: Heuristic Functions, Best First Search, Hill Climbing, Variable Neighborhood Descent, Beam Search, Tabu Search. Optimal Search: A* algorithm, Iterative Deepening A*, Recursive Best First Search, Pruning the CLOSED and OPEN Lists.

Randomized Algorithms in Linear Algebra & the Column Subset Selection Problem

By Wei Xue.

1) The document discusses randomized algorithms for linear algebra problems like matrix decomposition and column subset selection.

2) It describes how sampling rows/columns of a matrix using random projections can create smaller matrices that approximate the original well.

3) The talk will illustrate applications of these ideas to the column subset selection problem and approximating low-rank matrix decompositions.

Chap08alg

By Munkhchimeg. The document describes several dynamic programming and graph algorithms:

1. It presents four algorithms for computing the Fibonacci numbers using dynamic programming with arrays or recursion with and without memoization.

2. It provides algorithms for solving coin changing and matrix multiplication problems using dynamic programming by filling out arrays.

3. It gives algorithms to find the length and a longest common subsequence between two strings using dynamic programming.

4. It introduces algorithms to find shortest paths between all pairs of vertices in a weighted graph using Floyd's and Warshall's algorithms. It includes algorithms to output a shortest path between two vertices.
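Floyd's all-pairs shortest-path algorithm, mentioned in item 4, fits in a few lines of Python. This minimal sketch assumes a weight matrix with `math.inf` for missing edges and no negative cycles. The function name and the example graph are our own.

```python
import math

INF = math.inf

def floyd_warshall(weights):
    """All-pairs shortest path lengths. weights is an n x n matrix with 0 on the
    diagonal and INF where there is no edge; returns a new matrix."""
    n = len(weights)
    d = [row[:] for row in weights]        # copy so the input is not modified
    for k in range(n):                     # now allow vertex k as an intermediate stop
        for i in range(n):
            for j in range(n):
                if d[i][k] + d[k][j] < d[i][j]:
                    d[i][j] = d[i][k] + d[k][j]
    return d

graph = [
    [0,   3,   INF, 7],
    [8,   0,   2,   INF],
    [5,   INF, 0,   1],
    [2,   INF, INF, 0],
]
for row in floyd_warshall(graph):
    print(row)
```

The three nested loops give $O(n^3)$ time. Recording the best k for each pair in a second matrix would allow the actual paths to be printed as well.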


Solving The Shortest Path Tour Problem

By Nozir Shokirov. The Shortest Path Tour Problem is an extension of the ordinary shortest path problem. It first appeared in the scientific literature in 2005, in Bertsekas's book on dynamic programming and optimal control. This paper describes the problem and two algorithms to solve it, presents the results of numerical experiments as graphs, and ends with conclusions and discussion.

21 backtracking

By Aparup Behera. The document discusses the problem-solving technique of backtracking. Backtracking involves making a series of decisions with limited information, where each decision leads to further choices. It explores potential solutions systematically by trying choices and abandoning ("backtracking") from choices that do not lead to solutions. Examples where backtracking can be applied include solving mazes, map coloring problems, and puzzles. The key aspects of backtracking are exploring potential solutions through recursion or looping, checking at each choice whether a partial solution can be completed, and abandoning incomplete solutions that cannot be completed.

Karnaugh Map

By Syed Absar. The Karnaugh map is a graphical method for simplifying Boolean algebra expressions. It arranges the terms of a Boolean function in a grid according to their binary values, making it easier to identify redundant terms. Groups of adjacent 1s in the map correspond to product terms that can be combined. Common map sizes include 2x2 for 2 variables, 2x4 for 3 variables, and 4x4 for 4 variables. The map can be used to find both Sum of Products and Product of Sums expressions.

DP

By Subba Oota. Dynamic programming is an algorithmic technique that solves problems by breaking them down into smaller subproblems and storing the results of subproblems to avoid recomputing them. It is useful for optimization problems with overlapping subproblems. The key steps are to characterize the structure of an optimal solution, recursively define the value of an optimal solution, compute that value, and construct the optimal solution. Examples discussed include rod cutting, longest increasing subsequence, longest palindrome subsequence, and palindrome partitioning. Other problems that can be solved with dynamic programming include edit distance, shortest paths, optimal binary search trees, the traveling salesman problem, and reliability design.
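Rod cutting, the first example listed, follows those steps closely. The best revenue for a rod of length n is the best choice of first piece, plus the best revenue for what remains. Below is a minimal bottom-up sketch in Python. The function name is our own, and the price table is illustrative.

```python
def cut_rod(prices, n):
    """Bottom-up rod cutting. prices[i] is the price of a piece of length i
    (prices[0] is unused; len(prices) must exceed n). Returns the best revenue."""
    revenue = [0] * (n + 1)                # revenue[0] = 0: nothing left to sell
    for length in range(1, n + 1):
        # Try every size i for the first piece; the remainder is already solved.
        revenue[length] = max(prices[i] + revenue[length - i]
                              for i in range(1, length + 1))
    return revenue[n]

prices = [0, 1, 5, 8, 9, 10, 17, 17, 20, 24, 30]   # illustrative price table
print([cut_rod(prices, n) for n in range(1, 11)])
```

Each length is solved once and reused by every longer length. This brings the work down to $O(n^2)$, compared with the $2^{n-1}$ ways of cutting a rod of length n.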

Dynamic programming class 16

By Kumar. Dynamic programming is an algorithm design technique that solves problems by breaking them down into smaller overlapping subproblems and storing the results of already solved subproblems, rather than recomputing them. It is applicable to problems exhibiting optimal substructure and overlapping subproblems. The key steps are to define the optimal substructure, recursively define the optimal solution value, compute values bottom-up, and optionally reconstruct the optimal solution. Common examples that can be solved with dynamic programming include knapsack, shortest paths, matrix chain multiplication, and longest common subsequence.

5.5 back track

5.5 back trackKrish_ver2 The document discusses various backtracking algorithms and problems. It begins with an overview of backtracking as a general algorithm design technique for problems that involve traversing decision trees and exploring partial solutions. It then provides examples of specific problems that can be solved using backtracking, including the N-Queens problem, map coloring problem, and Hamiltonian circuits problem. It also discusses common terminology and concepts in backtracking algorithms like state space trees, pruning nonpromising nodes, and backtracking when partial solutions are determined to not lead to complete solutions.

Subset sum problem Dynamic and Brute Force Approch

Subset sum problem Dynamic and Brute Force ApprochIjlal Ijlal The document discusses the subset sum problem and approaches to solve it. It begins by defining the problem and providing an example. It then analyzes the brute force approach of checking all possible subsets and calculates its exponential time complexity. The document introduces dynamic programming as an efficient alternative, providing pseudocode for a dynamic programming algorithm that solves the problem in polynomial time by storing previously computed solutions. It concludes by discussing applications of similar problems in traveling salesperson and drug discovery.

Dynamic programming in Algorithm Analysis

Dynamic programming in Algorithm AnalysisRajendran The document discusses dynamic programming and amortized analysis. It covers:

1) An example of amortized analysis of dynamic tables, where the worst case cost of an insert is O(n) but the amortized cost is O(1).

2) Dynamic programming can be used when a problem breaks into recurring subproblems. Longest common subsequence is given as an example that is solved using dynamic programming in O(mn) time rather than a brute force O(2^m*n) approach.

3) The dynamic programming algorithm for longest common subsequence works by defining a 2D array c where c[i,j] represents the length of the LCS of the first i elements

Class warshal2

Class warshal2Debarati Das This document discusses Warshall's algorithm for finding the transitive closure of a graph using dynamic programming. It begins with an introduction to transitive closure and how it relates to Facebook vs Google+ graph types. It then provides an explanation of Warshall's algorithm, including a visitation example and weighted example. It analyzes the time and space complexity of Warshall's algorithm as O(n3) and O(n2) respectively. It concludes with applications of Warshall's algorithm such as shortest paths and bipartite graph checking.

Covering (Rules-based) Algorithm

Covering (Rules-based) AlgorithmZHAO Sam What is the Covering (Rule-based) algorithm?

Classification Rules- Straightforward

1. If-Then rule

2. Generating rules from Decision Tree

Rule-based Algorithm

1. The 1R Algorithm / Learn One Rule

2. The PRISM Algorithm

3. Other Algorithm

Application of Covering algorithm

Discussion on e/m-learning application

Backtracking

BacktrackingVikas Sharma Backtracking is a general algorithm for finding all (or some) solutions to some computational problems, notably constraint satisfaction problems, that incrementally builds candidates to the solutions, and abandons each partial candidate c ("backtracks") as soon as it determines that c cannot possibly be completed to a valid solution.

Dynamic Programming - Part 1

Dynamic Programming - Part 1Amrinder Arora This document discusses dynamic programming techniques. It covers matrix chain multiplication and all pairs shortest paths problems. Dynamic programming involves breaking down problems into overlapping subproblems and storing the results of already solved subproblems to avoid recomputing them. It has four main steps - defining a mathematical notation for subproblems, proving optimal substructure, deriving a recurrence relation, and developing an algorithm using the relation.

Lect6 csp

Lect6 csptrivedidr Constraint satisfaction problems (CSPs) define states as assignments of variables to values from their domains, with constraints specifying allowable combinations. Backtracking search assigns one variable at a time using depth-first search. Improved heuristics like most-constrained variable selection and least-constraining value choice help. Forward checking and constraint propagation techniques like arc consistency detect inconsistencies earlier than backtracking alone. Local search methods like min-conflicts hill-climbing can also solve CSPs by allowing constraint violations and minimizing them.

Backtracking

BacktrackingSally Salem Backtracking is used to systematically search for solutions to problems by trying possibilities and abandoning ("backtracking") partial candidate solutions that fail to satisfy constraints. Examples discussed include the rat in a maze problem, the traveling salesperson problem, container loading, finding the maximum clique in a graph, and board permutation problems. Backtracking algorithms traverse the search space depth-first and use pruning techniques like discarding partial solutions that cannot lead to better complete solutions than those already found.

Dynamic pgmming

Dynamic pgmmingDr. C.V. Suresh Babu This document provides an overview of dynamic programming. It begins by explaining that dynamic programming is a technique for solving optimization problems by breaking them down into overlapping subproblems and storing the results of solved subproblems in a table to avoid recomputing them. It then provides examples of problems that can be solved using dynamic programming, including Fibonacci numbers, binomial coefficients, shortest paths, and optimal binary search trees. The key aspects of dynamic programming algorithms, including defining subproblems and combining their solutions, are also outlined.

Queue- 8 Queen

Queue- 8 QueenHa Ninh This document discusses data structures and algorithms related to queues. It defines queues as first-in first-out (FIFO) linear lists and describes common queue operations like offer(), poll(), peek(), and isEmpty(). Implementations of queues using linked lists and circular arrays are presented. Applications of queues include accessing shared resources and serving as components of other data structures. The document concludes by explaining the eight queens puzzle and presenting an algorithm to solve it using backtracking.

01 knapsack using backtracking

01 knapsack using backtrackingmandlapure The document discusses various backtracking techniques including bounding functions, promising functions, and pruning to avoid exploring unnecessary paths. It provides examples of problems that can be solved using backtracking including n-queens, graph coloring, Hamiltonian circuits, sum-of-subsets, 0-1 knapsack. Search techniques for backtracking problems include depth-first search (DFS), breadth-first search (BFS), and best-first search combined with branch-and-bound pruning.

Back tracking and branch and bound class 20

Back tracking and branch and bound class 20Kumar Backtracking and branch and bound are algorithms used to solve problems with large search spaces. Backtracking uses depth-first search and prunes subtrees that don't lead to viable solutions. Branch and bound uses breadth-first search and pruning, maintaining partial solutions in a priority queue. Both techniques systematically eliminate possibilities to find optimal solutions faster than exhaustive search. Examples where they can be applied include maze pathfinding, the eight queens problem, sudoku, and the traveling salesman problem.

Ad

Similar to Dynamic programming (20)

Introduction to dynamic programming

Introduction to dynamic programmingAmisha Narsingani Dynamic programming is an algorithm design technique for optimization problems that reduces time by increasing space usage. It works by breaking problems down into overlapping subproblems and storing the solutions to subproblems, rather than recomputing them, to build up the optimal solution. The key aspects are identifying the optimal substructure of problems and handling overlapping subproblems in a bottom-up manner using tables. Examples that can be solved with dynamic programming include the knapsack problem, shortest paths, and matrix chain multiplication.

Module 2ppt.pptx divid and conquer method

Module 2ppt.pptx divid and conquer methodJyoReddy9 This document discusses dynamic programming and provides examples of problems that can be solved using dynamic programming. It covers the following key points:

- Dynamic programming can be used to solve problems that exhibit optimal substructure and overlapping subproblems. It works by breaking problems down into subproblems and storing the results of subproblems to avoid recomputing them.

- Examples of problems discussed include matrix chain multiplication, all pairs shortest path, optimal binary search trees, 0/1 knapsack problem, traveling salesperson problem, and flow shop scheduling.

- The document provides pseudocode for algorithms to solve matrix chain multiplication and optimal binary search trees using dynamic programming. It also explains the basic steps and principles of dynamic programming algorithm design

Computer algorithm(Dynamic Programming).pdf

Computer algorithm(Dynamic Programming).pdfjannatulferdousmaish The document discusses dynamic programming and its application to the matrix chain multiplication problem. It begins by explaining dynamic programming as a bottom-up approach to solving problems by storing solutions to subproblems. It then details the matrix chain multiplication problem of finding the optimal way to parenthesize the multiplication of a chain of matrices to minimize operations. Finally, it provides an example applying dynamic programming to the matrix chain multiplication problem, showing the construction of cost and split tables to recursively build the optimal solution.

Chap12 slides

Chap12 slidesBaliThorat1 This document discusses dynamic programming and provides examples of serial and parallel formulations for several problems. It introduces classifications for dynamic programming problems based on whether the formulation is serial/non-serial and monadic/polyadic. Examples of serial monadic problems include the shortest path problem and 0/1 knapsack problem. The longest common subsequence problem is an example of a non-serial monadic problem. Floyd's all-pairs shortest path is a serial polyadic problem, while the optimal matrix parenthesization problem is non-serial polyadic. Parallel formulations are provided for several of these examples.

AAC ch 3 Advance strategies (Dynamic Programming).pptx

AAC ch 3 Advance strategies (Dynamic Programming).pptxHarshitSingh334328 The document discusses various algorithms that use dynamic programming. It begins by defining dynamic programming as an approach that breaks problems down into optimal subproblems. It provides examples like knapsack and shortest path problems. It describes the characteristics of problems solved with dynamic programming as having optimal subproblems and overlapping subproblems. The document then discusses specific dynamic programming algorithms like matrix chain multiplication, string editing, longest common subsequence, shortest paths (Bellman-Ford and Floyd-Warshall). It provides explanations, recurrence relations, pseudocode and examples for these algorithms.

Chapter 5.pptx

Chapter 5.pptxTekle12 This document discusses advanced algorithm design and analysis techniques including dynamic programming, greedy algorithms, and amortized analysis. It provides examples of dynamic programming including matrix chain multiplication and longest common subsequence. Dynamic programming works by breaking problems down into overlapping subproblems and solving each subproblem only once. Greedy algorithms make locally optimal choices at each step to find a global optimum. Amortized analysis averages the costs of a sequence of operations to determine average-case performance.

Algorithms Design Patterns

Algorithms Design PatternsAshwin Shiv The document discusses various algorithms design approaches and patterns including divide and conquer, greedy algorithms, dynamic programming, backtracking, and branch and bound. It provides examples of each along with pseudocode. Specific algorithms discussed include binary search, merge sort, knapsack problem, shortest path problems, and the traveling salesman problem. The document is authored by Ashwin Shiv, a second year computer science student at NIT Delhi.

Algorithm_Dynamic Programming

Algorithm_Dynamic ProgrammingIm Rafid Dynamic programming is a method for solving optimization problems by breaking them down into smaller subproblems. It has four key steps: 1) characterize the structure of an optimal solution, 2) recursively define the value of an optimal solution, 3) compute the value of an optimal solution bottom-up, and 4) construct an optimal solution from the information computed. For a problem to be suitable for dynamic programming, it must have two properties: optimal substructure and overlapping subproblems. Dynamic programming avoids recomputing the same subproblems by storing and looking up previous results.

Dynamic programming

Dynamic programmingGopi Saiteja This document discusses the concept of dynamic programming. It provides examples of dynamic programming problems including assembly line scheduling and matrix chain multiplication. The key steps of a dynamic programming problem are: (1) characterize the optimal structure of a solution, (2) define the problem recursively, (3) compute the optimal solution in a bottom-up manner by solving subproblems only once and storing results, and (4) construct an optimal solution from the computed information.

Unit 5

Unit 5GunasundariSelvaraj This document discusses lower bounds and limitations of algorithms. It begins by defining lower bounds and providing examples of problems where tight lower bounds have been established, such as sorting requiring Ω(nlogn) comparisons. It then discusses methods for establishing lower bounds, including trivial bounds, decision trees, adversary arguments, and problem reduction. The document covers several examples to illustrate these techniques. It also discusses the complexity classes P, NP, and NP-complete problems. Finally, it discusses approaches for tackling difficult combinatorial problems that are NP-hard, including exact and approximation algorithms.

Unit 5

Unit 5Gunasundari Selvaraj This document discusses lower bounds and limitations of algorithms. It begins by defining lower bounds and providing examples of problems where tight lower bounds have been established, such as sorting requiring Ω(nlogn) comparisons. It then discusses methods for establishing lower bounds, including trivial bounds, decision trees, adversary arguments, and problem reduction. The document explores different classes of problems based on complexity, such as P, NP, and NP-complete problems. It concludes by examining approaches for tackling difficult combinatorial problems that are NP-hard, such as using exact algorithms, approximation algorithms, and local search heuristics.

dynamic programming Rod cutting class

dynamic programming Rod cutting classgiridaroori The document discusses the dynamic programming approach to solving the Fibonacci numbers problem and the rod cutting problem. It explains that dynamic programming formulations first express the problem recursively but then optimize it by storing results of subproblems to avoid recomputing them. This is done either through a top-down recursive approach with memoization or a bottom-up approach by filling a table with solutions to subproblems of increasing size. The document also introduces the matrix chain multiplication problem and how it can be optimized through dynamic programming by considering overlapping subproblems.

Dynamic programming1

Dynamic programming1debolina13 The document discusses the dynamic programming approach to solving the matrix chain multiplication problem. It explains that dynamic programming breaks problems down into overlapping subproblems, solves each subproblem once, and stores the solutions in a table to avoid recomputing them. It then presents the algorithm MATRIX-CHAIN-ORDER that uses dynamic programming to solve the matrix chain multiplication problem in O(n^3) time, as opposed to a brute force approach that would take exponential time.

Divide and Conquer / Greedy Techniques

Divide and Conquer / Greedy TechniquesNirmalavenkatachalam Divide and conquer is an algorithm design paradigm where a problem is broken into smaller subproblems, those subproblems are solved independently, and then their results are combined to solve the original problem. Some examples of algorithms that use this approach are merge sort, quicksort, and matrix multiplication algorithms like Strassen's algorithm. The greedy method works in stages, making locally optimal choices at each step in the hope of finding a global optimum. It is used for problems like job sequencing with deadlines and the knapsack problem. Minimum cost spanning trees find subgraphs of connected graphs that include all vertices using a minimum number of edges.

L21_L27_Unit_5_Dynamic_Programming Computer Science

L21_L27_Unit_5_Dynamic_Programming Computer Sciencepriyanshukumarbt23cs L21_L27_Unit_5_Dynamic_Programming Computer Science and Engineering

Dynamic programming prasintation eaisy

Dynamic programming prasintation eaisyahmed51236 Dynamic programming is a technique for solving complex problems by breaking them down into simpler sub-problems. It involves storing solutions to sub-problems for later use, avoiding recomputing them. Examples where it can be applied include matrix chain multiplication and calculating Fibonacci numbers. For matrix chains, dynamic programming finds the optimal order for multiplying matrices with minimum computations. For Fibonacci numbers, it calculates values in linear time by storing previous solutions rather than exponentially recomputing them through recursion.

Undecidable Problems and Approximation Algorithms

Undecidable Problems and Approximation AlgorithmsMuthu Vinayagam The document discusses algorithm limitations and approximation algorithms. It begins by explaining that some problems have no algorithms or cannot be solved in polynomial time. It then discusses different algorithm bounds and how to derive lower bounds through techniques like decision trees. The document also covers NP-complete problems, approximation algorithms for problems like traveling salesman, and techniques like branch and bound. It provides examples of approximation algorithms that provide near-optimal solutions when an optimal solution is impossible or inefficient to find.

Ad


Dynamic programming

- 1. Dynamic Programming and Applications Yıldırım TAM

- 2. Dynamic Programming
  Dynamic Programming is a general algorithm design technique for solving problems defined by recurrences with overlapping subproblems.
  • Invented by American mathematician Richard Bellman in the 1950s to solve optimization problems and later assimilated by CS
  • “Programming” here means “planning”
  • Main idea:
    – set up a recurrence relating a solution to a larger instance to solutions of some smaller instances
    – solve smaller instances once
    – record solutions in a table
    – extract the solution to the initial instance from that table

- 3. Divide-and-conquer
  The divide-and-conquer method for algorithm design:
  • Divide: if the input is too large to deal with in a straightforward manner, divide the problem into two or more disjoint subproblems.
  • Conquer: solve the subproblems recursively.
  • Combine: take the solutions to the subproblems and “merge” them into a solution for the original problem.
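The three steps can be made concrete with merge sort, a standard divide-and-conquer example that is not part of these slides (the name `merge_sort` is illustrative). Its two halves are disjoint, so no subproblem is ever solved twice, which is the case where plain divide-and-conquer is already efficient.

```python
def merge_sort(items):
    """Sort a list by divide-and-conquer, returning a new list."""
    # Base case: a list of 0 or 1 elements is already sorted.
    if len(items) <= 1:
        return list(items)
    # Divide: split into two disjoint halves.
    mid = len(items) // 2
    # Conquer: sort each half recursively.
    left = merge_sort(items[:mid])
    right = merge_sort(items[mid:])
    # Combine: merge the two sorted halves into one sorted list.
    merged = []
    i = j = 0
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])
    merged.extend(right[j:])
    return merged


print(merge_sort([5, 2, 9, 1, 5, 6]))  # [1, 2, 5, 5, 6, 9]
```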

- 5. Dynamic programming
  • Dynamic programming is a way of improving on inefficient divide-and-conquer algorithms.
  • By “inefficient”, we mean that the same recursive call is made over and over.
  • If the same subproblem is solved several times, we can use a table to store its result the first time it is computed, so it never has to be recomputed.
  • Dynamic programming is applicable when the subproblems are dependent, that is, when subproblems share subsubproblems.
  • “Programming” refers to a tabular method.
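A minimal sketch of the table idea, assuming a plain Python dict as the table and borrowing the Fibonacci recurrence from the later slides (`fib_memo` is a hypothetical name, not from the deck). Each value is computed on its first request and every later request is a table lookup.

```python
def fib_memo(n, table=None):
    """Top-down Fibonacci: each F(k) is computed once and stored in `table`."""
    if table is None:
        table = {0: 0, 1: 1}  # base cases F(0) = 0, F(1) = 1
    if n not in table:
        # First time F(n) is needed: compute it from the two smaller
        # subproblems and record it so it is never recomputed.
        table[n] = fib_memo(n - 1, table) + fib_memo(n - 2, table)
    return table[n]


print(fib_memo(50))  # 12586269025
```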

- 6. Difference between DP and Divide-and-Conquer
  • Using divide-and-conquer to solve these problems is inefficient because the same common subproblems have to be solved many times.
  • DP solves each of them once and stores their answers in a table for future use.

- 7. Dynamic Programming vs. Recursion and Divide & Conquer

- 8. Elements of Dynamic Programming (DP)
  DP is used to solve problems with the following characteristics:
  • Simple subproblems – the original problem can be broken into smaller subproblems that have the same structure.
  • Optimal substructure – the optimal solution to the problem contains within it optimal solutions to its subproblems.
  • Overlapping subproblems – the same subproblem has to be solved more than once.

- 9. Steps to Designing a Dynamic Programming Algorithm
  1. Characterize the optimal substructure.
  2. Recursively define the value of an optimal solution.
  3. Compute the value bottom-up.
  4. (If needed) Construct an optimal solution.

- 10. Principle of Optimality
  • Dynamic programming works on the principle of optimality.
  • The principle of optimality states that in an optimal sequence of decisions or choices, each subsequence must also be optimal.

- 11. Example 1: Fibonacci numbers
  • Recall the definition of the Fibonacci numbers: F(n) = F(n-1) + F(n-2), with F(0) = 0 and F(1) = 1.
  • Computing the nth Fibonacci number recursively (top-down) expands as:
    F(n) = F(n-1) + F(n-2)
    F(n-1) = F(n-2) + F(n-3)
    F(n-2) = F(n-3) + F(n-4)
    ...

- 12. Fibonacci Numbers
  • $F_n = F_{n-1} + F_{n-2}$ for $n \ge 2$
  • $F_0 = 0$, $F_1 = 1$
  • 0, 1, 1, 2, 3, 5, 8, 13, 21, 34, 55, …
  • The straightforward recursive procedure is slow!
  • Let’s draw the recursion tree.
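The slowness can also be measured instead of drawn. This illustrative sketch runs the plain recursion and counts every call; the `counter` argument is an assumption added only for instrumentation.

```python
def fib_naive(n, counter):
    """Plain recursive Fibonacci; counter[0] tallies how many calls are made."""
    counter[0] += 1
    if n < 2:
        return n  # F(0) = 0, F(1) = 1
    # Both branches recompute the same smaller values again and again.
    return fib_naive(n - 1, counter) + fib_naive(n - 2, counter)


for n in (10, 20, 25):
    calls = [0]
    value = fib_naive(n, calls)
    print(n, value, calls[0])
```

For n = 10 this makes 177 calls to obtain F(10) = 55, and the count roughly multiplies by 1.6 with each increase of n, because the recursion tree repeats the same subtrees.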

- 14. Fibonacci Numbers
  • We can calculate $F_n$ in linear time by remembering the solutions to already-solved subproblems (dynamic programming).
  • Compute the solution in a bottom-up fashion.
  • In this case, only two values need to be remembered at any time.
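A minimal bottom-up sketch that keeps only the last two values, giving linear time and constant extra space (`fib_bottom_up` is a hypothetical name):

```python
def fib_bottom_up(n):
    """Bottom-up Fibonacci that remembers only the two most recent values."""
    prev, curr = 0, 1  # F(0), F(1)
    for _ in range(n):
        # Slide the window forward: (F(k), F(k+1)) -> (F(k+1), F(k+2)).
        prev, curr = curr, prev + curr
    return prev


print([fib_bottom_up(n) for n in range(11)])  # [0, 1, 1, 2, 3, 5, 8, 13, 21, 34, 55]
```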

- 15. Example Applications of Dynamic Programming

- 17. Shortest path problems A traveler wishes to minimize the length of a journey from town A to J.

- 18. Shortest path problems (cont.) The length of the route A-B-F-I-J is 2+4+3+4 = 13. Can we find a shorter route?
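The road map itself is only an image on the slides, so the sketch below uses a small hypothetical graph (the nodes `S`, `a` … `e`, `T` and all lengths are made up). It applies the DP idea backwards from the destination: the best distance from a town is the minimum, over its outgoing roads, of the road length plus the best distance from the next town. Each town's value is cached so it is computed only once. It assumes the graph has no cycles.

```python
import math
from functools import lru_cache

# Hypothetical staged road network (NOT the graph from the slides):
# GRAPH[u][v] is the length of the road from town u to town v.
GRAPH = {
    "S": {"a": 2, "b": 4, "c": 3},
    "a": {"d": 7, "e": 4},
    "b": {"d": 3, "e": 2},
    "c": {"d": 4, "e": 1},
    "d": {"T": 1},
    "e": {"T": 3},
    "T": {},
}


def shortest_path(graph, source, target):
    """Return (length, route) of a shortest source->target route in a DAG."""

    @lru_cache(maxsize=None)  # the cache plays the role of the DP table
    def best_from(town):
        # f(target) = 0; otherwise f(u) = min over roads (u, v) of length + f(v)
        if town == target:
            return 0, (target,)
        best = (math.inf, ())
        for nxt, length in graph[town].items():
            rest, route = best_from(nxt)
            if length + rest < best[0]:
                best = (length + rest, (town,) + route)
        return best

    return best_from(source)


print(shortest_path(GRAPH, "S", "T"))  # (7, ('S', 'c', 'e', 'T'))
```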

- 20. Matrix Chain Multiplication
  • Given: a chain of matrices {A1, A2, …, An} with compatible dimensions, compute the product A1A2…An.
  • Once the product is fully parenthesized, it can be computed by using the standard algorithm for multiplying two matrices as a subroutine.
  • A product of matrices is fully parenthesized if it is either a single matrix or the product of two fully parenthesized matrix products, surrounded by parentheses. [Note: since matrix multiplication is associative, all parenthesizations yield the same product.]
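For reference, the standard algorithm multiplies a p×q matrix by a q×r matrix using p·q·r scalar multiplications; that count is what the cost figures for matrix chains add up. A plain-Python sketch using lists of lists (`matmul_count` is a hypothetical helper) that also returns the count:

```python
def matmul_count(X, Y):
    """Multiply a p x q matrix X by a q x r matrix Y (lists of lists).

    Returns (product, number_of_scalar_multiplications); the count is p*q*r.
    """
    p, q, r = len(X), len(Y), len(Y[0])
    if any(len(row) != q for row in X):
        raise ValueError("incompatible dimensions")
    Z = [[0] * r for _ in range(p)]
    mults = 0
    for i in range(p):
        for j in range(r):
            for k in range(q):
                Z[i][j] += X[i][k] * Y[k][j]  # one scalar multiplication
                mults += 1
    return Z, mults


print(matmul_count([[1, 2, 3], [4, 5, 6]], [[7, 8], [9, 10], [11, 12]]))
# ([[58, 64], [139, 154]], 12)
```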

- 21. Matrix Chain Multiplication (cont.)
  • For example, if the chain of matrices is {A, B, C, D}, the product ABCD can be fully parenthesized in 5 distinct ways: (A (B (C D))), (A ((B C) D)), ((A B) (C D)), ((A (B C)) D), (((A B) C) D).
  • The way the chain is parenthesized can have a dramatic impact on the cost of evaluating the product.
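The count of 5 can be checked by enumerating the parenthesizations recursively: choose the top-level split, then pair every parenthesization of the left part with every one of the right part. A small sketch (`parenthesizations` is an illustrative name):

```python
from functools import lru_cache


def parenthesizations(names):
    """Return every full parenthesization of the chain `names` as strings."""

    @lru_cache(maxsize=None)
    def build(i, j):
        if i == j:
            return (names[i],)  # a single matrix is fully parenthesized
        results = []
        for k in range(i, j):  # top-level split between names[k] and names[k+1]
            for left in build(i, k):
                for right in build(k + 1, j):
                    results.append(f"({left} {right})")
        return tuple(results)

    return list(build(0, len(names) - 1))


for expr in parenthesizations(["A", "B", "C", "D"]):
    print(expr)
```

This prints the same five expressions, in the same order, as the slide. For a chain of 6 matrices it already yields 42, and the count keeps growing exponentially, which is why trying every parenthesization does not scale.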

- 22. Matrix Chain Multiplication Optimal Parenthesization
  • Example: A[30][35], B[35][15], C[15][5]; find the cheapest way to compute A*B*C.
    A*(B*C) = 30*35*5 + 35*15*5 = 7,875
    (A*B)*C = 30*35*15 + 30*15*5 = 18,000
  • How to optimize:
    – Brute force: look at every possible way to parenthesize, $\Omega(4^n / n^{3/2})$ time.
    – Dynamic programming: $\Theta(n^3)$ time and $\Theta(n^2)$ space.
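The arithmetic above, and the brute-force idea of trying every split, can be checked with a short sketch. It assumes the common convention that matrix A_k has shape dims[k-1] × dims[k], so the example becomes dims = [30, 35, 15, 5]; `chain_cost_brute_force` is a hypothetical name. Because it keeps no table, it re-solves shared subchains and its running time grows exponentially with the chain length.

```python
def chain_cost_brute_force(dims, i, j):
    """Minimum scalar multiplications for A_i..A_j, trying every split.

    A_k (1-based) has shape dims[k-1] x dims[k]; n matrices need n + 1 dims.
    """
    if i == j:
        return 0  # a single matrix needs no multiplication
    return min(
        chain_cost_brute_force(dims, i, k)
        + chain_cost_brute_force(dims, k + 1, j)
        + dims[i - 1] * dims[k] * dims[j]  # multiply the two partial results
        for k in range(i, j)
    )


dims = [30, 35, 15, 5]  # A: 30x35, B: 35x15, C: 15x5
print(30 * 35 * 5 + 35 * 15 * 5)  # A*(B*C): 7875
print(30 * 35 * 15 + 30 * 15 * 5)  # (A*B)*C: 18000
print(chain_cost_brute_force(dims, 1, 3))  # 7875
```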

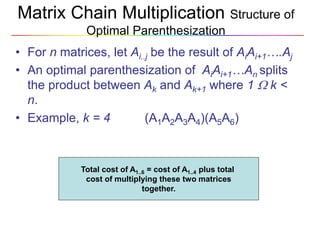

- 23. Matrix Chain Multiplication: Structure of Optimal Parenthesization
  • For n matrices, let $A_{i..j}$ be the result of $A_i A_{i+1} \cdots A_j$.
  • An optimal parenthesization of $A_1 A_2 \cdots A_n$ splits the product between $A_k$ and $A_{k+1}$ for some $k$ with $1 \le k < n$.
  • Example, k = 4: $(A_1 A_2 A_3 A_4)(A_5 A_6)$. Total cost of $A_{1..6}$ = cost of $A_{1..4}$ + cost of $A_{5..6}$ + cost of multiplying these two resulting matrices together.
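Written as a recurrence, assuming the usual textbook notation (not on the slide) in which $A_i$ has dimensions $p_{i-1} \times p_i$ and $m[i,j]$ is the minimum cost of computing $A_{i..j}$:

```latex
m[i,j] =
\begin{cases}
  0 & \text{if } i = j,\\[4pt]
  \displaystyle\min_{i \le k < j} \bigl( m[i,k] + m[k+1,j] + p_{i-1}\, p_k\, p_j \bigr) & \text{if } i < j.
\end{cases}
```

For the slide's split at $k = 4$, the candidate cost is $m[1,4] + m[5,6] + p_0 p_4 p_6$: the costs of the two parts plus the cost of multiplying their $p_0 \times p_4$ and $p_4 \times p_6$ results.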

- 24. Matrix Chain Multiplication: Overlapping Sub-Problems
  • Exploiting overlapping subproblems reduces the running time considerably:
    – Create a table M of minimum costs.
    – Create a table S that records the split index k for each optimal subproblem.
    – Fill table M in order of increasing chain length, which corresponds to solving the parenthesization problem on matrix chains of increasing length.
    – Compute the cost for chains of length 1 (this is 0).
    – Compute the costs for chains of length 2: $A_{1..2}, A_{2..3}, A_{3..4}, \ldots, A_{n-1..n}$.
    – Compute the cost for the chain of length n: $A_{1..n}$.
  • Each level relies on the results for shorter subchains.
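A bottom-up sketch of the table-filling procedure described above, with `M` and `S` named as on the slide. It assumes matrix $A_i$ has shape `dims[i-1]` × `dims[i]`; the function names are hypothetical, and indices are 1-based to match the slide's notation.

```python
def matrix_chain_order(dims):
    """Bottom-up DP for matrix chain multiplication.

    Returns (M, S): M[i][j] is the minimum cost of computing A_i..A_j and
    S[i][j] is the split index k that achieves it (1-based indices).
    """
    n = len(dims) - 1
    M = [[0] * (n + 1) for _ in range(n + 1)]  # chains of length 1 cost 0
    S = [[0] * (n + 1) for _ in range(n + 1)]
    for length in range(2, n + 1):  # chains of increasing length
        for i in range(1, n - length + 2):
            j = i + length - 1
            M[i][j] = float("inf")
            for k in range(i, j):  # try every split point
                cost = M[i][k] + M[k + 1][j] + dims[i - 1] * dims[k] * dims[j]
                if cost < M[i][j]:
                    M[i][j], S[i][j] = cost, k
    return M, S


def optimal_parens(S, i, j):
    """Rebuild an optimal parenthesization of A_i..A_j from the split table S."""
    if i == j:
        return f"A{i}"
    k = S[i][j]
    return f"({optimal_parens(S, i, k)}{optimal_parens(S, k + 1, j)})"


M, S = matrix_chain_order([30, 35, 15, 5])
print(M[1][3], optimal_parens(S, 1, 3))  # 7875 (A1(A2A3))
```

On the three-matrix example this picks A*(B*C) at cost 7,875. The three nested loops account for the $\Theta(n^3)$ running time and the two tables for the $\Theta(n^2)$ space.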

- 25. Q1. What is the shortest path?

- 26. Answer Q1: The length of the route A-B-F-I-J is 2+4+3+4 = 13.

- 27. Q2. Who invented dynamic programming?
  • A. Richard Ernest Bellman
  • B. Edsger Dijkstra
  • C. Joseph Kruskal
  • D. David A. Huffman

- 28. Answer Q2
  • A. Richard Ernest Bellman (correct)
  • B. Edsger Dijkstra
  • C. Joseph Kruskal
  • D. David A. Huffman

- 29. Q3. What is the meaning of “programming” in DP?
  A. Planning
  B. Computer programming
  C. Programming languages
  D. Curriculum

- 30. Answer Q3. What is the meaning of “programming” in DP?
  A. Planning (correct)
  B. Computer programming
  C. Programming languages
  D. Curriculum