Effectiveness and code optimization in Java

- What is effectiveness?
- Code optimization
- JVM optimization
- Code samples
- Measurements

Recommended

JEEConf 2016. Effectiveness and code optimization in Java applications

Strannik_2013: This document discusses code optimization techniques in Java applications. It begins with an overview of code effectiveness and optimization, noting that optimization should not be done prematurely. It then covers various optimization techniques, including JVM options, code samples, and JMH measurements of different techniques such as method vs. field access, strings, arrays, collections, and loops vs. streams. It finds that using ArrayList/HashMap, relying on compiler and JIT optimizations, and using measurement tools can all help improve performance. The document emphasizes measuring optimizations to determine their real effectiveness.
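The loops-vs-streams comparison mentioned above can be illustrated with a minimal sketch. The class and method names (`LoopVsStream`, `sumLoop`, `sumStream`) are hypothetical and not taken from the deck. The sketch only shows that both forms compute the same result; which one is faster on a given JVM has to be measured (for example, with a JMH benchmark) rather than assumed.

```java
import java.util.stream.IntStream;

// Hypothetical example: two equivalent ways to sum the squares of the even numbers.
final class LoopVsStream {

    // Imperative version: a plain indexed for-loop over a primitive array.
    static long sumLoop(int[] values) {
        long total = 0;
        for (int i = 0; i < values.length; i++) {
            int v = values[i];
            if (v % 2 == 0) {
                total += (long) v * v;
            }
        }
        return total;
    }

    // Java 8 Streams version: the same logic as filter/map/sum on an IntStream.
    static long sumStream(int[] values) {
        return IntStream.of(values)
                .filter(v -> v % 2 == 0)
                .mapToLong(v -> (long) v * v)
                .sum();
    }

    public static void main(String[] args) {
        int[] data = IntStream.rangeClosed(1, 10).toArray();
        // Both calls print 220 (4 + 16 + 36 + 64 + 100).
        System.out.println(sumLoop(data));
        System.out.println(sumStream(data));
    }
}
```

Working on a primitive `int[]` with `IntStream` avoids boxing in both versions, so a benchmark of this pair compares loop and stream overhead rather than `Integer` allocation.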

"Effectiveness and code optimization in Java 8" Sergey Morenets (Сергей Моренец)

Fwdays: If we want to understand what perfect (ideal) code is, one of its characteristics will be efficiency. This includes both the speed of the code and the amount of resources it consumes (memory, disk, I/O).

Efficiency is often pushed into the background, because it is not easy to estimate in advance or to assess precisely during code review. At the same time, it is the only characteristic that affects the end users of our projects.

In my talk I will look at what efficiency is and how to measure it correctly. We will touch on myths about efficiency that are very popular today, and look at examples of efficient and inefficient code, and of necessary and pointless code optimization.

The main emphasis will be on functionality that was added in Java 8.
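A frequently discussed case of necessary versus pointless optimization is string building in a loop. The sketch below (class and method names are hypothetical) contrasts repeated `+=` concatenation, where every iteration creates a new `String` and copies everything built so far, with the Java 8 `Collectors.joining` collector, which appends into a mutable buffer. It only shows that the two produce identical output; whether the difference matters for a particular workload should be measured, not assumed.

```java
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;

// Hypothetical example: building a comma-separated string from a list of words.
final class StringBuilding {

    // Naive version: each += creates a new String and copies the previous contents.
    static String concatInLoop(List<String> words) {
        String result = "";
        for (int i = 0; i < words.size(); i++) {
            if (i > 0) {
                result += ",";
            }
            result += words.get(i);
        }
        return result;
    }

    // Java 8 version: Collectors.joining accumulates into a mutable buffer.
    static String joinWithCollector(List<String> words) {
        return words.stream().collect(Collectors.joining(","));
    }

    public static void main(String[] args) {
        List<String> words = Arrays.asList("alpha", "beta", "gamma");
        System.out.println(concatInLoop(words));      // alpha,beta,gamma
        System.out.println(joinWithCollector(words)); // alpha,beta,gamma
    }
}
```

For a handful of strings the two are unlikely to differ noticeably; the copying cost of `+=` grows with the number of iterations, which is why it tends to show up only for long loops.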

Filtering 100M objects in Coherence cache. What can go wrong?

aragozin: The document discusses filtering 100 million objects from a Coherence cache. It describes the initial problems encountered, including out-of-memory errors and high memory usage. Several strategies are proposed and tested to address these issues, including using indexes more efficiently, retrieving results incrementally in batches to control network traffic, and implementing basic multi-version concurrency control (MVCC) to provide snapshot consistency for queries.
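Incremental retrieval in batches can be sketched in plain Java. This is not the Coherence API: the hypothetical `BatchingIterator` splits the caller's keys into fixed-size batches and invokes a user-supplied `fetch` function once per batch, where `fetch` stands in (as an assumption) for whatever bulk read the cache offers. Only one batch of values is held in memory, and transferred, at a time.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.Iterator;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.NoSuchElementException;
import java.util.function.Function;

// Hypothetical sketch (not a Coherence API): iterate over query results batch by batch.
final class BatchingIterator<K, V> implements Iterator<V> {
    private final Iterator<K> keys;
    private final int batchSize;
    private final Function<List<K>, Map<K, V>> fetch; // stand-in for one bulk read per batch
    private Iterator<V> currentBatch = Collections.emptyIterator();
    private int fetchCalls = 0;

    BatchingIterator(Iterable<K> keys, int batchSize, Function<List<K>, Map<K, V>> fetch) {
        if (batchSize <= 0) {
            throw new IllegalArgumentException("batchSize must be positive");
        }
        this.keys = keys.iterator();
        this.batchSize = batchSize;
        this.fetch = fetch;
    }

    @Override
    public boolean hasNext() {
        // Fetch the next batch only when the current one is exhausted;
        // keep going if a fetch returned no values.
        while (!currentBatch.hasNext() && keys.hasNext()) {
            List<K> batch = new ArrayList<>(batchSize);
            while (batch.size() < batchSize && keys.hasNext()) {
                batch.add(keys.next());
            }
            currentBatch = fetch.apply(batch).values().iterator();
            fetchCalls++;
        }
        return currentBatch.hasNext();
    }

    @Override
    public V next() {
        if (!hasNext()) {
            throw new NoSuchElementException();
        }
        return currentBatch.next();
    }

    // Number of bulk reads issued so far (useful to verify the batching).
    int fetchCalls() {
        return fetchCalls;
    }

    public static void main(String[] args) {
        List<Integer> ids = new ArrayList<>();
        for (int i = 1; i <= 10; i++) {
            ids.add(i);
        }
        BatchingIterator<Integer, String> it = new BatchingIterator<>(ids, 4, batch -> {
            Map<Integer, String> result = new LinkedHashMap<>(); // preserves key order
            for (Integer id : batch) {
                result.put(id, "value-" + id);
            }
            return result;
        });
        int count = 0;
        while (it.hasNext()) {
            it.next();
            count++;
        }
        System.out.println(count + " values in " + it.fetchCalls() + " batches"); // 10 values in 3 batches
    }
}
```

The batch size trades the number of round trips against peak memory and per-request payload; the sketch leaves that choice to the caller.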

Performance Test Driven Development with Oracle Coherence

aragozin: This presentation discusses test-driven development with Oracle Coherence. It outlines the philosophy of performance test-driven development (PTDD) and the challenges of testing Coherence, including the need for a cluster and sensitivity to network issues. It discusses automating tests with tools such as NanoCloud for managing nodes and executing tests remotely. Different types of tests are described, such as microbenchmarks, performance regression tests, and bottleneck analysis. Common pitfalls of performance testing, such as fixed users vs. fixed request rates, are also covered.

"Walk in a distributed systems park with Orleans" Evgeny Bobrov (Евгений Бобров)

Fwdays: For a long time, building high-performance, scalable, reliable, and cost-effective distributed systems was the preserve of a narrow circle of specialists. Moving to the "cloud" did not solve the problem by itself. The cheap linear scalability promised by providers is still an unattainable dream for everyone "hooked" on relational databases and monolithic architectures.

With the release of Microsoft Orleans, developers have finally received an extremely simple and convenient platform for building scalable, fault-tolerant distributed systems designed to run in the "cloud" or in a private data center.

The talk will cover the platform's core concepts and use cases, such as the Internet of Things (IoT), distributed stream processing, scaling an RDBMS or any other constrained resource, and fault-tolerant coordination of long-running business processes.

Performance tests with Gatling

Andrzej Ludwikowski: Performance tests with Gatling are difficult for three main reasons:

1) The test environment must closely simulate production in terms of hardware, software, and load.

2) Proper infrastructure for monitoring, logging, and isolating tests is required.

3) Performance intuition can be wrong, so statistics like percentiles must be used rather than averages.
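The third point can be illustrated with a small sketch that computes the mean and a nearest-rank percentile over a set of response times. The class name `LatencyStats` and the numbers in `main` are made up for illustration: one slow request pulls the mean far above what a typical request experiences, while the median stays representative and the 99th percentile exposes the outlier.

```java
import java.util.Arrays;

// Hypothetical helper: summary statistics for measured response times (milliseconds).
final class LatencyStats {

    static double mean(long[] samples) {
        return Arrays.stream(samples).average().orElse(Double.NaN);
    }

    // Nearest-rank percentile: the smallest sample such that at least p% of samples are <= it.
    static long percentile(long[] samples, double p) {
        if (samples.length == 0) {
            throw new IllegalArgumentException("no samples");
        }
        if (p <= 0 || p > 100) {
            throw new IllegalArgumentException("p must be in (0, 100]");
        }
        long[] sorted = samples.clone();
        Arrays.sort(sorted);
        int rank = (int) Math.ceil(p / 100.0 * sorted.length);
        return sorted[rank - 1];
    }

    public static void main(String[] args) {
        // Illustrative data: nine fast requests and one very slow one.
        long[] millis = {10, 11, 12, 10, 13, 11, 12, 10, 11, 1000};
        System.out.println("mean = " + mean(millis));           // mean = 110.0
        System.out.println("p50  = " + percentile(millis, 50)); // p50  = 11
        System.out.println("p99  = " + percentile(millis, 99)); // p99  = 1000
    }
}
```

Nearest-rank is only one of several percentile definitions; load-testing tools may interpolate between samples, so their reported values can differ slightly for small sample sets.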

Spark summit2014 techtalk - testing spark

Anu Shetty: The document discusses best practices for testing Spark applications, including setting up test environments for unit and integration testing of Spark in both batch and streaming modes. It also covers performance testing of Spark applications with Gatling, including developing a sample performance test for a word count Spark job run on Spark job server. Key steps for testing, code coverage, continuous integration, and analyzing performance test results are provided.
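For reference, the logic of a word count job like the one used as the performance-test target can be sketched with plain Java 8 streams on a single machine. This is not Spark code and does not use the Spark API; it only shows the split, group, and count steps that a Spark job would distribute across a cluster. The class name `WordCount` is hypothetical.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Locale;
import java.util.Map;
import java.util.TreeMap;
import java.util.function.Function;
import java.util.stream.Collectors;

// Single-JVM sketch of word count; a Spark job would distribute these same steps.
final class WordCount {

    static Map<String, Long> count(List<String> lines) {
        return lines.stream()
                // Split each line into lowercase words on runs of non-letter characters.
                .flatMap(line -> Arrays.stream(line.toLowerCase(Locale.ROOT).split("[^\\p{L}]+")))
                .filter(word -> !word.isEmpty())
                // Group identical words and count them; TreeMap keeps the output sorted.
                .collect(Collectors.groupingBy(Function.identity(), TreeMap::new, Collectors.counting()));
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList("to be or not to be", "To see or not to see");
        System.out.println(count(lines)); // {be=2, not=2, or=2, see=2, to=4}
    }
}
```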

Performance Test Automation With Gatling

Knoldus Inc.: Gatling is a lightweight DSL written in Scala that lets you treat your performance tests as production code, meaning you can easily write readable code to test the performance of an application. It is a framework based on Scala, Akka, and Netty.

Stream processing from single node to a cluster

Gal Marder: Building data pipelines shouldn't be so hard; you just need to choose the right tools for the task.

We will review Akka and Spark Streaming: how they work, how to use them, and when.

Project Orleans - Actor Model framework

Neil Mackenzie: Project "Orleans" is an Actor Model framework from Microsoft Research that is currently in public preview. It is designed to make it easy for .NET developers to develop and deploy actor-based distributed systems on Microsoft Azure.

Effective testing for spark programs Strata NY 2015

Holden Karau: This session explores best practices for creating both unit and integration tests for Spark programs, as well as acceptance tests for the data produced by our Spark jobs. We will explore the difficulties of testing streaming programs and options for setting up integration testing with Spark, and also examine best practices for acceptance tests.

Unit testing of Spark programs is deceptively simple. The talk will look at how unit testing of Spark itself is accomplished, and will factor out a number of best practices into traits we can use. This includes dealing with local-mode cluster creation and teardown during test suites, factoring our functions to increase testability, mock data for RDDs, and mock data for Spark SQL.

Testing Spark Streaming programs has a number of interesting problems. These include handling of starting and stopping the Streaming context, and providing mock data and collecting results. As with the unit testing of Spark programs, we will factor out the common components of the tests that are useful into a trait that people can use.

While acceptance tests are not always part of testing, they share a number of similarities. We will look at which counters Spark programs generate that we can use for creating acceptance tests, best practices for storing historic values, and some common counters we can easily use to track the success of our job.

Relevant Spark Packages & Code:

https://github.com/holdenk/spark-testing-base / http://spark-packages.org/package/holdenk/spark-testing-base

https://github.com/holdenk/spark-validator

Dive into spark2

Gal Marder

Abstract

Spark 2 is here. While Spark has been the leading cluster computation framework for several years, its second version takes Spark to new heights. In this seminar, we will go over Spark internals and learn the new concepts of Spark 2 to create better, more scalable big data applications.

Target Audience

Architects, Java/Scala developers, Big Data engineers, team leaders

Prerequisites

Java/Scala knowledge and SQL knowledge

Contents:

- Spark internals

- Architecture

- RDD

- Shuffle explained

- Dataset API

- Spark SQL

- Spark Streaming

Performance tests with gatling

SoftwareMill: In 30 minutes I would like to show:

1. Why is it worth spending some time to learn Gatling, a tool for integration and performance testing of your web application?

2. Under what circumstances is it necessary to have Gatling in your toolbox?

3. What are Gatling's cons, and what kinds of problems can you expect?

There is certainly no silver bullet among testing tools, but you will definitely love the Gatling DSL.

2015 Java update and roadmap, JUG sevilla

Trisha Gee: Not my material! Courtesy of Oracle's Aurelio García-Ribeyro and Georges Saab.

Presentation given to the Sevilla Java User Group about the state of Java.

Reactive programming with Rxjava

Christophe Marchal: After explaining what problem Reactive Programming solves, I will give an introduction to one implementation: RxJava. I show how to compose Observables, first without concurrency and then with Schedulers. I finish the talk by showing examples of flow control and drawbacks.

Inspired by https://www.infoq.com/presentations/rxjava-reactor and https://www.infoq.com/presentations/rx-service-architecture

Code: https://github.com/toff63/Sandbox/tree/master/java/rsjug-rx/rsjug-rx/src/main/java/rs/jug/rx

RxJava - introduction & design

allegro.tech: The document introduces RxJava, a library for composing asynchronous and event-based programs using observable sequences on the Java Virtual Machine. It discusses the problems with asynchronous programming, provides an overview of reactive programming and Reactive Extensions, and describes RxJava's core types, such as Observable and Observer. It also covers key concepts such as operators, schedulers, subjects, and dealing with backpressure in RxJava.

Whatthestack using Tempest for testing your OpenStack deployment

Christian Schwede:

1) The presenters introduce WhatTheStack, a web application that allows users to easily run the Tempest deployment testing framework against their OpenStack deployments without needing to install anything.

2) Tempest is used to test and verify the functionality and API behavior of OpenStack deployments. WhatTheStack simplifies running Tempest by allowing users to enter their OpenStack credentials through a web interface and receive a summary of the test results.

3) Behind the scenes, WhatTheStack encrypts the OpenStack credentials, runs a subset of the Tempest tests via a worker, decrypts the results, and displays a summarized report of the tests by service, such as which passed and failed. This allows automated, independent testing of

IRB Galaxy CloudMan radionica

Enis Afgan: A workshop introducing the Galaxy and CloudMan applications in the context of bioinformatics data analysis.

Open stack qa and tempest

Kamesh Pemmaraju: Tempest is an OpenStack test suite that runs against all the OpenStack service endpoints. It makes sure that all the OpenStack components work together properly and that no APIs are changed. Tempest is a "gate" for all commits to OpenStack repositories and will prevent merges if tests fail.

Agile Developer Immersion Workshop, LASTconf Melbourne, Australia, 19th July ...

Victoria Schiffer: Based on the Legacy CodeRetreat, Daniel Prager, Tomasz Janowski, and I ran this all-day workshop at this year's LASTconf.

Get ready to level up your refactoring at LAST Conference's first Refactoring Developer workshop. Inspired by Code Retreat, we have run a similar session on the basics of agile development at LAST Conference for the past few years. We felt it was important to support learning in technical disciplines that are extremely important in agile software development.

Too many Agile and DevOps initiatives are stymied by code bases that are hard to change and understand.

While disciplined teams who rigorously practice pair programming, test-driven design (TDD) and other technical Agile practices avoid producing new legacy code in the first place, cleaning up a pre-existing mess is notoriously difficult and dangerous. Without the safety net of excellent automated test coverage, the risk of breaking something else as you refactor is extremely high. Also, code that wasn't designed and written with testability in mind makes it really difficult to get started. So most don't even try ...

In the Refactoring workshop developers learn how to build an initial safety net before applying multiple refactorings, and have lots of fun along the way!

To read more about how to run a classic CodeRetreat, I recommend this blogpost: https://medium.com/seek-blog/coderetreat-at-seek-clean-code-vs-comfort-zone-cfb1da64909d

Mario on spark

Igor Berman: Igor Berman presented an overview of Mario, a job scheduling system developed at Dynamic Yield to address some limitations of Luigi. Mario defines jobs as classes with inputs, outputs, and the work to be done. It provides features such as running jobs on different clusters, saving job statuses to Redis for better performance than Luigi, and a UI for controlling job execution. Challenges discussed include properly partitioning RDDs to avoid shuffles, persisting data efficiently (for example, to local SSDs), and issues with Avro and HDFS/S3. Future plans include open-sourcing Mario and using technologies such as Elasticsearch and Flink.

JVM languages "flame wars"

Gal Marder: There are many programming languages that can be used on the JVM (Java, Scala, Groovy, Kotlin, ...). In this session, we'll outline the main differences between them and discuss the advantages and disadvantages of each.

Dev nexus 2017

Roy Russo: Elasticsearch is a distributed, RESTful search and analytics engine that can be used for processing big data with Apache Spark. It allows ingesting large volumes of data in near real time for search, analytics, and machine learning applications such as feature generation. Elasticsearch is schema-free, supports dynamic queries, and integrates with Spark, making it a good fit for ingesting streaming data from Spark jobs. It must be deployed with consideration for fast reads, writes, and dynamic querying to support large-scale predictive analytics workloads.

Devnexus 2018

Roy Russo: Elasticsearch is a distributed, RESTful search and analytics engine that can be used for processing big data with Apache Spark. Data is ingested from Spark into Elasticsearch for feature generation and predictive modeling. Elasticsearch allows fast reads and writes of large volumes of time-series and other data through its use of inverted indexes and dynamic mapping. It is deployed on AWS for its elastic scalability, high availability, and integration with Spark via fast queries. Ongoing maintenance includes archiving old data, partitioning indices, and reindexing large datasets.

Spark real world use cases and optimizations

Gal Marder: This document provides an overview of Spark, its core abstraction of resilient distributed datasets (RDDs), and common transformations and actions. It discusses how Spark partitions and distributes data across a cluster, its lazy evaluation model, and the concept of dependencies between RDDs. Common use cases such as word counting, bucketing user data, finding top results, and analytics reporting are demonstrated. Key topics covered include avoiding expensive shuffle operations, choosing optimal aggregation methods, and caching data in memory where appropriate.

Transformation Priority Premise @Softwerkskammer MUC

David Völkel: The document discusses the "Transformation Priority Premise" strategy for test-driven development (TDD). It proposes a sequence of code transformations, from simple to complex, that can help guide developers in choosing which tests and code to implement next. The sequence includes transformations such as adding code that returns nil, replacing constants with variables, adding conditional statements, and extracting functions. Following this sequence and choosing tests that match higher-priority transformations is intended to help developers make steady progress with TDD without getting stuck on large changes. However, the priorities presented are informal, and their correctness and completeness require further discussion.

Tempest scenariotests 20140512

Masayuki Igawa: Tempest provides scenario tests that test integration between multiple OpenStack services by executing sequences of operations. Current scenario tests cover operations such as booting instances, attaching volumes, managing snapshots, and checking network connectivity. Running scenario tests helps operators validate their cloud and helps developers check for regressions. While useful, scenario tests have issues such as insufficient test coverage, complex configuration, and difficulty analyzing failures. Future work includes making scenario tests easier to use without command-line skills and more flexible in specifying test environments.

Optimizing Laravel for content heavy and high-traffic websites by Man, Apples...

appleseeds-my: The document discusses various techniques for optimizing Laravel applications for content-heavy and high-traffic websites. It covers optimization at the language level, the framework level, and the infrastructure level. Key optimizations discussed include route and config caching, optimizing class autoloading, removing unused libraries, using raw SQL instead of the Eloquent ORM for complex queries, solving the N+1 query problem through eager loading, and implementing caching through middleware and infrastructure such as Nginx.
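The N+1 query problem is not specific to Laravel, and the idea behind eager loading can be sketched in Java. This is a conceptual sketch, not Eloquent's implementation: the hypothetical `FakeDatabase` only counts queries. Loading each post's author separately costs 1 + N queries for N posts, whereas collecting the author IDs first and fetching them in one batched query (the analogue of eager loading) costs 2 queries regardless of N.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical in-memory "database" that only counts how many queries are issued.
final class FakeDatabase {
    final Map<Integer, String> authors = new HashMap<>();
    final List<int[]> posts = new ArrayList<>(); // each post: {postId, authorId}
    int queries = 0;

    List<int[]> findAllPosts() { queries++; return posts; }

    String findAuthor(int id) { queries++; return authors.get(id); }

    // One batched query, analogous to "WHERE id IN (...)".
    Map<Integer, String> findAuthors(Set<Integer> ids) {
        queries++;
        Map<Integer, String> result = new HashMap<>();
        for (Integer id : ids) {
            result.put(id, authors.get(id));
        }
        return result;
    }
}

final class NPlusOne {
    // Lazy loading: 1 query for the posts + 1 query per post for its author.
    static List<String> authorsLazy(FakeDatabase db) {
        List<String> names = new ArrayList<>();
        for (int[] post : db.findAllPosts()) {
            names.add(db.findAuthor(post[1]));
        }
        return names;
    }

    // Eager loading: 1 query for the posts + 1 batched query for all referenced authors.
    static List<String> authorsEager(FakeDatabase db) {
        List<int[]> posts = db.findAllPosts();
        Set<Integer> ids = new LinkedHashSet<>();
        for (int[] post : posts) {
            ids.add(post[1]);
        }
        Map<Integer, String> byId = db.findAuthors(ids);
        List<String> names = new ArrayList<>();
        for (int[] post : posts) {
            names.add(byId.get(post[1]));
        }
        return names;
    }

    public static void main(String[] args) {
        FakeDatabase db = new FakeDatabase();
        db.authors.put(1, "Ann");
        db.authors.put(2, "Bob");
        for (int i = 0; i < 10; i++) {
            db.posts.add(new int[] {i, 1 + i % 2});
        }
        authorsLazy(db);
        System.out.println("lazy queries:  " + db.queries); // lazy queries:  11
        db.queries = 0;
        authorsEager(db);
        System.out.println("eager queries: " + db.queries); // eager queries: 2
    }
}
```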

Gradle.Enemy at the gates

Strannik_2013: Gradle is a flexible, general-purpose build automation tool that improves upon existing build tools such as Ant, Maven, and Ivy. It uses Groovy as its configuration language, allowing builds to be written more clearly and concisely than with XML formats. Gradle aims to provide the flexibility of Ant, the dependency management and conventions of Maven, and the speed of Git. It handles tasks, dependencies, plugins, and multi-project builds. Gradle configurations map closely to Maven scopes, and it has good support for plugins, testing, caching, and integration with tools such as Ant and Maven.

Effective Java applications

Strannik_2013: This presentation covers the effectiveness of Java applications. It contains many code samples that were measured using the JMH library. For some samples, we performed optimizations to improve performance.

Ad

More Related Content

What's hot (20)

Stream processing from single node to a cluster

Stream processing from single node to a clusterGal Marder Building data pipelines shouldn't be so hard, you just need to choose the right tools for the task.

We will review Akka and Spark streaming, how they work and how to use them and when.

Project Orleans - Actor Model framework

Project Orleans - Actor Model frameworkNeil Mackenzie Project "Orleans" is an Actor Model framework from Microsoft Research that is currently in public preview. It is designed to make it easy for .NET developers to develop and deploy an actor-based distributed system into Microsoft Azure.

Effective testing for spark programs Strata NY 2015

Effective testing for spark programs Strata NY 2015Holden Karau This session explores best practices of creating both unit and integration tests for Spark programs as well as acceptance tests for the data produced by our Spark jobs. We will explore the difficulties with testing streaming programs, options for setting up integration testing with Spark, and also examine best practices for acceptance tests.

Unit testing of Spark programs is deceptively simple. The talk will look at how unit testing of Spark itself is accomplished, as well as factor out a number of best practices into traits we can use. This includes dealing with local mode cluster creation and teardown during test suites, factoring our functions to increase testability, mock data for RDDs, and mock data for Spark SQL.

Testing Spark Streaming programs has a number of interesting problems. These include handling of starting and stopping the Streaming context, and providing mock data and collecting results. As with the unit testing of Spark programs, we will factor out the common components of the tests that are useful into a trait that people can use.

While acceptance tests are not always part of testing, they share a number of similarities. We will look at which counters Spark programs generate that we can use for creating acceptance tests, best practices for storing historic values, and some common counters we can easily use to track the success of our job.

Relevant Spark Packages & Code:

https://github.com/holdenk/spark-testing-base / http://spark-packages.org/package/holdenk/spark-testing-base

https://github.com/holdenk/spark-validator

Dive into spark2

Dive into spark2Gal Marder Abstract –

Spark 2 is here, while Spark has been the leading cluster computation framework for severl years, its second version takes Spark to new heights. In this seminar, we will go over Spark internals and learn the new concepts of Spark 2 to create better scalable big data applications.

Target Audience

Architects, Java/Scala developers, Big Data engineers, team leaders

Prerequisites

Java/Scala knowledge and SQL knowledge

Contents:

- Spark internals

- Architecture

- RDD

- Shuffle explained

- Dataset API

- Spark SQL

- Spark Streaming

Performance tests with gatling

Performance tests with gatlingSoftwareMill In 30 minutes I would like to show:

1. Why is it worth to spend some time and learn Gatling - a tool for integration/performance test of your web application?

2. Under what circumstances it is necessary to have Gatling in your toolbox?

3. What are Gatling cons and what kind of problems can you expect?

For sure there is no silver bullet in testing tools area, but you will definitely love Gatling DSL.

2015 Java update and roadmap, JUG sevilla

2015 Java update and roadmap, JUG sevillaTrisha Gee Not my material! Courtesy of Oracle's Aurelio García-Ribeyro and Georges Saab.

Presentation given to the Sevilla Java User Group about the state of Java

Reactive programming with Rxjava

Reactive programming with RxjavaChristophe Marchal After explaining what problem Reactive Programming solves I will give an introduction to one implementation: RxJava. I show how to compose Observable without concurrency first and then with Scheduler. I finish the talk by showing examples of flow control and draw backs.

Inspired from https://www.infoq.com/presentations/rxjava-reactor and https://www.infoq.com/presentations/rx-service-architecture

Code: https://github.com/toff63/Sandbox/tree/master/java/rsjug-rx/rsjug-rx/src/main/java/rs/jug/rx

RxJava - introduction & design

RxJava - introduction & designallegro.tech The document introduces RxJava, a library for composing asynchronous and event-based programs using observable sequences for the Java Virtual Machine. It discusses the problems with asynchronous programming, provides an overview of reactive programming and reactive extensions, and describes RxJava's core types like Observable and Observer. It also covers key concepts like operators, schedulers, subjects, and dealing with backpressure in RxJava.

Whatthestack using Tempest for testing your OpenStack deployment

Whatthestack using Tempest for testing your OpenStack deploymentChristian Schwede 1) The presenters introduce WhatTheStack, a web application that allows users to easily run the Tempest deployment testing framework on their OpenStack deployments without needing to install anything.

2) Tempest is used to test and verify the functionality and API behavior of OpenStack deployments. WhatTheStack simplifies running Tempest by allowing users to enter their OpenStack credentials through a web interface and receive a summary of the test results.

3) Behind the scenes, WhatTheStack encrypts the OpenStack credentials, runs a subset of the Tempest tests via a worker, decrypts the results, and displays a summarized report of the tests by service, such as which passed and failed. This allows automated, independent testing of

IRB Galaxy CloudMan radionica

IRB Galaxy CloudMan radionicaEnis Afgan A workshop introducing Galaxy and CloudMan applications in the context of bioinformatics data analysis.

Open stack qa and tempest

Open stack qa and tempestKamesh Pemmaraju Tempest is an Openstack test suite which runs against all the OpenStack service endpoints. It makes sure that all the OpenStack components work together properly and that no APIs are changed. Tempest is a "gate" for all commits to OpenStack repositories and will prevent merges if tests fail.

Agile Developer Immersion Workshop, LASTconf Melbourne, Australia, 19th July ...

Agile Developer Immersion Workshop, LASTconf Melbourne, Australia, 19th July ...Victoria Schiffer Based on the Legacy CodeRetreat - Daniel Prager, Tomasz Janowski and I ran this all day workshop at this year's LASTconf.

Get ready to level up at refactoring at LAST Conference's first Refactoring Developer workshop. Inspired by Code Retreat, we have run a similar session, for the basics of agile development, at LAST Conference for the past few years. We have felt that it's Important to support learning in technical disciplines that are extremely important in agile software development.

Too many Agile and DevOps initiatives are stymied by code bases that are hard to change and understand.

While disciplined teams who rigorously practice pair programming, test-driven design (TDD) and other technical Agile practices avoid producing new legacy code in the first place, cleaning up a pre-existing mess is notoriously difficult and dangerous. Without the safety net of excellent automated test coverage, the risk of breaking something else as you refactor is extremely high. Also, code that wasn't designed and written with testability in mind makes it really difficult to get started. So most don't even try ...

In the Refactoring workshop developers learn how to build an initial safety net before applying multiple refactorings, and have lots of fun along the way!

To read more about how to run a classic CodeRetreat, I recommend this blogpost: https://medium.com/seek-blog/coderetreat-at-seek-clean-code-vs-comfort-zone-cfb1da64909d

Mario on spark

Mario on sparkIgor Berman Igor Berman presented an overview of Mario, a job scheduling system they developed for Dynamic Yield to address some limitations of Luigi. Mario defines jobs as classes with inputs, outputs, and work to be done. It provides features like running jobs on different clusters, saving job statuses to Redis for better performance than Luigi, and a UI for controlling job execution. Some challenges discussed include properly partitioning RDDs to avoid shuffles, persisting data efficiently like to local SSDs, and issues with Avro and HDFS/S3. Future plans include open sourcing Mario and using technologies like Elasticsearch and Flink.

JVM languages "flame wars"

JVM languages "flame wars"Gal Marder There are many programming languages that can be used on the JVM (Java, Scala, Groovy, Kotlin, ...). In this session, we'll outline the main differences between them and discuss advantages and disadvantages for each.

Dev nexus 2017

Dev nexus 2017Roy Russo Elasticsearch is a distributed, RESTful search and analytics engine that can be used for processing big data with Apache Spark. It allows ingesting large volumes of data in near real-time for search, analytics, and machine learning applications like feature generation. Elasticsearch is schema-free, supports dynamic queries, and integrates with Spark, making it a good fit for ingesting streaming data from Spark jobs. It must be deployed with consideration for fast reads, writes, and dynamic querying to support large-scale predictive analytics workloads.

Devnexus 2018

Devnexus 2018Roy Russo Elasticsearch is a distributed, RESTful search and analytics engine that can be used for processing big data with Apache Spark. Data is ingested from Spark into Elasticsearch for features generation and predictive modeling. Elasticsearch allows for fast reads and writes of large volumes of time-series and other data through its use of inverted indexes and dynamic mapping. It is deployed on AWS for its elastic scalability, high availability, and integration with Spark via fast queries. Ongoing maintenance includes archiving old data, partitioning indices, and reindexing large datasets.

Spark real world use cases and optimizations

Spark real world use cases and optimizationsGal Marder This document provides an overview of Spark, its core abstraction of resilient distributed datasets (RDDs), and common transformations and actions. It discusses how Spark partitions and distributes data across a cluster, its lazy evaluation model, and the concept of dependencies between RDDs. Common use cases like word counting, bucketing user data, finding top results, and analytics reporting are demonstrated. Key topics covered include avoiding expensive shuffle operations, choosing optimal aggregation methods, and potentially caching data in memory.

Transformation Priority Premise @Softwerkskammer MUC

Transformation Priority Premise @Softwerkskammer MUCDavid Völkel The document discusses the "Transformation Priority Premise" strategy for test-driven development (TDD). It proposes a sequence of code transformations from simple to complex that can help guide developers to choose which tests and code to implement next. The sequence includes transformations like adding code that returns nil, replacing constants with variables, adding conditional statements, and extracting functions. Following this sequence and choosing tests that match higher priority transformations is intended to help developers make steady progress with TDD without getting stuck on large changes. However, the priorities presented are informal and their correctness and completeness require further discussion.

Tempest scenariotests 20140512

Tempest scenariotests 20140512Masayuki Igawa Tempest provides scenario tests that test integration between multiple OpenStack services by executing sequences of operations. Current scenario tests cover operations like boot instances, attach volumes, manage snapshots and check network connectivity. Running scenario tests helps operators validate their cloud and developers check for regressions. While useful, scenario tests have issues like needing more test coverage, complex configuration, and difficulty analyzing failures. The future includes making scenario tests easier to use without command line skills and more flexible in specifying test environments.

Optimizing Laravel for content heavy and high-traffic websites by Man, Apples...

appleseeds-my: The document discusses various techniques for optimizing Laravel applications for content-heavy and high-traffic websites. It covers optimizing at the language level, framework level, and infrastructure level. Key optimizations include route and config caching, optimizing class autoloading, removing unused libraries, using raw SQL instead of the Eloquent ORM for complex queries, solving the N+1 query problem through eager loading, and implementing caching through middleware and infrastructure like Nginx.

Viewers also liked

Gradle.Enemy at the gates

Strannik_2013: Gradle is a flexible, general-purpose build automation tool that improves upon existing build tools like Ant, Maven, and Ivy. It uses Groovy as its configuration language, allowing builds to be written more clearly and concisely than in XML formats. Gradle aims to provide the flexibility of Ant, the dependency management and conventions of Maven, and the speed of Git. It handles tasks, dependencies, plugins, and multi-project builds. Gradle configurations map closely to Maven scopes, and it has good support for plugins, testing, caching, and integration with tools like Ant and Maven.

Effective Java applications

Strannik_2013: This presentation covers the effectiveness of Java applications. It contains many code samples that were measured with the JMH library; for some of them, optimizations were applied to improve performance.

JSF 2: Myth of panacea? Magic world of user interfaces

Strannik_2013: A presentation about the JSF 2 framework, its advantages and shortcomings.

Additional information about RichFaces usage

Getting ready to java 8

Strannik_2013:

1. Lambda expressions

2. Streams API

3. Changes in interfaces

4. Nashorn

5. Optional

6. Java time

7. Accumulators
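Several items in this list fit into one small Java 8 sketch. It is an illustration, not material from the talk: it assumes "Accumulators" refers to `java.util.concurrent.atomic.LongAccumulator` (and its sibling `LongAdder`), it skips items 3 and 4, and the class and method names are hypothetical.

```java
import java.time.LocalDate;
import java.time.Period;
import java.util.Arrays;
import java.util.List;
import java.util.Optional;
import java.util.concurrent.atomic.LongAccumulator;
import java.util.stream.Collectors;

public class Java8Tour {

    // 1 + 2: lambdas and a method reference passed to the Streams API
    static List<String> longNamesUpper(List<String> names) {
        return names.stream()
                .filter(n -> n.length() > 3)
                .map(String::toUpperCase)
                .collect(Collectors.toList());
    }

    // 5: Optional makes "no match" explicit instead of returning null
    static Optional<String> firstStartingWith(List<String> names, String prefix) {
        return names.stream().filter(n -> n.startsWith(prefix)).findFirst();
    }

    // 6: java.time types are immutable value objects
    static Period periodAfterDays(LocalDate start, long days) {
        return Period.between(start, start.plusDays(days));
    }

    // 7: LongAccumulator folds concurrent updates with an associative function (here: max)
    static long maxLength(List<String> names) {
        LongAccumulator max = new LongAccumulator(Long::max, Long.MIN_VALUE);
        names.parallelStream().forEach(n -> max.accumulate(n.length()));
        return max.get();
    }

    public static void main(String[] args) {
        List<String> names = Arrays.asList("Ann", "Bob", "Carol", "Dave");
        System.out.println(longNamesUpper(names));                            // [CAROL, DAVE]
        System.out.println(firstStartingWith(names, "B").orElse("none"));     // Bob
        System.out.println(periodAfterDays(LocalDate.of(2016, 1, 1), 45));    // P1M14D
        System.out.println(maxLength(names));                                 // 5
    }
}
```

`LongAccumulator` is designed for many threads updating one value, which is why the sketch feeds it from a parallel stream; for a single-threaded loop a plain local variable would be simpler.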

Java 8 in action.Jinq

Strannik_2013: This document provides an overview and agenda for a presentation on Java 8 features and the Jinq library. It discusses Java 8 language features like lambda expressions and default methods. It then describes Jinq, an open-source library that allows functional-style database queries in Java by translating Java code into SQL. The document outlines how Jinq works, its configuration, supported query operations like filtering, sorting and joins, and its limitations. It also briefly mentions alternative libraries like jOOQ.

Gradle 2.Write once, builde everywhere

Strannik_2013: The document discusses Gradle, an open-source build automation tool. It provides an overview of Gradle, including key features like flexible configuration, the Groovy DSL, and improvements over Ant, Maven, and Ivy. The document also covers Gradle basics like projects, tasks, dependencies, plugins, and testing. It compares Gradle to Maven and Ant and discusses how to integrate with them. The author concludes with the pros and cons of Gradle along with its future outlook.

Gradle 2.Breaking stereotypes

Strannik_2013: This document summarizes the key points about the Gradle build automation tool. It discusses some limitations of Ant and Maven and how Gradle addresses them by using Groovy as its configuration language. Gradle provides features like caching, a daemon, plugins, and integration with Maven. It offers better performance than Maven for multi-project builds. The document compares Gradle and Maven build times on sample projects and outlines some pros and cons of Gradle.

Top 10 reasons to migrate to Gradle

Strannik_2013: Top 10 reasons to migrate to Gradle from other existing build systems (Ant, Maven):

Actuality

Programmability

Compactness

JVM-based and Java-based

DSL and API

Plugins

Integration

Configurations

Flexibility

Performance

Git.From thorns to the stars

Strannik_2013: This document provides a summary of Git and its features:

Git is a distributed version control system designed by Linus Torvalds for tracking changes in source code during software development. It allows developers to work simultaneously and merge their changes. Key features include rapid branching and merging, distributed development, and strong integrity and consistency. Git stores content-addressed objects in its database and uses SHA-1 hashes to identify content.
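The content addressing mentioned above can be illustrated in a few lines of Java. This is a sketch, not code from the listed presentation: it assumes a repository in Git's default SHA-1 object format, where a blob's id is the SHA-1 of the header `blob <byte length>` plus a NUL byte, followed by the file's raw bytes. The class name `GitBlobId` is hypothetical.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

public class GitBlobId {

    /** Computes the object id Git (SHA-1 format) would assign to a blob with this content. */
    static String blobId(byte[] content) throws NoSuchAlgorithmException {
        MessageDigest sha1 = MessageDigest.getInstance("SHA-1");
        // Git hashes a header "blob <size>\0" followed by the raw bytes.
        byte[] header = ("blob " + content.length + "\0").getBytes(StandardCharsets.US_ASCII);
        sha1.update(header);
        sha1.update(content);
        // Render the 20-byte digest as 40 lowercase hex characters.
        StringBuilder hex = new StringBuilder();
        for (byte b : sha1.digest()) {
            hex.append(String.format("%02x", b));
        }
        return hex.toString();
    }

    public static void main(String[] args) throws NoSuchAlgorithmException {
        // The empty blob; `git hash-object /dev/null` prints the same id.
        System.out.println(blobId(new byte[0])); // e69de29bb2d1d6434b8b29ae775ad8c2e48c5391
    }
}
```

Because the id depends only on the bytes, identical files anywhere in the history map to a single stored object, and any corruption of the stored bytes shows up as a hash mismatch.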

Spring Web flow. A little flow of happiness

Strannik_2013: Spring Web Flow is a framework that introduces the concept of flows to extend the navigation capabilities of the Spring MVC framework. It allows expressing navigation rules and managing conversational state through flow definitions composed of states and transitions. This provides advantages over traditional approaches like JSP, Struts, and JSF by making the navigation logic more modular, reusable, and visually understandable through tools like the Spring Tool Suite flow editor. While it adds capabilities, it also introduces some performance overhead and complexity that may not suit all applications.

Spring Boot. Boot up your development

Strannik_2013: Spring Boot allows developers to create stand-alone, production-grade Spring-based applications that can be launched from a plain `main()` method or with `java -jar`, without the need for XML configuration. It embeds Tomcat or Jetty servers directly and handles automatic configuration and dependencies. Spring Boot includes features like starters for common dependencies, hot swapping of code without restarts, and Actuator endpoints for monitoring and management.

Spring Web Flow. A little flow of happiness.

Alex Tumanoff: Spring Web Flow provides a framework for modeling complex navigation flows in web applications. It introduces the concept of flows, which define navigation through a series of states. States can include view states, action states, and decision states. Variables can be defined and shared across scopes like request, view, flow, and conversation. SWF integrates with Spring MVC and other frameworks and aims to simplify navigation management compared to alternatives.

Serialization and performance in Java

Strannik_2013: This document summarizes a presentation on serialization frameworks and their performance. It discusses the purpose of serialization, common data formats like binary, XML, and JSON, and several Java serialization frameworks, including Java serialization, Kryo, Protocol Buffers, Jackson, Google Gson, and others. Benchmark results show that Kryo and Protocol Buffers generally perform best in serialization and deserialization speed as well as output size. The document recommends when to use each framework based on use cases and priorities for speed, size, or flexibility.
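As a baseline for the frameworks compared above, here is a hedged sketch of the JDK's own serialization (`java.io.ObjectOutputStream` / `ObjectInputStream`) round-tripping a small object through a byte array. The `Point` class and helper names are hypothetical; the printed byte count depends on class and field names, so no figure is claimed here.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.Serializable;

public class JavaSerializationDemo {

    // Hypothetical payload type; implementing Serializable opts it into built-in serialization.
    static class Point implements Serializable {
        private static final long serialVersionUID = 1L;
        final int x;
        final int y;

        Point(int x, int y) {
            this.x = x;
            this.y = y;
        }
    }

    static byte[] serialize(Object value) throws IOException {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
            out.writeObject(value);
        }
        return bytes.toByteArray();
    }

    static Object deserialize(byte[] data) throws IOException, ClassNotFoundException {
        try (ObjectInputStream in = new ObjectInputStream(new ByteArrayInputStream(data))) {
            return in.readObject();
        }
    }

    public static void main(String[] args) throws Exception {
        byte[] data = serialize(new Point(3, 4));
        Point copy = (Point) deserialize(data);
        // The stream carries a header and a class descriptor, not just the two int fields.
        System.out.println(copy.x + "," + copy.y + " in " + data.length + " bytes");
    }
}
```

The class metadata in every stream is one reason compact formats such as Kryo or Protocol Buffers produce smaller output; also note that `ObjectInputStream` should never be used on untrusted input.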

Spring Boot. Boot up your development. JEEConf 2015

Strannik_2013: This document discusses Spring Boot, an open-source framework for building microservices and web applications. It provides an overview of Spring Boot's key features like embedded servers, auto-configuration, starters for common dependencies, and production monitoring with Spring Boot Actuator. The document also covers configuration, customization, and security, and compares Spring Boot to alternatives like Dropwizard.

Spring.Boot up your development

Strannik_2013: This document discusses Spring Boot, a framework for creating stand-alone, production-grade Spring-based applications that can be "just run". Spring Boot focuses on sensible default configurations and automatic configuration so that developers can focus on the business problem rather than infrastructure. It provides features like embedded Tomcat/Jetty servers, auto-configuration of Spring and third-party libraries, and Actuator endpoints for monitoring apps, and it works with properties files, environment variables, and JNDI. The document also covers Spring configuration, annotations, issues, Groovy, environment configuration, initialization, auto-configuration classes, properties, and the health and metrics endpoints of Spring Boot Actuator.

Junior,middle,senior?

Strannik_2013: How do you determine a candidate's level in an interview?

How can you do that quickly and precisely?

How do you find out your own technical level, improve it, and build a successful IT career? I try to answer all these questions in my talk.

Similar to Effectiveness and code optimization in Java

Java/Scala Lab 2016. Сергей Моренец: Ways to improve effectiveness in Java 8.

GeeksLab Odessa: 16.4.16 Java/Scala Lab

Upcoming events: goo.gl/I2gJ4H

Code effectiveness and optimization often get little attention during project development. As a result, performance problems surface only at the final testing or deployment stage, when they are much harder to fix: the application either consumes too many resources or runs too slowly. We will look at the main mistakes Java developers make when writing code, and I will give advice on how to make your code more effective. All examples in the presentation will be explained in detail.

Analyzing and Interpreting AWR

pasalapudi: This document provides an overview and interpretation of the Automatic Workload Repository (AWR) report in Oracle Database. Some key points:

- AWR collects snapshots of database metrics and performance data every 60 minutes by default and retains them for 7 days. This data is used by tools like ADDM for self-management and diagnosing issues.

- The top timed waits in the AWR report usually indicate where to focus tuning efforts. Common waits include I/O waits, buffer busy waits, and enqueue waits.

- Other useful AWR metrics include parse/execute ratios, wait event distributions, and top activities to identify bottlenecks like parsing overhead, locking issues, or inefficient SQL.

Apache con 2020 use cases and optimizations of iotdb

ZhangZhengming: This document summarizes a presentation about IoTDB, an open-source time series database optimized for IoT data. It discusses IoTDB's architecture, use cases, optimizations, and common questions. Key points include that IoTDB uses a time-oriented storage engine and a tree-structured schema to efficiently store and query IoT sensor data, and that optimizations such as schema design, memory allocation, and handling of out-of-order data can improve performance. Common issues addressed relate to version compatibility, system load, and error conditions.

Searching Algorithms

Afaq Mansoor Khan: Linear search examines the elements of a list one by one and checks whether each is the target value. It has a time complexity of O(n), because in the worst case it must inspect every element. While simple to implement, linear search is inefficient for large lists; algorithms such as binary search, which works on sorted data, need far fewer comparisons (O(log n)).
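To make the comparison concrete, here is a minimal Java sketch (not taken from the listed presentation) of both searches on an `int` array. The class and method names are hypothetical, and `binarySearch` assumes the array is already sorted in ascending order.

```java
import java.util.Arrays;

/** Hypothetical helper contrasting linear and binary search on int arrays. */
public class SearchDemo {

    /** Returns the index of target, or -1; checks elements one by one (O(n)). */
    static int linearSearch(int[] data, int target) {
        for (int i = 0; i < data.length; i++) {
            if (data[i] == target) {
                return i;
            }
        }
        return -1;
    }

    /** Returns the index of target in an ascending-sorted array, or -1 (O(log n)). */
    static int binarySearch(int[] sorted, int target) {
        int low = 0;
        int high = sorted.length - 1;
        while (low <= high) {
            int mid = (low + high) >>> 1; // unsigned shift avoids int overflow
            if (sorted[mid] < target) {
                low = mid + 1;            // target can only be in the upper half
            } else if (sorted[mid] > target) {
                high = mid - 1;           // target can only be in the lower half
            } else {
                return mid;
            }
        }
        return -1;
    }

    public static void main(String[] args) {
        int[] data = {2, 3, 5, 7, 11, 13, 17};
        System.out.println(linearSearch(data, 11));        // 4
        System.out.println(binarySearch(data, 11));        // 4
        System.out.println(Arrays.binarySearch(data, 11)); // 4 (JDK built-in)
    }
}
```

In real code, `java.util.Arrays.binarySearch` and `Collections.binarySearch` already cover the sorted case. Sorting first costs O(n log n), so binary search mainly pays off when the same data is searched many times.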

EKON 23 Code_review_checklist

Max Kleiner: Code Review Checklist: how far should a code review go? "Metrics measure the design of code after it has been written, a review checks it, and refactoring improves it."

This paper presents a document structure and tips for a code review.

Some checks fit with your existing tools and simply raise a flag when the quality or security of your codebase is impaired.

Adam Sitnik "State of the .NET Performance"

Yulia Tsisyk: MSK DOT NET #5

2016-12-07

In this talk, Adam describes how the latest changes in .NET affect performance.

Adam plans to cover:

C# 7: ref locals and ref returns, ValueTuples.

.NET Core: Spans, Buffers, ValueTasks

And how all of these things help build zero-copy streams aka Channels/Pipelines which are going to be a game changer in the next year.

State of the .Net Performance

CUSTIS: This document summarizes Adam Sitnik's presentation on .NET performance. It discusses new features in C# 7 like ValueTuple, ref returns and ref locals, and Span. It also covers .NET Core improvements such as ArrayPool and ValueTask that reduce allocations. The presentation uses benchmarks to show how these features improve performance and reduce GC pressure. It provides examples and guidance on how best to use new features like Span, pipelines, and unsafe code.

Large scalecplex

optimizatiodirectdirect:

- Optimization Direct is an IBM business partner that sells CPLEX optimization software and provides consulting services to help customers implement optimization solutions and maximize the benefits of IBM's software.

- The document discusses how to address modeling and optimization challenges for very large optimization models, including exploiting sparsity, tightening formulations, tuning the optimizer, and using heuristics to find good solutions within time limits.

- As an example, the document describes a heuristic scheduling approach that delivers solutions within 12-16% optimality gaps for large scheduling models that cannot be solved directly, outperforming serial solutions and providing speedups of 2-8x when run in parallel.

.NET Fest 2019. Николай Балакин. Micro-optimizations in the .NET world

NETFest: What do you do when everything that can be cached already is, and the code is still slow? In this talk we will discuss how some low-level .NET mechanisms work and how we can use them to win precious seconds when every single CPU cycle counts.

Java 8

Raghda Salah: The document discusses the new features introduced in Java 8, including lambda expressions, method references, streams, default methods, the new date and time API, and the Nashorn JavaScript engine. Lambda expressions remove much of the need for anonymous inner classes. Method references provide a simpler way to refer to existing methods. Streams enable functional-style, optionally parallel, processing of data. Default methods allow adding new methods to interfaces without breaking existing implementations. The date and time API improves on the previous APIs by being immutable, thread-safe, and more intuitive. The Nashorn engine allows embedding JavaScript in Java applications.
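Two of these features, default methods and method references, fit in a short sketch. The `Greeter` interface and `FixedGreeter` class are hypothetical: imagine `greet()` was added to the interface after `FixedGreeter` had already been written.

```java
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;

public class DefaultMethodDemo {

    // An interface that gained greet() later; the default body means
    // existing implementations keep compiling without changes.
    interface Greeter {
        String name();

        default String greet() {
            return "Hello, " + name();
        }
    }

    // A pre-existing implementation that knows nothing about greet().
    static class FixedGreeter implements Greeter {
        private final String name;

        FixedGreeter(String name) {
            this.name = name;
        }

        @Override
        public String name() {
            return name;
        }
    }

    static List<String> greetAll(List<String> names) {
        return names.stream()
                .map(FixedGreeter::new)  // constructor reference instead of n -> new FixedGreeter(n)
                .map(Greeter::greet)     // method reference instead of g -> g.greet()
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        System.out.println(greetAll(Arrays.asList("Ann", "Bob"))); // [Hello, Ann, Hello, Bob]
    }
}
```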

In memory databases presentation

Michael Keane: This document provides an overview of in-memory databases, summarizing different types, including row stores, column stores, and compressed column stores, and how specific databases like SQLite, Excel, Tableau, Qlik, MonetDB, SQL Server, Oracle, SAP HANA, MemSQL, and others approach in-memory storage. It also discusses hardware considerations like GPUs, FPGAs, and new memory technologies that could enhance in-memory database performance.

Performance Testing Java Applications

C4Media: Video and slides synchronized; mp3 and slide download available at http://bit.ly/14w07bK.

Martin Thompson explores performance testing, how to avoid the common pitfalls, how to profile when the results cause your team to pull a funny face, and what you can do about that funny face. Specific issues to Java and managed runtimes in general will be explored, but if other languages are your poison, don't be put off as much of the content can be applied to any development. Filmed at qconlondon.com.

Martin Thompson is a high-performance and low-latency specialist, with over two decades working with large scale transactional and big-data systems, in the automotive, gaming, financial, mobile, and content management domains. Martin was the co-founder and CTO of LMAX, until he left to specialize in helping other people achieve great performance with their software.

NYJavaSIG - Big Data Microservices w/ Speedment

Speedment, Inc.: Microservices solutions can provide fast access to large datasets by synchronizing SQL data into an in-JVM memory store and using key-value and column key stores. This allows querying terabytes of data in microseconds by mapping the data in memory and exposing it through application programming interfaces. The solution uses periodic synchronization to initially load and periodically reload data, as well as reactive synchronization to capture and replay database changes.

Intro to Time Series

InfluxData: This document provides an introduction to time series data and InfluxDB. It defines time series data as measurements taken from the same source over time that can be plotted on a graph with time as one axis. Examples of time series data include weather, stock prices, and server metrics. Time series databases like InfluxDB are optimized for storing and processing huge volumes of time series data with high performance. InfluxDB uses a simple data model where points consist of measurements, tags, fields, and timestamps.
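The point model described above (measurement, tags, fields, timestamp) is what InfluxDB's text "line protocol" encodes. The sketch below is simplified and hypothetical: it assumes float-only fields, keys and values that need no escaping, at least one field, and a nanosecond timestamp; real clients also handle integer, string, and boolean field types. The class name `LinePoint` is an illustration only.

```java
import java.util.Map;
import java.util.StringJoiner;
import java.util.TreeMap;

public class LinePoint {

    /**
     * Formats one point as: measurement,tagKey=tagValue fieldKey=fieldValue timestamp
     * Tags and fields are sorted by key (via TreeMap) so the output is deterministic.
     */
    static String toLine(String measurement, Map<String, String> tags,
                         Map<String, Double> fields, long timestampNanos) {
        StringBuilder line = new StringBuilder(measurement);
        for (Map.Entry<String, String> tag : new TreeMap<>(tags).entrySet()) {
            line.append(',').append(tag.getKey()).append('=').append(tag.getValue());
        }
        StringJoiner fieldPart = new StringJoiner(",");
        for (Map.Entry<String, Double> field : new TreeMap<>(fields).entrySet()) {
            fieldPart.add(field.getKey() + "=" + field.getValue());
        }
        return line.append(' ').append(fieldPart).append(' ').append(timestampNanos).toString();
    }

    public static void main(String[] args) {
        Map<String, String> tags = new TreeMap<>();
        tags.put("host", "server01");
        Map<String, Double> fields = new TreeMap<>();
        fields.put("usage_idle", 97.5);
        System.out.println(toLine("cpu", tags, fields, 1465839830100400200L));
        // cpu,host=server01 usage_idle=97.5 1465839830100400200
    }
}
```

Tags are indexed strings used for filtering and grouping, while fields hold the measured values; that split is why they appear in separate sections of the line.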

Benchmarking Solr Performance at Scale

thelabdude: Organizations continue to adopt Solr because of its ability to scale to meet even the most demanding workflows. Recently, LucidWorks has been leading the effort to identify, measure, and expand the limits of Solr. As part of this effort, we've learned a few things along the way that should prove useful for any organization wanting to scale Solr. Attendees will come away with a better understanding of how sharding and replication impact performance. Also, no benchmark is useful without being repeatable; Tim will also cover how to perform similar tests using the Solr-Scale-Toolkit in Amazon EC2.

BIRTE-13-Kawashima

Hideyuki Kawashima: This paper proposes a multiple query optimization (MQO) scheme for change point detection (CPD) that can significantly reduce the number of operators needed. CPD is used to detect anomalies in time series data but requires tuning parameters, which leads to running multiple CPDs with different parameters. The paper identifies four patterns for sharing CPD operators between queries based on whether parameter values are the same. Experiments show the proposed MQO approach reduces the number of operators by up to 80% compared to running each CPD independently, thus improving performance. Integrating MQO with hardware accelerators is suggested as future work.

Databases Have Forgotten About Single Node Performance, A Wrongheaded Trade Off

Timescale: The earliest relational databases were monolithic on-premise systems that were powerful and full-featured. Fast forward to the Internet and NoSQL: BigTable, DynamoDB and Cassandra. These distributed systems were built to scale out for ballooning user bases and operations. As more and more companies vied to be the next Google, Amazon, or Facebook, they too "required" horizontal scalability.

But in a real way, NoSQL and even NewSQL have forgotten single node performance where scaling out isn't an option. And single node performance is important because it allows you to do more with much less. With a smaller footprint and simpler stack, overhead decreases and your application can still scale.

In this talk, we describe TimescaleDB's methods for single node performance. The nature of time-series workloads and how data is partitioned allows users to elastically scale up even on single machines, which provides operational ease and architectural simplicity, especially in cloud environments.

Oracle Performance Tuning Fundamentals

Enkitec: Any DBA, from beginner to advanced level, who wants to fill in some gaps in his or her knowledge about performance tuning on an Oracle Database will benefit from this workshop.

CPLEX Optimization Studio, Modeling, Theory, Best Practices and Case Studies

optimizatiodirectdirect: Recent advancements in linear and mixed-integer programming give us the capability to solve larger optimization problems. CPLEX Optimization Studio solves large-scale optimization problems and enables better business decisions and resulting financial benefits in areas such as supply chain management, operations, healthcare, retail, transportation, logistics, and asset management. In this workshop, using CPLEX Optimization Studio, we will discuss modeling practices and case studies and demonstrate good practices for solving hard optimization problems. We will also discuss recent CPLEX performance improvements and newly added features.

Predicting Optimal Parallelism for Data Analytics

Databricks: A key benefit of serverless computing is that resources can be allocated on demand, but the quantity of resources to request, and allocate, for a job can profoundly impact its running time and cost. For a job that has not yet run, how can we provide users with an estimate of how the job’s performance changes with provisioned resources, so that users can make an informed choice upfront about cost-performance tradeoffs?

This talk will describe several related research efforts at Microsoft to address this question. We focus on optimizing the amount of computational resources that control a data analytics query’s achieved intra-parallelism. These use machine learning models on query characteristics to predict the run time or Performance Characteristic Curve (PCC) as a function of the maximum parallelism that the query will be allowed to exploit.

The AutoToken project uses models to predict the peak number of tokens (resource units) that is determined by the maximum parallelism that the recurring SCOPE job can ever exploit while running in Cosmos, an Exascale Big Data analytics platform at Microsoft. AutoToken_vNext, or TASQ, predicts the PCC as a function of the number of allocated tokens (limited parallelism). The AutoExecutor project uses models to predict the PCC for Apache Spark SQL queries as a function of the number of executors. The AutoDOP project uses models to predict the run time for SQL Server analytics queries, running on a single machine, as a function of their maximum allowed Degree Of Parallelism (DOP).

We will present our approaches and prediction results for these scenarios, discuss some common challenges that we handled, and outline some open research questions in this space.

Recently uploaded

Adobe Marketo Engage Champion Deep Dive - SFDC CRM Synch V2 & Usage Dashboards

BradBedford3: Join Ajay Sarpal and Miray Vu to learn about key Marketo Engage enhancements. Discover improved in-app Salesforce CRM connector statistics for easy monitoring of sync health and throughput. Explore new Salesforce CRM Synch Dashboards providing up-to-date insights into weekly activity usage, thresholds, and limits with drill-down capabilities. Learn about proactive notifications for both Salesforce CRM sync and product usage overages. Get an update on improved Salesforce CRM synch scale and reliability coming in Q2 2025.

Key Takeaways:

Improved Salesforce CRM User Experience: Learn how self-service visibility enhances satisfaction.

Utilize Salesforce CRM Synch Dashboards: Explore real-time weekly activity data.

Monitor Performance Against Limits: See threshold limits for each product level.

Get Usage Over-Limit Alerts: Receive notifications for exceeding thresholds.

Learn About Improved Salesforce CRM Scale: Understand upcoming cloud-based incremental sync.

Agentic AI Use Cases using GenAI LLM models

Manish Chopra: This document presents specific use cases for Agentic AI (Artificial Intelligence), featuring Large Language Models (LLMs), Generative AI, and snippets of Python code alongside each use case.

Landscape of Requirements Engineering for/by AI through Literature Review

Hironori Washizaki: Hironori Washizaki, "Landscape of Requirements Engineering for/by AI through Literature Review," RAISE 2025: Workshop on Requirements engineering for AI-powered SoftwarE, 2025.


💖http://drfiles.net/

Adobe Photoshop is a widely-used, professional-grade software for digital image editing and graphic design. It allows users to create, manipulate, and edit raster images, which are pixel-based, and is known for its extensive tools and capabilities for photo retouching, compositing, and creating intricate visual effects.


Effectiveness and code optimization in Java

- 1. Effectiveness and code optimization in Java. Sergey Morenets, December 4, 2015

- 2. About the author • Works in IT since 2000 • 12 years of Java SE/EE experience • Regular speaker at Java conferences • Author of the books “Development of Java applications” and “Main errors in Java programming” • Founder of http://it-simulator.com

- 3. Preface

- 4. Agenda

- 5. Agenda • What is effectiveness? • Code optimization • JVM optimization • Code samples • Measurements

- 6. Ideal code • Concise • Readable • Self-describing • Reusable • Testable • Modern • Flexible • Scalable • Effective

- 7. Effectiveness • Hard to assess during code/design review or in unit tests • Specific to a particular project configuration • Cannot be determined in the development environment • Depends on the application environment • Premature optimization is evil • Hardware-specific • The only aspect of ideal code that directly affects users

- 14. Effectiveness • Can be measured • Can be static or dynamic • Can be tuned

- 15. Tuning • JVM options • Metaspace/heap/stack size • Garbage collector options • http://blog.sokolenko.me/2014/11/javavm-options-production.html • http://www.javaspecialists.eu/
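As a minimal sketch of where these settings end up, the class below (a hypothetical `JvmSettings`, not from the slides) prints the options the JVM was started with and the resulting heap limit, using only the standard `java.lang.management` API. Running it with the flags being tuned, for example `java -Xmx512m -Xss1m -XX:MaxMetaspaceSize=256m JvmSettings`, is a quick way to confirm they were actually applied.

```java
import java.lang.management.ManagementFactory;
import java.util.List;

public class JvmSettings {
    // Options passed to the JVM itself (not program arguments), e.g. [-Xmx512m, -Xss1m]
    static List<String> jvmArguments() {
        return ManagementFactory.getRuntimeMXBean().getInputArguments();
    }

    // Maximum heap size in MB; roughly reflects -Xmx when it is set
    static long maxHeapMegabytes() {
        return Runtime.getRuntime().maxMemory() / (1024 * 1024);
    }

    public static void main(String[] args) {
        System.out.println("JVM arguments: " + jvmArguments());
        System.out.println("Max heap (MB): " + maxHeapMegabytes());
        System.out.println("Available processors: " + Runtime.getRuntime().availableProcessors());
    }
}
```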

- 16. Code optimization • Java compiler • JIT compiler • JVM

- 18. Code optimization
  ```
  public int execute();
    Code:
       0: iconst_2
       1: istore_1
       2: iinc          1, 1
       5: iconst_1
       6: ireturn
  ```

- 20. Code optimization
  ```
  public int execute();
    Code:
       0: iconst_2
       1: istore_1
       2: iconst_1
       3: ireturn
  ```

- 22. Code optimization
  ```
  public void execute();
    Code:
       0: return
  ```

- 24. Code optimization
  ```
  public static boolean get();
    Code:
       0: iconst_1
       1: ireturn
  ```

- 26. Code optimization
  ```
  public void execute();
    Code:
       0: return
  ```

- 28. Code optimization
  ```
  public int execute();
    Code:
       0: iconst_2
       1: istore_1
       2: iconst_4
       3: istore_2
       4: iload_1
       5: iload_2
       6: iadd
       7: ireturn
  ```

- 30. Code optimization
  ```
  public int execute();
    Code:
       0: bipush        6
       2: ireturn
  ```

- 32. Code optimization
  ```
  public int execute();
    Code:
       0: bipush        12
       2: ireturn
  ```

- 33. Java compiler • Dead code elimination • Constant folding • Fixed expression calculation
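The slides show only the `javap -c` output, not the source that produced it. The sketch below is an assumption about the kind of source involved, with hypothetical names, showing the three techniques listed on slide 33. After compiling it, `javap -c CompilerOptimizations` should show the loads and `iadd` kept in `notFolded`, a single constant push in `folded` and `literalExpression`, and a bare `return` in `deadCode`, although exact output may vary with the javac version and flags.

```java
public class CompilerOptimizations {
    // A compile-time constant used as a flag: javac omits the guarded
    // block entirely (the conditional-compilation idiom)
    private static final boolean DEBUG = false;

    // Plain locals are not constant variables, so javac keeps
    // the stores, the loads and the iadd instruction
    public int notFolded() {
        int a = 2;
        int b = 4;
        return a + b;
    }

    // Final locals initialized with constants are constant variables,
    // so a + b is a constant expression that javac folds to 6
    public int folded() {
        final int a = 2;
        final int b = 4;
        return a + b;
    }

    // An expression made only of literals is also evaluated at compile time
    public int literalExpression() {
        return 3 * 4;
    }

    // Dead code elimination: the if-block is not emitted into the bytecode
    public void deadCode() {
        if (DEBUG) {
            System.out.println("Never compiled into the class file");
        }
    }

    public static void main(String[] args) {
        CompilerOptimizations c = new CompilerOptimizations();
        System.out.println(c.notFolded() + " " + c.folded() + " " + c.literalExpression());
        c.deadCode();
    }
}
```

The JIT compiler can apply similar optimizations at run time to code that javac leaves alone, such as `notFolded`, which is one reason bytecode alone says little about final performance.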

- 34. Measurements • JMH is a microbenchmarking framework • Developed by Oracle engineers • First released in 2013 • Requires a build tool (Maven, Gradle) • Can measure throughput or average time • Includes a warm-up period

- 35. Warm-up

- 36. Environment • JMH 1.11.1 • Maven 3.3.3 • JDK 1.8.0_65 • Intel Core i7, 4 cores, 16 GB RAM

- 37. Measurements

  | Type | Time (ns) |
  |---|---|
  | Multiply by 4 | 2.025 |
  | Shift | 2.024 |

- 38. Measurements

  | Type | Time (ns) |
  |---|---|
  | Multiply by 17 | 2.04 |
  | Shift | 2.04 |
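The two tables compare multiplication with an equivalent shift. The slides do not show the code; the sketch below assumes the shift variants were `x << 2` for multiplying by 4 and `(x << 4) + x` for multiplying by 17, and the class name is hypothetical. For `int`, both forms give identical results even on overflow, because Java integer arithmetic wraps modulo 2^32.

```java
public class MultiplyVsShift {
    static int multiplyBy4(int x) { return x * 4; }

    // x << 2 multiplies by 2^2 = 4; int arithmetic wraps modulo 2^32,
    // so the result matches x * 4 for negative values and on overflow
    static int shiftBy4(int x) { return x << 2; }

    static int multiplyBy17(int x) { return x * 17; }

    // 17 = 16 + 1, so x * 17 == (x << 4) + x under the same wrapping rules
    static int shiftBy17(int x) { return (x << 4) + x; }

    public static void main(String[] args) {
        int[] samples = {0, 1, -3, 1000, Integer.MAX_VALUE, Integer.MIN_VALUE};
        for (int x : samples) {
            boolean same4 = multiplyBy4(x) == shiftBy4(x);
            boolean same17 = multiplyBy17(x) == shiftBy17(x);
            System.out.println(x + ": " + same4 + " " + same17);
        }
    }
}
```

Since the measured times are practically equal, hand-written shifts appear to buy nothing here while making the intent harder to read.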

- 39. Sample

- 40. Method vs Field

- 44. Increment

- 46. Swap

- 47. Measurements

  | Type | Time (ns) |
  |---|---|
  | Standard | 2.10 |
  | Addition | 2.08 |
  | XOR | 2.04 |
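The slides do not show the swap code. A minimal sketch, assuming the three variants swap two elements of an `int` array, could look like this; the class and method names are hypothetical. The measured difference is a few hundredths of a nanosecond, and the arithmetic tricks carry an aliasing pitfall that the standard version does not.

```java
import java.util.Arrays;

public class SwapVariants {
    // Standard swap through a temporary variable
    static void swapTemp(int[] a, int i, int j) {
        int tmp = a[i];
        a[i] = a[j];
        a[j] = tmp;
    }

    // Swap via addition and subtraction; int overflow wraps, so it stays correct
    static void swapAdd(int[] a, int i, int j) {
        if (i == j) return; // same slot: the second step would zero the element
        a[i] = a[i] + a[j];
        a[j] = a[i] - a[j];
        a[i] = a[i] - a[j];
    }

    // XOR swap; it has the same aliasing problem when i == j
    static void swapXor(int[] a, int i, int j) {
        if (i == j) return; // a[i] ^= a[i] would zero the element
        a[i] ^= a[j];
        a[j] ^= a[i];
        a[i] ^= a[j];
    }

    public static void main(String[] args) {
        int[] a = {1, 2};
        swapTemp(a, 0, 1);
        System.out.println(Arrays.toString(a)); // [2, 1]
        swapAdd(a, 0, 1);
        System.out.println(Arrays.toString(a)); // [1, 2]
        swapXor(a, 0, 1);
        System.out.println(Arrays.toString(a)); // [2, 1]
    }
}
```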

- 48. Conditions

- 49. Measurements

  | Type | Time (ns) |
  |---|---|
  | Ternary operator | 2.05 |
  | If statement | 2.03 |

- 50. Parsing

- 51. Measurements

  | Type | Time (ns) |
  |---|---|
  | Long usage (small numbers) | 3 |
  | Long usage (average numbers) | 19 |
  | Long usage (big numbers) | 33 |
  | Long usage (not a number) | 849 |
  | Regexp (small numbers) | 58 |
  | Regexp (average numbers) | 75 |
  | Regexp (big numbers) | 82 |
  | Regexp (not a number) | 47 |
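The table suggests that parsing with `Long` is fastest for valid input, while the "not a number" case is dominated by creating and throwing a `NumberFormatException`. The slide code is not shown; the sketch below assumes the two approaches were `Long.parseLong` inside a try/catch and a precompiled regular expression, with hypothetical names.

```java
import java.util.regex.Pattern;

public class NumberCheck {
    // Precompiled once: optional sign followed by ASCII digits.
    // It only checks the syntax, not whether the value fits in a long.
    private static final Pattern INTEGER = Pattern.compile("[+-]?\\d+");

    // Variant 1: let Long.parseLong decide; invalid input costs a thrown exception
    static boolean isNumberByParsing(String s) {
        try {
            Long.parseLong(s);
            return true;
        } catch (NumberFormatException e) {
            return false;
        }
    }

    // Variant 2: syntactic check with the regex, no exception on invalid input
    static boolean isNumberByRegex(String s) {
        return s != null && INTEGER.matcher(s).matches();
    }

    public static void main(String[] args) {
        for (String s : new String[] {"42", "-9000", "abc", "99999999999999999999"}) {
            System.out.println(s + ": parse=" + isNumberByParsing(s) + ", regex=" + isNumberByRegex(s));
        }
    }
}
```

The last sample shows the trade-off: the regex accepts a value that overflows `long`, so a check that must guarantee a parseable value still needs `Long.parseLong` (or a bound on the digit count).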

- 52. Copying arrays

- 53. Measurements

  | Type | Time (ns) |
  |---|---|
  | Loop (1000 elements) | 52.6 |
  | arrayCopy (1000 elements) | 45.6 |
  | Loop (100 000 elements) | 21666 |
  | arrayCopy (100 000 elements) | 21707 |
  | Loop (10 000 000 elements) | 6432557 |
  | arrayCopy (10 000 000 elements) | 6616976 |
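A minimal sketch of the two copying strategies, assuming "arrayCopy" refers to `System.arraycopy` (the class name is hypothetical). In these measurements `System.arraycopy` wins only for the small array; for large arrays both take about the same time, plausibly because memory bandwidth rather than the copy loop becomes the limit.

```java
import java.util.Arrays;

public class ArrayCopy {
    // Manual element-by-element copy
    static int[] copyWithLoop(int[] src) {
        int[] dst = new int[src.length];
        for (int i = 0; i < src.length; i++) {
            dst[i] = src[i];
        }
        return dst;
    }

    // Bulk copy: System.arraycopy(src, srcPos, dest, destPos, length)
    static int[] copyWithArraycopy(int[] src) {
        int[] dst = new int[src.length];
        System.arraycopy(src, 0, dst, 0, src.length);
        return dst;
    }

    public static void main(String[] args) {
        int[] src = new int[1000];
        Arrays.setAll(src, i -> i * i);
        System.out.println(Arrays.equals(copyWithLoop(src), copyWithArraycopy(src)));
        // Arrays.copyOf allocates the target array and copies in a single call
        System.out.println(Arrays.equals(src, Arrays.copyOf(src, src.length)));
    }
}
```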

- 55. Measurements

  | Type | Time (ns, unless marked ms) |
  |---|---|
  | Rows (10 elements) | 37 |
  | Columns (10 elements) | 67 |
  | Rows (100 elements) | 2954 |
  | Columns (100 elements) | 5567 |
  | Rows (1000 elements) | 264340 |
  | Columns (1000 elements) | 1300244 |
  | Rows (5000 elements) | 9.6 ms |
  | Columns (5000 elements) | 387 ms |
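The slide source is not shown. Assuming the Rows/Columns figures compare summing a square `int[][]` in row order versus column order, a sketch with hypothetical names is below. A Java `int[n][n]` is an array of `n` separate `int[]` row objects, so row order reads each row sequentially, while column order jumps to a different row array on every step; at 5000 elements the measured gap grows to about 40 times.

```java
public class MatrixTraversal {
    // Row order: the inner loop walks one contiguous int[] row (cache-friendly)
    static long sumByRows(int[][] m) {
        long sum = 0;
        for (int i = 0; i < m.length; i++) {
            for (int j = 0; j < m[i].length; j++) {
                sum += m[i][j];
            }
        }
        return sum;
    }

    // Column order: each inner step touches a different row array
    static long sumByColumns(int[][] m) {
        long sum = 0;
        int cols = m[0].length; // assumes a non-empty, rectangular matrix
        for (int j = 0; j < cols; j++) {
            for (int i = 0; i < m.length; i++) {
                sum += m[i][j];
            }
        }
        return sum;
    }

    public static void main(String[] args) {
        int n = 100;
        int[][] m = new int[n][n];
        for (int i = 0; i < n; i++) {
            for (int j = 0; j < n; j++) {
                m[i][j] = i + j;
            }
        }
        System.out.println(sumByRows(m) + " " + sumByColumns(m));
    }
}
```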

- 56. Strings

- 57. Measurements

  | Type | Time (ns) |
  |---|---|
  | `+` | 7.62 |
  | `concat` | 13.4 |
  | `StringBuffer` | 7.32 |
  | `StringBuilder` | 7.24 |
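A sketch of the four concatenation styles, assuming the benchmark joined a few short strings (the slide code is not shown; names are hypothetical). On JDK 8, javac compiles `+` into a `StringBuilder` append chain, which fits `+` landing close to `StringBuilder` in the table; `concat` is slowest here, and chaining it produces an intermediate `String` at each step.

```java
public class Concatenation {
    // javac 8 turns this into new StringBuilder().append(a).append(b).append(c).toString()
    // (Java 9 and later use invokedynamic-based concatenation instead)
    static String withPlus(String a, String b, String c) {
        return a + b + c;
    }

    // The first concat() returns an intermediate String that is discarded right away
    static String withConcat(String a, String b, String c) {
        return a.concat(b).concat(c);
    }

    // StringBuffer methods are synchronized
    static String withBuffer(String a, String b, String c) {
        return new StringBuffer().append(a).append(b).append(c).toString();
    }

    // StringBuilder offers the same API without synchronization
    static String withBuilder(String a, String b, String c) {
        return new StringBuilder().append(a).append(b).append(c).toString();
    }

    // In a loop, result += part would build a new String on every iteration;
    // a single StringBuilder reused across iterations avoids that
    static String joinAll(String[] parts) {
        StringBuilder sb = new StringBuilder();
        for (String part : parts) {
            sb.append(part);
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        System.out.println(withPlus("foo", "bar", "baz"));
        System.out.println(joinAll(new String[] {"a", "b", "c"}));
    }
}
```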

- 58. Maps

- 59. Measurements

  | Type | Time (ns) |
  |---|---|
  | Entries (10 pairs) | 30 |
  | Keys/Values (10 pairs) | 70 |
  | Entries (1000 pairs) | 2793 |
  | Keys/Values (1000 pairs) | 8798 |
  | Entries (200 000 pairs) | 237652 |
  | Keys/Values (200 000 pairs) | 350821 |
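Iterating entries was about 1.5 to 3 times faster in these measurements. A minimal sketch, assuming "Keys/Values" means iterating `keySet()` and calling `get()` for each key (the slide code is not shown; names are hypothetical): the key-based loop pays an extra hash lookup per element, which the entry-based loop avoids.

```java
import java.util.HashMap;
import java.util.Map;

public class MapIteration {
    // One pass: key and value come from the same Map.Entry
    static long sumViaEntries(Map<Integer, Integer> map) {
        long sum = 0;
        for (Map.Entry<Integer, Integer> e : map.entrySet()) {
            sum += (long) e.getKey() + e.getValue();
        }
        return sum;
    }

    // Iterating keys and calling get(): an extra lookup for every key
    static long sumViaKeys(Map<Integer, Integer> map) {
        long sum = 0;
        for (Integer key : map.keySet()) {
            sum += (long) key + map.get(key);
        }
        return sum;
    }

    public static void main(String[] args) {
        Map<Integer, Integer> map = new HashMap<>();
        for (int i = 0; i < 1000; i++) {
            map.put(i, 2 * i);
        }
        System.out.println(sumViaEntries(map) + " " + sumViaKeys(map));
    }
}
```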

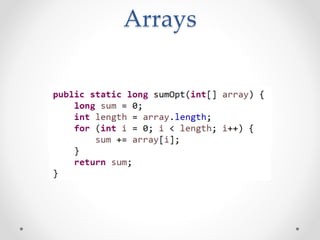

- 60. Arrays

- 61. Measurements

  | Type | Time (ns) |
  |---|---|
  | For (10 elements) | 4.9 |
  | For-each (10 elements) | 5.1 |
  | For (1000 elements) | 260 |
  | For-each (1000 elements) | 259.9 |
  | For (50000 elements) | 12957 |
  | For-each (50000 elements) | 12958 |

- 62. Arrays

- 63. Measurements

  | Type | Time (ns) |
  |---|---|
  | For (10 elements) | 5.04 |
  | For optimized (10 elements) | 5.07 |
  | For (1000 elements) | 258.9 |
  | For-each (1000 elements) | 258.7 |
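The near-identical timings on slides 61 and 63 are consistent with how javac compiles an enhanced `for` over an array: it becomes an indexed loop over a local copy of the array reference. The sketch below shows the three loop forms; treating "For optimized" as caching the array length in a local variable is an assumption, since the slide code is not shown, and all names are hypothetical.

```java
public class ArrayLoops {
    // Classic indexed loop
    static long sumFor(int[] a) {
        long sum = 0;
        for (int i = 0; i < a.length; i++) {
            sum += a[i];
        }
        return sum;
    }

    // Enhanced for: javac translates it into an indexed loop over the array
    static long sumForEach(int[] a) {
        long sum = 0;
        for (int x : a) {
            sum += x;
        }
        return sum;
    }

    // Hypothetical "optimized" variant: the length is cached in a local,
    // which the JIT can usually do on its own
    static long sumForCachedLength(int[] a) {
        long sum = 0;
        for (int i = 0, n = a.length; i < n; i++) {
            sum += a[i];
        }
        return sum;
    }

    public static void main(String[] args) {
        int[] data = new int[1000];
        for (int i = 0; i < data.length; i++) {
            data[i] = i + 1;
        }
        System.out.println(sumFor(data) + " " + sumForEach(data) + " " + sumForCachedLength(data));
    }
}
```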

- 64. Arrays

- 65. Measurements

  | Type | Time (ns) |
  |---|---|
  | Sequential (10 elements) | 5 |
  | Parallel (10 elements) | 6230 |
  | Sequential (1000 elements) | 263 |
  | Parallel (1000 elements) | 8688 |
  | Sequential (50000 elements) | 13115 |
  | Parallel (50000 elements) | 34695 |

- 66. Measurements

  | Type | Time (ns, unless marked ms) |
  |---|---|
  | Sequential (10 elements) | 5 |
  | Parallel (10 elements) | 6230 |
  | Sequential (1000 elements) | 263 |
  | Parallel (1000 elements) | 8688 |
  | Sequential (50000 elements) | 13115 |
  | Parallel (50000 elements) | 34695 |
  | Sequential (5 000 000 elements) | 1765206 |
  | Parallel (5 000 000 elements) | 2668564 |
  | Sequential (500 000 000 elements) | 183 ms |
  | Parallel (500 000 000 elements) | 174 ms |
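Here the parallel version loses until the array reaches 500 million elements, and even then wins only narrowly (174 ms vs 183 ms). A plausible explanation is the fixed cost of splitting the work, scheduling tasks on the common `ForkJoinPool`, and merging partial results. The sketch assumes the benchmark summed a primitive array with streams; the slide code is not shown and the names are hypothetical. It uses `long[]` so the sum cannot overflow.

```java
import java.util.Arrays;
import java.util.stream.LongStream;

public class StreamSum {
    static long sequentialSum(long[] data) {
        return Arrays.stream(data).sum();
    }

    // parallel() splits the array into chunks processed on the common
    // ForkJoinPool, then combines the partial sums
    static long parallelSum(long[] data) {
        return Arrays.stream(data).parallel().sum();
    }

    public static void main(String[] args) {
        long[] data = LongStream.rangeClosed(1, 1_000_000).toArray();
        System.out.println(sequentialSum(data) + " " + parallelSum(data));
    }
}
```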

- 67. Exceptions

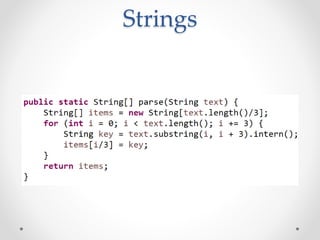

- 69. Strings

- 70. Strings

- 71. Measurements

  | Type | Time (ns) |
  |---|---|
  | Parse (1000 tokens) | 12239 |
  | Parse with intern (1000 tokens) | 72814 |
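Interning made parsing about six times slower in this measurement. The usual reason to pay that cost is memory: after `intern()`, equal tokens share one `String` instance, which pays off only when many duplicates are kept alive. A minimal sketch, assuming whitespace-separated tokens (the slide code is not shown; names are hypothetical):

```java
import java.util.ArrayList;
import java.util.List;

public class TokenParsing {
    // Split on whitespace and keep each substring as a separate String object
    static List<String> parse(String text) {
        List<String> tokens = new ArrayList<>();
        for (String token : text.split("\\s+")) {
            tokens.add(token);
        }
        return tokens;
    }

    // Same, but canonicalize each token through the JVM string pool:
    // equal tokens come back as the same instance
    static List<String> parseWithIntern(String text) {
        List<String> tokens = new ArrayList<>();
        for (String token : text.split("\\s+")) {
            tokens.add(token.intern());
        }
        return tokens;
    }

    public static void main(String[] args) {
        List<String> tokens = parseWithIntern("to be or not to be");
        System.out.println(tokens + " shared: " + (tokens.get(0) == tokens.get(4)));
    }
}
```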

- 72. Arrays

- 73. Bitset

- 74. Measurements

  | Type | Time (ns) |
  |---|---|
  | Fill array (1000 elements) | 148 |
  | Fill bit set (1000 elements) | 1520 |
  | Fill array (50 000 elements) | 4669 |
  | Fill bit set (50 000 elements) | 71395 |
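Filling a `BitSet` bit by bit was 10 to 15 times slower than filling an array. A sketch, assuming the array was a `boolean[]` filled element by element (the slide code is not shown; names are hypothetical): `BitSet` packs one flag per bit into `long` words, so it saves memory, but each `set(int)` call does index checks and bit masking. Setting a whole range with `set(from, to)` works a word at a time instead.

```java
import java.util.BitSet;

public class BitSetFill {
    // One array element per flag
    static boolean[] fillArray(int n) {
        boolean[] flags = new boolean[n];
        for (int i = 0; i < n; i++) {
            flags[i] = true;
        }
        return flags;
    }

    // One bit per flag; each set(i) call computes a word index and a bit mask
    static BitSet fillBitSet(int n) {
        BitSet bits = new BitSet(n);
        for (int i = 0; i < n; i++) {
            bits.set(i);
        }
        return bits;
    }

    // Bulk alternative: sets bits [0, n) in a single call
    static BitSet fillBitSetRange(int n) {
        BitSet bits = new BitSet(n);
        bits.set(0, n);
        return bits;
    }

    public static void main(String[] args) {
        System.out.println(fillBitSet(1000).cardinality() + " " + fillBitSetRange(1000).cardinality());
    }
}
```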

- 75. Bitset