Multiprocessor Scheduling

- 2. Introduction
  When a computer system contains more than one processor, several new issues arise in the design of scheduling functions. We will examine these issues and the details of scheduling algorithms for tightly coupled multiprocessor systems.

- 3. Classifications of Multiprocessor Systems
  - Loosely coupled or distributed multiprocessor, or cluster: each processor has its own memory and I/O channels
  - Functionally specialized processors, such as an I/O processor: controlled by a master processor
  - Tightly coupled multiprocessing: processors share main memory and are controlled by the operating system

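- 4. Interconnection Architectures (figure)
  - Shared Nothing (Loosely Coupled): each processor (P) has its own memory and disk, and the processors are linked by an interconnection network
  - Shared Memory (Tightly Coupled): the processors reach a global shared memory and the disks through the interconnection network
  - Shared Disk: each processor has its own memory, and all processors reach the disks through the interconnection network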

- 5. Granularity
  A good metric for characterizing multiprocessors and placing them in context with other architectures is the synchronization granularity, or frequency of synchronization, between processes in a system. Five categories of parallelism that differ in the degree of granularity can be defined:
  1. Independent parallelism
  2. Very coarse granularity
  3. Coarse granularity
  4. Medium granularity
  5. Fine granularity

- 6. Independent Parallelism
  With independent parallelism there is no explicit synchronization among processes. Each process represents a separate, independent application or job. This type of parallelism is typical of a time-sharing system. The multiprocessor provides the same service as a multiprogrammed uniprocessor. However, because more than one processor is available, average response times to the user tend to be shorter.

- 7. Coarse and Very Coarse-Grained Parallelism
  With coarse and very coarse-grained parallelism, there is synchronization among processes, but at a very gross level. This situation is easily handled as a set of concurrent processes running on a multiprogrammed uniprocessor and can be supported on a multiprocessor with little or no change to user software. Concurrent processes that need to communicate or synchronize can benefit from a multiprocessor architecture. When interaction among the processes is very infrequent, a distributed system can provide good support. If the interaction is somewhat more frequent, however, the overhead of communication across the network may negate some of the potential speedup; in that case, the multiprocessor organization provides the most effective support.

- 8. Medium-Grained and Fine-Grained Parallelism
  - Medium-grained parallelism: a single application is a collection of threads, and the threads usually interact frequently
  - Fine-grained parallelism: highly parallel applications; a specialized and fragmented area

- 9. Design Issues for Multiprocessor Scheduling
  Scheduling on a multiprocessor involves three interrelated issues:
  1. The assignment of processes to processors
  2. The use of multiprogramming on individual processors
  3. The actual dispatching of a process
  When looking at these three issues, keep in mind that the approach taken will generally depend on the degree of granularity of the applications and on the number of processors available.

- 10. 1: Assignment of Processes to Processors
  - Treat processors as a pooled resource and assign processes to processors on demand
  - Static assignment: permanently assign each process to one processor
    - A dedicated short-term queue is kept for each processor
    - Less scheduling overhead
    - Allows group or gang scheduling
    - Disadvantage: one processor could be idle while another processor has a backlog
  - Dynamic assignment: a global queue; processes are scheduled to any available processor
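The idle-versus-backlog trade-off between static and dynamic assignment can be made concrete with a small simulation. This is a minimal sketch under stated assumptions: all processes arrive at time 0, run non-preemptively, and have known burst lengths; the function names `run_static` and `run_global` are hypothetical.

```python
from collections import deque

def run_static(assignment, num_cpus):
    """Static assignment: each process is bound to one CPU's private queue.
    assignment is a list of (burst, cpu) pairs; returns (makespan, idle_time)."""
    finish = [0] * num_cpus
    for burst, cpu in assignment:
        finish[cpu] += burst          # a CPU only ever runs its own queue
    makespan = max(finish)
    idle = sum(makespan - f for f in finish)
    return makespan, idle

def run_global(bursts, num_cpus):
    """Dynamic assignment: one global FCFS queue; the first CPU to become
    free takes the next process. Returns (makespan, idle_time)."""
    queue = deque(bursts)
    free_at = [0] * num_cpus          # time at which each CPU becomes free
    while queue:
        cpu = min(range(num_cpus), key=lambda c: free_at[c])
        free_at[cpu] += queue.popleft()
    makespan = max(free_at)
    idle = sum(makespan - f for f in free_at)
    return makespan, idle

# Three of four equal jobs were statically bound to CPU 0.
print(run_static([(5, 0), (5, 0), (5, 0), (5, 1)], 2))  # (15, 10)
print(run_global([5, 5, 5, 5], 2))                       # (10, 0)
```

With the unlucky static binding, CPU 1 sits idle for 10 time units while CPU 0 works through its backlog. The global queue keeps both processors busy, at the cost of contention on a shared structure.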

- 11. Assignment of Processes to Processors
  - Master/slave architecture
    - Key kernel functions always run on a particular processor
    - The master is responsible for scheduling
    - A slave sends service requests to the master
    - Disadvantages: failure of the master brings down the whole system, and the master can become a performance bottleneck
  - Peer architecture
    - The kernel can execute on any processor
    - Each processor does self-scheduling
    - Complicates the operating system, which must make sure that two processors do not choose the same process
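In the peer architecture, each processor must pick work from a shared structure without colliding with the others. The sketch below models processors as Python threads that self-schedule from one ready queue guarded by a lock. It is only an illustration: a real kernel would use spinlocks or lock-free queues, and the names `SharedReadyQueue`, `pick_next`, and `run_peers` are hypothetical.

```python
import threading
from collections import deque

class SharedReadyQueue:
    """Ready queue shared by every processor in a peer design.
    The lock makes "pick the next process" atomic, so two processors
    can never both dequeue the same process."""

    def __init__(self, processes):
        self._queue = deque(processes)
        self._lock = threading.Lock()

    def pick_next(self):
        with self._lock:
            return self._queue.popleft() if self._queue else None

def processor(ready, picked):
    # Self-scheduling loop: no master tells this processor what to run.
    while (proc := ready.pick_next()) is not None:
        picked.append(proc)          # record what this processor "ran"

def run_peers(num_cpus, processes):
    ready = SharedReadyQueue(processes)
    picked = [[] for _ in range(num_cpus)]   # one private log per processor
    cpus = [threading.Thread(target=processor, args=(ready, picked[i]))
            for i in range(num_cpus)]
    for t in cpus:
        t.start()
    for t in cpus:
        t.join()
    return picked

logs = run_peers(4, range(1000))
all_picked = sorted(p for log in logs for p in log)
print(all_picked == list(range(1000)))  # True: each process chosen exactly once
```

Without the lock, two threads could read the same head of the queue before either removes it. This is the "two processors choose the same process" hazard that the peer design has to rule out.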

- 12. Process Scheduling
  - Single queue for all processes
  - If multiple queues are used for priorities, all queues feed the common pool of processors
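The following is one way to picture several priority queues feeding a common pool of processors. It is a sketch that assumes strict priority between levels and FIFO order within a level; the class `PriorityReadyQueues` and the helper `dispatch_round` are hypothetical names.

```python
from collections import deque

class PriorityReadyQueues:
    """One FIFO per priority level (0 = highest). Together they form the
    single ready structure that every processor in the pool draws from."""

    def __init__(self, levels):
        self.queues = [deque() for _ in range(levels)]

    def add(self, name, priority):
        self.queues[priority].append(name)

    def take(self):
        # Any free processor takes the oldest process at the highest non-empty level.
        for q in self.queues:
            if q:
                return q.popleft()
        return None

def dispatch_round(ready, num_cpus):
    """Give each processor in the common pool one process (None = stays idle)."""
    return [ready.take() for _ in range(num_cpus)]

ready = PriorityReadyQueues(3)
for name, prio in [("A", 1), ("B", 0), ("C", 2), ("D", 0)]:
    ready.add(name, prio)
print(dispatch_round(ready, 3))  # ['B', 'D', 'A']
print(dispatch_round(ready, 3))  # ['C', None, None]
```

Because no processor owns a queue, a process can run on whichever processor is free when its turn comes.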

- 13. Multiprocessor Thread Scheduling
  - With threads, the concept of execution is separated from the rest of the definition of a process
  - An application can be a set of threads that cooperate and execute concurrently in the same address space
  - Approaches:
    - Load sharing: processes are not assigned to a particular processor
    - Gang scheduling: a set of related threads is scheduled to run on a set of processors at the same time
    - Dedicated processor assignment: threads are assigned to a specific processor
    - Dynamic scheduling: the number of threads can be altered during the course of execution

- 14. Load Sharing
  - Load is distributed evenly across the processors
  - No centralized scheduler is required
  - Uses global queues
  - Disadvantages of load sharing:
    - The central queue needs mutual exclusion
    - Preempted threads are unlikely to resume execution on the same processor
    - If all threads are in the global queue, the threads of a program will not all gain access to the processors at the same time

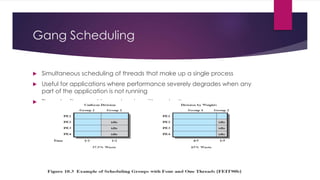
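The migration drawback of load sharing shows up even in a tiny round-robin model. The sketch below assumes one quantum per scheduling round, a single global FIFO queue, and processors that grab work in index order. It is single-threaded, so the mutual exclusion a real central queue needs is deliberately left out. The function name `load_sharing` is hypothetical.

```python
from collections import deque

def load_sharing(threads, num_cpus):
    """threads: dict name -> quanta needed. Round-robin through one global queue.
    Returns dict name -> list of CPU ids it ran on, one entry per quantum."""
    queue = deque(threads)                 # global ready queue (FIFO)
    remaining = dict(threads)
    history = {name: [] for name in threads}
    while queue:
        # Each processor grabs the next ready thread for one quantum.
        running = []
        for cpu in range(num_cpus):
            if not queue:
                break
            name = queue.popleft()
            history[name].append(cpu)
            remaining[name] -= 1
            running.append(name)
        # Preempted threads rejoin the tail of the shared queue.
        for name in running:
            if remaining[name] > 0:
                queue.append(name)
    return history

print(load_sharing({"A": 2, "B": 2, "C": 2}, 2))
# {'A': [0, 1], 'B': [1, 0], 'C': [0, 1]}
```

Every thread's second quantum lands on a different processor from its first, so any cache state it built up is likely lost.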

- 15. Gang Scheduling
  - Simultaneous scheduling of the threads that make up a single process
  - Useful for applications whose performance degrades severely when any part of the application is not running
  - Threads often need to synchronize with each other
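Gang scheduling raises a processor-allocation question: when a gang is smaller than the machine, the leftover processors sit idle for that time slice. The sketch below computes the idle fraction for a hypothetical pool of 4 processors running two applications, one with 4 threads and one with 1 thread. It compares equal time slices with slices weighted by thread count. It assumes every gang fits on the machine and that the shares sum to 1. The function name `idle_fraction` is hypothetical.

```python
from fractions import Fraction

def idle_fraction(num_cpus, gang_sizes, shares):
    """Fraction of total processor time left idle under gang scheduling.
    gang_sizes[i]: threads in application i (they all run together).
    shares[i]: fraction of wall-clock time given to application i."""
    assert sum(shares) == 1
    assert all(g <= num_cpus for g in gang_sizes)
    return sum(s * Fraction(num_cpus - g, num_cpus)
               for g, s in zip(gang_sizes, shares))

uniform = [Fraction(1, 2), Fraction(1, 2)]     # equal slice per application
weighted = [Fraction(4, 5), Fraction(1, 5)]    # slice proportional to threads
print(idle_fraction(4, [4, 1], uniform))       # 3/8
print(idle_fraction(4, [4, 1], weighted))      # 3/20
```

With uniform slices, three of four processors idle for half the time, which wastes 37.5% of capacity. Weighting the slices by thread count cuts the waste to 15%.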

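- 16. Dedicated Processor Assignment
  - When an application is scheduled, each of its threads is assigned to a processor that stays dedicated to that thread until the application completes
  - Some processors may be idle, for example while their thread is blocked waiting for I/O or for synchronization
  - No multiprogramming of processors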
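This sketch models dedicated processor assignment as an admission decision. An application starts only if every one of its threads can get its own processor. A processor's time when its thread is blocked is simply lost, because there is no multiprogramming. The per-thread busy fractions, the rule of skipping applications that do not fit, and the name `dedicated_utilization` are all hypothetical.

```python
def dedicated_utilization(num_cpus, apps):
    """apps: list of (name, busy_fractions), one busy fraction per thread.
    An admitted thread owns one processor for the application's lifetime;
    while the thread is blocked, its processor sits idle.
    Returns (admitted application names, utilization of the whole pool)."""
    free = num_cpus
    admitted, busy = [], 0.0
    for name, fractions in apps:
        if len(fractions) <= free:   # admit only if every thread gets its own CPU
            free -= len(fractions)
            admitted.append(name)
            busy += sum(fractions)   # idle share of an owned CPU is never reused
    return admitted, busy / num_cpus

apps = [("A", [1.0, 1.0, 0.5]), ("B", [0.75] * 4), ("C", [1.0, 1.0])]
print(dedicated_utilization(8, apps))  # (['A', 'B'], 0.6875)
```

Application C needs two processors but only one is free, so that processor stays unused. Blocked time inside A and B is also lost. Together these account for the idle processors the slide mentions.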

- 17. Dynamic Scheduling
  - The number of threads in a process is altered dynamically by the application
  - The operating system adjusts the load to improve utilization
  - When a job requests processors:
    - Assign idle processors
    - Otherwise, a new arrival may be assigned a processor taken from a job currently using more than one processor
    - Hold any unsatisfied request until a processor is available
  - When processors are released, assign a processor to each job in the list that currently has no processors (i.e., to all waiting new arrivals)
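The request and release rules above can be sketched as a small allocator. Several details are assumptions that the slide leaves open. The donor is the first job, in arrival order, that holds more than one processor. After every waiting new arrival has received one processor, any leftover processors go to pending requests first-come, first-served. The class name `DynamicAllocator` is hypothetical.

```python
class DynamicAllocator:
    """Sketch of a request/release policy for dynamic scheduling.
    alloc[job] is the number of processors the job currently holds."""

    def __init__(self, num_cpus):
        self.idle = num_cpus
        self.alloc = {}
        self.pending = []   # [job, processors still wanted], in arrival order

    def request(self, job, n):
        new_arrival = job not in self.alloc
        self.alloc.setdefault(job, 0)
        # Use idle processors first.
        grant = min(n, self.idle)
        self.idle -= grant
        self.alloc[job] += grant
        n -= grant
        # A new arrival with no processor may take one from a job holding several.
        if n > 0 and new_arrival and self.alloc[job] == 0:
            donor = next((j for j, k in self.alloc.items() if k > 1), None)
            if donor is not None:
                self.alloc[donor] -= 1
                self.alloc[job] += 1
                n -= 1
        # Hold whatever is still unsatisfied until processors are released.
        if n > 0:
            self.pending.append([job, n])

    def release(self, job, n):
        self.alloc[job] -= n
        self.idle += n
        # First, one processor to each waiting job that currently has none.
        for entry in self.pending:
            if self.idle and entry[1] > 0 and self.alloc[entry[0]] == 0:
                self.alloc[entry[0]] += 1
                self.idle -= 1
                entry[1] -= 1
        # Then (assumed) hand out the rest first-come, first-served.
        for entry in self.pending:
            grant = min(entry[1], self.idle)
            self.alloc[entry[0]] += grant
            self.idle -= grant
            entry[1] -= grant
        self.pending = [e for e in self.pending if e[1] > 0]

a = DynamicAllocator(2)
a.request("A", 2)      # A takes both idle processors
a.request("B", 1)      # new arrival: takes one processor from A
a.request("A", 1)      # A wants more: request is held
a.request("C", 1)      # new arrival, no donor available: held
a.release("B", 1)      # freed processor goes to C, which has none
print(a.alloc, a.pending)  # {'A': 1, 'B': 0, 'C': 1} [['A', 1]]
```

When B releases its processor, C gets it ahead of A even though A asked first. This follows the slide's rule that waiting jobs with no processors are served before jobs that already hold some.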