
Envoy and Kafka

- 2. Adam Kotwasinski, Principal Software Development Engineer, Workday

- 3. Agenda
  - 01 What is Kafka?
  - 02 Proxying Kafka
  - 03 Envoy as Kafka proxy
  - 04 Envoy Kafka broker filter
  - 05 Envoy Kafka mesh filter

- 5. What is Kafka?
  - Streaming solution for sending and receiving records.
  - Records are stored in topics, which are divided into partitions:
    - a partition is the unit of assignment,
    - a single consumer can have multiple partitions assigned.
  - High throughput:
    - producer records are stored as-is (no record format translation),
    - zero-copy implementation.
  - Re-reading: a record can be consumed multiple times (unlike typical messaging solutions).
  - Durability:
    - partitions are replicated to other brokers in the cluster (replication factor),
    - topics have a time- or size-based retention configuration.

- 6. Capabilities
  - Some examples (https://kafka.apache.org/uses):
    - messaging (topics == queues),
    - website activity tracking (e.g. a topic per activity type; high volume due to multiple client actions),
    - metrics,
    - log aggregation (abstracts away log files and puts all the logs in a single place),
    - external commit log.

- 7. Rich ecosystem
  - Raw clients: consumer, producer, admin
    - the (official) Java Apache Kafka client, librdkafka for C and C++.
  - Wrappers / frameworks
    - spring-kafka, alpakka, smallrye.
  - Kafka Streams API
    - stream-friendly DSL: map, filter, join, group-by.
  - Kafka Connect
    - framework service for defining source and sink connectors,
    - allows pulling data from / pushing data into other services, for example Redis, SQL, Hadoop.

- 8. Kafka cluster
  - A Kafka cluster is composed of one or more Kafka brokers that store the partitions.
  - A topic is composed of one or more partitions.

- 9. Partition
  - A partition is effectively an append-only list of records.
  - Producers append only at the end of a partition.
  - Consumers can consume from any offset.

- 10. Record
  - Key, value and headers.
  - https://kafka.apache.org/documentation/#record
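To make the partition and record model concrete, here is a minimal in-memory sketch in C++ (the language Envoy's Kafka filters are written in). The `Record` and `Partition` types are hypothetical illustrations, not Kafka or Envoy APIs; offsets are simply positions in a vector, whereas a real broker also has to handle retention moving the start of the log.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <optional>
#include <string>
#include <utility>
#include <vector>

// A Kafka record: key, value and headers. Key and value are nullable byte
// arrays in Kafka; std::optional<std::string> stands in for them here.
struct Record {
  std::optional<std::string> key;
  std::optional<std::string> value;
  std::vector<std::pair<std::string, std::string>> headers;
};

// Hypothetical in-memory partition: an append-only list of records whose
// offset is simply the record's index in the list.
class Partition {
 public:
  // Producers can only append at the end; returns the new record's offset.
  int64_t append(Record record) {
    records_.push_back(std::move(record));
    return static_cast<int64_t>(records_.size()) - 1;
  }

  // Consumers may read from any offset, as many times as they like.
  std::vector<Record> read(int64_t from_offset, std::size_t max_records) const {
    assert(from_offset >= 0);
    std::vector<Record> out;
    for (int64_t offset = from_offset;
         offset < end_offset() && out.size() < max_records; ++offset) {
      out.push_back(records_[static_cast<std::size_t>(offset)]);
    }
    return out;
  }

  // Offset that the next appended record will receive.
  int64_t end_offset() const { return static_cast<int64_t>(records_.size()); }

 private:
  std::vector<Record> records_;
};
```

Reading does not remove anything, which is what makes the "re-reading" property from the earlier slide possible.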

- 11. Kafka Producer
  - Producers append the records to the end of a partition.
  - Configurable batching capabilities (batch.size and linger.ms).
  - The target partition is chosen depending on the producer's configuration (org.apache.kafka.clients.producer.internals.DefaultPartitioner):
    - if a partition is provided explicitly, use the partition provided,
    - if a key is present, use hash(key) % partition count,
    - if neither a partition nor a key is present, use the same partition for a single batch;
    - the latter two cases require the producer to know how many partitions are in a topic (this will be important for the Kafka mesh filter).
  - Broker acknowledgements (acks):
    - leader replica (acks = 1),
    - all in-sync replicas (acks = all),
    - no confirmation (acks = 0).
  - Transaction / idempotence capabilities.
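A simplified sketch of the partition choice described above. `SimplePartitioner` is hypothetical: it uses `std::hash` instead of the murmur2 hash of the serialized key used by the Java client, so it will not pick the same partitions as a real producer, and it advances the "sticky" partition round-robin when a batch is sent, whereas the Java client picks a different partition randomly.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <functional>
#include <optional>
#include <string>

// Simplified model of the producer's partition choice (hypothetical class).
// std::hash is only a stand-in for the Java client's murmur2 key hash.
class SimplePartitioner {
 public:
  explicit SimplePartitioner(int32_t partition_count) : partition_count_(partition_count) {
    assert(partition_count_ > 0);
  }

  int32_t choose(std::optional<int32_t> explicit_partition,
                 const std::optional<std::string>& key) const {
    if (explicit_partition) {
      return *explicit_partition;  // 1. explicit partition wins
    }
    if (key) {
      // 2. keyed record: hash(key) % partition count
      const std::size_t h = std::hash<std::string>{}(*key);
      return static_cast<int32_t>(h % static_cast<std::size_t>(partition_count_));
    }
    return sticky_partition_;  // 3. no partition, no key: reuse the current batch's partition
  }

  // Called when the current batch is sent. Round-robin advance is an
  // assumption of this sketch; the Java client chooses randomly instead.
  void on_new_batch() { sticky_partition_ = (sticky_partition_ + 1) % partition_count_; }

 private:
  int32_t partition_count_;
  int32_t sticky_partition_ = 0;
};
```

The partition count shows up in both the keyed and the sticky case, which is why anything answering Metadata requests on behalf of a cluster must report it correctly.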

- 12. Kafka Consumer
  - The consumer specifies which topics / partitions it wants to poll the records from.
  - Partition assignment can be either explicit (assign API) or cluster-managed (subscribe API).
    - The subscribe API requires a consumer group id.
  - Records are received starting from the consumer's current position.
    - The position can be changed with the seek API (similar to any file-reader API).
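The position / seek behaviour can be illustrated with a toy consumer over in-memory partitions. `ToyConsumer` is a hypothetical stand-in for an explicitly assigned consumer; it does not model fetching from brokers, group membership or committing.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <map>
#include <string>
#include <utility>
#include <vector>

// Hypothetical consumer with explicitly assigned partitions ("assign" style).
// Each partition is just an in-memory list of record values.
class ToyConsumer {
 public:
  explicit ToyConsumer(std::map<int32_t, std::vector<std::string>> partitions)
      : partitions_(std::move(partitions)) {
    for (const auto& entry : partitions_) position_[entry.first] = 0;  // start at the beginning
  }

  // Returns up to `max` records from the current position and advances it,
  // like reading sequentially from a file.
  std::vector<std::string> poll(int32_t partition, std::size_t max) {
    const auto& records = partitions_.at(partition);
    int64_t& pos = position_.at(partition);
    std::vector<std::string> out;
    while (pos < static_cast<int64_t>(records.size()) && out.size() < max) {
      out.push_back(records[static_cast<std::size_t>(pos++)]);
    }
    return out;
  }

  // Moves the position, like seeking in a file; earlier records can be re-read.
  void seek(int32_t partition, int64_t offset) {
    assert(offset >= 0);
    position_.at(partition) = offset;
  }

  int64_t position(int32_t partition) const { return position_.at(partition); }

 private:
  std::map<int32_t, std::vector<std::string>> partitions_;
  std::map<int32_t, int64_t> position_;
};
```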

- 13. Consumer groups
  - Kafka mechanism that automatically distributes partitions across consumer group members.
  - Partitions are rebalanced automatically when group members join or die (detected via heartbeats).
  - The strategy is configurable with the partition.assignment.strategy property.
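As a rough illustration of partition distribution, the sketch below assigns the partitions of one topic round-robin over the sorted member ids and is simply re-run when membership changes. It is a simplification in the spirit of Kafka's round-robin strategy, not the actual assignor implementation, and `assign_round_robin` is a hypothetical name.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <map>
#include <string>
#include <vector>

// Distributes partitions 0..partition_count-1 of a single topic across group
// members round-robin. Members are sorted so that the result does not depend
// on the order in which they joined.
std::map<std::string, std::vector<int32_t>> assign_round_robin(
    std::vector<std::string> members, int32_t partition_count) {
  assert(partition_count >= 0);
  std::map<std::string, std::vector<int32_t>> assignment;
  if (members.empty()) return assignment;  // nobody to assign to
  std::sort(members.begin(), members.end());
  for (const auto& m : members) assignment[m];  // every member gets an entry, possibly empty
  for (int32_t p = 0; p < partition_count; ++p) {
    assignment[members[static_cast<std::size_t>(p) % members.size()]].push_back(p);
  }
  return assignment;
}
```

When a member stops heartbeating, re-running the assignment over the remaining members hands its partitions to the others.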

- 14. Consumer offsets
  - Consumers can store their position either in an external system or in Kafka (internal topic __consumer_offsets).
  - Effectively a triple of group name, (topic) partition and offset.
  - Java client:
    - commitSync, commitAsync, configuration property enable.auto.commit.
  - Delivery semantics:
    - at most once: offset committed before the record is processed,
    - at least once: offset committed after the record is processed,
    - exactly once: transaction API (if the processing == writing to the same Kafka cluster), or storing the offset in an external system together with the processed data.
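The difference between the two commit orders can be shown with a tiny simulation. Everything here is hypothetical: a `std::map` plays the role of `__consumer_offsets`, and `run_until_crash` processes records one by one and stops ("crashes") at a chosen offset between the processing step and the commit step.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <string>
#include <tuple>
#include <vector>

// Committed positions keyed by (group, topic, partition); in Kafka these live
// in the internal __consumer_offsets topic.
using OffsetKey = std::tuple<std::string, std::string, int32_t>;
using OffsetStore = std::map<OffsetKey, int64_t>;

enum class Semantics { AtMostOnce, AtLeastOnce };

// Processes offsets [committed, end) and crashes at `crash_at` (pass -1 for
// no crash). Returns the offsets that were processed in this run.
std::vector<int64_t> run_until_crash(OffsetStore& store, const OffsetKey& key,
                                     int64_t end, int64_t crash_at, Semantics s) {
  assert(end >= 0);
  std::vector<int64_t> processed;
  for (int64_t offset = store[key]; offset < end; ++offset) {
    if (s == Semantics::AtMostOnce) {
      store[key] = offset + 1;          // commit first...
      if (offset == crash_at) break;    // ...crash before processing: record lost
      processed.push_back(offset);
    } else {
      processed.push_back(offset);      // process first...
      if (offset == crash_at) break;    // ...crash before committing: record redelivered
      store[key] = offset + 1;
    }
  }
  return processed;
}
```

With at most once, the restarted consumer skips offset 1 (committed but never processed); with at least once, offset 1 is processed twice.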

- 15. Protocol
  - https://kafka.apache.org/31/protocol.html#protocol_api_keys
  - Smart clients (producers, consumers) negotiate the protocol version:
    - the API-versions response contains a map of understood request types, with the minimum and maximum version supported for each.
  - Automatic discovery of cluster members: the metadata response contains cluster topology information:
    - what topics are present,
    - how many partitions these topics have,
    - which brokers are leaders and replicas for partitions,
    - brokers' host and port info.
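Version negotiation boils down to intersecting version ranges. The sketch below picks the highest version of a request type that both the client and the broker understand. The API key constants (Produce = 0, Metadata = 3, ApiVersions = 18) come from the protocol guide; the version ranges in the usage are made up, and `negotiate` is a hypothetical helper, not a client API.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <map>
#include <optional>

struct VersionRange {
  int16_t min_version;
  int16_t max_version;
};

// API keys from the Kafka protocol guide (subset).
constexpr int16_t kProduce = 0;
constexpr int16_t kMetadata = 3;
constexpr int16_t kApiVersions = 18;

// Picks the highest version of `api_key` understood by both sides. Returns
// nothing if the broker does not list the request type or the ranges do not overlap.
std::optional<int16_t> negotiate(const std::map<int16_t, VersionRange>& broker,
                                 int16_t api_key, VersionRange client) {
  assert(client.min_version <= client.max_version);
  const auto it = broker.find(api_key);
  if (it == broker.end()) return std::nullopt;
  const int16_t lo = std::max(client.min_version, it->second.min_version);
  const int16_t hi = std::min(client.max_version, it->second.max_version);
  if (lo > hi) return std::nullopt;
  return hi;
}
```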

- 17. Proxying Kafka

- 18. Kafka advertised.listeners
  - The host and port of the Kafka broker that the client will send requests to come from the broker's advertised.listeners property.
  - As we want our traffic to go through the proxy, the Kafka broker needs to advertise the socket the proxy is listening on.
  - This requires configuration on both ends:
    - the proxy needs to point to the Kafka broker,
    - the Kafka broker needs to advertise the proxy's address instead of its own.
  - This is not Envoy-specific.

- 19. Naïve proxying
  - Naïve proxying makes the broker-to-broker traffic go through the proxy as well, since brokers reach each other through the same advertised addresses.

- 20. Inter-broker traffic
  - Brokers can be configured with multiple listeners, and we can specify which one to use for inter-broker traffic (inter.broker.listener.name).
  - This way, only external traffic is routed through the proxy.

- 21. Envoy as Kafka proxy

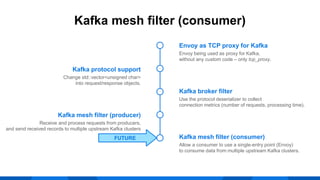

- 22. TCP proxy filter
  - Envoy as TCP proxy for Kafka: Envoy being used as a proxy for Kafka without any custom code, only the TCP proxy filter.
  - Kafka protocol support: change std::vector<unsigned char> into request/response objects.
  - Kafka broker filter: use the protocol deserializer to collect connection metrics (number of requests, processing time).
  - Kafka mesh filter (producer): receive and process requests from producers, and send received records to multiple upstream Kafka clusters.
  - Kafka mesh filter (consumer): allow a consumer to use a single entry point (Envoy) to consume data from multiple upstream Kafka clusters.

- 23. Proxying Kafka with Envoy
  - If we want to proxy a Kafka cluster with Envoy, we need to provide as many listeners as there are brokers.
  - Each listener then uses the TCP proxy filter to point to an upstream Kafka broker (which is present in the Envoy cluster configuration object).
  - The filter chain can then be enhanced with other filters.
  - In general, a 1-1 mapping between brokers and Envoy listeners needs to be kept.
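The 1-1 mapping can be sketched as a small planning function: each broker gets its own Envoy listener port, that listener's TCP proxy filter targets the broker, and the broker advertises the listener's address. `plan_listeners`, the host names and the ports are hypothetical; the output would still have to be turned into Envoy listener/cluster configuration and each broker's advertised.listeners value.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <string>
#include <vector>

struct Broker {
  std::string host;
  uint16_t port;
};

// One Envoy listener per broker.
struct ListenerPlan {
  std::string listener_address;  // host:port Envoy listens on; the broker must advertise this
  std::string upstream_address;  // host:port of the real broker; the TCP proxy target
};

// Hypothetical helper: assigns consecutive listener ports starting at base_port.
std::vector<ListenerPlan> plan_listeners(const std::vector<Broker>& brokers,
                                         const std::string& proxy_host, uint16_t base_port) {
  assert(base_port + brokers.size() <= 65536);  // all listener ports must be valid
  std::vector<ListenerPlan> plan;
  for (std::size_t i = 0; i < brokers.size(); ++i) {
    const auto port = static_cast<uint16_t>(base_port + i);
    plan.push_back({proxy_host + ":" + std::to_string(port),
                    brokers[i].host + ":" + std::to_string(brokers[i].port)});
  }
  return plan;
}
```

Keeping one listener per broker is what lets the client-side routing (based on Metadata responses) keep working: each advertised address leads to exactly one broker.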

- 24. Example: single Envoy instance proxying two Kafka brokers (slide shows the Envoy configuration next to the configurations of broker 1 and broker 2)

- 25. Envoy Kafka broker filter

- 26. Kafka protocol support in Envoy

- 27. Kafka message spec files
  - The Kafka message protocol is described in language-agnostic specification files.
  - These files are used to generate the Java server/client code.
  - The same files were used to generate the corresponding C++ code for Envoy:
    - https://github.com/envoyproxy/envoy/pull/4950
    - Python templates generate headers to be included in the broker/mesh filter code.
  - https://github.com/apache/kafka/tree/3.1.0/clients/src/main/resources/common/message

- 28. Request header
  - Kafka messages carry an increasing correlation id (sequence number).
    - https://kafka.apache.org/31/protocol.html#protocol_messages
  - This allows us to match a response with its request, as we can keep track of when a request with a particular id was received:
    - absl::flat_hash_map<int32_t, MonotonicTime> request_arrivals_ (filter.h).
  - Requests (header version 1+) also contain a client identifier.
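The header fields can be read straight from the wire bytes. This hand-written sketch (Envoy's real deserializers are generated from the spec files) parses the INT32 size prefix followed by request header v1: request_api_key (INT16), request_api_version (INT16), correlation_id (INT32) and client_id (NULLABLE_STRING, where length -1 means null). It ignores the tagged fields that follow client_id in the flexible header v2, and `parse_request_header` is a hypothetical name.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <optional>
#include <string>
#include <vector>

// Fields of Kafka request header v1.
struct RequestHeader {
  int16_t api_key;
  int16_t api_version;
  int32_t correlation_id;
  std::optional<std::string> client_id;  // null when the encoded length is -1
};

// Parses one size-prefixed request frame up to and including client_id.
// Returns nullopt if the whole frame has not arrived yet or the header is
// truncated. The request body (and v2 tagged fields) are not parsed.
std::optional<RequestHeader> parse_request_header(const std::vector<unsigned char>& buf) {
  std::size_t pos = 0;
  // Reads an n-byte big-endian (network order) value; false on underflow.
  auto read_be = [&](std::size_t n, uint32_t& out) {
    if (buf.size() - pos < n) return false;
    out = 0;
    for (std::size_t i = 0; i < n; ++i) out = (out << 8) | buf[pos++];
    return true;
  };

  uint32_t frame_size = 0, api_key = 0, api_version = 0, correlation_id = 0, id_length = 0;
  if (!read_be(4, frame_size) || buf.size() - pos < frame_size) return std::nullopt;
  if (!read_be(2, api_key) || !read_be(2, api_version) || !read_be(4, correlation_id) ||
      !read_be(2, id_length)) {
    return std::nullopt;
  }
  RequestHeader header{static_cast<int16_t>(api_key), static_cast<int16_t>(api_version),
                       static_cast<int32_t>(correlation_id), std::nullopt};
  const auto length = static_cast<int16_t>(id_length);  // 0xFFFF -> -1 (null client id)
  if (length >= 0) {
    const auto n = static_cast<std::size_t>(length);
    if (buf.size() - pos < n) return std::nullopt;
    header.client_id = std::string(reinterpret_cast<const char*>(buf.data() + pos), n);
  }
  return header;
}
```

Returning nothing for an incomplete frame mirrors the fact that a proxy receives TCP data in arbitrary chunks and has to buffer until a full message is available.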

- 29. Kafka broker filter

- 30. Kafka broker filter features
  - https://www.envoyproxy.io/docs/envoy/v1.22.0/configuration/listeners/network_filters/kafka_broker_filter
  - Intermediary filter intended to be used in a filter chain before the TCP proxy filter that sends the traffic to the upstream broker.
  - As of now, data is sent without any changes.
  - Captures:
    - request metrics,
    - request processing time.
  - Entry point for future features (e.g. filtering by client identifier).
  - https://adam-kotwasinski.medium.com/deploying-envoy-and-kafka-8aa7513ec0a0
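Processing-time measurement can be sketched by pairing requests and responses via their correlation id. `RequestTimer` is hypothetical; it uses `std::unordered_map` and `std::chrono::steady_clock` where the filter uses `absl::flat_hash_map<int32_t, MonotonicTime>`, and it does not export anything to Envoy stats.

```cpp
#include <cassert>
#include <chrono>
#include <cstdint>
#include <map>
#include <optional>
#include <unordered_map>

// Hypothetical recorder: counts requests per API key and remembers when each
// request arrived, keyed by correlation id, so the matching response can be timed.
class RequestTimer {
 public:
  using Clock = std::chrono::steady_clock;

  void on_request(int16_t api_key, int32_t correlation_id, Clock::time_point now) {
    assert(request_arrivals_.count(correlation_id) == 0 && "in-flight correlation ids must be unique");
    ++request_counts_[api_key];
    request_arrivals_[correlation_id] = now;
  }

  // Returns the processing time, or nothing for an unknown correlation id.
  std::optional<std::chrono::milliseconds> on_response(int32_t correlation_id,
                                                       Clock::time_point now) {
    const auto it = request_arrivals_.find(correlation_id);
    if (it == request_arrivals_.end()) return std::nullopt;
    const auto elapsed = std::chrono::duration_cast<std::chrono::milliseconds>(now - it->second);
    request_arrivals_.erase(it);  // the request is no longer in flight
    return elapsed;
  }

  uint64_t request_count(int16_t api_key) const {
    const auto it = request_counts_.find(api_key);
    return it == request_counts_.end() ? 0 : it->second;
  }

 private:
  std::unordered_map<int32_t, Clock::time_point> request_arrivals_;
  std::map<int16_t, uint64_t> request_counts_;
};
```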

- 32. Envoy Kafka mesh filter

- 33. Kafka mesh filter (producer): current state

- 34. Motivation
  - Use Envoy as a facade for multiple Kafka clusters.
  - Clients are not aware of the Kafka clusters; Envoy performs the necessary traffic routing.
  - Received Kafka requests are routed to the correct clusters depending on the filter configuration.

- 35. Kafka mesh filter
  - Terminal filter in the Envoy filter chain.
  - https://www.envoyproxy.io/docs/envoy/v1.22.0/configuration/listeners/network_filters/kafka_mesh_filter
  - From the client's perspective, an Envoy instance acts as a Kafka broker in a one-broker cluster.
  - Upstream connections are made by embedded librdkafka producer instances.
  - https://adam-kotwasinski.medium.com/kafka-mesh-filter-in-envoy-a70b3aefcdef

- 37. Typical flow
  1. The filter instance pretends to be a broker in a single-broker cluster.
  2. All requested partitions are hosted by the “Envoy-broker”.
  3. When Produce requests are received, the filter extracts the records.
  4. Extracted records are forwarded to embedded librdkafka producers pointing at upstream clusters.
     - The upstream is chosen depending on the forwarding rules.
  5. The filter waits for all delivery responses (failures too) before the response can be sent back downstream.
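Step 5 (answer downstream only after every delivery report, successful or not) can be modelled as a countdown over the extracted records. `PendingProduce` is a hypothetical class, not the filter's actual type; in the filter, the delivery reports arrive asynchronously from the embedded librdkafka producers.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <utility>
#include <vector>

// Hypothetical model of one in-flight Produce request: each extracted record
// is handed to an upstream producer, and the downstream response may only be
// sent once every delivery outcome (success or failure) is known.
class PendingProduce {
 public:
  using Done = std::function<void(const std::vector<bool>& delivered)>;

  PendingProduce(std::size_t record_count, Done done)
      : results_(record_count, false), outstanding_(record_count), done_(std::move(done)) {
    if (outstanding_ == 0) done_(results_);  // nothing to forward: answer immediately
  }

  // Called by the delivery report for record `index`.
  void on_delivery(std::size_t index, bool success) {
    assert(index < results_.size() && outstanding_ > 0);
    results_[index] = success;
    if (--outstanding_ == 0) done_(results_);  // last report: respond downstream
  }

 private:
  std::vector<bool> results_;
  std::size_t outstanding_;
  Done done_;
};
```

Reports may arrive in any order, which is why the sketch tracks results by record index rather than by arrival.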

- 38. API-Versions & Metadata
  - API-Versions response
    - The filter supports only a limited subset of Kafka requests:
      - API-Versions: to negotiate the request versions with clients,
      - Metadata: to make clients send all traffic to Envoy,
      - Produce: to receive the records and send them upstream to the real Kafka clusters.
  - Metadata response
    - Broker's host and port: required configuration properties for a filter instance.
      - Same purpose as the broker's advertised.listeners property.
    - Number of partitions for a topic: required configuration property in the upstream cluster definition.
      - This data is also used by the default partitioner if a key is not present.
      - Future improvement: fetch the configuration from the upstream cluster.
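A sketch of the Metadata answer that makes the client treat Envoy as the only broker: one broker entry with the configured host and port, and every partition of every configured topic led by that broker. The types and `build_metadata` are hypothetical, and unknown topics are simply omitted here, whereas a real broker reports them with an error code.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <string>
#include <utility>
#include <vector>

struct BrokerInfo { int32_t node_id; std::string host; int32_t port; };
struct PartitionInfo { int32_t partition; int32_t leader; std::vector<int32_t> replicas; };
struct TopicInfo { std::string name; std::vector<PartitionInfo> partitions; };
struct MetadataView { std::vector<BrokerInfo> brokers; std::vector<TopicInfo> topics; };

// Answers a Metadata request so that the client sees a one-broker cluster
// (the Envoy listener) leading every partition. Host, port and partition
// counts come from the filter configuration, not from the upstream clusters.
MetadataView build_metadata(const std::string& advertised_host, int32_t advertised_port,
                            const std::map<std::string, int32_t>& partition_counts,
                            const std::vector<std::string>& requested_topics) {
  assert(advertised_port > 0 && advertised_port <= 65535);
  constexpr int32_t kEnvoyNodeId = 0;
  MetadataView view;
  view.brokers.push_back({kEnvoyNodeId, advertised_host, advertised_port});
  for (const auto& topic : requested_topics) {
    const auto it = partition_counts.find(topic);
    if (it == partition_counts.end()) continue;  // unknown topic: omitted in this sketch
    TopicInfo info{topic, {}};
    for (int32_t p = 0; p < it->second; ++p) {
      info.partitions.push_back({p, kEnvoyNodeId, {kEnvoyNodeId}});  // Envoy leads everything
    }
    view.topics.push_back(std::move(info));
  }
  return view;
}
```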

- 39. Forwarding policy
  - As we can parse the Kafka messages, we can extract the necessary information and pass it to the forwarding logic.
  - The current implementation uses only topic names to decide which upstream cluster should be used:
    - first match in the configured prefix list,
    - no match: exception (closes the connection),
    - KafkaProducer& getProducerForTopic(const std::string& topic) (upstream_kafka_facade.h).
  - A single request can contain multiple records that map to multiple upstream clusters.
    - The downstream response is sent after all upstreams have finished (or failed).
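The prefix-based forwarding policy, and the fan-out of one request to several clusters, can be sketched as follows. `TopicRouter` and the cluster names are hypothetical and work on topic names only; in the filter, the lookup returns a `KafkaProducer&` via `getProducerForTopic`.

```cpp
#include <cassert>
#include <map>
#include <stdexcept>
#include <string>
#include <utility>
#include <vector>

// Hypothetical routing table: ordered (topic prefix, upstream cluster) pairs,
// where the first matching prefix wins.
class TopicRouter {
 public:
  explicit TopicRouter(std::vector<std::pair<std::string, std::string>> rules)
      : rules_(std::move(rules)) {
    assert(!rules_.empty());  // with no rules every topic would be rejected
  }

  // No match is an error; per the slide, the filter then closes the connection.
  const std::string& cluster_for_topic(const std::string& topic) const {
    for (const auto& [prefix, cluster] : rules_) {
      if (topic.compare(0, prefix.size(), prefix) == 0) return cluster;  // starts with prefix
    }
    throw std::runtime_error("no upstream cluster for topic " + topic);
  }

  // Groups the topics of one Produce request by upstream cluster; a single
  // request may therefore fan out to several clusters.
  std::map<std::string, std::vector<std::string>> split(const std::vector<std::string>& topics) const {
    std::map<std::string, std::vector<std::string>> by_cluster;
    for (const auto& t : topics) by_cluster[cluster_for_topic(t)].push_back(t);
    return by_cluster;
  }

 private:
  std::vector<std::pair<std::string, std::string>> rules_;
};
```

Because the first match wins, more specific prefixes have to be listed before shorter ones (here "apples" before "a").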

- 40. Embedded producer
  - We create an instance of the Kafka producer (RdKafka::Producer) per worker thread (--concurrency) (source).
  - Custom configuration for each upstream (e.g. acks, buffer size).

- 42. Kafka mesh filter (consumer): future

- 43. Kafka consumer types
  - Shared consumer
    - A single consumer instance would handle multiple FetchRequests.
    - Messages would be distributed across multiple connections from downstream.
    - Similar to Kafka REST proxy and Kafka consumer groups (but without partition assignment).
  - Dedicated consumer
    - A new consumer for every downstream connection.
    - Multiple connections could receive the same message.
    - Consumer group support might be possible (would need to investigate JoinGroup and similar requests).

- 44. Q&A

- 45. Thank You
