ESXi troubleshooting

- 1. vSphere essentials: a basic introduction to VMware’s vSphere

- 3. vSphere essentials
  - vSphere is the name for VMware's whole server virtualization product suite.
  - vCenter is a server that runs on Windows and makes it easy to manage more than one ESX host; it enables features such as HA, SRM, vMotion and DRS.
  - The vSphere Client is the user interface for vSphere.
  - ESX/ESXi hosts are bare-metal (Type 1) hypervisors.
  - ESX vs ESXi: ESX uses the Linux kernel, on which the vmkernel is loaded as a module; ESXi uses the vmkernel plus a number of GNU tools.
  - The operating system kernel of VMware ESXi consists of three key components:
    - the proprietary component "vmkernel", which is released in binary form only;
    - the kernel module "vmklinux", which contains modified Linux code, and for which (at least some) source code is provided;
    - other kernel modules with device drivers, most of which are modified Linux drivers, and for which (at least some) source code is provided.

- 5. The main features of vSphere are:
  - VMware ESXi – the virtualisation hypervisor.
  - VMware vCenter Server – the central point for configuration, provisioning and management of the virtualised environment.
  - VMware vSphere Client – the interface that allows remote connection to the vCenter Server or to a standalone ESXi server.
  - VMware vSphere Web Access – a web-based interface that allows Virtual Machine management and access to remote consoles.
  - VMware vStorage VMFS – the high-performance clustered file system used for storing Virtual Machines.
  - VMware Virtual SMP – allows a single Virtual Machine to use multiple physical processors simultaneously.
  - VMware Distributed Resource Scheduler (DRS) – manages available resources across the whole environment and can relocate Virtual Machines onto alternate hosts.
  - VMware High Availability (HA) – provides failover capabilities should a physical host fail.

- 7. vSphere 5.5 maximums
  - Per VM: 64 vCPUs / 1 TB RAM / 60 virtual disks / 4 SCSI adapters / 40 concurrent remote console connections / 10 NICs
  - Per ESXi host: 320 logical CPUs / 512 VMs / 32 virtual CPUs per core / 2048 virtual disks / 8 HBAs of any type / 256 NFS mounts / 256 LUNs / 64 TB LUN size / 32 paths per LUN / 128 concurrent vMotion operations per datastore / 1 MB VMFS block size / ~130,690 files per volume
  - Per cluster: 32 hosts / 4000 VMs / 2048 powered-on VMs per datastore
  - Per vCenter: 1000 hosts / 10,000 powered-on VMs / 15,000 registered VMs
  - Storage vMotion: 2 concurrent operations per host and 8 per datastore

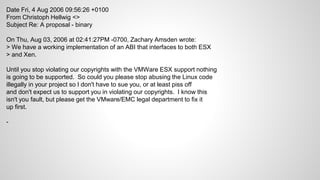

- 8. ESXi is POSIX compliant and follows the POSIX file system structure starting at /.
  - ESXi uses common open-source tools such as shells (sh, ash), OpenSSH, NFS, Sun RPC and open-source network drivers (e1000).
  - It uses files such as /etc/hosts, /etc/resolv.conf, /etc/shells and /etc/passwd, and keeps its main configuration files in /etc like any POSIX-compliant OS.
  - According to VMware, it is NOT based on or derived from UNIX, Solaris or Linux.
  - However, VMware was forced in the past to release code because of GPL violations, and the dispute continues today (Christoph Hellwig sued VMware in Germany).

- 9. Date: Fri, 4 Aug 2006 09:56:26 +0100; From: Christoph Hellwig; Subject: Re: A proposal - binary

  > On Thu, Aug 03, 2006 at 02:41:27PM -0700, Zachary Amsden wrote:
  > > We have a working implementation of an ABI that interfaces to both ESX
  > > and Xen.
  >
  > Until you stop violating our copyrights with the VMWare ESX support nothing is going to be supported. So could you please stop abusing the Linux code illegally in your project so I don't have to sue you, or at least piss off and don't expect us to support you in violating our copyrights. I know this isn't you fault, but please get the VMware/EMC legal department to fix it up first.

- 10. ESXi CLI. There are three sets of ESXi commands:
  1. `esxcfg-` commands: still usable, but deprecated as of version 5.0.
  2. `esxcli`: the new, supported way to configure ESXi from the CLI.
  3. `vim-cmd`: commands used to manage VMs (power on/off, snapshots, convert to or from a template, etc.).

  `esxcfg-` and `esxcli` commands can be used to achieve the same results. Many other UNIX-like commands are available (df, dd, cp, chown, chmod, cat, less, find, etc.), as well as VMware-specific ones (vmkfstools, esxtop, vmkiscsi-tool, vmkping, etc.); most VMware commands start with `vmk`. `vmkerrcode -l` lists a table of the ESXi error codes.
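
As a rough illustration of how the older and newer command sets line up, the sketch below maps a few `esxcfg-` listing commands to the `esxcli` namespaces that replace them. The pairs are recalled from common usage rather than taken from these notes, and the function name `esxcli_equivalent` is hypothetical, so confirm each pair with `--help` on your host.

```sh
#!/bin/sh
# Hand-picked esxcfg- commands and the esxcli namespaces that replace them.
# Illustrative, not exhaustive; verify with `esxcli <namespace> --help`.
esxcli_equivalent() {
    case "$*" in
        "esxcfg-nics -l")     echo "esxcli network nic list" ;;
        "esxcfg-vswitch -l")  echo "esxcli network vswitch standard list" ;;
        "esxcfg-scsidevs -l") echo "esxcli storage core device list" ;;
        "esxcfg-nas -l")      echo "esxcli storage nfs list" ;;
        *) echo "no mapping for: $*" >&2; return 1 ;;
    esac
}

# VM-level tasks go through vim-cmd instead, for example:
#   vim-cmd vmsvc/getallvms               # registered VMs and their IDs
#   vim-cmd vmsvc/power.getstate <vmid>   # power state of one VM
```

For example, `esxcli_equivalent esxcfg-nics -l` prints `esxcli network nic list`.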

- 11. ESXi performance, part 1: CPU
  - The vmkernel uses a CPU scheduler to share the physical CPUs among its VMs. The scheduled processes are called worlds, and each vCPU is a world, so a VM with 4 vCPUs has 4 vCPU worlds. A vCPU world can run on different logical CPUs, and the scheduler can move it at any time to speed up operations. This makes host-level CPU utilization of little use when troubleshooting performance; CPU metrics make sense mostly at VM level.
  - The scheduler continuously looks at the state of all worlds and checks which one is ready to run next. A world changes state from WAIT to READY to tell the scheduler it must run; it is then either given resources or sent to the WAIT queue.
  - The scheduler also uses a priority list to assign resources to the worlds in the READY queue.
  - Useful commands: `ps -us`, `ps -s`, `esxtop`.

- 12. Assigning multiple vCPUs to a VM can sometimes lead to poor VM performance. To check whether this is the case, run `esxtop` and look at:
  - %CSTP (co-stop): the percentage of time the VM is ready to execute but is waiting for several physical CPUs to become available at once, because it is configured with multiple vCPUs. If this value is 3.00 or greater, the vCPU count should be lowered.
  - %RDY: a value over 5 indicates the VM was ready but could not be scheduled on a CPU (an overloaded ESXi host).
  - Load average: 1.00 indicates that the ESXi host's physical CPUs are fully utilised, 0.50 that they are half utilised, and 2.00 that the system is overloaded.
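
The thresholds above can be applied mechanically to values read off `esxtop`. This is a minimal sketch with hypothetical function names; it assumes the VM row's %RDY is the sum over the VM's vCPU worlds (expanding the VM with `e` shows per-vCPU values), so it divides %RDY by the vCPU count, while %CSTP is compared as given.

```sh
#!/bin/sh
# Rule-of-thumb checks from the slide, applied to numbers read off esxtop.
# Assumption: the VM row's %RDY is summed over its vCPU worlds, so it is
# divided by the vCPU count (must be >= 1) before the 5% comparison.
cpu_verdict() {   # $1 = %RDY (VM row), $2 = %CSTP (VM row), $3 = vCPU count
    awk -v rdy="$1" -v cstp="$2" -v vcpus="$3" 'BEGIN {
        out = ""
        if (cstp + 0 >= 3.0) {
            out = "co-stop: lower the vCPU count"
        }
        if (rdy / vcpus > 5.0) {
            sep = (out == "") ? "" : "; "
            out = out sep "ready: host CPU contention"
        }
        if (out == "") {
            out = "ok"
        }
        print out
    }'
}

# esxtop header "CPU load average": 1.00 = all physical CPUs fully used;
# anything above 1.00 means more demand than the physical CPUs can serve.
load_verdict() {  # $1 = one load-average value
    awk -v la="$1" 'BEGIN {
        if (la + 0 > 1.0) {
            print "overloaded"
        } else {
            printf "%.0f%% utilised\n", la * 100
        }
    }'
}
```

For example, `cpu_verdict 12 0.5 2` reports host CPU contention (6% ready per vCPU), and `load_verdict 0.50` prints `50% utilised`.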

- 13. CPU counters in `esxtop`:
  - PCPU USED(%): a PCPU is a physical CPU if hyperthreading is not enabled, or a logical CPU with hyperthreading. Shows the percentage of CPU usage per PCPU and the percentage of CPU usage averaged over all PCPUs.
  - PCPU UTIL(%): percentage of real time that the PCPU was not idle (raw PCPU utilization).
  - NWLD: number of members in the resource pool or virtual machine of the running world.
  - %USED: percentage of physical CPU core cycles used by the resource pool, virtual machine or world.
  - %SYS: percentage of time spent in the ESXi VMkernel on behalf of the resource pool, virtual machine or world to process interrupts and to perform other system activities.
  - %WAIT: percentage of time the resource pool, virtual machine or world spent in the blocked or busy wait state; includes IDLE time.
  - %VMWAIT: total percentage of time the resource pool or world spent in a blocked state waiting for events.
  - %RDY: percentage of time the resource pool, virtual machine or world was ready to run, but was not provided CPU resources on which to execute.

- 14. ESXi performance, part 2: Memory. ESXi uses a complex resource allocation algorithm to give each VM virtual memory as it needs it. The host's physical memory is often overcommitted, so ESXi must constantly reclaim memory from some VMs and allocate it to others:
  - Transparent page sharing: pages with identical content are shared, so only one copy is kept in physical memory.
  - Ballooning: reclaims memory by artificially increasing the memory pressure inside the VM. A balloon driver inside the guest OS takes free guest memory and makes it available to the ESXi kernel (through a direct channel between the balloon driver and the vmkernel).
  - Hypervisor swapping: ESXi directly swaps out the VM's memory.
  - Memory compression: compresses pages that would otherwise be swapped out.

- 15. Memory counters in `esxtop`:
  - MEM overcommit avg: 0.50 means physical memory is 50% overcommitted, 0.20 means 20%, etc.
  - In the memory view (press m, then field j), MCTLSZ shows the memory reclaimed by the balloon driver and SWCUR shows the current swap usage. If ballooning and swap usage are high and memory is overcommitted, the ESXi host is overloaded: vMotion VMs to other hosts or adjust the VMs' memory limits.
  - MEMSZ: amount of configured guest physical memory.
  - GRANT: amount of guest physical memory granted to the group, i.e. mapped to machine memory. The overhead memory (OVHD) is not included in GRANT; the shared memory (SHRD) is part of GRANT. MEMSZ = GRANT + MCTLSZ + SWCUR + "never touched".
  - SZTGT: amount of machine memory to be allocated, including the VM's overhead memory. It is an internal counter calculated by the ESXi memory scheduler and is not useful when troubleshooting performance issues.
  - TCHD: amount of guest physical memory recently used by the VM, as estimated by the VMkernel; does not include balloon memory.
  - ACTV: percentage of active guest physical memory (current value). The ACTVx counters are estimates of what ACTV will be.
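
The MEMSZ identity above can be rearranged to estimate how much configured memory the guest has never touched, and the overcommit value converts directly to a percentage. A small sketch; the function names are hypothetical and the inputs are MB values copied by hand from `esxtop`.

```sh
#!/bin/sh
# Arithmetic on esxtop memory counters (values in MB, copied from the memory view).
# Rearranged from MEMSZ = GRANT + MCTLSZ + SWCUR + "never touched".
never_touched_mb() {   # $1 = MEMSZ, $2 = GRANT, $3 = MCTLSZ, $4 = SWCUR
    awk -v memsz="$1" -v grant="$2" -v mctl="$3" -v swcur="$4" \
        'BEGIN { print memsz - grant - mctl - swcur }'
}

# "MEM overcommit avg" is a fraction: 0.50 means 50% overcommitted.
overcommit_pct() {     # $1 = one MEM overcommit avg value
    awk -v v="$1" 'BEGIN { printf "%.0f%%\n", v * 100 }'
}
```

For example, a VM with MEMSZ 4096, GRANT 3000, MCTLSZ 512 and SWCUR 256 has 328 MB that was never touched.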

- 16. VMs. As far as a user is concerned, a Virtual Machine is the same as a physical machine except that it is created in software. An operating system and applications are installed on it just like on any other physical device; an operating system running on a Virtual Machine is called a Guest Operating System. The hypervisor sees the Virtual Machine as a number of discrete files that reside in the hypervisor's file system: a configuration file, virtual disk files, an NVRAM settings file and log files, plus other files from time to time. VM files:
  - VMX file – main configuration file
  - VMDK files – disk-related files, including .vmdk, -delta.vmdk and -rdm.vmdk
  - VSWP file – memory overflow (swap) file
  - VMSD file – snapshot details
  - VMSS file – memory contents of a suspended VM
  - VMSN file – snapshot state
  - NVRAM file – BIOS settings
  - Log files
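
To sort out an unfamiliar VM directory, a small shell `case` can label each file with the roles listed above. This is an illustrative helper (the name `vm_file_role` is hypothetical); it only looks at file-name suffixes, so unconventionally named files are reported as `other`.

```sh
#!/bin/sh
# Label a VM directory entry with the role listed on the slide.
# More specific patterns come first because case uses the first match.
vm_file_role() {   # $1 = file name
    case "$1" in
        *-delta.vmdk) echo "snapshot delta disk" ;;
        *-rdm.vmdk)   echo "RDM mapping file" ;;
        *.vmdk)       echo "virtual disk" ;;
        *.vmx)        echo "main configuration" ;;
        *.vswp)       echo "swap file" ;;
        *.vmsd)       echo "snapshot details" ;;
        *.vmsn)       echo "snapshot state" ;;
        *.vmss)       echo "suspended memory" ;;
        *.nvram)      echo "BIOS settings" ;;
        *.log)        echo "log" ;;
        *)            echo "other" ;;
    esac
}
```

Usage, with a hypothetical VM directory: `for f in /vmfs/volumes/datastore1/vm1/*; do echo "${f##*/}: $(vm_file_role "$f")"; done`.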

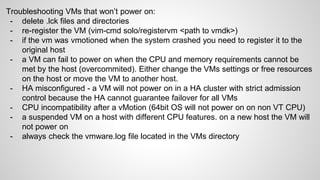

- 17. Troubleshooting VMs that won’t power on:
  - Delete the .lck files and directories.
  - Re-register the VM: `vim-cmd solo/registervm <path to .vmx>`. If the VM was being vMotioned when the system crashed, register it on the original host.
  - A VM can fail to power on when the host cannot meet its CPU and memory requirements (overcommitted host). Change the VM's settings, free resources on the host, or move the VM to another host.
  - HA misconfiguration: in an HA cluster with strict admission control, a VM will not power on if HA cannot guarantee failover for all VMs.
  - CPU incompatibility after a vMotion: a 64-bit guest OS will not power on on a CPU without hardware virtualization (VT) support.
  - A VM suspended on a host with different CPU features will not power on on the new host.
  - Always check the vmware.log file in the VM's directory.
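
The first two steps can be scripted as a dry run so that nothing changes until the output has been reviewed. In this sketch (function names hypothetical), `RUN` defaults to `echo`, so commands are only printed; setting `RUN=` (empty) on the ESXi host runs them for real. Lock files are only listed, never deleted, and the pattern simply assumes lock names contain `.lck`.

```sh
#!/bin/sh
# Dry-run helpers for a VM that will not power on.
# RUN=echo (default) prints commands; RUN= (empty) executes them on the host.
RUN=${RUN-echo}

# List lock files/directories (names containing ".lck") in a VM directory.
# Remove them only once the VM is confirmed powered off on every host.
list_locks() {      # $1 = VM directory
    find "$1" -name '*.lck*'
}

# Re-register the VM from its .vmx file, then list VMs to find its new ID.
reregister() {      # $1 = full path to the VM's .vmx file
    $RUN vim-cmd solo/registervm "$1" &&
    $RUN vim-cmd vmsvc/getallvms
}
```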

- 18. Storage
  - Datastores are accessed via NFS, iSCSI or FC and are shared between all nodes in the cluster.
  - In large environments NFS is mostly used for ISO files that act as CD-ROM drives for VMs.
  - iSCSI is the preferred solution for VMware.
  - Guest OS I/O timeouts: VMware recommends a maximum of 190 seconds (no minimum).
  - Snapshot and cloned LUNs keep the VMFS signature of the source LUN; ESXi detects them as snapshots and will not mount them until they are resignatured (or force-mounted).
  - Multiple smaller LUNs provide better performance than large LUNs.
  - iSCSI can use a hardware or a software initiator.
  - Boot from SAN: the boot LUN should not be a VMFS datastore and should be visible only to the host that boots from it.
  - RDM: set /Disk/UseLunReset = 1 (use a LUN reset instead of a device reset): `esxcli system settings advanced set -o /Disk/UseLunReset -i 1`

- 19. Datastores hold VMs, ISO images and templates. There are two types of datastore: VMFS (VMware's clustered file system) and NFS (Network File System). VMFS datastores also hold RDM mapping files, so that a VM can access raw data on a LUN.
  - VMFS:
    - A clustered file system that allows concurrent access to shared storage, using on-disk locking.
    - Can reside on local, Fibre Channel (FCP) and iSCSI storage; a VMkernel port is required if the VMFS partition uses iSCSI.
    - Can be increased in size in two ways: add an extent (a separate partition on another LUN), or grow an extent (only if there is free space after the extent on the existing LUN).
  - Raw Device Maps (RDMs):
    - A raw disk attached directly to a Virtual Machine, bypassing VMware’s file system.
    - The RDM mapping file is held on a VMFS partition; the VM uses it to locate the physical raw disk used by the RDM.
    - RDMs can be as large as the Virtual Machine’s OS and the underlying storage array can handle.

- 20. NFS
  - `esxcli storage nfs list | add | remove`
  - NFS datastore parameters: `esxcli storage nfs param get -n all`

    | Volume Name | MaxQueueDepth | MaxReadTransferSize | MaxWriteTransferSize |
    |---|---|---|---|
    | nfs_datastore | 4294967295 | 1048576 | 1048576 |

  - Mount a datastore manually: `esxcli storage nfs add -H 172.21.201.233 -s /pools/bigpool/rsync -v nfs_datastore`. Mount errors are logged in /var/log/vmkernel.log.
  - Unmount a datastore manually: `esxcli storage nfs remove -v nfs_datastore`
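
Remounting an NFS datastore is just the two commands above in sequence. This is a dry-run sketch with the same `RUN` convention (`echo` by default, empty to execute); the function name is hypothetical. VMs on the datastore should be powered off or moved first, since removing the mount makes their files unavailable.

```sh
#!/bin/sh
# Dry-run sketch: remount an NFS datastore with the commands from the slide.
# RUN=echo (default) prints commands; RUN= (empty) executes them on the host.
RUN=${RUN-echo}

nfs_remount() {   # $1 = NFS server, $2 = exported share, $3 = datastore name
    $RUN esxcli storage nfs remove -v "$3" &&
    $RUN esxcli storage nfs add -H "$1" -s "$2" -v "$3"
}
```

For example, `nfs_remount 172.21.201.233 /pools/bigpool/rsync nfs_datastore`; if the add fails, look for the error in /var/log/vmkernel.log.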

- 21. SAN
  - `esxcli storage core device list` gives full information about LUNs, including multipathing, VAAI support and UNMAP support.
  - LUNs can hang in an APD (all paths down) or PDL (permanent device loss) state. Recovery means rescanning the HBAs, removing the paths, rescanning again, etc., and can be very difficult.
  - `esxcli storage core device world list` helps identify the worlds that keep a LUN from being unmounted.
  - ATS locking might sometimes need to be disabled. Check the current state first:
    - `vmkfstools -Ph -v1 /vmfs/volumes/VMFS-volume-name` returns, for example: "VMFS-5.54 file system spanning 1 partitions. File system label (if any): ats-test-1. Mode: public ATS-only"
    - `esxcli system settings advanced list -o /VMFS3/HardwareAcceleratedLocking` returns: "Path: /VMFS3/HardwareAcceleratedLocking, Type: integer, Int Value: 1"
  - Disable it per datastore: `vmkfstools --configATSOnly 0 /vmfs/devices/disks/naa.xxxxxxxxxxxxxxxxxxxxxxxxxx`
  - Disable it per ESXi host: `esxcli system settings advanced set -i 0 -o /VMFS3/HardwareAcceleratedLocking`
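
A dry-run sketch of the two ATS settings above, using the same `RUN=echo` convention. The function names are hypothetical, and the device argument must be the real `naa.` identifier of the LUN backing the datastore.

```sh
#!/bin/sh
# Dry-run sketch of the two ways to turn ATS locking off.
# RUN=echo (default) prints commands; RUN= (empty) executes them on the host.
RUN=${RUN-echo}

# Per ESXi host: 0 disables hardware-accelerated (ATS) locking, 1 re-enables it.
set_host_ats() {    # $1 = 0 or 1
    case "$1" in
        0|1) ;;
        *) echo "value must be 0 or 1" >&2; return 1 ;;
    esac
    $RUN esxcli system settings advanced set -i "$1" -o /VMFS3/HardwareAcceleratedLocking
}

# Per datastore: clear the ATS-only flag on the device backing the VMFS volume.
clear_ats_only() {  # $1 = device ID, e.g. naa.<id>
    $RUN vmkfstools --configATSOnly 0 "/vmfs/devices/disks/$1"
}
```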

- 22. Use the CLI to add a new iSCSI LUN:
  1. Get the state of software iSCSI, or enable it, then list the adapters: `esxcli iscsi software get`, `esxcli iscsi software set --enabled=true`, `esxcli iscsi adapter list`
  2. Discover the target: `esxcli iscsi adapter discovery sendtarget add -a 172.16.41.132 -A vmhba33`
  3. Rescan all adapters: `esxcli storage core adapter rescan --all`

  Check that the LUNs have been discovered with `esxcli storage core path list` and `ls -s /dev/disks/`, which shows, for example, `52428800 naa.600144f04f16450000005463a0ac0001`.
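
Steps 1 to 3 can be chained into a single dry-run function. This sketch assumes software iSCSI and takes the portal address and adapter name (`vmhba33` above) as arguments; the adapter name differs per host, so read it from `esxcli iscsi adapter list` first. The function name is hypothetical.

```sh
#!/bin/sh
# Dry-run sketch of steps 1-3: enable software iSCSI, add a send target,
# rescan, and list the paths that were found.
# RUN=echo (default) prints commands; RUN= (empty) executes them on the host.
RUN=${RUN-echo}

iscsi_discover() {  # $1 = target portal address, $2 = software iSCSI adapter
    $RUN esxcli iscsi software set --enabled=true &&
    $RUN esxcli iscsi adapter discovery sendtarget add -a "$1" -A "$2" &&
    $RUN esxcli storage core adapter rescan --all &&
    $RUN esxcli storage core path list
}
```

For example, `iscsi_discover 172.16.41.132 vmhba33` prints the four commands in order.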

- 23. 4. List the supported partition types: `partedUtil showGuids`

  | Partition Type | GUID |
  |---|---|
  | vmfs | AA31E02A400F11DB9590000C2911D1B8 |
  | vmkDiagnostic | 9D27538040AD11DBBF97000C2911D1B8 |
  | vsan | 381CFCCC728811E092EE000C2911D0B2 |
  | VMware Reserved | 9198EFFC31C011DB8F78000C2911D1B8 |
  | Basic Data | EBD0A0A2B9E5443387C068B6B72699C7 |
  | Linux Swap | 0657FD6DA4AB43C484E50933C84B4F4F |
  | Linux Lvm | E6D6D379F50744C2A23C238F2A3DF928 |
  | Linux Raid | A19D880F05FC4D3BA006743F0F84911E |
  | Efi System | C12A7328F81F11D2BA4B00A0C93EC93B |
  | Microsoft Reserved | E3C9E3160B5C4DB8817DF92DF00215AE |
  | Unused Entry | 00000000000000000000000000000000 |

- 24. 5. Get the partition information for the LUN: `partedUtil getptbl /vmfs/devices/disks/naa.600144f04f16450000005463a0ac0001` returns `gpt` and `6527 255 63 104857600` (the label type, then cylinders, heads, sectors per track and total sectors).
  6. Create a new partition: `partedUtil setptbl "/vmfs/devices/disks/naa.600144f04f16450000005463a0ac0001" gpt "1 2048 104857566 AA31E02A400F11DB9590000C2911D1B8 0"`, which prints `gpt` / `0 0 0 0` / `1 2048 104857566 AA31E02A400F11DB9590000C2911D1B8 0`. The fields of the partition specification are, in order:
  - partition number
  - starting sector
  - last sector
  - GUID/type
  - attribute

  Note: the attribute is a flags value rather than a type; 128 (0x80) is commonly used for bootable partitions, and 0 for most other partitions.
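
The last sector in the example is not arbitrary: with 512-byte sectors and a standard GPT, the backup partition table occupies the final 33 sectors, so the last usable sector is the total minus 34 (104857600 - 34 = 104857566). The sketch below computes it and prints the `setptbl` and `vmkfstools` commands for one VMFS partition spanning the LUN. It is a dry run (`RUN=echo`), the function names are hypothetical, and `partedUtil getUsableSectors` on the device should report the same range, so compare before trusting the arithmetic. Note that `setptbl` replaces any existing partition table.

```sh
#!/bin/sh
# Dry-run sketch of steps 6-7: one VMFS partition spanning the whole LUN.
# Assumes 512-byte sectors and a standard GPT (128 entries) whose backup copy
# takes the last 33 sectors, so the last usable sector is total - 34.
RUN=${RUN-echo}
VMFS_GUID=AA31E02A400F11DB9590000C2911D1B8   # "vmfs" type from partedUtil showGuids

gpt_last_usable() {       # $1 = total sectors (last field of getptbl's 2nd line)
    echo $(( $1 - 34 ))
}

vmfs_on_whole_lun() {     # $1 = device ID (naa.<id>), $2 = total sectors, $3 = label
    dev="/vmfs/devices/disks/$1"
    last=$(gpt_last_usable "$2")
    # Partition 1, starting at sector 2048, VMFS type, attribute 0.
    $RUN partedUtil setptbl "$dev" gpt "1 2048 $last $VMFS_GUID 0" &&
    $RUN vmkfstools -C vmfs5 -b 1m -S "$3" "$dev:1"
}
```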

- 25. 7. Create the VMFS5 datastore using vmkfstools: `vmkfstools -C vmfs5 -b 1m -S iscsi_datastore /vmfs/devices/disks/naa.600144f04f16450000005463a0ac0001:1`
  8. Check that the new datastore is mounted with `df -h`:

  | Filesystem | Size | Used | Available | Use% | Mounted on |
  |---|---|---|---|---|---|
  | VMFS-5 | 32.5G | 1.1G | 31.4G | 3% | /vmfs/volumes/datastore1 |
  | VMFS-5 | 49.8G | 972.0M | 48.8G | 2% | /vmfs/volumes/iscsi_datastore |

- 26. SAN latency: run `esxtop` and press u (disk device view):
  - DQLEN: LUN queue depth.
  - CMDS: total number of commands.
  - DAVG (ms): device latency (read/write/average). Sustained values above 10 ms are not good; above 20 ms is bad.
  - KAVG (ms): vmkernel latency; 2-3 ms at most.
  - GAVG (ms): latency seen by the guest OS.
  - The ESXi host is responsible for KAVG. DAVG is measured outside the host (beyond its HBA or NIC), so it depends on the network and storage array performance.
  - For I/O testing, VMware recommends Iometer or hd_speed against a directly attached LUN (RDM).
  - With VAAI, KAVG and DAVG can be unusually high. ESXi 5.5 Update 2 also has a bug where DAVG and KAVG show very high numbers.
  - Press n to show network statistics for the physical NICs.
  - esxtop views: c: CPU, i: interrupt, m: memory, n: network, d: disk adapter, u: disk device, v: disk VM, p: power management.
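
The latency thresholds above can be encoded as a quick check. This sketch takes one DAVG and one KAVG reading in ms and approximates GAVG as their sum; treating KAVG above 3 ms as high is taken from the upper end of the 2-3 ms guidance, and the function name is hypothetical. A single reading is not conclusive, since the notes only flag values that are sustained.

```sh
#!/bin/sh
# Classify one esxtop disk-device reading against the slide's thresholds.
latency_verdict() {   # $1 = DAVG (ms), $2 = KAVG (ms)
    awk -v davg="$1" -v kavg="$2" 'BEGIN {
        davg += 0; kavg += 0
        if (davg > 20)      d = "device: bad"
        else if (davg > 10) d = "device: not good"
        else                d = "device: ok"
        if (kavg > 3) {
            k = "kernel: high"
        } else {
            k = "kernel: ok"
        }
        # GAVG (what the guest sees) is approximately DAVG + KAVG.
        printf "GAVG~%.1f ms, %s, %s\n", davg + kavg, d, k
    }'
}
```

For example, `latency_verdict 25 0.5` prints `GAVG~25.5 ms, device: bad, kernel: ok`, pointing at the fabric or the array rather than the host.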

- 28. VMware Networking. There are two types of virtual switch:
  1. Standard switches: each ESX server is configured individually.
  2. Distributed switches: a consistent network configuration for the Virtual Machines is created across multiple hosts.

  There are two types of vNetwork service:
  1. Connecting Virtual Machines to the physical network.
  2. Connecting VMkernel services, such as NFS, iSCSI and vMotion, to the physical network.

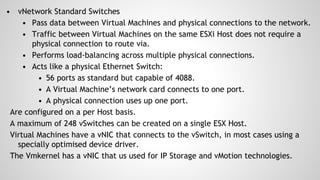

- 30. vNetwork Standard Switches
  - Pass data between Virtual Machines and physical connections to the network.
  - Traffic between Virtual Machines on the same ESXi host does not have to go through a physical connection.
  - Perform load balancing across multiple physical connections.
  - Act like a physical Ethernet switch:
    - 56 ports by default, configurable up to 4088;
    - a Virtual Machine’s network card connects to one port;
    - a physical connection uses up one port.
  - Are configured on a per-host basis; a maximum of 248 vSwitches can be created on a single ESX host.
  - Virtual Machines have a vNIC that connects to the vSwitch, in most cases using a specially optimised device driver.
  - The VMkernel has a vNIC that is used for IP storage and vMotion.
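
A standard switch like the one described above can be created from the CLI. This is a dry-run sketch (`RUN=echo` by default) with a hypothetical function name; the switch, uplink and port group names are placeholders, and the `--vswitch-name`, `--uplink-name` and `--portgroup-name` options are assumed here, so confirm them with `esxcli network vswitch standard --help` on your build.

```sh
#!/bin/sh
# Dry-run sketch: create a standard vSwitch, attach one uplink, add a port group.
# RUN=echo (default) prints commands; RUN= (empty) executes them on the host.
RUN=${RUN-echo}

add_vswitch() {   # $1 = vSwitch name, $2 = physical NIC (vmnicN), $3 = port group name
    $RUN esxcli network vswitch standard add --vswitch-name="$1" &&
    $RUN esxcli network vswitch standard uplink add --uplink-name="$2" --vswitch-name="$1" &&
    $RUN esxcli network vswitch standard portgroup add --portgroup-name="$3" --vswitch-name="$1"
}
```

For example, `add_vswitch vSwitch1 vmnic1 VM-Network` prints the three commands; the switch gets the default port count.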

- 31. vNetwork Distributed Switches
  - Provide similar functions to a Standard Switch, but exist across the datacentre.
  - Are configured at the vCenter Server level, so the configuration is consistent across all ESX hosts that use them.
  - Support VMkernel port groups and VM port groups.
  - Host-specific configuration, such as the VMkernel ports and each host's IP addressing, is still configured at the host level, because it always changes from host to host.

  Benefits of Distributed Switches:
  - Simplified administration.
  - Support for Private VLANs.
  - Networking statistics and policies migrate with a Virtual Machine when it is moved with vMotion, which can aid debugging and troubleshooting.

- 32. Configuring ESXi with VLANs is recommended because it:
  - integrates the host into a pre-existing environment;
  - isolates and secures network traffic;
  - reduces network traffic congestion.

  You can configure VLANs in ESXi using three methods: External Switch Tagging (EST), Virtual Switch Tagging (VST) and Virtual Guest Tagging (VGT).
  - EST: all VLAN tagging of packets is performed on the physical switch. Host network adapters are connected to access ports on the physical switch, and port groups connected to the virtual switch must have their VLAN ID set to 0.
  - VST: all VLAN tagging of packets is performed by the virtual switch before the packets leave the host. Host network adapters must be connected to trunk ports on the physical switch, and port groups connected to the virtual switch must have a VLAN ID between 1 and 4094.
  - VGT: all VLAN tagging is done by the virtual machine. VLAN tags are preserved between the virtual machine networking stack and the external switch when frames pass to and from virtual switches. Host network adapters must be connected to trunk ports on the physical switch, and the VLAN ID of port groups with VGT must be set to 4095.
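
The VLAN ID rules above map directly onto the three tagging modes. The sketch below classifies a port group VLAN ID and, as a dry run, prints the `esxcli` command that would set it. The function names are hypothetical, the `--vlan-id` option of `esxcli network vswitch standard portgroup set` is assumed (check `--help`), and the port group must already exist.

```sh
#!/bin/sh
# Map a port group VLAN ID to its tagging mode, then (dry run) set it.
# RUN=echo (default) prints commands; RUN= (empty) executes them on the host.
RUN=${RUN-echo}

vlan_mode() {     # $1 = VLAN ID
    case "$1" in
        0)    echo EST ;;   # tagging done by the physical switch
        4095) echo VGT ;;   # tags passed through to the guest
        *)
            # 1-4094: the virtual switch tags; anything else is invalid.
            if [ "$1" -ge 1 ] 2>/dev/null && [ "$1" -le 4094 ]; then
                echo VST
            else
                echo "invalid VLAN ID: $1" >&2
                return 1
            fi ;;
    esac
}

set_pg_vlan() {   # $1 = port group name, $2 = VLAN ID
    vlan_mode "$2" >/dev/null || return 1
    $RUN esxcli network vswitch standard portgroup set --portgroup-name="$1" --vlan-id="$2"
}
```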