Ad

Etl Overview (Extract, Transform, And Load)

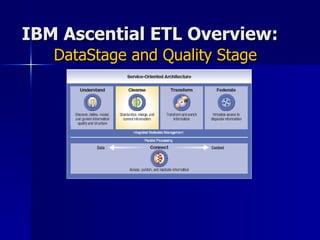

- 1. IBM Ascential ETL Overview: DataStage and Quality Stage

- 2. More than ever, businesses today need to understand their operations, customers, suppliers, partners, employees, and stockholders. They need to know what is happening with the business, analyze their operations, reach to market conditions, make the right decisions to drive revenue growth, increase profits and improve productivity and efficiency.

- 3. CIOs are responding to their organizations’ strategic needs by developing IT initiatives that align corporate data with business objectives. These initiatives include: Business intelligence Master data management Business transformation Infrastructure rationalization Risk and compliance

- 4. Connect to any data or content, wherever it resides Understand and analyze information, including relationships and lineage Cleanse information to ensure its quality and consistency Transform information to provide enrichment and tailoring for its specific purposes Federate information to make it accessible to people, processes and applications IBM WebSphere Information Integration platform enables businesses to perform five key integration functions :

- 5. Data Analysis : Define, annotate, and report on fields of business data. Data Quality : Standardize source data fields Match records across or within data sources, remove duplicate data Survive records from the best information across sources Data Transformation & Movement : Move data and transform it to meet the requirements of its target systems Integrate data and content Provide views as if from a single source while maintaining source integrity Software: Profile stage in QualityStage Software: QualityStage Software: DataStage Software: N/A (not used at NCEN) Software: QualityStage Software: DataStage This presentation will deal with ETL QualityStage and DataStage .

- 6. QualityStage QualityStage is used to cleanse and enrich data to meet business needs and data quality management standards. Data preparation (often referred to as data cleansing ) is critical to the success of an integration project. QualityStage provides a set of integrated modules for accomplishing data reengineering tasks, such as: Investigating Standardizing Designing and running matches Determining what data records survive = data cleansing

- 7. QualityStage Main QS stages used in the BRM project: Investigate – gives you complete visibility into the actual condition of data (not used in the BRM project because the users really know their data) Standardize – allows you to reformat data from multiple systems to ensure that each data type has the correct and consistent content and format Match – helps to ensure data integrity by linking records from one or more data sources that correspond to the same real-world entity. Matching can be used to identify duplicate entities resulting from data entry variations or account-oriented business practices Survive – helps to ensure that the best available data survives and is correctly prepared for the target destination

- 8. QualityStage Investigate Standardize Match Survive Word Investigation parses freeform fields into individual tokens, which are analyzed to create patterns. In addition, Word Investigation provides frequency counts on the tokens.

- 9. QualityStage Investigate Standardize Match Survive For example , to create the patterns in address data: Word Investigation uses a set of rules for classifying personal names, business names and addresses . Word Investigation provides prebuilt rule sets for investigating patterns on names and postal addresses for a number of different countries . For the United States, the address data would include: USPREP (parses name, address and area if data not previously formatted) USNAME (for individual and organization names) USADDR (for street and mailing addresses) USAREA (for city, state, ZIP code and so on)

- 10. QualityStage Investigate Standardize Match Survive Field parsing breaks the address into individual tokens of “123”, “St.”, “Virginia” and “St.” Example : The test field “ 123 St. Virginia St. ” would be analyzed in the following way: Lexical analysis determines the business significance of each piece 123 = Number St. = Street type Virginia = Alpha St. = Street type Context analysis identifies the variations data structures and content as “123 St. Virginia St.” 123 = House number St. Virginia = Street address St. = Street type

- 11. QualityStage Investigate Standardize Match Survive The Standardize stage allows you to reformat data from multiple systems to ensure that each data type has the correct and consistent content and format.

- 12. QualityStage Investigate Standardize Match Survive Standardization is used to invoke specific standardization Rule Sets and standardize one or more fields using that Rule Set. Standardization is used to invoke specific standardization Rule Sets and standardize one or more fields using that Rule Set. For example, a Rule Set can be used so that “ Boulevard ” will always be “ Blvd ” Standardization is used to invoke specific standardization Rule Sets and standardize one or more fields using that Rule Set. For example, a Rule Set can be used so that “ Boulevard ” will always be “ Blvd ”, not “ Boulevard ”, “ Blv .”, “ Boulev ”, or some other variation. The USNAME rule set is used to standardize First Name, Middle Name, Last Name The USADDR rule set is used to standardize Address data The USAREA rules set is used to standardize City, State, Zip Code The VTAXID rule set is used to validate Social Security Number The VEMAIL rule set is used to validate Email Address The VPHONE rule set is used to validate Work Phone Number The list below shows some of the more commonly-used Rule Sets .

- 13. QualityStage Investigate Standardize Match Survive Data matching is used to find records in a single data source or independent data sources Data matching is used to find records in a single data source or independent data sources that refer to the same entity Data matching is used to find records in a single data source or independent data sources that refer to the same entity (such as a person, organization, location, product, or material) regardless of the availability of a predetermined key.

- 14. Blocking Matching QualityStage Matching Stage basically consists of two steps: QualityStage Investigate Standardize Match Survive

- 15. XA = master record (during the first pass, this was the first record found to match with another record) DA = duplicates CP = clerical procedure (records with a weighting within a set cutoff range) RA = residuals (those records that remain isolated) Operations in the Matching module: 2. Processing Files 1. Unduplication Match Fields Suspect Match Values by Match Pass Vartypes Cutoff Weights 1. Unduplication (group records into sets having similar attributes) QualityStage Investigate Standardize Match Survive

- 16. QualityStage Investigate Standardize Match Survive Survivorship is used to create a ‘best record’ from all available information about an entity (such as a person, location, material, etc.). Survivorship and formatting ensure that the best available data survives and is correctly prepared for the target destination. Using the rules setup screen, it implements business and mapping rules, creating the necessary output structures for the target application and identifying fields that do not conform to load standards.

- 17. QualityStage Investigate Standardize Match Survive Supplies missing values in one record with values from other records on the same entity Populates missing values in one record with values from corresponding records that have been identified as a group in the matching stage Enriches existing data with external data The Survive stage does the following:

- 18. DataStage = data transformation

- 19. DataStage In its simplest form, DataStage performs data transformation and movement from source systems to target systems in batch and in real time. The data sources may include indexed files, sequential files, relational databases, archives, external data sources, enterprise applications and message queues.

- 20. DataStage DataStage Administrator DataStage Manager DataStage Designer DataStage Director The DataStage client components are:

- 21. Specify general server defaults Add and delete projects Set project properties Access DataStage Repository by command interface DataStage Administrator Manager Designer Director Use DataStage Administrator to:

- 22. DataStage Administrator Manager Designer Director

- 23. DataStage Administrator Manager Designer Director DataStage Manager is the primary interface to the DataStage repository. In addition to table and file layouts, it displays the routines, transforms, and jobs that are defines in the project. It also allows us to move or copy ETL jobs from one project to another.

- 24. Specify how the data is extracted Specify data transformations Decode (denormalize) data going into the data mart using referenced lookups Aggregate data Split data into multiple outputs on the basis of defined constraints DataStage Administrator Manager Designer Director Use DataStage Designer to:

- 25. DataStage Administrator Manager Designer Director Use DataStage Director to run, schedule, and monitor your DataStage jobs. You can also gather statistics as the job runs. Also used for looking at logs for debugging purposes.

- 26. Set up a project – Before you can create any DataStage jobs, you must set up your project by entering information about your data. Create a job – When a DataStage project is installed, it is empty and you must create the jobs you need in DataStage Designer. Define Table Definitions Develop the job – Jobs are designed and developed using the Designer. Each data source, the data warehouse, and each processing step is represented by a stage in the job design. The stages are linked together to show the flow of data. DataStage: Getting Started

- 27. DataStage Designer Developing a job

- 28. DataStage Designer Developing a job

- 29. DataStage Designer Input Stage

- 30. DataStage Designer Transformer Stage The Transformer stage performs any data conversion required before the data is output to another stage in the job design. After you are done, compile and run the job.

- 35. T10 takes .txt files from the Pre-event folder and transforms them into rows. Straight_moves moves the files into the stg_file_contact table, stg_file_broker table, or the reject file. If it says “lead source”, it will go to the reject file (constraint). If it does not say “lead source”, it will evaluate the entire row to determine whether it will go to the contact or broker table (derivation). DataStage An example : Preventing the header row from inserting into MDM_Contact and MDM_Broker

- 36. Questions?

- 37. Thank you for attending

Editor's Notes

- #4: Master data management – Reliably create and maintain consistent, complete, contextual and accurate business information about entities such as customers and products across multiple systems Business intelligence – Take the guesswork out of important decisions by gathering, storing, analyzing, and providing access to diverse enterprise information. Business transformation – Isolate users and applications from the underlying information completely to enable On Demand Business. Infrastructure rationalization – Quickly and accurately streamline corporate information by repurposing and reconciling data whenever it is required Risk and compliance - Deliver a dependable information management foundation to any quality control, corporate reporting visibility and data audit infrastructure.

- #22: DS Administrator is used for administration tasks such as setting up users, logging, creating and moving projects and setting up purging criteria

- #23: Permissions - Assign user categories to operating system user groups or enable operators to view all the details of an event in a job log file. Tracing – Enable or disable tracing on the server. Schedule – Set up a user name and password to use for running scheduled DataStage jobs. Mainframe – Set mainframe job properties and the default platform type. Turntables – Configure cache settings for Hashed File stages. Parallel – Set parallel job properties and defaults for date/time and number formats. Sequence – Set compilation defaults for job sequences. Remote – If you have specified that parallel jobs in the project are to be deployed on a USS system, this page allows you to specify deployment mode and USS machine details.

- #25: DataStage Designer – used to create DataStage applications (known as jobs). Each job specifies the data sources, the transformations required, and the destination of the data. Jobs are compiled to create executables that are scheduled by the Director and run on the server.

- #26: DataStage Director – used to validate, schedule, run, and monitor DataStage job sequences.

- #36: Constraint - Prevents data from getting into the processing piece of the ETL job (reject) Derivation - Logic at the field level (example: is it “open”? (“click through”))