Exploring Generative AI with Diffusion Models

Generative AI models, such as GANs and VAEs, can create realistic and diverse synthetic data for applications ranging from image and speech synthesis to drug discovery and language modeling. However, training these models can be challenging: GANs in particular are prone to training instability and mode collapse. In this workshop, we will explore how Stable Diffusion, a latent diffusion model that runs the iterative denoising process of diffusion models in a compressed latent space, can address these challenges and improve the quality and stability of generative models. We will use a pre-configured machine learning development environment to run hands-on experiments and train Stable Diffusion models on different datasets. By the end of the session, attendees will have a better understanding of generative AI and Stable Diffusion, and of how to build and deploy stable generative models for real-world use cases.

- 1. Exploring GenAI with Diffusion Model

- 2. Agenda: Generative AI, Diffusion Models, Building GenAI, Prompt Engineering

- 3. About me: I'm Bismillah Kani, Staff AI/ML Scientist at Waygate Technologies; AWS Community Builder (Machine Learning); AWS Certified SAA and MLS. LinkedIn: /in/bismillah-kani; GitHub: https://github.com/bismillahkani

- 4. Generative AI. Generative AI refers to a type of artificial intelligence that can generate new content and concepts, such as stories, conversations, videos, images, and music. It relies on machine learning models, specifically foundation models (FMs), which are trained on enormous datasets. Applications include text generation, image generation, code generation, and virtual assistants.

- 5. a brown leather jacket

- 6. Generative Image Models
  - 2014-2018: VAE, VQ-VAE
  - 2014-2018: GAN, Pix2Pix, CycleGAN, StyleGAN
  - 2020-2021: Vision Transformers, CLIP, DALL-E
  - 2020-2022: DDPM, Latent Diffusion
  - 2022: DALL-E 2, Imagen, Midjourney
  - 2022: Stable Diffusion, DreamBooth, InstructPix2Pix
  - 2023: ControlNet, DeepFloyd IF, GPT-4

  These advancements pave the way for an exciting future in the field of generative AI, promising further innovations and breakthroughs.

- 7. Generative Image Models. Image credit: https://lilianweng.github.io/

- 8. Diffusion Models. Diffusion models can be viewed as iterative denoising autoencoders that progressively refine an image into a final, clean output. Generation starts from pure random noise and proceeds through many refinement steps; at each step, the model predicts how to turn the current noisy input into a slightly less noisy version (in DDPM, by predicting the noise component to remove). The model learns this by training on images that have been corrupted with gradually increasing amounts of Gaussian noise. Image credit: https://cvpr2022-tutorial-diffusion-models.github.io/
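The noising and denoising steps described above can be sketched in a few lines of NumPy. This is a minimal illustration of DDPM-style diffusion, assuming the linear noise schedule from the DDPM paper and the variance choice sigma_t^2 = beta_t; the function names are hypothetical, and `predict_noise` stands in for a trained U-Net, which is not included here.

```python
import numpy as np

def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Linear noise schedule beta_1..beta_T (DDPM used 1e-4 to 0.02 over T = 1000)."""
    return np.linspace(beta_start, beta_end, num_steps)

def forward_noise(x0, t, alpha_bars, rng):
    """Forward process: sample x_t ~ N(sqrt(abar_t) * x0, (1 - abar_t) * I) in closed form."""
    eps = rng.standard_normal(x0.shape)
    xt = np.sqrt(alpha_bars[t]) * x0 + np.sqrt(1.0 - alpha_bars[t]) * eps
    return xt, eps

def reverse_step(xt, t, eps_pred, betas, alphas, alpha_bars, rng):
    """Reverse process: one DDPM sampling step x_t -> x_{t-1} given the predicted noise."""
    mean = (xt - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps_pred) / np.sqrt(alphas[t])
    if t == 0:
        return mean  # final step: no fresh noise is added
    return mean + np.sqrt(betas[t]) * rng.standard_normal(xt.shape)  # sigma_t^2 = beta_t

def sample(predict_noise, shape, betas, rng):
    """Start from pure Gaussian noise x_T and apply reverse steps down to x_0."""
    alphas = 1.0 - betas
    alpha_bars = np.cumprod(alphas)
    x = rng.standard_normal(shape)
    for t in reversed(range(len(betas))):
        x = reverse_step(x, t, predict_noise(x, t), betas, alphas, alpha_bars, rng)
    return x

T = 1000
betas = linear_beta_schedule(T)
alphas = 1.0 - betas
alpha_bars = np.cumprod(alphas)
rng = np.random.default_rng(0)

# Sanity check with an "oracle" that knows the true noise: at t = 0 the reverse mean recovers x0.
x0 = rng.uniform(-1.0, 1.0, size=(8, 8))  # toy "image" scaled to [-1, 1]
xt, eps = forward_noise(x0, 0, alpha_bars, rng)
x0_rec = reverse_step(xt, 0, eps, betas, alphas, alpha_bars, rng)

# Placeholder predictor (always zero noise): runs the loop but does not produce a meaningful image.
x_gen = sample(lambda x, t: np.zeros_like(x), (8, 8), betas, rng)
```

After T steps the cumulative signal factor `alpha_bars[-1]` is close to zero, which is why sampling can start from pure noise; a trained noise predictor is what turns that loop into actual image generation.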

- 9. Stable Diffusion. Diffusion models can struggle to generate high-resolution images because the computational cost of running a U-Net denoiser grows with image size. One solution is to perform the diffusion process in a latent space, using an encoder-decoder (autoencoder) to convert between pixel space and latent space. By adding text conditioning, the model generates images that match a given text prompt instead of arbitrary images. Stable Diffusion combines these techniques; it achieved state-of-the-art results at its release and can run on consumer GPUs to produce high-quality images. It was trained on a curated subset of LAION-5B, called LAION-Aesthetics, selected for aesthetically pleasing images.
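To make the savings of latent-space diffusion concrete, the arithmetic below assumes the Stable Diffusion v1 autoencoder, which downsamples by a factor of 8 and produces 4 latent channels, so a 512x512 RGB image becomes a 4x64x64 latent. The helper name `latent_shape` is hypothetical.

```python
import math

def latent_shape(height, width, downsample=8, latent_channels=4):
    """(C, H, W) of the latent produced by an autoencoder with the given downsampling factor."""
    if height % downsample or width % downsample:
        raise ValueError("height and width must be divisible by the downsampling factor")
    return (latent_channels, height // downsample, width // downsample)

pixel_shape = (3, 512, 512)                 # RGB image in pixel space
z_shape = latent_shape(512, 512)            # latent the U-Net actually denoises
compression = math.prod(pixel_shape) / math.prod(z_shape)          # total values
spatial_reduction = (512 * 512) / (z_shape[1] * z_shape[2])         # grid positions
print(z_shape, compression, spatial_reduction)
```

The U-Net therefore works on 48x fewer values and 64x fewer spatial positions than it would in pixel space, which is what makes generation feasible on consumer GPUs.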

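- 10. Stable Diffusion
  1. The encoder compresses the input image into a compact latent representation z (a downsampled, multi-channel spatial tensor rather than a flat vector).
  2. The diffusion (noising) and denoising process is applied to the latent z.
  3. Conditioning is added via a text encoder and cross-attention.
  4. The decoder reconstructs the image from the latent z.

  For text-to-image generation, sampling starts from random noise in latent space, so only the decoder is needed to turn the denoised latent back into pixels.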
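Step 3 is the part that ties the prompt to the image. Below is a minimal NumPy sketch of scaled dot-product cross-attention, where queries come from the image latent and keys and values come from the text encoder output. The random projection matrices are stand-ins for learned weights, and in the real Stable Diffusion U-Net the queries come from intermediate feature maps rather than the raw 4-channel latent; the 77x768 text embedding shape assumes the CLIP ViT-L/14 text encoder used by Stable Diffusion v1.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attention(latent_tokens, text_tokens, w_q, w_k, w_v):
    """Queries from image positions; keys/values from text tokens."""
    q = latent_tokens @ w_q                      # (N_latent, d)
    k = text_tokens @ w_k                        # (N_text, d)
    v = text_tokens @ w_v                        # (N_text, d)
    scores = q @ k.T / np.sqrt(q.shape[-1])      # (N_latent, N_text)
    weights = softmax(scores, axis=-1)           # each image position attends over text tokens
    return weights @ v, weights                  # (N_latent, d), (N_latent, N_text)

rng = np.random.default_rng(0)
c, h, w = 4, 8, 8              # toy latent: 4 channels on an 8x8 grid
n_text, d_text, d = 77, 768, 64
z = rng.standard_normal((c, h, w))
latent_tokens = z.reshape(c, h * w).T                   # flatten the grid -> (64, 4)
text_tokens = rng.standard_normal((n_text, d_text))     # stand-in for text-encoder embeddings
w_q = rng.standard_normal((c, d)) * 0.1                 # stand-ins for learned projections
w_k = rng.standard_normal((d_text, d)) * 0.1
w_v = rng.standard_normal((d_text, d)) * 0.1
out, attn = cross_attention(latent_tokens, text_tokens, w_q, w_k, w_v)
```

Each row of `attn` is a probability distribution over the prompt tokens, so every spatial position in the latent pulls in a weighted mix of text information.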

- 11. Prompt Engineering. Prompt engineering is the process of structuring words so that they can be interpreted and understood by a text-to-image model. Think of it as the language you need to speak in order to tell an AI model what to draw.
  - SUBJECT: the desired content or elements to be depicted in the image
  - MEDIUM: the material or medium used to create the artwork
  - STYLE: the artistic style or aesthetic approach desired for the image
  - ARTIST: a reference to the style of a specific artist as a point of inspiration
  - RESOLUTION: the level of sharpness and detail in the image
  - COLOR: control over the overall color palette of the image
  - LIGHTING: has a substantial impact on the visual appearance and ambiance of the image
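The prompt components listed above can be assembled programmatically. This is a minimal sketch assuming the common convention of a comma-separated keyword prompt; the `PromptSpec` class, the "in the style of" phrasing, and the example values are all hypothetical, and no specific model is required to accept prompts in exactly this form.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class PromptSpec:
    """Hypothetical container for the prompt components: subject plus optional modifiers."""
    subject: str
    medium: Optional[str] = None
    style: Optional[str] = None
    artist: Optional[str] = None
    resolution: Optional[str] = None
    color: Optional[str] = None
    lighting: Optional[str] = None

    def to_prompt(self) -> str:
        # Keep the subject first, then append whichever modifiers are set, in a fixed order.
        parts = [
            self.subject,
            self.medium,
            self.style,
            f"in the style of {self.artist}" if self.artist else None,
            self.resolution,
            self.color,
            self.lighting,
        ]
        return ", ".join(p for p in parts if p)

spec = PromptSpec(
    subject="a brown leather jacket",
    medium="studio product photograph",
    style="minimalist",
    resolution="highly detailed",
    color="warm earth tones",
    lighting="soft natural lighting",
)
prompt = spec.to_prompt()
# prompt == "a brown leather jacket, studio product photograph, minimalist,
#            highly detailed, warm earth tones, soft natural lighting"
```

Keeping the components as separate fields makes it easy to vary one dimension (for example, lighting) while holding the rest of the prompt fixed.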

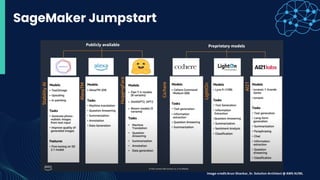

- 12. SageMaker JumpStart. JumpStart is the machine learning (ML) hub of SageMaker, providing hundreds of built-in algorithms, pre-trained models, and end-to-end solution templates to help you get started with ML quickly. For a large model such as Stable Diffusion, JumpStart simplifies deployment on Amazon SageMaker by offering pre-tested, ready-to-use scripts that can be launched with a single click from the Studio UI or with minimal code through the JumpStart APIs.

- 13. SageMaker JumpStart. Image credit: Arun Shankar, Sr. Solution Architect @ AWS AI/ML

- 14. Demo App. The web application is built with Streamlit, a Python library for developing and sharing custom web apps for machine learning and data science. The application is hosted on Amazon Elastic Container Service (Amazon ECS) with AWS Fargate, which runs containers without requiring you to manage servers, clusters, or virtual machines. The generative AI model endpoints are deployed through SageMaker JumpStart from container images stored in Amazon Elastic Container Registry (Amazon ECR). The web application communicates with the model endpoints through Amazon API Gateway and AWS Lambda functions, as depicted in the architecture diagram.
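The API Gateway to Lambda to SageMaker hop can be sketched as a small Lambda handler that forwards a prompt to the model endpoint with boto3's `sagemaker-runtime` `invoke_endpoint` call. This is a sketch under several assumptions: the `ENDPOINT_NAME` environment variable, the payload keys in `build_payload`, and the content types are hypothetical and must be checked against the deployed JumpStart model's documentation. The runtime client is injected so the handler can be exercised locally with a stub instead of a live endpoint.

```python
import io
import json
import os

def build_payload(prompt, num_inference_steps=30, guidance_scale=7.5):
    # Hypothetical request schema: confirm the keys the deployed JumpStart model accepts.
    return {"prompt": prompt, "num_inference_steps": num_inference_steps,
            "guidance_scale": guidance_scale}

def make_handler(runtime_client, endpoint_name):
    """Build an API Gateway (proxy integration) handler bound to one SageMaker endpoint."""
    def handler(event, context):
        body = json.loads(event.get("body") or "{}")
        prompt = body.get("prompt")
        if not prompt:
            return {"statusCode": 400, "body": json.dumps({"error": "missing 'prompt'"})}
        response = runtime_client.invoke_endpoint(
            EndpointName=endpoint_name,
            ContentType="application/json",
            Accept="application/json",  # accepted types depend on the model container
            Body=json.dumps(build_payload(prompt)),
        )
        result = json.loads(response["Body"].read())
        return {"statusCode": 200, "headers": {"Content-Type": "application/json"},
                "body": json.dumps(result)}
    return handler

_handler = None

def lambda_handler(event, context):
    """Lambda entry point: creates the real boto3 client lazily on first invocation."""
    global _handler
    if _handler is None:
        import boto3  # bundled with the AWS Lambda Python runtime
        _handler = make_handler(boto3.client("sagemaker-runtime"), os.environ["ENDPOINT_NAME"])
    return _handler(event, context)

class StubRuntimeClient:
    """Local stand-in for the sagemaker-runtime client; records calls, returns an empty result."""
    def __init__(self):
        self.calls = []

    def invoke_endpoint(self, **kwargs):
        self.calls.append(kwargs)
        return {"Body": io.BytesIO(json.dumps({"generated_images": []}).encode())}

stub = StubRuntimeClient()
local_handler = make_handler(stub, "sd-endpoint")
resp = local_handler({"body": json.dumps({"prompt": "a brown leather jacket"})}, None)
```

Injecting the client keeps the request-shaping logic testable without AWS credentials, while `lambda_handler` wires in the real client only when running inside Lambda.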

- 15. Thank you