Exploring Optimization in Vowpal Wabbit

- 1. Exploring Optimization in Vowpal Wabbit -Shiladitya Sen

- 2. Vowpal Wabbit • Online • Open Source • Machine Learning Library • Has achieved record-breaking speed by implementation of • Parallel Processing • Caching • Hashing,etc. • A “true” library: offers a wide range of machine learning and optimization algorithms

- 3. Machine Learning Models • Linear Regressor ( --loss_function squared) • Logistic Regressor (--loss_function logistic) • SVM (--loss_function hinge) • Neural Networks ( --nn <arg> ) • Matrix Factorization • Latent Dirichlet Allocation ( --lda <arg> ) • Active Learning ( --active_learning)

- 4. Regularization • L1 Regularization ( --l1 <arg> ) • L2 Regularization ( --l2 <arg> )

- 5. Optimization Algorithms • Online Gradient Descent ( default ) • Conjugate Gradient ( --conjugate_gradient ) • L-BFGS ( --bfgs )

- 9. It can be proved from the definition of convex functions that such a function can have no maxima. In other words… Might have at most one minima i.e. Local minima is global minima Loss functions which are convex help in optimization for Machine Learning

- 10. Optimization Algorithm I : Online Gradient Descent

- 11. What the batch implementation of Gradient Descent (GD) does

- 12. How does Batch-version of GD work? • Expresses total loss J as a function of a set of parameters : x • • Takes a calculated step α in that direction to reach a new point, with new co-ordinate values of x descent.steepestofdirectionisJ(x)-So, ascent.steepestofdirectiontheasJ(x)Calculates achieved.istolerancerequireduntilcontinuesThis )( :Algorithm 1 tttt xJxx

- 13. What is the online implementation of GD?

- 14. How does online GD work? 1. Takes a point from the dataset : 2. Using existing hypothesis, predicts value 3. True value is revealed 4. Calculates error J as a function of parameters x for point 5. 6. 7. 8. Moves onto next point tp tp )(Evaluates txJ )( :descentsteepestofdirectionin thestepaTakes txJ )(:asparametersUpdates 1 ttt xJxx 1tp

- 15. Looking Deeper into Online GD • Essentially calculates error function J(x) independently for each point, as opposed to calculating J(x) as sum of all errors as in Batch implementation (Offline) GD • To achieve accuracy, Online GD takes multiple passes through the dataset (Continued…)

- 16. Still deeper… • So that a convergence is reached, the step η in each pass is reduced. In VW, this is implemented as: • Cache file used for multiple passes (-c) 10][late]learning_r-[--l- 0.5][p-initial_p- 1][i-initial_t- 1][drning_rate-decay_lea- )( . ' ' 1 p ee e pn ii ild e

- 17. So why Online GD? • It takes less space… • And my system needs its space!

- 18. Optimization Algorithm II: Method of Conjugate Gradients

- 19. What is wrong with Gradient Descent? •Often takes steps in the same direction •Convergence Issues

- 21. The need for Conjugate Gradients: Wouldn’t it be wonderful if we did not need to take steps in the same direction to minimize error in that direction? This is where Conjugate Gradient comes in…

- 22. Method of Orthogonal Directions • In an (n+1) dimensional vector space where J is defined with n parameters, at most n linearly independent directions for parameters exist • Error function may have a component in at most n linearly independent (orthogonal) directions • Intended: A step in each of these directions i.e. at most n steps to minimize the error • Not solvable for orthogonal directions

- 23. Conjugate Directions: 0:)respect towithConjugate( 0:Orthogonal directionssearchared,d ji j T i j T i AddA dd

- 24. How do we get the conjugate directions? • We first choose n mutually orthogonal directions: • We calculate as: nuuu ,...,, 21 id .calculateto,...,,toorthogonal- notarewhichofcomponentsanyoutSubtracts k21 1 1 n i i k kkii dddA u dud

- 25. So what is Method of Conjugate Gradients? • If we set to , the gradient in the i-th step, we have the Method of Conjugate Gradients. • The step size in the direction is found by an exact line search. iu ir jitindependanlinearlyare, ji rr id

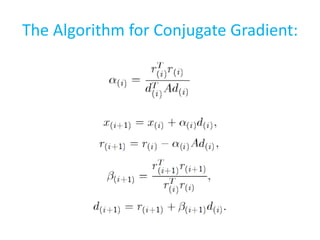

- 26. The Algorithm for Conjugate Gradient:

- 27. Requirement for Preconditioning: • Round-off errors – leads to slight deviations from Conjugate Directions • As a result, Conjugate Gradient is implemented iteratively • To minimize number of iterations, preconditioning is done on the vector space

- 28. What is Pre-conditioning? • The vector space is modified by multiplying a matrix such that M is a symmetric, positive-definite matrix. • This leads to a better clustering of the eigenvectors and a faster convergence. -1 M

- 30. Why think linearly? Newton’s Method proposes a step along a non- linear path as opposed to a linear one as in GD and CG.. Leads to a faster convergence…

- 31. Newton’s Method: )).(()( 2 1 )).(()()( :)(ofexpansionseriessTaylor'order2nd 2 xxxJxx xxxJxJxxJ xxJ T :getwe,respect towithMinimizing x )()]([ 12 xJxJx )()]([ :formiterativeIn 12 1 xJxJxx nn

- 32. What is this BFGS Algorithm? • • Named after Broyden-Fletcher-Goldfarb- Shanno • Maintains an approximate matrix B and updates B upon each iteration BFGS.iswhichamongpopularmost Methods,Newton-toQuasiled )]([][gcalculatininonsComplicati 121 xJHB

- 33. BFGS Algorithm:

- 34. Memory is a limited asset • In Vowpal Wabbit, the version of BFGS implemented is L-BFGS • In L-BFGS, all the previous updates to B are not stored in memory • At a particular iteration i, only the last m updates are stored and used to make new update • Also, the step size η in each step is calculated by an inexact line search following Wolfe’s Conditions.

![Still deeper…

• So that a convergence is reached, the step η in

each pass is reduced. In VW, this is

implemented as:

• Cache file used for multiple passes (-c)

10][late]learning_r-[--l-

0.5][p-initial_p-

1][i-initial_t-

1][drning_rate-decay_lea-

)(

.

'

'

1

p

ee

e

pn

ii

ild

e](https://image.slidesharecdn.com/28a80d43-f001-4232-b5b4-0fd2bf6dd25c-160112040201/85/Exploring-Optimization-in-Vowpal-Wabbit-16-320.jpg)

()]([

:formiterativeIn

12

1 xJxJxx nn

](https://image.slidesharecdn.com/28a80d43-f001-4232-b5b4-0fd2bf6dd25c-160112040201/85/Exploring-Optimization-in-Vowpal-Wabbit-31-320.jpg)