Extreme Apache Spark: how in 3 months we created a pipeline that can process 2.5 billion rows a day

- 1. 2 How to create a Pipeline capable of processing 2.5 Billion records/day in just 3 Months Josef Habdank Lead Data Scientist & Data Platform Architect at Infare Solutions @jahabdank [email protected] linkedin.com/in/jahabdank

- 2. • Using Spark currently is the most fundamental skill in BigData and DataScience world • Main reason: helps to solve most of BigData problems: processing, transformation, abstraction and Machine Learning You are in the right place! Spark currently is de facto standard for BigData Google Trends for “Apache Spark” 2013-2015 was insane Pretty much all serious BigData players are using Spark Good old‘n’slow Hadoop MapReduce days

- 3. What is this talk about? Presentation consists of 4 parts: • Quick intro to Spark internals and optimization • N-billion rows/day system architecture • How exactly we did what we did • Focus on Spark’s Performance, getting maximum bang for the buck • Data Warehouse and Messaging • (optional) How to deploy Spark so it does not backfire

- 4. The Story • “Hey guys, we might land a new cool project” • “It might be 5-10x as much data as we have so far” • “In 1+year it probably will be much more than 10x” • “Oh, and can you do it in 6 months?” “Lets do that in 3 months!”

- 5. The Result • 5 low-cost servers (8core, 64gb RAM) • Located on Amazon with Hosted Apache Spark • a fraction of cost that any other technology would cost • Initial max capacity load tested on 2.5bn/day • Currently improved to max capacity 6-8bn/day, ~250- 350mil/hour (with no extra hardware required) • As Spark scales with hardware, we could do 15Bn with 10-15 machines • Delivered in 3 months, in production for 1.5 year now

- 6. Developing code for distributed systems

- 7. Normal workflow • Code locally on your machine • Compile and assemble • Upload JAR + make sure deps are present on all nodes • Run job and test if work, spend time looking for results Notebook workflow • Write code online • Shift+Enter to compile (on master), send to cluster nodes, run and show results in browser + Can support Git lifecycle + Allows mixing Python/Scala/Sql (which is awesome ) Code in Notebooks, they are awesome • Development on Cluster systems is by nature not easy • Best you can do locally is to know that code compiles, unit tests pass and if the code runs on some sample data • You do not actually know if it works until you test-run on the PreProd/Dev cluster as the data defines the correctness, not syntax

- 8. Traps of Notebooks • Code is compiled on the fly • When the chunk of code is executed as a Spark Job (on a whole cluster) all the dependent objects will be serialized and packaged with the job • Sometimes the dependency structure is very non-trivial and the Notebook will start serializing huge amounts of data (completely silently, and attaching it to the Job) • PRO TIP: have as few global variables as possible, if needed use objects

- 9. Traps of Notebooks Code as distributed JAR vs Code as lambda

- 10. Traps of Notebooks Code as distributed JAR vs Code as lambda This is compiled to JAR and distributed and bootstrapped to JVMs across cluster (when initializing JAR it will open the connection) This is serialized on master and attached to the job (connection object will fail to work after deserialization)

- 11. Writing high throughput code in Spark

- 12. Spark’s core: the collections • Spark is just a processing framework • Works on distributed collections: • Collections are partitioned • The number of partitions is defined from the source • Collections are lazily evaluated (nothing done until you request results) • Spark collection you only write a ‘recipe’ for what spark has to do (called lineage) • Types of collections: • RDDs, just collections of Java objects. Slowest, but most flexible • DataFrames/DataSet’s, mainly tabular data, can do structured data but is not trivial. Much faster serialization/deserialization, more compact, faster memory management, SparkSql compatible

- 13. Spark’s core: in-memory map reduce • Spark Implements Map-LocalReduce- LocalShuffle-Shuffle-Reduce paradigm • Each step in the ‘recipe’/lineage is a combination of the above • Why in that way? Vast majority of BigData problems can be converted to this paradigm: • All SqlQueries/data extracts • In many cases DataScience (modelling)

- 14. Master node 2 node 1 Map-LocalReduce-Shuffle-Reduce %scala val lirdd = sc.parallelize( loremIpsum.split(" ") ) val wordCount = lirdd .map(w => (w,1)) .reduceByKey(_ + _) .collect %sql select word, count(*) as word_count from words group by word (“lorem”) (“Ipsum”) (“lorem”) (“Ipsum”) (“sicut”) (“sicut”) (“lorem”, 1) (“Ipsum”, 1) (“lorem”, 1) (“Ipsum”, 1) (“sicut”, 1) (“sicut”, 1) (“lorem”, 2) (“Ipsum”, 1) (“Ipsum”, 1) (“sicut”, 2) (“lorem”, 2) (“Ipsum”, 1) (“Ipsum”, 1) (“sicut”, 2) (“lorem”, 2) (“Ipsum”, 2) (“sicut”, 2) LocalReduceMap Shuffle Reduce .map([...]) .reduceByKey([...])

- 15. Master node 2 node 1 Map-LocalReduce-Shuffle-Reduce %scala val lirdd = sc.parallelize( loremIpsum.split(" ") ) val wordCount = lirdd .map(w => (w,1)) .reduceByKey(_ + _) .collect %sql select word, count(*) as word_count from words group by word (“lorem”) (“Ipsum”) (“lorem”) (“Ipsum”) (“sicut”) (“sicut”) (“lorem”, 1) (“Ipsum”, 1) (“lorem”, 1) (“Ipsum”, 1) (“sicut”, 1) (“sicut”, 1) (“lorem”, 2) (“Ipsum”, 1) (“Ipsum”, 1) (“sicut”, 2) (“lorem”, 2) (“Ipsum”, 1) (“Ipsum”, 1) (“sicut”, 2) (“lorem”, 2) (“Ipsum”, 2) (“sicut”, 2) LocalReduceMap Shuffle Reduce .map([...]) .reduceByKey([...]) Slowest part: data is serialized from objects to BLOBs, send over network and deserialized

- 16. Map only operations %scala // val rawBytesRDD is defined // and contains blobs with // serialized Avro objects rawBytesRDD .map(fromAvroToObj) .toDF.write .parquet(outputPath) 0x00[…] Spark knows shuffle is expensive and tries to avoid it if can 0x00[…] 0x00[…] 0x00[…] 0x00[…] 0x00[…] .map([...]) Obj 001-100 Obj 101-200 Obj 201-300 Obj 301-400 Obj 401-500 Obj 501-600 incoming blobs incoming blobs node 1 node 2 File 1 File 2 File 3 File 4 File 5 File 6

- 17. Local Shuffle-Map operations %scala // val rawBytesRDD is defined // and contains blobs with // serialized Avro objects rawBytesRDD .coalesce(2) //** .map(fromAvroToObj) .toDF.write .parquet(outputPath) // ** never set to so low!! // This is just an example // Aim in at least 2x node count. // Moreover, if possible // coalesce() or repartition() // on binary blobs 0x00[…] For fragmented collections (with too many partitions) 0x00[…] 0x00[…] 0x00[…] 0x00[…] 0x00[…] 0x00[…] 0x00[…] 0x00[…] 0x00[…] 0x00[…] 0x00[…] Obj 001-300 Obj 301-600 Map .map([...]) Local Shuffle .coalesce(2) node 1 node 2 File 1 File 2 incoming blobs incoming blobs

- 18. node 2 node 1 Why Python/PySpark is (generally) slower than Scala 0x00[…] 0x00[…] 0x00[…] 0x00[…] 0x00[…] 0x00[…] 0x00[…] 0x00[…] 0x00[…] 0x00[…] 0x00[…] 0x00[…] ObjA ObjB ObjC ObjD ObjE ObjF Map .map([...]) Conditional Shuffle .coalesce(2) • All rows will be serialized between JVM and Python • There are exceptions • Within the same machine, so is very fast • Nonetheless significant overhead Python JVM-> Python Serde do the map Python -> JVM Serde Python JVM-> Python Serde do the map Python -> JVM Serde

- 19. Why Python/PySpark is (generally) slower than Scala • In Spark 2.0 with new version of Catalyst and dynamic code generation Spark will try to convert Python code to native Spark functions • This means in some occasions Python might work equally fast as Scala, as in fact Python code is translated into native Spark calls • Catalyst and code generation will not be able to do it for RDD map operations as well as custom UDFs in DataFrames • PRO TIP: avoid using RDDs as Spark will serialize whole objects. For UDFs it only will serialize few columns and will do it in a very efficient way df2 = df1 .filter(df1.old_column >= 30) .withColumn("new_column1", ((df1.old_column - 2) % 7) + 1) df3 = df2 .withColumn("new_column2", custom_function(df2.new_column1))

- 20. N bn Data Platform design

- 21. What Infare does Leading provider of Airfare Intelligence Solutions to the Aviation Industry Business Intelligence on flight tickets prices, such that airlines know competitors prices and market trends Advanced Analytics and Data Science predicting prices, ticket demand and well as financial data Collect and processes 1.5+ billion distinct airfares daily

- 22. What we were supposed to do Data Collection Scalable DataWarehouse (aimed at 500+bn rows) Customer specific Data Warehouse Data Collection Data Collection Data Collection Customer specific Data Warehouse Customer specific Data Warehouse Customer specific Data Warehouse *Scalable to billions of rows a day

- 23. What we need Data Collection Data Collection Data Collection Data Collection Scalable low cost permanent storage Scalable fast-access temporary storage Processing Framework ALL BigData systems in the world look like that

- 24. What we first did Data Collection Data Collection Data Collection Data Collection Message Broker (Kinesis) Monitoring/stats Real time analytics Preaggregation Micro Batch Micro Batch Mini Batch S3 Offline analytics Data Science Data Warehouse Avro blobs compressed with Snappy uncompressed Parquet micro batches partitioned aggregated Parquet DataWarehouse Monitoring System Data Streamer Temporary Storage Permanent Storage

- 25. Did it work? Data Collection Data Collection Data Collection Data Collection Message Broker (Kinesis) Monitoring/stats Real time analytics Preaggregation Micro Batch Micro Batch Mini Batch S3 Offline analytics Data Science Data Warehouse Avro blobs compressed with Snappy uncompressed Parquet micro batches partitioned aggregated Parquet DataWarehouse Monitoring System Data Streamer S3 has latency (inconsistent for deletes) Temporary Storage Permanent Storage

- 26. • No Spark native driver, so no clustered queries • Parallelize in current implementation has a memory leak Why DynamoDB was a failure: Spark’s Parallelize hell DynamoDB historically DID NOT SUPPORT Spark WHATSOEVER, we effectively ended up writing our own Spark driver from scratch, WEEKS of wasted effort I have to admit since our initial huge disappointment 1 year ago Amazon released a Spark driver, and I do not know how good it is. My opinion is still a closed-source DB with limited support and usage will always be inferior to other technologies

- 27. How are we doing it now Data Collection Data Collection Data Collection Data Collection Message Broker Monitoring/stats Real time analytics Preaggregation Micro Batch Micro Batch Mini Batch S3 Offline analytics Data Science Data Warehouse Avro blobs Compressed with Snappy uncompressed Parquet micro batches partitioned aggregated Parquet/ORC DataWarehouse Data Streamer Temporary Storage Permanent Storage Monitoring System Elastic Search 5.2 has an amazing Spark support New Kafka 0.10.2 has great streaming support

- 28. Kinesis: • The messages are max 25kB (if larger the driver will slice the message into multiple PUT requests) • Avro serialized and Snappy compressed data to max 25kB (~200 data rows per message) • Obtained 10x throughput compared to sending individual rows (each 180 bytes) Getting maximum out of the Kinesis/Kafka Message Broker https://engineering.linkedin.com/kafka/benchmarking-apache-kafka-2-million-writes-second-three-cheap-machines Serialize and send micro batches of data, not individual messages Kafka: • The messages size is 1MB, but that is very large • Jay Kreps @ Linkedin researched optimal message size for Kafka and it is between 10-100kB • From his research at those message sizes allow sending as much as hardware/network allows Data Collection Data Collection Data Collection Data Collection Data Streamer

- 29. • Creates a stream of MiniBatches, which are RDDs created every n seconds • Spark Driver continuously polls message broker, default every 200ms • Each received block (every 200ms) becomes an RDD partition • Consider using repartition/coalesce, as the number of partitions gets very large quickly (for 60 sec, there will be up to 300 partitions, thus 300 files) • NOTE: in Spark 2.1 they added Structured Streaming (streaming on DataFrames, not RDDs, very cool but still limited in functionality) Spark Streaming Data Collection Data Collection Data Collection Data Collection Mini Batch Mini Batch kstream .repartition(3 * nodeCount) .foreachRDD(rawBytes => { [...] }) n-seconds Message Broker

- 30. • Retry the MiniBatch n times (4 default) • If fails all retries, kill the streaming job • Conclusion: must do error handling Default error handling in Spark Streaming Data Collection Data Collection Data Collection Data Collection Micro Batch Mini Batch Message Broker

- 31. In stream error handling, low error rate Data Collection Data Collection Data Collection Data Collection Mini Batch Mini Batch • For low error rate, handle each error individually • Open connection to storage, save the error packet for later processing, close connection • Will clog the stream for high error rate streams kstream .repartition(concurency) .foreachRDD(objects => { val res = objects.flatMap(b => { try { // can use Option instead of Seq [do something, rare error] } catch { case e: => [save the error packet] Seq[Obj]() } }) [do something with res] }) Errors Message Broker

- 32. Error Batch Error Batch Advanced error handling, high error rate Data Collection Data Collection Data Collection Data Collection Mini Batch Mini Batch • For high error rate, can’t store each error individually • Unfortunately Spark does not support Multicast operation (stream splitting) Message Broker

- 33. Advanced error handling, high error rate • Try high error probability action (such as API request) • Use transform to return Either[String, Obj] • Either class is like tuple but guarantees that only one or the other is present (String for error, Obj for success) • cache() to prevent reprocessing • individually process error and success stream • NOTE: cache() should be used cautiously kstream .repartition(concurency) .foreachRDD(objects => { val res = objects.map(b => { Try([frequent error]) .transform( { b => Success(Right(b)) }, { e => Success(Left(e.getMessage)) } ).get }) res.cache() // cautious, can be slow res .filter(ei => ei.isLeft) .map(ei => ei.left.get) .[process errors as stream] res .filter(ei => ei.isRight) .map(ei => ei.right.get) .[process successes as stream] })

- 34. To cache or not to cache • Cache is the most abused function in Spark • It is NOT (!) a simple storing of a pointer to a collection in memory of the process • It is a SERIALISATION of the data to BLOB and storing it in cluster’s shared memory • When reusing data which was cached, it has to deserialize the data from BLOB • By default uses generic JAVA serializer Step1 Step2 cache serialize Step3 Step1 … deserialize … Job1 Job2

- 35. Message Broker with Avro objects Standard Spark Streaming Scenario: • Incoming Avro stream • Step 1) Stats computation, storing stats • Step 2) Storing data from the stream Question: Will caching make it faster? Deserialize Avro Compute Stats Save Stats Message Broker with Avro objects cache Save Data Deserialize Avro Compute Stats Save Stats Save Data Deserialize Avro

- 36. Standard Spark Streaming Scenario: • Incoming Avro stream • Step 1) Stats computation, storing stats • Step 2) Storing data from the stream Question: Will caching make it faster? Nope Faster Message Broker with Avro objects Deserialize Avro Compute Stats Save Stats Message Broker with Avro objects cache Save Data Deserialize Avro Compute Stats Save Stats Save Data Deserialize Avro

- 37. Standard Spark Streaming Scenario: • Incoming Avro stream • Step 1) Stats computation, storing stats • Step 2) Storing data from the stream Question: Will caching make it faster? Nope Message Broker with Avro objects cache Compute Stats Save Stats Save DataDeserialize Avro Serialize Java Deserialize Java Faster Message Broker with Avro objects Deserialize Avro Compute Stats Save Stats Save Data Deserialize Avro

- 38. To cache or not to cache • Cache is the most abused function in Spark • It is NOT (!) a simple storing of a pointer to a collection in memory of the process • It is a SERIALISATION of the data to BLOB and storing it in cluster’s shared memory • When reusing data which was cached, it has to deserialize the data from BLOB • By default uses generic JAVA serializer, which is SLOW • Even super fast serde like Kryo, are much slower as are generic (serializer does not know the type at compile time) • Avro is amazingly fast as it is a Specific serializer (knows the type) • Often you will be quicker to reprocess the data from your source than use the cache, especially for complex objects (pure strings/byte arrays cache fast) • Caching is faster in DataFrames/Tungsten API, but even then it might be slower than reprocessing • Pro TIP: when using cache, make sure it actually helps. And monitor CPU consumption too Step1 Step2 cache serialize Step3 Step1 … deserialize … Job1 Job2

- 39. Tungsten Memory Manager + Row Serializer • Introduced in Spark 1.5, used in DataFrames/DataSets • Stores data in memory as a readable binary blobs, not as Java objects • Since Spark 2.0 the blobs are in columnar format (much better compression) • Does some black magic wizardy with L1/L2/L3 CPU cache • Much faster: 10x-100x faster then RDDs • Rule of thumb: always when possible use DataFrames and you will get Tungsten case class MyRow(val col: Option[String], val exception: Option[String]) kstream .repartition(concurency) .foreachRDD(objects => { val res = objects.map(b => { Try([frequent error]).transform( { b => Success(MyRow(b, None))}, { e => Success(MyRow(None, e.getMessage))} ).get }).toDF() res.cache() res.select("exception") .filter("exception is not null") .[process errors as stream] res.select("col") .filter("exception is null") .[process successes as stream] })

- 40. Data Warehouse and Messaging

- 41. Data Storage: Row Store vs Column Store • What are DataBases: collections of objects • Main difference: • Row store require only one row at the time to serialize • Column Store requires a batch of data to serialize • Serialization: • Row store can serialize online (as rows come into the serializer, can be appended to the binary buffer) • Column Store requires w whole batch to present at the moment of serialization, data can be processed (index creation, sorting, duplicate removal etc.) • Reading: • Row store always reads all data from a file • Column Store allows reading only selected columns

- 42. JSON/CSV Row Store Pros: • Human readable (do not underestimate that) • No dev time required • Compression algorithms work very well on ASCII text (compressed CSV ‘only’ 2x larger than compressed Avro) Cons: • Large(CSV) and very large (JSON) volume • Slow serialization/deserialization Overall: worth considering, especially during dev phase

- 43. Avro Row Store Pros: • BLAZING FAST serialization/deserialization • Apache Avro lib is amazing (buffer based serde) • Binary/compact storage • Compresses about 70% with Snappy (compressed 200 objects with 50 cols result in 20kb) Cons: • Hard to debug, once BLOB is corrupt it is very hard to find out what went wrong

- 44. Avro Scala classes • Avro serialization/deserialization requires Avro contract compatible with Apache Avro library • In principle the Avro classes are logically similar to the Parquet classes (definition/field accessor) class ObjectModelV1Avro ( var dummy_id: Long, var jobgroup: Int, var instance_id: Int, [...] ) extends SpecificRecordBase with SpecificRecord { def get(field: Int): AnyRef = { field match { case pos if pos == 0 => { dummy_id }.asInstanceOf[AnyRef] [...] } def put(field: Int, value: Any): Unit = { field match { case pos if pos == 0 => this.dummy_id = { value }.asInstanceOf[Long] [...] } def getSchema: org.apache.avro.Schema = new Schema.Parser().parse("[...]“) }

- 45. Avro real-time serialization • Apache Avro allows serializing on the fly Row by row • Incoming data stream can be serialized on the fly into a binary buffer // C# code byte[] raw; using (var ms = new MemoryStream()) { using (var dfw = DataFileWriter<T> .OpenWriter(_datumWriter, ms, _codec)) { // can be yielded microBatch.ForEach(dfw.Append); } raw = ms.ToArray(); } // Scala val writer: DataFileWriter[T] = new DataFileWriter[T](datumWriter) writer.create(objs(0).getSchema, outputStream) // can be streamed for (obj <- objs) { writer.append(obj) } writer.close val encodedByteArray: Array[Byte] = outputStream.toByteArray

- 46. Parquet Column Store Pros: • Meant for large data sets • Single column searchable • Compressed (eliminated duplicates etc.) • Contains in-file stats and metadata located in TAIL • Very well supported in Spark: • predicate/filter pushdown • VECTORIZED READER AND TUNGSTEN INTEGRATION (5-10x faster then Java Parquet library) Cons: • Not indexed spark .read .parquet(dwLoc) .filter('col1 === “text“) .select("col2")

- 47. More on predicate/filter pushdown • Processing separate from the storage • Predicate/filter pushdown gives Spark uniform way to push query to the source • Spark remains oblivious how the driver executes the query, it only cares if the Driver can or can’t execute the pushdown request • If driver can’t execute the request Spark will load all data and filter it in Spark Storage Parquet/ORC API Apache Spark DataFrame makes pushdown request to API Parquet/ORC driver executes pushed request on binary data

- 48. More on predicate/filter pushdown • Processing separate from the storage • Predicate/filter pushdown gives Spark uniform way to push query to the source • Spark remains oblivious how the driver executes the query, it only cares if the Driver can or can’t execute the pushdown request • If driver can’t execute the request Spark will load all data and filter it in Spark • Such abstraction allows easy replacement of storage, Spark does not care if the storage is S3 files or a DataBase Apache Spark DataFrame makes pushdown request to API

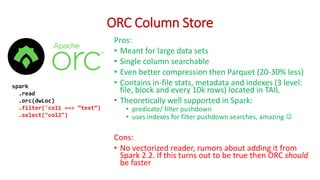

- 49. ORC Column Store Pros: • Meant for large data sets • Single column searchable • Even better compression then Parquet (20-30% less) • Contains in-file stats, metadata and indexes (3 level: file, block and every 10k rows) located in TAIL • Theoretically well supported in Spark: • predicate/ filter pushdown • uses indexes for filter pushdown searches, amazing Cons: • No vectorized reader, rumors about adding it from Spark 2.2. If this turns out to be true then ORC should be faster spark .read .orc(dwLoc) .filter('col1 === “text“) .select("col2")

- 50. DataWarehouse building • Think of it as a collection of read only files • Recommended to use Parquet/ORC files in a folder structure (aim at >100-1000Mb files, use coalesce) • Folders are partitions • Spark supports append for Parquet/ORC • Compression: • Use Snappy (decompression speed ~500MB/sec per core) • Gzip (decompression speed ~60MB/sec per core) • Note: Snappy is not splittable, keep files under 1GB • Ordering: if you can (often can not) order your data, as then columnar deduplication will work better • In our case this saves 50% of space, and thus 50% of reading time df .coalesce(fileCount) .write .option("compression",“snappy") .mode("append") .partitionBy( "year", "month", "day") //.orderBy(“some_column") .parquet(outputLoc) //.orc(outputLoc)

- 51. DataWarehouse query execution dwFolder/year=2016/month=3/day=10/part-[ManyFiles] dwFolder/year=2016/month=3/day=11/part-[ManyFiles] [...] • Partition pruning: Spark will only look for the files in appropriate folders • Row group pruning: uses row group stats to skip data (if filtered data is outside of min/max value of Row Group stats in a Parquet file, data will be skipped, turned off by default, as is expensive and gives benefit for ordered files) • Reads only col1 and col2 from the file, and col1 as filter (never seen by Spark, handled by the API), col2 returned to Spark for processing • If DW is ORC, it will use in-file indexes to speed up the scan (Parquet will still scan through entire column in each scanned file to filter col1) sqlContext .read .parquet(dwLoc) .filter( 'year === 2016 && 'month === 1 && 'day === 1 && 'col1 === "text") .select("col2") sqlContext.setConf("spark.sql.parquet.filterPushdown", “True“)

- 52. DataWarehouse schema evolution the SLOW way • Schema evolution = columns changing over time • Spark allows Schema-on-read paradigm • Allows only adding columns • Removing is be done by predicate pushdown in SELECT • Renaming is handled in Spark • Each file in DW (both ORC and Parquet) is schema aware • Each file can have different columns • By default Spark (for speed purposes) assumes all files with the same schema • In order to enable schema merging, manually set a flag during the read • there is a heavy speed penalty for doing this • How to make it: simply append data with different columns to already existing store sqlContext .read .option( "mergeSchema", "true") .parquet(dwLoc) .filter( 'year === 2016 && 'day === 1 && 'col1 === "text") .select("col2") .withColumnRenamed( "col2", "newAwesomeColumn")

- 53. DataWarehouse schema evolution the RIGHT way • The much faster way it to create multiple warehouses, and merging the by calling UNION • The union requires the columns and types to be the same in ALL dataframes/datawarehouses • The dataframes have to be aligned by adding/renaming the columns using default values etc. • The advantage of doing this is that Spark now is dealing with small(er) number of datawarehouses, where within them it can assume the same types, which can save massive amount of resources • Spark is smart enough to figure out to execute partition pruning and filter/predicate pushdown to all unioned warehouses, therefore this is a recommended way val df1 = sqlContext .read.parquet(dwLoc1) .withColumn([...]) val df2 = sqlContext .read.parquet(dwLoc2) .withColumn([...]) val dfs = Seq(df1, df2) val df_union = dfs .reduce(_ union _) // df_union is your queryable // warehouse, including // partitions etc

- 54. Scala Case classes limitation • Spark can only automatically build the DataFrames from RDDs consisting of case classes • This means for saving Parquet/ORC you have to use case classes • In Scala 2.10 case classes can have max 22 fields (limitation not present in Scala 2.11), thus only 22 columns • Case classes are implicitly extending Product type, if in need of DataWarehouse with more than 22 columns, create a POJO class extending the Product type case class MyRow( val col1: Option[String], val col2: Option[String] ) // val rdd: RDD[MyRow] val df = rdd.toDF() df.coalesce(fileCount) .write .parquet(outputLoc)

- 55. Scala Product Class example class ObjectModelV1 ( var dummy_id: Long, var jobgroup: Int, var instance_id: Int, [...] ) extends java.io.Serializable with Product { def canEqual(that: Any) = that.isInstanceOf[ObjectModelV1] def productArity = 50 def productElement(idx: Int) = idx match { case 0 => dummy_id case 1 => jobgroup case 2 => instance_id [...] } }

- 56. Scala Avro + Parquet contract combined • Avro + Parquet contract can be the same (no inheritance collision) • Save unnecessary object conversion/data copy which in 5bn rage is actually large cost • Spark Streaming can receive objects as Avro and directly convert to Parquet/ORC + class ObjectModelV1 ( var dummy_id: Long, var jobgroup: Int, var instance_id: Int, [...] ) extends SpecificRecordBase with SpecificRecord with Serializable with Product { [...] }

- 57. Summary: Key to high performance Data Collection Data Collection Data Collection Data Collection Message Broker (Kinesis) Monitoring/stats Preaggregation Micro Batch Micro Batch Mini Batch S3 Offline analytics Data Science Data Warehouse + Buffer by 25kb + Submit stats C# Kinesis Uploader + Buffer by 1min, 12threads + Submit stats + Build daily DW + Aim in 100-500Mb files + Submit stats • Incremental aggregation/batching • Always make sure to have as many write threads as cores in cluster • Avoid reduce phase at all costs, avoid shuffle unless have a good reason** • “If it can wait do it later in the pipeline” • Use DataFrames whenever possible • When using cashing, make sure it actually helps

- 58. 2x Senior Data Scientist, working with Apache Spark + R/Python doing Airfare/price forecasting 4x Senior Data Platform Engineer, working with Apache Spark/S3/Cassandra/Scala backend + MicroAPIs http://www.infare.com/jobs/ [email protected] Want to work with cutting edge 100% Apache Spark projects? We are hiring!!! 1x Network Administrator for Big Data systems 2x DevOps Engineer for Big Data, working on as Hadoop, Spark, Kubernetes, OpenStack and more

- 59. Thank You!!! Q/A? And remember, we are hiring!!! http://www.infare.com/jobs/ [email protected]

- 61. How to deploy Spark so it does not backfire

- 62. Hardware Own + Fully customizable + Cheaper, if you already have enough OPS capacity, best case scenario 30-40% cheaper - Dealing with bandwidth limits - Dealing with hardware failures - No on-demand scalability Hosted + Much more failsafe + On-demand scalability + No burden on current OPS - Deal with dependencies with existing systems (e.g. inter-data center communication)

- 63. Data Platform MapReduce + HDFS/S3 + Simple platform + Can be fully hosted -Much slower - Possibly more coding required, less maintainable (Java/Pig/Hive) - Less future oriented Spark + HDFS/S3 + More advanced platform + Can be fully hosted + Possibly less coding thanks to Scala + ML enabled (SparkML, Python), future oriented +MessageBroker enabled Spark + Cassandra + The state of art for BigData systems + Might not need message broker (can easily with- stand 100k’s inserts /sec) + Amazing future possibilities - Can not (yet) by hosted - Possibly still needs HDFS/S3

- 64. Spark on Amazon Deployment only ~132$/month license per 8core/64Gb RAM No spot instances No support incl. SSH access Self customizable Notebook Zeppelin Out of the box platform ~500$/month license per 8core/64Gb RAM (min 5) Allowed spot instances Support with debug SSH access (new in 2017) Limited customization Notebook DataBricks Out of the box platform ~170$/month license per 8core/64Gb RAM Allowed spot instances Platform support SSH access Support in customization Notebook Zeppelin

- 65. Spark on Amazon Deployment only, so requires a lot of IT/Unix related knowledge to go All you need for Spark system + amazing support, a little pricey but ‘just works’ and worth it Cheap and fully customizable platform, needs more low level knowledge

![Master

node 2

node 1

Map-LocalReduce-Shuffle-Reduce

%scala

val lirdd =

sc.parallelize(

loremIpsum.split(" ")

)

val wordCount =

lirdd

.map(w => (w,1))

.reduceByKey(_ + _)

.collect

%sql

select

word,

count(*) as word_count

from words

group by word

(“lorem”)

(“Ipsum”)

(“lorem”)

(“Ipsum”)

(“sicut”)

(“sicut”)

(“lorem”, 1)

(“Ipsum”, 1)

(“lorem”, 1)

(“Ipsum”, 1)

(“sicut”, 1)

(“sicut”, 1)

(“lorem”, 2)

(“Ipsum”, 1)

(“Ipsum”, 1)

(“sicut”, 2)

(“lorem”, 2)

(“Ipsum”, 1)

(“Ipsum”, 1)

(“sicut”, 2)

(“lorem”, 2)

(“Ipsum”, 2)

(“sicut”, 2)

LocalReduceMap Shuffle

Reduce

.map([...]) .reduceByKey([...])](https://image.slidesharecdn.com/sparktrainingatbigdatadenmarkpublished-160319160738/85/Extreme-Apache-Spark-how-in-3-months-we-created-a-pipeline-that-can-process-2-5-billion-rows-a-day-14-320.jpg)

![Master

node 2

node 1

Map-LocalReduce-Shuffle-Reduce

%scala

val lirdd =

sc.parallelize(

loremIpsum.split(" ")

)

val wordCount =

lirdd

.map(w => (w,1))

.reduceByKey(_ + _)

.collect

%sql

select

word,

count(*) as word_count

from words

group by word

(“lorem”)

(“Ipsum”)

(“lorem”)

(“Ipsum”)

(“sicut”)

(“sicut”)

(“lorem”, 1)

(“Ipsum”, 1)

(“lorem”, 1)

(“Ipsum”, 1)

(“sicut”, 1)

(“sicut”, 1)

(“lorem”, 2)

(“Ipsum”, 1)

(“Ipsum”, 1)

(“sicut”, 2)

(“lorem”, 2)

(“Ipsum”, 1)

(“Ipsum”, 1)

(“sicut”, 2)

(“lorem”, 2)

(“Ipsum”, 2)

(“sicut”, 2)

LocalReduceMap Shuffle

Reduce

.map([...]) .reduceByKey([...])

Slowest part:

data is serialized from objects

to BLOBs, send over network

and deserialized](https://image.slidesharecdn.com/sparktrainingatbigdatadenmarkpublished-160319160738/85/Extreme-Apache-Spark-how-in-3-months-we-created-a-pipeline-that-can-process-2-5-billion-rows-a-day-15-320.jpg)

![Map only operations

%scala

// val rawBytesRDD is defined

// and contains blobs with

// serialized Avro objects

rawBytesRDD

.map(fromAvroToObj)

.toDF.write

.parquet(outputPath)

0x00[…]

Spark knows shuffle is expensive and tries to avoid it if can

0x00[…]

0x00[…]

0x00[…]

0x00[…]

0x00[…]

.map([...])

Obj 001-100

Obj 101-200

Obj 201-300

Obj 301-400

Obj 401-500

Obj 501-600

incoming

blobs

incoming

blobs

node 1

node 2

File 1

File 2

File 3

File 4

File 5

File 6](https://image.slidesharecdn.com/sparktrainingatbigdatadenmarkpublished-160319160738/85/Extreme-Apache-Spark-how-in-3-months-we-created-a-pipeline-that-can-process-2-5-billion-rows-a-day-16-320.jpg)

![Local Shuffle-Map operations

%scala

// val rawBytesRDD is defined

// and contains blobs with

// serialized Avro objects

rawBytesRDD

.coalesce(2) //**

.map(fromAvroToObj)

.toDF.write

.parquet(outputPath)

// ** never set to so low!!

// This is just an example

// Aim in at least 2x node count.

// Moreover, if possible

// coalesce() or repartition()

// on binary blobs

0x00[…]

For fragmented collections (with too many partitions)

0x00[…]

0x00[…]

0x00[…]

0x00[…]

0x00[…]

0x00[…]

0x00[…]

0x00[…]

0x00[…]

0x00[…]

0x00[…]

Obj 001-300

Obj 301-600

Map

.map([...])

Local Shuffle

.coalesce(2)

node 1

node 2

File 1

File 2

incoming

blobs

incoming

blobs](https://image.slidesharecdn.com/sparktrainingatbigdatadenmarkpublished-160319160738/85/Extreme-Apache-Spark-how-in-3-months-we-created-a-pipeline-that-can-process-2-5-billion-rows-a-day-17-320.jpg)

![node 2

node 1

Why Python/PySpark is (generally)

slower than Scala

0x00[…]

0x00[…]

0x00[…]

0x00[…]

0x00[…]

0x00[…]

0x00[…]

0x00[…]

0x00[…]

0x00[…]

0x00[…]

0x00[…]

ObjA

ObjB

ObjC

ObjD

ObjE

ObjF

Map

.map([...])

Conditional Shuffle

.coalesce(2)

• All rows will be

serialized between JVM

and Python

• There are exceptions

• Within the same

machine, so is very fast

• Nonetheless significant

overhead

Python

JVM-> Python Serde

do the map

Python -> JVM Serde

Python

JVM-> Python Serde

do the map

Python -> JVM Serde](https://image.slidesharecdn.com/sparktrainingatbigdatadenmarkpublished-160319160738/85/Extreme-Apache-Spark-how-in-3-months-we-created-a-pipeline-that-can-process-2-5-billion-rows-a-day-18-320.jpg)

![• Creates a stream of MiniBatches, which are RDDs

created every n seconds

• Spark Driver continuously polls message broker,

default every 200ms

• Each received block (every 200ms) becomes an RDD

partition

• Consider using repartition/coalesce, as the number

of partitions gets very large quickly (for 60 sec, there

will be up to 300 partitions, thus 300 files)

• NOTE: in Spark 2.1 they added Structured Streaming

(streaming on DataFrames, not RDDs, very cool but

still limited in functionality)

Spark Streaming

Data

Collection

Data

Collection

Data

Collection

Data

Collection

Mini

Batch

Mini

Batch

kstream

.repartition(3 * nodeCount)

.foreachRDD(rawBytes => {

[...]

})

n-seconds

Message

Broker](https://image.slidesharecdn.com/sparktrainingatbigdatadenmarkpublished-160319160738/85/Extreme-Apache-Spark-how-in-3-months-we-created-a-pipeline-that-can-process-2-5-billion-rows-a-day-29-320.jpg)

![In stream error handling, low error rate

Data

Collection

Data

Collection

Data

Collection

Data

Collection

Mini

Batch

Mini

Batch

• For low error rate, handle each error

individually

• Open connection to storage, save

the error packet for later processing,

close connection

• Will clog the stream for high error

rate streams

kstream

.repartition(concurency)

.foreachRDD(objects => {

val res = objects.flatMap(b => {

try { // can use Option instead of Seq

[do something, rare error]

} catch { case e: =>

[save the error packet]

Seq[Obj]()

}

})

[do something with res]

})

Errors

Message

Broker](https://image.slidesharecdn.com/sparktrainingatbigdatadenmarkpublished-160319160738/85/Extreme-Apache-Spark-how-in-3-months-we-created-a-pipeline-that-can-process-2-5-billion-rows-a-day-31-320.jpg)

![Advanced error handling, high error rate

• Try high error probability action (such as

API request)

• Use transform to return Either[String, Obj]

• Either class is like tuple but guarantees

that only one or the other is present

(String for error, Obj for success)

• cache() to prevent reprocessing

• individually process error and success

stream

• NOTE: cache() should be used cautiously

kstream

.repartition(concurency)

.foreachRDD(objects => {

val res = objects.map(b => {

Try([frequent error])

.transform(

{ b => Success(Right(b)) },

{ e => Success(Left(e.getMessage)) }

).get

})

res.cache() // cautious, can be slow

res

.filter(ei => ei.isLeft)

.map(ei => ei.left.get)

.[process errors as stream]

res

.filter(ei => ei.isRight)

.map(ei => ei.right.get)

.[process successes as stream]

})](https://image.slidesharecdn.com/sparktrainingatbigdatadenmarkpublished-160319160738/85/Extreme-Apache-Spark-how-in-3-months-we-created-a-pipeline-that-can-process-2-5-billion-rows-a-day-33-320.jpg)

![Tungsten Memory Manager + Row Serializer

• Introduced in Spark 1.5, used in

DataFrames/DataSets

• Stores data in memory as a readable binary

blobs, not as Java objects

• Since Spark 2.0 the blobs are in columnar

format (much better compression)

• Does some black magic wizardy with

L1/L2/L3 CPU cache

• Much faster: 10x-100x faster then RDDs

• Rule of thumb: always when possible use

DataFrames and you will get Tungsten

case class MyRow(val col: Option[String], val

exception: Option[String])

kstream

.repartition(concurency)

.foreachRDD(objects => {

val res = objects.map(b => {

Try([frequent error]).transform(

{ b => Success(MyRow(b, None))},

{ e => Success(MyRow(None, e.getMessage))}

).get

}).toDF()

res.cache()

res.select("exception")

.filter("exception is not null")

.[process errors as stream]

res.select("col")

.filter("exception is null")

.[process successes as stream]

})](https://image.slidesharecdn.com/sparktrainingatbigdatadenmarkpublished-160319160738/85/Extreme-Apache-Spark-how-in-3-months-we-created-a-pipeline-that-can-process-2-5-billion-rows-a-day-39-320.jpg)

![Avro Scala classes

• Avro

serialization/deserialization

requires Avro contract

compatible with Apache

Avro library

• In principle the Avro classes

are logically similar to the

Parquet classes

(definition/field accessor)

class ObjectModelV1Avro (

var dummy_id: Long,

var jobgroup: Int,

var instance_id: Int,

[...]

) extends SpecificRecordBase with SpecificRecord {

def get(field: Int): AnyRef = { field match {

case pos if pos == 0 => { dummy_id

}.asInstanceOf[AnyRef]

[...]

}

def put(field: Int, value: Any): Unit = {

field match {

case pos if pos == 0 => this.dummy_id = {

value }.asInstanceOf[Long]

[...]

}

def getSchema: org.apache.avro.Schema =

new Schema.Parser().parse("[...]“)

}](https://image.slidesharecdn.com/sparktrainingatbigdatadenmarkpublished-160319160738/85/Extreme-Apache-Spark-how-in-3-months-we-created-a-pipeline-that-can-process-2-5-billion-rows-a-day-44-320.jpg)

![Avro real-time serialization

• Apache Avro allows serializing on the fly Row by row

• Incoming data stream can be serialized on the fly

into a binary buffer

// C# code

byte[] raw;

using (var ms = new MemoryStream())

{

using (var dfw =

DataFileWriter<T>

.OpenWriter(_datumWriter,

ms, _codec))

{

// can be yielded

microBatch.ForEach(dfw.Append);

}

raw = ms.ToArray();

}

// Scala

val writer: DataFileWriter[T] =

new DataFileWriter[T](datumWriter)

writer.create(objs(0).getSchema,

outputStream)

// can be streamed

for (obj <- objs) {

writer.append(obj)

}

writer.close

val encodedByteArray: Array[Byte] =

outputStream.toByteArray](https://image.slidesharecdn.com/sparktrainingatbigdatadenmarkpublished-160319160738/85/Extreme-Apache-Spark-how-in-3-months-we-created-a-pipeline-that-can-process-2-5-billion-rows-a-day-45-320.jpg)

![DataWarehouse query execution

dwFolder/year=2016/month=3/day=10/part-[ManyFiles]

dwFolder/year=2016/month=3/day=11/part-[ManyFiles]

[...]

• Partition pruning: Spark will only look for the files in

appropriate folders

• Row group pruning: uses row group stats to skip data

(if filtered data is outside of min/max value of Row Group

stats in a Parquet file, data will be skipped, turned off by

default, as is expensive and gives benefit for ordered files)

• Reads only col1 and col2 from the file, and col1 as filter

(never seen by Spark, handled by the API), col2 returned to

Spark for processing

• If DW is ORC, it will use in-file indexes to speed up the scan

(Parquet will still scan through entire column in each

scanned file to filter col1)

sqlContext

.read

.parquet(dwLoc)

.filter(

'year === 2016 &&

'month === 1 &&

'day === 1 &&

'col1 === "text")

.select("col2")

sqlContext.setConf("spark.sql.parquet.filterPushdown", “True“)](https://image.slidesharecdn.com/sparktrainingatbigdatadenmarkpublished-160319160738/85/Extreme-Apache-Spark-how-in-3-months-we-created-a-pipeline-that-can-process-2-5-billion-rows-a-day-51-320.jpg)

![DataWarehouse schema evolution

the RIGHT way

• The much faster way it to create multiple warehouses,

and merging the by calling UNION

• The union requires the columns and types to be the

same in ALL dataframes/datawarehouses

• The dataframes have to be aligned by

adding/renaming the columns using default values

etc.

• The advantage of doing this is that Spark now is

dealing with small(er) number of datawarehouses,

where within them it can assume the same types,

which can save massive amount of resources

• Spark is smart enough to figure out to execute

partition pruning and filter/predicate pushdown to

all unioned warehouses, therefore this is a

recommended way

val df1 = sqlContext

.read.parquet(dwLoc1)

.withColumn([...])

val df2 = sqlContext

.read.parquet(dwLoc2)

.withColumn([...])

val dfs = Seq(df1, df2)

val df_union = dfs

.reduce(_ union _)

// df_union is your queryable

// warehouse, including

// partitions etc](https://image.slidesharecdn.com/sparktrainingatbigdatadenmarkpublished-160319160738/85/Extreme-Apache-Spark-how-in-3-months-we-created-a-pipeline-that-can-process-2-5-billion-rows-a-day-53-320.jpg)

![Scala Case classes limitation

• Spark can only automatically build the DataFrames

from RDDs consisting of case classes

• This means for saving Parquet/ORC you have to

use case classes

• In Scala 2.10 case classes can have max 22 fields

(limitation not present in Scala 2.11), thus only 22

columns

• Case classes are implicitly extending Product type,

if in need of DataWarehouse with more than 22

columns, create a POJO class extending the

Product type

case class MyRow(

val col1: Option[String],

val col2: Option[String]

)

// val rdd: RDD[MyRow]

val df = rdd.toDF()

df.coalesce(fileCount)

.write

.parquet(outputLoc)](https://image.slidesharecdn.com/sparktrainingatbigdatadenmarkpublished-160319160738/85/Extreme-Apache-Spark-how-in-3-months-we-created-a-pipeline-that-can-process-2-5-billion-rows-a-day-54-320.jpg)

![Scala Product Class example

class ObjectModelV1 (

var dummy_id: Long,

var jobgroup: Int,

var instance_id: Int,

[...]

) extends java.io.Serializable with Product {

def canEqual(that: Any) = that.isInstanceOf[ObjectModelV1]

def productArity = 50

def productElement(idx: Int) = idx match {

case 0 => dummy_id

case 1 => jobgroup

case 2 => instance_id

[...]

}

}](https://image.slidesharecdn.com/sparktrainingatbigdatadenmarkpublished-160319160738/85/Extreme-Apache-Spark-how-in-3-months-we-created-a-pipeline-that-can-process-2-5-billion-rows-a-day-55-320.jpg)

![Scala Avro + Parquet contract combined

• Avro + Parquet contract can be the same (no

inheritance collision)

• Save unnecessary object conversion/data copy

which in 5bn rage is actually large cost

• Spark Streaming can receive objects as Avro and

directly convert to Parquet/ORC

+

class ObjectModelV1 (

var dummy_id: Long,

var jobgroup: Int,

var instance_id: Int,

[...]

) extends SpecificRecordBase with SpecificRecord

with Serializable with Product {

[...]

}](https://image.slidesharecdn.com/sparktrainingatbigdatadenmarkpublished-160319160738/85/Extreme-Apache-Spark-how-in-3-months-we-created-a-pipeline-that-can-process-2-5-billion-rows-a-day-56-320.jpg)