FCN-Based 6D Robotic Grasping for Arbitrary Placed Objects

These are the slides used at the IEEE International Conference on Robotics and Automation (ICRA) 2017 Workshop on Learning and Control for Autonomous Manipulation Systems on June 2, 2017.

Recommended

Introduction to Chainer

By Preferred Networks. Chainer is a deep learning framework that is flexible, intuitive, and powerful.

This slide deck introduces some unique features of Chainer and its additional packages, such as ChainerMN (distributed learning), ChainerCV (computer vision), and ChainerRL (reinforcement learning).

Introduction to Chainer

By Shunta Saito. Chainer is a deep learning framework that is flexible, intuitive, and powerful. This slide deck introduces some unique features of Chainer and its additional packages, such as ChainerMN (distributed learning), ChainerCV (computer vision), and ChainerRL (reinforcement learning).

Chainer v3

By Seiya Tokui. An introduction to the new features of Chainer v3.0.0rc1 and CuPy v2.0.0rc1, presented at Chainer Meetup #06 in Tokyo.

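IIBMP2019 presentation slides: "Getting Started with Deep Learning Using Open Source"

By Preferred Networks. Slides from the sponsor session at the 2019 Annual Meeting of the Japanese Society for Bioinformatics and the 8th Joint Conference on Informatics in Biology, Medicine and Pharmacology (IIBMP2019), held in September 2019.

・鈴木脩司: "About Preferred Networks"

・大野健太: "The Deep Learning Framework Chainer"

・菅原洋平: "Free Online Learning Materials for Deep Learning Beginners"

・中郷孝祐: "ChainerChemistry: A Deep Learning Library for Chemistry and Biology"

・太田健: "Optuna: An Automatic Hyperparameter Optimization Framework"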

Introduction to Chainer 11 May, 2018

By Preferred Networks. Published on 11 May 2018.

Chainer is a deep learning framework which is flexible, intuitive, and powerful.

This slide introduces some unique features of Chainer and its additional packages such as ChainerMN (distributed learning), ChainerCV (computer vision), ChainerRL (reinforcement learning), Chainer Chemistry (biology and chemistry), and ChainerUI (visualization).

Intro to TensorFlow and PyTorch Workshop at Tubular Labs

By Kendall. These are some introductory slides for the Intro to TensorFlow and PyTorch workshop at Tubular Labs. The GitHub code is available at:

https://github.com/PythonWorkshop/Intro-to-TensorFlow-and-PyTorch

Distributed implementation of a lstm on spark and tensorflow

By Emanuel Di Nardo. An academic project that develops an LSTM distributed on Spark, using TensorFlow for numerical operations.

Source code: https://github.com/EmanuelOverflow/LSTM-TensorSpark

PFN Summer Internship 2019 / Kenshin Abe: Extension of Chainer-Chemistry for ...

By Preferred Networks. A graph neural network implementation using a sparse data structure.

PFN summer internship 2019 by Kenshin Abe.

Comparison of deep learning frameworks from a viewpoint of double backpropaga...

By Kenta Oono. This document compares deep learning frameworks from the perspective of double backpropagation. It discusses the typical technology stacks and design choices of frameworks such as Chainer, PyTorch, and TensorFlow. It also provides a primer on double backpropagation, explaining how a framework differentiates through the gradient of a loss function with respect to its inputs. Code examples of double backpropagation are shown for Chainer, PyTorch, and TensorFlow.
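
The summary above only names double backpropagation. As a rough illustration, and not the API of Chainer, PyTorch, or TensorFlow, the following pure-Python sketch assumes a scalar-only reverse-mode autodiff whose local derivative rules are themselves built from differentiable nodes. The gradient returned by the first backward pass is therefore a graph that can be differentiated a second time. The names `Var`, `lift`, and `grad` are hypothetical.

```python
class Var:
    """Scalar node in a toy reverse-mode autodiff graph (hypothetical).

    Local derivative rules are written with Var arithmetic, so the gradient
    returned by grad() is itself a Var graph and can be differentiated again.
    """

    def __init__(self, value, parents=()):
        self.value = float(value)
        # each entry: (parent node, fn mapping upstream grad Var -> contribution Var)
        self.parents = parents

    def __add__(self, other):
        other = lift(other)
        return Var(self.value + other.value,
                   ((self, lambda g: g), (other, lambda g: g)))

    __radd__ = __add__

    def __mul__(self, other):
        other = lift(other)
        return Var(self.value * other.value,
                   ((self, lambda g: g * other), (other, lambda g: g * self)))

    __rmul__ = __mul__


def lift(x):
    """Wrap plain numbers as constant leaf nodes."""
    return x if isinstance(x, Var) else Var(x)


def grad(y, x):
    """Return dy/dx as a differentiable Var via reverse-mode accumulation."""
    order, seen = [], set()

    def visit(node):  # depth-first post-order gives a topological order
        if id(node) in seen:
            return
        seen.add(id(node))
        for parent, _ in node.parents:
            visit(parent)
        order.append(node)

    visit(y)
    grads = {id(y): Var(1.0)}
    for node in reversed(order):
        g = grads.get(id(node))
        if g is None:
            continue
        for parent, local in node.parents:
            contrib = local(g)  # built from Var ops, so it stays differentiable
            key = id(parent)
            grads[key] = grads[key] + contrib if key in grads else contrib
    return grads.get(id(x), Var(0.0))


x = Var(3.0)
loss = x * x * x          # L = x^3
dl_dx = grad(loss, x)     # first backward pass: 3x^2
d2l_dx2 = grad(dl_dx, x)  # second backward pass through the gradient: 6x
print(dl_dx.value, d2l_dx2.value)  # 27.0 18.0
```

This toy always keeps the backward graph; real frameworks typically make that optional because it costs memory when only first derivatives are needed.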

Overview of Deep Learning Frameworks and Chainer Case Studies

By Kenta Oono. This talk introduces the deep learning framework Chainer. Chainer allows neural networks to be defined as Python programs, which makes their construction flexible. It supports both CPU and GPU computation, and various deep learning libraries, such as ChainerRL for reinforcement learning, have been developed on top of it. The development team maintains and improves Chainer through frequent releases and community events.

Deep Learning with TensorFlow: Understanding Tensors, Computations Graphs, Im...

By Altoros.

1. The elements of neural networks: weights, biases, and gating functions

2. MNIST (handwriting recognition) using a simple NN in TensorFlow (introducing tensors and computation graphs)

3. MNIST using a convolutional NN in TensorFlow

4. Understanding words and sentences as vectors

5. word2vec in TensorFlow

[251] implementing deep learning using cu dnn

By NAVER D2. This document provides an overview of deep learning and its implementation on GPUs using cuDNN. It begins with a brief history of neural networks and an introduction to common deep learning models such as convolutional neural networks. It then discusses implementing deep learning models using cuDNN, including initialization and the forward and backward passes for convolution, pooling, and fully connected layers. It covers optimization issues such as initialization and speeding up training. Finally, it introduces VUNO-Net, the company's deep learning framework, and discusses its performance, applications, and visualization.

CUDA and Caffe for deep learning

By Amgad Muhammad. This document discusses GPU computing and CUDA programming. It begins with an introduction to GPU computing and CUDA. CUDA (Compute Unified Device Architecture) allows programming of NVIDIA GPUs for parallel computing. The document then provides examples of optimizing matrix multiplication and closest-pair problems using CUDA. It also discusses implementing and optimizing convolutional neural networks (CNNs) and autoencoders for GPUs using CUDA. Performance results show speedups for these deep learning algorithms on GPUs compared with CPU-only implementations.

Alex Smola, Professor in the Machine Learning Department, Carnegie Mellon Uni...

By MLconf. Fast, Cheap and Deep: Scaling Machine Learning. Distributed high-throughput machine learning is both a challenge and a key enabling technology. Using a parameter server template, we are able to distribute algorithms efficiently over multiple GPUs and in the cloud. This allows us to design very fast recommender systems, factorization machines, classifiers, and deep networks. This degree of scalability allows us to tackle computationally expensive problems efficiently, yielding excellent results, e.g., in visual question answering.

Introduction to Neural Networks in Tensorflow

By Nicholas McClure. Nick McClure gave an introduction to neural networks using TensorFlow. He explained the basic unit of neural networks as operational gates and how multiple gates can be combined. He discussed loss functions, learning rates, and activation functions. McClure also covered convolutional neural networks, recurrent neural networks, and applications such as image captioning and style transfer. He concluded by discussing resources for staying up to date with advances in machine learning.

TensorFlow Tutorial Part1

By Sungjoon Choi. This document provides an overview and outline of a TensorFlow tutorial. It covers handling images, logistic regression, multi-layer perceptrons, and convolutional neural networks. Key concepts include the goal of deep learning as mapping vectors, one-hot encoding of output classes, the definitions of epochs, batch size, and iterations in training, and loading and preprocessing image data.
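
To make the one-hot and epoch/batch/iteration definitions concrete, here is a small sketch with assumed numbers (1,000 training examples, batch size 32). `one_hot` and `iterations_per_epoch` are illustrative helpers, not TensorFlow functions.

```python
import math


def one_hot(label, num_classes):
    """Encode an integer class label as a one-hot vector."""
    if not 0 <= label < num_classes:
        raise ValueError("label out of range")
    return [1 if i == label else 0 for i in range(num_classes)]


def iterations_per_epoch(num_examples, batch_size):
    """One epoch is one full pass over the data; each iteration consumes one
    mini-batch. The last batch may be smaller, hence the ceiling."""
    return math.ceil(num_examples / batch_size)


print(one_hot(2, 4))                        # [0, 0, 1, 0]
print(iterations_per_epoch(1000, 32))       # 32
print(5 * iterations_per_epoch(1000, 32))   # 160 iterations for 5 epochs
```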

Overview of Chainer and Its Features

By Seiya Tokui. The slides of the talk given at Deep Learning Tokyo on Mar. 20, 2016. http://passmarket.yahoo.co.jp/event/show/detail/01ga1ky1mv5c.html

Introduction to Chainer Chemistry

By Preferred Networks. The document introduces two approaches to chemical prediction: quantum simulation based on density functional theory, and data-driven machine learning. It then discusses using graph-structured neural networks for chemical prediction on datasets such as QM9. It presents the Neural Fingerprint (NFP) and Gated Graph Neural Network (GGNN) models for predicting molecular properties from graph-structured data. Chainer Chemistry is introduced as a library for chemical and biological machine learning that implements these graph convolutional networks.

Deep learning for molecules, introduction to chainer chemistry

By Kenta Oono.

1) The document introduces machine learning and deep learning techniques for predicting chemical properties, contrasting rule-based approaches with learning-based approaches that use neural message passing algorithms.

2) It discusses several graph neural network models like NFP, GGNN, WeaveNet and SchNet that can be applied to molecular graphs to predict characteristics. These models update atom representations through message passing and graph convolution operations.

3) Chainer Chemistry is introduced as a deep learning framework that can be used with these graph neural network models for chemical property prediction tasks. Examples of tasks include drug discovery and molecular generation.
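
The message-passing update mentioned in point 2 can be sketched in plain Python. This is a deliberately simplified, hypothetical layer (sum of neighbour features, two scalar weights, ReLU), not NFP, GGNN, WeaveNet, or SchNet as implemented in Chainer Chemistry; real models use learned weight matrices and, in some cases, edge features or gating.

```python
def message_passing_step(features, adjacency, w_self, w_msg):
    """One simplified graph-convolution step (hypothetical).

    features[v]  : feature vector of atom v
    adjacency[v] : indices of atoms bonded to v
    Each atom sums its neighbours' vectors (the "message"), combines the
    message with its own vector using two scalar weights, then applies ReLU.
    """
    updated = []
    for v, h_v in enumerate(features):
        message = [0.0] * len(h_v)
        for u in adjacency[v]:
            message = [m + x for m, x in zip(message, features[u])]
        updated.append([max(0.0, w_self * a + w_msg * b) for a, b in zip(h_v, message)])
    return updated


def readout(features):
    """Sum atom vectors into one molecule-level vector (independent of atom order)."""
    return [sum(column) for column in zip(*features)]


# Heavy atoms of ethanol (C-C-O) with one-hot element features [is_C, is_O].
atoms = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
bonds = [[1], [0, 2], [1]]
h = message_passing_step(atoms, bonds, w_self=1.0, w_msg=0.5)
print(h)           # [[1.5, 0.0], [1.5, 0.5], [0.5, 1.0]]
print(readout(h))  # [3.5, 1.5]
```

Stacking several such steps lets information travel further along the bonds before the readout produces a molecule-level prediction.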

GTC Japan 2016 Chainer feature introduction

By Kenta Oono. This document introduces Chainer's new trainer and dataset abstractions, which provide a standardized way to implement training loops and access datasets. The key aspects are:

- Trainer handles the overall training loop and allows extensions to customize checkpoints, logging, evaluation etc.

- Updater handles fetching mini-batches and model optimization within each loop.

- Iterators handle accessing datasets and returning mini-batches.

- Extensions can be added to the trainer for tasks like evaluation, visualization, and saving snapshots.

This abstraction makes implementing training easier and more customizable while still allowing manual control when needed. Common iterators, updaters, and extensions are provided to cover most use cases.
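
The division of labour described above (trainer, updater, iterator, extensions) can be sketched without any framework. The classes below are hypothetical stand-ins, not Chainer's `chainer.training` API. They fit a single weight `w` in `y = w * x` by mini-batch gradient descent, and an extension records the mean loss after each epoch, roughly the role a logging extension plays.

```python
import random


class SerialBatches:
    """Iterator role: yields shuffled mini-batches, one full pass per epoch."""

    def __init__(self, dataset, batch_size, seed=0):
        self.dataset, self.batch_size = dataset, batch_size
        self.rng = random.Random(seed)

    def epoch(self):
        order = list(range(len(self.dataset)))
        self.rng.shuffle(order)
        for start in range(0, len(order), self.batch_size):
            yield [self.dataset[i] for i in order[start:start + self.batch_size]]


class SGDUpdater:
    """Updater role: one optimisation step on a batch for the model y = w * x."""

    def __init__(self, lr):
        self.lr, self.w = lr, 0.0

    def update(self, batch):
        n = len(batch)
        loss = sum((self.w * x - y) ** 2 for x, y in batch) / n
        grad = sum(2 * (self.w * x - y) * x for x, y in batch) / n
        self.w -= self.lr * grad
        return loss  # loss measured before the step


class Trainer:
    """Trainer role: owns the epoch loop and calls extensions after each epoch."""

    def __init__(self, iterator, updater, epochs):
        self.iterator, self.updater, self.epochs = iterator, updater, epochs
        self.extensions = []

    def extend(self, extension):
        self.extensions.append(extension)

    def run(self):
        for epoch in range(1, self.epochs + 1):
            losses = [self.updater.update(batch) for batch in self.iterator.epoch()]
            for extension in self.extensions:
                extension(epoch, sum(losses) / len(losses), self)


data = [(i / 10, 2 * i / 10) for i in range(1, 11)]  # points on y = 2x
history = []
trainer = Trainer(SerialBatches(data, batch_size=4), SGDUpdater(lr=0.5), epochs=50)
trainer.extend(lambda epoch, loss, t: history.append((epoch, loss)))  # logging extension
trainer.run()
print(round(trainer.updater.w, 4))
```

Keeping the loop in the trainer means new behaviour (evaluation, snapshots) is added by registering another callable rather than editing the loop.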

TensorFlow Dev Summit 2018 Extended: TensorFlow Eager Execution

By Taegyun Jeon. TensorFlow's eager execution runs operations immediately without building graphs. This makes debugging easier and improves the development workflow. Eager execution can be enabled with tf.enable_eager_execution(). Common operations such as variables, gradients, and control flow work the same in eager and graph modes. Code written with eager execution in mind is compatible with graph-based execution for deployment. Eager execution speeds up iteration and is useful alongside TensorFlow's high-level APIs.

Electricity price forecasting with Recurrent Neural Networks

By Taegyun Jeon. This document discusses using recurrent neural networks (RNNs) for electricity price forecasting with TensorFlow. It begins with an introduction to the speaker, Taegyun Jeon from GIST. The document then provides an overview of RNNs and their implementation in TensorFlow. It describes two case studies: using an RNN to predict a sine function and using one to forecast electricity prices. The document concludes with information on running and evaluating the RNN graph and a question-and-answer section.

Keras on tensorflow in R & Python

By Longhow Lam. Keras with a TensorFlow backend can be used for neural networks and deep learning in both R and Python. The document discusses using Keras to build neural networks from scratch on MNIST data, using pre-trained models such as VGG16 for computer vision tasks, and fine-tuning pre-trained models on limited data. Examples are provided for image classification, feature extraction, and calculating image similarities.

Chainer v2 and future dev plan

By Seiya Tokui. Slides for Chainer Meetup #05, held at Microsoft Japan, introducing Chainer v2.0.0 and the future development plan of Chainer.

Deep Learning with PyTorch

By Mayur Bhangale. The document discusses deep learning concepts without requiring advanced degrees. It introduces PyTorch, a Python package for scientific computing on GPUs and deep learning research. It covers basics such as variables, tensors, and autograd in Python. Predictive models discussed include linear regression, logistic regression, and convolutional neural networks. Linear regression fits a line to data to predict unobserved values. Logistic regression predicts binary outcomes by fitting data to a logit function. A convolutional neural network example is shown with input, output, and hidden layers for classification problems.

Cloud Computing

By butest. Nexus is a system that allows multiple distributed computing frameworks, such as Hadoop and MPI, to share cluster resources efficiently. It uses a fine-grained sharing approach, allocating resources to frameworks at the granularity of individual tasks rather than entire machines, which maximizes cluster utilization. Nexus also aims to allocate resources fairly between frameworks using a dominant resource fairness policy. Experiments show that Nexus imposes low overhead while enabling dynamic sharing of resources between multiple Hadoop deployments and efficient elastic web serving.
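
Dominant resource fairness can be illustrated with a short progressive-filling sketch. This is an assumption-laden toy, not Nexus code: each framework is assumed to launch identical tasks with a fixed demand vector, the framework with the smallest dominant share (its largest fraction of any single resource) is always served next, and, as a variant, filling continues with the other frameworks once one framework's next task no longer fits. The capacities and demands in the example are illustrative.

```python
def drf_allocate(capacity, demands):
    """Progressive filling under Dominant Resource Fairness (toy sketch).

    capacity : total amount of each resource, e.g. (CPUs, GB of RAM)
    demands  : {framework: per-task demand vector}
    Returns the number of tasks launched for each framework.
    """
    if any(not any(d) for d in demands.values()):
        raise ValueError("every task must demand some resource")
    used = [0.0] * len(capacity)
    tasks = {name: 0 for name in demands}

    def dominant_share(name):
        return max(tasks[name] * d / c for d, c in zip(demands[name], capacity))

    active = set(demands)
    while active:
        name = min(sorted(active), key=dominant_share)  # sorted() breaks ties by name
        need = demands[name]
        if all(u + d <= c for u, d, c in zip(used, need, capacity)):
            used = [u + d for u, d in zip(used, need)]
            tasks[name] += 1
        else:
            active.discard(name)  # this framework's next task no longer fits
    return tasks


# 9 CPUs and 18 GB; A's tasks are memory-heavy, B's are CPU-heavy.
print(drf_allocate((9, 18), {"A": (1, 4), "B": (3, 1)}))  # {'A': 3, 'B': 2}
```

In the example both frameworks end with a dominant share of 2/3 (A holds 12 of 18 GB, B holds 6 of 9 CPUs), which is the equalisation DRF aims for.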

Deep Learning in Python with Tensorflow for Finance

Speaker: Ben Ball

Abstract: Python is becoming the de facto standard for many machine learning applications. We have been using Python with deep learning and other ML techniques, with a focus on prediction and exploitation in transactional markets. I am presenting one of our implementations (a dueling double DQN, a class of reinforcement learning algorithm) in Python using TensorFlow, along with background on this class of deep learning algorithm and the application to financial markets we have employed. Attendees will learn how to implement a DQN using TensorFlow and how to design a deep learning system for solving a wide range of problems. The code will be available on GitHub for attendees.

Bio: Ben believes in making a career out of what you love. He is inspired by the joining of excellent technology and research and likes building software that is easy to use but does amazing things. His work has spanned 15 years, with a dual focus on AI software engineering and algorithmic trading. He is currently the CTO of http://prediction-machines.com

Video of the presentation:

https://engineers.sg/video/deep-learning-with-python-in-finance-singapore-python-user-group--1875

Slide tesi

By Nicolò Savioli. This thesis implements the WHAM algorithm for estimating free energy profiles from molecular dynamics simulations, using NVIDIA CUDA to run it on GPUs. WHAM iteratively calculates unbiased probability distributions and free energy values from multiple biased simulations. The author parallelized WHAM using CUDA and tested its performance on different GPU architectures against CPUs. GPU-WHAM achieved convergence comparable to CPU-WHAM but ran up to twice as fast, with the speedup increasing with GPU computational capability. The GPU/CPU speed ratio remained constant across problem sizes, indicating good scaling.
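
For readers unfamiliar with WHAM, the two self-consistent equations it iterates fit in a few lines of serial Python. This sketch assumes one reaction coordinate and pre-binned histograms, uses a hypothetical function name `wham`, and follows the standard textbook form; it is not the thesis's CUDA implementation. The per-bin sums inside each iteration are the part a GPU version would parallelize.

```python
import math


def wham(histograms, bias, beta=1.0, tol=1e-10, max_iter=10_000):
    """Weighted Histogram Analysis Method on a 1-D binned coordinate (sketch).

    histograms[i][x] : counts n_i(x) from biased window i in bin x
    bias[i][x]       : bias energy U_i(x) applied in window i
    Iterates the self-consistent equations
        P(x)             = sum_i n_i(x) / sum_i N_i * exp(beta * (f_i - U_i(x)))
        exp(-beta * f_i) = sum_x P(x) * exp(-beta * U_i(x))
    and returns the normalised unbiased P(x) and free energy -ln P(x) / beta.
    """
    n_win, n_bins = len(histograms), len(histograms[0])
    totals = [sum(h) for h in histograms]  # N_i, samples per window
    f = [0.0] * n_win                       # window free-energy shifts f_i
    for _ in range(max_iter):
        p = []
        for x in range(n_bins):
            num = sum(histograms[i][x] for i in range(n_win))
            den = sum(totals[i] * math.exp(beta * (f[i] - bias[i][x])) for i in range(n_win))
            p.append(num / den)
        new_f = [-math.log(sum(p[x] * math.exp(-beta * bias[i][x]) for x in range(n_bins))) / beta
                 for i in range(n_win)]
        shift = new_f[0]
        new_f = [v - shift for v in new_f]  # f is defined only up to a constant
        done = max(abs(a - b) for a, b in zip(new_f, f)) < tol
        f = new_f
        if done:
            break
    total = sum(p)
    prob = [v / total for v in p]
    free_energy = [-math.log(v) / beta if v > 0 else math.inf for v in prob]
    return prob, free_energy


# One window with a known bias: the unbiased P(x) is proportional to n(x) * exp(beta * U(x)).
prob, fe = wham([[1, 1, 1]], [[0.0, math.log(2), math.log(4)]])
print([round(v, 4) for v in prob])  # [0.1429, 0.2857, 0.5714]
```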

Kk3517971799

By IJERA Editor. The International Journal of Engineering Research and Applications (IJERA) is an open-access, peer-reviewed international online journal that publishes research and review articles in the fields of Computer Science, Neural Networks, Electrical Engineering, Software Engineering, Information Technology, Mechanical Engineering, Chemical Engineering, Plastic Engineering, Food Technology, Textile Engineering, Nano Technology & science, Power Electronics, Electronics & Communication Engineering, Computational mathematics, Image processing, Civil Engineering, Structural Engineering, Environmental Engineering, VLSI Testing & Low Power VLSI Design, etc.

PointNet

By PetteriTeikariPhD. A journal club on PointNet, held with Vid Stojevic:

https://arxiv.org/abs/1612.00593

https://github.com/charlesq34/pointnet

http://stanford.edu/~rqi/pointnet/

Deep learning for indoor point cloud processing. PointNet provides a unified architecture that operates directly on unordered point clouds, without voxelisation, for applications ranging from object classification and part segmentation to scene semantic parsing.

Alternative download link:

https://www.dropbox.com/s/ziyhgi627vg9lyi/3D_v2017_initReport.pdf?dl=0
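
PointNet's key idea for unordered point clouds, applying one shared per-point function and then a symmetric aggregation (max pooling) so the output does not depend on point order, can be shown with a toy example. The weights are fixed, made-up values rather than a trained network, and the single layer stands in for PointNet's deeper shared MLPs.

```python
def shared_point_mlp(point, weights, bias):
    """Apply the same single-layer perceptron with ReLU to one (x, y, z) point."""
    return [max(0.0, sum(w * c for w, c in zip(row, point)) + b)
            for row, b in zip(weights, bias)]


def global_feature(points, weights, bias):
    """Per-point features followed by max pooling over points. Max is a
    symmetric function, so reordering the points cannot change the result."""
    per_point = [shared_point_mlp(p, weights, bias) for p in points]
    return [max(column) for column in zip(*per_point)]


weights = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, -1.0]]  # illustrative only
bias = [0.0, 0.0, 0.0]
cloud = [(1.0, 2.0, 3.0), (-1.0, 5.0, 0.0), (0.0, 0.0, -2.0)]
print(global_feature(cloud, weights, bias))        # [1.0, 5.0, 2.0]
print(global_feature(cloud[::-1], weights, bias))  # same vector for the reversed cloud
```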

Similar to FCN-Based 6D Robotic Grasping for Arbitrary Placed Objects

Kk3517971799

Kk3517971799IJERA Editor International Journal of Engineering Research and Applications (IJERA) is an open access online peer reviewed international journal that publishes research and review articles in the fields of Computer Science, Neural Networks, Electrical Engineering, Software Engineering, Information Technology, Mechanical Engineering, Chemical Engineering, Plastic Engineering, Food Technology, Textile Engineering, Nano Technology & science, Power Electronics, Electronics & Communication Engineering, Computational mathematics, Image processing, Civil Engineering, Structural Engineering, Environmental Engineering, VLSI Testing & Low Power VLSI Design etc.

PointNet

PetteriTeikariPhD: Journal club done with Vid Stojevic for PointNet:

https://arxiv.org/abs/1612.00593

https://github.com/charlesq34/pointnet

http://stanford.edu/~rqi/pointnet/

Deep learning for indoor point cloud processing. PointNet provides a unified architecture that operates directly on unordered point clouds, without voxelisation, for applications ranging from object classification and part segmentation to scene semantic parsing.

Alternative download link:

https://www.dropbox.com/s/ziyhgi627vg9lyi/3D_v2017_initReport.pdf?dl=0
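PointNet can work directly on unordered points because it applies one shared function to every point and then aggregates with a symmetric operation (max pooling). The toy sketch below illustrates only that idea. It assumes a single hand-picked linear layer with ReLU in place of the learned MLPs, and it omits PointNet's alignment networks. The weights are invented for illustration.

```python
from typing import List, Sequence

Point = Sequence[float]

# Hypothetical fixed weights standing in for a learned shared MLP layer (3 -> 4).
WEIGHTS = [[1.0, -0.5, 0.2], [0.3, 0.8, -1.0], [-0.7, 0.1, 0.9], [0.5, 0.5, 0.5]]
BIAS = [0.0, 0.1, -0.1, 0.0]


def shared_layer(p: Point) -> List[float]:
    """Apply the same linear map + ReLU to one (x, y, z) point."""
    return [max(0.0, sum(w * x for w, x in zip(row, p)) + b)
            for row, b in zip(WEIGHTS, BIAS)]


def global_feature(points: Sequence[Point]) -> List[float]:
    """Channel-wise max over per-point features: independent of point order."""
    feats = [shared_layer(p) for p in points]
    return [max(channel) for channel in zip(*feats)]


cloud = [(0.1, 0.2, 0.3), (1.0, -1.0, 0.5), (-0.4, 0.9, 0.0)]
print(global_feature(cloud) == global_feature(list(reversed(cloud))))  # True
```

Because max is symmetric, any permutation of `cloud` yields the same global feature. A sum or mean would also be symmetric. Max pooling is the aggregation the PointNet paper settles on.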

How to Make Hand Detector on Native Activity with OpenCV

Industrial Technology Research Institute (ITRI)(工業技術研究院, 工研院): This document provides an overview of, and instructions for, making a hand detector on Android using OpenCV. It covers calculating a skin color histogram, detecting skin areas in an image, finding the largest skin area, and matching histograms using Histograms of Oriented Gradients (HoG). The steps are:
1. Convert to HSV.
2. Generate a histogram in H-S space.
3. Apply the histogram to detect skin pixels.
4. Filter the result and find contours.
5. Label connected areas and select the largest.

HoG extracts gradient features from blocks for matching.
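The skin-detection steps (convert to HSV, build an H-S histogram, apply it to new pixels) amount to histogram back-projection. The plain-Python sketch below assumes 8 x 8 H-S bins, made-up skin sample colors, and Python's `colorsys` for the HSV conversion. In OpenCV the same job is done by `cv2.calcHist` and `cv2.calcBackProject`. This is not the code from the slides.

```python
import colorsys
from typing import Dict, List, Tuple

RGB = Tuple[int, int, int]
Bin = Tuple[int, int]
BINS = 8  # hypothetical: 8 x 8 bins over hue and saturation


def hs_bin(pixel: RGB) -> Bin:
    """Map an 8-bit RGB pixel to its (hue, saturation) histogram bin."""
    h, s, _v = colorsys.rgb_to_hsv(*(c / 255.0 for c in pixel))
    return min(int(h * BINS), BINS - 1), min(int(s * BINS), BINS - 1)


def build_histogram(samples: List[RGB]) -> Dict[Bin, float]:
    """H-S histogram of known skin pixels, normalized so the peak bin is 1.0."""
    counts: Dict[Bin, int] = {}
    for px in samples:
        b = hs_bin(px)
        counts[b] = counts.get(b, 0) + 1
    peak = max(counts.values())
    return {b: n / peak for b, n in counts.items()}


def back_project(image: List[List[RGB]], hist: Dict[Bin, float]) -> List[List[float]]:
    """Replace each pixel by the histogram value of its H-S bin (skin likelihood)."""
    return [[hist.get(hs_bin(px), 0.0) for px in row] for row in image]


skin_samples = [(224, 172, 138), (210, 160, 120), (230, 180, 150)]  # made-up samples
hist = build_histogram(skin_samples)
image = [[(220, 170, 135), (20, 40, 200)]]  # a skin-like pixel and a blue pixel
print(back_project(image, hist))  # [[1.0, 0.0]]
```

Ignoring V (brightness) is what makes the H-S histogram somewhat tolerant of lighting changes. Thresholding the back-projected map gives the skin mask that the later contour and labeling steps work on.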

K-Means Clustering in Moving Objects Extraction with Selective Background

IJCSIS Research Publications: We present a technique for moving object extraction. Of the several approaches to moving object extraction, clustering has a stronger theoretical foundation and is used in many applications; moving object extraction also demands high performance. We compare the K-Means and Self-Organizing Map methods for moving object extraction, measuring performance with MSE and PSNR. Experimental results show that K-Means gives a lower MSE and a higher PSNR than the Self-Organizing Map algorithm. The results show that K-Means is a promising method for clustering pixels in moving object extraction.
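The abstract clusters pixels with K-Means and scores the result with MSE and PSNR (PSNR = 10·log10(MAX²/MSE), with MAX = 255 for 8-bit images). The sketch below applies plain K-Means to a short list of grayscale intensities and measures how well the cluster centroids reconstruct them. Several parts are assumptions, not the authors' setup: the 1-D intensity features, the evenly spaced initialization, and the example pixels. The Self-Organizing Map comparison is not reproduced.

```python
import math
from typing import List, Tuple


def kmeans_1d(values: List[float], k: int, iters: int = 20) -> Tuple[List[float], List[int]]:
    """Plain k-means on scalar intensities; returns (centroids, labels)."""
    lo, hi = min(values), max(values)
    # Deterministic init: centroids spread evenly over the intensity range.
    centroids = [lo + (hi - lo) * i / max(k - 1, 1) for i in range(k)]
    labels = [0] * len(values)
    for _ in range(iters):
        # Assignment step: each value joins its nearest centroid.
        labels = [min(range(k), key=lambda c: abs(v - centroids[c])) for v in values]
        # Update step: each centroid moves to the mean of its members.
        for c in range(k):
            members = [v for v, lab in zip(values, labels) if lab == c]
            if members:  # keep the old centroid if a cluster empties
                centroids[c] = sum(members) / len(members)
    return centroids, labels


def mse(a: List[float], b: List[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def psnr(a: List[float], b: List[float], max_val: float = 255.0) -> float:
    err = mse(a, b)
    return math.inf if err == 0 else 10 * math.log10(max_val ** 2 / err)


pixels = [10.0, 12.0, 11.0, 200.0, 205.0, 198.0]
centroids, labels = kmeans_1d(pixels, k=2)
quantized = [centroids[lab] for lab in labels]
print(centroids, labels)  # [11.0, 201.0] [0, 0, 0, 1, 1, 1]
print(round(psnr(pixels, quantized), 2))
```

A lower MSE (equivalently, a higher PSNR) means the clustered image stays closer to the original. That is the sense in which the abstract ranks K-Means above the SOM.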

SkyStitch: a Cooperative Multi-UAV-based Real-time Video Surveillance System ...

Kitsukawa Yuki: Slides from a paper presentation given in the course "Special Lecture on Pattern and Video Information Processing".

Xiangyun Meng, Wei Wang, and Ben Leong. 2015. SkyStitch: A Cooperative Multi-UAV-based Real-time Video Surveillance System with Stitching. In Proceedings of the 23rd ACM international conference on Multimedia (MM '15). ACM, New York, NY, USA, 261-270. DOI=http://dx.doi.org/10.1145/2733373.2806225

Intelligent Auto Horn System Using Artificial Intelligence

IRJET Journal: The document proposes an intelligent auto horn system using artificial intelligence to reduce unnecessary noise pollution from vehicle horns. The system uses sensors and cameras to collect environmental data, and an on-board computer processes the data with AI techniques such as SIFT, SURF and other computer vision algorithms. Based on factors such as the distance between objects, road width, and object size, the AI controls the horn sound horizontally and vertically. The aim is to sound the horn only as loud as the situation requires, reducing noise pollution while maintaining safety. The system is described as not compromising safety and as automatically adjusting the horn sound using fixed horn mechanisms based on AI analysis of the environment.

Flow Trajectory Approach for Human Action Recognition

IRJET Journal: This document proposes a method for human action recognition in videos using scale-invariant feature transform (SIFT) and flow trajectory analysis. The key steps are:

1. Extract SIFT features from each video frame to detect keypoints.

2. Track the keypoints across frames and calculate the magnitude and direction of motion for each keypoint.

3. Analyze the tracked keypoints and their motion parameters to recognize the human action, such as walking, running, etc. occurring in the video.
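Steps 2 and 3 above reduce each tracked keypoint to a per-frame motion magnitude and direction. The sketch below computes those parameters from already-tracked (x, y) positions. SIFT detection and tracking are assumed done elsewhere. The magnitude-weighted direction histogram is a hypothetical summary feature for a classifier, not necessarily what the paper uses.

```python
import math
from typing import List, Tuple

Track = List[Tuple[float, float]]  # (x, y) of one keypoint in consecutive frames


def motion_params(track: Track) -> List[Tuple[float, float]]:
    """Per-step (magnitude, direction in degrees) for one tracked keypoint."""
    steps = []
    for (x0, y0), (x1, y1) in zip(track, track[1:]):
        dx, dy = x1 - x0, y1 - y0
        steps.append((math.hypot(dx, dy), math.degrees(math.atan2(dy, dx))))
    return steps


def direction_histogram(tracks: List[Track], bins: int = 8) -> List[float]:
    """Hypothetical descriptor: motion directions binned and weighted by magnitude."""
    hist = [0.0] * bins
    for track in tracks:
        for mag, ang in motion_params(track):
            # atan2 gives (-180, 180]; map to [0, 360) before binning.
            hist[int((ang % 360) / (360 / bins)) % bins] += mag
    return hist


tracks = [[(0.0, 0.0), (3.0, 4.0), (6.0, 8.0)]]
print(motion_params(tracks[0]))
print(direction_histogram(tracks))  # [0.0, 10.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
```

A descriptor like this, collected over a clip, could be fed to any classifier that labels the action (walking, running, and so on).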

Foreground algorithms for detection and extraction of an object in multimedia...

IJECEIAES: Background subtraction of a foreground object in multimedia is one of the major preprocessing steps in many vision-based applications. The main idea for detecting moving objects in video is to take the difference between the current frame and a reference frame, called the “background image”; this is known as the frame differencing method. Background subtraction is widely used for real-time motion gesture recognition in gesture-enabled items such as vehicles or automated gadgets. It is also used in content-based video coding, traffic monitoring, object tracking, digital forensics and human-computer interaction. Nowadays, with advances in technology, most conferences, meetings and interviews are held over video calls, and a conference-room-like setting is not always available. To address this, an efficient algorithm for foreground extraction in video calls is needed. The aim of this paper is not just to build a background subtraction application for mobile platforms. It is to optimize the existing OpenCV algorithm to run on the limited resources of a mobile platform without reducing performance. In this paper, various foreground detection, extraction and feature detection algorithms are compared on a mobile platform using OpenCV. A set of experiments was conducted to appraise the efficiency of each algorithm relative to the others. Their overall performance was compared on the basis of execution time, resolution and resources required.
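The frame differencing method described above compares each pixel of the current frame with a reference "background image" and keeps the pixels that changed enough. A minimal pure-Python sketch on small grayscale frames follows. The threshold of 30 is a hypothetical value. OpenCV users would typically reach for `cv2.absdiff` followed by `cv2.threshold`. This is not the paper's optimized mobile implementation.

```python
from typing import List

Frame = List[List[int]]  # 8-bit grayscale, rows of pixel intensities


def frame_difference(current: Frame, background: Frame, threshold: int = 30) -> Frame:
    """Foreground mask: 1 where |current - background| exceeds threshold, else 0."""
    return [[1 if abs(c - b) > threshold else 0 for c, b in zip(crow, brow)]
            for crow, brow in zip(current, background)]


background = [[50, 50, 50], [50, 50, 50]]
current = [[52, 180, 49], [50, 175, 51]]
print(frame_difference(current, background))  # [[0, 1, 0], [0, 1, 0]]
```

The threshold trades sensitivity against noise: small sensor fluctuations (here 1 or 2 levels) are ignored, while the bright moving object is kept.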

A Three-Dimensional Representation method for Noisy Point Clouds based on Gro...

Sergio Orts-Escolano: Slides used for the thesis defense of the PhD candidate Sergio Orts-Escolano.

The research described in this thesis was motivated by the need for a robust model capable of representing 3D data obtained with 3D sensors, which are inherently noisy. In addition, time constraints have to be considered, as these sensors can provide a 3D data stream in real time. This thesis proposes the use of Self-Organizing Maps (SOMs) as a 3D representation model. In particular, it proposes the Growing Neural Gas (GNG) network, which has been used successfully for clustering, pattern recognition and topology representation of multi-dimensional data. Until now, Self-Organizing Maps have been computed primarily offline. Their application to 3D data has mainly focused on noise-free models, without considering time constraints. A hardware implementation is proposed that leverages the computing power of modern GPUs through the paradigm known as General-Purpose Computing on Graphics Processing Units (GPGPU). The proposed methods were applied to different problems and applications in computer vision, such as the recognition and localization of objects, visual surveillance and 3D reconstruction.

report

Shikhar Gupta: This paper presents a vision-based method for autonomous landing of a quadcopter UAV. An onboard camera and NVIDIA Jetson TK1 processor are used to detect the landing platform using SIFT feature extraction and to match keypoints between the detected image and a training image. The distance and position of the landing platform relative to the UAV are then estimated. The ROS framework uses this information to generate control signals for the UAV's roll and pitch and to land it autonomously and precisely on the target platform. Experiments show the method can achieve precise landing within 10 cm under steady wind conditions.

Strategy for Foreground Movement Identification Adaptive to Background Variat...

IJECEIAES: Video processing has gained significance because of its applications in various areas of research, including the monitoring of movements in public places for surveillance. Video sequences from standard datasets such as I2R, CAVIAR and UCSD are often used for video processing applications and research. Actors and their movements in video sequences must be identified against both static and dynamic backgrounds. The significance of research in video processing lies in identifying the foreground movement of actors and objects in video sequences. Foreground identification becomes complex when detecting movements in video sequences with a dynamic background. For this case, this article proposes two algorithms: Frame Difference between Neighboring Frames using Hue, Saturation and Value (FDNF-HSV) and Frame Difference between Neighboring Frames using Greyscale (FDNF-G). The proposed algorithms are evaluated against state-of-the-art techniques in terms of F-measure, recall and precision. The results show that the proposed algorithms achieve enhanced performance.
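The evaluation above uses precision, recall and F-measure. For foreground detection these are commonly computed pixel by pixel against a ground-truth mask, as in this sketch. The pixel-wise protocol and the example masks are assumptions; the article's exact evaluation setup is not given here.

```python
from typing import List, Tuple

Mask = List[List[int]]  # 1 = foreground, 0 = background


def precision_recall_f(predicted: Mask, truth: Mask) -> Tuple[float, float, float]:
    """Pixel-wise precision, recall and F-measure of a predicted foreground mask."""
    tp = fp = fn = 0
    for prow, trow in zip(predicted, truth):
        for p, t in zip(prow, trow):
            if p and t:
                tp += 1  # correctly detected foreground
            elif p:
                fp += 1  # background flagged as foreground
            elif t:
                fn += 1  # missed foreground
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f


predicted = [[1, 1, 0], [0, 1, 0]]
truth = [[1, 0, 0], [0, 1, 1]]
print(precision_recall_f(predicted, truth))
```

The F-measure is the harmonic mean of precision and recall, so a detector cannot score well by flagging everything (high recall, low precision) or almost nothing (the reverse).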

Robot Localisation: An Introduction - Luis Contreras 2020.06.09 | RoboCup@Hom...

robocupathomeedu: The document summarizes Luis Contreras' upcoming lecture on robot localization using particle filters. The key points covered are:

1. Robot localization is the process of determining a robot's pose (position and orientation) over time using motion and sensor measurements within a map.

2. Particle filters represent the robot's uncertain pose as a set of weighted particles, with each particle being a hypothesis of the robot's state.

3. As the robot moves and senses its environment, the particles are propagated and weighted according to the motion and sensor models to estimate the posterior probability distribution over poses.
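The three points above describe one predict, weight and resample cycle. The sketch below runs that cycle for a robot on a 1D line. It assumes a sensor that measures position directly with Gaussian noise; real robots would score range readings against a map. The noise levels, particle count and seed are all hypothetical.

```python
import math
import random
from typing import List


def particle_filter_step(particles: List[float], control: float, measurement: float,
                         motion_noise: float, sensor_noise: float,
                         rng: random.Random) -> List[float]:
    """One predict-weight-resample cycle for a robot on a 1D line."""
    # 1. Predict: move every pose hypothesis by the commanded motion plus noise.
    moved = [p + control + rng.gauss(0.0, motion_noise) for p in particles]
    # 2. Weight: Gaussian likelihood of the measurement under each hypothesis
    #    (tiny constant avoids an all-zero weight vector).
    weights = [math.exp(-((measurement - p) ** 2) / (2 * sensor_noise ** 2)) + 1e-300
               for p in moved]
    # 3. Resample with probability proportional to weight.
    return rng.choices(moved, weights=weights, k=len(moved))


rng = random.Random(0)
particles = [rng.uniform(0.0, 20.0) for _ in range(1000)]  # no idea where we start
true_pos = 5.0
for _ in range(5):
    true_pos += 1.0                           # robot moves one unit
    z = true_pos + rng.gauss(0.0, 0.5)        # noisy position reading
    particles = particle_filter_step(particles, 1.0, z, 0.3, 1.0, rng)

estimate = sum(particles) / len(particles)
print(round(estimate, 2), true_pos)
```

The particle cloud starts spread over the whole line and collapses around the true pose after a few measurements. This is the "posterior over poses" the summary refers to.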

Automatic selection of object recognition methods using reinforcement learning

Shunta Saito: The document discusses using reinforcement learning to select automatically between two object recognition methods, so that a robot can decide which method to use depending on current conditions. It describes using Q-learning to choose between Lowe's feature matching and a vocabulary tree algorithm. States are defined from image attributes, and the learned values of state-action pairs are used to select the best recognition method.
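A tabular Q-learning sketch of this idea follows. Much of it is hypothetical, not taken from the slides: the two image-attribute states ("bright", "dark"), the simulated rewards, and all hyperparameters. The two actions stand for Lowe's feature matching and the vocabulary tree. The simulated environment simply pretends each method succeeds under one condition.

```python
import random
from typing import Dict, Tuple

STATES = ("bright", "dark")              # hypothetical image-attribute states
ACTIONS = ("lowe_matching", "vocab_tree")


def simulated_reward(state: str, action: str) -> float:
    """Hypothetical environment: each method succeeds under one condition."""
    best = "lowe_matching" if state == "bright" else "vocab_tree"
    return 1.0 if action == best else 0.0


def train(steps: int = 3000, alpha: float = 0.1, gamma: float = 0.5,
          epsilon: float = 0.2, seed: int = 0) -> Dict[Tuple[str, str], float]:
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in STATES for a in ACTIONS}
    state = rng.choice(STATES)
    for _ in range(steps):
        # Epsilon-greedy choice between the two recognition methods.
        if rng.random() < epsilon:
            action = rng.choice(ACTIONS)
        else:
            action = max(ACTIONS, key=lambda a: q[(state, a)])
        reward = simulated_reward(state, action)
        next_state = rng.choice(STATES)  # conditions change independently here
        # Q-learning update toward r + gamma * max_a' Q(s', a').
        target = reward + gamma * max(q[(next_state, a)] for a in ACTIONS)
        q[(state, action)] += alpha * (target - q[(state, action)])
        state = next_state
    return q


q = train()
for s in STATES:
    print(s, max(ACTIONS, key=lambda a: q[(s, a)]))
```

After training, the greedy policy reads the current state and picks the method with the highest learned value. On a robot, the reward would come from whether recognition actually succeeded.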

IRJET- Moving Object Detection using Foreground Detection for Video Surveil...

IRJET Journal: This document summarizes a research paper that proposes a new method for detecting moving objects in videos using foreground detection and background subtraction. The key steps of the proposed method include:
- initializing a background model using the median of initial frames;
- dynamically updating the background model to adapt to lighting changes;
- subtracting the background model from current frames and applying a threshold to detect moving objects;
- using morphological operations and projection analysis to extract human bodies and remove noise.

The experimental results showed that the proposed method can accurately and reliably detect moving human bodies in real-time video surveillance.
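The first three steps (median initialization, adaptive update, subtract-and-threshold) can be sketched in plain Python as follows. Two details are assumptions, not the paper's published rule: the update is an exponential running average applied only to pixels not flagged as moving, and the values of alpha and the threshold are hypothetical. Morphology and projection analysis are omitted.

```python
import statistics
from typing import List

Frame = List[List[float]]
Mask = List[List[int]]


def median_background(frames: List[Frame]) -> Frame:
    """Initial background: per-pixel median of the first few frames."""
    rows, cols = len(frames[0]), len(frames[0][0])
    return [[statistics.median([f[r][c] for f in frames]) for c in range(cols)]
            for r in range(rows)]


def detect(bg: Frame, frame: Frame, threshold: float = 25.0) -> Mask:
    """Subtract the background model and threshold the absolute difference."""
    return [[1 if abs(x - b) > threshold else 0 for b, x in zip(brow, frow)]
            for brow, frow in zip(bg, frame)]


def update_background(bg: Frame, frame: Frame, mask: Mask, alpha: float = 0.05) -> Frame:
    """Blend the new frame into the model where no motion was detected
    (lets the model follow gradual lighting changes)."""
    return [[b if m else (1 - alpha) * b + alpha * x for b, x, m in zip(brow, frow, mrow)]
            for brow, frow, mrow in zip(bg, frame, mask)]


init_frames = [[[10.0, 100.0]], [[12.0, 100.0]], [[11.0, 250.0]]]  # 1 x 2 frames
bg = median_background(init_frames)   # the transient 250 is rejected by the median
frame = [[13.0, 200.0]]
mask = detect(bg, frame)
bg = update_background(bg, frame, mask)
print(mask, bg)
```

The median makes initialization robust to objects passing through some of the first frames. Freezing the model under detected motion keeps moving people from being absorbed into the background.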

Partial Object Detection in Inclined Weather Conditions

IRJET Journal: This document provides a comprehensive analysis of imbalance problems in object detection. It presents a taxonomy to classify different types of imbalances and discusses solutions proposed in literature. The analysis highlights significant gaps including existing imbalances that require further attention, as well as entirely new imbalances that have never been addressed before. A survey of imbalance problems caused by weather conditions and common object imbalances is conducted. Methods for addressing imbalances include data augmentation using GANs and balancing training based on class performance.

"Separable Convolutions for Efficient Implementation of CNNs and Other Vision...

"Separable Convolutions for Efficient Implementation of CNNs and Other Vision...Edge AI and Vision Alliance For the full video of this presentation, please visit:

https://www.embedded-vision.com/platinum-members/embedded-vision-alliance/embedded-vision-training/videos/pages/may-2019-embedded-vision-summit-yu

For more information about embedded vision, please visit:

http://www.embedded-vision.com

Chen-Ping Yu, Co-founder and CEO of Phiar, presents the "Separable Convolutions for Efficient Implementation of CNNs and Other Vision Algorithms" tutorial at the May 2019 Embedded Vision Summit.

Separable convolutions are an important technique for implementing efficient convolutional neural networks (CNNs), made popular by MobileNet’s use of depthwise separable convolutions. But separable convolutions are not a new concept, and their utility is not limited to CNNs. Separable convolutions have been widely studied and employed in classical computer vision algorithms as well, in order to reduce computation demands.

We begin this talk with an introduction to separable convolutions. We then explore examples of their application in classical computer vision algorithms and in efficient CNNs, comparing some recent neural network models. We also examine practical considerations of when and how to best utilize separable convolutions in order to maximize their benefits.
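The classical form of the idea is that a 2D kernel equal to the outer product of a column vector and a row vector can be applied as two 1D passes. For a k x k kernel this costs 2k multiplications per output pixel instead of k². The sketch below checks this on the Sobel-x kernel with a made-up test image. MobileNet's depthwise separable convolution is a related but different factorization, across channels rather than rows and columns, and it is not shown here.

```python
from typing import List

Image = List[List[float]]


def correlate2d(img: Image, kernel: Image) -> Image:
    """'Valid' 2D cross-correlation (no padding), as used in most CNN libraries."""
    kh, kw = len(kernel), len(kernel[0])
    return [[sum(kernel[i][j] * img[r + i][c + j] for i in range(kh) for j in range(kw))
             for c in range(len(img[0]) - kw + 1)]
            for r in range(len(img) - kh + 1)]


def separable(img: Image, col: List[float], row: List[float]) -> Image:
    """Same result via a vertical 1D pass followed by a horizontal 1D pass."""
    tmp = correlate2d(img, [[v] for v in col])  # kh x 1 kernel
    return correlate2d(tmp, [row])              # 1 x kw kernel


col, row = [1.0, 2.0, 1.0], [1.0, 0.0, -1.0]     # Sobel-x = outer(col, row)
sobel_x = [[c * r for r in row] for c in col]
img = [[float((r * 7 + c * 3) % 10) for c in range(6)] for r in range(5)]
print(correlate2d(img, sobel_x) == separable(img, col, row))  # True
```

The savings grow with kernel size: a 3 x 3 kernel drops from 9 to 6 multiplications per pixel, and a 7 x 7 kernel drops from 49 to 14.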

Pontillo Semanti Code Using Content Similarity And Database Driven Matching T...

Kalle: Laboratory eyetrackers, constrained to a fixed display and static (or accurately tracked) observer, facilitate automated analysis of fixation data. Development of wearable eyetrackers has extended environments and tasks that can be studied at the expense of automated analysis. Wearable eyetrackers provide 2D point-of-regard (POR) in scene-camera coordinates, but the researcher is typically interested in some high-level semantic property (e.g., object identity, region, or material) surrounding individual fixation points. The synthesis of POR into fixations and semantic information remains a labor-intensive manual task, limiting the application of wearable eyetracking.

We describe a system that segments POR videos into fixations and allows users to train a database-driven, object-recognition system. A correctly trained library results in a very accurate and semi-automated translation of raw POR data into a sequence of objects, regions or materials.

Portfolio

Ivan Khomyakov: This portfolio summarizes Ivan Khomyakov's skills and experience. He has extensive knowledge of programming languages such as C++, C# and Python, and of technologies including OpenCV, SQL, machine learning, AWS and Unity 3D. His projects include a fast cubemap filter for rendering environments, a real-time locating system for tracking objects, and a dynamic map module for navigation systems. He also has experience with route editing tools, augmented reality applications, medical image segmentation and machine learning algorithms. His background includes both academic and professional work on computer vision, image processing, statistics and more.

IRJET- Object Detection and Recognition using Single Shot Multi-Box Detector

IRJET Journal: This document summarizes research on object detection and recognition using the Single Shot Multi-Box Detector (SSD) deep learning model. SSD improves on existing object detection systems by eliminating the need for generating object proposals and resampling pixels or features, thereby making detection faster and encapsulating all computation in a single neural network. The researchers applied SSD to standard datasets like PASCAL VOC, COCO, and ILSVRC and achieved competitive accuracy compared to methods using additional proposal steps, with SSD running significantly faster at 59 FPS. Experimental results on PASCAL VOC using SSD achieved a mean average precision of 74.3%, outperforming a comparable Faster R-CNN model.

A Novel Background Subtraction Algorithm for Dynamic Texture Scenes

IJMER: International Journal of Modern Engineering Research (IJMER) is a peer-reviewed online journal. It serves as an international archival forum for scholarly research related to engineering and science education.



FCN-Based 6D Robotic Grasping for Arbitrary Placed Objects

- 1. FCN-Based 6D Robotic Grasping for Arbitrary Placed Objects. Hitoshi Kusano*, Ayaka Kume+, Eiichi Matsumoto+, Jethro Tan+ (*Kyoto University, +Preferred Networks, Inc.), June 2, 2017. ※This work is the result of the Preferred Networks internship program.

- 2. Background: Successful robotic grasping requires deriving the configurations of the robot and its end-effector, e.g. grasp pose, grasp width, grasp height, and joint angles. ・The traditional approach decomposes the grasping process into several stages, which require many heuristics. ・Machine-learning-based end-to-end approaches have emerged. [Figures: a complex end-effector and a cluttered environment; image source: http://www.schunk-modular-robotics.com/]

- 3. Previous Work (machine-learning-based end-to-end approaches): Pinto2016, Levine2016, Araki2016, Guo2017. None of the prior methods can predict a 6D grasp. [Figure: a grasp described by (x, y), height, and width, contrasted with a 6D grasp (x, y, z, roll, pitch, yaw)]

- 4. Purpose and Contribution. Purpose: end-to-end learning to grasp arbitrarily placed objects. Contributions: ○ A novel data collection strategy that obtains 6D grasp configurations from human demonstrations with a teach tool ○ An end-to-end CNN model that predicts 6D grasp configurations (x, y, z, w, p, r)

- 5. Grasp Configuration Network ● An extension of Fully Convolutional Networks (FCN) ● Outputs two score maps: a Location Map giving the graspability of each pixel, and a Configuration Map giving the end-effector configuration (z, w, p, r) for each pixel ● For the Configuration Map, the network classifies valid grasp configurations into 300 classes rather than regressing them ● [Figure: the Location Map and the Configuration Map together yield the 6D grasp (x, y, z, w, p, r)]
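The slide does not say how the two maps are turned into a single grasp, so the following is only a minimal sketch under stated assumptions. It assumes the Location Map is an H x W score grid, the Configuration Map is a 300 x H x W grid of class scores, and a hypothetical `class_table` maps each class index to a (z, w, p, r) tuple. It picks the most graspable pixel for (x, y) and then the best-scoring configuration class at that pixel. Converting pixel (x, y) to robot coordinates (camera calibration) is not shown.

```python
from typing import Sequence, Tuple

NUM_CLASSES = 300  # number of configuration classes stated on the slide

Grid = Sequence[Sequence[float]]
Config = Tuple[float, float, float, float]  # (z, w, p, r)


def argmax(values: Sequence[float]) -> int:
    """Index of the largest value (the first one on ties)."""
    return max(range(len(values)), key=lambda i: values[i])


def decode_grasp(location_map: Grid,
                 config_map: Sequence[Grid],
                 class_table: Sequence[Config]):
    """Combine the two score maps into one grasp (x, y, z, w, p, r).

    location_map: H x W graspability scores, indexed [y][x].
    config_map:   C x H x W class scores, indexed [c][y][x] (hypothetical layout).
    class_table:  C entries mapping a class index to (z, w, p, r) (hypothetical).
    """
    height, width = len(location_map), len(location_map[0])
    # 1. The most graspable pixel gives the image position (x, y).
    flat = [location_map[y][x] for y in range(height) for x in range(width)]
    y, x = divmod(argmax(flat), width)
    # 2. Classification, not regression: pick the best of the C classes there.
    cls = argmax([config_map[c][y][x] for c in range(len(config_map))])
    z, w, p, r = class_table[cls]
    return x, y, z, w, p, r


if __name__ == "__main__":
    H, W = 4, 5
    loc = [[0.0] * W for _ in range(H)]
    loc[2][3] = 0.9  # most graspable pixel is x=3, y=2
    conf = [[[0.0] * W for _ in range(H)] for _ in range(NUM_CLASSES)]
    conf[7][2][3] = 1.0  # class 7 scores highest at that pixel
    table = [(float(c), 0.0, 90.0, 0.0) for c in range(NUM_CLASSES)]
    print(decode_grasp(loc, conf, table))
```

Treating the configuration as one of a fixed set of classes means the network always outputs a configuration that appears in the class table, which is one plausible reason to prefer classification over regression here.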

- 6. Data Collection: Using a simple teach tool, we demonstrated 11,320 grasps for 7 objects. [Figure: the simple teach tool and the robotic gripper; image source: https://www.thk.com]
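The slides do not describe how a demonstrated grasp becomes a training target for the 300-class Configuration Map. One possible approach, sketched here purely as an assumption, is to mark the demonstrated pixel in a Location Map target and assign the demonstrated (z, w, p, r) to its nearest predefined class center. The class centers, the distance function, the `angle_weight` parameter, and the use of degrees are all hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Config = Tuple[float, float, float, float]  # (z, w, p, r); angles in degrees (assumed)


def angle_diff(a: float, b: float) -> float:
    """Smallest absolute difference between two angles in degrees (wraps at 360)."""
    d = (a - b) % 360.0
    return min(d, 360.0 - d)


def config_distance(a: Config, b: Config, angle_weight: float = 0.01) -> float:
    """Hypothetical distance mixing height error and wrapped angular error."""
    dz = a[0] - b[0]
    dang = sum(angle_diff(u, v) ** 2 for u, v in zip(a[1:], b[1:]))
    return math.sqrt(dz * dz + angle_weight * dang)


def to_class_label(demo: Config, centers: Sequence[Config]) -> int:
    """Assign a demonstrated configuration to its nearest class center."""
    return min(range(len(centers)), key=lambda i: config_distance(demo, centers[i]))


def make_training_sample(demo_xy: Tuple[int, int], demo_config: Config,
                         centers: Sequence[Config], height: int, width: int):
    """Build (location target, class label) for one demonstrated grasp."""
    x, y = demo_xy
    location_target: List[List[int]] = [[0] * width for _ in range(height)]
    location_target[y][x] = 1  # the demonstrated pixel is marked graspable
    return location_target, to_class_label(demo_config, centers)
```

Wrapping the angle difference keeps, for example, 350 degrees and 10 degrees close together (20 degrees apart) instead of 340 degrees apart, which matters when configurations are binned into classes.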

- 7. Experiment Setup: A. Intel RealSense SR300 RGB-D camera B. Arbitrarily placed object C. THK TRX-S 3-finger gripper D. FANUC M-10iA 6-DOF robot arm

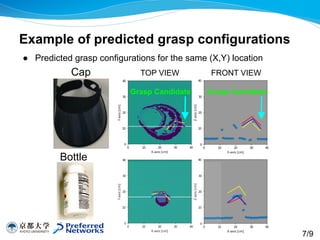

- 8. Example of predicted grasp configurations ● Predicted grasp configurations for the same (x, y) location [Figure: top and front views of grasp candidates for a cap and a bottle]
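Because the Configuration Map holds a score for every class at every pixel, a single (x, y) location can yield several ranked grasp candidates, as the example slide shows. The sketch below assumes, as a hypothetical, that candidates are the top-k scoring classes at that pixel, using the same [class][y][x] layout and hypothetical `class_table` lookup as before; the slide does not state how its candidates were selected.

```python
import heapq
from typing import List, Sequence, Tuple

Config = Tuple[float, float, float, float]  # (z, w, p, r)


def top_k_candidates(config_map: Sequence[Sequence[Sequence[float]]],
                     x: int, y: int,
                     class_table: Sequence[Config],
                     k: int = 3) -> List[Tuple[float, Config]]:
    """Return the k highest-scoring (score, (z, w, p, r)) pairs at pixel (x, y).

    config_map is indexed [class][y][x] (hypothetical layout).
    """
    scores = [config_map[c][y][x] for c in range(len(config_map))]
    # heapq.nlargest ranks class indices by their score, highest first.
    best = heapq.nlargest(k, range(len(scores)), key=scores.__getitem__)
    return [(scores[c], class_table[c]) for c in best]
```

Keeping several candidates per location lets a downstream step skip a configuration that would, for example, be kinematically unreachable, and fall back to the next-best class at the same (x, y).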

- 9. Results of robotic experiment: known objects and unknown objects. [Figure: per-object success rates, each over 10 trials: 70%, 50%, 60%, 40%, 20%, 40%, 60%, 60%, 20%, 20%, 40%, 30%]

- 10. System Test ※This video is played at double speed.

- 11. Thank you for listening. I hope to talk with you in the interactive session.