Feature Engineering - Getting most out of data for predictive models

- 1. Feature Engineering Gabriel Moreira @gspmoreira Getting the most out of data for predictive models Lead Data Scientist DSc. student 2017

- 2. Agenda ● Machine Learning Pipeline ● Data Munging ● Feature Engineering ○ Numerical features ○ Categorical features ○ Temporal and Spatial features ○ Textual features ● Feature Selection Extra slides marker

- 3. Data Models Features Useful attributes for your modeling task

- 4. "Feature engineering is the process of transforming raw data into features that better represent the underlying problem to the predictive models, resulting in improved model accuracy on unseen data." – Jason Brownlee

- 5. “Coming up with features is difficult, time-consuming, requires expert knowledge. 'Applied machine learning' is basically feature engineering.” – Andrew Ng

- 6. The Dream... Raw data Dataset Model Task

- 7. … The Reality ? ML Ready dataset Features Model ? Task Raw data

- 8. Here are some Feature Engineering techniques for your Data Science toolbox...

- 9. Case Study

- 10. Outbrain Click Prediction - Kaggle competition Dataset ● Sample of users' page views and clicks over 14 days in June 2016 ● 2 billion page views ● 17 million click records ● 700 million unique users ● 560 sites Can you predict which recommended content each user will click?

- 11. I got 19th position from about 1000 competitors (top 2%), mostly due to Feature Engineering techniques.

- 12. Data Munging

- 13. First of all … take a closer look at your data ● What does the data model look like? ● What is the distribution of the features? ● Which features have missing or inconsistent values? ● What are the most predictive features? ● Conduct an Exploratory Data Analysis (EDA)

- 14. Outbrain Click Prediction - Data Model Numerical Spatial Temporal Categorical Target

- 15. ML-Ready Dataset Fields (Features) Instances Tabular data (rows and columns) ● Usually denormalized in a single file/dataset ● Each row contains information about one instance ● Each column is a feature that describes a property of the instance

- 16. Data Cleansing Homogenize missing values and differing data types within the same feature, fix input errors, types, etc. Original data Cleaned data

- 17. Aggregating Necessary when the entity to model is an aggregation from the provided data. Original data (list of playbacks) Aggregated data (list of users)

- 18. Pivoting Necessary when the entity to model is an aggregation from the provided data. Aggregated data with pivoted columns Original data # playbacks by device Play duration by device
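
The aggregating and pivoting steps above can be sketched in plain Python. This is a minimal illustration, not the author's code: the `playbacks` records and the `playbacks_<device>` / `duration_<device>` column names are hypothetical.

```python
from collections import defaultdict

# Hypothetical raw data: one row per playback (user, device, seconds played).
playbacks = [
    ("u1", "mobile", 120),
    ("u1", "tv", 300),
    ("u2", "mobile", 60),
    ("u1", "mobile", 30),
]

# Aggregate to one row per user, pivoting the device into separate columns.
users = defaultdict(lambda: defaultdict(int))
for user, device, seconds in playbacks:
    users[user][f"playbacks_{device}"] += 1       # number of playbacks by device
    users[user][f"duration_{device}"] += seconds  # play duration by device

for user, columns in sorted(users.items()):
    print(user, dict(columns))
```

Each user now occupies a single row, which matches the "one instance per row" layout expected of an ML-ready dataset.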

- 20. Numerical features ● Usually easy for mathematical models to ingest, but feature engineering is still necessary. ● Can be floats, counts, ... ● Easier to impute missing data ● Distribution and scale matter to some models

- 21. Imputation for missing values ● Datasets contain missing values, often encoded as blanks, NaNs or other placeholders ● Ignoring rows and/or columns with missing values is possible, but at the price of losing data which might be valuable ● A better strategy is to infer them from the known part of the data ● Strategies ○ Mean: Basic approach ○ Median: More robust to outliers ○ Mode: Most frequent value ○ Using a model: Can expose algorithmic bias

- 22. >>> import numpy as np >>> from sklearn.preprocessing import Imputer >>> imp = Imputer(missing_values='NaN', strategy='mean', axis=0) >>> imp.fit([[1, 2], [np.nan, 3], [7, 6]]) Imputer(axis=0, copy=True, missing_values='NaN', strategy='mean', verbose=0) >>> X = [[np.nan, 2], [6, np.nan], [7, 6]] >>> print(imp.transform(X)) [[ 4. 2. ] [ 6. 3.666...] [ 7. 6. ]] Missing values imputation with scikit-learn Imputation for missing values
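
The `Imputer` class used on this slide was removed from scikit-learn in version 0.22. A minimal sketch of the same example with its replacement, `sklearn.impute.SimpleImputer`, assuming a recent scikit-learn release is installed:

```python
import numpy as np
from sklearn.impute import SimpleImputer

# Learn the per-column means from the training data, ignoring NaNs.
imp = SimpleImputer(missing_values=np.nan, strategy="mean")
imp.fit([[1, 2], [np.nan, 3], [7, 6]])

# Replace each NaN with the mean of its column learned during fit().
X = [[np.nan, 2], [6, np.nan], [7, 6]]
X_imputed = imp.transform(X)
print(X_imputed)
```

Setting `strategy` to `"median"` or `"most_frequent"` selects the median and mode strategies listed on the previous slide.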

- 23. Rounding ● Form of lossy compression: retain the most significant features of the data. ● Sometimes too much precision is just noise ● Rounded variables can be treated as categorical variables ● Example: Some models like Association Rules work only with categorical features. It is possible to convert a percentage into a categorical feature this way

- 24. Binarization ● Transform discrete or continuous numeric features into binary features Example: Number of user views of the same document >>> from sklearn import preprocessing >>> X = [[ 1., -1., 2.], ... [ 2., 0., 0.], ... [ 0., 1., -1.]] >>> binarizer = preprocessing.Binarizer(threshold=1.0) >>> binarizer.transform(X) array([[ 0., 0., 1.], [ 1., 0., 0.], [ 0., 0., 0.]]) Binarization with scikit-learn (only values strictly greater than the threshold map to 1)

- 25. Binning ● Split numerical values into bins and encode with a bin ID ● Can be set arbitrarily or based on distribution ● Fixed-width binning Does fixed-width binning make sense for this long-tailed distribution? Most users (458,234,809 ~ 5*10^8) had only 1 pageview during the period.

- 26. Binning ● Adaptive or Quantile binning Divides data into equal portions (eg. by median, quartiles, deciles) >>> deciles = dataframe['review_count'].quantile([.1, .2, .3, .4, .5, .6, .7, .8, .9]) >>> deciles 0.1 3.0 0.2 4.0 0.3 5.0 0.4 6.0 0.5 8.0 0.6 12.0 0.7 17.0 0.8 28.0 0.9 58.0 Quantile binning with Pandas
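
The slide computes the quantile cut points; turning them into bin IDs is a separate step. A minimal standard-library sketch of that step, using hypothetical counts and quartiles rather than deciles to keep the example small:

```python
from bisect import bisect_right
from statistics import quantiles

# Hypothetical long-tailed counts (e.g. reviews per business).
review_counts = [1, 2, 2, 3, 3, 4, 5, 8, 13, 21, 34, 55, 144, 377, 1000, 5000]

# Three cut points that split the data into four quartile bins.
cut_points = quantiles(review_counts, n=4, method="inclusive")

# Bin ID = number of cut points that are <= the value (0 is the lowest bin).
bin_ids = [bisect_right(cut_points, value) for value in review_counts]

print(cut_points)
print(bin_ids)
```

Because the cut points follow the data, the long tail ends up in its own bin instead of stretching a fixed-width grid. Ties at a cut point can still make the bins slightly unequal in size.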

- 27. Log transformation Compresses the range of large numbers and expands the range of small numbers. Eg. the larger x is, the more slowly log(x) grows.

- 28. Log transformation Histogram of # views by user Histogram of # views by user smoothed by log(1+x) Smoothing long-tailed data with log
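
A minimal sketch of the log(1+x) smoothing named on this slide, using the standard library; the `views_per_user` values are made up for illustration:

```python
import math

# Hypothetical long-tailed feature: number of page views per user.
views_per_user = [1, 1, 2, 5, 40, 1_000, 250_000]

# log1p(x) computes log(1 + x), so a count of 0 would map to 0
# instead of raising a math domain error.
log_views = [math.log1p(v) for v in views_per_user]

for raw, transformed in zip(views_per_user, log_views):
    print(f"{raw:>7} -> {transformed:.2f}")
```

The raw values span more than five orders of magnitude, while the transformed values all fall between roughly 0.7 and 12.5.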

- 29. Scaling ● Models that are smooth functions of input features are sensitive to the scale of the input (eg. Linear Regression) ● Scale numerical variables into a certain range, dividing values by a normalization constant (no changes in single-feature distribution) ● Popular techniques ○ MinMax Scaling ○ Standard (Z) Scaling

- 30. Min-max scaling ● Squeezes (or stretches) all values into the range [0, 1], adding robustness to very small standard deviations and preserving zero entries in sparse data. >>> import numpy as np >>> from sklearn import preprocessing >>> X_train = np.array([[ 1., -1., 2.], ... [ 2., 0., 0.], ... [ 0., 1., -1.]]) >>> min_max_scaler = preprocessing.MinMaxScaler() >>> X_train_minmax = min_max_scaler.fit_transform(X_train) >>> X_train_minmax array([[ 0.5 , 0. , 1. ], [ 1. , 0.5 , 0.33333333], [ 0. , 1. , 0. ]]) Min-max scaling with scikit-learn

- 31. Standard (Z) Scaling After standardization, a feature has a mean of 0 and a variance of 1 (an assumption of many learning algorithms) >>> from sklearn import preprocessing >>> import numpy as np >>> X = np.array([[ 1., -1., 2.], ... [ 2., 0., 0.], ... [ 0., 1., -1.]]) >>> X_scaled = preprocessing.scale(X) >>> X_scaled array([[ 0. ..., -1.22..., 1.33...], [ 1.22..., 0. ..., -0.26...], [-1.22..., 1.22..., -1.06...]]) >>> X_scaled.mean(axis=0) array([ 0., 0., 0.]) >>> X_scaled.std(axis=0) array([ 1., 1., 1.]) Standardization with scikit-learn

- 32. Normalization ● Scales individual samples (rows) to unit norm, dividing values by the vector's L2 norm, a.k.a. the Euclidean norm ● Useful for quadratic forms (like the dot product) or any other kernel used to quantify the similarity of pairs of samples. This assumption is the basis of the Vector Space Model often used in text classification and clustering contexts. Normalized vector: $\hat{x} = x / \lVert x \rVert_2$. Euclidean (L2) norm: $\lVert x \rVert_2 = \sqrt{x_1^2 + x_2^2 + \dots + x_n^2}$

- 33. >>> from sklearn import preprocessing >>> X = [[ 1., -1., 2.], ... [ 2., 0., 0.], ... [ 0., 1., -1.]] >>> X_normalized = preprocessing.normalize(X, norm='l2') >>> X_normalized array([[ 0.40..., -0.40..., 0.81...], [ 1. ..., 0. ..., 0. ...], [ 0. ..., 0.70..., -0.70...]]) Normalization with scikit-learn Normalization

- 34. Interaction Features ● Simple linear models use a linear combination of the individual input features $x_1, x_2, \dots, x_n$ to predict the outcome $y$: $y = w_1 x_1 + w_2 x_2 + \dots + w_n x_n$ ● An easy way to increase the complexity of the linear model is to create feature combinations (nonlinear features). ● Example: Degree 2 interaction features for vector $x = (x_1, x_2)$: $y = w_1 x_1 + w_2 x_2 + w_3 x_1 x_2 + w_4 x_1^2 + w_5 x_2^2$

- 35. Interaction Features >>> import numpy as np >>> from sklearn.preprocessing import PolynomialFeatures >>> X = np.arange(6).reshape(3, 2) >>> X array([[0, 1], [2, 3], [4, 5]]) >>> poly = PolynomialFeatures(degree=2, interaction_only=False, include_bias=True) >>> poly.fit_transform(X) array([[ 1., 0., 1., 0., 0., 1.], [ 1., 2., 3., 4., 6., 9.], [ 1., 4., 5., 16., 20., 25.]]) Polynomial features with scikit-learn

- 36. Interaction Features - Vowpal Wabbit vw --loss_function logistic --link=logistic --ftrl --ftrl_alpha 0.005 --ftrl_beta 0.1 -q cc -q zc -q zm -l 0.01 --l1 1.0 --l2 1.0 -b 28 --hash all --compressed -d data/train_fv.vw -f output.model Feature interactions with VW Interacting (quadratic) features of some namespaces vw_line = '{} |i {} |m {} |z {} |c {}\n'.format( label, ' '.join(integer_features), ' '.join(ctr_features), ' '.join(similarity_features), ' '.join(categorical_features)) Separating features in namespaces in Vowpal Wabbit (VW) sparse format 1 |i 12:5 18:126 |m 2:0.015 45:0.123 |z 32:0.576 17:0.121 |c 16:1 295:1 3554:1 Sample data point (line in VW format file)

- 38. Categorical Features ● Nearly always need some treatment to be suitable for models ● High cardinality can create very sparse data ● Difficult to impute missing values ● Examples: Platform: [“desktop”, “tablet”, “mobile”] Document_ID or User_ID: [121545, 64845, 121545]

- 39. One-Hot Encoding (OHE) ● Transform a categorical feature with m possible values into m binary features. ● If the variable cannot belong to multiple categories at once, then only one bit in the group can be on. ● Sparse format is memory-friendly ● Example: “platform=tablet” can be sparsely encoded as “2:1”
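
A minimal pure-Python sketch of the dense and sparse encodings described above. The 1-based column numbering is an assumption chosen so that "tablet" maps to column 2, as in the slide's "2:1" example.

```python
# Known category values, in a fixed order.
platforms = ["desktop", "tablet", "mobile"]

# Assumed convention: 1-based column index per category.
column_index = {value: i for i, value in enumerate(platforms, start=1)}

def one_hot_dense(value):
    """Return m binary features, exactly one of which is on."""
    return [1 if value == p else 0 for p in platforms]

def one_hot_sparse(value):
    """Return only the active column as 'index:value'."""
    return f"{column_index[value]}:1"

print(one_hot_dense("tablet"))   # [0, 1, 0]
print(one_hot_sparse("tablet"))  # 2:1
```

With m categories, the dense form stores m numbers per row, while the sparse form stores one entry regardless of m.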

- 40. One-hot encoding with Spark ML One-Hot Encoding (OHE) from pyspark.ml.feature import OneHotEncoder, StringIndexer df = spark.createDataFrame([ (0, "a"), (1, "b"), (2, "c"), (3, "a"), (4, "a"), (5, "c") ], ["id", "category"]) stringIndexer = StringIndexer(inputCol="category", outputCol="categoryIndex") model = stringIndexer.fit(df) indexed = model.transform(df) encoder = OneHotEncoder(inputCol="categoryIndex", outputCol="categoryVec") encoded = encoder.transform(indexed) encoded.show()

- 41. Large Categorical Variables ● Common in applications like targeted advertising and fraud detection ● Example: Some large categorical features from Outbrain Click Prediction competition

- 42. Feature hashing ● Hashes categorical values into fixed-length vectors. ● Lower sparsity and higher compression compared to OHE ● Deals with new and rare categorical values (eg: new user-agents) ● May introduce collisions 100 hashed columns

- 43. Feature hashing import hashlib def hashstr(s, nr_bins): return int(hashlib.md5(s.encode('utf8')).hexdigest(), 16)%(nr_bins-1)+1 CATEGORICAL_VALUE='ad_id=354424' MAX_BINS=100000 >>> hashstr(CATEGORICAL_VALUE, MAX_BINS) 49389 Feature hashing with pure Python Original category Hashed category import tensorflow as tf ad_id_hashed = tf.contrib.layers.sparse_column_with_hash_bucket('ad_id', hash_bucket_size=250000, dtype=tf.int64, combiner="sum") Feature hashing with TensorFlow
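
The pure-Python snippet on this slide hashes a single value. A minimal sketch of the next step, filling a fixed-length vector from several categorical values, is below. It is an illustration under assumptions: the helper names and the `row` values are hypothetical, and buckets are 0-based here, unlike the 1-based `hashstr` above.

```python
import hashlib

def hash_bucket(value: str, n_bins: int) -> int:
    """Map a categorical value to a bucket in [0, n_bins)."""
    digest = hashlib.md5(value.encode("utf8")).hexdigest()
    return int(digest, 16) % n_bins

def hash_features(values, n_bins):
    """Count how many values fall into each bucket."""
    vector = [0] * n_bins
    for value in values:
        vector[hash_bucket(value, n_bins)] += 1
    return vector

# A previously unseen value still gets a valid column, without refitting.
row = ["ad_id=354424", "platform=tablet", "user_agent=never_seen_before"]
hashed = hash_features(row, n_bins=100)
print(len(hashed), sum(hashed))
```

The vector length stays at `n_bins` however many distinct categories exist; the cost is that two different values can land in the same bucket.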

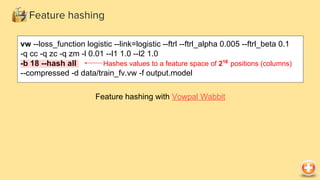

- 44. Feature hashing vw --loss_function logistic --link=logistic --ftrl --ftrl_alpha 0.005 --ftrl_beta 0.1 -q cc -q zc -q zm -l 0.01 --l1 1.0 --l2 1.0 -b 18 --hash all --compressed -d data/train_fv.vw -f output.model Feature hashing with Vowpal Wabbit Hashes values to a feature space of 2^18 positions (columns)

- 45. Bin-counting ● Instead of using the actual categorical value, use a global statistic of this category on historical data. ● Useful for both linear and non-linear algorithms ● May give collisions (same encoding for different categories) ● Be careful about leakage ● Strategies ○ Count ○ Average CTR

- 46. Bin-counting: encode each category by its counts or by its Click-Through Rate, P(click | ad) = ad_clicks / ad_views
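
A minimal sketch of bin-counting with the click-through rate formula above, using the standard library. The `history` log and ad IDs are hypothetical.

```python
from collections import Counter

# Hypothetical historical log: (ad_id, clicked) pairs.
history = [
    ("ad_1", 1), ("ad_1", 0), ("ad_1", 0), ("ad_1", 1),
    ("ad_2", 0), ("ad_2", 0),
    ("ad_3", 1),
]

ad_views = Counter(ad for ad, _ in history)
ad_clicks = Counter(ad for ad, clicked in history if clicked)

# P(click | ad) = ad_clicks / ad_views, one statistic per category.
ctr = {ad: ad_clicks[ad] / ad_views[ad] for ad in ad_views}
print(ctr)
```

To limit the leakage the previous slide warns about, the statistics would normally be computed on data that precedes the rows being trained on, not on those rows themselves. Note that `ad_3` gets a CTR of 1.0 from a single view, so rare categories may need smoothing or a minimum-count rule.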

- 47. LabelCount encoding ● Rank categorical variables by count in train set ● Useful for both linear and non-linear algorithms (eg: decision trees) ● Not sensitive to outliers ● Won’t give same encoding to different variables
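
A minimal sketch of LabelCount encoding as described above. Two details are assumptions not stated on the slide: rank 1 goes to the rarest category, and ties are broken alphabetically so that no two categories share a rank.

```python
from collections import Counter

# Hypothetical training column.
train = ["mobile", "desktop", "mobile", "tablet", "mobile", "desktop"]

counts = Counter(train)

# Sort by (count, name): ascending count, ties broken alphabetically.
ordered = sorted(counts, key=lambda category: (counts[category], category))
rank = {category: r for r, category in enumerate(ordered, start=1)}

encoded = [rank[category] for category in train]
print(rank)     # {'tablet': 1, 'desktop': 2, 'mobile': 3}
print(encoded)  # [3, 2, 3, 1, 3, 2]
```

Because only the rank is kept, a category that is 1000 times more frequent than the next one is still just one step higher, which is why the encoding is not sensitive to outliers.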

- 48. Category Embedding ● Use a Neural Network to create dense embeddings from categorical variables. ● Map categorical variables in a function approximation problem into Euclidean spaces ● Faster model training. ● Less memory overhead. ● Can give better accuracy than one-hot encoding.

- 49. Category Embedding Category Embedding using TensorFlow import math import tensorflow as tf def get_embedding_size(unique_val_count): return int(math.floor(6 * unique_val_count**0.25)) ad_id_hashed_feature = tf.contrib.layers.sparse_column_with_hash_bucket('ad_id', hash_bucket_size=250000, dtype=tf.int64, combiner="sum") embedding_size = get_embedding_size( ad_id_hashed_feature.length) ad_embedding_feature = tf.contrib.layers.embedding_column( ad_id_hashed_feature, dimension=embedding_size, combiner="sum")

- 51. Time Zone conversion Factors to consider: ● Multiple time zones in some countries ● Daylight Saving Time (DST) ○ Start and end DST dates

- 52. Time binning ● Apply binning on time data to make it categorical and more general. ● Bin a time into hours or periods of the day, as below. ● Extraction: weekday/weekend, weeks, months, quarters, years...

  | Hour range | Bin ID | Bin description |
  | --- | --- | --- |
  | [5, 8) | 1 | Early Morning |
  | [8, 11) | 2 | Morning |
  | [11, 14) | 3 | Midday |
  | [14, 19) | 4 | Afternoon |
  | [19, 22) | 5 | Evening |
  | [22, 24) and [0, 5) | 6 | Night |
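
A minimal sketch of the time binning above, using the standard library. The function names are hypothetical; the hour ranges and bin IDs are the ones from the slide, read as half-open intervals.

```python
from datetime import datetime

# (start_hour, end_hour, bin_id, description); each interval is [start, end).
PERIODS = [
    (5, 8, 1, "Early Morning"),
    (8, 11, 2, "Morning"),
    (11, 14, 3, "Midday"),
    (14, 19, 4, "Afternoon"),
    (19, 22, 5, "Evening"),
]

def period_of_day(ts):
    """Return (bin_id, description) for a timestamp's hour."""
    for start, end, bin_id, label in PERIODS:
        if start <= ts.hour < end:
            return bin_id, label
    return 6, "Night"  # hours 22-23 and 0-4

def is_weekend(ts):
    """Monday is 0, so Saturday and Sunday are 5 and 6."""
    return ts.weekday() >= 5

ts = datetime(2016, 6, 18, 23, 15)
print(period_of_day(ts), is_weekend(ts))
```

The timestamp should already be in the user's local time zone before binning, which is why the time zone conversion on the previous slide comes first.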

- 53. Trendlines ● Instead of encoding total spend, encode things like spend in the last week, spend in the last month, spend in the last year. ● Gives a trend to the algorithm: two customers with equal spend can have wildly different behavior; one customer may be starting to spend more, while the other is starting to decline spending.
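
A minimal sketch of the windowed-spend idea, using the standard library. The two customers, their transactions, and the `spend_in_window` helper are hypothetical.

```python
from datetime import date, timedelta

def spend_in_window(transactions, as_of, days):
    """Total spend in the `days` days up to and including `as_of`."""
    start = as_of - timedelta(days=days)
    return sum(amount for day, amount in transactions if start < day <= as_of)

as_of = date(2017, 4, 1)

# Two hypothetical customers with the same total spend (120).
growing = [(date(2016, 6, 1), 10), (date(2017, 3, 15), 30), (date(2017, 3, 30), 80)]
declining = [(date(2016, 6, 1), 80), (date(2016, 12, 1), 30), (date(2017, 3, 30), 10)]

for name, transactions in [("growing", growing), ("declining", declining)]:
    features = {f"spend_last_{d}d": spend_in_window(transactions, as_of, d)
                for d in (7, 30, 365)}
    print(name, features)
```

The yearly totals are identical, but the 7-day and 30-day windows separate the two customers, which is the trend signal the slide describes.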

- 54. ● Hardcode categorical features from dates ● Example: Factors that might have major influence on spending behavior ● Proximity to major events (holidays, major sports events) ○ Eg. date_X_days_before_holidays ● Proximity to wages payment date (monthly seasonality) ○ Eg. first_saturday_of_the_month Closeness to major events

- 55. Time differences ● Differences between dates might be relevant ● Examples: ○ user_interaction_date - published_doc_date To model how recent the ad was when the user viewed it. Hypothesis: user interest in a topic may decay over time ○ last_user_interaction_date - user_interaction_date To model how old a given user interaction was compared to the user's last interaction
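
A minimal sketch of the first time-difference feature, with made-up timestamps. Expressing the difference in hours is an assumption; any unit works as long as it is consistent.

```python
from datetime import datetime

# Hypothetical timestamps for one page view.
published_doc_date = datetime(2016, 6, 14, 8, 0)
user_interaction_date = datetime(2016, 6, 15, 20, 30)

# Subtracting datetimes gives a timedelta; convert it to a numeric feature.
doc_age = user_interaction_date - published_doc_date
doc_age_hours = doc_age.total_seconds() / 3600

print(doc_age_hours)  # 36.5
```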

- 56. Spatial Features

- 57. Spatial Variables ● Spatial variables encode a location in space, like: ○ GPS-coordinates (lat. / long.) - sometimes require projection to a different coordinate system ○ Street Addresses - require geocoding ○ ZipCodes, Cities, States, Countries - usually enriched with the centroid coordinate of the polygon (from external GIS data) ● Derived features ○ Distance between a user location and searched hotels (Expedia competition) ○ Impossible travel speed (fraud detection)
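
Both derived features above rest on a distance between two coordinates. A minimal sketch using the haversine (great-circle) formula, assuming a spherical Earth with a mean radius of 6371 km; the `travel_speed_kmh` helper and the example coordinates are illustrative.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean radius; assumes a spherical Earth

def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in km between two (lat, lon) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlambda = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))

def travel_speed_kmh(distance_km, hours):
    """Implied speed between two events; very high values suggest fraud."""
    return distance_km / hours

# Approximate coordinates of São Paulo and Rio de Janeiro.
distance = haversine_km(-23.55, -46.63, -22.91, -43.17)
print(round(distance), "km")
print(round(travel_speed_kmh(distance, 0.25)), "km/h if 15 minutes apart")
```

Two card transactions 15 minutes apart in these two cities would imply a speed well above that of a commercial flight, which is the kind of signal the "impossible travel speed" feature captures.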

- 58. Spatial Enrichment Usually useful to enrich with external geographic data (eg. Census demographics) Beverage Containers Redemption Fraud Detection: Usage of # containers redeemed (red circles) by store and Census households median income by Census Tracts

- 59. Textual data

- 60. Natural Language Processing Cleaning • Lowercasing • Convert accented characters • Removing non-alphanumeric • Repairing Tokenizing • Encode punctuation marks • Tokenize • N-Grams • Skip-grams • Char-grams • Affixes Removing • Stopwords • Rare words • Common words Roots • Spelling correction • Chop • Stem • Lemmatize Enrich • Entity Insertion / Extraction • Parse Trees • Reading Level

- 61. Text vectorization Represent each document as a feature vector in the vector space, where each position represents a word (token) and the contained value is its relevance in the document. ● BoW (Bag of words) ● TF-IDF (Term Frequency - Inverse Document Frequency) ● Embeddings (eg. Word2Vec, Glove) ● Topic models (eg. LDA) Document Term Matrix - Bag of Words

- 63. Text vectorization - TF-IDF from sklearn.feature_extraction.text import TfidfVectorizer vectorizer = TfidfVectorizer(max_df=0.5, max_features=1000, min_df=2, stop_words='english') tfidf_corpus = vectorizer.fit_transform(text_corpus) TF-IDF with scikit-learn [Figure: TF-IDF sparse matrix example, with documents (D1, D2, ...) as rows and tokens (face, person, guide, lock, cat, dog, sleep, micro, pool, gym; column indices 0 to 9) as columns]

- 64. Cosine Similarity The similarity metric between two vectors is the cosine of the angle between them from sklearn.metrics.pairwise import cosine_similarity cosine_similarity(tfidf_matrix[0:1], tfidf_matrix) Cosine Similarity with scikit-learn
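
A minimal pure-Python sketch of what the metric computes for one pair of vectors: the dot product divided by the product of the two L2 norms. The function name `cosine` and the zero-vector convention are choices made for this example.

```python
import math

def cosine(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0  # convention chosen here for all-zero vectors
    return dot / (norm_u * norm_v)

print(cosine([1, 0], [0, 1]))        # orthogonal vectors: 0.0
print(cosine([1, 2, 3], [2, 4, 6]))  # same direction: approximately 1.0
```

Only the direction matters: scaling a document vector (for example, a longer text with the same word proportions) leaves the similarity unchanged.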

- 65. Textual Similarities • Token similarity: Count the number of tokens that appear in both texts. • Levenshtein/Hamming/Jaccard Distance: Check similarity between two strings, eg. by counting the number of edit operations needed to transform one into the other (Levenshtein). • Word2Vec / Glove: Check cosine similarity between two word embedding vectors
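
A minimal pure-Python sketch of two of the measures above: Levenshtein distance via the standard dynamic-programming recurrence, and Jaccard similarity over token sets. The function names are chosen for this example.

```python
def levenshtein(a, b):
    """Minimum number of insertions, deletions and substitutions to turn a into b."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            current.append(min(
                previous[j] + 1,                      # deletion
                current[j - 1] + 1,                   # insertion
                previous[j - 1] + (char_a != char_b)  # substitution or match
            ))
        previous = current
    return previous[-1]

def jaccard_similarity(tokens_a, tokens_b):
    """Shared tokens divided by all distinct tokens in either text."""
    a, b = set(tokens_a), set(tokens_b)
    return len(a & b) / len(a | b) if a | b else 1.0

print(levenshtein("kitten", "sitting"))                                 # 3
print(jaccard_similarity("the cat sat".split(), "the cat ran".split()))  # 0.5
```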

- 66. Topic Modeling

- 67. ● Latent Dirichlet Allocation (LDA) -> Probabilistic ● Latent Semantic Indexing / Analysis (LSI / LSA) -> Matrix Factorization ● Non-Negative Matrix Factorization (NMF) -> Matrix Factorization Topic Modeling

- 69. Feature Selection Reduces model complexity and training time ● Filtering - Eg. Correlation or Mutual Information between each feature and the response variable ● Wrapper methods - Expensive, trying to optimize the best subset of features (eg. Stepwise Regression) ● Embedded methods - Feature selection as part of the model training process (eg. Feature Importances of Decision Trees or Tree Ensembles)
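
A minimal sketch of the filtering approach, scoring each feature by its absolute Pearson correlation with the target and keeping the top k. The feature names and values are hypothetical, and the standard library is used so the example stays self-contained.

```python
import math

def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    mean_x, mean_y = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)

# Hypothetical features and binary response (clicked or not).
features = {
    "ad_ctr": [0.1, 0.2, 0.8, 0.9, 0.7, 0.3],
    "random_id": [3, 1, 4, 1, 5, 9],
    "hour": [1, 2, 3, 4, 5, 6],
}
target = [0, 0, 1, 1, 1, 0]

# Score each feature independently of the others, then keep the best k.
scores = {name: abs(pearson(column, target)) for name, column in features.items()}
k = 2
selected = sorted(scores, key=scores.get, reverse=True)[:k]
print(selected)
```

Filtering is cheap because each feature is scored on its own, but for the same reason it cannot detect features that are only useful in combination; wrapper and embedded methods address that at a higher cost.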

- 70. “More data beats clever algorithms, but better data beats more data.” – Peter Norvig

- 71. Diverse set of Features and Models leads to different results! Outbrain Click Prediction - Leaderboard score of my approaches

- 72. Towards Automated Feature Engineering Deep Learning....

- 73. “...some machine learning projects succeed and some fail. Where is the difference? Easily the most important factor is the features used.” – Pedro Domingos

- 74. References Scikit-learn - Preprocessing data Spark ML - Feature extraction Discover Feature Engineering...

- 75. Thanks! Gabriel Moreira Lead Data Scientist Data Scientists wanted! bit.ly/ds4cit Slides: bit.ly/feature_eng Blog: medium.com/unstructured
