Feature selection concepts and methods

- 1. Feature Selection Concepts and Methods Electronic & Computer Department Isfahan University Of Technology Reza Ramezani 1

- 2. What are Features? Features are attributes that their value make an instance. With features we can identify instances. Features are determinant values that determine instance belong to which class. 2

- 3. Classifying Features Relevance: These are features that have an influence on the output and whose role can not be assumed by the rest. Irrelevance: Features that don't have any influence on the output, and whose values are generated at random for each example. Redundance: A redundancy exists whenever a feature can take the role of another. 3

- 4. What is Feature Selection? Feature selection, is a preprocessing step to machine learning that choose a subset of original features according to a certain evaluation criterion and is effective in: Removing/Reduce effect of irrelevant data removing redundant data reducing dimensionality (binary model) increasing learning accuracy and improving result comprehensibility. 4

- 5. Other Definitions Process which select a subset of features defined by one of three approaches: 1) the subset with a specified size that optimizes an evaluation measure 2) the subset of smaller size that satisfies a certain restriction on the evaluation measure 3) the subset with the best compromise among its size and the value of its evaluation measure (general case). 5

- 7. Classifying FSAs The FSAs can be classified according to the kind of output they yield: 1) Algorithms that giving a weighed linear order of features. (Continuous feature selection problem) 2) Algorithms that giving a subset of the original features. (Binary feature selection problem) Note that both types can be seen in an unified way by noting that in (2) the weighting is binary. 7

- 8. Notation 8

- 9. Relevance of a feature The purpose of a FSA is to identify relevant features according to a definition of relevance. Unfortunately the notion of relevance in machine learning has not yet been rigorously defined on a common agreement. Let us to define Relevance in many aspect: 9

- 10. Relevance with respect to an objective 10

- 11. Strong relevance with respect to S 11

- 12. Tid Refund Marital Status Taxable Income Cheat 1 Yes Single 125K No 2 No Married 100K No 3 No Single 70K No 4 Yes Married 120K No 5 No Divorced 95K Yes 6 No Married 60K No 7 Yes Divorced 220K No 8 No Single 85K Yes 9 No Married 75K No 10 No Single 90K Yes 10 MarSt Refund TaxInc YESNO NO NO Yes No Married Single, Divorced < 80K > 80K 12

- 13. Strong relevance with respect to p 13

- 14. Weak relevance with respect to S 14

- 15. Weak relevance with respect to p 15

- 16. Strongly Relevant Features The strongly relevant features are, in theory, important to maintain a structure in the domain And they should be conserved by any feature selection algorithm in order to avoid the addition of ambiguity to the sample. 16

- 17. Weakly Relevant FeaturesWeakly relevant features could be important or not, depending on: The other features already selected. The evaluation measure that has been chosen (accuracy, simplicity, consistency, etc.). 17

- 18. Relevance as a complexity measure Define r(S,c) Smallest number of relevant features to c The error in S is the least possible for the inducer. In other words, it refers to the smallest number of features required by a specific inducer to reach optimum performance in the task of modeling c using S. 18

- 20. Example X1…………...X11…………....X21……………..X30 100000000000000000000000000000 + 111111111100000000000000000000 + 000000000011111111110000000000 + 000000000000000000001111111111 + 000000000000000000000000000000 – X1 is strongly relevant, the rest are weakly relevant. r(S,c) = 3 Incremental usefulness: after choosing {X1, X2}, none of X3…X10 would be incrementally useful, but any of X11…X30 would. 20

- 21. General Schemes for Feature Selection Relationship between a FSA and the inducer Inducer: • Chosen process to evaluate the usefulness of the features • Learning Process Filter Scheme Wrapper Scheme Embedded Scheme 21

- 22. Filter Scheme Feature selection process takes place before the induction step This scheme is independent of the induction algorithm. • High Speed • Low Accuracy 22

- 23. Wrapper Scheme Use the learning algorithm as a subroutine to evaluate the features subsets. Inducer must be known. • Low Speed • High Accuracy 23

- 24. Embedded Scheme Similar to the wrapper approach Features are specifically selected for a certain inducer Inducer selects the features in the process of learning (Explicitly or Implicitly). 24

- 25. MarSt Refund TaxInc YESNO NO NO Yes No Married Single, Divorced < 80K > 80K 25 Embedded Scheme Example Refund Marital Status Taxable Income Age Cheat Yes Single 125K 18 No No Married 100K 30 No No Single 70K 28 No Yes Married 120K 19 No No Divorced 95K 18 Yes No Married 60K 20 No Yes Divorced 220K 25 No No Single 85K 30 Yes No Married 75K 20 No No Single 90K 18 Yes 10 Decision Tree Maker Algorithm, will Automatically Remove ‘Age’ Feature.

- 26. Characterization of FSAs Search Organization: General strategy with which the space of hypothesis is explored. Generation of Successors: Mechanism by which possible successor candidates of the current state are proposed. Evaluation Measure: Function by which successor candidates are evaluated. 26

- 27. Types of Search OrganizationWe consider three types of search: Exponential Sequential Random 27

- 30. Random Search Use Randomness to avoid the algorithm to stay on a local minimum. Allow temporarily moving to other states with worse solutions. These are anytime algorithms. Can give several optimal subsets as solution. 30

- 31. Types of Successors Generation Forward Backward Compound Weighting Random 31

- 34. Forward and Backward Method, Stopping Criterion 34

- 36. Weighting Successors Generation In weighting operators (continuous features). All of the features are present in the solution to a certain degree. A successor state is a state with a different weighting. This is typically done by iteratively sampling the available set of instances. 36

- 37. Random Successors Generation Includes those operators that can potentially generate any other state in a single step. Restricted to some criterion of advance: • In the number of features • In improving the measure J at each step. 37

- 51. 51

- 52. General Algorithm for Feature Selection All FSA can be represented in a space of characteristics according to the criteria of: search organization (Org) Generation of successor states (GS) Evaluation measures (J) This space <•Org, GS, J> encompasses the whole spectrum of possibilities for a FSA. hybrid FSA when FSA requires more than a point in the same coordinate to be characterized. 52

- 53. 53

- 54. FCBF Feature Correlation Based Filter (Filter Mode) <Sequential, Compound, Infor mation> 54

- 55. Previous Works and Their Defects1) Huge Time Complexity Binary Mode: Subset search algorithms search through candidate feature subsets guided by a certain search strategy and a evaluation measure. Different search strategies, namely, exhaustive, heuristic, and random search, are combined with this evaluation measure to form different algorithms. 55

- 56. Previous Works and Their Defects The time complexity is exponential in terms of data dimensionality for exhaustive search and quadratic for heuristic search. The complexity can be linear to the number of iterations in a random search, but experiments show that in order to find best feature subset, the number of iterations required is mostly at least quadratic to the number of features. 56

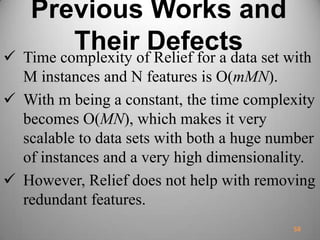

- 57. Previous Works and Their Defects2) Inability to recognize redundant features. Relief: The key idea of Relief is to estimate the relevance of features according to how well their values distinguish between the instances of the same and different classes that are near each other. Relief randomly samples a number (m) of instances from the training set and updates the relevance estimation of each feature based on the difference between the selected instance and the two nearest instances of the same and opposite classes. 57

- 58. Previous Works and Their Defects Time complexity of Relief for a data set with M instances and N features is O(mMN). With m being a constant, the time complexity becomes O(MN), which makes it very scalable to data sets with both a huge number of instances and a very high dimensionality. However, Relief does not help with removing redundant features. 58

- 59. Good Feature A feature is good if it is relevant to the class concept but is not redundant to any of the other relevant features. Correlation as Goodness Measure A feature is good if it is highly correlated to the class but not highly correlated to any of59

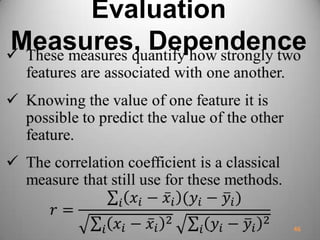

- 60. Approaches to Measure The Correlation Classical Linear Correlation (Linear Correlation Coefficient) Information theory (Entropy or Uncertainty) 60

- 62. Advantages It helps to remove features with near zero linear correlation to the class. It helps to reduce redundancy among selected features. Disadvantages It may not be able to capture correlations that are not linear in nature. Calculation requires all features contain numerical values. 62

- 63. Entropy 63

- 64. Entropy, Information Gain The amount by which the entropy of X decreases reflects additional information about X provided by Y: IG(X|Y) = H(X) - H(X|Y) Feature Y is regarded more correlated to feature X than to feature Z, if IG(X|Y) > IG(Z|Y) Information gain is symmetrical for two random variables X and Y: IG(X|Y) = IG(Y|X) 64

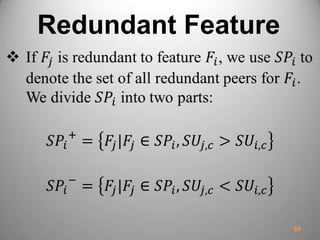

- 67. Algorithm Steps Aspects of developing a procedure to select good features for classification: 1) How to decide whether a feature is relevant to the class or not (C-correlation). 2) How to decide whether such a relevant feature is redundant or not when considering it with other relevant features (F-correlation). Select features with SU greater than a threshold. 67

- 70. Predominant Feature A feature is predominant to the class, iff: Its correlation to the class is predominant Or can become predominant after removing its redundant peers. Feature selection for classification is a process that identifies all predominant features to the class concept and removes the rest. 70

- 71. Heuristic 71

- 73. 73

- 74. 74

- 75. GA-SVM Generic Algorithm Support Vector Machine (Wrapper Mode) <Sequential, Compound, Clas sifier> 75

- 76. Support Vector Machine (SVM) SVM, one of the best techniques for pattern classification. Widely use in many application areas. SVM classifies data by determining a set of support vectors and their distance to hyperplane. SVM provides a generic mechanism that fits the hyperplane surface to the training data. 76

- 77. SVM Main Idea With this hypothesis that classes are linearly separable, make hyperplane with maximum margin to separate classes. When classes are not linearly separable, map them to high dimensional space to linearly separate them. 77Separating Surface: A+ A-

- 78. Support Vector 78 Class +1 Class -1 X2 X1 SV SV SV

- 79. Kernel 79 1 2 4 5 6 class 2 class 1class 1 1 Dimension 1 2 4 5 6 class 2 class 1class 1 2 Dimension

- 80. Kernel Data in higher dimensional! The user may select a kernel function for the SVM during the training process. The kernel parameters setting for SVM in a training process impacts on the classification accuracy. The parameters that should be optimized include penalty parameter C and the kernel function parameters. 80

- 81. Linear SVM 81

- 82. Linear SVM 82

- 83. Linear SVM 83

- 86. NonLinear SVM 86

- 89. Genetic Algorithm (GA) Genetic algorithms (GA), as a optimization search methodology is a promising alternative to conventional heuristic methods. GA work with a set of candidate solutions called a population. Based on the Darwinian principle of ‘survival of the fittest’, the GA obtains the optimal solution after a series of iterative computations. GA generates successive populations of alternate solutions that are represented by a chromosome. A fitness function assesses the quality of a solution in the evaluation step. 89

- 90. 90

- 92. Evaluation Measure Three criteria used to design a fitness function: Classification accuracy The number of selected features The feature cost Thus, for the individual (chromosome) with: High classification accuracy Small number of features Low total feature cost Produce a high fitness value. 92

- 94. 94