Final training course

- 1. Introduction to Deep Neural Networks (DNN) with Python. Asst. Prof. Dr. Noor Dhia Al-Shakarchy, May 2021, Lecture 1

- 2. Outline: Fundamentals of deep learning. What is deep learning? Before we begin: the mathematical building blocks of neural networks. Getting started with neural networks. Fundamentals of machine learning.

- 3. What is deep learning? Deep learning, also called deep structured learning or hierarchical learning, is part of the family of machine learning methods, which are themselves a subset of the broader field of artificial intelligence. Deep learning is a class of machine learning algorithms that use several layers of nonlinear processing units for feature extraction and transformation; each successive layer uses the output of the previous layer as its input. Figure 1: Artificial intelligence, machine learning, and deep learning.

- 4. What is deep learning? The main failures of traditional algorithms that deep learning is designed to overcome are: 1- the curse of dimensionality; 2- local constancy and smoothness regularization; 3- manifold learning. Deep learning algorithms and networks are based on learning multiple levels of features, or representations, of the data: higher-level features are derived from lower-level features to form a hierarchical representation, and training uses some form of gradient descent. Many types of deep neural networks (CNN, DBN, RNN) have been applied to fields such as computer vision, speech recognition, natural language processing, audio recognition, social network filtering, machine translation, and bioinformatics, where they have produced results comparable to, and in some cases better than, those of human experts.

- 5. Python Deep Learning Environment: The first step in performing machine learning and deep learning is setting up a deep learning environment on a computer powerful enough to handle the necessary computation. Building a deep learning system can be intimidating and time-consuming. An example of a suitable configuration: Graphics Processing Unit (GPU): NVIDIA GeForce GTX 1050 Ti 4 GB; CPU: Intel(R) Core(TM) i7-7700HQ CPU @ 2.80 GHz; RAM: 16 GB.

- 6. Python Deep Learning Environment: To build deep learning algorithms in a Python environment, install the following software: Python (2.7+; recent TensorFlow releases require Python 3), SciPy with NumPy, Matplotlib, Theano, TensorFlow, and Keras. To make sure each package is installed properly, open the Python command line, check the Python version, then import each required library and print its version. Example:

```python
import numpy
print(numpy.__version__)
```
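A minimal sketch of the same check as a reusable script. The helper name `library_versions` is hypothetical; the sketch relies only on the standard `importlib` module and on the `__version__` attribute these packages conventionally expose, and it reports a missing package instead of stopping at the first `ImportError`.

```python
import importlib


def library_versions(names):
    """Return {module name: version string, or None if the module is missing}."""
    versions = {}
    for name in names:
        try:
            module = importlib.import_module(name)
        except ImportError:
            versions[name] = None  # not installed
            continue
        except Exception as exc:  # installed but broken (e.g. a bad CUDA setup)
            versions[name] = f"import failed: {exc}"
            continue
        # most scientific packages expose __version__; fall back to "unknown"
        versions[name] = getattr(module, "__version__", "unknown")
    return versions


if __name__ == "__main__":
    for name, version in library_versions(
            ["numpy", "scipy", "matplotlib", "tensorflow", "keras"]).items():
        print(f"{name}: {version if version else 'not installed'}")
```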

- 7. Python Deep Learning Environment: Installation of TensorFlow on Windows 10. A deep neural network can also run on the CPU alone, with more limitations and much longer training times. In this case TensorFlow is installed directly with: >> pip install --upgrade tensorflow

- 8. Python Deep Learning Environment: Installation of TensorFlow with GPU on Windows 10. Pre-requisites (the software you will need to install): Microsoft Visual Studio; CUDA Toolkit 9.0 for Windows 10; cuDNN 7 for CUDA 9.0; TensorFlow GPU 1.5.0. Steps: 1) install Microsoft Visual Studio; 2) install CUDA Toolkit 9.0; 3) copy cudnn64_7.dll from the cuDNN library; 4) add the CUDA folder to the PATH; 5) install TensorFlow GPU 1.5; 6) verify the TensorFlow installation.

- 9. Python Deep Learning Environment: Check your Windows GPU (Device Manager >> Display adapters). NVIDIA publishes a list of CUDA-enabled GPUs on its site (https://developer.nvidia.com/cuda-gpus).

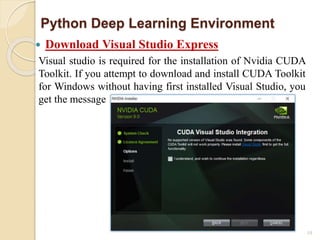

- 10. Python Deep Learning Environment: Download Visual Studio Express. Visual Studio is required for the installation of the NVIDIA CUDA Toolkit. If you try to download and install the CUDA Toolkit for Windows without first installing Visual Studio, you get a message from the installer.

- 11. Python Deep Learning Environment: Installing the CUDA drivers. Download CUDA Toolkit 9.0 from https://developer.nvidia.com/cuda-downloads and install it (CUDA Toolkit 9.0 Downloads). Note: be sure to download the right version of the driver.

- 12. Python Deep Learning Environment: Download and install the cuDNN libraries. The cuDNN libraries are an add-on to CUDA for deep neural networks, used by TensorFlow to accelerate deep learning on NVIDIA GPUs. TensorFlow requires the file cudnn64_7.dll to work; this file is available in a zip file from the cuDNN archive, which you can download from https://developer.nvidia.com/cudnn.

- 13. Python Deep Learning Environment: After you download the library, open the zip file; the file cudnn64_7.dll needs to be added to the bin folder of the CUDA installation. On my PC, CUDA was installed in the following location: C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v9.0\bin. Extract the dll file to this bin folder.

- 14. Python Deep Learning Environment: Add the CUDA folder to the PATH. Edit the PATH environment variable so that it includes the CUDA location.

- 15. Python Deep Learning Environment: Install TensorFlow GPU 1.5. Open a command prompt and type the following command: >> pip install tensorflow-gpu==1.5.0

- 16. Python Deep Learning Environment: Verify TensorFlow Installation. You can verify the TensorFlow installation by starting Python from the Windows command prompt and running the following commands:

```python
>>> import tensorflow as tf
>>> print(tf.__version__)
```

Installing and verifying Keras: install the library with >> pip install keras, then verify it:

```python
>>> import keras
Using TensorFlow backend.
>>> print(keras.__version__)
2.2.4
```

- 17. Before we begin: the mathematical building blocks of neural networks. Learning means finding a combination of model parameters that minimizes a loss function for a given set of training data samples and their corresponding targets. Learning happens by drawing random batches of data samples and their targets and computing the gradient of the loss on the batch with respect to the network parameters. The network parameters are then moved a little (the size of the move is set by the learning rate) in the direction opposite to the gradient. The entire learning process is possible because neural networks are chains of differentiable tensor operations, so the chain rule of differentiation can be applied to find the gradient function that maps the current parameters and the current batch of data to a gradient value.
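The learning loop described above can be sketched in a few lines of NumPy. This is an illustration under simple assumptions, not how Keras implements training: a one-feature linear model `w*x + b`, a mean-squared-error loss, noiseless toy data from `y = 2x + 1`, and the hypothetical helper name `train_linear_model`. Each step draws a random batch, computes the gradient of the batch loss with respect to the parameters, and moves the parameters against it by `learning_rate` times the gradient.

```python
import numpy as np


def train_linear_model(x, y, learning_rate=0.1, batch_size=16, steps=500, seed=0):
    """Fit y ~ w*x + b by minibatch gradient descent on the mean squared error."""
    rng = np.random.default_rng(seed)
    w, b = 0.0, 0.0  # model parameters, arbitrary starting point
    for _ in range(steps):
        # draw a random batch of samples and their targets
        idx = rng.choice(len(x), size=batch_size, replace=False)
        xb, yb = x[idx], y[idx]
        error = (w * xb + b) - yb  # prediction minus target
        # gradient of the batch loss mean(error**2) with respect to w and b
        grad_w = 2.0 * np.mean(error * xb)
        grad_b = 2.0 * np.mean(error)
        # move the parameters a little in the opposite direction of the gradient
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# toy data generated from y = 2x + 1 (no noise)
x = np.linspace(-1.0, 1.0, 200)
y = 2.0 * x + 1.0
w, b = train_linear_model(x, y)
print(f"w = {w:.3f}, b = {b:.3f}")
```

With these settings the printed values should be close to `w = 2` and `b = 1`; a learning rate that is too large would make the updates overshoot and diverge instead.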

- 18. Before we begin: the mathematical building blocks of neural networks. Two key concepts are the loss and the optimizer; these are the two things you need to define before you start feeding data into a network. The loss is the quantity you will attempt to minimize during training, so it should represent a measure of success for the task you are trying to solve. The optimizer specifies exactly how the gradient of the loss will be used to update the parameters: for instance, it could be the RMSProp optimizer, SGD with momentum, and so on.
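To make the difference between the loss and the optimizer concrete, the sketch below minimizes the same toy loss `(w - 3)**2` with two optimizers: plain gradient descent, and SGD with momentum using the update rule `velocity = momentum * velocity - learning_rate * g`, `w = w + velocity` that the Keras documentation gives for its `SGD` optimizer with `momentum` set. The function names and the toy loss are illustrative assumptions.

```python
def gradient(w):
    """Derivative of the toy loss (w - 3)**2, whose minimum is at w = 3."""
    return 2.0 * (w - 3.0)


def sgd(w, learning_rate=0.1, steps=300):
    for _ in range(steps):
        w -= learning_rate * gradient(w)  # plain gradient step
    return w


def sgd_momentum(w, learning_rate=0.1, momentum=0.9, steps=300):
    velocity = 0.0
    for _ in range(steps):
        # keep a decaying running sum of past steps, then move along it
        velocity = momentum * velocity - learning_rate * gradient(w)
        w += velocity
    return w


print(sgd(0.0), sgd_momentum(0.0))
```

Both runs end near `w = 3`; the loss is identical, and only the path taken through parameter space differs, which is exactly what the choice of optimizer controls.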

- 19. Create a Keras model with TensorFlow. Keras and TensorFlow 2.0 provide three methods to implement your own neural network architectures: the Sequential API, the Functional API, and model subclassing.

- 20. Create a Keras model with TensorFlow. A sequential model, as the name suggests, lets you create a model layer by layer, step by step. The Keras Sequential API is by far the easiest way to get up and running with Keras, but it is also the most limited. A sequential model is not appropriate when the model shares layers, has branches (at least not easily), has multiple inputs, has multiple outputs, or has a non-linear topology.

- 21. Fundamentals of DNN: Define the problem at hand and the data on which you will train. Collect this data, or annotate it with labels if needed. Divide the dataset into a training subset (85%), which is further split into the actual training set (80%) and a validation set (20%), and a test subset (15%).
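One way to produce the 85% / 15% and then 80% / 20% split is to shuffle the row indices once and cut them into three groups. This is a sketch: the function name `split_dataset` is hypothetical, and the 768 rows assumed in the example are the size of the Pima Indians diabetes dataset used in Lecture 2.

```python
import numpy as np


def split_dataset(n_samples, test_fraction=0.15, val_fraction=0.20, seed=0):
    """Shuffle row indices and split them into train / validation / test sets.

    test_fraction is taken from the whole dataset; val_fraction is taken from
    what remains (the training subset), matching the 85%/15% then 80%/20% scheme.
    """
    rng = np.random.default_rng(seed)
    indices = rng.permutation(n_samples)
    n_test = int(round(test_fraction * n_samples))
    test_idx, train_val_idx = indices[:n_test], indices[n_test:]
    n_val = int(round(val_fraction * len(train_val_idx)))
    val_idx, train_idx = train_val_idx[:n_val], train_val_idx[n_val:]
    return train_idx, val_idx, test_idx


train_idx, val_idx, test_idx = split_dataset(768)
print(len(train_idx), len(val_idx), len(test_idx))  # 522 131 115
```

For a simple hold-out split, Keras can also carve out the validation part itself through the `validation_split` argument of `fit()`; it takes the last fraction of the rows (before shuffling), so shuffle the data first if it is ordered.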

- 22. Fundamentals of DNN: Choose how you will measure success on your problem. Which metrics will you monitor on your validation data? Determine your evaluation protocol: hold-out validation or K-fold validation? Which portion of the data should you use for validation?
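K-fold validation splits the data into K folds and trains K models, each validated on a different fold and trained on the remaining K-1 folds; the reported score is the average of the K validation scores. The sketch below only generates the index sets. `k_fold_indices` is a hypothetical helper, and in practice the rows should be shuffled first (scikit-learn's `KFold` offers the same idea with a `shuffle` option).

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, validation_indices) for each of k contiguous folds."""
    # spread the remainder over the first folds so fold sizes differ by at most 1
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        yield train, val
        start += size


for train, val in k_fold_indices(10, 3):
    print(len(train), val)
```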

- 23. Fundamentals of DNN: Develop a first model that does better than a basic baseline: a model with statistical power. Develop a model that overfits. Regularize your model and tune its hyperparameters based on performance on the validation data.
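A common basic baseline for classification is to always predict the most frequent class; a model with statistical power must beat its accuracy. A sketch with a hypothetical helper: for the Pima data of Lecture 2, where 500 of the 768 rows belong to class 0, this baseline already reaches about 65% accuracy.

```python
from collections import Counter


def majority_baseline_accuracy(labels):
    """Accuracy of always predicting the most frequent class in `labels`."""
    counts = Counter(labels)
    most_common_count = counts.most_common(1)[0][1]
    return most_common_count / len(labels)


print(majority_baseline_accuracy([0, 0, 1, 0, 1, 0, 0, 0]))  # 0.75
```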

- 24. THANK YOU End of lecture 1

- 25. Introduction to Deep Neural Networks (DNN) with Python, Lecture 2

- 26. Outline / First Project in DNN. The steps for the first project are as follows: 1. Load data. 2. Define the Keras model. 3. Compile the Keras model. 4. Fit the Keras model. 5. Evaluate the Keras model. 6. Make predictions.

- 27. First Project in DNN: 1. Load Data. For this first project, download the Pima Indians onset of diabetes dataset and place it in your local working directory. It describes patient medical record data for Pima Indians and whether they had an onset of diabetes within five years. It is a CSV file containing 8 attributes plus the class. The problem is binary classification (onset of diabetes as 1, or not as 0).

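- 28. First Project in DNN: The dataset is available from here: Dataset CSV File (pima-indians-diabetes.csv), Dataset Details. The loading code uses the file name pima-indians-diabetes.data.csv, so save the file under that name or adjust the path. Inside the file, you should see rows of data like the following: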

- 29. First Project in DNN: We can now load the file as a matrix of numbers using the NumPy function loadtxt(). There are eight input variables and one output variable (the last column). We will learn a model that maps rows of input variables (X) to an output variable (y), which we often summarize as y = f(X).

- 30. First Project in DNN: Once the CSV file is loaded, split the columns of data into input and output variables. The data will be stored in a 2D array [rows, columns]. Split the array into two arrays: select the first 8 columns, from index 0 to index 7, via the slice 0:8, and select the output column (the 9th variable) via index 8.

- 31. Code:

```python
# first neural network with keras tutorial
from numpy import loadtxt
from keras.models import Sequential
from keras.layers import Dense

# load the dataset
dataset = loadtxt('pima-indians-diabetes.data.csv', delimiter=',')
# split into input (X) and output (y) variables
X = dataset[:, 0:8]  # input features (columns 0 to 7)
y = dataset[:, 8]    # class label (column 8)
```
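To check what the two slices return without downloading the file, the sketch below feeds `loadtxt` two made-up rows in the same 9-column layout through `io.StringIO`. The numbers are invented for illustration and are not records from the real dataset.

```python
from io import StringIO

import numpy as np

# Two made-up rows in the CSV layout: 8 input values, then the class label.
# These numbers are illustrative only, not taken from the real dataset.
csv_text = StringIO(
    "1,100,70,20,80,30.0,0.5,40,0\n"
    "2,150,80,30,120,35.0,0.8,50,1\n"
)
dataset = np.loadtxt(csv_text, delimiter=',')

X = dataset[:, 0:8]  # columns 0..7 -> inputs
y = dataset[:, 8]    # column 8 -> output
print(dataset.shape, X.shape, y.shape)  # (2, 9) (2, 8) (2,)
```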

- 32. 2. Define Keras Model: Models in Keras are defined as a sequence of layers. We create a Sequential model and add layers one at a time until we are happy with our network architecture.

- 33. 2. Define Keras Model:

```python
# define the keras model
model = Sequential()
model.add(Dense(8, input_dim=8, activation='relu'))  # hidden layer 1: 8 nodes, 8 inputs
model.add(Dense(6, activation='relu'))               # hidden layer 2: 6 nodes
model.add(Dense(1, activation='sigmoid'))            # output node: probability of class 1
model.summary()
```

The first argument of each Dense layer is its number of nodes. model.summary() prints a table listing each layer, its output shape, and its number of parameters (weights and biases).
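The parameter counts that `model.summary()` reports for this 8-8-6-1 network can be checked by hand: a `Dense` layer with `n_in` inputs and `n_out` nodes has `n_in * n_out` weights plus `n_out` biases. The NumPy forward pass below is a sketch of what the three layers compute, using random untrained weights; the helper names are hypothetical and the weight initialization is not the one Keras uses.

```python
import numpy as np


def dense_param_count(n_inputs, n_units):
    """A Dense layer has one weight per (input, unit) pair plus one bias per unit."""
    return n_inputs * n_units + n_units


layer_sizes = [8, 8, 6, 1]  # input width, then the three Dense layers
counts = [dense_param_count(a, b) for a, b in zip(layer_sizes[:-1], layer_sizes[1:])]
print(counts, sum(counts))  # [72, 54, 7] 133

# Forward pass of the same 8-8-6-1 architecture with random (untrained) weights
rng = np.random.default_rng(0)
weights = [rng.normal(size=(a, b)) for a, b in zip(layer_sizes[:-1], layer_sizes[1:])]
biases = [np.zeros(b) for b in layer_sizes[1:]]


def forward(x):
    h = np.maximum(0.0, x @ weights[0] + biases[0])  # Dense(8, relu)
    h = np.maximum(0.0, h @ weights[1] + biases[1])  # Dense(6, relu)
    return 1.0 / (1.0 + np.exp(-(h @ weights[2] + biases[2])))  # Dense(1, sigmoid)


out = forward(rng.normal(size=(4, 8)))  # a batch of 4 rows with 8 features
print(out.shape)
```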

- 34. 3. Compile Keras Model: Now that the model is defined, we can compile it. Training a network means finding the best set of weights to map inputs to outputs in our dataset. We must specify the loss function used to evaluate a set of weights, the optimizer used to search through different weights for the network, and any optional metrics we would like to collect and report during training.

- 35. 3. Compile Keras Model: In a binary classification problem, we use cross-entropy as the loss argument, which is defined in Keras as "binary_crossentropy". We define the optimizer as "adam", an efficient variant of stochastic gradient descent. It is popular because it adapts the learning rate of each parameter automatically and gives good results on a wide range of problems. Finally, because this is a classification problem, we collect and report the classification accuracy, defined via the metrics argument.
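Binary cross-entropy compares each predicted probability `p` with its 0/1 target `t` as `-(t*log(p) + (1-t)*log(1-p))` and averages over the samples. A NumPy sketch (the function name and the clipping constant `eps` are assumptions for illustration) shows why it suits a sigmoid output: confident wrong predictions are penalized far more than confident correct ones.

```python
import numpy as np


def binary_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean binary cross-entropy between 0/1 targets and predicted probabilities."""
    y_true = np.asarray(y_true, dtype=float)
    # clip the probabilities so that log(0) never occurs
    y_pred = np.clip(np.asarray(y_pred, dtype=float), eps, 1.0 - eps)
    return float(-np.mean(y_true * np.log(y_pred) + (1.0 - y_true) * np.log(1.0 - y_pred)))


print(binary_crossentropy([1, 0], [0.9, 0.1]))  # confident and correct: about 0.105
print(binary_crossentropy([1, 0], [0.1, 0.9]))  # confident and wrong: about 2.303
```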

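- 36. 3. Compile Keras Model:

```python
from keras.optimizers import SGD

# compile the keras model
model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])

# Or: SGD with Nesterov momentum and an MSE loss
# (Keras 2.2.4 syntax; newer versions expect learning_rate= instead of lr=
#  and replace decay= with a learning-rate schedule)
sgd = SGD(lr=0.001, decay=1e-6, momentum=0.9, nesterov=True)
model.compile(loss='mse', optimizer=sgd, metrics=['accuracy'])
print("construction DNN done")
```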

- 37. 4. Fit Keras Model: The model is now ready for efficient computation. It is time to execute it on some data by training, or fitting, it on the loaded data with the fit() function. Training occurs over epochs, and each epoch is split into batches. Epoch: one pass through all of the rows in the training dataset. Batch: one or more samples considered by the model within an epoch before the weights are updated.

- 38. 4. Fit Keras Model: The training process runs for a fixed number of iterations through the dataset, called epochs, which we must specify using the epochs argument. We must also set the number of dataset rows that are considered before the model weights are updated within each epoch, called the batch size and set using the batch_size argument. For this problem, the number of epochs is 150 and the batch size is 10.
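The relationship between rows, batch size and weight updates is simple arithmetic. Assuming the 768 rows of the Pima dataset, `batch_size=10` gives 76 full batches plus a final batch of 8 rows, i.e. 77 weight updates per epoch and 11,550 over 150 epochs. The hypothetical helper below lists the batch boundaries; note that Keras also shuffles the rows before each epoch by default (`shuffle=True`).

```python
import math


def batch_slices(n_samples, batch_size):
    """Return the (start, stop) row ranges of the batches in one epoch."""
    return [(start, min(start + batch_size, n_samples))
            for start in range(0, n_samples, batch_size)]


n_rows, batch_size, epochs = 768, 10, 150
batches = batch_slices(n_rows, batch_size)
print(len(batches), batches[-1])                # 77 (760, 768)
print(epochs * math.ceil(n_rows / batch_size))  # 11550 weight updates in total
```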

- 39. 4. Fit Keras Model: We want to train the model enough that it learns a good (or good enough) mapping of rows of input data to the output classification. The model will always have some error, but the amount of error levels out after some point for a given model configuration. This is called model convergence.
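Convergence is usually judged from the loss curve: once the loss stops improving by more than a small amount for several epochs, further training brings little. The sketch below is a hypothetical check in that spirit; Keras offers a ready-made version of the idea as the `keras.callbacks.EarlyStopping` callback (with `monitor`, `min_delta` and `patience` arguments), whose exact logic may differ.

```python
def has_converged(losses, patience=5, min_delta=1e-3):
    """True if the best loss has not improved by more than min_delta
    during the last `patience` epochs."""
    if len(losses) <= patience:
        return False  # not enough history yet
    best_before = min(losses[:-patience])
    best_recent = min(losses[-patience:])
    return best_before - best_recent < min_delta


falling = [1.0, 0.6, 0.4, 0.3, 0.25, 0.22, 0.20]
flat = [1.0, 0.5, 0.30, 0.2999, 0.2998, 0.3001, 0.2999, 0.3000]
print(has_converged(falling), has_converged(flat))  # False True
```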

- 40. 4. Fit Keras Model:

```python
# fit the keras model on the dataset
model.fit(X, y, epochs=150, batch_size=10)
```

- 41. 5. Evaluate Keras Model: You can evaluate your model on your training dataset using the evaluate() function, passing it the same input and output used to train the model. evaluate() returns the loss followed by the metrics given to compile():

```python
# evaluate the keras model
_, accuracy = model.evaluate(X, y)
print('Accuracy: %.2f' % (accuracy * 100))
```
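For a sigmoid output, the accuracy reported by `evaluate()` amounts to thresholding each probability at 0.5 and counting matches with the targets. A NumPy sketch with made-up predictions and a hypothetical helper name. Accuracy measured on the training data is optimistic; passing a held-out test set to `evaluate()` gives a fairer estimate.

```python
import numpy as np


def binary_accuracy(y_true, probabilities, threshold=0.5):
    """Fraction of samples whose thresholded prediction equals the 0/1 target."""
    predicted = (np.asarray(probabilities).ravel() > threshold).astype(int)
    return float(np.mean(predicted == np.asarray(y_true).ravel()))


y_true = [1, 0, 1, 1, 0]
probs = [[0.8], [0.3], [0.4], [0.9], [0.6]]  # shape (5, 1), like model.predict output
print('Accuracy: %.2f' % (binary_accuracy(y_true, probs) * 100))  # Accuracy: 60.00
```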

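- 42. 6. Make Predictions: How can we use the model to make predictions on new data? Making predictions on a new dataset we have not seen before is as easy as calling the predict() function on the model. With a sigmoid output, predict() returns probabilities. Older Keras versions also provide a predict_classes() function on Sequential models to predict crisp classes directly; it was removed in TensorFlow 2.6, where thresholding the probabilities at 0.5 gives the same result.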

- 43. 6. Make Predictions / code:

```python
# make probability predictions with the model (sigmoid output in [0, 1])
probabilities = model.predict(X)
for i in range(5):
    print('%s => %.3f (expected %d)' % (X[i].tolist(), probabilities[i][0], y[i]))

# make class predictions by thresholding the probabilities at 0.5
# (older Keras also offered model.predict_classes(X) for this)
predictions = (model.predict(X) > 0.5).astype('int32')
for i in range(5):
    print('%s => %d (expected %d)' % (X[i].tolist(), predictions[i][0], y[i]))
```

- 44. THANK YOU. End of Lecture 2

- 45. Introduction to Deep Neural Networks (DNN) with Python, Lecture 3

- 46. Outline: plotting the training process; regularization; batch normalization; saving and loading the weights and the architecture of a model; visualizing a deep learning neural network model in Keras.

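- 47. Plotting the training process: Matplotlib is a cross-platform data visualization and graphical plotting library for Python and its numerical extension NumPy. Code:

```python
# train again, keeping the History object that fit() returns
history = model.fit(X, y, epochs=10, batch_size=10, verbose=2)
print(history.history.keys())
# the accuracy key is 'acc' in older Keras (e.g. 2.2.4) and 'accuracy' in newer versions
acc_key = 'acc' if 'acc' in history.history else 'accuracy'
print(history.history[acc_key])
```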

- 48. Plotting the training process. Code:

```python
# summarize history for accuracy
import matplotlib.pyplot as plt

# 'acc' in older Keras, 'accuracy' in newer versions
acc_key = 'acc' if 'acc' in history.history else 'accuracy'
plt.plot(history.history[acc_key])
plt.title('model accuracy')
plt.ylabel('accuracy')
plt.xlabel('epoch')
plt.legend(['train'], loc='upper left')
plt.show()
```

- 49. Plotting the training process. Code:

```python
# summarize history for loss
import matplotlib.pyplot as plt

plt.plot(history.history['loss'])
plt.title('model loss')
plt.ylabel('loss')
plt.xlabel('epoch')
plt.legend(['train'], loc='upper left')
plt.show()
```

- 50. Normalization layers: Batch normalization layers are added to accelerate the training process and to coordinate the updates of multiple layers in the model; each batch normalization layer normalizes its inputs using the mean and variance of the current mini-batch. Figure: Batch Normalization sketch for a simple network.
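During training, batch normalization standardizes each feature with the mean and variance of the current mini-batch, then applies a learned scale `gamma` and shift `beta`. A NumPy sketch of that training-time computation on random toy activations; the function name is hypothetical, `eps=1e-3` matches the default `epsilon` of the Keras layer, and at inference time Keras uses moving averages of the statistics instead of the batch values.

```python
import numpy as np


def batch_norm_train(x, gamma, beta, eps=1e-3):
    """Normalize each feature over the batch axis, then scale and shift it."""
    mean = x.mean(axis=0)
    var = x.var(axis=0)
    x_hat = (x - mean) / np.sqrt(var + eps)
    return gamma * x_hat + beta


rng = np.random.default_rng(0)
activations = rng.normal(loc=5.0, scale=3.0, size=(32, 4))  # a batch of 32 rows, 4 units
out = batch_norm_train(activations, gamma=np.ones(4), beta=np.zeros(4))
print(out.mean(axis=0).round(3), out.std(axis=0).round(3))
```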

- 51. Normalization layer code:

```python
from keras.layers import BatchNormalization
# ….
model.add(BatchNormalization())
```

- 52. Regularization: One of the most important problems that occurs while training a model is overfitting, which happens when the model fits the training set too well. This makes it difficult for the model to generalize to unseen examples, so the model's accuracy is higher on the training set than on the validation/test set. This problem can be reduced by adding regularization to the model.

- 53. Regularization: The regularization arguments commonly used with dense and convolutional layers are: kernel_regularizer, a regularizer function applied to the weight matrix; bias_regularizer, a regularizer function applied to the bias vector; and activity_regularizer, a regularizer function applied to the output of the layer (its activation). The most commonly used regularization techniques are dropout and L1/L2 regularization.

- 54. Regularization: dropout layer. The dropout layer reduces the correlation between neurons by randomly setting a fraction of its inputs to zero during training. A dropout layer is placed after some layers to avoid overfitting and to effectively control noise during the training process. Figure: The dropout process.
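The Keras `Dropout` layer zeroes each input with probability `rate` during training and scales the remaining inputs by `1 / (1 - rate)` so that the expected sum is unchanged; at inference time it passes inputs through untouched. A NumPy sketch of that behaviour (the function name and the toy input are assumptions):

```python
import numpy as np


def dropout(x, rate, training, rng):
    """Inverted dropout: zero a fraction `rate` of entries during training and
    scale the survivors by 1 / (1 - rate); do nothing at inference time."""
    if not training or rate == 0.0:
        return x
    keep = rng.random(x.shape) >= rate  # True with probability 1 - rate
    return np.where(keep, x / (1.0 - rate), 0.0)


rng = np.random.default_rng(0)
x = np.ones((1000, 100))
y = dropout(x, rate=0.25, training=True, rng=rng)
print((y == 0).mean(), y.mean())  # roughly 0.25 dropped, mean stays close to 1.0
```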

- 55. Dropout layer code:

```python
from keras.layers import Dropout
# ….
model.add(Dropout(0.25))  # randomly drop 25% of the previous layer's outputs during training
```

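- 56. Regularization: L1/L2 regularization. This technique reduces overfitting of a deep neural network model by penalizing large weights: a term proportional to the absolute values (L1) or the squares (L2) of the weights is added to the loss. Using both penalties together is known as Elastic Net regularization.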
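The penalty these regularizers add to the loss is easy to compute by hand: the Keras documentation defines L1 as `l1 * sum(abs(w))` and L2 as `l2 * sum(square(w))`, and `l1_l2` adds both. A NumPy sketch with a hypothetical helper and made-up weights:

```python
import numpy as np


def weight_penalty(weights, l1=0.0, l2=0.0):
    """Penalty added to the loss: l1 * sum(|w|) + l2 * sum(w**2)."""
    w = np.asarray(weights, dtype=float)
    return float(l1 * np.sum(np.abs(w)) + l2 * np.sum(np.square(w)))


w = [1.0, -2.0, 0.5]
print(weight_penalty(w, l1=0.01))           # L1 only
print(weight_penalty(w, l2=0.01))           # L2 only
print(weight_penalty(w, l1=0.01, l2=0.01))  # both (Elastic Net style)
```

The total loss minimized during training is the data loss (for example binary cross-entropy) plus these penalties, so larger weights cost more.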

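- 57. L1/L2 regularization code (in the Dense layer):

```python
from keras.layers import Dense
from keras import regularizers
from keras.regularizers import l2
from keras.constraints import unit_norm

# the three built-in weight penalties: L1, L2, and both combined
l1_penalty = regularizers.l1(0.01)
l2_penalty = regularizers.l2(0.01)
l1_l2_penalty = regularizers.l1_l2(l1=0.01, l2=0.01)

# constrain the kernel to unit norm and add an L2 penalty
# on both the kernel (weights) and the bias of the Dense layer
model.add(Dense(15, activation='relu', name='fc1',
                kernel_constraint=unit_norm(),
                kernel_regularizer=l2(0.01),
                bias_regularizer=l2(0.01)))
```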

- 58. Saving and loading the weights and the architecture of a model: Model architectures can be easily saved and loaded as follows:

```python
# save the architecture as a JSON string
json_string = model.to_json()
# save as YAML (older Keras only; removed in TensorFlow 2.6, where it raises an error)
yaml_string = model.to_yaml()
```

- 59. Saving and loading the weights and the architecture of a model:

```python
# save the model architecture (the with-block closes the file)
model_json = model.to_json()
with open('proposed_architecture.json', 'w') as f:
    f.write(model_json)
# and the weights learned by our DNN on the training set
model.save_weights('proposed_weights.h5', overwrite=True)
```

- 60. Saving and loading the weights and the architecture of a model:

```python
from keras.models import model_from_json

# load model
model_architecture = 'proposed_architecture.json'
model_weights = 'proposed_weights.h5'
with open(model_architecture) as f:
    model = model_from_json(f.read())
model.load_weights(model_weights)
# the reloaded model must be compiled again before evaluate() or fit()
print("load model done")
```

- 61. Visualize a Deep Learning Neural Network Model in Keras. There are two ways: summarize the model, or visualize the model.

- 62. Summarize Model: Keras provides a way to summarize a model. The summary is textual and includes information about: the layers and their order in the model; the output shape of each layer; the number of parameters (weights) in each layer; and the total number of parameters (weights) in the model. The summary is created by calling the summary() function on the model: model.summary()

- 63. THANK YOU
