Flink Forward Berlin 2017: Stefan Richter - A look at Flink's internal data structures and algorithms for efficient checkpointing

- 1. A Look at Flink’s Internal Data Structures for Efficient Checkpointing. Stefan Richter (@StefanRRichter), September 13, 2017.

- 2. Flink State and Distributed Snapshots. Diagram: events flow from a Source to a Stateful Operation, which keeps its state in a State Backend.

- 3. Flink State and Distributed Snapshots: “Asynchronous Barrier Snapshotting”. Trigger checkpoint: a checkpoint barrier is injected at the Source and travels towards the Stateful Operation.

- 4. Flink State and Distributed Snapshots: “Asynchronous Barrier Snapshotting”. Take state snapshot: the Stateful Operation synchronously triggers a state snapshot (e.g. copy-on-write).

- 5. Flink State and Distributed Snapshots. The processing pipeline continues while full snapshots are durably persisted to the DFS asynchronously. For each state backend: one writer, n readers.

- 6. Challenges for Data Structures
  - Asynchronous checkpoints:
    - Minimize pipeline stall time while taking the snapshot.
    - Keep overhead (memory, CPU, …) as low as possible while writing the snapshot.
    - Support multiple parallel checkpoints.

- 7. Flink State and Distributed Snapshots. The processing pipeline continues while incremental snapshots (Δ, the differences from previous snapshots) are durably persisted to the DFS asynchronously.

- 8. Full Snapshots. Over time, updates change the key/state (K/S) table in the task-local State Backend, and every checkpoint writes the complete table to the Checkpoint Directory (DFS):
  - Checkpoint 1: {2: B, 4: W, 6: N}
  - Checkpoint 2: {2: B, 3: K, 4: L, 6: N}
  - Checkpoint 3: {2: Q, 3: K, 6: N, 9: S}

- 9. Incremental Snapshots. The task-local State Backend moves through the states {2: B, 4: W, 6: N}, {2: B, 3: K, 4: L, 6: N} and {2: Q, 3: K, 6: N, 9: S}, but each checkpoint writes only the delta to the Checkpoint Directory (DFS):
  - iCheckpoint 1, Δ(-, icp1): {2: B, 4: W, 6: N}
  - iCheckpoint 2, Δ(icp1, icp2): {3: K, 4: L}
  - iCheckpoint 3, Δ(icp2, icp3): {2: Q, 4: - (deleted), 9: S}

- 10. Incremental vs Full Snapshots. Plot: checkpoint duration against state size (5 TB, 10 TB, 15 TB), with one curve for incremental and one for full snapshots.

- 11. Incremental Recovery. The Checkpoint Directory (DFS) holds iCheckpoint 1, Δ(-, icp1): {2: B, 4: W, 6: N}; iCheckpoint 2, Δ(icp1, icp2): {3: K, 4: L}; and iCheckpoint 3, Δ(icp2, icp3): {2: Q, 4: - (deleted), 9: S}. Recovery? Combine the deltas (icp1 + icp2 + icp3) to rebuild the task-local State Backend.
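The delta and recovery idea from slides 9 and 11 can be illustrated with a small Java sketch. It is not Flink code: the class `IncrementalSnapshotSketch`, the methods `delta` and `recover`, and the use of an empty `Optional` as a deletion marker are all assumptions made for illustration. The data is the key/state table from the slides.

```java
import java.util.List;
import java.util.Map;
import java.util.Optional;
import java.util.TreeMap;

class IncrementalSnapshotSketch {

    /** Delta from prev to curr: a present value is an upsert, an empty Optional is a deletion. */
    static Map<Integer, Optional<String>> delta(Map<Integer, String> prev, Map<Integer, String> curr) {
        Map<Integer, Optional<String>> d = new TreeMap<>();
        for (Map.Entry<Integer, String> e : curr.entrySet()) {
            if (!e.getValue().equals(prev.get(e.getKey()))) {
                d.put(e.getKey(), Optional.of(e.getValue())); // new or changed key
            }
        }
        for (Integer k : prev.keySet()) {
            if (!curr.containsKey(k)) {
                d.put(k, Optional.empty()); // key was removed since the previous checkpoint
            }
        }
        return d;
    }

    /** Recovery: replay the deltas in checkpoint order, starting from an empty state. */
    static Map<Integer, String> recover(List<Map<Integer, Optional<String>>> deltas) {
        Map<Integer, String> state = new TreeMap<>();
        for (Map<Integer, Optional<String>> d : deltas) {
            d.forEach((k, v) -> {
                if (v.isPresent()) {
                    state.put(k, v.get());
                } else {
                    state.remove(k);
                }
            });
        }
        return state;
    }

    public static void main(String[] args) {
        Map<Integer, String> s1 = Map.of(2, "B", 4, "W", 6, "N");
        Map<Integer, String> s2 = Map.of(2, "B", 3, "K", 4, "L", 6, "N");
        Map<Integer, String> s3 = Map.of(2, "Q", 3, "K", 6, "N", 9, "S");
        var d1 = delta(Map.of(), s1);
        var d2 = delta(s1, s2);
        var d3 = delta(s2, s3);
        System.out.println("delta(icp1, icp2) = " + d2);
        System.out.println("delta(icp2, icp3) = " + d3);
        System.out.println("recovered = " + recover(List.of(d1, d2, d3)));
    }
}
```

Replaying all three deltas yields the state at checkpoint 3. The sketch also makes the cost visible: recovery time grows with the length of the delta chain, which is why the next slide asks for a bounded checkpoint history.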

- 12. Challenges for Data Structures
  - Incremental checkpoints:
    - Efficiently detect the (minimal) set of state changes between two checkpoints.
    - Avoid unbounded checkpoint history.

- 13. Two Keyed State Backends
  - HeapKeyedStateBackend
    - State lives in memory, on the Java heap.
    - Operates on objects.
    - Think of a hash map {key obj -> state obj}.
    - Goes through ser/de during snapshot/restore.
    - Async snapshots supported.
  - RocksDBKeyedStateBackend
    - State lives in off-heap memory and on disk.
    - Operates on serialized bytes.
    - Think of a K/V store {key bytes -> state bytes}.
    - Based on a log-structured-merge (LSM) tree.
    - Goes through ser/de for each state access.
    - Async and incremental snapshots.

- 14. Asynchronous and Incremental Checkpoints with the RocksDB Keyed-State Backend

- 15. What is RocksDB
  - Ordered key/value store (bytes).
  - Based on LSM trees.
  - Embedded, written in C++, used via JNI.
  - Write optimized (all bulk sequential), with acceptable read performance.

- 16. RocksDB Architecture (simplified). Memory: Memtable holding {K: 2, V: T} and {K: 8, V: W}. Local Disk: still empty.
  - Memtable: mutable memory buffer for K/V pairs (bytes).
  - All reads / writes go here first.
  - Aggregating (overrides same unique keys).
  - Asynchronously flushed to disk when full.

- 17. RocksDB Architecture (simplified). Memory: Memtable holding {K: 4, V: Q}. Local Disk: SSTable-(1) holding {K: 2, V: T} and {K: 8, V: W}, with an index (Idx) and a Bloom filter (BF-1).
  - Flushed Memtable becomes immutable.
  - Sorted by key (unique).
  - Read: first check Memtable, then SSTable.
  - Bloom filter & index as optimisations.

- 18. RocksDB Architecture (simplified). Memory: Memtable holding {K: 2, V: A} and {K: 5, V: N}. Local Disk: SSTable-(1) holding {K: 2, V: T}, {K: 8, V: W}; SSTable-(2) holding {K: 4, V: Q}, {K: 7, V: S}; SSTable-(3) holding {K: 2, V: C}, {K: 7, - (delete)}. Each SSTable has an index (Idx) and a Bloom filter (BF-1, BF-2, BF-3).
  - Deletes are explicit entries.
  - Natural support for snapshots.
  - Iteration: online merge.
  - SSTables can accumulate.

- 19. RocksDB Compaction (simplified), “Log Compaction”. A multiway sorted merge combines SSTable-(1) {K: 2, V: T; K: 8, V: W}, SSTable-(2) {K: 4, V: Q; K: 7, V: S} and SSTable-(3) {K: 2, V: C; K: 7, - (delete)} into SSTable-(1, 2, 3) {K: 2, V: C; K: 4, V: Q; K: 8, V: W}. The three input SSTables are then removed (rm).
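The multiway sorted merge on slide 19 can be sketched in Java as follows. This is a toy model under stated assumptions, not RocksDB's implementation: the class `CompactionSketch`, the `Cursor` record, the `compact` method and the string `"-"` as deletion marker are hypothetical, keys are integers instead of bytes, and deletion entries are dropped only because all SSTables take part in the merge.

```java
import java.util.Comparator;
import java.util.Iterator;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.PriorityQueue;
import java.util.TreeMap;

class CompactionSketch {
    /** Hypothetical deletion marker; RocksDB encodes deletes differently. */
    static final String TOMBSTONE = "-";

    /** One sorted input: its current entry, its recency rank (0 = newest), and the remaining entries. */
    record Cursor(Map.Entry<Integer, String> head, int rank, Iterator<Map.Entry<Integer, String>> rest) {}

    /** Merges all tables (listed newest first) into one sorted table without duplicates or deletes. */
    static Map<Integer, String> compact(List<TreeMap<Integer, String>> newestFirst) {
        // Order by key; for equal keys the entry from the newest table comes out first.
        PriorityQueue<Cursor> heap = new PriorityQueue<>(
                Comparator.comparing((Cursor c) -> c.head().getKey()).thenComparingInt(Cursor::rank));
        for (int rank = 0; rank < newestFirst.size(); rank++) {
            Iterator<Map.Entry<Integer, String>> it = newestFirst.get(rank).entrySet().iterator();
            if (it.hasNext()) {
                heap.add(new Cursor(it.next(), rank, it));
            }
        }
        Map<Integer, String> merged = new LinkedHashMap<>(); // keeps the sorted output order
        Integer lastKey = null;
        while (!heap.isEmpty()) {
            Cursor c = heap.poll();
            Integer key = c.head().getKey();
            if (!key.equals(lastKey)) { // first occurrence of a key is its newest version
                lastKey = key;
                if (!TOMBSTONE.equals(c.head().getValue())) {
                    merged.put(key, c.head().getValue());
                }
            }
            if (c.rest().hasNext()) {
                heap.add(new Cursor(c.rest().next(), c.rank(), c.rest()));
            }
        }
        return merged;
    }

    public static void main(String[] args) {
        TreeMap<Integer, String> sst1 = new TreeMap<>(Map.of(2, "T", 8, "W"));
        TreeMap<Integer, String> sst2 = new TreeMap<>(Map.of(4, "Q", 7, "S"));
        TreeMap<Integer, String> sst3 = new TreeMap<>(Map.of(2, "C", 7, TOMBSTONE));
        System.out.println(compact(List.of(sst3, sst2, sst1))); // {2=C, 4=Q, 8=W}
    }
}
```

With the slide's three SSTables as input, the merge keeps the newest value for key 2, drops key 7 because its newest entry is a delete, and passes keys 4 and 8 through unchanged.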

- 20. RocksDB Asynchronous Checkpoint (simplified)
  - Flush Memtable.
  - Then create an iterator over all current SSTables (they are immutable).
  - New changes go to the Memtable and future SSTables and are not considered by the iterator.

- 21. RocksDB Incremental Checkpoint
  - Flush Memtable.
  - For all SSTable files in the local working directory: upload files that are new since the last checkpoint to the DFS, re-reference the other files.
  - Do reference counting on the JobManager for uploaded files.

- 22. Incremental Checkpoints - Example
  - Illustrate interactions (simplified) between:
    - Local RocksDB instance
    - Remote Checkpoint Directory (DFS)
    - Job Manager (Checkpoint Coordinator)
  - In this example: 2 latest checkpoints retained

- 23-30. Incremental Checkpoints - Example (one diagram, built up over eight slides). It shows three areas: the TaskManager's local RocksDB working directory, the files uploaded to the DFS, and the checkpoints known to the Job Manager.
  - CP 1: local files sstable-(1), sstable-(2). DFS upload: sstable-(1), sstable-(2).
  - CP 2: local files sstable-(1), sstable-(2), sstable-(3), sstable-(4). DFS upload: sstable-(3), sstable-(4).
  - merge: sstable-(1), sstable-(2) and sstable-(3) become sstable-(1,2,3).
  - CP 3: local files sstable-(1,2,3), sstable-(4), sstable-(5). DFS upload: sstable-(1,2,3), sstable-(5).
  - +sstable-(6), then merge: sstable-(4), sstable-(5) and sstable-(6) become sstable-(4,5,6).
  - CP 4: local files sstable-(1,2,3), sstable-(4,5,6). DFS upload: sstable-(4,5,6).
  - Job Manager: CP 1, CP 2, CP 3, CP 4.
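The upload and reference-counting rules from slide 21, applied to this example, can be sketched in Java. This is a simplified model and not Flink's shared state registry: the class `IncrementalUploadSketch` and its methods are hypothetical names, files are identified by name only, and every checkpoint is assumed to complete successfully.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.HashMap;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

class IncrementalUploadSketch {
    private final int retained;                                         // number of checkpoints to keep
    private final Deque<Set<String>> completed = new ArrayDeque<>();    // retained checkpoints, oldest first
    private final Map<String, Integer> refCounts = new HashMap<>();     // DFS file -> referencing checkpoints

    IncrementalUploadSketch(int retained) {
        this.retained = retained;
    }

    /** Takes a checkpoint of the given local SSTable files and returns the files that had to be uploaded. */
    Set<String> checkpoint(Set<String> localSstFiles) {
        Set<String> uploaded = new TreeSet<>(localSstFiles);
        uploaded.removeAll(refCounts.keySet());            // files already in the DFS are only re-referenced
        for (String file : localSstFiles) {
            refCounts.merge(file, 1, Integer::sum);
        }
        completed.addLast(localSstFiles);
        while (completed.size() > retained) {              // drop the oldest checkpoint
            for (String file : completed.removeFirst()) {
                // Returning null removes the entry: the file is no longer referenced and can be deleted.
                refCounts.computeIfPresent(file, (f, n) -> n == 1 ? null : n - 1);
            }
        }
        return uploaded;
    }

    /** Files that are still referenced by at least one retained checkpoint. */
    Set<String> filesInDfs() {
        return new TreeSet<>(refCounts.keySet());
    }

    public static void main(String[] args) {
        IncrementalUploadSketch jm = new IncrementalUploadSketch(2);
        System.out.println("CP 1 uploads " + jm.checkpoint(Set.of("sstable-(1)", "sstable-(2)")));
        System.out.println("CP 2 uploads " + jm.checkpoint(
                Set.of("sstable-(1)", "sstable-(2)", "sstable-(3)", "sstable-(4)")));
        System.out.println("CP 3 uploads " + jm.checkpoint(
                Set.of("sstable-(1,2,3)", "sstable-(4)", "sstable-(5)")));
        System.out.println("CP 4 uploads " + jm.checkpoint(Set.of("sstable-(1,2,3)", "sstable-(4,5,6)")));
        System.out.println("still in DFS: " + jm.filesInDfs());
    }
}
```

With two retained checkpoints, CP 4 uploads only sstable-(4,5,6). Dropping CP 2 releases sstable-(1), sstable-(2) and sstable-(3), while sstable-(4) and sstable-(5) survive because CP 3 still references them.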

- 31. Summary RocksDB
  - Asynchronous snapshots “for free”, immutable copy is already there.
  - Trivially supports multiple concurrent snapshots.
  - Detection of incremental changes easy: observe creation and deletion of SSTables.
  - Bounded checkpoint history through compaction.

- 32. Asynchronous Checkpoints with the Heap Keyed-State Backend

- 33-37. Heap Backend: Chained Hash Map (one diagram, built up over five slides). A Map<K, S> holds a table of chained entries: the entry for key K1 points to state S1 and links via next to the entry for key K2 with state S2. Definitions: `Map<K, S> { Entry<K, S>[] table; }` and `Entry<K, S> { final K key; S state; Entry next; }`. Slide 37 marks two kinds of change: “Structural changes to map” (easy to detect) and “User modifies state objects” (hard to detect).

- 38. Copy-on-Write Hash Map. Same diagram and the same two kinds of change: “Structural changes to map” (easy to detect) and “User modifies state objects” (hard to detect). The map and its entries gain version fields: `Map<K, S> { Entry<K, S>[] table; int mapVersion; int requiredVersion; OrderedSet<Integer> snapshots; }` and `Entry<K, S> { final K key; S state; Entry next; int stateVersion; int entryVersion; }`.

- 39. Copy-on-Write Hash Map - Snapshots (with the same `Map<K, S>` and `Entry<K, S>` definitions as on slide 38)
  - Create Snapshot:
    1. Flat array-copy of table array
    2. `snapshots.add(mapVersion);`
    3. `++mapVersion;`
    4. `requiredVersion = mapVersion;`
  - Release Snapshot:
    1. `snapshots.remove(snapVersion);`
    2. `requiredVersion = snapshots.getMax();`

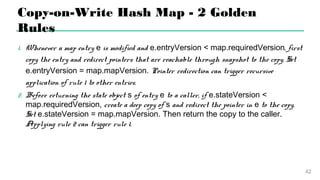

- 40. Copy-on-Write Hash Map - 2 Golden Rules
  1. Whenever a map entry e is modified and e.entryVersion < map.requiredVersion, first copy the entry and redirect pointers that are reachable through the snapshot to the copy. Set e.entryVersion = map.mapVersion. Pointer redirection can trigger recursive application of rule 1 to other entries.
  2. Before returning the state object s of entry e to a caller, if e.stateVersion < map.requiredVersion, create a deep copy of s and redirect the pointer in e to the copy. Set e.stateVersion = map.mapVersion. Then return the copy to the caller. Applying rule 2 can trigger rule 1.
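The snapshot steps from slide 39 and the two golden rules can be put together in a minimal, single-threaded Java sketch. It is not Flink's implementation: the class `CowMapSketch`, the `Snapshot` record, the helpers `writable` and `copyOf`, the fixed bucket count and the `deepCopy` function are assumptions for illustration, and `remove` and rehashing are left out. One deliberate deviation: on release the sketch sets `requiredVersion` to the largest remaining snapshot version plus one (or 0 if none remain), so that it stays consistent with the create step. The slide writes `snapshots.getMax()`, and whether that call already includes the offset is not stated there.

```java
import java.util.TreeSet;
import java.util.function.UnaryOperator;

class CowMapSketch<K, S> {
    static final class Entry<K, S> {
        final K key; S state; Entry<K, S> next; int stateVersion; int entryVersion;
        Entry(K key, S state, Entry<K, S> next, int stateVersion, int entryVersion) {
            this.key = key; this.state = state; this.next = next;
            this.stateVersion = stateVersion; this.entryVersion = entryVersion;
        }
    }

    /** What a snapshot reader sees: its own copy of the table array, sharing the old entries. */
    record Snapshot<K, S>(int version, Entry<K, S>[] table) {
        S get(K key) {
            for (Entry<K, S> e = table[index(key, table.length)]; e != null; e = e.next) {
                if (e.key.equals(key)) return e.state;
            }
            return null;
        }
    }

    private final Entry<K, S>[] table;
    private final UnaryOperator<S> deepCopy;                 // assumed way to deep-copy a state object
    private final TreeSet<Integer> snapshots = new TreeSet<>();
    private int mapVersion;
    private int requiredVersion;

    @SuppressWarnings("unchecked")
    CowMapSketch(int buckets, UnaryOperator<S> deepCopy) {
        this.table = (Entry<K, S>[]) new Entry[buckets];
        this.deepCopy = deepCopy;
    }

    static int index(Object key, int buckets) { return (key.hashCode() & 0x7fffffff) % buckets; }

    Snapshot<K, S> snapshot() {
        Entry<K, S>[] copy = table.clone();                  // 1. flat array-copy of table array
        snapshots.add(mapVersion);                           // 2.
        int version = mapVersion++;                          // 3.
        requiredVersion = mapVersion;                        // 4.
        return new Snapshot<>(version, copy);
    }

    void release(Snapshot<K, S> s) {
        snapshots.remove(s.version());
        requiredVersion = snapshots.isEmpty() ? 0 : snapshots.last() + 1; // assumption, see lead-in
    }

    private Entry<K, S> copyOf(Entry<K, S> e) {
        return new Entry<>(e.key, e.state, e.next, e.stateVersion, mapVersion);
    }

    /** Rule 1: copy every snapshot-visible entry on the chain from the bucket head down to target. */
    private Entry<K, S> writable(int idx, Entry<K, S> target) {
        Entry<K, S> cur = table[idx];
        Entry<K, S> own = cur.entryVersion < requiredVersion ? (table[idx] = copyOf(cur)) : cur;
        while (cur != target) {
            cur = cur.next;
            if (cur.entryVersion < requiredVersion) { own.next = copyOf(cur); own = own.next; }
            else { own = cur; }
        }
        return own;
    }

    /** Rule 2: the caller may mutate the returned object, so hand out a private deep copy if needed. */
    S get(K key) {
        int idx = index(key, table.length);
        for (Entry<K, S> e = table[idx]; e != null; e = e.next) {
            if (e.key.equals(key)) {
                if (e.stateVersion < requiredVersion) {
                    if (e.entryVersion < requiredVersion) e = writable(idx, e);
                    e.state = deepCopy.apply(e.state);
                    e.stateVersion = mapVersion;
                }
                return e.state;
            }
        }
        return null;
    }

    void put(K key, S state) {
        int idx = index(key, table.length);
        for (Entry<K, S> e = table[idx]; e != null; e = e.next) {
            if (e.key.equals(key)) {
                if (e.entryVersion < requiredVersion) e = writable(idx, e);
                e.state = state;
                e.stateVersion = mapVersion;
                return;
            }
        }
        table[idx] = new Entry<>(key, state, table[idx], mapVersion, mapVersion); // new chain head
    }

    public static void main(String[] args) {
        CowMapSketch<Integer, int[]> map = new CowMapSketch<>(1, a -> a.clone()); // one bucket: all keys chain
        map.put(23, new int[] {3});
        map.put(42, new int[] {7});
        Snapshot<Integer, int[]> snap = map.snapshot();
        map.put(13, new int[] {2});
        map.get(23)[0] = 99;                                 // caller mutates the returned state object
        System.out.println(snap.get(23)[0] + " " + map.get(23)[0] + " " + snap.get(13));
    }
}
```

The single bucket forces the chain 13 → 42 → 23, so `get(23)` has to copy the entry for key 42 as well before it can link in the copy for key 23, which is the recursive case of rule 1. The snapshot keeps reading 3 for key 23 and does not see key 13.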

- 41. Copy-on-Write Hash Map - Example. Initial map: K:42 → S:7 (sv:0, ev:0), K:23 → S:3 (sv:0, ev:0). mapVersion: 0, requiredVersion: 0.

- 42. Copy-on-Write Hash Map - Example: snapshot() -> 0. Entries unchanged: K:42 → S:7 (sv:0, ev:0), K:23 → S:3 (sv:0, ev:0). snapshotVersion: 0, mapVersion: 1, requiredVersion: 1.

- 43. Copy-on-Write Hash Map - Example: put(13, 2). New entry K:13 → S:2 (sv:1, ev:1); K:42 → S:7 and K:23 → S:3 stay at (sv:0, ev:0). snapshotVersion: 0, mapVersion: 1, requiredVersion: 1.

- 44. Copy-on-Write Hash Map - Example: get(23). Entries: K:42 → S:7 (sv:0, ev:0), K:23 → S:3 (sv:0, ev:0), K:13 → S:2 (sv:1, ev:1). snapshotVersion: 0, mapVersion: 1, requiredVersion: 1.

- 45. Copy-on-Write Hash Map - Example: get(23). Caller could modify state object!

- 46. Copy-on-Write Hash Map - Example: get(23). Trigger copy: the state S:3 is copied. How to connect the copy?

- 47. Copy-on-Write Hash Map - Example: get(23). Copy entry: a new entry K:23 → S:3 (sv:1, ev:1) holds the state copy. How to connect it?

- 48. Copy-on-Write Hash Map - Example: get(23). Copy entry (recursive): the entry K:42 is copied as well (sv:0, ev:1). Connect?

- 49. Copy-on-Write Hash Map - Example: get(23). Result: the snapshot still sees K:42 → S:7 (sv:0, ev:0) and K:23 → S:3 (sv:0, ev:0); the map sees K:13 → S:2 (sv:1, ev:1), the copied K:42 (sv:0, ev:1) and the copied K:23 → S:3 (sv:1, ev:1). snapshotVersion: 0, mapVersion: 1, requiredVersion: 1.

- 50. Copy-on-Write Hash Map - Example: get(42). The map's entry K:42 is at (sv:0, ev:1). snapshotVersion: 0, mapVersion: 1, requiredVersion: 1.

- 51. Copy-on-Write Hash Map - Example: get(42). Copy required!

- 52. Copy-on-Write Hash Map - Example: get(42). The state S:7 is copied and the map's entry K:42 moves to (sv:1, ev:1); the snapshot still sees the old entries at (sv:0, ev:0).

- 53. Copy-on-Write Hash Map - Example: release(0). Remaining entries: K:23 → S:3 (sv:1, ev:1), K:42 → S:7 (sv:1, ev:1), K:13 → S:2 (sv:1, ev:1). mapVersion: 1, requiredVersion: 0.

- 54. Copy-on-Write Hash Map - Example: snapshot() -> 1. Entries unchanged: K:23 → S:3, K:42 → S:7, K:13 → S:2, all at (sv:1, ev:1). snapshotVersion: 1, mapVersion: 2, requiredVersion: 2.

- 55. Copy-on-Write Hash Map - Example: remove(23). Change next-pointer? All entries are at (sv:1, ev:1). snapshotVersion: 1, mapVersion: 2, requiredVersion: 2.

- 56. Copy-on-Write Hash Map - Example: remove(23). Change next-pointer? (Same state, next animation step.)

- 57. Copy-on-Write Hash Map - Example: remove(23). A copied entry at (sv:1, ev:2) takes the changed next-pointer, so the snapshot still sees K:23 → S:3 (sv:1, ev:1) while the map no longer does.

- 58. Copy-on-Write Hash Map - Example: release(1). Remaining entries: K:42 → S:7 (sv:1, ev:2), K:13 → S:2 (sv:1, ev:1). mapVersion: 2, requiredVersion: 0.

- 59. Copy-on-Write Hash Map Benefits
  - Fine granular, lazy copy-on-write.
  - At most one copy per object, per snapshot.
  - No thread synchronisation between readers and writer after array-copy step.
  - Supports multiple concurrent snapshots.
  - Also implements efficient incremental rehashing.
  - Future: Could serve as basis for incremental snapshots.

- 60. Questions?

Editor's Notes

- #2: I would like to start with a very brief recap of how state and checkpoints work in Flink to motivate the challenges they impose on the data structures of our backends.

- #5: The state backend is local to the operator so that we can operate at up to the speed of local memory variables. But how can we get a consistent snapshot of the distributed state?

- #11: Users want to go to 10 TB or even up to 50 TB of state! Significant overhead! Beauty: self-contained, can easily be deleted or recovered.

- #75: RocksDB is an embeddable persistent k/v store for fast storage. If the user modifies the state under key 42 three times, only the latest change is reflected in the Memtable.

- #77: The checkpoint coordinator lives in the JobMaster. The state backend's working area is local memory / local disk. The checkpointing area is the DFS (stable, remote, replicated).

- #81: Flush the Memtable as the first step, so that all deltas are on disk. SST files are immutable; their creation and deletion is the delta. We have a shared folder for the deltas (SSTables) that belong to the operator instance.

- #86: Important new step: we can only reference successful incremental checkpoints in future checkpoints!

- #88: New set of SSTables: 225 is missing, so is 227… We can assume they got merged and consolidated in 228 or 229.

- #98: Think of events of user interactions: the key is a user id and we store a click count for each user. We talk about keyed state.

![Slide 33: Heap Backend: Chained Hash Map](https://image.slidesharecdn.com/ff17berlin-170914104549/85/Flink-Forward-Berlin-2017-Stefan-Richter-A-look-at-Flink-s-internal-data-structures-and-algorithms-for-efficient-checkpointing-33-320.jpg)

![Slide 34: Heap Backend: Chained Hash Map](https://image.slidesharecdn.com/ff17berlin-170914104549/85/Flink-Forward-Berlin-2017-Stefan-Richter-A-look-at-Flink-s-internal-data-structures-and-algorithms-for-efficient-checkpointing-34-320.jpg)

![Slide 35: Heap Backend: Chained Hash Map](https://image.slidesharecdn.com/ff17berlin-170914104549/85/Flink-Forward-Berlin-2017-Stefan-Richter-A-look-at-Flink-s-internal-data-structures-and-algorithms-for-efficient-checkpointing-35-320.jpg)

![Slide 36: Heap Backend: Chained Hash Map](https://image.slidesharecdn.com/ff17berlin-170914104549/85/Flink-Forward-Berlin-2017-Stefan-Richter-A-look-at-Flink-s-internal-data-structures-and-algorithms-for-efficient-checkpointing-36-320.jpg)

![Slide 37: Heap Backend: Chained Hash Map, with structural changes (easy to detect) and state object modifications (hard to detect)](https://image.slidesharecdn.com/ff17berlin-170914104549/85/Flink-Forward-Berlin-2017-Stefan-Richter-A-look-at-Flink-s-internal-data-structures-and-algorithms-for-efficient-checkpointing-37-320.jpg)

![Slide 38: Copy-on-Write Hash Map](https://image.slidesharecdn.com/ff17berlin-170914104549/85/Flink-Forward-Berlin-2017-Stefan-Richter-A-look-at-Flink-s-internal-data-structures-and-algorithms-for-efficient-checkpointing-38-320.jpg)

![Slide 39: Copy-on-Write Hash Map - Snapshots](https://image.slidesharecdn.com/ff17berlin-170914104549/85/Flink-Forward-Berlin-2017-Stefan-Richter-A-look-at-Flink-s-internal-data-structures-and-algorithms-for-efficient-checkpointing-39-320.jpg)