Ad

From SQL to Python - A Beginner's Guide to Making the Switch

- 1. FROM SQL TO PYTHON: HANDS-ON DATA ANALYTICS AND MACHINE LEARNING RACHEL BERRYMAN DATA SCIENTIST – TEMPUS ENERGY [email protected]

- 2. ABOUT ME MSc Sustainable Development, BA Economics Senior Energy Data Analyst Data Science Retreat Batch 12, 2017 Data Scientist at Tempus Energy. Instructor of Model Pipelines course at DSR

- 3. FROM DATA ANALYSIS TO DATA SCIENCE What’s the difference between data analysis and Data Science? How do I know if a career in Data Science is right for me? How do I make the switch to a career in Data Science?

- 4. WHAT IS DATA SCIENCE? • Data Science definition (Wikipedia): Data Science is a concept to unify statistics, data analysis, machine learning and their related methods in order to understand and analyze actual phenomena with data. It employs techniques and theories drawn from many fields within the broad areas of mathematics, statistics, information science, and computer science.

- 5. DATA ANALYSIS VS. DATA SCIENCE: WHAT’S THE DIFFERENCE? • Data Analysis mainly looks at the present and past. It answers questions like: • How much revenue did we bring in last year? • What is product does customer X buy most frequently? • Data Science mainly looks at the present and future. It answers questions like: • What products should we invest in expanding for the future? • What product should we recommend to customer X so that they buy more when they visit our site, based on their most-purchased products in the past?

- 6. DATA ANALYSIS VS. DATA SCIENCE: WHAT’S THE DIFFERENCE? • Data Analysis works mainly with proprietary tools • Oracle, MS SQL Server, Tableau. • Data Science works mainly with open-source tools • Open-source languages and packages: ie Python, scikit-learn, keras, matplotlib

- 7. DATA ANALYSIS VS. DATA SCIENCE: WHAT’S THE DIFFERENCE? • Data Analysis works with data from one or few sources • Ex: data from an in-house SQL database. • Data Science works with data from many varying sources • Ex: data from in-house SQL database, data scraped from the web, text data from customer surveys, data from across multiple departments

- 8. WHY DO COMPANIES NEED DATA SCIENTISTS? • More and more data comes in unstructured formats: ex, natural language in emails and social media posts, photos, audio files. • Data Scientists make use of this data and apply it to pressing business questions.

- 10. IS DATA SCIENCE RIGHT FOR ME? You like to code You like to work across many teams and find synergies You enjoy getting “stuck in” and working through challenges You consider yourself a life-long learner You like math You seek out work and answers to questions: you don’t wait for questions to be delegated to you

- 11. HOW CAN I MAKE THE SWITCH FROM DATA ANALYSIS TO DATA SCIENCE? • Learn the basics: • Practical • Command Line • Git and Github • A common Data Science programming language (Python, or R). • Theoretical • Machine Learning Algorithms • Supervised, Unsupervised • Go further: • MOOCs • Intensive deep-dive: Bootcamp/Retreat

- 12. LEARN THE BASICS: COMMAND LINE & GIT • Command line is how you directly interact with your computer (sans GUI). It is the “ultimate seat of power for your computer”. • Git is a distributed version control system. Git is responsible for keeping track of changes to content (usually source code files), and it provides mechanisms for sharing that content with others. GitHub is a company that provides Git repository hosting. • Learn how to use your command line to clone repositories (‘repos’) from GitHub. You will open up a world of learning opportunities!

- 13. LEARN THE BASICS: PYTHON FOR DATA MUNGING AND ANALYSIS • In SQL, you’re usually using a company-purchased software like Oracle SQL Developer, or MS SQL Server Management Studio.

- 14. LEARN THE BASICS: PYTHON FOR DATA MUNGING AND ANALYSIS Python Interpreter iPython IDE/Jupyter Get Coding!

- 15. LEARN THE BASICS: PYTHON FOR DATA MUNGING AND ANALYSIS Python Interpreter

- 16. LEARN THE BASICS: PYTHON FOR DATA MUNGING AND ANALYSIS iPython

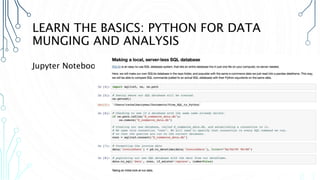

- 17. LEARN THE BASICS: PYTHON FOR DATA MUNGING AND ANALYSIS Jupyter Notebook

- 18. LEARN THE BASICS: PYTHON FOR DATA MUNGING AND ANALYSIS • IDEs: • Python-specific: • PyCharm • Spyder • Thonny • General, with support for Python: • Atom (also can add iPython with Hydrogen) • Sublime Text • Vim

- 19. LEARN THE BASICS: PYTHON FOR DATA MUNGING AND ANALYSIS

- 20. LEARN THE BASICS: PYTHON FOR DATA MUNGING AND ANALYSIS

- 21. PYTHON BEYOND DATA SCIENCE

- 22. LEARN THE BASICS: PYTHON FOR DATA MUNGING AND ANALYSIS • Start with what you know! • Write SQL commands in Python • Automate what you would have to do manually in Excel • Use matplotlib to make visualizations you would have done in Tableau • Learn what you don’t know • Python packages, modules, libraries • Object-Oriented Programming (OOP)

- 23. LEARN THE BASICS: PYTHON FOR DATA SCIENCE AND MACHINE LEARNING • Github Repo with practice for switching from SQL to Python • Clone repo: https://github.com/rachelkberryman/From_SQL_to_Python • cd into repo, and run command “jupyter notebook” (more information about jupyter notebooks here) • Includes: • sample SQL queries with Python equivalents • examples of Python functions for data manipulation and analysis • sample visualizations in Seaborn

- 24. LEARN THE BASICS: PYTHON FOR DATA SCIENCE AND MACHINE LEARNING • By applying machine learning algorithms (with code), you will learn them MUCH more quickly than by only reading about them • Even better, apply them to a sample dataset (work and/or passion project)

- 25. GOING FURTHER: MOOCS VS. DEEP DIVE • The jump from automating what you already know to working with predictive models is where most people get overwhelmed. • Need for more structured learning: MOOCs vs. Deep Dive

- 26. GOING FURTHER: MOOCS VS. DEEP DIVE MOOCs: • PROs: • On your own time • Little risk • CONs: • No or little help when stuck • No supportive community/job help Deep Dive: • PROs: • Structure and support • Faster learning rate • Network and hiring support • CONs: • Risk and opportunity cost

- 27. MAKING THE SWITCH: GOING FURTHER • MOOCs: • Andrew Ng’s Machine Learning course on Coursera • Explanation of machine learning algorithms • Applied Data Science with Python course on Coursera • Coding practice in notebooks, with explanation videos • Deep Dive: Data Science Retreat • 3 months of intensive data science teaching and training in Berlin • Culminates in final portfolio project

- 28. MORE RESOURCES • On Python: • Think Python: Thinking Like a Computer Scientist, Allan B. Downey • Fluent Python, Luciano Ramalho • On DS/ML: • Think Bayes: Bayesian Statistics in Python, Allan B. Downey • The Master Algorithm, Pedro Domingos • Pattern Recognition in Machine Learning, Christopher Bishop

Editor's Notes

- #3: SaaS platform for energy utility bills: got data in a lot of formats, and basically just fit it into the Database. Creative bit was getting to use tableau. Quickly realized I wanted to work on more intense analytics/topics. Thrown in the deep end at DSR, but I survived.

- #5: To process all of this unstructured data, you need to not only analyze it and be able to make predictions about what it will do in the future, but you also build solutions for managing it, storing it, and manipulating it. This is why many people see Data Scientists as first and foremost being Software Engineers. Source: Wikipedia.

- #6: DS: we don’t just query the data that’s already there, we use that data to create predictions for the future.

- #7: Could be argued, but I’ve found this to be true in industry. Proprietary tools cost money, and you’re (mostly) bound to only the data your company has.

- #8: Data Science is much more synergetic.

- #9: https://blog.samanage.com/insights/whats-the-difference-between-structured-and-unstructured-data/

- #10: https://blog.samanage.com/insights/whats-the-difference-between-structured-and-unstructured-data/

- #11: - Life long learner: because it’s such a new field, there are constantly new technologies and libraries coming out that you have to stay up on.

- #13: Command line is ESSENTIAL before learning any “proper” coding language. https://www.davidbaumgold.com/tutorials/command-line/ https://softwareengineering.stackexchange.com/questions/173321/conceptual-difference-between-git-and-github Git is a revision control system, a tool to manage your source code history. GitHub is a hosting service for Git repositories. So they are not the same thing: Git is the tool, GitHub is the service for projects that use Git.

- #14: It’s important to learn how different working in Python is from working in SQL. In SQL, you’re usually using a company-purchased software like Oracle SQL Developer, or MS SQL Server Management Studio. With these, you usually have authentications that let you run queries on various databases in the RDBMS. Because SQL is a QUERY language, it’s only as good as the data you have access to. The great thing about Python is that you’re not tied to one buggy editor for running your code. Also, you’re not tied to data from one source.

- #15: Python isn’t like this. Anyone can code in python if they have a computer, you just have to know how to get it started! To start learning Python, you have to get it working on your computer. To code in Python you have to have a python interpreter on your computer. This is the program that reads your python code and does what it says. Macs have this built in.

- #17: iPython is an interface to the python language. It lets you run small bits of code without writing entire programs. Usually, regular Python is used for scripts that you’ve already written. A script contains a list of commands to execute in order. It runs from start to finish and display some output. On the contrary, with IPython, you generally write one command at a time and you get the results instantly, and it has a lot of features to make it work better. Additional features: Tab autocompletion (on class names, functions, methods, variables) More explicit and colour-highlighted error messages Better history management Basic UNIX shell integration (you can run simple shell commands such as cp, ls, rm, cp, etc. directly from the IPython command line)

- #18: Another interface, but web-based. Makes it easy to share as you can save them as HTML files or PDFs. Lets you both run code (via an ipython kernel), and add text in markdown cells. Great for playing around and trying things.

- #19: IDE (or Integrated Development Environment) is a program dedicated to software development. (ideally) lets you work in both script and interactive modes. Good for when you’ve “graduated” from jupyter notebooks and need something to right more full length programs.

- #20: Anaconda is a distribution of Python, made specifically for data science. The goal is to make it easy to have everything you need to do data science in python. When you download it, you automatically get jupyter, and a lot of the core libraries. Now, about libraries…

- #21: There are a lot of libraries in python that directly deal with data: Ex: pandas There are also a LOT that don’t. Learn the ones that deal with data first! Also, learn the ones that deal with data that you already know how to work with first. Ex: learn pandas for working with CSVs before you learn beautifulsoup for scraping data off the web. Quick read on some of this biggest Python libraries: http://www.developintelligence.com/blog/python-ecosystem-2017/

- #23: When I started with python, I was frustrated not being able to do everything I could already do in SQL, as far as manipulating data. A lot of the tutorials are abstract and go too far in to the basics.

- #24: Once you have Python working, you can start using it on a real dataset. This is e-commerce data from Kaggle. Once you’ve played around a bit with the SQL-like and data-focused libraries, you can move on to learning about machine learning, and going beyond just analytics.

- #25: Get a rough idea, ex: If yours is a supervised or unsupervised learning problem, if it’s regression or classification, and then, read about each algorithm as you implement them.

- #26: MOOCs: Massive Open Online Course