Geep networking stack-linuxkernel

- 1. Linux Networking Stack 28th May 2014 Kiran Divekar

- 2. Agenda ● System calls in Networking world ● Client server model ● Linux networking stack ● Evolution of networking stack ● Driver Interface ● Introduction to Wifi Stack ● Wifi stack as an example ● Future...?

- 3. Simple router [diagram]: Control plane user space: Module 1 … Module n (processes on the CP). Control plane kernel space: network driver. The control plane and the data plane communicate over an intercard communication mechanism (interconnect protocol).

- 4. Problem definition ● All CP modules communicate with each other over IPC. ● Control plane / data plane communication happens over a high-speed network link. ● Line cards can interact with other line cards or control cards. ● And the router crashes???

- 5. Things to look out for... ● Are the kernel and the network driver alive? Check the kernel log and the crash dump. ● See if a particular IRQ is screaming in /proc/interrupts. ● /proc/sys/net/*: networking information. ● Check top and free output: is any process hogging the CPU or memory? ● Check ps to see whether the expected processes/threads (the CP processes) are alive. ● Look at /proc/net/nf_conntrack_stats to see if a particular type of error packet is being reported. ● Check firewall rules (iptables -L), interfaces (ifconfig) and routes (route). ● Check kernel/application logs for errors: /var/log/syslog

- 6. Going deeper... ● Check files and sockets owned by each process. ● cat /proc/$PID/*: fd, wchan ● /proc/net/tcp, /proc/net/udp ● netstat -apeen ● lsof (-i for networking) ● Socket status [socket operation on non-socket] ● Kernel modules to dump information on data structures like task_struct, struct net_device
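
The /proc/net/tcp file listed above prints addresses, ports and states in hex. A minimal userspace sketch of decoding one IPv4 line, assuming the column layout shown in the comment; `struct tcp_row` and `parse_proc_net_tcp_line()` are hypothetical helper names, and in practice `netstat` or `ss` do this decoding for you.

```c
#include <arpa/inet.h>
#include <netinet/in.h>
#include <stdio.h>
#include <string.h>

/* One decoded row of /proc/net/tcp (IPv4 only). Hypothetical helper type. */
struct tcp_row {
    char local_ip[INET_ADDRSTRLEN];
    unsigned int local_port;
    unsigned int state;   /* kernel TCP state number, e.g. 0x0A = TCP_LISTEN */
};

/* Parse one data line such as
 *   "   0: 0100007F:0035 00000000:0000 0A ..."
 * The kernel prints the raw 32-bit address as hex, so copying the value back
 * into s_addr on the same host restores the address. The port is printed in
 * host byte order. Returns 0 on success, -1 if the line does not match
 * (for example the "sl local_address ..." header line). */
int parse_proc_net_tcp_line(const char *line, struct tcp_row *row)
{
    unsigned int slot, laddr, lport, raddr, rport, state;
    struct in_addr a;

    if (sscanf(line, " %u: %8X:%4X %8X:%4X %2X",
               &slot, &laddr, &lport, &raddr, &rport, &state) != 6)
        return -1;

    a.s_addr = laddr;
    if (!inet_ntop(AF_INET, &a, row->local_ip, sizeof(row->local_ip)))
        return -1;
    row->local_port = lport;
    row->state = state;
    return 0;
}
```

For example, a line starting `0: 0100007F:0035 00000000:0000 0A` decodes on a little-endian host to 127.0.0.1, port 53, state 0x0A (TCP_LISTEN).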

- 7. Knowing the full stack... ● Understanding the complete picture requires kernel knowledge: – user space applications – threads, socket types – the kernel interface through system calls – the TCP/IP stack inside the kernel – interaction with the network device driver – and the other kernel subsystems.

- 8. User space Kernel space Do you know this?

- 10. Standard Socket Sequence [diagram]: The 'server' application calls socket(), bind(), listen(), accept(), read(), write(), close(). The 'client' application calls socket(), bind(), connect(), write(), read(), close(). connect()/accept() correspond to the 3-way handshake, write()/read() to the data flow to the server and then to the client, and close() to the 4-way handshake.
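
The sequence above can be exercised in a single process over loopback. A minimal sketch, assuming 127.0.0.1 is up; `loopback_roundtrip()` is a hypothetical helper, and the client skips the optional bind() so the kernel binds it implicitly during connect().

```c
#include <arpa/inet.h>
#include <netinet/in.h>
#include <string.h>
#include <sys/socket.h>
#include <sys/types.h>
#include <unistd.h>

/* Runs the standard socket sequence on 127.0.0.1 inside one process:
 * the client sends msg, the server echoes it back, and the echoed bytes
 * land in reply. Returns the number of bytes read back, or -1 on error. */
ssize_t loopback_roundtrip(const char *msg, char *reply, size_t reply_len)
{
    struct sockaddr_in addr;
    socklen_t alen = sizeof(addr);
    char buf[256];
    size_t len = strlen(msg);
    ssize_t got, n = -1;
    int srv, cli = -1, conn = -1;

    if (len > sizeof(buf))
        len = sizeof(buf);

    /* Server: socket() -> bind() -> listen() */
    srv = socket(AF_INET, SOCK_STREAM, 0);
    if (srv < 0)
        return -1;
    memset(&addr, 0, sizeof(addr));
    addr.sin_family = AF_INET;
    addr.sin_addr.s_addr = htonl(INADDR_LOOPBACK);  /* port 0: kernel picks one */
    if (bind(srv, (struct sockaddr *)&addr, sizeof(addr)) < 0 ||
        listen(srv, 1) < 0 ||
        getsockname(srv, (struct sockaddr *)&addr, &alen) < 0)
        goto out;

    /* Client: socket() -> connect(). connect() returns once the kernel has
     * finished the 3-way handshake, even before the server calls accept(). */
    cli = socket(AF_INET, SOCK_STREAM, 0);
    if (cli < 0 || connect(cli, (struct sockaddr *)&addr, sizeof(addr)) < 0)
        goto out;

    /* Server: accept() returns a new descriptor for this connection. */
    conn = accept(srv, NULL, NULL);
    if (conn < 0)
        goto out;

    /* Data flow to the server, then back to the client. */
    if (write(cli, msg, len) != (ssize_t)len)
        goto out;
    got = read(conn, buf, sizeof(buf));
    if (got <= 0 || write(conn, buf, (size_t)got) != got)
        goto out;
    n = read(cli, reply, reply_len);
out:
    /* close() on each end sends a FIN: the 4-way handshake. */
    if (conn >= 0) close(conn);
    if (cli >= 0) close(cli);
    close(srv);
    return n;
}
```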

- 11. socket() in kernel ● For every socket created by a user space application, there is a corresponding struct socket and struct sock in the kernel: int socket(int family, int type, int protocol); ● SOCK_STREAM: TCP, SOCK_DGRAM: UDP, SOCK_RAW: raw sockets. ● This system call eventually invokes the sock_create() method in the kernel. ● Key members of struct socket:

  ```c
  struct socket {                         /* ONLY important members */
      socket_state            state;
      unsigned long           flags;
      struct fasync_struct   *fasync_list;
      wait_queue_head_t       wait;
      struct file            *file;
      struct sock            *sk;
      const struct proto_ops *ops;
  };
  ```

- 12. socket queues

- 13. bind() in kernel ● int bind(int sockfd, const struct sockaddr *addr, socklen_t addrlen); ● For AF_INET sockets, this system call eventually invokes the inet_bind() method in the kernel. ● bind() associates a local network transport address with a socket. A client process does not have to call bind(): the kernel binds the socket implicitly when the client issues connect(). ● The kernel function sys_bind() does the following: – sock = sockfd_lookup_light(fd, &err, &fput_needed); – sock->ops->bind(sock, (struct sockaddr *)address, addrlen); ● Point to note: – binding to privileged ports (<1024) requires root privileges (CAP_NET_BIND_SERVICE).
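
Both the explicit and the implicit binding described above can be observed with getsockname(). A sketch, assuming an IPv4 loopback interface; `ephemeral_port()` is a hypothetical helper. Binding to port 0 asks the kernel to choose a free port, and a UDP connect() on an unbound socket makes the kernel pick one without sending any packet.

```c
#include <arpa/inet.h>
#include <netinet/in.h>
#include <string.h>
#include <sys/socket.h>
#include <unistd.h>

/* Returns the local port the kernel assigned to a UDP socket, either after
 * an explicit bind() to port 0 (explicit_bind != 0) or implicitly on
 * connect() (explicit_bind == 0). Returns -1 on error. */
int ephemeral_port(int explicit_bind)
{
    struct sockaddr_in a;
    socklen_t alen = sizeof(a);
    int port = -1;
    int fd = socket(AF_INET, SOCK_DGRAM, 0);

    if (fd < 0)
        return -1;
    memset(&a, 0, sizeof(a));
    a.sin_family = AF_INET;
    a.sin_addr.s_addr = htonl(INADDR_LOOPBACK);

    if (explicit_bind) {
        a.sin_port = 0;                 /* 0 = let the kernel choose */
        if (bind(fd, (struct sockaddr *)&a, sizeof(a)) < 0)
            goto out;
    } else {
        a.sin_port = htons(9);          /* any destination; UDP connect sends nothing */
        if (connect(fd, (struct sockaddr *)&a, sizeof(a)) < 0)
            goto out;                   /* the kernel binds implicitly here */
    }
    if (getsockname(fd, (struct sockaddr *)&a, &alen) == 0)
        port = ntohs(a.sin_port);
out:
    close(fd);
    return port;
}
```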

- 14. listen() in kernel ● int listen(int sockfd, int backlog); ● The backlog argument defines the maximum length to which the queue of pending connections for sockfd may grow. ● Linux uses two queues: a SYN queue (incomplete connection queue) and an accept queue (complete connection queue). Connections in state SYN RECEIVED are added to the SYN queue and moved to the accept queue when their state changes to ESTABLISHED, i.e. when the final ACK of the 3-way handshake is received. accept() then simply consumes connections from the accept queue, and the backlog argument of listen() sets the size of the accept queue. ● The SYN queue size is a system-wide setting: – /proc/sys/net/ipv4/tcp_max_syn_backlog. ● The accept queue size is specified by the application (and capped by /proc/sys/net/core/somaxconn). ● The implementation is in the inet_listen() kernel function.
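
The two-queue design means the kernel completes the handshake without the application's help: clients can connect() before the server ever calls accept(). A sketch over loopback; `fill_then_drain_accept_queue()` and `NCLIENTS` are hypothetical, and the backlog of 8 is simply comfortably larger than the number of clients.

```c
#include <arpa/inet.h>
#include <netinet/in.h>
#include <string.h>
#include <sys/socket.h>
#include <unistd.h>

#define NCLIENTS 3

/* Connects NCLIENTS clients to a listener before calling accept() even once,
 * then drains the accept queue. Returns how many connections accept()
 * returned, or -1 on error. */
int fill_then_drain_accept_queue(void)
{
    struct sockaddr_in a;
    socklen_t alen = sizeof(a);
    int cli[NCLIENTS];
    int srv, i, accepted = -1;

    for (i = 0; i < NCLIENTS; i++)
        cli[i] = -1;
    srv = socket(AF_INET, SOCK_STREAM, 0);
    if (srv < 0)
        return -1;
    memset(&a, 0, sizeof(a));           /* port 0: kernel picks one */
    a.sin_family = AF_INET;
    a.sin_addr.s_addr = htonl(INADDR_LOOPBACK);
    if (bind(srv, (struct sockaddr *)&a, sizeof(a)) < 0 ||
        listen(srv, 8) < 0 ||           /* backlog: accept queue size */
        getsockname(srv, (struct sockaddr *)&a, &alen) < 0)
        goto out;

    /* Each connect() completes the handshake inside the kernel; the
     * established connection then waits in the accept queue. */
    for (i = 0; i < NCLIENTS; i++) {
        cli[i] = socket(AF_INET, SOCK_STREAM, 0);
        if (cli[i] < 0 || connect(cli[i], (struct sockaddr *)&a, sizeof(a)) < 0)
            goto out;
    }

    /* accept() now only consumes already-established connections. */
    accepted = 0;
    for (i = 0; i < NCLIENTS; i++) {
        int c = accept(srv, NULL, NULL);
        if (c < 0) {
            accepted = -1;
            goto out;
        }
        close(c);
        accepted++;
    }
out:
    for (i = 0; i < NCLIENTS; i++)
        if (cli[i] >= 0)
            close(cli[i]);
    close(srv);
    return accepted;
}
```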

- 15. connect() in kernel ● int connect(int sockfd, const struct sockaddr *addr, socklen_t addrlen); ● Calls inet_autobind() to pick an available source port as needed (datagram path; TCP selects its source port in inet_hash_connect()). ● Fills in the destination in inet_sock and calls inet_stream_connect() or inet_dgram_connect() (for IPv4). ● Routing is done by the ip_route_connect() function (L3).
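
connect() is where the kernel routes the SYN and waits for the handshake, so handshake failures surface there. A sketch, assuming loopback; `connect_to_non_listener()` is hypothetical. A port that is bound but never passed to listen() has no listening socket, so the kernel answers the SYN with a RST and connect() fails with ECONNREFUSED.

```c
#include <arpa/inet.h>
#include <errno.h>
#include <netinet/in.h>
#include <string.h>
#include <sys/socket.h>
#include <unistd.h>

/* Tries to connect() to a loopback port that is bound but not listening.
 * Returns the errno from connect() (expected: ECONNREFUSED), 0 if it
 * unexpectedly succeeded, or -1 if the setup failed. */
int connect_to_non_listener(void)
{
    struct sockaddr_in a;
    socklen_t alen = sizeof(a);
    int holder, cli, result = -1;

    /* Reserve a port without calling listen(): nothing accepts SYNs there. */
    holder = socket(AF_INET, SOCK_STREAM, 0);
    if (holder < 0)
        return -1;
    memset(&a, 0, sizeof(a));
    a.sin_family = AF_INET;
    a.sin_addr.s_addr = htonl(INADDR_LOOPBACK);
    if (bind(holder, (struct sockaddr *)&a, sizeof(a)) < 0 ||
        getsockname(holder, (struct sockaddr *)&a, &alen) < 0) {
        close(holder);
        return -1;
    }

    cli = socket(AF_INET, SOCK_STREAM, 0);
    if (cli >= 0) {
        /* The kernel routes the SYN, picks a source port and sends it; the
         * RST that comes back is reported as ECONNREFUSED. */
        result = connect(cli, (struct sockaddr *)&a, sizeof(a)) < 0 ? errno : 0;
        close(cli);
    }
    close(holder);
    return result;
}
```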

- 16. accept() in kernel ● int accept(int sockfd, struct sockaddr *addr, socklen_t *addrlen); ● This system call eventually invokes the inet_accept() method in the kernel.

- 17. TCP 3-way handshake (SYN)

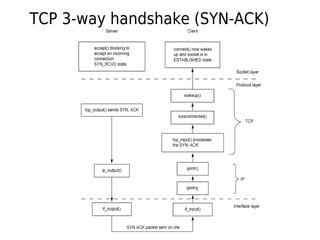

- 18. TCP 3-way handshake (SYN-ACK)

- 19. TCP 3-way handshake (SYN-ACK)

- 20. TCP 3-way handshake (ACK)

- 21. close() in kernel ● int shutdown(int sockfd, int how); ● int close(int sockfd); ● shutdown() can close one direction of the connection (half-duplex, e.g. SHUT_WR) while the other direction stays open. It does not release the socket or purge its queues, so close() must still be called.
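
The half-duplex behaviour of shutdown() can be seen over loopback. A sketch; `half_close_demo()` is a hypothetical helper. After the client calls shutdown(SHUT_WR), the server's read() returns 0 (end of file) while the server can still write to the client, and every descriptor is still released with close() at the end.

```c
#include <arpa/inet.h>
#include <netinet/in.h>
#include <string.h>
#include <sys/socket.h>
#include <unistd.h>

/* Demonstrates a TCP half-close on loopback. The client calls
 * shutdown(SHUT_WR); the server sees EOF (read() == 0) but can still send.
 * Returns 0 if both observations hold, -1 otherwise. */
int half_close_demo(void)
{
    struct sockaddr_in a;
    socklen_t alen = sizeof(a);
    int srv, cli = -1, conn = -1, ok = -1;
    char buf[16];

    srv = socket(AF_INET, SOCK_STREAM, 0);
    if (srv < 0)
        return -1;
    memset(&a, 0, sizeof(a));
    a.sin_family = AF_INET;
    a.sin_addr.s_addr = htonl(INADDR_LOOPBACK);
    if (bind(srv, (struct sockaddr *)&a, sizeof(a)) < 0 || listen(srv, 1) < 0 ||
        getsockname(srv, (struct sockaddr *)&a, &alen) < 0)
        goto out;
    cli = socket(AF_INET, SOCK_STREAM, 0);
    if (cli < 0 || connect(cli, (struct sockaddr *)&a, sizeof(a)) < 0)
        goto out;
    conn = accept(srv, NULL, NULL);
    if (conn < 0)
        goto out;

    if (shutdown(cli, SHUT_WR) < 0)             /* client sends FIN */
        goto out;
    if (read(conn, buf, sizeof(buf)) != 0)      /* server reads EOF */
        goto out;
    if (write(conn, "bye", 3) != 3)             /* other direction still open */
        goto out;
    if (read(cli, buf, sizeof(buf)) == 3 && memcmp(buf, "bye", 3) == 0)
        ok = 0;
out:
    /* shutdown() released nothing: every descriptor still needs close(). */
    if (conn >= 0) close(conn);
    if (cli >= 0) close(cli);
    close(srv);
    return ok;
}
```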

- 22. Network stack architecture [diagram]: User space: Application. Kernel: socket interface (kernel socket layer) → protocol layers: PF_INET (SOCK_STREAM → TCP, SOCK_DGRAM → UDP, SOCK_RAW; all over IPv4), PF_PACKET (SOCK_RAW, SOCK_DGRAM), PF_UNIX, PF_... → network device layer → device layer drivers (Intel E1000 Ethernet, PPP, WLAN, ...) → hardware.

- 23. Socket Data Structures ● For every socket created by a user space application, there is a corresponding struct socket and struct sock in the kernel. ● struct socket: include/linux/net.h – data common to the BSD socket layer – has only 8 members – by convention, a variable named “sock” refers to a struct socket ● struct sock: include/net/sock.h – data common to the network protocol layer (e.g., AF_INET) – by convention, a variable named “sk” refers to a struct sock.

- 24. AF Interface ● Main data structures – struct net_proto_family – struct proto_ops ● Key function: sock_register(struct net_proto_family *ops) ● Each address family: – implements struct net_proto_family – calls sock_register() when the protocol family is initialized – implements struct proto_ops, which binds the BSD socket layer to the protocol family layer.

- 25. INET and PACKET proto_family

  ```c
  /* af_inet.c */
  static const struct net_proto_family inet_family_ops = {
      .family = PF_INET,
      .create = inet_create,
      .owner  = THIS_MODULE,
  };

  /* af_packet.c */
  static const struct net_proto_family packet_family_ops = {
      .family = PF_PACKET,
      .create = packet_create,
      .owner  = THIS_MODULE,
  };
  ```

- 26. PF_INET proto_ops (net/ipv4/af_inet.c)

  | Member | inet_stream_ops (TCP) | inet_dgram_ops (UDP) | inet_sockraw_ops (RAW) |
  |---|---|---|---|
  | .family | PF_INET | PF_INET | PF_INET |
  | .owner | THIS_MODULE | THIS_MODULE | THIS_MODULE |
  | .release | inet_release | inet_release | inet_release |
  | .bind | inet_bind | inet_bind | inet_bind |
  | .connect | inet_stream_connect | inet_dgram_connect | inet_dgram_connect |
  | .socketpair | sock_no_socketpair | sock_no_socketpair | sock_no_socketpair |
  | .accept | inet_accept | sock_no_accept | sock_no_accept |
  | .getname | inet_getname | inet_getname | inet_getname |
  | .poll | tcp_poll | udp_poll | datagram_poll |
  | .ioctl | inet_ioctl | inet_ioctl | inet_ioctl |
  | .listen | inet_listen | sock_no_listen | sock_no_listen |
  | .shutdown | inet_shutdown | inet_shutdown | inet_shutdown |
  | .setsockopt | sock_common_setsockopt | sock_common_setsockopt | sock_common_setsockopt |
  | .getsockopt | sock_common_getsockopt | sock_common_getsockopt | sock_common_getsockopt |
  | .sendmsg | tcp_sendmsg | inet_sendmsg | inet_sendmsg |
  | .recvmsg | sock_common_recvmsg | sock_common_recvmsg | sock_common_recvmsg |
  | .mmap | sock_no_mmap | sock_no_mmap | sock_no_mmap |
  | .sendpage | tcp_sendpage | inet_sendpage | inet_sendpage |
  | .splice_read | tcp_splice_read | -- | -- |
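
The table works as a dispatch table: the generic socket layer calls through sock->ops without knowing the protocol. A userspace toy model of that idea; every `toy_*` name is hypothetical and the structs are stripped-down stand-ins, not the kernel's definitions. The datagram entry mirrors sock_no_listen(), which returns -EOPNOTSUPP.

```c
#include <errno.h>

/* Toy stand-ins for struct socket / struct proto_ops (hypothetical). */
struct toy_socket;

struct toy_proto_ops {
    const char *name;
    int (*listen)(struct toy_socket *sock, int backlog);
};

struct toy_socket {
    const struct toy_proto_ops *ops;   /* chosen once, at socket creation */
};

static int toy_inet_listen(struct toy_socket *sock, int backlog)
{
    (void)sock;
    return backlog;                    /* pretend success: echo the backlog */
}

/* Like sock_no_listen(): the operation is not supported for this type. */
static int toy_sock_no_listen(struct toy_socket *sock, int backlog)
{
    (void)sock;
    (void)backlog;
    return -EOPNOTSUPP;
}

const struct toy_proto_ops toy_stream_ops = { "stream", toy_inet_listen };
const struct toy_proto_ops toy_dgram_ops  = { "dgram",  toy_sock_no_listen };

/* The generic layer never checks the socket type; it calls through the
 * ops table, the way sys_listen() calls sock->ops->listen(). */
int toy_sys_listen(struct toy_socket *sock, int backlog)
{
    return sock->ops->listen(sock, backlog);
}
```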

- 27. udp_prot (net/ipv4/udp.c)

  ```c
  struct proto udp_prot = {
      .name              = "UDP",
      .owner             = THIS_MODULE,
      .close             = udp_lib_close,
      .connect           = ip4_datagram_connect,
      .disconnect        = udp_disconnect,
      .ioctl             = udp_ioctl,
      .destroy           = udp_destroy_sock,
      .setsockopt        = udp_setsockopt,
      .getsockopt        = udp_getsockopt,
      .sendmsg           = udp_sendmsg,
      .recvmsg           = udp_recvmsg,
      .sendpage          = udp_sendpage,
      .backlog_rcv       = __udp_queue_rcv_skb,
      .hash              = udp_lib_hash,
      .unhash            = udp_lib_unhash,
      .get_port          = udp_v4_get_port,
      .memory_allocated  = &udp_memory_allocated,
      .sysctl_mem        = sysctl_udp_mem,
      .sysctl_wmem       = &sysctl_udp_wmem_min,
      .sysctl_rmem       = &sysctl_udp_rmem_min,
      .obj_size          = sizeof(struct udp_sock),
      .slab_flags        = SLAB_DESTROY_BY_RCU,
      .h.udp_table       = &udp_table,
  #ifdef CONFIG_COMPAT
      .compat_setsockopt = compat_udp_setsockopt,
      .compat_getsockopt = compat_udp_getsockopt,
  #endif
  };
  ```

- 28. Relationship [diagram]: struct socket (state, type, flags, fasync_list, wait, file, sk, ops, ...) points through sk to struct sock (sk_common, sk_lock, sk_backlog, (*sk_prot_creator), sk_socket, sk_send_head, ...), and sk_socket points back to the struct socket. struct sock embeds struct sock_common (skc_node, skc_refcnt, skc_hash, skc_prot, skc_net, ...). The socket's ops points to struct proto_ops (PF_INET, af_inet.c: inet_release, inet_bind, inet_accept, ...); skc_prot points to struct proto (udp_lib_close, ip4_datagram_connect, udp_sendmsg, udp_recvmsg, ...).

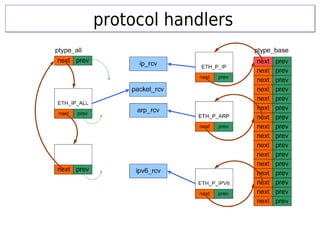

- 30. Key structure: packet_type

  ```c
  struct packet_type {
      unsigned short     type;    /* htons(ether_type) */
      struct net_device *dev;     /* NULL means all devices */
      int              (*func)(...); /* handler address */
      void              *data;    /* private data */
      struct list_head   list;
  };
  ```

  There are two exported kernel functions for adding and removing handlers: void dev_add_pack(struct packet_type *pt) and void dev_remove_pack(struct packet_type *pt).
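
A userspace toy model of how registered packet_type handlers are matched against an incoming frame's EtherType. All `toy_*` names are hypothetical; the real kernel keeps catch-all (ETH_P_ALL) handlers on a separate list from type-specific ones, which this sketch collapses into one array.

```c
#include <stddef.h>
#include <stdint.h>

/* Toy model of the handler list that dev_add_pack() maintains (hypothetical). */
#define TOY_ETH_P_ALL    0x0003   /* same value as ETH_P_ALL: match every type */
#define TOY_MAX_HANDLERS 8

struct toy_packet_type {
    uint16_t type;                                   /* EtherType, host order here */
    int (*func)(const uint8_t *frame, size_t len, void *data);
    void *data;                                      /* private data */
};

static struct toy_packet_type *toy_ptypes[TOY_MAX_HANDLERS];
static size_t toy_nptypes;

int toy_dev_add_pack(struct toy_packet_type *pt)
{
    if (toy_nptypes == TOY_MAX_HANDLERS)
        return -1;
    toy_ptypes[toy_nptypes++] = pt;
    return 0;
}

/* Delivers a frame to every handler whose type matches, or that asked for
 * all types. Returns the number of handlers that received it. */
int toy_deliver(uint16_t ethertype, const uint8_t *frame, size_t len)
{
    int delivered = 0;
    for (size_t i = 0; i < toy_nptypes; i++) {
        struct toy_packet_type *pt = toy_ptypes[i];
        if (pt->type == ethertype || pt->type == TOY_ETH_P_ALL) {
            pt->func(frame, len, pt->data);
            delivered++;
        }
    }
    return delivered;
}

/* Example handler: counts frames into the int that data points to. */
int toy_count_handler(const uint8_t *frame, size_t len, void *data)
{
    (void)frame;
    (void)len;
    (*(int *)data)++;
    return 0;
}
```

With one handler registered for 0x0800 (IPv4) and one for TOY_ETH_P_ALL, an IPv4 frame reaches both handlers while an ARP frame (0x0806) reaches only the catch-all one.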

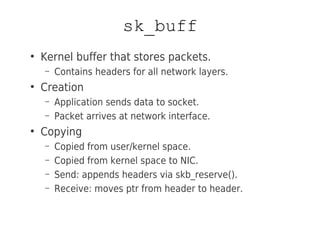

- 32. sk_buff ● Kernel buffer that stores packets. – Contains headers for all network layers. ● Creation – The application sends data to a socket. – A packet arrives at the network interface. ● Copying – Copied from user space to kernel space. – Copied from kernel space to the NIC. – Send: headroom is reserved with skb_reserve(), and each layer prepends its header with skb_push(). – Receive: the data pointer moves from header to header (skb_pull()).

- 33. sk_buff (cont...) ● sk_buff represents data and headers. ● sk_buff API (examples) – allocation is done with alloc_skb() or dev_alloc_skb(); drivers use dev_alloc_skb(). – Freeing is done with kfree_skb() and dev_kfree_skb(). ● unsigned char *data: points to the current header. ● skb_pull(skb, len): removes data from the start of a buffer by advancing data to data + len and decreasing len. ● sk_buff instances almost always appear as “skb” in kernel code.

- 34. sk_buff functions ● skb_headroom(), skb_tailroom() – Prototype: int skb_headroom(const struct sk_buff *skb); Description: bytes free at the buffer head. – Prototype: int skb_tailroom(const struct sk_buff *skb); Description: bytes free at the buffer tail.

- 35. sk_buff functions ● skb_reserve() Prototype: void skb_reserve(struct sk_buff *skb, unsigned int len); Description: adjust headroom, i.e. reserve space at the front of an empty buffer for headers. When setting up receive buffers that an Ethernet device will DMA into, drivers call skb_reserve(skb, NET_IP_ALIGN) so that the protocol header following the Ethernet header is aligned on at least a 4-byte boundary.

- 36. sk_buff functions ● skb_push() Prototype: unsigned char *skb_push(struct sk_buff *skb, unsigned int len); Description: add data to the start of a buffer. skb_push() decrements skb->data and increments skb->len, e.g. when adding the Ethernet header in front of the IP and TCP headers.

- 37. sk_buff functions ● skb_pull() Prototype unsigned char *skb_pull(struct sk_buff *skb, unsigned int len); Description remove data from the start of a buffer

- 38. sk_buff functions ● skb_put() Prototype: unsigned char *skb_put(struct sk_buff *skb, unsigned int len); Description: add data to a buffer. skb_put() advances skb->tail by the specified number of bytes and increments skb->len by the same amount. Make sure enough tailroom is available, otherwise skb_over_panic() is triggered.

- 39. sk_buff functions ● skb_trim() Prototype: void skb_trim(struct sk_buff *skb, unsigned int len); Description: remove data from the end of a buffer, cutting it down to len bytes.
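
The sk_buff functions above are pointer arithmetic on four pointers (head, data, tail, end). A userspace toy model, assuming a single linear buffer; the `toy_*` names are hypothetical and leave out everything else the real sk_buff carries (shared info, paged data, reference counting). The asserts stand in for the kernel's skb_over_panic()/skb_under_panic().

```c
#include <assert.h>
#include <stdlib.h>

/* Userspace toy model of sk_buff pointer arithmetic. NOT the kernel API. */
struct toy_skb {
    unsigned char *head, *data, *tail, *end;
    unsigned int len;                    /* bytes between data and tail */
};

int toy_alloc_skb(struct toy_skb *skb, unsigned int size)
{
    skb->head = calloc(1, size);
    if (!skb->head)
        return -1;
    skb->data = skb->tail = skb->head;   /* empty buffer: all tailroom */
    skb->end = skb->head + size;
    skb->len = 0;
    return 0;
}

unsigned int toy_headroom(const struct toy_skb *skb)
{
    return (unsigned int)(skb->data - skb->head);
}

unsigned int toy_tailroom(const struct toy_skb *skb)
{
    return (unsigned int)(skb->end - skb->tail);
}

/* Only valid on an empty buffer: moves data and tail forward together. */
void toy_reserve(struct toy_skb *skb, unsigned int len)
{
    assert(skb->len == 0 && toy_tailroom(skb) >= len);
    skb->data += len;
    skb->tail += len;
}

/* Appends len bytes at the tail (payload); returns where to write them. */
unsigned char *toy_put(struct toy_skb *skb, unsigned int len)
{
    unsigned char *old_tail = skb->tail;
    assert(toy_tailroom(skb) >= len);    /* kernel: skb_over_panic() */
    skb->tail += len;
    skb->len += len;
    return old_tail;
}

/* Prepends len bytes in front of data (a lower-layer header). */
unsigned char *toy_push(struct toy_skb *skb, unsigned int len)
{
    assert(toy_headroom(skb) >= len);    /* kernel: skb_under_panic() */
    skb->data -= len;
    skb->len += len;
    return skb->data;
}

/* Strips len bytes from the front, as the receive path does per header. */
unsigned char *toy_pull(struct toy_skb *skb, unsigned int len)
{
    if (len > skb->len)
        return NULL;
    skb->len -= len;
    return skb->data += len;
}

/* Cuts the buffer down to len bytes by moving tail back. */
void toy_trim(struct toy_skb *skb, unsigned int len)
{
    if (len < skb->len) {
        skb->len = len;
        skb->tail = skb->data + len;
    }
}
```

A send path would reserve headroom, put the payload, then push the TCP (20 bytes), IP (20 bytes) and Ethernet (14 bytes) headers in front of it. The same arithmetic explains skb_reserve(skb, NET_IP_ALIGN) on receive: if NET_IP_ALIGN is 2, the 14-byte Ethernet header ends at offset 16, a multiple of 4, so the IP header starts aligned.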

- 40. Network device drivers ● net_device registration ● hard_start_xmit function pointer ● Interrupt handler for packet reception ● Bus interaction (e.g. PCI) ● NAPI context

- 41. net_device structure ● net_device represents a network interface card. ● It is used to represent physical or virtual devices, e.g. loopback devices, or bonding devices used for load balancing or high availability. ● Device-specific state is implemented using the private data of the device (the void *priv member of net_device).

- 42. net_device structure (cont...) ● unsigned int mtu – Maximum Transmission Unit: the maximum size of frame the device can handle. ● unsigned int flags, dev_addr[6]. ● void *ip_ptr: IPv4-specific data. This pointer is assigned a pointer to struct in_device in inetdev_init() (net/ipv4/devinet.c). ● struct in_device: contains a member named cnf (an instance of struct ipv4_devconf) that holds per-device IPv4 settings, e.g. the value set through /proc/sys/net/ipv4/conf/all/forwarding.

- 43. Packet Transmission ● The TCP/IP stack calls dev_queue_xmit() to queue the packet in the device queue. ● The device driver registers its Tx handler as the hard_start_xmit() function pointer. ● This function hands the packet to the hardware for transmission over the wire or air; the hardware signals completion later, typically through an interrupt. ● This completion is generally used to free the sk_buff associated with the packet.

- 44. Packet Transmission (cont...) ● The route for an outgoing packet is looked up with ip_route_output_key(), so routing also takes part in transmission. ● If the packet is for a remote host, dst->output is set to ip_output(). ● ip_output() passes the packet through the NF_IP_POST_ROUTING hook and then calls ip_finish_output().

- 45. Packet Reception ● In the interrupt-driven model, the NIC driver registers an interrupt handler for the device's IRQ by calling request_irq(). ● This interrupt handler is called when a frame is received. ● The same handler is also called when transmission of a frame has finished and under other conditions such as errors. ● The interrupt handler should therefore verify the interrupt cause. ● Control is transferred to the TCP/IP stack using netif_rx() or netif_rx_ni().

- 46. Packet Reception (cont...) ● In the interrupt handler, an sk_buff is allocated by calling dev_alloc_skb(), and eth_type_trans() is called; it sets skb->protocol and advances the sk_buff data pointer past the Ethernet header (skb_pull(skb, ETH_HLEN)) so that it points to the IP header.

- 47. Network Packets Handling

- 48. Physical (Ethernet) [L1] ● The NIC generates an interrupt request (IRQ). ● The card driver's interrupt service routine (ISR) disables interrupts, then: – allocates a new sk_buff structure – fetches the packet data from the card buffer into the freshly allocated sk_buff (using DMA) – invokes netif_rx() – when netif_rx() returns, interrupts are re-enabled and the ISR terminates.

- 49. The picture: journey of a packet [diagram]: Ethernet driver (low-level packet Rx) → netif_rx() → deferred packet reception, net_rx_action() → layer 3 processing: ip_rcv() (AF_INET), packet_rcv() (AF_PACKET), other *_rcv() → TCP/UDP/ICMP processing: tcp_rcv(), udp_rcv(), icmp_rcv() → socket level: data_ready() → receiving process, woken with wake_up_interruptible().

- 50. TCP/IP stack ● Minimize copying ● Zero-copy techniques ● Page remapping ● Branch optimization ● Avoid process migration and cache misses ● Avoid dynamic assignment of interrupts to different CPUs ● Combine operations within the same layer to minimize passes over the data

- 52. Wifi Programming Steps for programming the wireless extensions: • Open a network socket (PF_INET, SOCK_DGRAM). • Set up the wireless request using struct iwreq: set the device name, set the wireless request data, set the sub-ioctl number (subioctl_no). • Invoke the device ioctl. • Wait for the response [blocking call]. • Wireless events are received over a netlink socket (PF_NETLINK).
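
The steps above map onto a few lines of C with the Wireless Extensions header. A sketch, assuming <linux/wireless.h> is installed; `wext_get_name()` is hypothetical, and SIOCGIWNAME is used only because it is the simplest request. Wireless Extensions are the legacy interface: current drivers are configured through nl80211/cfg80211, which keeps a WEXT compatibility layer.

```c
#include <linux/wireless.h>
#include <string.h>
#include <sys/ioctl.h>
#include <sys/socket.h>
#include <unistd.h>

/* Follows the steps with the legacy Wireless Extensions API: open a
 * PF_INET/SOCK_DGRAM socket, fill struct iwreq with the device name, and
 * issue SIOCGIWNAME. On success, proto receives the protocol name reported
 * by the driver. proto_len must be at least 1. Returns 0 on success, -1 if
 * the interface is missing or has no wireless extensions. */
int wext_get_name(const char *ifname, char *proto, size_t proto_len)
{
    struct iwreq wrq;
    int ret = -1;
    int fd = socket(PF_INET, SOCK_DGRAM, 0);

    if (fd < 0)
        return -1;
    memset(&wrq, 0, sizeof(wrq));
    strncpy(wrq.ifr_ifrn.ifrn_name, ifname, IFNAMSIZ - 1);   /* device name */

    if (ioctl(fd, SIOCGIWNAME, &wrq) == 0) {                  /* blocking call */
        /* u.name is IFNAMSIZ bytes and may not be NUL-terminated. */
        size_t n = strnlen(wrq.u.name, IFNAMSIZ);
        if (n >= proto_len)
            n = proto_len - 1;
        memcpy(proto, wrq.u.name, n);
        proto[n] = '\0';
        ret = 0;
    }
    close(fd);
    return ret;
}
```

Called on a non-wireless interface such as "lo", the ioctl fails and the function returns -1.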

- 53. Wifi kernel handling Kernel space handling: * The kernel ioctl handler transfers control to the ioctl handler of the wireless device driver. * The driver invokes the appropriate wireless extension call based on the ioctl command. * The wireless extension call transfers control to the wireless firmware using a special command interface over the USB/SDIO/MMC bus. * The wireless driver can receive events from the firmware (e.g. Link_Loss event).

- 54. Driver firmware interface What is firmware? * Firmware is wireless networking software that runs on the wireless chipset. * The wireless device driver downloads the firmware to the wireless chipset upon initialization. * All low-level wireless operations are performed by the firmware. * It works in two modes: synchronous (request/response protocol) and asynchronous (events from the FW). * The firmware resides in /lib/firmware/, e.g. /lib/firmware/iwl-3945.ucode

- 55. Need for NGW ● Next Generation Wireless ● Centralized control for all wireless work ● Drivers implement a small set of configuration methods ● Semantics follow the flows in the IEEE specifications ● Various modes of operation: Station, AP, Monitor, IBSS, WDS, Mesh, P2P

- 56. mac80211, cfg80211 ● mac80211 is a Linux kernel subsystem. ● It implements shared code for SoftMAC and half-MAC devices. ● It contains the MLME (Media Access Control (MAC) Layer Management Entity): Authenticate, Deauthenticate, Associate, Disassociate, Reassociate, Beacon, Probe. ● cfg80211 is the layer between user space and mac80211.
