
Getting Started with IBM Tivoli Workload Scheduler V8.3 (SG24-7237)

Front cover

Getting Started with IBM Tivoli Workload Scheduler V8.3
Best Practices and Performance Improvements

- Experiment with Tivoli Workload Scheduler 8.3 migration scenarios
- Practice with Tivoli Workload Scheduler 8.3 performance tuning
- Learn new JSC architecture and DB2-based object database

Vasfi Gucer, Carl Buehler, Matt Dufner, Clinton Easterling, Pete Soto, Douglas Gibbs, Anthony Yen, Warren Gill, Carlos Wagner Dias, Natalia Jojic, Doroti Almeida Dias Garcia, Rick Jones

ibm.com/redbooks

International Technical Support Organization

Getting Started with IBM Tivoli Workload Scheduler V8.3: Best Practices and Performance Improvements

October 2006

SG24-7237-00

Note: Before using this information and the product it supports, read the information in “Notices” on page xxvii.

First Edition (October 2006)

This edition applies to IBM Tivoli Workload Scheduler Version 8.3.

© Copyright International Business Machines Corporation 2006. All rights reserved.

Note to U.S. Government Users Restricted Rights -- Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp.

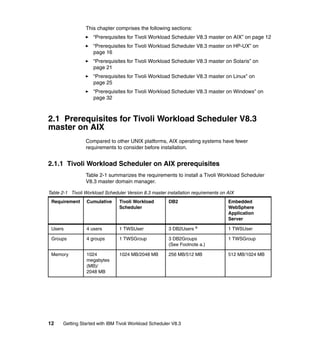

Contents

- Figures
- Tables
- Examples
- Notices
- Trademarks
- Preface
- The team that wrote this redbook
- Become a published author
- Comments welcome
- Chapter 1. Introduction to Tivoli Workload Scheduler V8.3
- 1.1 In the beginning: Running computer tasks
- 1.2 Introduction to Job Scheduler
- 1.3 Common Job Scheduler terminology
- 1.4 Overview of Tivoli Workload Scheduler
- 1.5 Tivoli Workload Scheduler strengths
- 1.6 New features in Tivoli Workload Scheduler V8.3
- 1.6.1 Improved infrastructure with WebSphere and DB2 or Oracle
- 1.6.2 New J2EE Web services API
- 1.6.3 Advanced planning capabilities: JnextPlan replaces JNextDay
- 1.6.4 Job Scheduling Console GUI enhancements
- 1.6.5 Installation and upgrade facility
- 1.7 Conclusion
- Chapter 2. Tivoli Workload Scheduler V8.3: Master prerequisites
- 2.1 Prerequisites for Tivoli Workload Scheduler V8.3 master on AIX
- 2.1.1 Tivoli Workload Scheduler on AIX prerequisites
- 2.1.2 DB2 V8.2 on AIX prerequisites
- 2.1.3 AIX prerequisites for WebSphere Application Server 6.0.2 Embedded Express
- 2.2 Prerequisites for Tivoli Workload Scheduler V8.3 master on HP-UX
- 2.2.1 Tivoli Workload Scheduler on HP-UX prerequisites
- 2.2.2 DB2 V8.2 on HP-UX prerequisites
- 2.2.3 HP-UX prerequisites for WebSphere Application Server 6.0.2 Embedded Express

- 2.3 Prerequisites for Tivoli Workload Scheduler V8.3 master on Solaris
- 2.3.1 Tivoli Workload Scheduler on Solaris prerequisites
- 2.3.2 DB2 8.2 and 8.2.2 on Solaris prerequisites
- 2.3.3 Solaris prerequisites for WebSphere Application Server 6.0.2 Embedded Express
- 2.4 Prerequisites for Tivoli Workload Scheduler V8.3 master on Linux
- 2.4.1 Tivoli Workload Scheduler on Linux prerequisites
- 2.4.2 DB2 V8.2 on Linux prerequisites
- 2.4.3 Linux prerequisites for WebSphere Application Server 6.0.2 Embedded Express
- 2.5 Prerequisites for Tivoli Workload Scheduler V8.3 master on Windows
- 2.5.1 DB2 8.2 on Windows prerequisites
- 2.5.2 Windows prerequisites for WebSphere Application Server 6.0.2 Embedded Express
- Chapter 3. Tivoli Workload Scheduler V8.3: New installation
- 3.1 Overview of the installation
- 3.2 Installing new UNIX master using installation wizard
- 3.2.1 Installation procedure
- 3.2.2 Installing the Job Scheduling Console
- 3.2.3 Creating scheduling objects for the new master
- 3.3 Installing new UNIX fault-tolerant agent using installation wizard
- 3.4 Installing a Windows fault-tolerant agent
- 3.5 Installing the Tivoli Workload Scheduler command line client
- Chapter 4. Tivoli Workload Scheduler V8.3: Migration
- 4.1 Prerequisites for the upgrade
- 4.2 Overview of the upgrade
- 4.3 Before the upgrade
- 4.3.1 Data import methods
- 4.3.2 Extracting Tivoli Management Framework user data from the security file
- 4.3.3 Dumping existing objects from the database
- 4.4 Upgrading Tivoli Workload Scheduler V8.1: Parallel upgrade
- 4.4.1 Dumping existing objects from the database
- 4.4.2 Extracting Tivoli Framework user data from the security file
- 4.4.3 Installing a new master domain manager
- 4.4.4 Importing the migrated security file in the Tivoli Workload Scheduler V8.3 environment
- 4.4.5 Importing the object data
- 4.4.6 Switching a master domain manager
- 4.4.7 Upgrading the backup master domain manager
- 4.4.8 Upgrading agents

- 4.4.9 Unlinking and stopping Tivoli Workload Scheduler when upgrading agent workstations
- 4.5 Upgrading Tivoli Workload Scheduler V8.1: Direct upgrade
- 4.5.1 Upgrading a master domain manager
- 4.5.2 Importing the object data
- 4.5.3 Configuring the upgraded master domain manager
- 4.5.4 Upgrading the backup master domain manager
- 4.5.5 Importing the migrated security file in the Tivoli Workload Scheduler V8.3 environment
- 4.5.6 Upgrading agents
- 4.5.7 Installing the Job Scheduling Console
- 4.6 Upgrading Tivoli Workload Scheduler V8.2: Parallel upgrade
- 4.6.1 Dumping existing objects from the database
- 4.6.2 Extracting Tivoli Framework user data from the security file
- 4.6.3 Installing a new master domain manager
- 4.6.4 Importing the migrated security file in the Tivoli Workload Scheduler V8.3 environment
- 4.6.5 Importing the object data
- 4.6.6 Switching a master domain manager
- 4.6.7 Upgrading agents
- 4.6.8 Upgrading Windows 2003 agent using the installation wizard
- 4.7 Upgrading Tivoli Workload Scheduler V8.2: Direct upgrade
- 4.7.1 Upgrading a master domain manager
- 4.7.2 Importing the object data
- 4.7.3 Configuring the upgraded master domain manager
- 4.7.4 Upgrading the backup master domain manager
- 4.7.5 Importing the migrated security file in the Tivoli Workload Scheduler V8.3 environment
- 4.7.6 Upgrading agents
- 4.7.7 Installing the Job Scheduling Console
- 4.8 Upgrading Tivoli Workload Scheduler V8.2.1: Parallel upgrade
- 4.8.1 Dumping existing objects from the database
- 4.8.2 Extracting Tivoli Framework user data from security file
- 4.8.3 Installing a new master domain manager
- 4.8.4 Importing the migrated security file in the Tivoli Workload Scheduler V8.3 environment
- 4.8.5 Importing the object data
- 4.8.6 Switching a master domain manager
- Chapter 5. DB2, WebSphere, and Lightweight Directory Access Protocol: Considerations
- 5.1 An overview of DB2 Universal Database
- 5.1.1 Deployment topologies

- 5.1.2 Database objects
- 5.1.3 Parallelism
- 5.2 DB2 Universal Database installation considerations
- 5.3 Creating a DB2 Universal Database instance
- 5.3.1 Preparing for an instance creation
- 5.3.2 Creating the instance
- 5.4 Creating a DB2 Universal Database
- 5.5 Backing up the database
- 5.6 Restoring a backup of the database
- 5.7 Referential integrity
- 5.8 Object and database locking
- 5.8.1 JSC locking features
- 5.8.2 Composer locking features
- 5.9 DB2 maintenance
- 5.10 WebSphere Application Server
- 5.11 Installing multiple application service instances
- 5.11.1 Starting the application server
- 5.11.2 Stopping the application server
- 5.11.3 Checking the application server status
- 5.11.4 Changing the trace properties
- 5.11.5 Backing up the application server configuration
- 5.11.6 Restoring the application server configuration
- 5.11.7 Viewing the data source properties
- 5.11.8 Changing data source properties
- 5.11.9 Viewing the host properties
- 5.11.10 Changing the host properties
- 5.11.11 Viewing the security properties
- 5.11.12 Changing the security properties
- 5.11.13 Encrypting the profile properties
- 5.12 Lightweight Directory Access Protocol
- 5.12.1 Loading the user account details into the LDAP server
- 5.12.2 Creating the properties file
- 5.12.3 Updating the Tivoli Workload Scheduler security file
- 5.12.4 Using the Tivoli Workload Scheduler V8.3 command line utilities
- 5.12.5 Using the Job Scheduling Console
- 5.13 Configuring the application server to start and stop without a password
- 5.14 Network configuration for Job Scheduling Console
- Chapter 6. Job Scheduling Console V8.3
- 6.1 Job Scheduling Console architecture
- 6.2 Job Scheduling Console: Changes and new features

- 6.2.1 Job Scheduling Console plan changes
- 6.2.2 Database object definitions changes
- 6.3 Managing exclusive locking on database objects
- 6.4 Known problems and issues
- Chapter 7. Planning your workload
- 7.1 Overview of production day
- 7.1.1 Identifying the start of the production day
- 7.1.2 Identifying the length of the production day
- 7.2 Plan cycle versus run cycle
- 7.3 Preproduction plan
- 7.4 Schedtime
- 7.5 Creating the production day
- Chapter 8. Tuning Tivoli Workload Scheduler for best performance
- 8.1 Global options parameters
- 8.2 Comparison of different versions of global options
- 8.3 Local options parameters
- 8.4 Comparison of different versions of local options
- 8.5 Tivoli Workload Scheduler process flow
- 8.6 Scenarios for this book
- Chapter 9. Application programming interface
- 9.1 Introduction to application programming interfaces
- 9.2 Getting started
- 9.3 Starting with the basics
- 9.3.1 Finding the API
- 9.3.2 Web Services Description Language templates
- Chapter 10. Tivoli Workload Scheduler V8.3: Troubleshooting
- 10.1 Upgrading your whole environment
- 10.2 Keeping up-to-date fix packs
- 10.3 Built-in troubleshooting features
- 10.4 Installation logs
- 10.4.1 InstallShield wizard installation and uninstallation logs
- 10.4.2 TWSINST logs
- 10.4.3 Software package block logs
- 10.4.4 DB2 installation logs
- 10.4.5 Installation logs for embedded version of WebSphere Application Server - Express
- 10.5 Recovering failed interactive InstallShield wizard installation
- 10.6 JnextPlan problems
- Chapter 11. Making Tivoli Workload Scheduler highly available with Tivoli System Automation
- 11.1 Introduction to IBM Tivoli System Automation for Multiplatforms
- 11.2 High-availability scenarios
- 11.2.1 Scenario 1: Passive-active failover
- 11.2.2 Scenario 2: Switching domain managers
- 11.2.3 Scenario 3: Fault-tolerant agent
- 11.2.4 Scenario 4: Tivoli System Automation end-to-end managing multiple Tivoli Workload Scheduler clusters
- 11.2.5 Scenario 5: Tivoli Workload Scheduler Managing Tivoli System Automation end-to-end
- 11.3 Implementing high availability for the Tivoli Workload Scheduler master domain manager
- 11.3.1 Prerequisites
- 11.3.2 Overview of the installation
- 11.4 Installing and configuring DB2
- 11.5 Installing and configuring Tivoli System Automation
- 11.6 Configuring DB2 and Tivoli System Automation
- 11.7 Installing and automating Tivoli Workload Scheduler
- 11.7.1 Installing Tivoli Workload Scheduler on the available environment
- 11.7.2 Preparing Tivoli Workload Scheduler for failover
- 11.7.3 Configuring Tivoli System Automation policies for Tivoli Workload Scheduler
- 11.8 Testing the installation
- Appendix A. Tivoli Workload Scheduler Job Notification Solution
  - Solution design
  - Requirements
  - Event flow
  - Solution deployment
  - Deploying the solution
  - Deploying directory structure
  - Solution schedules and jobs
  - Solution configuration
  - Creating the tws_event_mon.csv file
  - Configuring job abend notification
  - Configuring job done notification
  - Configuring job deadline notification
  - Configuring start deadline notification
  - Configuring job running long notification
  - Return code mapping
  - Solution monitoring
  - Solution regression testing

  - CSV configuration
  - TWSMD_JNS2 schedule
  - TWSMD_JNS3-01 schedule
  - Solution troubleshooting
  - Tivoli Workload Scheduler infrastructure considerations
  - Tivoli Workload Scheduler production control considerations
  - Frequently asked questions
  - CSV configuration
  - Scripts
  - Procedures
  - Solution procedures
  - Verifying notification completion
- Related publications
  - IBM Redbooks
  - Other publications
  - Online resources
  - How to get IBM Redbooks
  - Help from IBM
- Index


Figures

- 3-1 Setup command
- 3-2 Initializing the installation wizard
- 3-3 Language preference window
- 3-4 Tivoli Workload Scheduler V8.3 welcome window
- 3-5 License agreement window
- 3-6 Installing a new instance
- 3-7 Selecting the type of Tivoli Workload Scheduler instance V8.3
- 3-8 Entering the Tivoli Workload Scheduler user name and password
- 3-9 Error message when Tivoli Workload Scheduler user does not exist
- 3-10 Configuring the workstation
- 3-11 Tivoli Workload Scheduler instance port specifications
- 3-12 The Tivoli Workload Scheduler installation directory
- 3-13 Selecting the DB2 installation type
- 3-14 DB2 users, password, and default port
- 3-15 Error message showing the port is in use by a service
- 3-16 DB2 instance options
- 3-17 Warning message showing the DB2 user does not exist
- 3-18 Tivoli Workload Scheduler installation options summary
- 3-19 List of the operations to be performed
- 3-20 Default DB2 path window
- 3-21 DB2 software location path
- 3-22 DB2 installation progress bar
- 3-23 The Tivoli Workload Scheduler installation progress bar
- 3-24 Installation completed
- 3-25 Example of a failed installation
- 3-26 Installation error log
- 3-27 Reclassifying the step
- 3-28 New step status and restarting the installation
- 3-29 Job Scheduling Console setup icon
- 3-30 Installation wizard initialization process
- 3-31 Selecting the language
- 3-32 The Job Scheduling Console welcome window
- 3-33 License agreement
- 3-34 Specifying the Job Scheduling Console installation path
- 3-35 Selecting the Job Scheduling Console shortcut icons
- 3-36 The Job Scheduling Console installation path and disk space usage
- 3-37 The Job Scheduling Console installation progress bar
- 3-38 Job Scheduling Console installation completed

- 3-39 The Job Scheduling Console V8.3 icon
- 3-40 Selecting Job Scheduling Console V8.3 icon from programs menu
- 3-41 The Job Scheduling Console splash window
- 3-42 The load preferences files window
- 3-43 The Job Scheduling Console welcome window
- 3-44 Warning window stating the engine does not exist
- 3-45 The default engine definition window
- 3-46 Specifying the Job Scheduling Console engine options
- 3-47 Message showing the JSC is unable to connect to the engine
- 3-48 Job Scheduling Console engine view
- 3-49 Defining the new workstation
- 3-50 Specifying the UNIX master workstation options
- 3-51 Specifying the Windows fault-tolerant agent options
- 3-52 Specifying the UNIX fault-tolerant agent options
- 3-53 Workstation definitions on M83-Paris engine
- 3-54 Windows user for Windows fault-tolerant agent F83A
- 3-55 Defining the new Windows user
- 3-56 Initializing the installation wizard
- 3-57 Language preference window
- 3-58 Tivoli Workload Scheduler V8.3 welcome window
- 3-59 License agreement window
- 3-60 Installing a new instance
- 3-61 Selecting the type of Tivoli Workload Scheduler V8.3 instance
- 3-62 Entering the Tivoli Workload Scheduler user name and password
- 3-63 Error message when Tivoli Workload Scheduler user does not exist
- 3-64 Configuring the FTA workstation
- 3-65 Tivoli Workload Scheduler installation directory
- 3-66 Tivoli Workload Scheduler installation options summary
- 3-67 List of the operations to be performed
- 3-68 The Tivoli Workload Scheduler installation progress bar
- 3-69 Installation completed
- 3-70 Example of a failed installation
- 3-71 Installation error log
- 3-72 Reclassifying the step
- 3-73 New step status and restarting the installation
- 3-74 Setup command
- 3-75 Language preference window
- 3-76 Tivoli Workload Scheduler V8.3 welcome window
- 3-77 License agreement window
- 3-78 Installing a new instance
- 3-79 Selecting the type of Tivoli Workload Scheduler V8.3 instance
- 3-80 Entering the Tivoli Workload Scheduler user name and password
- 3-81 Configuring the FTA workstation

- 15.
  - 3-82 User required permissions
  - 3-83 Tivoli Workload Scheduler installation directory
  - 3-84 Tivoli Workload Scheduler installation summary
  - 3-85 List of the operations to be performed
  - 3-86 The Tivoli Workload Scheduler installation progress bar
  - 3-87 Installation completed
  - 3-88 Example of a failed installation
  - 3-89 Installation error log
  - 3-90 Reclassifying the step
  - 3-91 New step status and restarting the installation
  - 3-92 Setup command
  - 3-93 Language preference window
  - 3-94 Tivoli Workload Scheduler V8.3 welcome window
  - 3-95 License agreement window
  - 3-96 Selecting the command line client
  - 3-97 Installing the command line client
  - 3-98 Installation directory window
  - 3-99 Summary of the installation path and required disk space
  - 3-100 List of the operations to be performed by the installation
  - 3-101 Installation successful window
  - 4-1 Lab environment for Tivoli Workload Scheduler V8.1
  - 4-2 Lab environment for Tivoli Workload Scheduler V8.2
  - 4-3 Lab environment for Tivoli Workload Scheduler V8.2.1
  - 4-4 Selecting the installation wizard language
  - 4-5 Welcome information
  - 4-6 License agreement
  - 4-7 Previous instance and upgrading Tivoli Workload Scheduler
  - 4-8 Selecting Tivoli Workload Scheduler instance to be upgraded
  - 4-9 Entering the password of the Tivoli Workload Scheduler user
  - 4-10 Ports used by IBM WebSphere Application Server
  - 4-11 Selecting the type of DB2 UDB installation
  - 4-12 Information to configure DB2 UDB Administration Client version
  - 4-13 Specifying the DB2 UDB Administration Client directory
  - 4-14 Information data to create and configure Tivoli Workload Scheduler database
  - 4-15 Information about db2inst1 user
  - 4-16 Installation settings information: Part 1
  - 4-17 Installation settings information: Part 2
  - 4-18 Installation completed successfully
  - 4-19 Selecting the installation wizard language
  - 4-20 Welcome information
  - 4-21 License agreement
  - 4-22 Previous instance and upgrading Tivoli Workload Scheduler

- 16.
  - 4-23 Type of Tivoli Workload Scheduler instance to be upgraded
  - 4-24 Entering the password of the Tivoli Workload Scheduler user
  - 4-25 Installation settings
  - 4-26 Installation completed successfully
  - 4-27 Selecting the installation wizard language
  - 4-28 Welcome information
  - 4-29 License agreement
  - 4-30 Previous instance and upgrading Tivoli Workload Scheduler
  - 4-31 Type of Tivoli Workload Scheduler instance to be upgraded
  - 4-32 Entering the password of the Tivoli Workload Scheduler user
  - 4-33 Installation settings
  - 4-34 Installation completed successfully
  - 4-35 Selecting the installation wizard language
  - 4-36 Welcome information
  - 4-37 License agreement
  - 4-38 Previous instance and upgrading Tivoli Workload Scheduler
  - 4-39 Type of Tivoli Workload Scheduler instance to be upgraded
  - 4-40 Previous backup and export destination directory
  - 4-41 Entering the password of the Tivoli Workload Scheduler user
  - 4-42 Ports used by IBM WebSphere Application Server
  - 4-43 Specifying the type of DB2 UDB installation
  - 4-44 Information to configure DB2 UDB Enterprise Server Edition V8.2
  - 4-45 DB2 UDB Enterprise Server Edition V8.2 directory
  - 4-46 Reviewing information to configure Tivoli Workload Scheduler
  - 4-47 Information about db2inst1 user
  - 4-48 Installation settings: Part 1
  - 4-49 Installation settings: Part 2
  - 4-50 Installation completed successfully
  - 4-51 Selecting the installation wizard language
  - 4-52 Welcome information
  - 4-53 License agreement
  - 4-54 Previous instance and upgrading Tivoli Workload Scheduler
  - 4-55 Type of Tivoli Workload Scheduler instance to be upgraded
  - 4-56 Entering the password of the Tivoli Workload Scheduler user
  - 4-57 Installation settings
  - 4-58 Installation completed successfully
  - 4-59 Selecting the installation wizard language
  - 4-60 Welcome information
  - 4-61 License agreement
  - 4-62 Previous instance and upgrading Tivoli Workload Scheduler
  - 4-63 Type of Tivoli Workload Scheduler instance to be upgraded
  - 4-64 Previous backup and export destination directory
  - 4-65 Entering the password of the Tivoli Workload Scheduler user

- 17.
  - 4-66 Ports used by IBM WebSphere Application Server
  - 4-67 Specifying the type of DB2 UDB installation
  - 4-68 Information to configure DB2 UDB Enterprise Server Edition V8.2
  - 4-69 DB2 UDB Enterprise Server Edition V8.2 directory
  - 4-70 Reviewing information to configure Tivoli Workload Scheduler
  - 4-71 Information about db2inst1 user
  - 4-72 Installation settings: Part 1
  - 4-73 Installation settings: Part 2
  - 4-74 Installation completed successfully
  - 5-1 Choosing DB2 Universal Database installation action panel
  - 5-2 Specifying the location of the DB2 sqllib directory
  - 5-3 DB2 server configuration information
  - 5-4 DB2 client configuration information: Part 1
  - 5-5 DB2 client configuration information: Part 2
  - 5-6 Database configuration information
  - 5-7 JSC action menu including browse and unlock options
  - 5-8 JSC all job definitions list showing JOB1 as locked
  - 5-9 JSC object unlocked while being edited: Warning message
  - 5-10 JSC engine properties panel
  - 6-1 Connection between JSC and Tivoli Workload Scheduler engine
  - 6-2 Tivoli Workload Scheduler environment with JSC
  - 6-3 Generating a new plan
  - 6-4 Job scheduling instance plan view
  - 6-5 Defining a new job
  - 6-6 Job stream definition: General properties
  - 6-7 Job Stream definition: Dependency resolution
  - 6-8 Job stream definition: Creating a weekly run cycle
  - 6-9 Job stream: Explorer view
  - 6-10 Job Stream Editor: Multiple job selection
  - 6-11 Master domain manager definition
  - 6-12 Exclusive locking and JSC options
  - 7-1 Three-and-a-half calendar days
  - 7-2 Default production day
  - 7-3 Production day that follows calendar day
  - 7-4 Production day that is shorter than 24-hour calendar day
  - 7-5 Production day that is longer than 24-hour calendar day
  - 7-6 Relationship between production day and run cycle for production day matching calendar day
  - 7-7 Determining job stream start after changing start of production day
  - 7-8 Relationship between production day and run cycle for a 10-hour production day relationship
  - 7-9 Creating the production day on the master domain manager
  - 7-10 Relationship between JnextPlan, production control database, and production day

- 18.
  - 8-1 Tivoli Workload Scheduler process flow
  - 9-1 Integrating Tivoli Workload Scheduler with business processes through WebSphere Business Integration Modeler
  - 10-1 Wizard window after an installation failure
  - 10-2 Step List window showing a failed step
  - 10-3 Step status tab
  - 10-4 Step properties tab
  - 10-5 Step output tab
  - 11-1 Scenario 1
  - 11-2 Scenario 2
  - 11-3 DB2 setup
  - 11-4 Installing DB2
  - 11-5 Selecting the installation type
  - 11-6 Selecting DB2 UDB Enterprise Server Edition
  - 11-7 Creating the DB2 Administration Server user
  - 11-8 Creating a DB2 instance
  - 11-9 Selecting the instance type
  - 11-10 Instance owner, group, and home directory
  - 11-11 Fenced user
  - 11-12 Local contact list
  - 11-13 DB2 installation progress
  - 11-14 Status report
  - 11-15 Setup installer
  - 11-16 Configuring the agent
  - 11-17 Checking the database
  - 11-18 Defining database tables
  - A-1 Tivoli Workload Scheduler Notification Solution flow diagram
  - A-2 Job has been suspended
  - A-3 Job has been canceled
  - A-4 Job ended with an internal status of FAILED
  - A-5 Job has continued
  - A-6 Job has been canceled
  - A-7 Configuring the termination deadline
  - A-8 Configuring the Latest Start Time - Suppress
  - A-9 Configuring the Latest Start Time - Continue
  - A-10 Configuring the Latest Start Time - Cancel
  - A-11 Configuring the return code mapping

- 19. Tables
  - 2-1 Tivoli Workload Scheduler Version 8.3 master installation requirements on AIX
  - 2-2 AIX operating system prerequisites for IBM DB2 UDB Version 8.2
  - 2-3 Tivoli Workload Scheduler Version 8.3 master installation requirements on HP-UX
  - 2-4 HP-UX operating system prerequisites for IBM DB2 UDB Version 8.2
  - 2-5 HP-UX recommended values for kernel configuration parameters
  - 2-6 Tivoli Workload Scheduler Version 8.3 master installation requirements on Solaris
  - 2-7 Solaris operating system prerequisites for IBM DB2 UDB Version 8.2
  - 2-8 Tivoli Workload Scheduler Version 8.3 master installation requirements on Linux
  - 2-9 Tivoli Workload Scheduler Version 8.3 master installation requirements on Windows
  - 2-10 Windows OS prerequisites for IBM DB2 UDB Version 8.2
  - 3-1 Symbolic link variables and paths
  - 3-2 Job Scheduling Console new engine options
  - 3-3 The workstation definition options
  - 3-4 Windows workstation definition options
  - 3-5 Symbolic link variables and paths
  - 3-6 Explanation of the fields
  - 5-1 Rules applied when deleting an object referenced by another object
  - 5-2 WebSphere application server port numbers
  - 5-3 Trace modes
  - 8-1 Valid internal job states
  - 8-2 Global options parameters in Tivoli Workload Scheduler
  - 8-3 Shortcuts for encryption ciphers
  - 8-4 Local options parameters in Tivoli Workload Scheduler
  - 8-5 Options for tuning job processing on a workstation
  - 8-6 Maximum tweaked scenario
  - 11-1 Substitute embedded variables
  - A-1 Naming convention used by the tws_event_mon.pl script
  - A-2 Format of the JRL files
  - A-3 Format of the current_timestamp file

- 21. Examples
  - 2-1 Sample output from db2osconf -r
  - 2-2 Output from make install
  - 2-3 Sample output from the ipcs -l command
  - 4-1 Copying CDn/operating_system/bin/composer81 to TWShome/bin
  - 4-2 Setting permission, owner, and group of composer81 command
  - 4-3 Dumping the data of Tivoli Workload Scheduler V8.1
  - 4-4 Copying the migrtool.tar file to TWShome directory
  - 4-5 List of files in the migrtool.tar file and backups in TWShome
  - 4-6 Extracting the files from migrtool.tar into TWShome
  - 4-7 Setting permissions, owner, and group of the files into TWShome
  - 4-8 Creating a symbolic link in /usr/lib directory
  - 4-9 Setting Tivoli Workload Scheduler variables and running the dumpsec command
  - 4-10 Running the migrfwkusr script
  - 4-11 Removing the duplicated users and adding the tws83 user in output_security_file
  - 4-12 Running dumpsec to back up the security file
  - 4-13 Running makesec to add new users in the security file
  - 4-14 Creating a symbolic link in the /usr/lib directory
  - 4-15 Copying /Tivoli/tws83/bin/libHTTP*.a to /Tivoli/tws81/bin directory
  - 4-16 Changing group permission of all files in /Tivoli/tws81/mozart to read
  - 4-17 Running the optman miggrunnb command
  - 4-18 Running the optman miggopts command
  - 4-19 Running the optman ls command
  - 4-20 Running the datamigrate command
  - 4-21 Running the composer display command
  - 4-22 Setting the new master domain manager properties
  - 4-23 Checking jobs and job streams related to the previous cpuname
  - 4-24 Deleting FINAL job stream related to the previous cpuname
  - 4-25 Deleting Jnextday job related to the previous cpuname
  - 4-26 Deleting the previous cpuname from the new Tivoli Workload Scheduler Version 8.3
  - 4-27 Running the datamigrate command
  - 4-28 Running the datamigrate command
  - 4-29 Running the conman switchmgr command
  - 4-30 Running the optman chg cf=ALL command
  - 4-31 Running the optman ls command
  - 4-32 Running JnextPlan in new master domain manager to create a plan

- 22.
  - 4-33 Running composer add to add the new job stream called FINAL
  - 4-34 Running composer display to see the new job stream called FINAL
  - 4-35 Running conman sbs to submit the new FINAL job stream
  - 4-36 Running conman ss to see the FINAL job stream
  - 4-37 Running conman sj to see the jobs of the FINAL job stream
  - 4-38 Running the SETUP.bin command
  - 4-39 Removing the Symphony, Sinfonia, Symnew and *msg files
  - 4-40 Running the StartUp command
  - 4-41 Running conman link cpu=bkm_cpuname
  - 4-42 Running conman and providing Tivoli Workload Scheduler user and password
  - 4-43 Copying TWS83_UPGRADE_BACKUP_BDM.txt
  - 4-44 Editing TWS83_UPGRADE_BACKUP_BDM.txt file
  - 4-45 Running SETUP.bin -options
  - 4-46 Running the SETUP.exe command
  - 4-47 Removing the Symphony, Sinfonia, Symnew and *msg files
  - 4-48 Running the StartUp command
  - 4-49 Running conman link cpu=fta_cpuname
  - 4-50 Running the SETUP.bin command
  - 4-51 Removing the Symphony, Sinfonia, Symnew and *msg files
  - 4-52 Running the StartUp command
  - 4-53 Running conman link cpu=fta_cpuname
  - 4-54 Copying TWS83_UPGRADE_Agent.txt
  - 4-55 Editing TWS83_UPGRADE_Agent.txt file
  - 4-56 Running SETUP.bin -options
  - 4-57 Changing the directory to TWShome
  - 4-58 Running the twsinst script
  - 4-59 Backup directory
  - 4-60 Sample twsinst script
  - 4-61 Rolling back to a previous instance
  - 4-62 Running the winstsp command
  - 4-63 Running the conman unlink command
  - 4-64 Running the conman stop command
  - 4-65 Running the conman shut command
  - 4-66 Running the SETUP.bin command
  - 4-67 Message indicating that the data is imported
  - 4-68 Copying TWS83_UPGRADE_MDM.txt
  - 4-69 Editing the TWS83_UPGRADE_MDM.txt file
  - 4-70 Running SETUP.bin -options
  - 4-71 Running the optman chg cf=ALL command
  - 4-72 Running the optman ls command
  - 4-73 Running JnextPlan in new master domain manager to create a plan
  - 4-74 Running the optman chg cf=YES command

- 23.
  - 4-75 Running composer add to add new job stream called FINAL
  - 4-76 Running composer display to see new FINAL job stream
  - 4-77 Running conman cs to cancel the FINAL job stream
  - 4-78 Running conman sbs to submit the new FINAL job stream
  - 4-79 Running conman ss to see the new FINAL job stream
  - 4-80 Running conman sj to see the jobs
  - 4-81 Copying CDn/operating_system/bin/composer821 to TWShome/bin
  - 4-82 Setting permission, owner and group of composer821 command
  - 4-83 Dumping data of Tivoli Workload Scheduler V8.2
  - 4-84 Copying the migrtool.tar file to TWShome directory
  - 4-85 List of files in the migrtool.tar file and backups in TWShome
  - 4-86 Extracting files from migrtool.tar into TWShome
  - 4-87 Setting permissions, owner, and group of the files into TWShome
  - 4-88 Setting Tivoli Workload Scheduler variables and running dumpsec command
  - 4-89 Running the migrfwkusr script
  - 4-90 Removing duplicated users and adding tws830 user in the output_security_file
  - 4-91 Running dumpsec to back up the security file
  - 4-92 Running makesec to add new users in the security file
  - 4-93 Creating a symbolic link in /usr/lib directory
  - 4-94 Copying /usr/local/tws830/bin/libHTTP*.so to /usr/local/tws820/bin directory
  - 4-95 Changing group permission of all files in /usr/local/tws830/mozart
  - 4-96 Running the optman miggrunnb command
  - 4-97 Running the optman miggopts command
  - 4-98 Running the optman ls command
  - 4-99 Running the datamigrate command
  - 4-100 Running the composer display command
  - 4-101 Setting the new master domain manager properties
  - 4-102 Checking jobs and job streams related to the previous cpuname
  - 4-103 Deleting FINAL job stream related to the previous cpuname
  - 4-104 Deleting Jnextday job related to the previous cpuname
  - 4-105 Deleting the previous cpuname from the new Tivoli Workload Scheduler Version 8.3
  - 4-106 Running the datamigrate command
  - 4-107 Running the datamigrate command
  - 4-108 Running the conman switchmgr command
  - 4-109 Running the optman chg cf=ALL command
  - 4-110 Running the optman ls command
  - 4-111 Running JnextPlan in new master domain manager to create a plan
  - 4-112 Running composer add to add the new job stream called FINAL
  - 4-113 Running composer display to see the new job stream called FINAL

- 24.
  - 4-114 Running conman sbs to submit new FINAL job stream
  - 4-115 Running conman ss to see the new FINAL job stream
  - 4-116 Running conman sj to see the jobs of the new FINAL job stream
  - 4-117 Running the SETUP.bin command
  - 4-118 Removing the Symphony, Sinfonia, Symnew and *msg files
  - 4-119 Running the StartUp command
  - 4-120 Running conman link cpu=fta_cpuname
  - 4-121 Copying TWS83_UPGRADE_Agent.txt
  - 4-122 Editing TWS83_UPGRADE_Agent.txt file
  - 4-123 Running SETUP.bin -options
  - 4-124 Changing the directory to TWShome
  - 4-125 Running the twsinst script
  - 4-126 Backup directory
  - 4-127 Sample twsinst script
  - 4-128 Rolling back to a previous instance
  - 4-129 winstsp command
  - 4-130 Running the SETUP.bin command
  - 4-131 Message indicating that the data is imported
  - 4-132 Running the optman chg cf=ALL command
  - 4-133 Running the optman ls command
  - 4-134 Running JnextPlan in new master domain manager to create a plan
  - 4-135 Running the optman chg cf=YES command
  - 4-136 Running composer add to add the new FINAL job stream
  - 4-137 Running composer display to see the new FINAL job stream
  - 4-138 Running conman cs to cancel the FINAL job stream
  - 4-139 Running conman sbs to submit the new FINAL job stream
  - 4-140 Running conman ss to see the new job stream called FINAL
  - 4-141 Running conman sj to see the jobs
  - 4-142 Copying CDn/operating_system/bin/composer81 to TWShome/bin
  - 4-143 Setting permission, owner and group of composer821 command
  - 4-144 Dumping data of Tivoli Workload Scheduler V8.2.1
  - 4-145 Copying migrtool.tar file to the TWShome directory
  - 4-146 Backup of the files listed in migrtool.tar file
  - 4-147 Setting Tivoli Workload Scheduler variables and running dumpsec command
  - 4-148 Running the migrfwkusr script
  - 4-149 Removing the duplicated users and adding the tws83 user in output_security_file
  - 4-150 Running dumpsec to back up security file
  - 4-151 Running makesec to add new users in the security file
  - 4-152 Syntax of the datamigrate command
  - 4-153 Running optman miggrunnb to import run number
  - 4-154 Running optman miggrunnb to import run number

- 25. 4-155 Running optman ls . . . 317
4-156 Running the datamigrate command . . . 319
4-157 Running the composer display command . . . 319
4-158 Checking jobs and job streams related to the previous cpuname . . . 319
4-159 Deleting the FINAL job stream related to the previous cpuname . . . 319
4-160 Deleting Jnextday job related to the previous cpuname . . . 320
4-161 Deleting the previous cpuname from the new Tivoli Workload Scheduler Version 8.3 . . . 320
4-162 Running the datamigrate command . . . 320
4-163 Running the datamigrate command . . . 321
4-164 Running the JnextPlan command . . . 322
5-1 Starting the new DB2 instance . . . 339
5-2 The /etc/services entries created by db2icrt . . . 339
5-3 Making a backup copy of the Tivoli Workload Scheduler V8.3 database . . . 342
5-4 Restoring the Tivoli Workload Scheduler database from a backup . . . 343
5-5 Renaming database objects using composer rename . . . 345
5-6 Locking a job using the composer lock command . . . 350
5-7 Unlocking a job using the composer unlock command . . . 350
5-8 Forcing composer to unlock an object . . . 351
5-9 Collecting current database statistics . . . 353
5-10 Reorganizing the database . . . 353
5-11 Starting WebSphere Application Server Express . . . 356
5-12 Stopping WebSphere Application Server Express . . . 357
5-13 Application server status when not running . . . 358
5-14 Application server status when running . . . 359
5-15 Setting tracing level to tws_all . . . 360
5-16 Backing up the application server configuration files . . . 361
5-17 Restoring application server configuration files . . . 362
5-18 Viewing data source properties . . . 363
5-19 Changing data source properties . . . 366
5-20 Showing application server host properties . . . 367
5-21 Changing host properties . . . 369
5-22 Viewing security properties . . . 370
5-23 Changing security properties . . . 373
5-24 Encrypting passwords in the soap.client.props properties file . . . 376
5-25 User account definitions in LDIF format . . . 378
5-26 Adding the DN suffix . . . 380
5-27 Importing the LDIF file . . . 380
5-28 LDAP search results . . . 381
5-29 Security properties template configured for an LDAP server . . . 384
5-30 Security file template example . . . 387
5-31 CLI useropts file . . . 389
5-32 Stopping the application server . . . 391

- 26. 5-33 Editing the soap.client.props file . . . 391
5-34 Editing the sas.client.props file . . . 391
5-35 Running the ./encryptProfileProperties.sh script . . . 392
5-36 SOAP properties update . . . 392
5-37 Updating the soap properties file . . . 392
7-1 Sourcing Tivoli Workload Scheduler environment variables (UNIX) . . . 421
7-2 Sourcing Tivoli Workload Scheduler environment variables (Windows) . . . 421
7-3 Using the optman command to determine the start of the production day . . . 421
7-4 Using the composer command to verify the start of the production day . . . 422
7-5 Sourcing Tivoli Workload Scheduler environment variables (UNIX) . . . 424
7-6 Sourcing Tivoli Workload Scheduler environment variables (Windows) . . . 424
7-7 Using composer command to identify length of the production day . . . 424
7-8 Sample ON statement for production day longer than one day . . . 426
7-9 Sample ON statement for production day shorter than one day . . . 426
7-10 Determining location of MakePlan script . . . 426
7-11 Portion of MakePlan script that calls planman in default installation . . . 427
7-12 Portion of the MakePlan script that calls planman in a 48-hour production day . . . 428
7-13 Sample output from a successful planman run . . . 428
8-1 localopts syntax . . . 447
8-2 Local options file example . . . 462
9-1 Using the Tivoli Workload Scheduler API classes . . . 477
9-2 Querying Tivoli Workload Scheduler jobs using PHP . . . 481
11-1 The /etc/hosts file . . . 515
11-2 Example script for copying the database setup scripts and substituting the variables . . . 524
11-3 Starting DB2 . . . 526
11-4 Connecting to the database . . . 526
11-5 Creating Tivoli Workload Scheduler tables . . . 526
11-6 Updating database configuration . . . 527
11-7 Installing Tivoli System Automation on node A . . . 527
11-8 Installing Tivoli System Automation on node B . . . 527
11-9 Upgrading Tivoli System Automation on node A . . . 528
11-10 Upgrading Tivoli System Automation on node B . . . 528
11-11 Installing Tivoli System Automation policies . . . 528
11-12 Preparing the Tivoli System Automation domain . . . 528
11-13 Viewing Tivoli System Automation domain status . . . 529
11-14 Creating the network tie breaker . . . 529
11-15 The netmon.cf file . . . 529
11-16 Running the db2set command . . . 530
11-17 Editing the /etc/services file . . . 530
11-18 Enabling HADR on the primary database . . . 531
11-19 Enabling HADR on the standby database . . . 531