GFS - Google File System

The Google File System (GFS) is designed to provide reliable, scalable storage for large files on commodity hardware. It uses a single master server to manage metadata and coordinate replication across multiple chunk servers. Files are split into 64MB chunks which are replicated across servers and stored as regular files. The system prioritizes high throughput over low latency and provides fault tolerance through replication and checksumming to detect data corruption.
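The chunking and checksumming described above can be illustrated with a minimal sketch. It assumes the 64MB chunk size stated in the summary; the function names, the 64 KB checksum block size, and the use of CRC32 are illustrative assumptions, not details taken from the slides or from GFS's actual implementation.

```python
import zlib

CHUNK_SIZE = 64 * 1024 * 1024  # 64 MB chunk size, as stated in the summary
BLOCK_SIZE = 64 * 1024         # assumed checksum granularity (illustrative)


def chunk_spans(offset, length, chunk_size=CHUNK_SIZE):
    """Split a byte range of a file into (chunk_index, start_in_chunk, n_bytes)."""
    if offset < 0 or length < 0:
        raise ValueError("offset and length must be non-negative")
    spans = []
    end = offset + length
    while offset < end:
        # Which chunk holds this offset, and where inside that chunk it falls.
        index, start = divmod(offset, chunk_size)
        n = min(chunk_size - start, end - offset)
        spans.append((index, start, n))
        offset += n
    return spans


def block_checksums(data, block_size=BLOCK_SIZE):
    """Compute one CRC32 per fixed-size block (CRC32 is a stand-in checksum)."""
    return [zlib.crc32(data[i:i + block_size])
            for i in range(0, len(data), block_size)]


def corrupted_blocks(data, expected, block_size=BLOCK_SIZE):
    """Return the indices of blocks whose checksum differs from the stored one."""
    actual = block_checksums(data, block_size)
    return [i for i, (a, e) in enumerate(zip(actual, expected)) if a != e]
```

A read that crosses a chunk boundary is split into one span per chunk, so a client in this sketch would contact one chunk server per span. Comparing stored and recomputed checksums pinpoints the damaged block, which could then be restored from another replica.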

Recommended

Google File System

By Amgad Muhammad. GFS is a distributed file system designed by Google to store and manage large files on commodity hardware. It is optimized for large streaming reads and writes, with files divided into 64MB chunks that are replicated across multiple servers. The master node manages metadata like file mappings and chunk locations, while chunk servers store and serve data to clients. The system is designed to be fault-tolerant by detecting and recovering from frequent hardware failures.

Google File System

By Junyoung Jung. The document summarizes the Google File System (GFS). It discusses the key points of GFS's design, including:

- Files are divided into fixed-size 64MB chunks for efficiency.

- Metadata is stored on a master server while data chunks are stored on chunkservers.

- The master manages file system metadata and chunk locations while clients communicate with both the master and chunkservers.

- GFS provides features like leases to coordinate updates, atomic appends, and snapshots for consistency and fault tolerance.

Google file system GFS

By zihad164. Google File System is a distributed file system developed by Google to provide efficient and reliable access to large amounts of data across clusters of commodity hardware. It organizes clusters into clients that interface with the system, master servers that manage metadata, and chunkservers that store and serve file data replicated across multiple machines. Updates are replicated for fault tolerance, while the master and chunkservers work together for high performance streaming and random reads and writes of large files.

The Google File System (GFS)

By Romain Jacotin. This document summarizes a lecture on the Google File System (GFS). Some key points:

1. GFS was designed for large files and high scalability across thousands of servers. It uses a single master and multiple chunkservers to store and retrieve large file chunks.

2. Files are divided into 64MB chunks which are replicated across servers for reliability. The master manages metadata and chunk locations while clients access chunkservers directly for reads/writes.

3. Atomic record appends allow efficient concurrent writes. Snapshots create instantly consistent copies of files. Leases and replication order ensure consistency across servers.

google file system

By diptipan. The document describes Google File System (GFS), which was designed by Google to store and manage large amounts of data across thousands of commodity servers. GFS consists of a master server that manages metadata and namespace, and chunkservers that store file data blocks. The master monitors chunkservers and maintains replication of data blocks for fault tolerance. GFS uses a simple design to allow it to scale incrementally with growth while providing high reliability and availability through replication and fast recovery from failures.

GOOGLE FILE SYSTEM

By JYoTHiSH o.s. Designed by Sanjay Ghemawat, Howard Gobioff, and Shun-Tak Leung of Google in 2002-03.

Provides fault tolerance while serving a large number of clients with high aggregate performance.

Google's activities extend beyond search.

Google stores its data on more than 15,000 commodity machines.

Handles Google's exceptional cases and other Google-specific challenges in its distributed file system.

Google file system

By Lalit Rastogi. The document describes Google File System (GFS), which was designed by Google to store and manage large amounts of data across thousands of commodity servers. GFS consists of a master server that manages metadata and namespace, and chunkservers that store file data blocks. The master monitors chunkservers and maintains replication of data blocks for fault tolerance. GFS uses a simple design to allow it to scale incrementally with growth while providing high reliability and availability through replication and fast recovery from failures.

Google file system

By Ankit Thiranh. A brief description of the Google File System, presented as an overview of the research paper written about it.

Google file system

By Roopesh Jhurani. The document describes the Google File System (GFS), which was developed by Google to handle its large-scale distributed data and storage needs. GFS uses a master-slave architecture with the master managing metadata and chunk servers storing file data in 64MB chunks that are replicated across machines. It is designed for high reliability and scalability, handling failures through replication and fast recovery. Measurements show it can deliver high throughput to many concurrent readers and writers.

Introduction to Apache ZooKeeper

By Saurav Haloi. An introductory talk on Apache ZooKeeper at gnuNify 2013 on 16 February 2013, organized by Symbiosis Institute of Computer Science & Research, Pune, India.

Big data lecture notes

By Mohit Saini. Big data is high-volume, high-velocity, and high-variety data that is difficult to process using traditional data management tools. It is characterized by the 3Vs: volume, as the amount of data grows exponentially; velocity, as data arrives in real time; and variety, as data comes from many different sources and formats. The document discusses big data analytics techniques to gain insights from large and complex datasets and provides examples of big data sources and applications.

Map reduce in BIG DATA

By GauravBiswas9. MapReduce is a programming framework that allows for distributed and parallel processing of large datasets. It consists of a map step that processes key-value pairs in parallel, and a reduce step that aggregates the outputs of the map step. As an example, a word counting problem is presented where words are counted by mapping each word to a key-value pair of the word and 1, and then reducing by summing the counts of each unique word. MapReduce jobs are executed on a cluster in a reliable way using YARN to schedule tasks across nodes, restarting failed tasks when needed.

Big Data Technologies.pdf

By RAHULRAHU8. Big data technologies can be categorized as operational or analytical. Operational technologies deal with raw daily data like online transactions, while analytical technologies analyze operational data for business decisions. The document describes several examples of big data technologies categorized by data storage, mining, analytics, and visualization. Common storage technologies include Hadoop, MongoDB, and Cassandra. Data mining tools include Presto, RapidMiner, and Elasticsearch. Analytics are performed using Apache Kafka, Splunk, KNIME, Spark, and R. Popular visualization technologies are Tableau and Plotly.

PPT on Hadoop

By Shubham Parmar. The document discusses Hadoop, an open-source software framework that allows distributed processing of large datasets across clusters of computers. It describes Hadoop as having two main components: the Hadoop Distributed File System (HDFS), which stores data across infrastructure, and MapReduce, which processes the data in a parallel, distributed manner. HDFS provides redundancy, scalability, and fault tolerance. Together these components provide a solution for businesses to efficiently analyze the large, unstructured "Big Data" they collect.

Corba

By Sanoj Kumar. CORBA and DCOM are specifications for distributed computing that allow objects to communicate across a network. CORBA uses an Object Request Broker (ORB) as middleware to locate and invoke remote objects transparently. It defines an Interface Definition Language (IDL) and supports location transparency. DCOM is Microsoft's version that extends COM to allow components to interact remotely. It uses proxies on the client side and stubs on the server side to marshal requests and responses.

20. Parallel Databases in DBMS

By koolkampus. The document discusses different types of parallelism that can be utilized in parallel database systems: I/O parallelism to retrieve relations from multiple disks in parallel, interquery parallelism to run different queries simultaneously, intraquery parallelism to parallelize operations within a single query, and intraoperation parallelism to parallelize individual operations like sort and join. It also covers techniques for partitioning relations across disks and handling skew to balance the workload.

Cloud Computing: Hadoop

By darugar. Hadoop is an open-source software framework that allows for the distributed processing of large data sets across clusters of computers using simple programming models. It is designed to scale up from single servers to thousands of machines, each offering local computation and storage. Hadoop automatically manages data replication and platform failure to ensure very large data sets can be processed efficiently in a reliable, fault-tolerant manner. Common uses of Hadoop include log analysis, data warehousing, web indexing, machine learning, financial analysis, and scientific applications.

Introduction to HDFS

By Bhavesh Padharia. The Hadoop Distributed File System (HDFS) is the primary data storage system used by Hadoop applications. It employs a Master and Slave architecture with a NameNode that manages metadata and DataNodes that store data blocks. The NameNode tracks locations of data blocks and regulates access to files, while DataNodes store file blocks and manage read/write operations as directed by the NameNode. HDFS provides high-performance, scalable access to data across large Hadoop clusters.

Distributed file system

By Anamika Singh. The document discusses key concepts related to distributed file systems, including:

1. Files are accessed using location transparency where the physical location is hidden from users. File names do not reveal storage locations and names do not change when locations change.

2. Remote files can be mounted to local directories, making them appear local while maintaining location independence. Caching is used to reduce network traffic by storing recently accessed data locally.

3. Fault tolerance is improved through techniques like stateless server designs, file replication across failure independent machines, and read-only replication for consistency. Scalability is achieved by adding new nodes and using decentralized control through clustering.

Hadoop Distributed File System

By Rutvik Bapat. Hadoop consists of HDFS for storage and MapReduce for processing. HDFS provides massive storage, fault tolerance through data replication, and high throughput access to data. It uses a master-slave architecture with a NameNode managing the file system namespace and DataNodes storing file data blocks. The NameNode ensures data reliability through policies that replicate blocks across racks and nodes. HDFS provides scalability, flexibility and low-cost storage of large datasets.

Seminar Report on Google File System

By Vishal Polley. Google has designed and implemented a scalable distributed file system for its large, distributed, data-intensive applications. They named it the Google File System (GFS).

HADOOP TECHNOLOGY ppt

By sravya raju. The best-known technology used for Big Data is Hadoop.

It is a large-scale batch data processing system.

Big Data and Hadoop

By Flavio Vit. Big Data raises challenges about how to process such a vast pool of raw data and how to use it to add value to our lives. To address these demands, an ecosystem of tools named Hadoop was conceived.

Chapter 4

By Ali Broumandnia. This document discusses intelligent storage systems. It describes the key components of an intelligent storage system including the front end, cache, back end, and physical disks. It discusses concepts like front-end command queuing, cache structure and management, logical unit numbers (LUNs), and LUN masking. The document also provides examples of high-end and midrange intelligent storage arrays and describes EMC's CLARiiON and Symmetrix storage systems in particular.

MOBILE BI

By Raminder Pal Singh. This document provides an overview of mobile business intelligence (BI), including:

- A definition of mobile BI as enabling insights through mobile-optimized analysis applications.

- Benefits like increased customer satisfaction, collaboration, agility, better time utilization and decision making.

- Trends showing growing adoption of tablets, with mobile devices accounting for one third of BI access by 2013.

- Best practices like limiting dashboards, designing for smaller screens and on-the-go usage, focusing on operational data, and enabling collaboration.

- Security considerations like device security, transmission security, and authentication/authorization.

- Profiles of typical mobile BI users as information collaborators and consumers rather than producers.

Unit 2 - Grid and Cloud Computing

By vimalraman. The document discusses the Open Grid Services Architecture (OGSA) standard. It describes OGSA's layered architecture including the physical/logical resources layer, web services layer using OGSI, OGSA services layer for core, program execution and data services, and applications layer. It also outlines the functional requirements of OGSA such as interoperability, resource sharing, optimization, quality of service, job execution, data services, security, cost reduction, scalability, and availability.

Lecture1 introduction to big data

By hktripathy. This document provides a syllabus for a course on big data. The course introduces students to big data concepts like characteristics of data, structured and unstructured data sources, and big data platforms and tools. Students will learn data analysis using R software, big data technologies like Hadoop and MapReduce, mining techniques for frequent patterns and clustering, and analytical frameworks and visualization tools. The goal is for students to be able to identify domains suitable for big data analytics, perform data analysis in R, use Hadoop and MapReduce, apply big data to problems, and suggest ways to use big data to increase business outcomes.

DISTRIBUTED DATABASE WITH RECOVERY TECHNIQUES

By AAKANKSHA JAIN. A distributed database consists of multiple, logically related database systems that are physically distributed over several sites, connected by a computer network, and usually under the control of a centralized site.

Distributed database design refers to the following problem:

Given a database and its workload, how should the database be split and allocated to sites so as to optimize a certain objective function?

There are two issues:

(i) Data fragmentation which determines how the data should be fragmented.

(ii) Data allocation which determines how the fragments should be allocated.

tittle

By uvolodia. The Google File System (GFS) was designed for large file storage with a focus on high bandwidth and reliability. It uses a single master node to manage metadata and multiple chunkservers to store file data replicas. Files are divided into 64MB chunks which are replicated across servers for fault tolerance. The design prioritizes streaming reads and append writes through a relaxed consistency model.

Similar to GFS - Google File System (20)

Gfs google-file-system-13331

By Fengchang Xie. The Google File System (GFS) is a scalable distributed file system designed by Google to provide reliable, scalable storage and high performance for large datasets and workloads. It uses low-cost commodity hardware and is optimized for large files, streaming reads and writes, and high throughput. The key aspects of GFS include using a single master node to manage metadata, chunking files into 64MB chunks distributed across multiple chunk servers, replicating chunks for reliability, and optimizing for large sequential reads and appends. GFS provides high availability, fault tolerance, and data integrity through replication, fast recovery, and checksum verification.

Gfs介绍 (Introduction to GFS)

By yiditushe. GFS is a file system designed by Google to share data across large clusters of commodity servers and PCs. It uses a master server to manage metadata and chunk servers to store and serve file data in 64MB chunks. The design aims to detect and recover from failures automatically while supporting large files, streaming reads and writes, and concurrent appends across multiple clients. The client API mimics UNIX but provides only append semantics without consistency guarantees between clients.

Advance google file system

By Lalit Rastogi. This document summarizes the Google File System (GFS). It describes the key components of GFS including data flow, master operation for namespace management and locking, metadata management, enhanced operations like atomic record append and snapshots, garbage collection, and conclusions. The master manages metadata and locks for operations while data blocks are stored across chunkservers. GFS provides an incremental, fault-tolerant storage solution to meet Google's requirements.

Google File System

By DreamJobs1. The document discusses the Google File System (GFS), which was developed by Google to handle large files across thousands of commodity servers. It provides three main functions: (1) dividing files into chunks and replicating chunks for fault tolerance, (2) using a master server to manage metadata and coordinate clients and chunkservers, and (3) prioritizing high throughput over low latency. The system is designed to reliably store very large files and enable high-speed streaming reads and writes.

Gfs

By ravi kiran. The document describes Google File System (GFS), which was designed by Google to address the need for large scale data storage. GFS uses a master-chunk server architecture, where a single master manages metadata and chunk servers store file data in 64MB chunks that are replicated for fault tolerance. Key features include scalability, reliability, record appending for concurrent writes, and snapshots. The architecture is fault tolerant through replication and components like shadow masters can take over if the primary master fails.

Google

By rpaikrao. The Google File System (GFS) is designed for large datasets and frequent component failures. It uses a single master node to track metadata for files broken into large chunks and stored across multiple chunkservers. The design prioritizes high throughput for large streaming reads and writes over small random access. Fault tolerance is achieved through replicating chunks across servers and recovering lost data from logs.

Luxun a Persistent Messaging System Tailored for Big Data Collecting & Analytics

By William Yang. A high-throughput, persistent, distributed, publish-subscribe messaging system tailored for big data collecting and analytics.

Distributed file systems (from Google)

By Sri Prasanna. This document summarizes a lecture on distributed file systems. It discusses Network File System (NFS) and the Google File System (GFS). NFS allows remote access to files on servers and uses client caching for efficiency. GFS was designed for Google's need to redundantly store massive amounts of data on unreliable hardware. It uses large file chunks, replication for reliability, and a single master for coordination to provide high throughput for streaming reads of huge files.

advanced Google file System

By diptipan. The Google File System (GFS) is a distributed file system designed to provide efficient, reliable access to data stored on commodity hardware. It consists of a single master node that manages metadata and chunk replication, and multiple chunkserver nodes that store file data in chunks. The master maintains metadata mapping files to fixed-size chunks, which are replicated across servers for fault tolerance. It performs tasks like chunk creation, rebalancing, and garbage collection to optimize storage usage and availability. Data flows linearly through servers to minimize latency. The system provides high throughput, incremental growth, and fault tolerance for Google's large-scale computing needs.

Gfs final

Gfs finalAmitSaha123 Google is a multi-billion dollar company. It's one of the big power players on the World Wide Web and beyond. The company relies on a distributed computing system to provide users with the infrastructure they need to access, create and alter data.

Surely Google buys state-of-the-art computers and servers to keep things running smoothly, right?

Wrong. The machines that power Google's operations aren't cutting-edge power computers with lots of bells and whistles. In fact, they're relatively inexpensive machines running on Linux operating systems. How can one of the most influential companies on the Web rely on cheap hardware? It's due to the Google File System (GFS), which capitalizes on the strengths of off-the-shelf servers while compensating for any hardware weaknesses. It's all in the design.

Google uses the GFS to organize and manipulate huge files and to allow application developers the research and development resources they require. The GFS is unique to Google and isn't for sale. But it could serve as a model for file systems for organizations with similar needs.

Lec3 Dfs

Lec3 Dfsmobius.cn The document summarizes a lecture on distributed file systems. It discusses Network File System (NFS), including its protocol, client caching, and tradeoffs. It also discusses the Google File System (GFS), including its assumptions, design decisions like single master architecture and chunk replication, relaxed consistency model, and responsibilities of the master in coordinating metadata and fault tolerance through replication.

Distributed computing seminar lecture 3 - distributed file systems

Distributed computing seminar lecture 3 - distributed file systemstugrulh The document summarizes a lecture on distributed file systems. It discusses Network File System (NFS), including its protocol, client caching, and tradeoffs. It also discusses the Google File System (GFS), including its assumptions, design decisions like single master architecture and chunk replication, relaxed consistency model, and responsibilities of the master in coordinating metadata and fault tolerance.

Lalit

Lalitdiptipan The document describes Google File System (GFS), which was designed by Google to store and manage large amounts of data across thousands of commodity servers. GFS consists of a master server that manages metadata and namespace, and chunkservers that store file data blocks. The master monitors chunkservers and maintains replication of data blocks for fault tolerance. GFS uses a simple design to allow it to scale incrementally with growth while providing high reliability and availability through replication and fast recovery from failures.

CH08.pdf

CH08.pdfImranKhan880955 This document discusses different techniques for organizing main memory, including contiguous allocation, segmentation, and paging. Contiguous allocation allocates each process to a single contiguous block of memory, limiting multiprogramming. Segmentation and paging allow non-contiguous allocation through memory mappings. Paging maps virtual to physical addresses using a page table, with pages typically being 4KB each. Context switch time can increase significantly if processes need to be swapped in from disk. Fragmentation also limits available memory as free spaces become scattered and non-contiguous.

Cloud computing UNIT 2.1 presentation in

Cloud computing UNIT 2.1 presentation inRahulBhole12 Cloud storage allows users to store files online through cloud storage providers like Apple iCloud, Dropbox, Google Drive, Amazon Cloud Drive, and Microsoft SkyDrive. These providers offer various amounts of free storage and options to purchase additional storage. They allow files to be securely uploaded, accessed, and synced across devices. The best cloud storage provider depends on individual needs and preferences regarding storage space requirements and features offered.

Dsm (Distributed computing)

Dsm (Distributed computing)Sri Prasanna The document summarizes distributed shared memory (DSM), which allows networked computers to share a region of virtual memory in a way that appears local. The key points are:

1) DSM uses physical memory on each node to cache pages of shared virtual address space, making it appear like local memory to processes.

2) On a page fault, a DSM protocol is used to retrieve the requested page from the remote node holding it.

3) Simple designs have a single node hold each page or use a centralized directory, but these can become bottlenecks. Distributed directories and replication improve performance.

4) Consistency models define when modifications are visible, from strict sequential consistency to more relaxed models like release consistency.

How to design a database that can ingest more than four million ...

How to design a database that can ingest more than four million ...javier ramirez In this session I will walk through the technical decisions we made while developing QuestDB, an open-source time-series database compatible with Postgres, and how we managed to write more than four million rows per second without blocking or slowing down queries.

I will talk about things like (zero) garbage collection, instruction vectorization using SIMD, rewriting instead of reusing to shave off microseconds, taking advantage of advances in processors, hard drives, and operating systems, such as io_uring support, and the balance between user experience and performance when new features are considered.

Serverless (Distributed computing)

Serverless (Distributed computing)Sri Prasanna The document discusses peer-to-peer and serverless networking models. It describes how clients in peer-to-peer networks can provide unused storage and computing resources. Examples of current peer-to-peer file sharing systems like BitTorrent are explained. The benefits of distributed and grid computing systems are discussed. Issues around security, privacy, and standards in peer-to-peer networks are also covered.

GFS - Google File System

- 1. The Google File System (Tut Chi Io)

- 2. Design Overview – Assumptions: Inexpensive commodity hardware. Large files (multi-GB). Workloads: large streaming reads, small random reads, large sequential appends, and concurrent appends to the same file. High throughput matters more than low latency.

- 3. Design Overview – Interface: Create, Delete, Open, Close, Read, Write, Snapshot, Record Append.

- 4. Design Overview – Architecture: Single master, multiple chunk servers, multiple clients, each a user-level process running on a commodity Linux machine. GFS client code is linked into each client application to communicate with the master and the chunk servers. Files are split into 64MB chunks, which are stored as Linux files on the local disks of chunk servers and replicated on multiple chunk servers (3 replicas). Clients cache metadata but not chunk data.
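The fixed 64MB chunk size means a client can turn a byte range into chunk indexes with plain integer arithmetic before it asks the master for locations. The following is a minimal sketch of that translation; the function name `chunk_ranges` is hypothetical and not part of any GFS client API.

```python
CHUNK_SIZE = 64 * 1024 * 1024  # 64MB, the chunk size given on the slide


def chunk_ranges(offset, length):
    """Split a file byte range into (chunk_index, offset_in_chunk, nbytes) pieces."""
    pieces = []
    end = offset + length
    while offset < end:
        index = offset // CHUNK_SIZE
        within = offset % CHUNK_SIZE
        # Never read past the end of the current chunk or the requested range.
        nbytes = min(CHUNK_SIZE - within, end - offset)
        pieces.append((index, within, nbytes))
        offset += nbytes
    return pieces


if __name__ == "__main__":
    # A 30-byte read that straddles the boundary between chunk 0 and chunk 1.
    print(chunk_ranges(CHUNK_SIZE - 10, 30))
```

A read that crosses a chunk boundary becomes two requests, possibly to different chunk servers.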

- 5. Design Overview – Single Master: Why centralization? Simplicity! Global knowledge is needed for chunk placement and replication decisions.

- 6. Design Overview – Chunk Size: 64MB, much larger than ordinary file system block sizes. Why? Advantages: reduces client-master interaction, reduces network overhead, reduces the size of the metadata. Disadvantages: (1) internal fragmentation, solved by lazy space allocation; (2) hot spots, when many clients access a 1-chunk file such as an executable, solved by a higher replication factor, staggered application start times, or client-to-client communication.

- 7. Design Overview – Metadata: Three types. (1) File and chunk namespaces: in the master's memory, and persisted in the operation log on the master's disk and on remote replicas. (2) File-chunk mapping: in the master's memory, and persisted the same way. (3) Locations of chunk replicas: in the master's memory only; the master asks the chunk servers for them when the master starts and when a chunk server joins the cluster. If locations were persistent, the master and chunk servers would have to be kept in sync.

- 8. Design Overview – Metadata – In-memory Data Structures: Why in-memory data structures for the master? Speed, which matters for garbage collection (GC) and load balancing (LB). Will this limit the number of chunks, and hence the total capacity? No: a 64MB chunk needs less than 64B of metadata (640TB needs less than 640MB). Most chunks are full, and file names are stored with prefix compression.
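The capacity figure on this slide can be checked with a few lines of arithmetic. This is a small sketch that treats 64B per chunk as an upper bound and uses binary units (1MB = 2^20 bytes, 1TB = 2^40 bytes); the function name is an assumption for illustration.

```python
CHUNK_SIZE = 64 * 2**20        # 64MB per chunk
METADATA_PER_CHUNK = 64        # upper bound in bytes, from the slide


def metadata_upper_bound(total_bytes):
    """Upper bound on master memory needed to describe total_bytes of chunk data."""
    chunks = -(-total_bytes // CHUNK_SIZE)  # ceiling division
    return chunks * METADATA_PER_CHUNK


if __name__ == "__main__":
    bound = metadata_upper_bound(640 * 2**40)  # 640TB of file data
    print(bound // 2**20, "MB")
```

640TB is about ten million chunks, so the bound works out to 640MB, which fits in the memory of a single machine.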

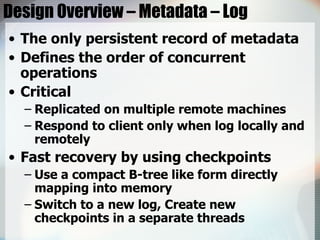

- 9. Design Overview – Metadata – Log: The operation log is the only persistent record of metadata, and it defines the order of concurrent operations. Because it is critical, it is replicated on multiple remote machines, and the master responds to a client only after the log record has been written both locally and remotely. Recovery is fast because of checkpoints, which are stored in a compact B-tree-like form that maps directly into memory. The master switches to a new log file and creates new checkpoints in a separate thread.

- 10. Design Overview – Consistency Model: Consistent: all clients see the same data, regardless of which replica they read from. Defined: consistent, and clients see what the mutation wrote in its entirety.

- 11. Design Overview – Consistency Model: After a sequence of successful mutations, a region is guaranteed to be defined, because mutations are applied in the same order on all replicas and chunk version numbers are used to detect stale replicas. What if a client caches stale chunk locations? The window is limited by the cache entry's timeout. Most files are append-only, so a stale replica usually returns a premature end of chunk rather than outdated data.

- 12. System Interactions – Lease: Minimizes management overhead at the master. The master grants a lease to one of the replicas, which becomes the primary. The primary picks a serial order for mutations and all replicas follow it. The lease has a 60-second timeout, which can be extended. The master can also revoke it.

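- 13. System Interactions – Mutation Order: (1) The client asks the master for the current lease holder. (2) The master replies with the identity of the primary and the locations of the replicas (cached by the client). (3) The client pushes the data to all replicas (3a, 3b, 3c). (4) The client sends the write request to the primary, which assigns serial numbers to mutations and applies them. (5) The primary forwards the write request to the secondaries. (6) The secondaries reply "operation completed" to the primary. (7) The primary replies "operation completed" or reports an error to the client.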
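The role of the primary in steps 4 and 5 can be sketched as follows. This is a toy, single-process model under simplifying assumptions (no network, no failures, data already pushed); the class names `Replica` and `Primary` are hypothetical.

```python
class Replica:
    """Applies mutations strictly in serial-number order."""

    def __init__(self):
        self.applied = []

    def apply(self, serial, mutation):
        expected = len(self.applied) + 1
        if serial != expected:
            raise ValueError(f"expected serial {expected}, got {serial}")
        self.applied.append(mutation)


class Primary(Replica):
    """The lease holder: assigns serial numbers and forwards to secondaries."""

    def __init__(self, secondaries):
        super().__init__()
        self.secondaries = secondaries
        self.next_serial = 1

    def write(self, mutation):
        serial = self.next_serial
        self.next_serial += 1
        self.apply(serial, mutation)          # apply locally first
        for secondary in self.secondaries:    # then forward in the same order
            secondary.apply(serial, mutation)
        return serial


if __name__ == "__main__":
    secondaries = [Replica(), Replica()]
    primary = Primary(secondaries)
    primary.write("client A: write X")
    primary.write("client B: write Y")
    print(primary.applied == secondaries[0].applied == secondaries[1].applied)
```

Because only the primary hands out serial numbers, concurrent writes from different clients end up in the same order on every replica.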

- 14. System Interactions – Data Flow: Data flow is decoupled from control flow. Control flow: Client -> Primary -> Secondaries. Data flow: a carefully picked chain of chunk servers, in which each machine forwards to the closest machine that has not yet received the data, with distances estimated from IP addresses. The chain is linear (not a tree), so each machine's full outbound bandwidth is used rather than divided among several recipients, and it is pipelined to exploit full-duplex links. Ideal time to transfer B bytes to R replicas = B/T + RL, where T is the network throughput and L is the latency between two machines.
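The formula B/T + RL is easy to evaluate for concrete numbers. The sketch below assumes T is given in bytes per second and L in seconds; the 1 ms latency in the example is an illustrative assumption, not a figure from the slides.

```python
def ideal_transfer_time(num_bytes, replicas, throughput_bytes_per_s, latency_s):
    """Ideal pipelined transfer time B/T + R*L, in seconds."""
    return num_bytes / throughput_bytes_per_s + replicas * latency_s


if __name__ == "__main__":
    # 1,000,000 bytes to 3 replicas over 100 Mb/s links (12.5e6 bytes/s),
    # with an assumed 1 ms hop latency.
    t = ideal_transfer_time(1_000_000, 3, 12_500_000, 0.001)
    print(f"{t * 1000:.0f} ms")  # about 83 ms
```

The B/T term dominates: with pipelining, adding a replica costs one more hop latency, not one more full copy time.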

- 15. System Interactions – Atomic Record Append: Concurrent appends are serializable. The client specifies only the data; GFS appends it atomically at least once and returns the offset to the client. Google uses this heavily to treat files as multiple-producer/single-consumer queues, or to hold merged results from many different clients. On failure, the client retries the operation. Successfully appended data is defined; the intervening regions are inconsistent. A reader can identify and discard extra padding and record fragments using checksums.
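One way a reader could discard padding and fragments is to frame every record with its length and a checksum. The sketch below is an assumption for illustration: the 8-byte header layout and the use of CRC-32 are choices made here, not the record format used by Google's applications.

```python
import struct
import zlib

HEADER = struct.Struct(">II")  # payload length, CRC-32 of payload (assumed layout)


def frame(payload):
    """Prefix a record with its length and checksum."""
    return HEADER.pack(len(payload), zlib.crc32(payload)) + payload


def read_records(region):
    """Return valid records, skipping padding and partial records."""
    records, pos = [], 0
    while pos + HEADER.size <= len(region):
        length, crc = HEADER.unpack_from(region, pos)
        start = pos + HEADER.size
        payload = region[start:start + length]
        if length > 0 and len(payload) == length and zlib.crc32(payload) == crc:
            records.append(payload)
            pos = start + length
        else:
            pos += 1  # not a valid record here: skip one byte and resynchronize
    return records


if __name__ == "__main__":
    region = (frame(b"r1") + b"\x00" * 16      # a record, then padding
              + frame(b"r2")[:5]               # fragment left by a failed append
              + frame(b"r2") + frame(b"r1"))   # retry succeeded; r1 duplicated
    records = read_records(region)
    unique = list(dict.fromkeys(records))      # application-level de-duplication
    print(records, unique)
```

Because appends are at-least-once, duplicates survive the checksum filter, so the reader removes them separately, here by record content.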

- 16. System Interactions – Snapshot: Makes a copy of a file or a directory tree almost instantaneously, using copy-on-write. Steps: (1) revoke outstanding leases; (2) log the operation to disk; (3) duplicate the metadata, which still points to the same chunks; (4) on the first write to a shared chunk, create a real duplicate locally on the same chunk servers, since disks are about 3 times as fast as 100 Mb Ethernet links.

- 17. Master Operation – Namespace Management: No per-directory data structure. No support for aliases. Locks over regions of the namespace ensure serialization. The namespace is a lookup table mapping full pathnames to metadata, kept in memory with prefix compression.

- 18. Master Operation – Namespace Locking: Each node (file or directory) has a read-write lock. Scenario: prevent /home/user/foo from being created while /home/user is being snapshotted to /save/user. The snapshot takes read locks on /home and /save, and write locks on /home/user and /save/user. The create takes read locks on /home and /home/user, and a write lock on /home/user/foo. The two operations conflict on /home/user, so they are serialized.
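The lock sets in this scenario follow a simple rule: read locks on every ancestor path, a write lock on the target path. Here is a minimal sketch of that rule and of the conflict check; the helper names `path_locks`, `merge`, and `conflicts` are hypothetical.

```python
def path_locks(path):
    """Read locks on all ancestors of path, write lock on path itself."""
    parts = path.strip("/").split("/")
    prefixes = ["/" + "/".join(parts[:i]) for i in range(1, len(parts) + 1)]
    return set(prefixes[:-1]), {prefixes[-1]}


def merge(*lock_sets):
    """Combine lock sets for an operation that touches several paths."""
    reads, writes = set(), set()
    for r, w in lock_sets:
        reads |= r
        writes |= w
    return reads - writes, writes


def conflicts(a, b):
    """Two operations conflict if one write-locks a path the other locks at all."""
    reads_a, writes_a = a
    reads_b, writes_b = b
    return bool(writes_a & (reads_b | writes_b)) or bool(writes_b & (reads_a | writes_a))


if __name__ == "__main__":
    snapshot = merge(path_locks("/home/user"), path_locks("/save/user"))
    create = path_locks("/home/user/foo")
    print(conflicts(snapshot, create))                          # True
    print(conflicts(create, path_locks("/home/user/bar")))      # False
```

Two creates in the same directory only read-lock the directory, so they can run concurrently, while the snapshot's write lock on /home/user excludes both.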

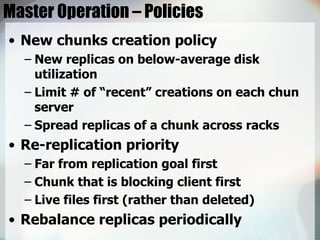

- 19. Master Operation – Policies: New chunk creation policy: place new replicas on chunk servers with below-average disk utilization, limit the number of “recent” creations on each chunk server, and spread the replicas of a chunk across racks. Re-replication priority: chunks farthest from their replication goal first, chunks that are blocking a client first, live files first (rather than deleted ones). Rebalance replicas periodically.
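The re-replication priorities can be expressed as a sort key. The slide lists the three factors but not how they are weighted against each other, so the ordering below (blocking chunks, then live files, then distance from the goal) is an assumption, as are the class and field names.

```python
from dataclasses import dataclass


@dataclass
class ChunkState:
    handle: str
    live_replicas: int
    goal: int
    blocking_client: bool = False
    file_deleted: bool = False


def rereplication_order(chunks):
    """Order under-replicated chunks by an assumed combination of the priorities."""
    needy = [c for c in chunks if c.live_replicas < c.goal]
    return sorted(
        needy,
        key=lambda c: (
            not c.blocking_client,            # chunks blocking a client first
            c.file_deleted,                   # live files before deleted ones
            -(c.goal - c.live_replicas),      # farthest from goal first
        ),
    )


if __name__ == "__main__":
    chunks = [
        ChunkState("a", 2, 3),
        ChunkState("b", 1, 3),
        ChunkState("c", 2, 3, blocking_client=True),
        ChunkState("d", 3, 3),
        ChunkState("e", 1, 3, file_deleted=True),
    ]
    print([c.handle for c in rereplication_order(chunks)])
```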

- 20. Master Operation – Garbage Collection: Lazy reclamation. The master logs the deletion immediately and renames the file to a hidden name. The hidden file is removed 3 days later, and until then it can be undeleted by renaming it back. A regular scan finds orphaned chunks. It is easy to tell what is not garbage: all references to chunks are in the file-chunk mapping, and all chunk replicas are Linux files under designated directories on each chunk server. The master erases the metadata of orphaned chunks and uses HeartBeat messages to tell chunk servers which chunks to delete.
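The lazy reclamation steps can be sketched with an in-memory namespace. This is a toy model under stated assumptions: the hidden-name format, the class name `Namespace`, and passing time in as a `now` argument are all choices made for illustration.

```python
GRACE_SECONDS = 3 * 24 * 3600  # "remove 3 days later"


class Namespace:
    def __init__(self):
        self.files = {}    # path -> list of chunk handles
        self.hidden = {}   # hidden name -> (original path, deletion time, chunks)

    def delete(self, path, now):
        """Deletion is only a rename to a hidden name; chunks stay referenced."""
        chunks = self.files.pop(path)
        hidden_name = f"{path}.deleted.{now}"  # assumed naming scheme
        self.hidden[hidden_name] = (path, now, chunks)
        return hidden_name

    def undelete(self, hidden_name):
        path, _, chunks = self.hidden.pop(hidden_name)
        self.files[path] = chunks

    def scan(self, now):
        """Regular scan: drop hidden files older than the grace period."""
        expired = [name for name, (_, deleted_at, _) in self.hidden.items()
                   if now - deleted_at >= GRACE_SECONDS]
        for name in expired:
            del self.hidden[name]

    def referenced_chunks(self):
        refs = set()
        for chunks in self.files.values():
            refs.update(chunks)
        for _, _, chunks in self.hidden.values():
            refs.update(chunks)
        return refs


def orphaned_chunks(ns, reported_by_chunk_server):
    """Chunks a chunk server holds that no file maps to; safe to delete."""
    return set(reported_by_chunk_server) - ns.referenced_chunks()


if __name__ == "__main__":
    ns = Namespace()
    ns.files["/logs/a"] = ["c1", "c2"]
    ns.delete("/logs/a", now=0)
    ns.scan(now=24 * 3600)
    print(orphaned_chunks(ns, ["c1", "c2"]))   # still referenced: empty set
    ns.scan(now=GRACE_SECONDS)
    print(orphaned_chunks(ns, ["c1", "c2"]))   # now orphaned
```

In this model the orphan set is what the master would send back to a chunk server in reply to its HeartBeat report.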

- 21. Master Operation – Garbage Collection: Advantages. Simple and reliable in a system where chunk creation may fail and deletion messages may be lost: it gives a uniform and dependable way to clean up replicas that are no longer useful. It is done in batches, so the cost is amortized. It is done when the master is relatively free. It is a safety net against accidental, irreversible deletion.

- 22. Master Operation – Garbage Collection: Disadvantage: hard to fine-tune when storage is tight. Solutions: explicitly deleting an already deleted file expedites storage reclamation, and different policies can apply to different parts of the namespace. Stale replica detection: the master maintains a version number for each chunk.
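Stale replica detection with version numbers can be sketched as below. The model follows the GFS paper's description, in which the master increases a chunk's version whenever it grants a new lease and informs the up-to-date replicas; the class names and the single-chunk simplification are assumptions.

```python
class ChunkReplica:
    def __init__(self, name):
        self.name = name
        self.version = 0
        self.online = True


class Master:
    """Tracks the current version of one chunk (single-chunk toy model)."""

    def __init__(self, replicas):
        self.replicas = replicas
        self.version = 0

    def grant_lease(self):
        # Bump the version and tell every replica that is currently reachable.
        self.version += 1
        for replica in self.replicas:
            if replica.online:
                replica.version = self.version
        return self.version

    def stale_replicas(self):
        # A replica that missed a version bump missed mutations too.
        return [r.name for r in self.replicas if r.version < self.version]


if __name__ == "__main__":
    a, b, c = ChunkReplica("a"), ChunkReplica("b"), ChunkReplica("c")
    master = Master([a, b, c])
    master.grant_lease()
    b.online = False        # b is down while the chunk is mutated
    master.grant_lease()
    b.online = True         # b restarts and reports its old version
    print(master.stale_replicas())
```

Stale replicas found this way are treated as garbage and removed in the regular garbage collection pass.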

- 23. Fault Tolerance – High Availability: Fast recovery: servers restore their state and start in seconds, and do not distinguish between normal and abnormal termination. Chunk replication: different replication levels can be set for different parts of the file namespace, and each chunk is kept fully replicated as chunk servers go offline or detect corrupted replicas through checksum verification.

- 24. Fault Tolerance – High Availability: Master replication: the operation log and checkpoints are replicated. On master failure, monitoring infrastructure outside GFS starts a new master process. “Shadow” masters provide read-only access to the file system when the primary master is down, which enhances read availability; each reads a replica of the growing operation log.

- 25. Fault Tolerance – Data Integrity: Checksums are used to detect data corruption. A chunk (64MB) is broken up into 64KB blocks, each with a 32-bit checksum. The chunk server verifies the checksum before returning data, so errors do not propagate. Record append: the checksum for the last partial block is updated incrementally, and any existing corruption is detected when the block is read. Random write: read and verify the first and last blocks of the range first, then perform the write and compute the new checksums.
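Per-block checksumming and verify-before-return can be sketched in a few lines. The slide only says the checksum is 32 bits wide; using CRC-32 from `zlib` is an assumption here, and the function and exception names are hypothetical.

```python
import zlib

BLOCK_SIZE = 64 * 1024  # 64KB blocks, from the slide


class ChecksumError(Exception):
    pass


def block_checksums(chunk):
    """One 32-bit checksum per 64KB block (CRC-32 is an assumed choice)."""
    return [zlib.crc32(chunk[i:i + BLOCK_SIZE])
            for i in range(0, len(chunk), BLOCK_SIZE)]


def verified_read(chunk, checksums, offset, length):
    """Verify every block overlapping the range before returning any data."""
    if length <= 0:
        return b""
    first = offset // BLOCK_SIZE
    last = (offset + length - 1) // BLOCK_SIZE
    for block in range(first, last + 1):
        data = chunk[block * BLOCK_SIZE:(block + 1) * BLOCK_SIZE]
        if zlib.crc32(data) != checksums[block]:
            raise ChecksumError(f"checksum mismatch in block {block}")
    return chunk[offset:offset + length]


if __name__ == "__main__":
    chunk = bytes(range(256)) * 1024          # 256KB of data, 4 blocks
    sums = block_checksums(chunk)
    corrupted = bytearray(chunk)
    corrupted[70000] ^= 0xFF                  # flip one byte inside block 1
    try:
        verified_read(bytes(corrupted), sums, 70000, 10)
    except ChecksumError as err:
        print(err)
```

Only the blocks that overlap the requested range are verified, so a read of an intact block still succeeds when another block of the same chunk is corrupted.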

- 26. Conclusion: GFS supports large-scale data processing on commodity hardware. It reexamines traditional file system assumptions in light of the application workload and the technological environment: treat component failures as the norm rather than the exception, optimize for huge files that are mostly appended to, and relax the standard file system interface.

- 27. Conclusion: Fault tolerance through constant monitoring, replication of crucial data, fast and automatic recovery, and checksumming to detect data corruption at the disk or IDE subsystem level. High aggregate throughput by decoupling control and data transfer, and by minimizing master operations through a large chunk size and chunk leases.

- 28. Reference Sanjay Ghemawat, Howard Gobioff, and Shun-Tak Leung, “The Google File System”